论文地址:https://arxiv.org/pdf/2002.12046.pdf

代码地址:

1.是什么?

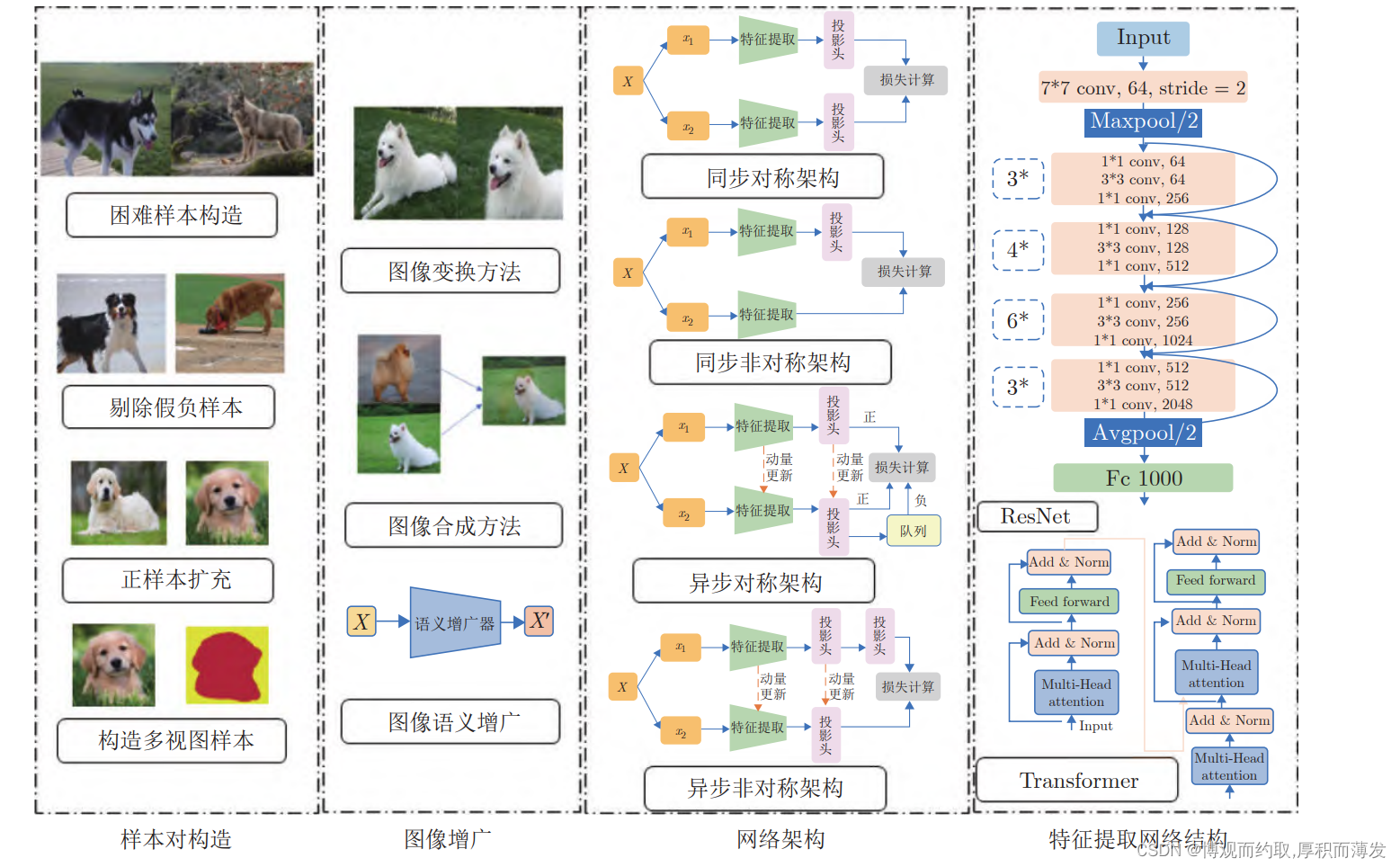

XSepConv是由清华大学提出的,它是一种新型的卷积神经网络模块,可以在保持计算量不变的情况下提高模型的性能。XSepConv的特点是将深度卷积和逐通道卷积结合起来,同时使用可分离卷积和组卷积来提高模型的性能。

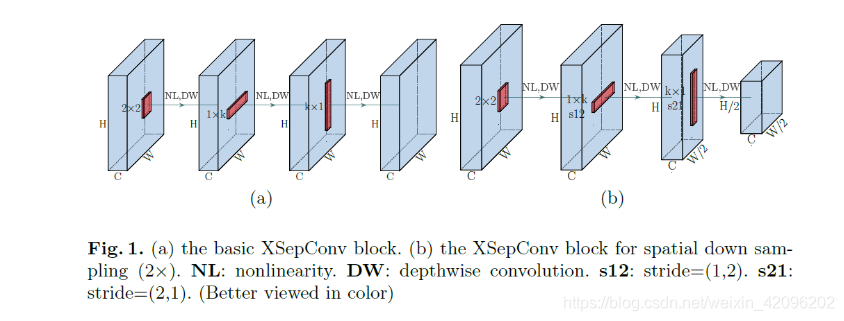

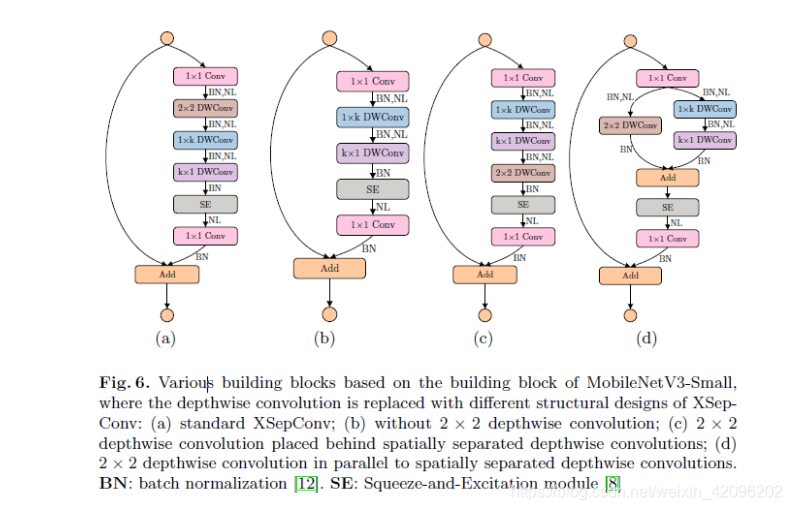

XSepConv是一种新型的极度分离卷积块,它将空间可分离卷积融合为深度卷积,以进一步降低大内核的计算成本和参数大小。XSepConv由3个部分组成:2x2深度卷积核;1个k深度卷积核;和k1个深度卷积核。1×k和k×1深度卷积一起形成所说的空间分离深度卷积,其中2×2深度卷积起着重要的作用用于捕获由于固有的结构缺陷而可能被空间分离深度卷积丢失的信息。

2.为什么?

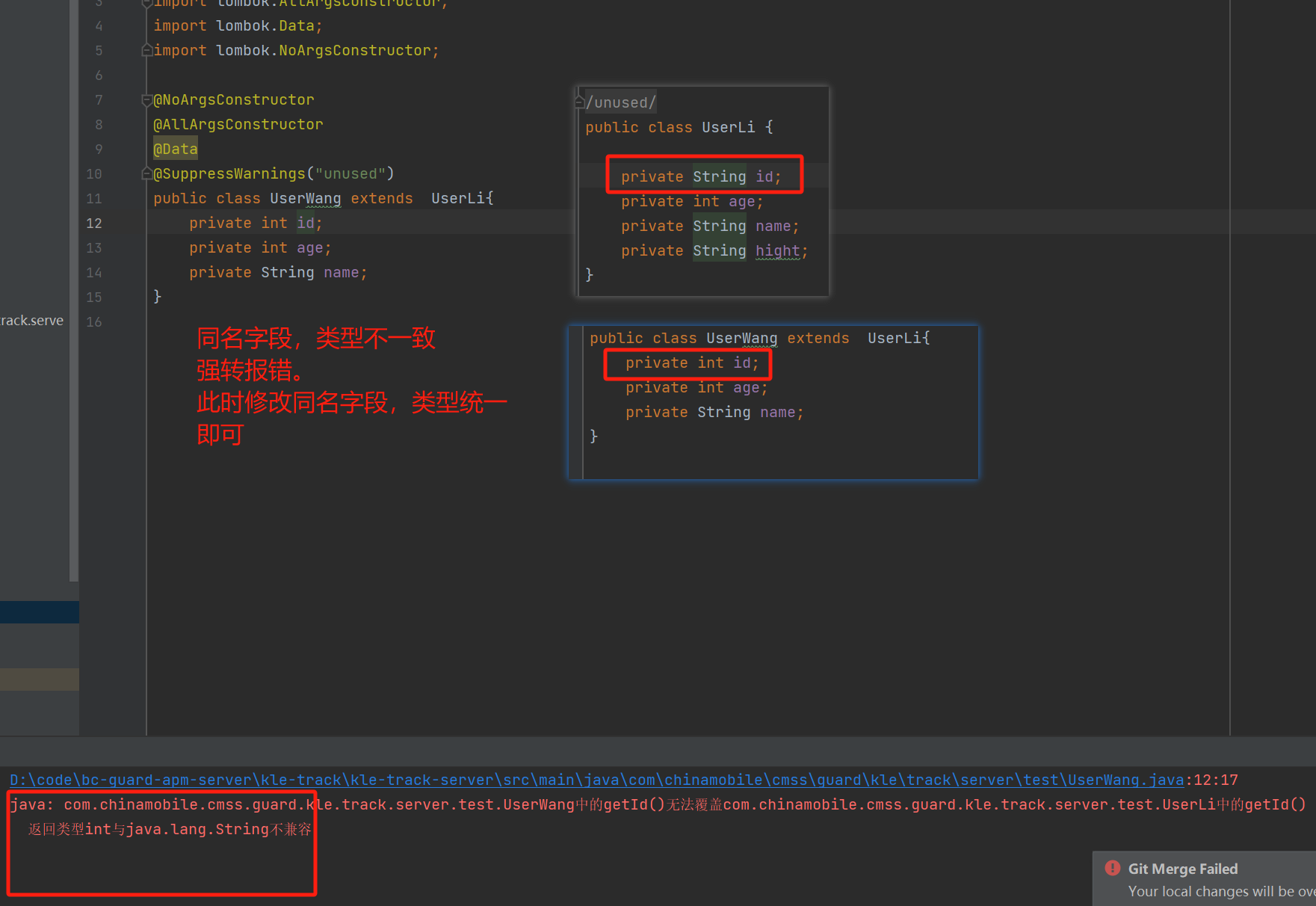

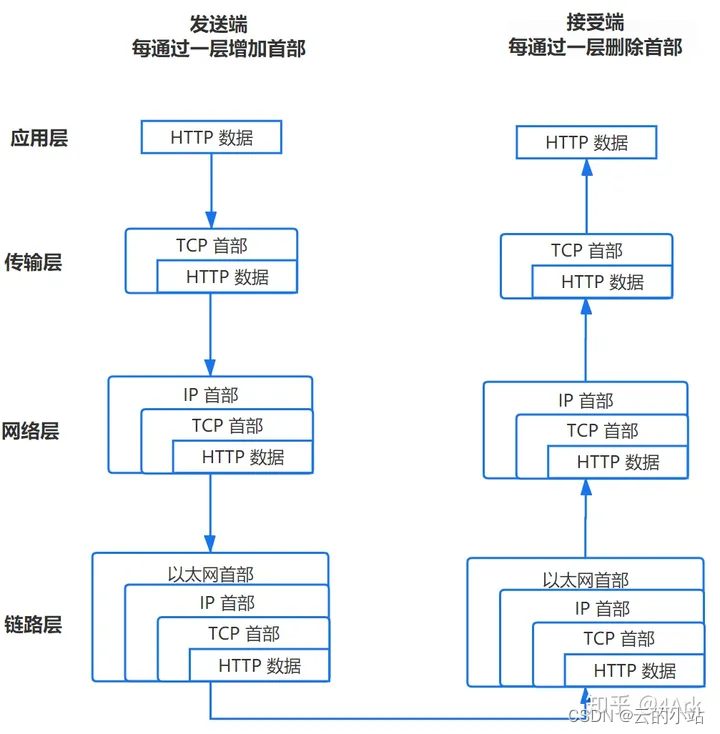

1.之前应用较多的轻量级网络大多是基于深度可分离卷积的,属于分组卷积的个例,其中

Depthwise separable convolutions = Depthwise convolution + Pointwise convolution

2.Nips2019的工作Convolution with even-sized kernels and symmetric padding,使用对称padding策略解决了偶数尺寸大小的卷积提取特征带来的位置偏移问题。与深度卷积相比,偶数大小的传统卷积也可以获得具有竞争力的精度

3.之前的相关工作如MixNet探索了卷积核大小对于性能的影响,大尺寸的卷积被证明可以有效增强网络性能

4.空间分离卷积 == KxK卷积 = Kx1卷积 + 1xK卷积,可以进一步节省计算成本。考虑到基于深度卷积的CNNs主要侧重于对通道数的探索以达到降低计算成本的目的,可以通过对正交空间空间维数的进一步分解来实现更大幅度的压缩,特别是对大深度卷积核。空间可分卷积可以看作是空间层次上的分解,由宽度方向的卷积和高度方向的卷积组成,近似替代原来的二维空间卷积,从而降低了计算复杂度。然而,直接将空间可分卷积应用在网络架构中会带来大量的信息损失。

提出了一种极致分离的卷积块XSepConv,它将深度分离卷积与空间可分卷积混合在一起,形成空间可分的深度卷积,进一步减少了大深度卷积核的参数大小和计算量。考虑到空间分离卷积特别是在水平和竖直方向上缺乏足够的捕获信息的能力,额外的操作需要捕捉信息在其他方向(e.g.对角方向)避免信息大量丢失。在这里,我们使用一个简单而有效的操作,2X2DWConv与改进的对称填充策略,在一定程度上以补偿上述副作用。

3.怎么样?

3.1网络结构

1.basic XSepConv block:

K×K DW = 2×2 DW(改进对称padding策略)+1×K DW + K×1 DW

利用空间分离卷积来减小大深度卷积核的参数大小和计算复杂度,并使用一个额外的2x2深度卷积和改进的对称填充策略来补偿空间可分卷积带来的副作用。

2.down sampling XSepConv block:

K×K DW = 2×2 DW(改进对称padding策略)+1×K DW(stride=2) + K×1 DW(stride=2)

相当于在竖直水平两个方向分别down sampling,论文中还分析了具体计算量和参数量的计算,讨论了down sampling模块适用于卷积核尺寸>7

3.2原理分析

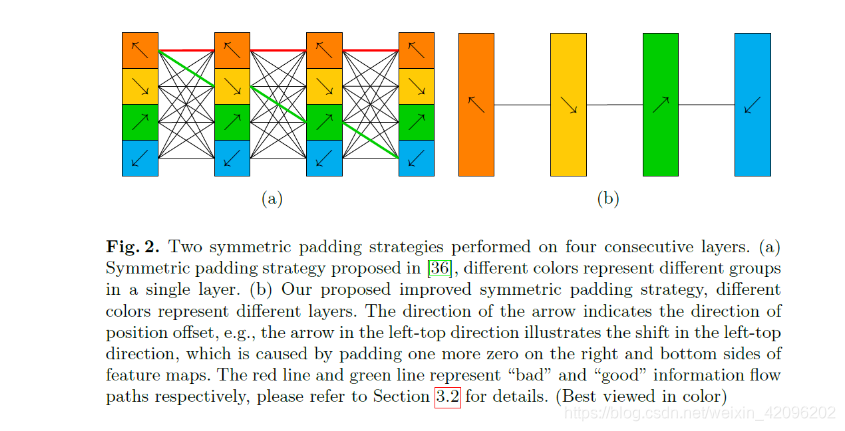

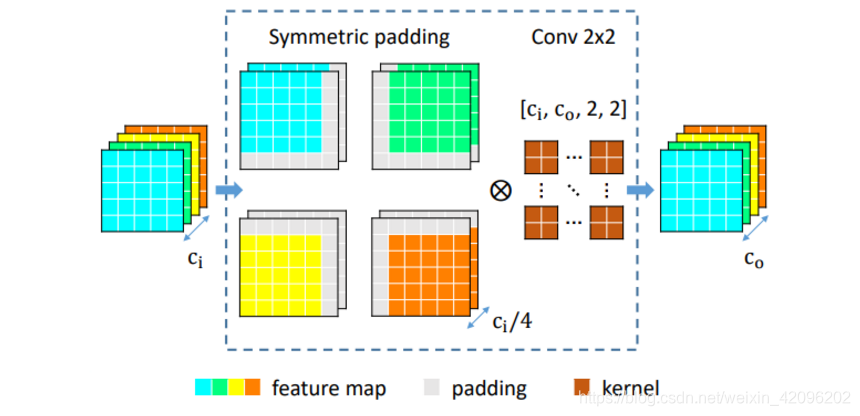

当使用偶数卷积核时,非对称padding方式,例如只在右下方向,常常用来保证特征图大小不变。因此,激活值会偏移到空间位置的左上角,这就是移位问题。为了解决这个问题上图(a)的操作为:

1.对输入特征图按照通道分为4个组

2.对4个组特征分别按照左上,左下,右上和右下方向Padding

3.对4个padding后的特征分别使用2x2卷积提取特征

4.对提取到的特征进行拼接

然而,在图像分类等大多数计算机视觉任务中,最重要的输出是最后一层而不是中间的单层。如图2(a)所示,传统对称padding策略将在最后一层的一些输出处遇到不对称。如果沿着所有四个移位方向都不同的路径(如图2(a)中的绿线)的信息流通,则在最终输出中消除位置偏移。否则,位置偏移将在至少一个方向上累积,例如,图2(a)中的红线指示左上方向的位置偏移将在最终将特征挤压到空间位置的左上角并导致性能下降的最终输出中累积。为了解决这个问题,本文提出了改进的对称padding策略,具体如图2(b):

1.定义4个padding方向

2.假设N为网络中使用2x2卷积层的个数,如果可以被4整除,则按照四个方向顺序padding:

3.如果N%4 = 2,最后两层具有偶数大小内核的padding方向是相反的,以便尽可能地消除偏移。

4.如果N%4 = 1,最后一层使用传统对称padding策略.

3.3结构设计

3.4代码实现

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.nn import init

class hswish(nn.Module):

def forward(self, x):

out = x * F.relu6(x + 3, inplace=True) / 6

return out

class hsigmoid(nn.Module):

def forward(self, x):

out = F.relu6(x + 3, inplace=True) / 6

return out

class SeModule(nn.Module):

def __init__(self, in_size, reduction=4):

super(SeModule, self).__init__()

self.se = nn.Sequential(

nn.AdaptiveAvgPool2d(1),

nn.Conv2d(in_size, in_size // reduction, kernel_size=1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(in_size // reduction),

nn.ReLU(inplace=True),

nn.Conv2d(in_size // reduction, in_size, kernel_size=1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(in_size),

hsigmoid()

)

def forward(self, x):

return x * self.se(x)

# DWConv

class Block(nn.Module):

'''expand + depthwise + pointwise'''

def __init__(self, kernel_size, in_size, expand_size, out_size, nolinear, semodule, stride):

super(Block, self).__init__()

self.stride = stride

self.se = semodule

self.conv1 = nn.Conv2d(in_size, expand_size, kernel_size=1, stride=1, padding=0, bias=False)

self.bn1 = nn.BatchNorm2d(expand_size)

self.nolinear1 = nolinear

self.conv2 = nn.Conv2d(expand_size, expand_size, kernel_size=kernel_size, stride=stride, padding=kernel_size//2, groups=expand_size, bias=False)

self.bn2 = nn.BatchNorm2d(expand_size)

self.nolinear2 = nolinear

self.conv3 = nn.Conv2d(expand_size, out_size, kernel_size=1, stride=1, padding=0, bias=False)

self.bn3 = nn.BatchNorm2d(out_size)

self.shortcut = nn.Sequential()

if stride == 1 and in_size != out_size:

self.shortcut = nn.Sequential(

nn.Conv2d(in_size, out_size, kernel_size=1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(out_size),

)

def forward(self, x):

out = self.nolinear1(self.bn1(self.conv1(x)))

out = self.nolinear2(self.bn2(self.conv2(out)))

out = self.bn3(self.conv3(out))

if self.se != None:

out = self.se(out)

out = out + self.shortcut(x) if self.stride==1 else out

return out

# XSepConv

class XSepBlock(nn.Module):

''' 1*1 expand + Improved Symmetric Padding + 2*2 DW + 1*K DW + k*1 DW + 1*1 out'''

def __init__(self, kernel_size, in_size, expand_size, out_size, nolinear, semodule, stride, ith):

super(Block, self).__init__()

self.stride = stridE

self.ith = ith

# 1*1 expand

self.conv1 = nn.Conv2d(in_size, expand_size, kernel_size=1, stride=1, padding=0, bias=False)

self.bn1 = nn.BatchNorm2d(expand_size)

self.nolinear1 = nolinear

# 2*2 DW

self.conv2 = nn.Conv2d(expand_size, expand_size, kernel_size=2, stride=1, padding=0, groups=expand_size, bias=False)

self.bn2 = nn.BatchNorm2d(expand_size)

self.nolinear2 = nolinear

# 1*k DW

self.conv3 = nn.Conv2d(expand_size, expand_size, kernel_size=(1, kernel_size), stride=stride,

padding=(0, kernel_size//2), groups=expand_size, bias=False)

self.bn3 = nn.BatchNorm2d(expand_size)

self.nolinear3 = nolinear

# k*1 DW

self.conv4 = nn.Conv2d(expand_size, expand_size, kernel_size=(kernel_size, 1), stride=stride,

padding=(kernel_size//2, 0),, groups=expand_size, bias=False)

self.bn4 = nn.BatchNorm2d(expand_size)

# SE

self.se = semodule

self.nolinear_se = nolinear

# 1*1 out

self.conv5 = nn.Conv2d(expand_size, out_size, kernel_size=1, stride=1, padding=0, bias=False)

self.bn5 = nn.BatchNorm2d(out_size)

self.shortcut = nn.Sequential()

if stride == 1 and in_size != out_size:

self.shortcut = nn.Sequential(

nn.Conv2d(in_size, out_size, kernel_size=1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(out_size),

)

def forward(self, x):

x1 = x

if self.ith % 4 == 0:

x1 = nn.functional.pad(x1,(0,1,1,0),mode = "constant",value = 0) # right top

elif self.ith % 4 == 1:

x1 = nn.functional.pad(x1,(0,1,0,1),mode = "constant",value = 0) # right bottom

elif self.ith % 4 == 2:

x1 = nn.functional.pad(x1,(1,0,1,0),mode = "constant",value = 0) # left top

elif self.ith % 4 == 3:

x1 = nn.functional.pad(x1,(1,0,0,1),mode = "constant",value = 0) # left bottom

else:

raise NotImplementedError('ith layer is not right')

# 1*1 expand

out = self.nolinear1(self.bn1(self.conv1(x1)))

# 2*2 DW

out = self.nolinear2(self.bn2(self.conv2(out)))

# 1*k DW

out = self.nolinear3(self.bn3(self.conv3(out)))

# k*1 DW

out = self.bn4(self.conv4(out))

# SE

if self.se != None:

out = self.nolinear_se(self.se(out))

# 1*1 out

out = self.bn5(self.conv5(out))

out = out + self.shortcut(x) if self.stride==1 else out

return out

#按照论文设置改的,可能会有出入

class XSepMobileNetV3_Small(nn.Module):

def __init__(self, num_classes=1000):

super(MobileNetV3_Small, self).__init__()

self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1, bias=False)

self.bn1 = nn.BatchNorm2d(16)

self.hs1 = hswish()

self.bneck = nn.Sequential(

Block(3, 16, 16, 16, nn.ReLU(inplace=True), SeModule(16), 2),

Block(3, 16, 72, 24, nn.ReLU(inplace=True), None, 2),

Block(3, 24, 88, 24, nn.ReLU(inplace=True), None, 1),

Block(5, 24, 96, 40, hswish(), SeModule(40), 2), # 下采样层使用DW,因为kernel_size < 7

XSepBlock(5, 40, 240, 40, hswish(), SeModule(40), 1, 1),

XSepBlock(5, 40, 240, 40, hswish(), SeModule(40), 1, 2),

XSepBlock(5, 40, 120, 48, hswish(), SeModule(48), 1, 3),

XSepBlock(5, 48, 144, 48, hswish(), SeModule(48), 1, 4),

Block(5, 48, 288, 96, hswish(), SeModule(96), 2), # 下采样层使用DW,因为kernel_size < 7

XSepBlock(3, 96, 576, 96, hswish(), SeModule(96), 1, 1),

XSepBlock(3, 96, 576, 96, hswish(), SeModule(96), 1, 2),

)

self.conv2 = nn.Conv2d(96, 576, kernel_size=1, stride=1, padding=0, bias=False)

self.bn2 = nn.BatchNorm2d(576)

self.hs2 = hswish()

self.linear3 = nn.Linear(576, 1280)

self.bn3 = nn.BatchNorm1d(1280)

self.hs3 = hswish()

self.linear4 = nn.Linear(1280, num_classes)

self.init_params()

def init_params(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

init.kaiming_normal_(m.weight, mode='fan_out')

if m.bias is not None:

init.constant_(m.bias, 0)

elif isinstance(m, nn.BatchNorm2d):

init.constant_(m.weight, 1)

init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

init.normal_(m.weight, std=0.001)

if m.bias is not None:

init.constant_(m.bias, 0)

def forward(self, x):

out = self.hs1(self.bn1(self.conv1(x)))

out = self.bneck(out)

out = self.hs2(self.bn2(self.conv2(out)))

out = F.avg_pool2d(out, 7)

out = out.view(out.size(0), -1)

out = self.hs3(self.bn3(self.linear3(out)))

out = self.linear4(out)

return out

def test():

net = XSepMobileNetV3_Small()

x = torch.randn(2,3,224,224)

y = net(x)

print(y.size())

参考:

XSepConv_MobileNetV3_small | 自己实现代码

XSepConv 极致分离卷积块优于DWConv | Extremely Separated Convolution