Video: 尚硅谷大数据项目《在线教育之实时数仓》 (Atguigu big data project "Real-Time Data Warehouse for Online Education") on Bilibili


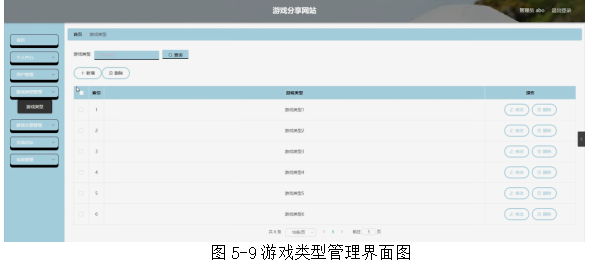

Chapter 9 Data Warehouse Development: the DWD Layer

P031

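DWD layer design points:

(1) The DWD layer is designed according to dimensional modeling theory; it stores the fact tables of the dimensional model.

(2) DWD table names follow the convention dwd_<data domain>_<table name>.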
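As a quick illustration of the naming rule, here is a small hypothetical helper (`dwdTableName` and `isValidDwdName` are not part of the course code) that builds a name from a data domain and a table name and checks it against an assumed pattern of lowercase words separated by underscores. The two DWD topic names used later in these notes, `dwd_traffic_page_log` and `dwd_traffic_unique_visitor_detail`, both fit it.

```java
import java.util.regex.Pattern;

class DwdNaming {
    // Assumed pattern: "dwd_", one lowercase data-domain word, "_", then the table name
    private static final Pattern DWD_NAME = Pattern.compile("dwd_[a-z]+_[a-z0-9_]+");

    // Hypothetical helper: builds a name following dwd_<data domain>_<table name>
    static String dwdTableName(String dataDomain, String tableName) {
        return "dwd_" + dataDomain + "_" + tableName;
    }

    // Hypothetical check that a table/topic name follows the convention
    static boolean isValidDwdName(String name) {
        return DWD_NAME.matcher(name).matches();
    }

    public static void main(String[] args) {
        System.out.println(dwdTableName("traffic", "page_log"));                 // dwd_traffic_page_log
        System.out.println(isValidDwdName("dwd_traffic_unique_visitor_detail")); // true
        System.out.println(isValidDwdName("topic_log"));                         // false
    }
}
```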

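The DWD layer holds the fact tables. It reads the business-process data it needs from the Kafka topics topic_log and topic_db, links the related business processes into detail records, and writes the results back to Kafka.

The bus matrix is in the course materials under:

尚硅谷大数据学科全套教程\3.尚硅谷大数据学科--项目实战\尚硅谷大数据项目之在线教育数仓\尚硅谷大数据项目之在线教育数仓-3实时\资料\13.总线矩阵及指标体系

在线教育实时业务总线矩阵.xlsx (the real-time business bus matrix for online education)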

9.1.3 Diagram

P032

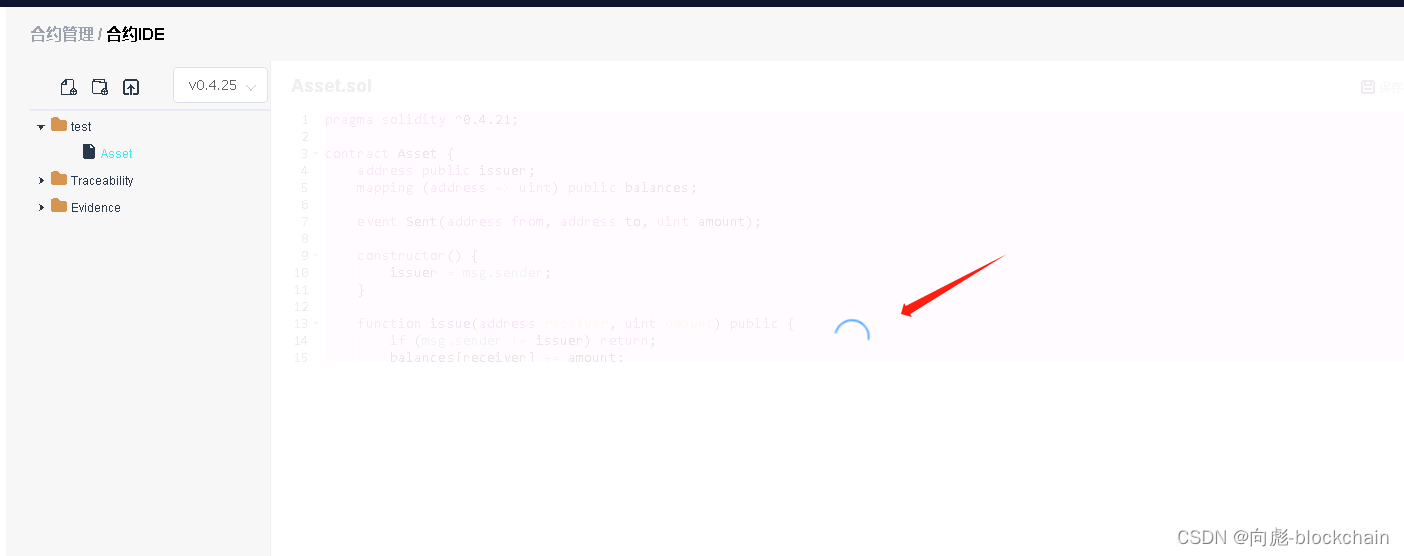

```java
package com.atguigu.edu.realtime.app.dwd.log;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.edu.realtime.util.DateFormatUtil;
import com.atguigu.edu.realtime.util.EnvUtil;
import com.atguigu.edu.realtime.util.KafkaUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SideOutputDataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.streaming.api.functions.ProcessFunction;
import org.apache.flink.util.Collector;
import org.apache.flink.util.OutputTag;

/**
 * @author
 * @create 2023-04-21 14:01
 */
public class BaseLogApp {
    public static void main(String[] args) throws Exception {
        // TODO 1 Create the environment and configure the state backend
        StreamExecutionEnvironment env = EnvUtil.getExecutionEnvironment(1);

        // TODO 2 Read the main stream from Kafka
        String topicName = "topic_log";
        String groupId = "base_log_app";
        DataStreamSource<String> baseLogSource = env.fromSource(
                KafkaUtil.getKafkaConsumer(topicName, groupId),
                WatermarkStrategy.noWatermarks(),
                "base_log_source"
        );

        // TODO 3 Clean and transform the data
        // 3.1 Define the side-output tag for dirty (non-JSON) records
        OutputTag<String> dirtyStreamTag = new OutputTag<String>("dirtyStream") {
        };

        // 3.2 Parse each record; anything that fails to parse goes to the side output
        SingleOutputStreamOperator<JSONObject> cleanedStream = baseLogSource.process(new ProcessFunction<String, JSONObject>() {
            @Override
            public void processElement(String value, Context ctx, Collector<JSONObject> out) throws Exception {
                try {
                    JSONObject jsonObject = JSON.parseObject(value);
                    out.collect(jsonObject);
                } catch (Exception e) {
                    ctx.output(dirtyStreamTag, value);
                }
            }
        });

        // 3.3 Write the dirty data to its own Kafka topic
        SideOutputDataStream<String> dirtyStream = cleanedStream.getSideOutput(dirtyStreamTag);
        String dirtyTopicName = "dirty_data";
        dirtyStream.sinkTo(KafkaUtil.getKafkaProducer(dirtyTopicName, "dirty_trans"));

        // TODO 4 Repair the new/returning-visitor flag
        // TODO 5 Split the stream
        // TODO 6 Write each stream to a different Kafka topic
        // TODO 7 Execute the job (without this call nothing runs)
        env.execute();
    }
}
```

P033

KafkaUtil.java

P034

Introduction to the new vs. returning visitor logic
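The notes only name this topic, so here is a minimal sketch of the repair rule as it is commonly done in this course series (an assumption, not the course's code): keyed by `mid`, state holds the device's first-visit date. If the client says `is_new = "1"` but state already holds an earlier date, the flag is corrected to `"0"`. If the client says `"0"` and there is no state yet, yesterday's date is stored so that a later `"1"` for the same device is also corrected. A `HashMap` stands in for Flink's keyed `ValueState`, and dates are assumed to be `yyyy-MM-dd` strings; the class name is hypothetical.

```java
import java.time.LocalDate;
import java.util.HashMap;
import java.util.Map;

class IsNewRepairSketch {
    // Stand-in for Flink keyed ValueState<String>: mid -> first visit date (yyyy-MM-dd)
    private final Map<String, String> firstVisitDt = new HashMap<>();

    /** Returns the repaired is_new flag ("1" or "0") for one record of the given device. */
    String repair(String mid, String isNew, String curDt) {
        String firstDt = firstVisitDt.get(mid);
        if ("1".equals(isNew)) {
            if (firstDt == null) {
                // First time this device is seen: remember today as its first visit
                firstVisitDt.put(mid, curDt);
            } else if (!firstDt.equals(curDt)) {
                // Seen on an earlier day, so the client's "new" flag is wrong (e.g. app reinstalled)
                return "0";
            }
            // No state before, or still the first day: the flag stays "1"
            return "1";
        }
        // isNew is "0": an old visitor with no state yet, so store yesterday as its first visit
        if (firstDt == null) {
            firstVisitDt.put(mid, LocalDate.parse(curDt).minusDays(1).toString());
        }
        return "0";
    }

    public static void main(String[] args) {
        IsNewRepairSketch s = new IsNewRepairSketch();
        System.out.println(s.repair("mid_188", "1", "2022-02-21")); // 1
        System.out.println(s.repair("mid_188", "1", "2022-02-22")); // 0
        System.out.println(s.repair("mid_1", "0", "2022-02-21"));   // 0
        System.out.println(s.repair("mid_1", "1", "2022-02-21"));   // 0
    }
}
```

Storing yesterday rather than today for old visitors is what makes the last call above return `"0"`: the stored date differs from the current date, so the client's `"1"` is overridden.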

P035

BaseLogApp.java

// TODO 4 Repair the new/returning-visitor flag
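Step 4 compares calendar dates derived from the record's `ts` field (epoch milliseconds, as in the sample record that follows), which is what `DateFormatUtil.toDate` is used for. The project's `DateFormatUtil` source is not shown here, so this is a hypothetical stand-in using `java.time`, assuming the `yyyy-MM-dd` format and the Asia/Shanghai time zone.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

class TsToDateSketch {
    // Assumed time zone; the real DateFormatUtil may use a different one
    private static final ZoneId ZONE = ZoneId.of("Asia/Shanghai");
    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    // Hypothetical stand-in for DateFormatUtil.toDate(Long ts): epoch millis -> yyyy-MM-dd
    static String toDate(long ts) {
        return DATE.format(Instant.ofEpochMilli(ts).atZone(ZONE));
    }

    public static void main(String[] args) {
        // ts of the sample record: 2022-02-21 23:14:49 in Asia/Shanghai
        System.out.println(toDate(1645456489411L)); // 2022-02-21
    }
}
```

The time zone matters: a record near midnight can fall on a different date in UTC, which would change the new/returning decision.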

```
[atguigu@node001 log]$ pwd
/opt/module/data_mocker/01-onlineEducation/log
[atguigu@node001 log]$ head -n 200 app.2023-09-19.log
{"common":{"ar":"26","ba":"iPhone","ch":"Appstore","is_new":"0","md":"iPhone 8","mid":"mid_188","os":"iOS 13.3.1","sc":"1","sid":"b4d6c8eb-d025-4855-af0a-fe351ff16ef9","uid":"20","vc":"v2.1.134"},"page":{"during_time":901000,"item":"173","item_type":"paper_id","last_page_id":"course_detail","page_id":"exam"},"ts":1645456489411}
```

```json
{
    "common": {
        "ar": "26",
        "ba": "iPhone",
        "ch": "Appstore",
        "is_new": "0",
        "md": "iPhone 8",
        "mid": "mid_188",
        "os": "iOS 13.3.1",
        "sc": "1",
        "sid": "b4d6c8eb-d025-4855-af0a-fe351ff16ef9",
        "uid": "20",
        "vc": "v2.1.134"
    },
    "page": {
        "during_time": 901000,
        "item": "173",
        "item_type": "paper_id",
        "last_page_id": "course_detail",
        "page_id": "exam"
    },
    "ts": 1645456489411
}
```

P036

BaseLogApp.java

// TODO 5 Split the stream
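The splitting code itself is not in these notes, so here is a plain-JDK sketch of one way to route a log record, under stated assumptions: the record keys `err`, `start`, `appVideo`, `displays` and `actions` are assumed (only `common`, `page` and `ts` appear in the sample above), plain `Map`s stand in for fastjson's `JSONObject`, and the console-consumer topic names used in P037 serve as routing labels. Error records are copied out first; the rest goes to exactly one of start, appVideo or page, and each display/action in a page log becomes its own record carrying `common` and `page`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LogSplitSketch {
    // Returns (topic, payload) pairs; payloads are plain maps standing in for JSONObject
    static List<Map.Entry<String, Map<String, Object>>> split(Map<String, Object> log) {
        List<Map.Entry<String, Map<String, Object>>> out = new ArrayList<>();
        Map<String, Object> rec = new LinkedHashMap<>(log); // shallow copy: the input is not modified
        if (rec.containsKey("err")) {
            Map<String, Object> errRec = new LinkedHashMap<>(rec);
            out.add(Map.entry("error_topic", errRec));
            rec.remove("err"); // the rest of the record is still routed below
        }
        if (rec.containsKey("start")) {
            out.add(Map.entry("start_topic", rec));
        } else if (rec.containsKey("appVideo")) {
            out.add(Map.entry("appVideo_topic", rec));
        } else if (rec.containsKey("page")) {
            Object common = rec.get("common");
            Object page = rec.get("page");
            Object ts = rec.get("ts");
            // One output record per display, enriched with common, page and ts
            for (Object display : listOf(rec.remove("displays"))) {
                Map<String, Object> d = new LinkedHashMap<>();
                d.put("common", common);
                d.put("page", page);
                d.put("ts", ts);
                d.put("display", display);
                out.add(Map.entry("display_topic", d));
            }
            // One output record per action, enriched with common and page
            for (Object action : listOf(rec.remove("actions"))) {
                Map<String, Object> a = new LinkedHashMap<>();
                a.put("common", common);
                a.put("page", page);
                a.put("action", action);
                out.add(Map.entry("action_topic", a));
            }
            // What remains (without displays/actions) is the page log itself
            out.add(Map.entry("page_topic", rec));
        }
        return out;
    }

    private static List<?> listOf(Object o) {
        return o instanceof List ? (List<?>) o : List.of();
    }

    public static void main(String[] args) {
        Map<String, Object> log = new LinkedHashMap<>();
        log.put("common", Map.of("mid", "mid_188"));
        log.put("page", Map.of("page_id", "exam"));
        log.put("displays", List.of(Map.of("item", "1"), Map.of("item", "2")));
        log.put("ts", 1645456489411L);
        for (Map.Entry<String, Map<String, Object>> e : split(log)) {
            System.out.println(e.getKey()); // display_topic, display_topic, page_topic
        }
    }
}
```

In the Flink job the same decisions would typically be made inside a `ProcessFunction`, with one `OutputTag` per side stream as in step 3.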

P037

// TODO 6 Write each stream to a different Kafka topic

Start Hadoop, ZooKeeper and Kafka first, then open a console consumer for each output topic:

```
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic page_topic
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic action_topic
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic display_topic
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic start_topic
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic error_topic
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic appVideo_topic
```

```
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic page_topic
[2023-11-01 14:36:17,581] WARN [Consumer clientId=consumer-console-consumer-7492-1, groupId=console-consumer-7492] Error while fetching metadata with correlation id 2 : {page_topic=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient)
[2023-11-01 14:36:18,710] WARN [Consumer clientId=consumer-console-consumer-7492-1, groupId=console-consumer-7492] Error while fetching metadata with correlation id 6 : {page_topic=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient)
[2023-11-01 14:36:18,720] WARN [Consumer clientId=consumer-console-consumer-7492-1, groupId=console-consumer-7492] The following subscribed topics are not assigned to any members: [page_topic] (org.apache.kafka.clients.consumer.internals.ConsumerCoordinator)
```

Start the Flume collection agent, then run the data mocker to generate log data:

```
[atguigu@node001 ~]$ f1.sh start
-------- starting collection Flume on node001 -------
[atguigu@node001 ~]$ cd /opt/module/data
data/ data_mocker/ datax/
[atguigu@node001 ~]$ cd /opt/module/data_mocker/
[atguigu@node001 data_mocker]$ cd 01-onlineEducation/
[atguigu@node001 01-onlineEducation]$ ll
total 30460
-rw-rw-r-- 1 atguigu atguigu 2223 Sep 19 10:43 application.yml
-rw-rw-r-- 1 atguigu atguigu 4057995 Jul 25 10:28 edu0222.sql
-rw-rw-r-- 1 atguigu atguigu 27112074 Jul 25 10:28 edu2021-mock-2022-06-18.jar
drwxrwxr-x 2 atguigu atguigu 4096 Oct 26 14:01 log
-rw-rw-r-- 1 atguigu atguigu 1156 Jul 25 10:44 logback.xml
-rw-rw-r-- 1 atguigu atguigu 633 Jul 25 10:45 path.json
[atguigu@node001 01-onlineEducation]$ java -jar edu2021-mock-2022-06-18.jar
SLF4J: Class path contains multiple SLF4J bindings.
```

P038

9.2 Traffic Domain: Unique Visitor Transaction Fact Table
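The job in P039 keeps, per `mid`, only the first page view of each day. It drops records with a non-null `last_page_id` (not the first page of a session), then compares each record's date with the last visit date kept in keyed state. Here is a plain-JDK sketch of that decision. A `HashMap` stands in for `ValueState`, and the 1-day TTL is left out, since in the Flink job it mainly keeps state from growing. The class name is hypothetical, and dates are assumed to be `yyyy-MM-dd` strings. Such strings sort like the dates themselves, so a string comparison replaces the `DateFormatUtil.toTs` comparison used in the job.

```java
import java.util.HashMap;
import java.util.Map;

class UniqueVisitorSketch {
    // Stand-in for keyed ValueState<String>: mid -> last visit date (yyyy-MM-dd), no TTL
    private final Map<String, String> lastVisitDt = new HashMap<>();

    /** Returns true if this page record is the device's first visit of its day. */
    boolean isUniqueVisit(String mid, String lastPageId, String visitDt) {
        if (lastPageId != null) {
            return false; // not the first page of a session
        }
        String last = lastVisitDt.get(mid);
        // Emit only if there is no earlier visit or the stored date is strictly earlier;
        // same-day repeats and late records (visitDt before the stored date) are dropped
        if (last == null || last.compareTo(visitDt) < 0) {
            lastVisitDt.put(mid, visitDt);
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        UniqueVisitorSketch uv = new UniqueVisitorSketch();
        System.out.println(uv.isUniqueVisit("mid_188", null, "2022-02-21"));   // true
        System.out.println(uv.isUniqueVisit("mid_188", null, "2022-02-21"));   // false
        System.out.println(uv.isUniqueVisit("mid_188", "home", "2022-02-22")); // false
        System.out.println(uv.isUniqueVisit("mid_188", null, "2022-02-22"));   // true
    }
}
```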

P039

```java
package com.atguigu.edu.realtime.app.dwd.log;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONAware;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.edu.realtime.util.DateFormatUtil;
import com.atguigu.edu.realtime.util.EnvUtil;
import com.atguigu.edu.realtime.util.KafkaUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.functions.RichFilterFunction;
import org.apache.flink.api.common.state.StateTtlConfig;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.common.time.Time;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

/**
 * @author yhm
 * @create 2023-04-21 16:24
 */
public class DwdTrafficUniqueVisitorDetail {
    public static void main(String[] args) throws Exception {
        // TODO 1 Create the environment and configure the state backend
        StreamExecutionEnvironment env = EnvUtil.getExecutionEnvironment(4);

        // TODO 2 Read the page-log topic from Kafka
        String topicName = "dwd_traffic_page_log";
        DataStreamSource<String> pageLogStream = env.fromSource(
                KafkaUtil.getKafkaConsumer(topicName, "dwd_traffic_unique_visitor_detail"),
                WatermarkStrategy.noWatermarks(),
                "unique_visitor_source");

        // TODO 3 Parse to JSON and drop records whose last_page_id is not null
        //        (keep only the first page of each session)
        SingleOutputStreamOperator<JSONObject> firstPageStream = pageLogStream.flatMap(new FlatMapFunction<String, JSONObject>() {
            @Override
            public void flatMap(String value, Collector<JSONObject> out) throws Exception {
                try {
                    JSONObject jsonObject = JSON.parseObject(value);
                    String lastPageID = jsonObject.getJSONObject("page").getString("last_page_id");
                    if (lastPageID == null) {
                        out.collect(jsonObject);
                    }
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });

        // TODO 4 Group by mid
        KeyedStream<JSONObject, String> keyedStream = firstPageStream.keyBy(new KeySelector<JSONObject, String>() {
            @Override
            public String getKey(JSONObject value) throws Exception {
                return value.getJSONObject("common").getString("mid");
            }
        });

        // TODO 5 Decide whether each record is a unique visitor
        SingleOutputStreamOperator<JSONObject> filteredStream = keyedStream.filter(new RichFilterFunction<JSONObject>() {
            ValueState<String> lastVisitDtState;

            @Override
            public void open(Configuration parameters) throws Exception {
                super.open(parameters);
                ValueStateDescriptor<String> stringValueStateDescriptor = new ValueStateDescriptor<>("last_visit_dt", String.class);
                // Set the state time-to-live (TTL)
                stringValueStateDescriptor.enableTimeToLive(StateTtlConfig
                        .newBuilder(Time.days(1L))
                        // Update type OnCreateAndWrite: the TTL clock restarts on every write,
                        // and the state is cleared 1 day after the last write
                        .setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)
                        .build());
                lastVisitDtState = getRuntimeContext().getState(stringValueStateDescriptor);
            }

            @Override
            public boolean filter(JSONObject jsonObject) throws Exception {
                String visitDt = DateFormatUtil.toDate(jsonObject.getLong("ts"));
                String lastVisitDt = lastVisitDtState.value();
                // Late data (stored last-visit date later than the visit date) is dropped as well
                if (lastVisitDt == null || (DateFormatUtil.toTs(lastVisitDt) < DateFormatUtil.toTs(visitDt))) {
                    lastVisitDtState.update(visitDt);
                    return true;
                }
                return false;
            }
        });

        // TODO 6 Write the unique-visitor records to the corresponding Kafka topic
        String targetTopic = "dwd_traffic_unique_visitor_detail";
        SingleOutputStreamOperator<String> sinkStream = filteredStream.map((MapFunction<JSONObject, String>) JSONAware::toJSONString);
        sinkStream.sinkTo(KafkaUtil.getKafkaProducer(targetTopic, "unique_visitor_trans"));

        // TODO 7 Run the job
        env.execute();
    }
}
```

P040

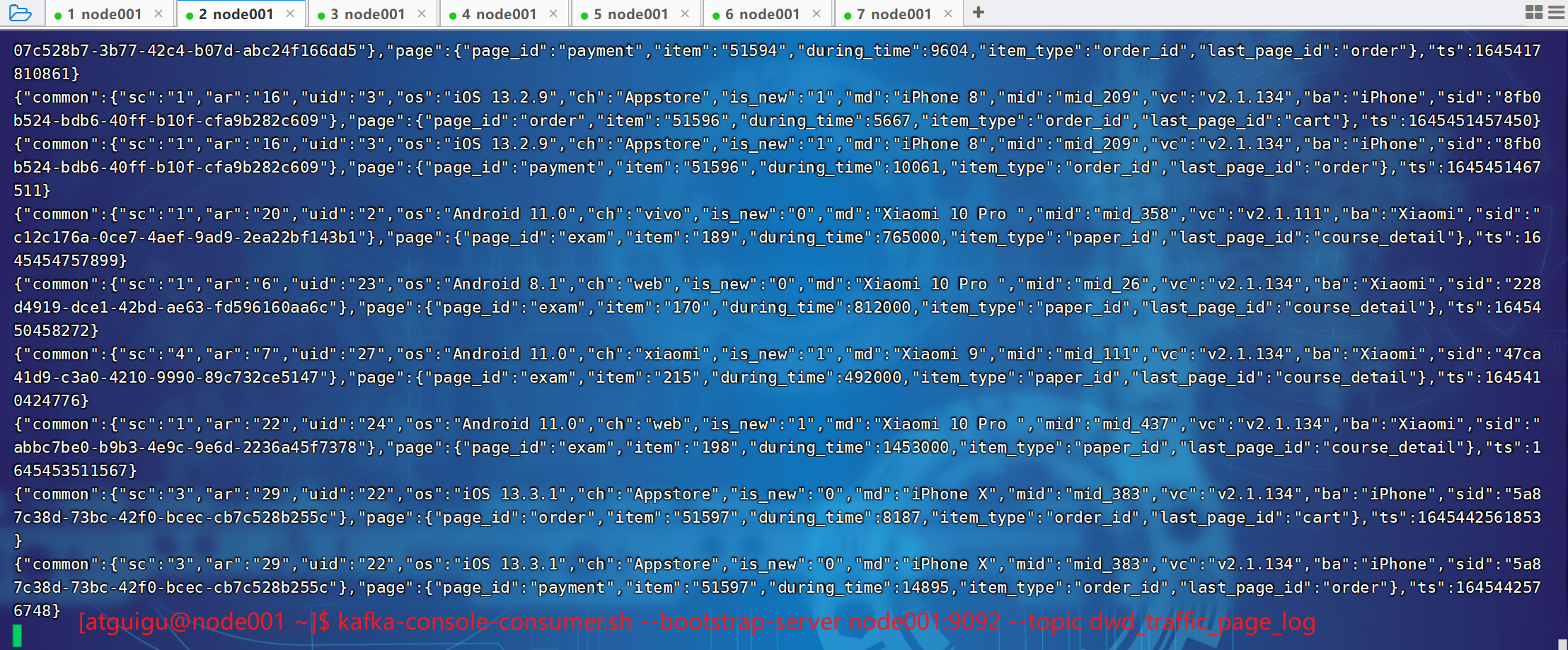

```
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic dwd_traffic_unique_visitor_detail
[atguigu@node001 ~]$ kafka-console-consumer.sh --bootstrap-server node001:9092 --topic dwd_traffic_page_log
[atguigu@node001 01-onlineEducation]$ cd /opt/module/data_mocker/01-onlineEducation/
[atguigu@node001 01-onlineEducation]$ java -jar edu2021-mock-2022-06-18.jar
```