Machine Learning Notes on Sigmoid Belief Networks: The Log-Likelihood Gradient

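- Introduction
- Review: Factorization of Bayesian Networks
- Sigmoid Belief Networks
- Derivation of the Log-Likelihood Gradient
- Problems in Computing the Gradient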

Introduction

Starting with this section, we introduce the Sigmoid Belief Network.

Review: Factorization of Bayesian Networks

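The Sigmoid Belief Network is essentially a Sigmoid Bayesian network, which tells us that it is a directed graphical model. As introduced in Probabilistic Graphical Models: Structural Representation of Bayesian Networks, describing a probabilistic graph with a Bayesian network means that there are explicit causal relationships among the variables/features.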

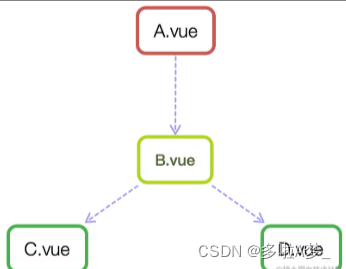

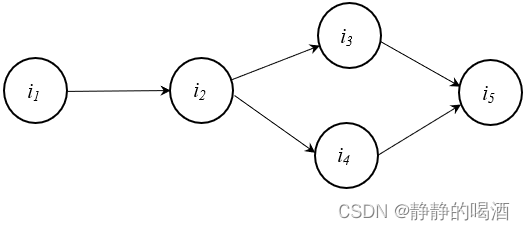

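Given a Bayesian network, its representation can be written down directly from the graph structure. (The representation can also be called the "joint probability distribution" / "probability density function" of the variables in the graph.) Consider the Bayesian network shown in the figure.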

The joint distribution $\mathcal P(\mathcal I)$ of the variables in this model can then be written as follows.

Note: $i_3, i_4 \mid i_2$ form a common-parent structure, so that factor can be split; $i_5 \mid i_3, i_4$ form a V-structure, so that factor cannot be split.

$$
\begin{aligned}
\mathcal P(\mathcal I) & = \mathcal P(i_1,i_2,i_3,i_4,i_5) \\
& = \mathcal P(i_1) \cdot \mathcal P(i_2 \mid i_1) \cdot \mathcal P(i_3,i_4 \mid i_2) \cdot \mathcal P(i_5 \mid i_3,i_4) \\
& = \mathcal P(i_1) \cdot \mathcal P(i_2 \mid i_1) \cdot \mathcal P(i_3 \mid i_2) \cdot \mathcal P(i_4 \mid i_2) \cdot \mathcal P(i_5 \mid i_3,i_4)
\end{aligned}
$$
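To make the factorization concrete, here is a minimal Python sketch of the five-node network above. The conditional probability tables are hypothetical (all numbers are made up for illustration). The joint is computed as the product of factors, including the root factor $\mathcal P(i_1)$. The sketch then checks numerically that the joint sums to 1 and that $i_3$ and $i_4$ are conditionally independent given $i_2$.

```python
import itertools

# Hypothetical CPTs (made-up numbers); each value is P(node = 1 | parents).
P_I1 = 0.6
P_I2 = {0: 0.3, 1: 0.8}                      # P(i2 = 1 | i1)
P_I3 = {0: 0.1, 1: 0.7}                      # P(i3 = 1 | i2)
P_I4 = {0: 0.5, 1: 0.4}                      # P(i4 = 1 | i2)
P_I5 = {(0, 0): 0.05, (0, 1): 0.5,           # P(i5 = 1 | i3, i4)
        (1, 0): 0.6, (1, 1): 0.9}


def bern(p1: float, x: int) -> float:
    """Probability of x in {0, 1} under a Bernoulli distribution with P(x=1) = p1."""
    return p1 if x == 1 else 1.0 - p1


def joint(i1: int, i2: int, i3: int, i4: int, i5: int) -> float:
    """P(i1) P(i2|i1) P(i3|i2) P(i4|i2) P(i5|i3,i4)."""
    return (bern(P_I1, i1) * bern(P_I2[i1], i2) * bern(P_I3[i2], i3)
            * bern(P_I4[i2], i4) * bern(P_I5[(i3, i4)], i5))


CONFIGS = list(itertools.product([0, 1], repeat=5))  # positions 0..4 = i1..i5


def marginal(fixed: dict) -> float:
    """Sum the joint over all configurations matching fixed = {position: value}."""
    return sum(joint(*c) for c in CONFIGS
               if all(c[pos] == val for pos, val in fixed.items()))


total = sum(joint(*c) for c in CONFIGS)
print(f"sum over all 32 configurations: {total:.6f}")

# Common-parent structure: P(i3, i4 | i2) should equal P(i3 | i2) * P(i4 | i2).
for i2, i3, i4 in itertools.product([0, 1], repeat=3):
    p_i2 = marginal({1: i2})
    lhs = marginal({1: i2, 2: i3, 3: i4}) / p_i2
    rhs = (marginal({1: i2, 2: i3}) / p_i2) * (marginal({1: i2, 3: i4}) / p_i2)
    print(f"i2={i2} i3={i3} i4={i4}: {lhs:.6f} vs {rhs:.6f}")
```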

Sigmoid Belief Networks

Judging from the name alone, "belief network" refers to a Bayesian network, and "Sigmoid" naturally refers to the Sigmoid function:

$$
\sigma(x) = \frac{1}{1 + \exp(-x)}
$$

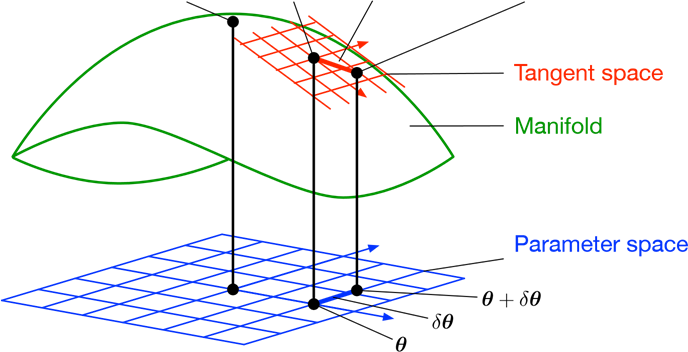
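A minimal Python sketch of the Sigmoid function follows. Branching on the sign of $x$ is an implementation choice, not something taken from the text. It avoids overflow in `math.exp` for large $|x|$ while computing the same function as the formula above.

```python
import math


def sigmoid(x: float) -> float:
    """sigma(x) = 1 / (1 + exp(-x)), evaluated without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0, rewrite as exp(x) / (1 + exp(x)) so exp never overflows.
    z = math.exp(x)
    return z / (1.0 + z)


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"sigma({x:+.1f}) = {sigmoid(x):.4f}")
print(sigmoid(-1000.0), sigmoid(1000.0))  # saturates at 0.0 and 1.0
```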

What does its model structure look like, and where does its representation come from? Consider the Sigmoid Belief Network shown in the figure.

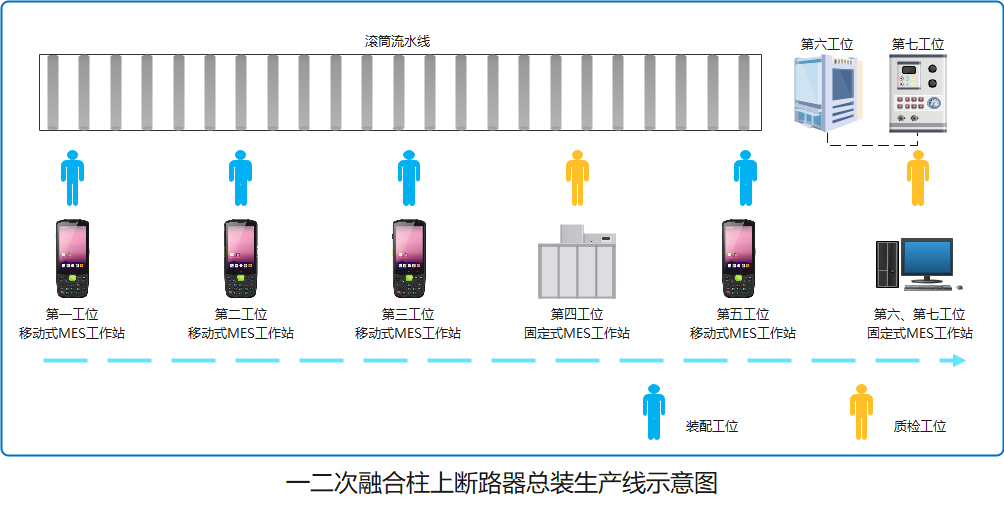

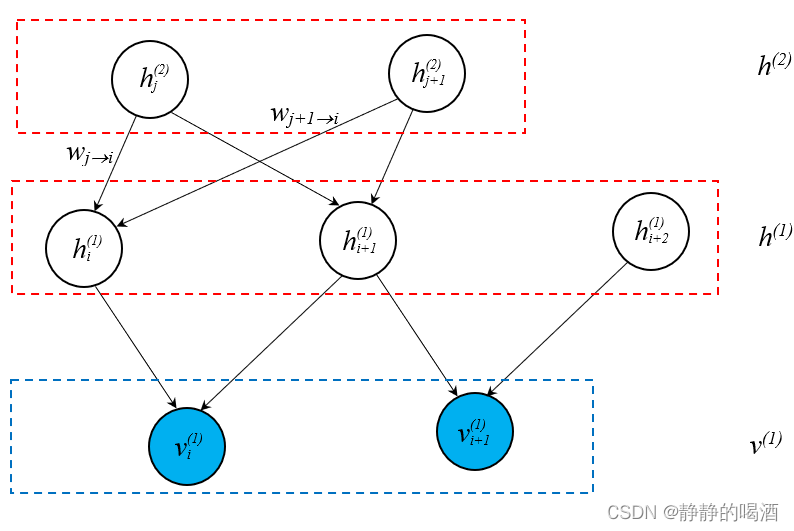

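Blue nodes denote observed variables, and the nodes inside the blue dashed box form the observed layer. White nodes denote hidden (latent) variables, which are organized into two hidden layers.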

Neal proposed this model in 1990 as a combination of the Boltzmann machine and the Bayesian network. Its advantages are:

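- The Sigmoid Belief Network is a directed graphical model, so the causal relations between nodes make it easy to find a sampling order (ancestral sampling). Sampling starts from the root nodes (in-degree zero) and proceeds in topological order.

  The nodes connected to a root form a common-parent structure. Once the root has been sampled (i.e., observed), the nodes connected to it are conditionally independent.
- The model has an analogue of the universal approximation theorem for feedforward neural networks. With at least one hidden layer (and enough hidden units), it can approximate any discrete distribution over the binary observed variables.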
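The ancestral-sampling procedure described above can be sketched in Python for a network shaped like the example figure. This is a minimal sketch under stated assumptions. The node names (`h2_j`, `h1_i`, `v_i`, ...), the weights `W` and the biases `B` are hypothetical values chosen for illustration. Each node is drawn as a Bernoulli variable with $P(s_k = 1 \mid \text{parents}) = \sigma(b_k + \sum_{j \to k} \mathcal W_{j \to k} s_j)$, the Sigmoid conditional defined later in this section, with the bias kept as a separate term.

```python
import math
import random

# Hypothetical network mirroring the figure; dict order is a topological order.
PARENTS = {"h2_j": [], "h2_j1": [],                      # hidden layer 2 (roots)
           "h1_i": ["h2_j", "h2_j1"], "h1_i1": ["h2_j", "h2_j1"],
           "h1_i2": [],                                  # root in hidden layer 1
           "v_i": ["h1_i", "h1_i1"], "v_i1": ["h1_i1", "h1_i2"]}
# Made-up parameters: W[(parent, child)] and one bias per node.
W = {("h2_j", "h1_i"): 1.5, ("h2_j1", "h1_i"): -1.0,
     ("h2_j", "h1_i1"): 0.5, ("h2_j1", "h1_i1"): 2.0,
     ("h1_i", "v_i"): 2.0, ("h1_i1", "v_i"): -1.5,
     ("h1_i1", "v_i1"): 1.0, ("h1_i2", "v_i1"): 1.0}
B = {"h2_j": 0.2, "h2_j1": -0.3, "h1_i": 0.0, "h1_i1": -0.5,
     "h1_i2": 0.4, "v_i": -0.2, "v_i1": -1.0}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def ancestral_sample(rng: random.Random) -> dict:
    """Draw one joint sample by visiting nodes so that parents come first."""
    s = {}
    for k, parents in PARENTS.items():
        a = B[k] + sum(W[(j, k)] * s[j] for j in parents)
        s[k] = 1 if rng.random() < sigmoid(a) else 0  # Bernoulli(sigma(a))
    return s


rng = random.Random(0)
samples = [ancestral_sample(rng) for _ in range(20000)]
freq = sum(s["h2_j"] for s in samples) / len(samples)
print(f"empirical P(h2_j=1) = {freq:.3f}, model = {sigmoid(B['h2_j']):.3f}")
```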

The set of random variables $\mathcal S$ is described as follows: $v$ denotes the set of observed variables, and $h$ denotes the set of hidden variables.

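Points to note:

- The parenthesized superscript here is a layer index, not a sample index. For example, $h_{i+1}^{(1)}$ denotes the random-variable node with index $i+1$ in hidden layer 1. In the later derivation, however, the parenthesized superscript does denote the sample index.
- As in Boltzmann machines and restricted Boltzmann machines, every node in the model follows a Bernoulli distribution.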

$$
\begin{aligned}
\mathcal S = \{v,h\} & = \{v^{(1)},h^{(1)},h^{(2)}\} \\
& = \{v_i^{(1)},v_{i+1}^{(1)},h_{i}^{(1)},h_{i+1}^{(1)},h_{i+2}^{(1)},h_{j}^{(2)},h_{j+1}^{(2)}\}
\end{aligned}
$$

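Since this is a Bayesian network, we can write down the joint distribution of the graph from the causal relations between nodes. As introduced in the structural representation of Bayesian networks, first find the nodes with in-degree zero. Then follow the topological order to write down the conditional distribution of each node given its parents: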

$$
\begin{aligned}
\mathcal P(\mathcal S) & = \mathcal P (v_i^{(1)},v_{i+1}^{(1)},h_{i}^{(1)},h_{i+1}^{(1)},h_{i+2}^{(1)},h_{j}^{(2)},h_{j+1}^{(2)}) \\
& = \mathcal P(h_j^{(2)}) \cdot \mathcal P(h_{j+1}^{(2)}) \cdot \mathcal P(h_{i}^{(1)} \mid h_{j}^{(2)},h_{j+1}^{(2)}) \cdot \mathcal P(h_{i+1}^{(1)} \mid h_{j}^{(2)},h_{j+1}^{(2)}) \\
& \quad \cdot \mathcal P(v_i^{(1)} \mid h_{i}^{(1)},h_{i+1}^{(1)}) \cdot \mathcal P(h_{i+2}^{(1)}) \cdot \mathcal P(v_{i+1}^{(1)} \mid h_{i+1}^{(1)},h_{i+2}^{(1)})
\end{aligned}
$$

By the definition of the Sigmoid Belief Network, the probability distribution of a node, taking $h_i^{(1)}$ as an example, is:

Note: $h_i^{(1)}$ has a causal relation with $h_j^{(2)}, h_{j+1}^{(2)}$, so this "probability distribution" clearly means the conditional distribution $\mathcal P(h_i^{(1)} \mid h_j^{(2)},h_{j+1}^{(2)})$. Keep in mind that $h_j^{(2)}, h_{j+1}^{(2)}$ in this expression are not probabilities. They are concrete values of the random variables: $h_j^{(2)},h_{j+1}^{(2)} \in \{0,1\}$.

$\sigma(-x) = 1 - \sigma(x)$ is a property of the Sigmoid function itself, since $1 - \frac{1}{1+e^{-x}} = \frac{e^{-x}}{1+e^{-x}} = \frac{1}{1+e^{x}}$.

$$
\mathcal P(h_i^{(1)} \mid h_j^{(2)},h_{j+1}^{(2)}) =\begin{cases} \mathcal P(h_i^{(1)} =1\mid h_j^{(2)},h_{j+1}^{(2)}) = \sigma \left(\mathcal W_{j \to i} \cdot h_j^{(2)} + \mathcal W_{j+1 \to i} \cdot h_{j+1}^{(2)} \right) \\ \begin{aligned} \mathcal P(h_i^{(1)} =0\mid h_j^{(2)},h_{j+1}^{(2)}) & = 1 - \sigma \left(\mathcal W_{j \to i} \cdot h_j^{(2)} + \mathcal W_{j+1 \to i} \cdot h_{j+1}^{(2)} \right) \\ & = \sigma \left[- \left(\mathcal W_{j \to i} \cdot h_j^{(2)} + \mathcal W_{j+1 \to i} \cdot h_{j+1}^{(2)} \right)\right] \end{aligned} \end{cases}
$$

In general form, the probability distribution of a node $h_i$ in the model is:

Here $\sum_{j \to i} \mathcal W_{j \to i} \cdot h_j$ is the linear combination of the nodes pointing to $h_i$ (its parents) with their corresponding weights.

$$
\mathcal P(h_i \mid h_j;j \to i) = \begin{cases} \mathcal P(h_i = 1 \mid h_j;j \to i) = \sigma \left(\sum_{j \to i} \mathcal W_{j \to i} \cdot h_j\right) \\ \mathcal P(h_i = 0\mid h_j;j \to i) = \sigma \left(- \sum_{j \to i} \mathcal W_{j \to i} \cdot h_j\right) \end{cases}
$$

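Since $h_i$ is a Bernoulli variable, the piecewise expression can be combined into a single formula. The factor $2h_i - 1$ equals $+1$ when $h_i = 1$ and $-1$ when $h_i = 0$: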

$$
\mathcal P(h_i \mid h_j;j \to i) = \sigma \left[(2h_i - 1)\sum_{j \to i} \mathcal W_{j \to i} \cdot h_j\right]
$$
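The single-formula form can be checked against the piecewise definition with a short Python sketch. The two parent weights are hypothetical values, and the bias is omitted to match the formula above. For every parent configuration, the function returns $\sigma(a)$ for $h_i = 1$ and $1 - \sigma(a)$ for $h_i = 0$, so the two values sum to 1.

```python
import itertools
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def cond_prob(h_i: int, parent_vals, weights) -> float:
    """P(h_i | parents) = sigma[(2 h_i - 1) * sum_j W_{j->i} h_j]."""
    a = sum(w * h for w, h in zip(weights, parent_vals))
    return sigmoid((2 * h_i - 1) * a)


weights = [1.5, -1.0]  # hypothetical W_{j->i}, W_{j+1->i}
for parents in itertools.product([0, 1], repeat=2):
    p1 = cond_prob(1, parents, weights)
    p0 = cond_prob(0, parents, weights)
    print(parents, f"P(h_i=1)={p1:.4f}", f"P(h_i=0)={p0:.4f}", f"sum={p1 + p0:.4f}")
```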

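For learning in the Sigmoid Belief Network, Neal originally proposed computing the gradient of the model parameters with MCMC sampling. In practice, MCMC sampling only works for model structures with a small number of random variables. As briefly discussed in Boltzmann Machines: MCMC-Based Gradient Computation, there are two problems. First, the computational cost grows exponentially as the number of random variables increases. Second, reaching the stationary distribution is extremely difficult.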

Revisit the Sigmoid Belief Network example above. $v_i^{(1)}, v_{i+1}^{(1)}$ are the observed random variables. They are provided by the samples, so, as in Boltzmann machines and restricted Boltzmann machines, they are observable. Take $v_i^{(1)}$ as an example. Given that $v_i^{(1)}$ is observed, $h_i^{(1)}$ and $h_{i+1}^{(1)}$ are not conditionally independent (a V-structure), so the posterior distribution of $h_i^{(1)}$ or $h_{i+1}^{(1)}$ cannot be computed directly.
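The V-structure effect can be seen numerically on the smallest possible case. The sketch below uses a hypothetical two-parent, one-child sigmoid network; the names `a`, `b`, `c` and the weights are made up. It enumerates the joint distribution and shows that the two parents are independent a priori but become dependent once the child is observed, which is why the posterior over hidden units does not factorize.

```python
import itertools
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def joint(a: int, b: int, c: int) -> float:
    """Hypothetical V-structure a -> c <- b with independent roots, P(a=1)=P(b=1)=0.5."""
    p_c1 = sigmoid(-2.0 + 2.5 * a + 2.5 * b)  # P(c = 1 | a, b)
    return 0.5 * 0.5 * (p_c1 if c == 1 else 1.0 - p_c1)


def prob(cond) -> float:
    """Total probability of the (a, b, c) configurations satisfying cond."""
    return sum(joint(*x) for x in itertools.product([0, 1], repeat=3) if cond(*x))


# Before observing c: P(a=1, b=1) equals P(a=1) * P(b=1).
prior_joint = prob(lambda a, b, c: a == 1 and b == 1)
prior_prod = prob(lambda a, b, c: a == 1) * prob(lambda a, b, c: b == 1)

# After observing c = 1: the posterior over (a, b) no longer factorizes.
pc = prob(lambda a, b, c: c == 1)
post_joint = prob(lambda a, b, c: a == 1 and b == 1 and c == 1) / pc
post_a = prob(lambda a, b, c: a == 1 and c == 1) / pc
post_b = prob(lambda a, b, c: b == 1 and c == 1) / pc
post_prod = post_a * post_b
print(f"prior:     {prior_joint:.4f} vs {prior_prod:.4f}")
print(f"given c=1: {post_joint:.4f} vs {post_prod:.4f}")
```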

Below, we derive the log-likelihood gradient with respect to the model parameters and examine how it relates to the posterior distribution.

Derivation of the Log-Likelihood Gradient

The conditional distribution of a random-variable node given its parents can be written as:

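Note: the node $s_k$ here may be either an observed or a hidden variable, and in both cases it relates to its parents in this way. For convenience in the derivation, the bias term $b$ of the linear combination is folded into $\mathcal W$. For notational convenience, the index $i$ has been renamed to $k$.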

$$
\begin{cases} \mathcal P(s_k \mid s_j;j \to k) = \sigma \left[s_k^* \cdot \sum_{j \to k} \mathcal W_{j \to k} \cdot s_j\right] \\ s_k^{*} = 2s_k - 1 \end{cases}
$$
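The bias absorption mentioned in the note can be written out explicitly. Assume an extra constant node $s_0 \equiv 1$ that points to every node; the symbol $s_0$ is introduced here only for illustration. The bias $b_k$ then becomes an ordinary weight $\mathcal W_{0 \to k}$:

$$
\sum_{j \to k} \mathcal W_{j \to k} \cdot s_j + b_k = \sum_{j \to k} \mathcal W_{j \to k} \cdot s_j + \mathcal W_{0 \to k} \cdot s_0, \qquad s_0 \equiv 1,\ \mathcal W_{0 \to k} := b_k
$$

The sum $\sum_{j \to k}$ in the formula above can therefore be read as also including $j = 0$.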

Correspondingly, the joint distribution $\mathcal P(\mathcal S)$ over the random variables $\mathcal S$ has the general form:

$$
\mathcal P(\mathcal S) = \prod_{s_k \in \mathcal S} \mathcal P(s_k \mid s_j;j \to k)
$$
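As a numerical check of this product form, the sketch below evaluates $\mathcal P(\mathcal S)$ for a hypothetical toy network shaped like the example figure. The node names such as `h2_j` and `v_i1`, the weights `W` and the biases `B` are all made up. The bias is kept as a separate term rather than folded into $\mathcal W$. The sketch multiplies one Sigmoid conditional per node (summing logs) and confirms that the probabilities of all $2^7$ configurations sum to 1.

```python
import itertools
import math

# Hypothetical toy network (dict order is a topological order) and made-up parameters.
PARENTS = {"h2_j": [], "h2_j1": [], "h1_i": ["h2_j", "h2_j1"],
           "h1_i1": ["h2_j", "h2_j1"], "h1_i2": [],
           "v_i": ["h1_i", "h1_i1"], "v_i1": ["h1_i1", "h1_i2"]}
W = {("h2_j", "h1_i"): 1.5, ("h2_j1", "h1_i"): -1.0,
     ("h2_j", "h1_i1"): 0.5, ("h2_j1", "h1_i1"): 2.0,
     ("h1_i", "v_i"): 2.0, ("h1_i1", "v_i"): -1.5,
     ("h1_i1", "v_i1"): 1.0, ("h1_i2", "v_i1"): 1.0}
B = {"h2_j": 0.2, "h2_j1": -0.3, "h1_i": 0.0, "h1_i1": -0.5,
     "h1_i2": 0.4, "v_i": -0.2, "v_i1": -1.0}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def log_joint(s: dict) -> float:
    """log P(S) = sum_k log sigma(s_k^* * a_k), with s_k^* = 2 s_k - 1."""
    total = 0.0
    for k, parents in PARENTS.items():
        a = B[k] + sum(W[(j, k)] * s[j] for j in parents)
        total += math.log(sigmoid((2 * s[k] - 1) * a))
    return total


total = 0.0
for bits in itertools.product([0, 1], repeat=len(PARENTS)):
    s = dict(zip(PARENTS, bits))
    total += math.exp(log_joint(s))
print(f"sum of P(S) over {2 ** len(PARENTS)} configurations: {total:.12f}")
```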

The log-likelihood of the observed variables is:

Setting: the dataset $\mathcal V$ contains $|\mathcal V| = N$ samples $\{v^{(1)},v^{(2)},\cdots,v^{(|\mathcal V|)}\}$. Each sample can be regarded as one concrete assignment of the set of observed random variables $v$.

$$
\text{Log-Likelihood: } \sum_{v^{(i)} \in \mathcal V} \log \mathcal P(v^{(i)})
$$
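For a network small enough to enumerate, the log-likelihood can be computed exactly by summing the joint over all hidden configurations, $\mathcal P(v^{(i)}) = \sum_{h^{(i)}} \mathcal P(v^{(i)}, h^{(i)})$. The sketch below does this for a hypothetical toy network shaped like the example figure. The node names, weights, biases and the four-sample dataset are all made up. The inner loop has $2^{|h|}$ terms, and this is the cost that becomes infeasible for realistic numbers of hidden units.

```python
import itertools
import math

# Hypothetical toy network (dict order is a topological order) and made-up parameters.
PARENTS = {"h2_j": [], "h2_j1": [], "h1_i": ["h2_j", "h2_j1"],
           "h1_i1": ["h2_j", "h2_j1"], "h1_i2": [],
           "v_i": ["h1_i", "h1_i1"], "v_i1": ["h1_i1", "h1_i2"]}
W = {("h2_j", "h1_i"): 1.5, ("h2_j1", "h1_i"): -1.0,
     ("h2_j", "h1_i1"): 0.5, ("h2_j1", "h1_i1"): 2.0,
     ("h1_i", "v_i"): 2.0, ("h1_i1", "v_i"): -1.5,
     ("h1_i1", "v_i1"): 1.0, ("h1_i2", "v_i1"): 1.0}
B = {"h2_j": 0.2, "h2_j1": -0.3, "h1_i": 0.0, "h1_i1": -0.5,
     "h1_i2": 0.4, "v_i": -0.2, "v_i1": -1.0}
VISIBLE = ["v_i", "v_i1"]
HIDDEN = [k for k in PARENTS if k not in VISIBLE]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def log_joint(s: dict) -> float:
    """log P(v, h) as a sum of log Sigmoid conditionals, one per node."""
    total = 0.0
    for k, parents in PARENTS.items():
        a = B[k] + sum(W[(j, k)] * s[j] for j in parents)
        total += math.log(sigmoid((2 * s[k] - 1) * a))
    return total


def log_marginal(v: dict) -> float:
    """log P(v) = log sum_h P(v, h), via a stable log-sum-exp over 2**|h| terms."""
    terms = []
    for bits in itertools.product([0, 1], repeat=len(HIDDEN)):
        s = dict(zip(HIDDEN, bits))
        s.update(v)
        terms.append(log_joint(s))
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


# Hypothetical dataset of N = 4 observed samples.
data = [{"v_i": 1, "v_i1": 0}, {"v_i": 0, "v_i1": 1},
        {"v_i": 1, "v_i1": 1}, {"v_i": 0, "v_i1": 0}]
log_lik = sum(log_marginal(v) for v in data)
print(f"log-likelihood = {log_lik:.4f}, average = {log_lik / len(data):.4f}")
```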

The gradient with respect to a particular weight $\mathcal W_{j \to i}$ is:

Here $\mathcal W_{j \to i}$ is the weight on the edge from a random variable $s_j$ to a random variable $s_i$.

$$
\frac{\partial}{\partial \mathcal W_{j \to i}} \left[\sum_{v^{(i)} \in \mathcal V}\log \mathcal P(v^{(i)})\right]
$$

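Expanding with the chain rule:

Because differentiation is linear, the sum over samples and the gradient can be swapped. For an integral rather than a sum, the corresponding result is the Leibniz rule for differentiating under the integral sign. For convenience in what follows, denote the log-likelihood gradient by $\Delta$.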

$$
\begin{aligned}
\Delta & = \frac{\partial}{\partial \mathcal W_{j \to i}} \left[\sum_{v^{(i)} \in \mathcal V}\log \mathcal P(v^{(i)})\right] \\
& = \sum_{v^{(i)} \in \mathcal V} \frac{\partial}{\partial \mathcal W_{j \to i}} \log \mathcal P(v^{(i)}) \\
& = \sum_{v^{(i)} \in \mathcal V} \frac{1}{\mathcal P(v^{(i)})} \cdot \frac{\partial \mathcal P(v^{(i)})}{\partial \mathcal W_{j \to i}}
\end{aligned}
$$

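Introduce the set of hidden variables $h^{(i)}$ that corresponds to $v^{(i)}$ in the Sigmoid Belief Network, i.e., marginalize $\mathcal P(v^{(i)}) = \sum_{h^{(i)}} \mathcal P(v^{(i)},h^{(i)})$: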

The sum and the derivative are again interchanged. By the definition of conditional probability, substitute $\frac{1}{\mathcal P(v^{(i)})} = \frac{\mathcal P(h^{(i)} \mid v^{(i)})}{\mathcal P(v^{(i)},h^{(i)})}$. Here $\mathcal P(v^{(i)},h^{(i)})$ is simply the joint distribution of all random variables (hidden and observed) for one sample. We denote that set by $\mathcal S^{(i)}$.

$$
\begin{aligned}
\Delta & = \sum_{v^{(i)} \in \mathcal V} \frac{1}{\mathcal P(v^{(i)})} \cdot \frac{\partial}{\partial \mathcal W_{j \to i}} \left[\sum_{h^{(i)}} \mathcal P(v^{(i)},h^{(i)})\right] \\
& = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}} \frac{1}{\mathcal P(v^{(i)})} \cdot \frac{\partial}{\partial \mathcal W_{j \to i}} \mathcal P(v^{(i)},h^{(i)}) \\
& = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}} \frac{\mathcal P(h^{(i)} \mid v^{(i)})}{\mathcal P(v^{(i)},h^{(i)})} \cdot \frac{\partial}{\partial \mathcal W_{j \to i}} \mathcal P(v^{(i)},h^{(i)}) \\
& = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\mathcal P(\mathcal S^{(i)})} \cdot \frac{\partial \mathcal P(\mathcal S^{(i)})}{\partial \mathcal W_{j \to i}}
\end{aligned}
$$

Expanding $\mathcal P(\mathcal S^{(i)})$ with the general formula for the joint distribution gives:

The product $\prod_{s_k^{(i)} \in \mathcal S^{(i)}}\mathcal P(s_k^{(i)}\mid s_j^{(i)};j \to k)$ has one factor per random variable in $\mathcal S^{(i)}$. Only one factor, $\mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)$, depends on $\mathcal W_{j \to i}$, and all the others can be treated as constants. Let $\Omega = \prod_{s_k^{(i)} \in \mathcal S^{(i)},\, s_k^{(i)} \neq s_i^{(i)}}\mathcal P(s_k^{(i)}\mid s_j^{(i)};j \to k)$ denote the product of all factors other than $\mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)$.

$$
\begin{aligned}
\Delta & = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\prod_{s_k^{(i)} \in \mathcal S^{(i)}}\mathcal P(s_k ^{(i)}\mid s_j^{(i)};j \to k)} \cdot \frac{\partial}{\partial \mathcal W_{j \to i}} \left[\prod_{s_k^{(i)} \in \mathcal S^{(i)}}\mathcal P(s_k^{(i)} \mid s_j^{(i)};j \to k)\right] \\
& = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\Omega \cdot \mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)} \cdot \left[\Omega \cdot \frac{\partial}{\partial \mathcal W_{j \to i}} \mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)\right] \\
& = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)} \cdot \left[ \frac{\partial}{\partial \mathcal W_{j \to i}} \mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)\right]
\end{aligned}
$$

The factor $\mathcal P(s_i^{(i)} \mid s_j^{(i)};j \to i)$ is simply the conditional distribution of node $s_i$ given its parents. Substituting the Sigmoid form gives:

$$
\Delta = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\sigma \left[(2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right]}\left\{\frac{\partial}{\partial \mathcal W_{j \to i}} \sigma \left[(2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right]\right\}
$$

We differentiate with the chain rule again. The derivative of the Sigmoid function is:

$$
\begin{aligned}
\frac{\partial}{\partial x} \left[\sigma(x)\right] & = \frac{e^{-x}}{(1 + e^{-x})^2} = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}} \\
& = \sigma(x) \cdot \sigma(-x)
\end{aligned}
$$
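The closed-form derivative $\sigma'(x) = \sigma(x)\,\sigma(-x)$ can be sanity-checked numerically. The sketch below compares it with a central finite difference; the step size `eps` is an arbitrary choice. It also compares the closed form with the equivalent form $\sigma(x)(1 - \sigma(x))$.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Closed form from the derivation: sigma'(x) = sigma(x) * sigma(-x)."""
    return sigmoid(x) * sigmoid(-x)


def central_difference(f, x: float, eps: float = 1e-6) -> float:
    """Numerical derivative (f(x + eps) - f(x - eps)) / (2 eps)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


for x in (-3.0, -0.5, 0.0, 1.2, 4.0):
    print(f"x={x:+.1f}  closed form={sigmoid_grad(x):.8f}  "
          f"numeric={central_difference(sigmoid, x):.8f}")
```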

Then $\Delta$ can be written as:

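As before, differentiate $\sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}$ with respect to $\mathcal W_{j \to i}$. Only one term involves $\mathcal W_{j \to i}$, and the other terms are treated as constants. This inner derivative is therefore $s_j^{(i)}$, and the chain rule also contributes the factor $(2s_i^{(i)} - 1)$.

P.S. The subscript $j$ is reused here. Inside the sum it ranges over all parents of $s_i$, whereas in $\mathcal W_{j \to i}$ it denotes the one parent whose weight we differentiate with respect to.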

$$
\Delta = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \frac{1}{\sigma \left[(2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right]} \cdot \sigma \left[(2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right] \cdot \sigma \left[- (2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right] \cdot (2s_i^{(i)} - 1) \cdot s_j^{(i)}
$$

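Cancelling the common factor $\sigma\left[(2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right]$ and tidying up gives: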

$$
\frac{\partial}{\partial \mathcal W_{j \to i}} \left[\sum_{v^{(i)} \in \mathcal V}\log \mathcal P(v^{(i)})\right] = \sum_{v^{(i)} \in \mathcal V} \sum_{h^{(i)}}\mathcal P(h^{(i)} \mid v^{(i)}) \cdot \sigma \left[- (2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right] \cdot (2s_i^{(i)} - 1) \cdot s_j^{(i)}
$$
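On a network small enough to enumerate, the final formula can be checked directly. The sketch below uses a hypothetical toy network and dataset with made-up numbers. The bias is kept separate from $\mathcal W$ and is not differentiated. The sketch computes the posterior $\mathcal P(h^{(i)} \mid v^{(i)})$ by brute force, evaluates the gradient expression above for every weight, and compares it with central finite differences of the log-likelihood. The brute-force posterior is exactly the step that stops scaling as the number of hidden units grows.

```python
import itertools
import math

# Hypothetical toy network (dict order is a topological order) and made-up parameters.
PARENTS = {"h2_j": [], "h2_j1": [], "h1_i": ["h2_j", "h2_j1"],
           "h1_i1": ["h2_j", "h2_j1"], "h1_i2": [],
           "v_i": ["h1_i", "h1_i1"], "v_i1": ["h1_i1", "h1_i2"]}
W0 = {("h2_j", "h1_i"): 1.5, ("h2_j1", "h1_i"): -1.0,
      ("h2_j", "h1_i1"): 0.5, ("h2_j1", "h1_i1"): 2.0,
      ("h1_i", "v_i"): 2.0, ("h1_i1", "v_i"): -1.5,
      ("h1_i1", "v_i1"): 1.0, ("h1_i2", "v_i1"): 1.0}
B = {"h2_j": 0.2, "h2_j1": -0.3, "h1_i": 0.0, "h1_i1": -0.5,
     "h1_i2": 0.4, "v_i": -0.2, "v_i1": -1.0}
VISIBLE = ["v_i", "v_i1"]
HIDDEN = [k for k in PARENTS if k not in VISIBLE]
DATA = [{"v_i": 1, "v_i1": 0}, {"v_i": 0, "v_i1": 1}, {"v_i": 1, "v_i1": 1}]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def pre_activation(s: dict, k: str, W: dict) -> float:
    """a_k = b_k + sum over parents j of W_{j->k} * s_j."""
    return B[k] + sum(W[(j, k)] * s[j] for j in PARENTS[k])


def log_joint(s: dict, W: dict) -> float:
    return sum(math.log(sigmoid((2 * s[k] - 1) * pre_activation(s, k, W))) for k in PARENTS)


def completions(v: dict, W: dict) -> list:
    """All (s, log P(s)) with the visible units clamped to v."""
    out = []
    for bits in itertools.product([0, 1], repeat=len(HIDDEN)):
        s = dict(zip(HIDDEN, bits))
        s.update(v)
        out.append((s, log_joint(s, W)))
    return out


def log_likelihood(data: list, W: dict) -> float:
    total = 0.0
    for v in data:
        lj = [l for _, l in completions(v, W)]
        m = max(lj)
        total += m + math.log(sum(math.exp(l - m) for l in lj))
    return total


def exact_grad(data: list, W: dict) -> dict:
    """sum_v sum_h P(h|v) * sigma(-s_i^* a_i) * s_i^* * s_j for every W_{j->i}."""
    g = {key: 0.0 for key in W}
    for v in data:
        comp = completions(v, W)
        m = max(l for _, l in comp)
        z = sum(math.exp(l - m) for _, l in comp)
        for s, l in comp:
            post = math.exp(l - m) / z  # P(h | v), by brute-force enumeration
            for j, i in W:
                s_star = 2 * s[i] - 1
                g[(j, i)] += post * sigmoid(-s_star * pre_activation(s, i, W)) * s_star * s[j]
    return g


def finite_diff_grad(data: list, W: dict, eps: float = 1e-6) -> dict:
    g = {}
    for key in W:
        w_plus, w_minus = dict(W), dict(W)
        w_plus[key] += eps
        w_minus[key] -= eps
        g[key] = (log_likelihood(data, w_plus) - log_likelihood(data, w_minus)) / (2 * eps)
    return g


analytic = exact_grad(DATA, W0)
numeric = finite_diff_grad(DATA, W0)
for key in W0:
    print(key, f"{analytic[key]:+.6f}", f"{numeric[key]:+.6f}")
```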

Problems in Computing the Gradient

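The gradient with respect to $\mathcal W_{j \to i}$ contains the posterior of the hidden variables, $\mathcal P(h^{(i)} \mid v^{(i)})$. Because of the V-structures, the hidden variables are not conditionally independent given the observed variables. As a result, $\mathcal P(h^{(i)} \mid v^{(i)})$ cannot be computed directly; exact computation would require summing over all $2^{|h^{(i)}|}$ configurations of the hidden variables.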

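For small sets of random variables, the gradient can be approximated with MCMC:

Here the log-likelihood is multiplied by the constant $\frac{1}{N}$, which was omitted earlier; this does not change the direction of the gradient. The step again uses the Monte Carlo approximation in reverse: an average over samples is read as an expectation.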

$$
\begin{aligned}
\frac{\partial}{\partial \mathcal W_{j \to i}} \left[\frac{1}{N}\sum_{v^{(i)} \in \mathcal V}\log \mathcal P(v^{(i)})\right] & = \frac{1}{N}\sum_{v^{(i)} \in \mathcal V} \mathbb E_{\mathcal P_{model}(h^{(i)} \mid v^{(i)})} \left[\sigma \left(- (2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right) \cdot (2s_i^{(i)} - 1) \cdot s_j^{(i)} \right] \\
& \approx \mathbb E_{\mathcal P_{data}(v^{(i)} \in \mathcal V)} \left\{\mathbb E_{\mathcal P_{model}(h^{(i)} \mid v^{(i)})} \left[\sigma \left(- (2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right) \cdot (2s_i^{(i)} - 1) \cdot s_j^{(i)} \right]\right\} \\
& = \begin{cases} \mathbb E_{\mathcal P_{data}} \left[\sigma \left(- (2s_i^{(i)} - 1)\cdot \sum_{j \to i} \mathcal W_{j \to i} \cdot s_j^{(i)}\right) \cdot (2s_i^{(i)} - 1) \cdot s_j^{(i)}\right] \\ \mathcal P_{data} \Rightarrow \mathcal P_{data}(v^{(i)} \in \mathcal V) \cdot \mathcal P_{model}(h^{(i)} \mid v^{(i)}) \end{cases}
\end{aligned}
$$
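The inner expectation $\mathbb E_{\mathcal P_{model}(h^{(i)} \mid v^{(i)})}[\cdot]$ can be approximated by MCMC. The sketch below assumes Gibbs sampling as the MCMC method; the text only says "MCMC", and Gibbs is one standard choice. It uses a hypothetical toy network and a single made-up observation ($N = 1$). Each hidden unit is resampled from its full conditional while the visible units stay clamped. The brute-force reference is included only to check the sketch on this tiny network, and it is exactly the computation that is unavailable at realistic scale.

```python
import itertools
import math
import random

# Hypothetical toy network (dict order is a topological order) and made-up parameters.
PARENTS = {"h2_j": [], "h2_j1": [], "h1_i": ["h2_j", "h2_j1"],
           "h1_i1": ["h2_j", "h2_j1"], "h1_i2": [],
           "v_i": ["h1_i", "h1_i1"], "v_i1": ["h1_i1", "h1_i2"]}
W = {("h2_j", "h1_i"): 1.5, ("h2_j1", "h1_i"): -1.0,
     ("h2_j", "h1_i1"): 0.5, ("h2_j1", "h1_i1"): 2.0,
     ("h1_i", "v_i"): 2.0, ("h1_i1", "v_i"): -1.5,
     ("h1_i1", "v_i1"): 1.0, ("h1_i2", "v_i1"): 1.0}
B = {"h2_j": 0.2, "h2_j1": -0.3, "h1_i": 0.0, "h1_i1": -0.5,
     "h1_i2": 0.4, "v_i": -0.2, "v_i1": -1.0}
VISIBLE = ["v_i", "v_i1"]
HIDDEN = [k for k in PARENTS if k not in VISIBLE]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def pre_activation(s: dict, k: str) -> float:
    """a_k = b_k + sum over parents j of W_{j->k} * s_j."""
    return B[k] + sum(W[(j, k)] * s[j] for j in PARENTS[k])


def log_joint(s: dict) -> float:
    return sum(math.log(sigmoid((2 * s[k] - 1) * pre_activation(s, k))) for k in PARENTS)


def grad_term(s: dict, j: str, i: str) -> float:
    """sigma(-s_i^* a_i) * s_i^* * s_j for one full configuration s."""
    s_star = 2 * s[i] - 1
    return sigmoid(-s_star * pre_activation(s, i)) * s_star * s[j]


def gibbs_grad(v: dict, n_sweeps: int = 10000, burn_in: int = 500, seed: int = 0) -> dict:
    """Estimate E_{P(h|v)}[grad_term] for every weight with a Gibbs sampler over h."""
    rng = random.Random(seed)
    s = {k: rng.randint(0, 1) for k in HIDDEN}
    s.update(v)  # visible units stay clamped to the observation
    acc = {key: 0.0 for key in W}
    for sweep in range(burn_in + n_sweeps):
        for k in HIDDEN:
            s[k] = 1
            lj1 = log_joint(s)
            s[k] = 0
            lj0 = log_joint(s)
            # P(h_k=1 | rest) = sigma(log P(S with h_k=1) - log P(S with h_k=0))
            s[k] = 1 if rng.random() < sigmoid(lj1 - lj0) else 0
        if sweep >= burn_in:
            for j, i in W:
                acc[(j, i)] += grad_term(s, j, i)
    return {key: total / n_sweeps for key, total in acc.items()}


def exact_grad(v: dict) -> dict:
    """Brute-force reference over all 2**len(HIDDEN) hidden states (toy sizes only)."""
    states = []
    for bits in itertools.product([0, 1], repeat=len(HIDDEN)):
        s = dict(zip(HIDDEN, bits))
        s.update(v)
        states.append((s, math.exp(log_joint(s))))
    z = sum(p for _, p in states)
    return {(j, i): sum(p / z * grad_term(s, j, i) for s, p in states) for j, i in W}


v = {"v_i": 1, "v_i1": 0}  # one hypothetical observation (N = 1)
est, ref = gibbs_grad(v), exact_grad(v)
for key in W:
    print(key, f"Gibbs {est[key]:+.4f}", f"exact {ref[key]:+.4f}")
```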

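This expression has essentially the same form as the positive phase described in Boltzmann Machines: Basic Introduction. In short: when the gradient of the model parameters is computed in the conventional way, it contains the posterior of the hidden variables and therefore cannot be computed directly. It can be approximated by MCMC sampling, whose drawbacks are not discussed further here.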

The next section introduces the Wake-Sleep algorithm.
