1. Ideally, set up Python 3.7 and PyTorch 1.0 as the project's documentation requires.

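2. [Solved] FutureWarning: The module torch.distributed.launch is deprecated and will be removed in future.

torch.distributed.launch is deprecated; use torchrun instead.

Solution:

Replace torch.distributed.launch in the training launch command with torchrun (equivalently, python -m torch.distributed.run). For example, if the original command is: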

python -m torch.distributed.launch --nproc_per_node=2 train.py

change it to:

python -m torch.distributed.run --use-env --nproc_per_node=2 train.py

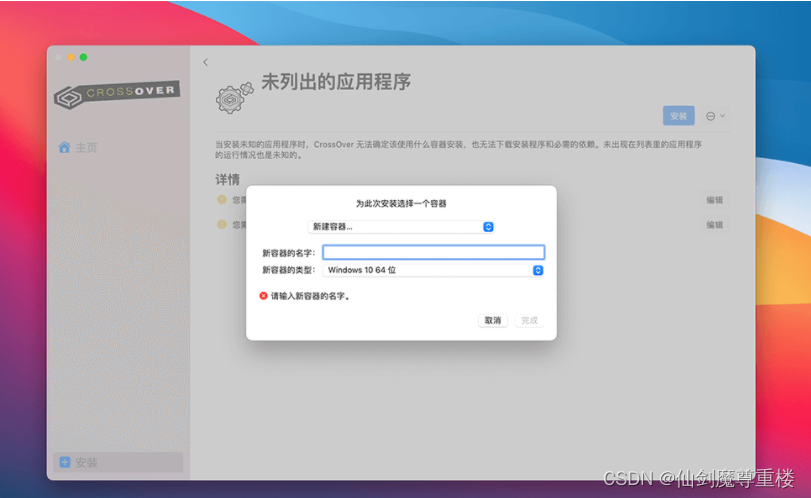

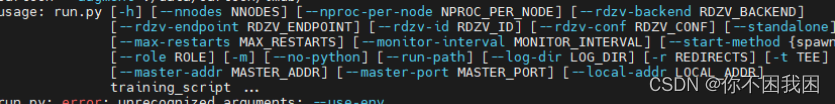

If it still fails with an error like the one below, remove --use-env from the command:

torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 2344619) of binary: /home/vgg/anaco

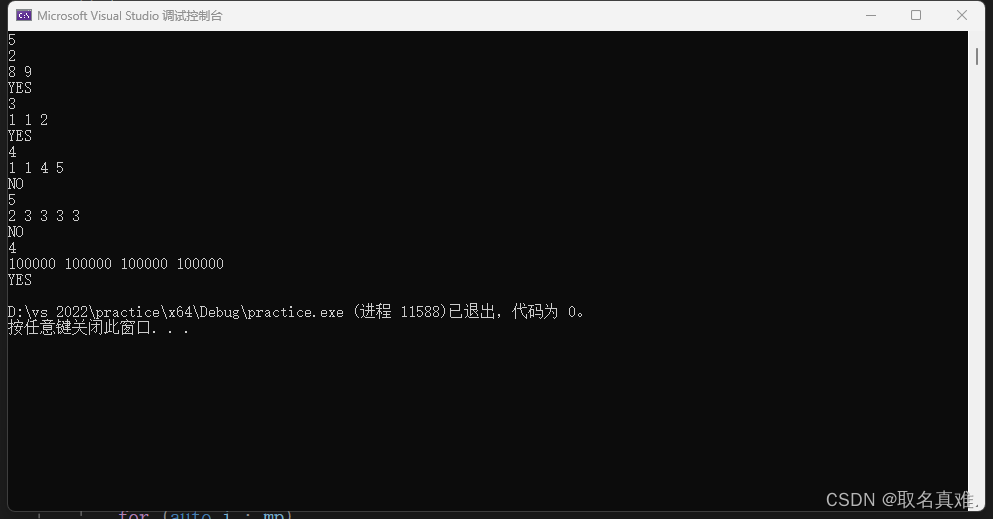
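A script written for torch.distributed.launch often reads its local rank from a --local_rank command-line argument, whereas torchrun (torch.distributed.run) supplies it through the LOCAL_RANK environment variable. The sketch below shows one way a training script could accept either form. `resolve_local_rank` is a hypothetical helper name, not something taken from the original train.py, and whether this mismatch is what caused the failure above is an assumption.

```python
import argparse
import os


def resolve_local_rank(argv=None):
    """Return the local rank from --local_rank if given, else from LOCAL_RANK."""
    parser = argparse.ArgumentParser()
    # torch.distributed.launch (without --use_env) passes --local_rank=N;
    # newer releases spell it --local-rank, so both spellings are accepted.
    parser.add_argument("--local_rank", "--local-rank", type=int, default=None)
    # parse_known_args ignores the script's other arguments in this sketch.
    args, _unknown = parser.parse_known_args(argv)
    if args.local_rank is not None:
        return args.local_rank
    # torchrun exports LOCAL_RANK for every worker process.
    # Falling back to 0 covers a plain single-process run.
    return int(os.environ.get("LOCAL_RANK", 0))
```

In a training script, the returned value would typically be passed to torch.cuda.set_device before the process group is initialized.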

3. [Solved] ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 1447037) of binary: /usr/bin/python

Solution:

When constructing the DataLoader, leave the shuffle parameter at its default of False.
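The note does not say why shuffle matters. One plausible cause, stated here as an assumption, is that distributed training scripts usually hand a DistributedSampler to the DataLoader, and DataLoader raises a ValueError when a sampler is combined with shuffle=True. A minimal sketch with a toy dataset (all names are illustrative); num_replicas and rank are passed explicitly only so that it runs without initializing a process group:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler

# Toy dataset of 8 samples standing in for the real training set.
dataset = TensorDataset(torch.arange(8, dtype=torch.float32).unsqueeze(1))

# The sampler does the shuffling and gives each rank its own shard.
sampler = DistributedSampler(dataset, num_replicas=2, rank=0)

# shuffle is left at its default (False) because a sampler is supplied.
loader = DataLoader(dataset, batch_size=2, sampler=sampler)

for epoch in range(2):
    # Reseed the sampler so each epoch sees a different order.
    sampler.set_epoch(epoch)
    for (batch,) in loader:
        pass  # the training step would go here

# Combining a sampler with shuffle=True is rejected by DataLoader.
try:
    DataLoader(dataset, batch_size=2, sampler=sampler, shuffle=True)
    conflict_raised = False
except ValueError:
    conflict_raised = True
```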

4. [Solved] torch.distributed.elastic.multiprocessing.api:Sending process 2344620 closing signal SIGTERM

Running on a single GPU is enough; see item 6 for how.

5. [Solved] module 'progressbar' has no attribute 'Variable'

Solution:

Uninstall both the progressbar2 and progressbar packages, then reinstall them.

pip uninstall progressbar2

pip uninstall progressbar

Reinstall; an older version of progressbar2 is recommended:

pip install progressbar2==3.51

pip install progressbar
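Both the progressbar and progressbar2 distributions install a module named progressbar, and Variable comes from progressbar2, so it can help to check which one Python actually imports after reinstalling. A small sketch of such a check; `progressbar_has_variable` is a hypothetical helper name:

```python
import importlib


def progressbar_has_variable():
    """Return True/False for whether progressbar.Variable exists, or None if not installed."""
    try:
        module = importlib.import_module("progressbar")
    except ImportError:
        # Neither progressbar nor progressbar2 is importable.
        return None
    # The Variable attribute is what the failing code was looking for.
    return hasattr(module, "Variable")
```

If this returns False, the imported module is probably not the progressbar2 one, and the original error is likely to come back.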

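6. [Solved] RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cuda:1! (when checking argument for argument weight in method wrapper__cudnn_convolution)

Cause: the tensors taking part in the operation are not on the same GPU. Either move everything onto the same GPU or simply run on a single GPU. To run on a single GPU, set CUDA_VISIBLE_DEVICES=0 and --nproc_per_node=1 in the launch command, for example:

CUDA_VISIBLE_DEVICES=0 python -m torch.distributed.run --nproc_per_node=1 train.py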
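For the other option, moving everything to the same device, the usual pattern is to pick one torch.device and send both the model and every input batch to it. A minimal sketch with a toy convolution layer standing in for the real network (the layer, shapes, and function name are illustrative assumptions); it falls back to the CPU when no GPU is available:

```python
import torch
import torch.nn as nn


def forward_on_one_device():
    """Run a toy forward pass with the model and its input on the same device."""
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # The model weights are moved to the chosen device once.
    model = nn.Conv2d(3, 8, kernel_size=3).to(device)
    # Each input batch must be moved to the same device before the forward pass.
    batch = torch.randn(4, 3, 32, 32).to(device)
    output = model(batch)
    return model, output
```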

7. [Solved] CUDA out of memory.

Check GPU memory usage with gpustat:

gpustat

Then switch to a GPU that has free memory:

CUDA_VISIBLE_DEVICES=<index of a free GPU>
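Picking the GPU by eye can also be scripted. The sketch below is an alternative to reading gpustat output: it parses the CSV that nvidia-smi prints for the index and memory.free fields and returns the index with the most free memory. The function names are hypothetical, and the parser assumes one "index, free MiB" pair per line.

```python
import subprocess

# nvidia-smi query that prints one "index, free_MiB" line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=index,memory.free",
         "--format=csv,noheader,nounits"]


def parse_free_memory(csv_text):
    """Return {gpu_index: free_MiB} parsed from the nvidia-smi CSV output."""
    free = {}
    for line in csv_text.strip().splitlines():
        index, mem = (field.strip() for field in line.split(","))
        free[int(index)] = int(mem)
    return free


def pick_freest_gpu(csv_text):
    """Return the index of the GPU with the most free memory."""
    free = parse_free_memory(csv_text)
    return max(free, key=free.get)


def query_nvidia_smi():
    """Run nvidia-smi and return its raw CSV output (needs an NVIDIA driver)."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
```

Calling pick_freest_gpu(query_nvidia_smi()) on the training machine gives a value that can be used for CUDA_VISIBLE_DEVICES.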