文章目录

- 1.基本的合流操作

- 2.1联合(Union)

- 2.2 连接(Connect)

- 2.基于时间的合流——双流联结(Join)

- 2.1 窗口联结(Window Join)

- 2.2 间隔联结(Interval Join)

- 2.3 窗口同组联结(Window CoGroup)

💎💎💎💎💎

更多资源链接,欢迎访问作者gitee仓库:https://gitee.com/fanggaolei/learning-notes-warehouse/tree/master

1.基本的合流操作

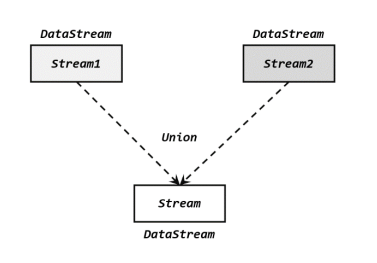

2.1联合(Union)

在代码中,我们只要基于 DataStream 直接调用.union()方法,传入其他 DataStream 作为参数,就可以实现流的联合了;得到的依然是一个 DataStream:

stream1.union(stream2, stream3, ...)

注意:union()的参数可以是多个 DataStream,所以联合操作可以实现多条流的合并。

注意:对于合流之后的水位线,也是要以最小的那个为准

数据类型不能改变

import com.atguigu.chapter05.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class UnionTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream1 = env.socketTextStream("hadoop102", 7777)

.map(data -> {

String[] field = data.split(",");

return new Event(field[0].trim(), field[1].trim(), Long.valueOf(field[2].trim()));

})

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(2))

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event element, long recordTimestamp) {

return element.timestamp;

}

})

);

stream1.print("stream1");

SingleOutputStreamOperator<Event> stream2 = env.socketTextStream("hadoop103", 7777)

.map(data -> {

String[] field = data.split(",");

return new Event(field[0].trim(), field[1].trim(), Long.valueOf(field[2].trim()));

})

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event element, long recordTimestamp) {

return element.timestamp;

}

})

);

stream2.print("stream2");

// 合并两条流

stream1.union(stream2)

.process(new ProcessFunction<Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

out.collect("水位线:" + ctx.timerService().currentWatermark());

}

})

.print();

env.execute();

}

}

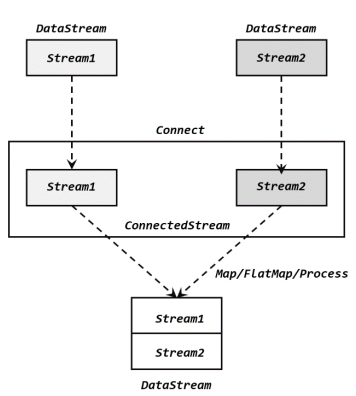

2.2 连接(Connect)

1.连接流(ConnectedStreams)

import org.apache.flink.streaming.api.datastream.ConnectedStreams;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

public class ConnectTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStream<Integer> stream1 = env.fromElements(1,2,3);

DataStream<Long> stream2 = env.fromElements(1L,2L,3L);

ConnectedStreams<Integer, Long> connectedStreams = stream1.connect(stream2);

SingleOutputStreamOperator<String> result = connectedStreams.map(new CoMapFunction<Integer, Long, String>() {

@Override

public String map1(Integer value) {

return "Integer: " + value;

}

@Override

public String map2(Long value) {

return "Long: " + value;

}

});

result.print();

env.execute();

}

}

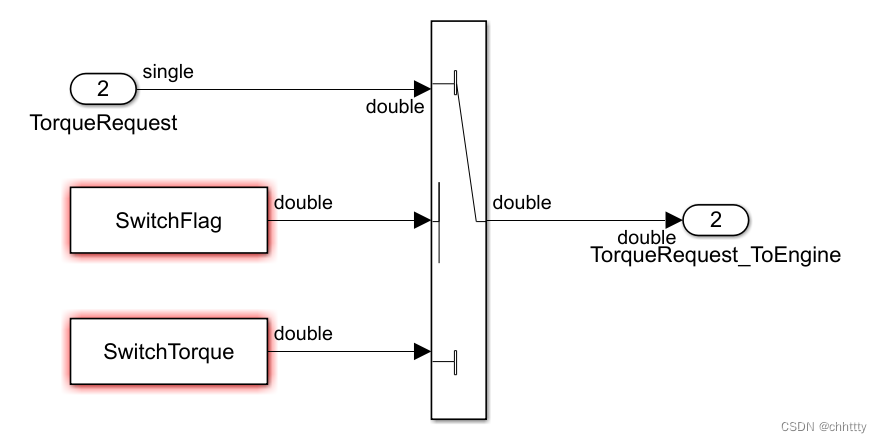

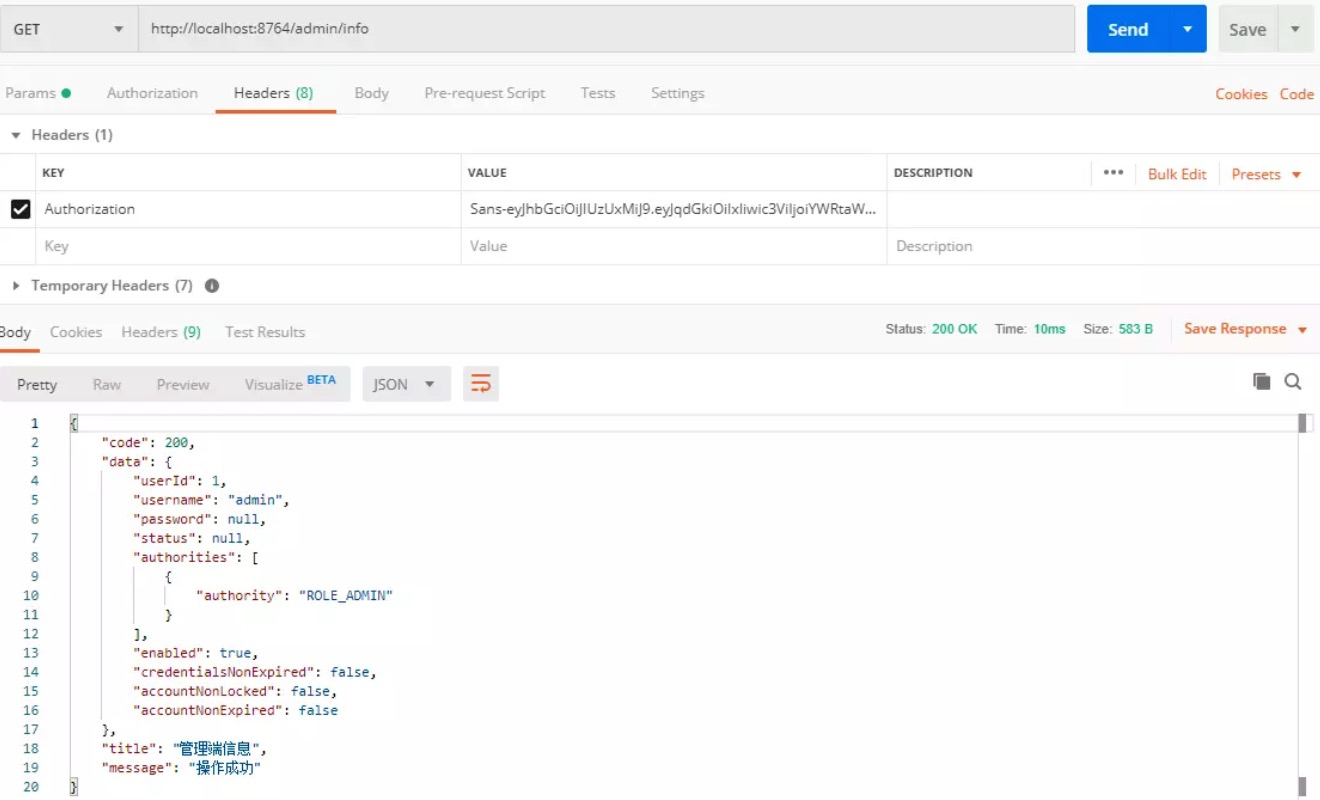

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-ejyQTMlQ-1671785198433)(https://pic-1313413291.cos.ap-nanjing.myqcloud.com/image-20221223140620202.png)]

2.CoProcessFunction

对于连接流 ConnectedStreams 的处理操作,需要分别定义对两条流的处理转换,因此接口中就会有两个相同的方法需要实现,用数字“1”“2”区分,在两条流中的数据到来时分别调用。我们把这种接口叫作“协同处理函数”(co-process function)。

抽象类 CoProcessFunction 在源码中定义如下:

public abstract class CoProcessFunction<IN1, IN2, OUT> extends

AbstractRichFunction {

public abstract void processElement1(IN1 value, Context ctx, Collector<OUT> out) throws Exception;

public abstract void processElement2(IN2 value, Context ctx, Collector<OUT> out) throws Exception;

public void onTimer(long timestamp, OnTimerContext ctx, Collector<OUT> out) throws Exception {}

public abstract class Context {...}

}

我们可以看到,很明显 CoProcessFunction 也是“处理函数”家族中的一员,用法非常相似。它需要实现的就是 processElement1()、processElement2()两个方法,在每个数据到来时,会根据来源的流调用其中的一个方法进行处理。CoProcessFunction 同样可以通过上下文 ctx 来访问 timestamp、水位线,并通过 TimerService 注册定时器;另外也提供了.onTimer()方法,用于定义定时触发的处理操作。

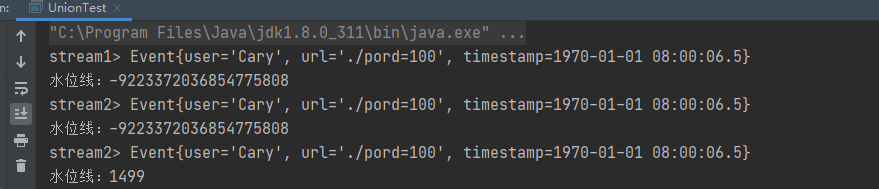

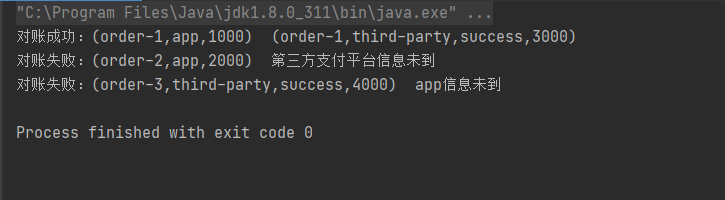

下面是 CoProcessFunction 的一个具体示例:我们可以实现一个实时对账的需求,也就是app 的支付操作和第三方的支付操作的一个双流 Join。App 的支付事件和第三方的支付事件将会互相等待 5 秒钟,如果等不来对应的支付事件,那么就输出报警信息。程序如下:

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoProcessFunction;

import org.apache.flink.util.Collector;

// 实时对账

public class BillCheckExample {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 来自app的支付日志

SingleOutputStreamOperator<Tuple3<String, String, Long>> appStream = env.fromElements(

Tuple3.of("order-1", "app", 1000L),

Tuple3.of("order-2", "app", 2000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple3<String, String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple3<String, String, Long>>() {

@Override

public long extractTimestamp(Tuple3<String, String, Long> element, long recordTimestamp) {

return element.f2;

}

})

);

// 来自第三方支付平台的支付日志

SingleOutputStreamOperator<Tuple4<String, String, String, Long>> thirdpartStream = env.fromElements(

Tuple4.of("order-1", "third-party", "success", 3000L),

Tuple4.of("order-3", "third-party", "success", 4000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple4<String, String, String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple4<String, String, String, Long>>() {

@Override

public long extractTimestamp(Tuple4<String, String, String, Long> element, long recordTimestamp) {

return element.f3;

}

})

);

// 检测同一支付单在两条流中是否匹配,不匹配就报警

appStream.connect(thirdpartStream)

.keyBy(data -> data.f0, data -> data.f0)

.process(new OrderMatchResult())

.print();

env.execute();

}

// 自定义实现CoProcessFunction

public static class OrderMatchResult extends CoProcessFunction<Tuple3<String, String, Long>, Tuple4<String, String, String, Long>, String>{

// 定义状态变量,用来保存已经到达的事件

private ValueState<Tuple3<String, String, Long>> appEventState;

private ValueState<Tuple4<String, String, String, Long>> thirdPartyEventState;

@Override

public void open(Configuration parameters) throws Exception {

appEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple3<String, String, Long>>("app-event", Types.TUPLE(Types.STRING, Types.STRING, Types.LONG))

);

thirdPartyEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple4<String, String, String, Long>>("thirdparty-event", Types.TUPLE(Types.STRING, Types.STRING, Types.STRING,Types.LONG))

);

}

@Override

public void processElement1(Tuple3<String, String, Long> value, Context ctx, Collector<String> out) throws Exception {

// 看另一条流中事件是否来过

if (thirdPartyEventState.value() != null){

out.collect("对账成功:" + value + " " + thirdPartyEventState.value());

// 清空状态

thirdPartyEventState.clear();

} else {

// 更新状态

appEventState.update(value);

// 注册一个5秒后的定时器,开始等待另一条流的事件

ctx.timerService().registerEventTimeTimer(value.f2 + 5000L);

}

}

@Override

public void processElement2(Tuple4<String, String, String, Long> value, Context ctx, Collector<String> out) throws Exception {

if (appEventState.value() != null){

out.collect("对账成功:" + appEventState.value() + " " + value);

// 清空状态

appEventState.clear();

} else {

// 更新状态

thirdPartyEventState.update(value);

// 注册一个5秒后的定时器,开始等待另一条流的事件

ctx.timerService().registerEventTimeTimer(value.f3 + 5000L);

}

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

// 定时器触发,判断状态,如果某个状态不为空,说明另一条流中事件没来

if (appEventState.value() != null) {

out.collect("对账失败:" + appEventState.value() + " " + "第三方支付平台信息未到");

}

if (thirdPartyEventState.value() != null) {

out.collect("对账失败:" + thirdPartyEventState.value() + " " + "app信息未到");

}

appEventState.clear();

thirdPartyEventState.clear();

}

}}

3.广播连接流(BroadcastConnectedStream)

关于两条流的连接,还有一种比较特殊的用法:DataStream 调用.connect()方法时,传入的参数也可以不是一个 DataStream,而是一个“广播流”(BroadcastStream),这时合并两条流得到的就变成了一个“广播连接流”(BroadcastConnectedStream)。

2.基于时间的合流——双流联结(Join)

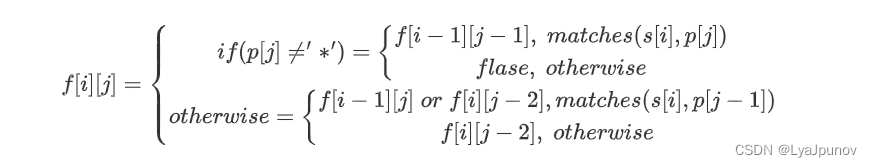

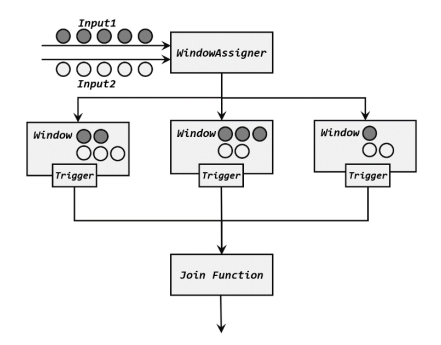

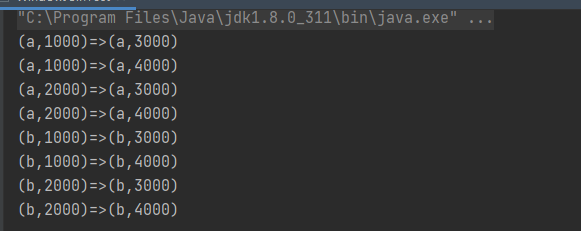

2.1 窗口联结(Window Join)

Flink 为这种场景专门提供了一个窗口联结(window join)算子,可以定义时间窗口,并将两条流中共享一个公共键(key)的数据放在窗口中进行配对处理。

1.窗口联结的调用

stream1.join(stream2)

.where(<KeySelector>)

.equalTo(<KeySelector>)

.window(<WindowAssigner>)

.apply(<JoinFunction>)

2. 窗口联结的处理流程

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.JoinFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

// 基于窗口的join

public class WindowJoinTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStream<Tuple2<String, Long>> stream1 = env

.fromElements(

Tuple2.of("a", 1000L),

Tuple2.of("b", 1000L),

Tuple2.of("a", 2000L),

Tuple2.of("b", 2000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

DataStream<Tuple2<String, Long>> stream2 = env

.fromElements(

Tuple2.of("a", 3000L),

Tuple2.of("b", 3000L),

Tuple2.of("a", 4000L),

Tuple2.of("b", 4000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

stream1

.join(stream2)

.where(r -> r.f0)

.equalTo(r -> r.f0)

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.apply(new JoinFunction<Tuple2<String, Long>, Tuple2<String, Long>, String>() {

@Override

public String join(Tuple2<String, Long> left, Tuple2<String, Long> right) throws Exception {

return left + "=>" + right;

}

})

.print();

env.execute();

}

}

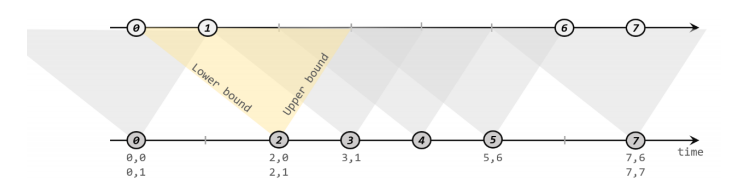

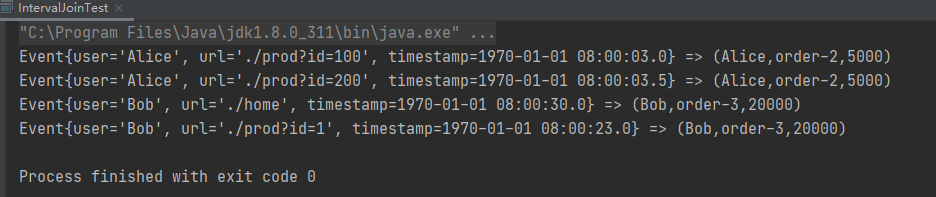

2.2 间隔联结(Interval Join)

间隔联结在代码中,是基于 KeyedStream 的联结(join)操作。DataStream 在 keyBy 得到KeyedStream 之后,可以调用.intervalJoin()来合并两条流,传入的参数同样是一个 KeyedStream,两者的 key 类型应该一致;得到的是一个 IntervalJoin 类型。后续的操作同样是完全固定的:先通过.between()方法指定间隔的上下界,再调用.process()方法,定义对匹配数据对的处理操作。调用.process()需要传入一个处理函数,这是处理函数家族的最后一员:“处理联结函数”ProcessJoinFunction。

stream1

.keyBy(<KeySelector>)

.intervalJoin(stream2.keyBy(<KeySelector>))

.between(Time.milliseconds(-2), Time.milliseconds(1))

.process (new ProcessJoinFunction<Integer, Integer, String(){

@Override

public void processElement(Integer left, Integer right, Context ctx, Collector<String> out) {

out.collect(left + "," + right);

}

});

在电商网站中,某些用户行为往往会有短时间内的强关联。我们这里举一个例子,我们有两条流,一条是下订单的流,一条是浏览数据的流。我们可以针对同一个用户,来做这样一个联结。也就是使用一个用户的下订单的事件和这个用户的最近十分钟的浏览数据进行一个联结查询。

import com.atguigu.chapter05.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.Collector;

// 基于间隔的join

public class IntervalJoinTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Tuple3<String, String, Long>> orderStream = env.fromElements(

Tuple3.of("Mary", "order-1", 5000L),

Tuple3.of("Alice", "order-2", 5000L),

Tuple3.of("Bob", "order-3", 20000L),

Tuple3.of("Alice", "order-4", 20000L),

Tuple3.of("Cary", "order-5", 51000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple3<String, String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple3<String, String, Long>>() {

@Override

public long extractTimestamp(Tuple3<String, String, Long> element, long recordTimestamp) {

return element.f2;

}

})

);

SingleOutputStreamOperator<Event> clickStream = env.fromElements(

new Event("Bob", "./cart", 2000L),

new Event("Alice", "./prod?id=100", 3000L),

new Event("Alice", "./prod?id=200", 3500L),

new Event("Bob", "./prod?id=2", 2500L),

new Event("Alice", "./prod?id=300", 36000L),

new Event("Bob", "./home", 30000L),

new Event("Bob", "./prod?id=1", 23000L),

new Event("Bob", "./prod?id=3", 33000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event element, long recordTimestamp) {

return element.timestamp;

}

})

);

orderStream.keyBy(data -> data.f0)

.intervalJoin(clickStream.keyBy(data -> data.user))

.between(Time.seconds(-5), Time.seconds(10))

.process(new ProcessJoinFunction<Tuple3<String, String, Long>, Event, String>() {

@Override

public void processElement(Tuple3<String, String, Long> left, Event right, Context ctx, Collector<String> out) throws Exception {

out.collect(right + " => " + left);

}

})

.print();

env.execute();

}}

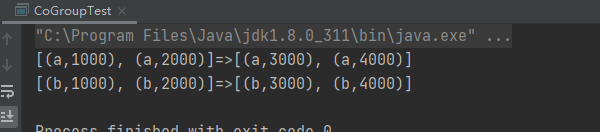

2.3 窗口同组联结(Window CoGroup)

除窗口联结和间隔联结之外,Flink 还提供了一个“窗口同组联结”(window coGroup)操作。它的用法跟 window join 非常类似,也是将两条流合并之后开窗处理匹配的元素,调用时只需要将.join()换为.coGroup()就可以了。

stream1.coGroup(stream2)

.where(<KeySelector>)

.equalTo(<KeySelector>)

.window(TumblingEventTimeWindows.of(Time.hours(1)))

.apply(<CoGroupFunction>)

内部的.coGroup()方法,有些类似于 FlatJoinFunction 中.join()的形式,同样有三个参数,分别代表两条流中的数据以及用于输出的收集器(Collector)。不同的是,这里的前两个参数不再是单独的每一组“配对”数据了,而是传入了可遍历的数据集合。也就是说,现在不会再去计算窗口中两条流数据集的笛卡尔积,而是直接把收集到的所有数据一次性传入, 至于要怎样配对完全是自定义的。这样.coGroup()方法只会被调用一次,而且即使一条流的数据没有任何另一条流的数据匹配,也可以出现在集合中、当然也可以定义输出结果了。

所以能够看出,coGroup 操作比窗口的 join 更加通用,不仅可以实现类似 SQL 中的“内连接”(inner join),也可以实现左外连接(left outer join)、右外连接(right outer join)和全外连接(full outer join)。事实上,窗口 join 的底层,也是通过 coGroup 来实现的。

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.CoGroupFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.Collector;

// 基于窗口的join

public class CoGroupTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStream<Tuple2<String, Long>> stream1 = env

.fromElements(

Tuple2.of("a", 1000L),

Tuple2.of("b", 1000L),

Tuple2.of("a", 2000L),

Tuple2.of("b", 2000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

DataStream<Tuple2<String, Long>> stream2 = env

.fromElements(

Tuple2.of("a", 3000L),

Tuple2.of("b", 3000L),

Tuple2.of("a", 4000L),

Tuple2.of("b", 4000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

stream1

.coGroup(stream2)

.where(r -> r.f0)

.equalTo(r -> r.f0)

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.apply(new CoGroupFunction<Tuple2<String, Long>, Tuple2<String, Long>, String>() {

@Override

public void coGroup(Iterable<Tuple2<String, Long>> iter1, Iterable<Tuple2<String, Long>> iter2, Collector<String> collector) throws Exception {

collector.collect(iter1 + "=>" + iter2);

}

})

.print();

env.execute();

}

}