3. WebGPU Uniforms

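Uniforms are a bit like global variables for your shader. You set their values before you execute the shader, and every iteration of the shader sees those values. The next time you ask the GPU to execute the shader, you can set them to something else. We'll start again with the triangle example from the first article and modify it to use some uniforms.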

const module = device.createShaderModule({

label: 'triangle shaders with uniforms',

code: `

struct OurStruct {

color: vec4f,

scale: vec2f,

offset: vec2f,

};

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

@vertex fn vs(

@builtin(vertex_index) vertexIndex : u32

) -> @builtin(position) vec4f {

let pos = array(

vec2f( 0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f( 0.5, -0.5) // bottom right

);

return vec4f(

pos[vertexIndex] * ourStruct.scale + ourStruct.offset, 0.0, 1.0);

}

@fragment fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

`,

});


First we declare a struct with 3 members:

struct OurStruct {

color: vec4f,

scale: vec2f,

offset: vec2f,

};

Then we declare a uniform variable whose type is that struct. The variable is ourStruct and its type is OurStruct.

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

Next we change what the vertex shader returns so that it uses the uniforms:

@vertex fn vs(

...

) ... {

...

return vec4f(

pos[vertexIndex] * ourStruct.scale + ourStruct.offset, 0.0, 1.0);

}

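You can see we multiply the vertex position by scale and then add offset. This lets us set both the size and the position of the triangle. We also changed the fragment shader to return the color from our uniforms: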

@fragment fn fs() -> @location(0) vec4f {

return ourStruct.color;

}
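To make the vertex math concrete, here is a small TypeScript sketch that reproduces on the CPU what vs computes. Each hardcoded vertex is multiplied component-wise by scale, and then offset is added. The Vec2 type and the transformVertices function are hypothetical helpers for illustration only. They are not part of WebGPU.

```typescript
// A 2D vector as [x, y]; a hypothetical helper type for this sketch.
type Vec2 = [number, number];

// The same three clip-space positions that are hardcoded in the vertex shader.
const trianglePositions: Vec2[] = [
  [0.0, 0.5],   // top center
  [-0.5, -0.5], // bottom left
  [0.5, -0.5],  // bottom right
];

// CPU-side mirror of `pos[vertexIndex] * ourStruct.scale + ourStruct.offset`.
// In WGSL, vec2f * vec2f multiplies component-wise, so we do the same here.
function transformVertices(scale: Vec2, offset: Vec2): Vec2[] {
  return trianglePositions.map(([x, y]): Vec2 => [
    x * scale[0] + offset[0],
    y * scale[1] + offset[1],
  ]);
}

// Half size, shifted left by 0.5 clip-space units.
const moved = transformVertices([0.5, 0.5], [-0.5, 0.0]);
// moved is [[-0.5, 0.25], [-0.75, -0.25], [-0.25, -0.25]]
console.log(moved);
```

The GPU performs this calculation once per vertex invocation. The sketch exists only to show which numbers end up in @builtin(position).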

Now that we have shaders that use uniforms, we need to create a buffer on the GPU to hold their values.

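If you've never dealt with native data and sizes, this area has a lot to learn. The topic is big enough to have its own article. If you don't know how to lay out structs in memory, please read WebGPU Data Memory Layout (webgpufundamentals.org) and then come back here. This article assumes you have already read it.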

Having read that article, we can now fill a buffer with data that matches the struct in our shader.

First we create a buffer and give it usage flags. UNIFORM lets it be used for uniforms, and COPY_DST lets us update it by copying data into it:

const uniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // scale is 2 32bit floats (4bytes each)

2 * 4; // offset is 2 32bit floats (4bytes each)

const uniformBuffer = device.createBuffer({

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,

});
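The 32-byte size above, and the float offsets used below, both follow from the WGSL layout rules. This is a minimal sketch of those rules. It assumes a struct made only of f32, vec2f and vec4f members and ignores the extra uniform-address-space rules for arrays and nested structs. layoutStruct and wgslTypeInfo are hypothetical helpers, not a WebGPU API.

```typescript
// Alignment and size in bytes of the WGSL types used in this article
// (values from the WGSL spec's alignment and size table).
const wgslTypeInfo = {
  f32: { align: 4, size: 4 },
  vec2f: { align: 8, size: 8 },
  vec4f: { align: 16, size: 16 },
};

type WgslType = keyof typeof wgslTypeInfo;

// Round value up to the next multiple of align.
function roundUp(value: number, align: number): number {
  return Math.ceil(value / align) * align;
}

// Hypothetical helper: byte offset of each member plus the struct's total size.
function layoutStruct(members: Array<[string, WgslType]>) {
  const offsets: Record<string, number> = {};
  let offset = 0;
  let structAlign = 1;
  for (const [name, type] of members) {
    const { align, size } = wgslTypeInfo[type];
    offset = roundUp(offset, align); // each member starts at a multiple of its alignment
    offsets[name] = offset;
    offset += size;
    structAlign = Math.max(structAlign, align);
  }
  // The struct's size is rounded up to its largest member alignment.
  return { offsets, size: roundUp(offset, structAlign) };
}

const layout = layoutStruct([
  ["color", "vec4f"],
  ["scale", "vec2f"],
  ["offset", "vec2f"],
]);
// layout.offsets is { color: 0, scale: 16, offset: 24 } and layout.size is 32.
// Dividing the byte offsets by 4 (bytes per f32) gives 0, 4 and 6, the
// Float32Array indices used for kColorOffset, kScaleOffset and kOffsetOffset.
```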

Then we create a TypedArray so we can set the values in JavaScript:

// create a typedarray to hold the values for the uniforms in JavaScript

const uniformValues = new Float32Array(uniformBufferSize / 4);

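We'll fill in the two values in the struct that won't change later. The offsets were computed using what we covered in the article on memory layout, WebGPU Data Memory Layout (webgpufundamentals.org):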

// offsets to the various uniform values in float32 indices

const kColorOffset = 0;

const kScaleOffset = 4;

const kOffsetOffset = 6;

uniformValues.set([0, 1, 0, 1], kColorOffset); // set the color

uniformValues.set([-0.5, -0.25], kOffsetOffset); // set the offset

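Above we set the color to green. The offset moves the triangle left by 1/4 of the canvas and down by 1/8. (Remember, clip space goes from -1 to 1, which is 2 units wide, so 0.25 is 1/8 of 2.) Next, as the diagrams in the first article showed, we tell a shader about our buffer by creating a bind group and binding the buffer to the same @binding(0) we set in our shader: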

const bindGroup = device.createBindGroup({

layout: pipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: uniformBuffer }},

],

});
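You can check the clip-space arithmetic for the offset set earlier, (-0.5, -0.25), with a small sketch. Both function names are hypothetical, and the 800x600 canvas is an assumed size chosen only for the example.

```typescript
type Vec2 = [number, number];

// Clip space spans 2 units (-1 to 1) on each axis, so moving by d clip
// units moves the triangle by d / 2 of the canvas size along that axis.
function clipOffsetToCanvasFraction(offset: Vec2): Vec2 {
  return [offset[0] / 2, offset[1] / 2];
}

// The same shift in pixels. Clip-space +y points up while pixel rows grow
// downward, so the y component changes sign.
function clipOffsetToPixels(offset: Vec2, width: number, height: number): Vec2 {
  const [fx, fy] = clipOffsetToCanvasFraction(offset);
  return [fx * width, -fy * height];
}

// The offset set earlier: 1/4 of the canvas to the left, 1/8 down.
const fraction = clipOffsetToCanvasFraction([-0.5, -0.25]); // [-0.25, -0.125]
// On an assumed 800x600 canvas: 200 px left, 75 px down.
const pixels = clipOffsetToPixels([-0.5, -0.25], 800, 600); // [-200, 75]
```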

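Now, at some point before we submit our command buffer, we need to set the remaining values in uniformValues and then copy them to the buffer on the GPU. We'll do that at the top of our render function: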

function render() {

// Set the uniform values in our JavaScript side Float32Array

const aspect = canvas.width / canvas.height;

uniformValues.set([0.5 / aspect, 0.5], kScaleOffset); // set the scale

// copy the values from JavaScript to the GPU

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

Note: writeBuffer is one way to copy data into a buffer. Several other ways are covered in WebGPU Copying Data (webgpufundamentals.org).

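We set the scale to half size and take the canvas's aspect ratio into account, so the triangle keeps the same width-to-height ratio whatever the canvas size. Finally, we need to set the bind group before drawing: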

pass.setPipeline(pipeline);

pass.setBindGroup(0, bindGroup);

pass.draw(3); // call our vertex shader 3 times

pass.end();
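Here is why dividing the x scale by the aspect ratio keeps the triangle's proportions. This short sketch computes the triangle's on-screen size in pixels. aspectCorrectedScale and trianglePixelSize are hypothetical helpers, and the canvas sizes are arbitrary examples.

```typescript
// The scale written in render(): x is divided by the aspect ratio.
function aspectCorrectedScale(width: number, height: number, s = 0.5): [number, number] {
  const aspect = width / height;
  return [s / aspect, s];
}

// Unscaled, the triangle spans 1 clip unit in x (-0.5..0.5) and 1 in y
// (-0.5..0.5). One clip unit covers width / 2 pixels horizontally and
// height / 2 pixels vertically.
function trianglePixelSize(width: number, height: number): [number, number] {
  const [sx, sy] = aspectCorrectedScale(width, height);
  return [sx * (width / 2), sy * (height / 2)];
}

trianglePixelSize(800, 400); // [100, 100]
trianglePixelSize(300, 600); // [150, 150]
```

The width in pixels works out to (s * h / w) * (w / 2) = s * h / 2, which is exactly the height in pixels. The triangle's bounding box therefore stays square however the canvas is stretched.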

Here is the code and the result:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-17 22:53:56

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="005uniform-scale-triangle">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./005uniform-scale-triangle.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-17 23:02:46

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const uniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // scale is 2 32bit floats (4bytes each)

2 * 4; // offset is 2 32bit floats (4bytes each)

const uniformBuffer = device.createBuffer({

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST

});

const uniformValues = new Float32Array(uniformBufferSize / 4);

const kColorOffset = 0;

const kScaleOffset = 4;

const kOffsetOffset = 6;

uniformValues.set([0, 1, 0, 1], kColorOffset); // set the color

uniformValues.set([-0.5, -0.25], kOffsetOffset); // set the offset

const bindGroup = device.createBindGroup({

layout: renderPipeline.getBindGroupLayout(0),

entries: [{ binding: 0, resource: { buffer: uniformBuffer } }]

});

function frame() {

const aspect = canvas.width / canvas.height;

uniformValues.set([0.5 / aspect, 0.5], kScaleOffset); // set the scale

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(3, 1, 0, 0);

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

scale: vec2f,

offset: vec2f

};

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32) -> @builtin(position) vec4f {

let pos = array<vec2f, 3>(

vec2f(0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f(0.5, -0.5) // bottom right

);

return vec4f(pos[vertexIndex] * ourStruct.scale + ourStruct.offset,0.0,1.0);

}

@fragment

fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

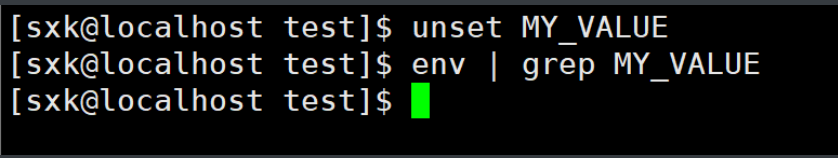

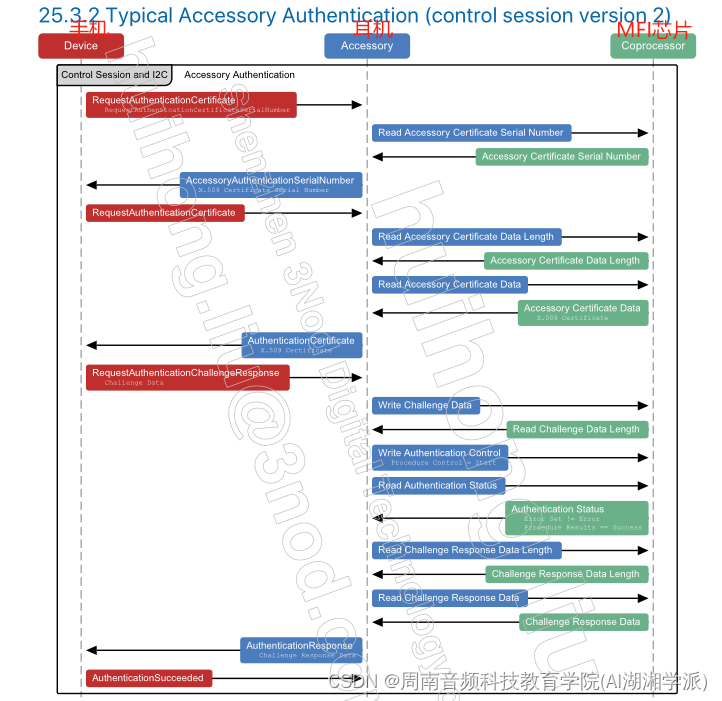

For this single triangle, our state at the moment the draw command executes looks like this:

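Until now, all the data used in our shaders has been hardcoded: the triangle's vertex positions in the vertex shader and the color in the fragment shader. Now that we can pass values into our shader, we can call draw multiple times with different data.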

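By updating our single buffer, we could draw in different places with different offsets, scales, and colors. Remember, though, that our commands go into a command buffer and do not actually execute until we submit them. So we can NOT do this: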

// BAD!

for (let x = -1; x < 1; x += 0.1) {

uniformValues.set([x, x], kOffsetOffset);

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

pass.draw(3);

}

pass.end();

// Finish encoding and submit the commands

const commandBuffer = encoder.finish();

device.queue.submit([commandBuffer]);

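This fails because, as you can see above, the device.queue.xxx functions happen on a "queue", whereas the pass.xxx functions only encode a command into the command buffer. Each writeBuffer call is scheduled on the queue immediately, ahead of the command buffer we submit later. The draws, however, do not run until that command buffer is submitted.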
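The ordering problem can be illustrated with a toy model. This is not the WebGPU API. ToyQueue is a hypothetical class in which the "uniform buffer" is a single number. Queue writes update it immediately, while draws are only recorded and then run together at submit time, the same way the BAD loop behaves.

```typescript
// Toy model, NOT WebGPU: the "uniform buffer" is just one number.
class ToyQueue {
  bufferValue = 0;

  // Like device.queue.writeBuffer: takes effect in queue order, right away.
  writeBuffer(value: number): void {
    this.bufferValue = value;
  }

  // Like device.queue.submit: recorded commands run now, so every one of
  // them reads whatever the buffer holds at this moment.
  submit(commands: Array<() => number>): number[] {
    return commands.map((cmd) => cmd());
  }
}

const queue = new ToyQueue();
const recordedDraws: Array<() => number> = [];
for (const x of [-0.5, 0, 0.5]) {
  queue.writeBuffer(x);                        // applied immediately
  recordedDraws.push(() => queue.bufferValue); // a "draw" that reads later
}
const seenOffsets = queue.submit(recordedDraws); // [0.5, 0.5, 0.5]
```

All three recorded draws see only the last value written. This is why the real loop draws every triangle at the same place.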

So when we finally call submit with our command buffer, the only values in our uniform buffer are the last ones we wrote. We could change it to this:

// BAD! Slow!

for (let x = -1; x < 1; x += 0.1) {

uniformValues.set([x, 0], kOffsetOffset);

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass(renderPassDescriptor);

pass.setPipeline(pipeline);

pass.setBindGroup(0, bindGroup);

pass.draw(3);

pass.end();

// Finish encoding and submit the commands

const commandBuffer = encoder.finish();

device.queue.submit([commandBuffer]);

}

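The code above updates one buffer, creates a command buffer, adds commands to draw one thing, and then finishes the command buffer and submits it. This works, but it is slow for several reasons. The biggest reason is that best practice is to do more work in a single command buffer.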

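So instead, we could create one uniform buffer per thing we want to draw. Because buffers are used indirectly through bind groups, we also need one bind group per thing we want to draw. Then we can put everything we want to draw into a single command buffer.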

Let's make a random function:

// A random number between [min and max)

// With 1 argument it will be [0 to min)

// With no arguments it will be [0 to 1)

const rand = (min, max) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

Now let's set up buffers with some colors and offsets so we can draw a bunch of individual things:

// offsets to the various uniform values in float32 indices

const kColorOffset = 0;

const kScaleOffset = 4;

const kOffsetOffset = 6;

const kNumObjects = 100;

const objectInfos = [];

for (let i = 0; i < kNumObjects; ++i) {

const uniformBuffer = device.createBuffer({

label: `uniforms for obj: ${i}`,

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,

});

// create a typedarray to hold the values for the uniforms in JavaScript

const uniformValues = new Float32Array(uniformBufferSize / 4);

uniformValues.set([rand(), rand(), rand(), 1], kColorOffset); // set the color

uniformValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], kOffsetOffset); // set the offset

const bindGroup = device.createBindGroup({

label: `bind group for obj: ${i}`,

layout: pipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: uniformBuffer }},

],

});

objectInfos.push({

scale: rand(0.2, 0.5),

uniformBuffer,

uniformValues,

bindGroup,

});

}

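We're not setting the scale in the buffer yet because we want it to take the canvas's aspect ratio into account, and we won't know the aspect ratio until render time.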

At render time we'll update every buffer with the correct aspect-adjusted scale:

function render() {

// Get the current texture from the canvas context and

// set it as the texture to render to.

renderPassDescriptor.colorAttachments[0].view =

context.getCurrentTexture().createView();

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass(renderPassDescriptor);

pass.setPipeline(pipeline);

// Set the uniform values in our JavaScript side Float32Array

const aspect = canvas.width / canvas.height;

for (const {scale, bindGroup, uniformBuffer, uniformValues} of objectInfos) {

uniformValues.set([scale / aspect, scale], kScaleOffset); // set the scale

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

pass.setBindGroup(0, bindGroup);

pass.draw(3); // call our vertex shader 3 times

}

pass.end();

const commandBuffer = encoder.finish();

device.queue.submit([commandBuffer]);

}

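Again, remember that the encoder and pass objects only encode commands into a command buffer. So when the render function exits, we have effectively issued these commands in this order: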

device.queue.writeBuffer(...) // update uniform buffer 0 with data for object 0

device.queue.writeBuffer(...) // update uniform buffer 1 with data for object 1

device.queue.writeBuffer(...) // update uniform buffer 2 with data for object 2

device.queue.writeBuffer(...) // update uniform buffer 3 with data for object 3

...

// execute commands that draw 100 things, each with their own uniform buffer.

device.queue.submit([commandBuffer]);

Here is the complete code and the result:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-18 12:28:51

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="005uniform-srandom-triangle">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./005uniform-srandom-triangle.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-18 20:51:33

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const rand = (

min: undefined | number = undefined,

max: undefined | number = undefined

) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const uniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // scale is 2 32bit floats (4bytes each)

2 * 4; // offset is 2 32bit floats (4bytes each)

const kColorOffset = 0;

const kScaleOffset = 4;

const kOffsetOffset = 6;

const kNumObjects = 100;

const objectInfos: {

scale: number;

uniformBuffer: GPUBuffer;

uniformValues: Float32Array;

bindGroup: GPUBindGroup;

}[] = [];

for (let i = 0; i < kNumObjects; ++i) {

const uniformBuffer = device.createBuffer({

label: `uniforms for obj: ${i}`,

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST

});

const uniformValues = new Float32Array(uniformBufferSize / 4);

uniformValues.set([rand(), rand(), rand(), 1], kColorOffset); // set the color

uniformValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], kOffsetOffset); // set the offset

const bindGroup = device.createBindGroup({

label: `bind group for obj: ${i}`,

layout: renderPipeline.getBindGroupLayout(0),

entries: [{ binding: 0, resource: { buffer: uniformBuffer } }]

});

objectInfos.push({

scale: rand(0.2, 0.5),

uniformBuffer,

uniformValues,

bindGroup

});

}

function frame() {

const aspect = canvas.width / canvas.height;

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

label: "our basic canvas renderPass",

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

for (const {

scale,

bindGroup,

uniformBuffer,

uniformValues

} of objectInfos) {

uniformValues.set([scale / aspect, scale], kScaleOffset); // set the scale

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(3, 1, 0, 0);

}

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

scale: vec2f,

offset: vec2f

};

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32) -> @builtin(position) vec4f {

let pos = array<vec2f, 3>(

vec2f(0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f(0.5, -0.5) // bottom right

);

return vec4f(pos[vertexIndex] * ourStruct.scale + ourStruct.offset,0.0,1.0);

}

@fragment

fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

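While we're here, there's one more thing to cover. You're free to reference multiple uniform buffers in your shaders. In the example above, we update the scale every time we draw, and then writeBuffer uploads that object's uniformValues to its uniform buffer. But only the scale changes. The color and offset stay the same, so we are wasting time uploading them.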

We can split the uniforms into those that need to be set only once and those that are updated every time we draw:

const module = device.createShaderModule({

code: `

struct OurStruct {

color: vec4f,

offset: vec2f,

};

struct OtherStruct {

scale: vec2f,

};

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

@group(0) @binding(1) var<uniform> otherStruct: OtherStruct;

@vertex fn vs(

@builtin(vertex_index) vertexIndex : u32

) -> @builtin(position) vec4f {

let pos = array(

vec2f( 0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f( 0.5, -0.5) // bottom right

);

return vec4f(

pos[vertexIndex] * otherStruct.scale + ourStruct.offset, 0.0, 1.0);

}

@fragment fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

`,

});

Then we need 2 uniform buffers per thing we want to draw:

// create 2 buffers for the uniform values

const staticUniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const uniformBufferSize =

2 * 4; // scale is 2 32bit floats (4bytes each)

// offsets to the various uniform values in float32 indices

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

const kNumObjects = 100;

const objectInfos = [];

for (let i = 0; i < kNumObjects; ++i) {

const staticUniformBuffer = device.createBuffer({

label: `static uniforms for obj: ${i}`,

size: staticUniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,

});

// These are only set once so set them now

{

const uniformValues = new Float32Array(staticUniformBufferSize / 4);

uniformValues.set([rand(), rand(), rand(), 1], kColorOffset); // set the color

uniformValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], kOffsetOffset); // set the offset

// copy these values to the GPU

device.queue.writeBuffer(staticUniformBuffer, 0, uniformValues);

}

// create a typedarray to hold the values for the uniforms in JavaScript

const uniformValues = new Float32Array(uniformBufferSize / 4);

const uniformBuffer = device.createBuffer({

label: `changing uniforms for obj: ${i}`,

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,

});

const bindGroup = device.createBindGroup({

label: `bind group for obj: ${i}`,

layout: pipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticUniformBuffer }},

{ binding: 1, resource: { buffer: uniformBuffer }},

],

});

objectInfos.push({

scale: rand(0.2, 0.5),

uniformBuffer,

uniformValues,

bindGroup,

});

}
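The "2 * 4; // padding" in staticUniformBufferSize comes from WGSL's struct size rule. This short sketch redoes that arithmetic. roundUp is a hypothetical helper. As far as I understand the WebGPU validation rules, with layout: "auto" the binding expects the full 32-byte struct size, so a 24-byte buffer would be expected to fail validation.

```typescript
function roundUp(value: number, align: number): number {
  return Math.ceil(value / align) * align;
}

// OurStruct { color: vec4f, offset: vec2f }
// color occupies bytes 0..15 and offset bytes 16..23: 24 bytes of data.
// The struct's alignment is its largest member alignment, vec4f's 16.
const staticDataBytes = 16 + 8;
const staticStructSize = roundUp(staticDataBytes, 16); // 32
const paddingBytes = staticStructSize - staticDataBytes; // 8, i.e. "2 * 4; // padding"

// OtherStruct { scale: vec2f }: 8 bytes with alignment 8, so no padding.
const otherStructSize = roundUp(8, 8); // 8

// Float32Array indices are byte offsets / 4: color -> 0, offset -> 16 / 4 = 4,
// and scale -> 0 in its own buffer (kColorOffset, kOffsetOffset, kScaleOffset).
```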

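Our rendering code doesn't change at all. The bind group for each object references both of that object's uniform buffers. As before, we update the scale. Now, though, the device.queue.writeBuffer call uploads only the scale to the uniform buffer that holds it. Before, it uploaded the color, offset, and scale for every object.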
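A rough estimate of the per-frame upload for the 100 objects looks like this. It counts bytes only. The number of writeBuffer calls is unchanged at 100 per frame, so this sketch should not be read as a claim that the frame becomes 4x faster.

```typescript
const kNumObjects = 100;
const bytesPerFloat = 4;

// Before: each frame uploaded the whole struct (color + scale + offset).
const combinedStructBytes = (4 + 2 + 2) * bytesPerFloat; // 32
// After: each frame uploads only the scale; the static buffer is written once.
const scaleStructBytes = 2 * bytesPerFloat; // 8

const bytesPerFrameBefore = kNumObjects * combinedStructBytes; // 3200
const bytesPerFrameAfter = kNumObjects * scaleStructBytes;     // 800
const savedFraction = 1 - bytesPerFrameAfter / bytesPerFrameBefore; // 0.75
```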

Here is the complete code and the result:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-18 12:28:51

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="005uniform-srandom-triangle2">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./005uniform-srandom-triangle2.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-18 21:16:58

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const rand = (

min: undefined | number = undefined,

max: undefined | number = undefined

) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const staticUniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const uniformBufferSize = 2 * 4; // scale is 2 32bit floats (4bytes each)

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

const kNumObjects = 100;

const objectInfos: {

scale: number;

uniformBuffer: GPUBuffer;

uniformValues: Float32Array;

bindGroup: GPUBindGroup;

}[] = [];

for (let i = 0; i < kNumObjects; ++i) {

const staticUniformBuffer = device.createBuffer({

label: `static uniforms for obj: ${i}`,

size: staticUniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST

});

{

const uniformValues = new Float32Array(staticUniformBufferSize / 4);

uniformValues.set([rand(), rand(), rand(), 1], kColorOffset); // set the color

uniformValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], kOffsetOffset); // set the offset

device.queue.writeBuffer(staticUniformBuffer, 0, uniformValues);

}

const uniformValues = new Float32Array(uniformBufferSize / 4);

const uniformBuffer = device.createBuffer({

label: `changing uniforms for obj: ${i}`,

size: uniformBufferSize,

usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST

});

const bindGroup = device.createBindGroup({

label: `bind group for obj: ${i}`,

layout: renderPipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticUniformBuffer } },

{ binding: 1, resource: { buffer: uniformBuffer } }

]

});

objectInfos.push({

scale: rand(0.2, 0.5),

uniformBuffer,

uniformValues,

bindGroup

});

}

function frame() {

const aspect = canvas.width / canvas.height;

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

label: "our basic canvas renderPass",

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

for (const {

scale,

bindGroup,

uniformBuffer,

uniformValues

} of objectInfos) {

uniformValues.set([scale / aspect, scale], kScaleOffset); // set the scale

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(3);

}

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

offset: vec2f

};

struct OtherStruct {

scale: vec2f

};

@group(0) @binding(0) var<uniform> ourStruct: OurStruct;

@group(0) @binding(1) var<uniform> otherStruct: OtherStruct;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32) -> @builtin(position) vec4f {

let pos = array<vec2f, 3>(

vec2f(0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f(0.5, -0.5) // bottom right

);

return vec4f(pos[vertexIndex] * otherStruct.scale + ourStruct.offset,0.0,1.0);

}

@fragment

fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

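Splitting into multiple uniform buffers is probably overkill for this simple example, but it's common to split them based on what changes and when. One example is a uniform buffer for shared matrices, such as a projection matrix, a view matrix, and a camera matrix. These are often the same for everything we want to draw, so we can create just one buffer and have every object use that same uniform buffer.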

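Separately, our shader might reference another uniform buffer that contains only the things specific to this object, like its world/model matrix and its normal matrix.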

Yet another uniform buffer might contain material settings, which may be shared by multiple objects. We'll do a lot of this when we get to drawing in 3D.