4. WebGPU Storage Buffers

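Storage buffers are similar to uniform buffers in many ways. If all we did was change UNIFORM to STORAGE in our JavaScript and var<uniform> to var<storage, read> in our WGSL, the examples from the previous section would still run and produce the same result.

In fact, here are the changes, without renaming the variables to more appropriate names.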

const staticUniformBuffer = device.createBuffer({

label: `static uniforms for obj: ${i}`,

size: staticUniformBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

...

const uniformBuffer = device.createBuffer({

label: `changing uniforms for obj: ${i}`,

size: uniformBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

And in our WGSL:

@group(0) @binding(0) var<storage, read> ourStruct: OurStruct;

@group(0) @binding(1) var<storage, read> otherStruct: OtherStruct;

With just these small changes, we get the same result.

The full code and its result are as follows:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-18 21:28:13

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="006storage-random-triangle">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./006storage-random-triangle.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-18 21:30:17

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const rand = (

min: undefined | number = undefined,

max: undefined | number = undefined

) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const staticUniformBufferSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const uniformBUfferSzie = 2 * 4; // scale is 2 32 bit floats

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

const kNumObjects = 100;

const objectInfos: {

scale: number;

uniformBuffer: GPUBuffer;

uniformValues: Float32Array;

bindGroup: GPUBindGroup;

}[] = [];

for (let i = 0; i < kNumObjects; ++i) {

const staticUniformBuffer = device.createBuffer({

label: `static uniforms for obj: ${i}`,

size: staticUniformBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

{

const uniformValues = new Float32Array(staticUniformBufferSize / 4);

uniformValues.set([rand(), rand(), rand(), 1], kColorOffset); // set the color

uniformValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], kOffsetOffset); // set the offset

device.queue.writeBuffer(staticUniformBuffer, 0, uniformValues);

}

const uniformValues = new Float32Array(uniformBUfferSzie / 4);

const uniformBuffer = device.createBuffer({

label: `changing uniforms for obj: ${i}`,

size: uniformBUfferSzie,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

const bindGroup = device.createBindGroup({

label: `bind group for obj: ${i}`,

layout: renderPipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticUniformBuffer } },

{ binding: 1, resource: { buffer: uniformBuffer } }

]

});

objectInfos.push({

scale: rand(0.2, 0.5),

uniformBuffer,

uniformValues,

bindGroup

});

}

function frame() {

const aspect = canvas.width / canvas.height;

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

label: "our basic canvas renderPass",

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

for (const {

scale,

bindGroup,

uniformBuffer,

uniformValues

} of objectInfos) {

uniformValues.set([scale / aspect, scale], kScaleOffset); // set the scale

device.queue.writeBuffer(uniformBuffer, 0, uniformValues);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(3);

}

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

offset: vec2f

};

struct OtherStruct {

scale: vec2f

};

@group(0) @binding(0) var<storage,read> ourStruct: OurStruct;

@group(0) @binding(1) var<storage,read> otherStruct: OtherStruct;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32) -> @builtin(position) vec4f {

let pos = array<vec2f, 3>(

vec2f(0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f(0.5, -0.5) // bottom right

);

return vec4f(pos[vertexIndex] * otherStruct.scale + ourStruct.offset,0.0,1.0);

}

@fragment

fn fs() -> @location(0) vec4f {

return ourStruct.color;

}

Differences between uniform buffers and storage buffers

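The major differences between them are:

1. Uniform buffers can be faster for their typical use case

It really depends on the use case. A typical app needs to draw many different things. Say it's a 3D game. The app might draw cars, buildings, rocks, bushes, people, and so on. Each of those requires passing in orientations and material properties, similar to what our example above passes in. In this case, using a uniform buffer is the recommended solution.

2. Storage buffers can be much larger than uniform buffers

The minimum maximum size of a uniform buffer is 64 KiB.

The minimum maximum size of a storage buffer is 128 MiB.

"Minimum maximum" means that there is a maximum size a buffer of a given type can be, and that every implementation must support a maximum of at least this much. For uniform buffers the maximum size is at least 64 KiB; for storage buffers it is at least 128 MiB. We'll cover limits in another article.

3. Storage buffers can be read/write, uniform buffers are read-only

We saw an example of writing to a storage buffer in the compute shader example in the first article.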
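The size limits in point 2 can be turned into a quick capacity estimate. The following is a minimal sketch, not part of the original example: the helper name maxObjectsPerBinding and the BindingLimits interface are hypothetical, and the default values are simply the guaranteed minimums quoted above. In a real app you would pass the values reported by device.limits (maxUniformBufferBindingSize and maxStorageBufferBindingSize) instead.

```typescript
// Hypothetical helper: how many fixed-size structs fit in a single buffer binding.
interface BindingLimits {
  maxUniformBufferBindingSize: number;
  maxStorageBufferBindingSize: number;
}

// Assumed defaults: the guaranteed minimums. A real device may report larger values.
const kDefaultLimits: BindingLimits = {
  maxUniformBufferBindingSize: 64 * 1024, // 64 KiB
  maxStorageBufferBindingSize: 128 * 1024 * 1024 // 128 MiB
};

function maxObjectsPerBinding(
  unitSizeBytes: number,
  kind: "uniform" | "storage",
  limits: BindingLimits = kDefaultLimits
): number {
  const limit =
    kind === "uniform"
      ? limits.maxUniformBufferBindingSize
      : limits.maxStorageBufferBindingSize;
  return Math.floor(limit / unitSizeBytes);
}

// 32 bytes per object: color (16) + offset (8) + padding (8), as in OurStruct.
const exampleUnitSize = 32;
console.log(maxObjectsPerBinding(exampleUnitSize, "uniform")); // 2048
console.log(maxObjectsPerBinding(exampleUnitSize, "storage")); // 4194304
```

With the guaranteed minimums, a single storage binding holds roughly two thousand times as many of these structs as a uniform binding, which is what makes the instancing approach in the next section practical for large object counts.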

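Instancing with storage buffers

Given the first two points above, let's take our last example and change it to draw all 100 triangles in a single draw call. This is a use case that might fit storage buffers. I say might because WebGPU is similar to other programming languages: there are many ways to achieve the same thing. array.forEach vs for (const element of array) vs for (let i = 0; i < array.length; ++i). Each has its uses. The same is true of WebGPU. Everything we want to do can be achieved in multiple ways. When it comes to drawing triangles, all WebGPU cares about is that we return a value for @builtin(position) from the vertex shader and a color/value for @location(0) from the fragment shader.

The first thing we'll do is change our storage declarations to runtime-sized arrays.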

@group(0) @binding(0) var<storage, read> ourStructs: array<OurStruct>;

@group(0) @binding(1) var<storage, read> otherStructs: array<OtherStruct>;

Then we'll change the shader to use these values:

@vertex fn vs(

@builtin(vertex_index) vertexIndex : u32,

@builtin(instance_index) instanceIndex: u32

) -> @builtin(position) vec4f {

let pos = array(

vec2f( 0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f( 0.5, -0.5) // bottom right

);

let otherStruct = otherStructs[instanceIndex];

let ourStruct = ourStructs[instanceIndex];

return vec4f(

pos[vertexIndex] * otherStruct.scale + ourStruct.offset, 0.0, 1.0);

}

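We added a new parameter to our vertex shader called instanceIndex and gave it the @builtin(instance_index) attribute, which means it gets its value from WebGPU for each "instance" drawn. When we call draw we can pass a second argument for the number of instances, and for each instance drawn, the index of the instance being processed is passed to our function.

Using instanceIndex we can get a specific struct element from our arrays of structs.

We also need to get the color from the correct array element and use it in our fragment shader. The fragment shader doesn't have access to @builtin(instance_index) because that would make no sense. We could pass it as an inter-stage variable, but it is more common to look up the color in the vertex shader and just pass the color. To do this we'll use another struct, like we did in the article on inter-stage variables.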

struct VSOutput {

@builtin(position) position: vec4f,

@location(0) color: vec4f,

}

@vertex fn vs(

@builtin(vertex_index) vertexIndex : u32,

@builtin(instance_index) instanceIndex: u32

) -> VSOutput {

let pos = array(

vec2f( 0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f( 0.5, -0.5) // bottom right

);

let otherStruct = otherStructs[instanceIndex];

let ourStruct = ourStructs[instanceIndex];

var vsOut: VSOutput;

vsOut.position = vec4f(

pos[vertexIndex] * otherStruct.scale + ourStruct.offset, 0.0, 1.0);

vsOut.color = ourStruct.color;

return vsOut;

}

@fragment fn fs(vsOut: VSOutput) -> @location(0) vec4f {

return vsOut.color;

}

Now that we've modified our WGSL shaders, let's update the JavaScript.

const kNumObjects = 100;

const objectInfos = [];

// create 2 storage buffers

const staticUnitSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const changingUnitSize =

2 * 4; // scale is 2 32bit floats (4bytes each)

const staticStorageBufferSize = staticUnitSize * kNumObjects;

const changingStorageBufferSize = changingUnitSize * kNumObjects;

const staticStorageBuffer = device.createBuffer({

label: 'static storage for objects',

size: staticStorageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

const changingStorageBuffer = device.createBuffer({

label: 'changing storage for objects',

size: changingStorageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

// offsets to the various uniform values in float32 indices

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

{

const staticStorageValues = new Float32Array(staticStorageBufferSize / 4);

for (let i = 0; i < kNumObjects; ++i) {

const staticOffset = i * (staticUnitSize / 4);

// These are only set once so set them now

staticStorageValues.set([rand(), rand(), rand(), 1], staticOffset + kColorOffset); // set the color

staticStorageValues.set([rand(-0.9, 0.9), rand(-0.9, 0.9)], staticOffset + kOffsetOffset); // set the offset

objectInfos.push({

scale: rand(0.2, 0.5),

});

}

device.queue.writeBuffer(staticStorageBuffer, 0, staticStorageValues);

}

// a typed array we can use to update the changingStorageBuffer

const storageValues = new Float32Array(changingStorageBufferSize / 4);

const bindGroup = device.createBindGroup({

label: 'bind group for objects',

layout: pipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticStorageBuffer }},

{ binding: 1, resource: { buffer: changingStorageBuffer }},

],

});

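Above we create 2 storage buffers: one for an array of OurStruct and the other for an array of OtherStruct.

We then fill out the values for the array of OurStruct with offsets and colors and upload that data to staticStorageBuffer. We create just one bind group that references both buffers.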
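The 8 bytes of padding in the per-object size follow from WGSL layout rules: OurStruct contains a vec4f, which is 16-byte aligned, so the struct size is rounded up to a multiple of 16, giving 32 bytes (8 floats) per array element. The sketch below mirrors that layout on the CPU and reads one element back the way the shader indexes the array. The helper names packOurStructs and readOurStruct and the OurStructData type are hypothetical, introduced only for illustration.

```typescript
// Assumed layout of one OurStruct array element, in float32 indices:
// [0..3] color, [4..5] offset, [6..7] padding (unused).
const kFloatsPerOurStruct = 8; // 32 bytes / 4 bytes per float
const kStructColorOffset = 0;
const kStructOffsetOffset = 4;

interface OurStructData {
  color: [number, number, number, number];
  offset: [number, number];
}

// Pack an array of structs into one Float32Array, ready for writeBuffer.
function packOurStructs(items: OurStructData[]): Float32Array {
  const data = new Float32Array(items.length * kFloatsPerOurStruct);
  items.forEach((item, i) => {
    const base = i * kFloatsPerOurStruct;
    data.set(item.color, base + kStructColorOffset);
    data.set(item.offset, base + kStructOffsetOffset);
    // The last two floats of each element stay 0: they are padding.
  });
  return data;
}

// Read element `instanceIndex` back, like ourStructs[instanceIndex] in WGSL.
function readOurStruct(data: Float32Array, instanceIndex: number): OurStructData {
  const base = instanceIndex * kFloatsPerOurStruct;
  return {
    color: [data[base], data[base + 1], data[base + 2], data[base + 3]],
    offset: [data[base + 4], data[base + 5]]
  };
}

const examplePacked = packOurStructs([
  { color: [1, 0, 0, 1], offset: [0.5, -0.5] },
  { color: [0, 1, 0, 1], offset: [0.25, 0.75] }
]);
console.log(examplePacked.byteLength, readOurStruct(examplePacked, 1));
```

If the padding were left out, the second element would start at byte 24 on the CPU side while the shader expects it at byte 32, and every instance after the first would read shifted data.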

The new rendering code is:

function render() {

// Get the current texture from the canvas context and

// set it as the texture to render to.

renderPassDescriptor.colorAttachments[0].view =

context.getCurrentTexture().createView();

const encoder = device.createCommandEncoder();

const pass = encoder.beginRenderPass(renderPassDescriptor);

pass.setPipeline(pipeline);

// Set the uniform values in our JavaScript side Float32Array

const aspect = canvas.width / canvas.height;

// set the scales for each object

objectInfos.forEach(({scale}, ndx) => {

const offset = ndx * (changingUnitSize / 4);

storageValues.set([scale / aspect, scale], offset + kScaleOffset); // set the scale

});

// upload all scales at once

device.queue.writeBuffer(changingStorageBuffer, 0, storageValues);

pass.setBindGroup(0, bindGroup);

pass.draw(3, kNumObjects); // call our vertex shader 3 times for each instance

pass.end();

const commandBuffer = encoder.finish();

device.queue.submit([commandBuffer]);

}

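The code above draws kNumObjects instances. For each instance, WebGPU calls the vertex shader 3 times with vertex_index set to 0, 1, 2 and instance_index set to a value from 0 to kNumObjects - 1.

We managed to draw all 100 triangles, each with a different scale, color, and offset, with a single draw call. For situations where you want to draw lots of instances of the same object, this is one way to do it.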
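To make the pairing of vertex_index and instance_index concrete, here is a conceptual sketch that lists the index pairs an instanced draw produces. The function name listInvocations is hypothetical, and the sequential loop is only a mental model: a real GPU runs these invocations in parallel and in no guaranteed order.

```typescript
interface Invocation {
  vertexIndex: number;
  instanceIndex: number;
}

// Conceptual model of draw(vertexCount, instanceCount):
// one vertex shader call per (instance, vertex) pair.
function listInvocations(vertexCount: number, instanceCount: number): Invocation[] {
  const calls: Invocation[] = [];
  for (let instanceIndex = 0; instanceIndex < instanceCount; ++instanceIndex) {
    for (let vertexIndex = 0; vertexIndex < vertexCount; ++vertexIndex) {
      calls.push({ vertexIndex, instanceIndex });
    }
  }
  return calls;
}

const exampleCalls = listInvocations(3, 100);
console.log(exampleCalls.length); // 300
console.log(exampleCalls[exampleCalls.length - 1]); // { vertexIndex: 2, instanceIndex: 99 }
```

So a single draw(3, 100) call results in 300 vertex shader invocations, each of which uses its instance_index to pick the matching elements of the two storage arrays.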

The full code and its result are as follows:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-18 21:28:13

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="006storage-random-triangle2">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./006storage-random-triangle2.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-18 22:22:11

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const rand = (

min: undefined | number = undefined,

max: undefined | number = undefined

) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const kNumObjects = 100;

const staticStorageUnitSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const storageUnitSzie = 2 * 4; // scale is 2 32 bit floats

const staticStorageBufferSize = staticStorageUnitSize * kNumObjects;

const storageBufferSize = storageUnitSzie * kNumObjects;

const staticStorageBuffer = device.createBuffer({

label: "static storage for objects",

size: staticStorageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

const storageBuffer = device.createBuffer({

label: "changing storage for objects",

size: storageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

const staticStorageValues = new Float32Array(staticStorageBufferSize / 4);

const storageValues = new Float32Array(storageBufferSize / 4);

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

const objectInfos: {

scale: number;

}[] = [];

for (let i = 0; i < kNumObjects; ++i) {

const staticOffset = i * (staticStorageUnitSize / 4);

staticStorageValues.set(

[rand(), rand(), rand(), 1],

staticOffset + kColorOffset

);

staticStorageValues.set(

[rand(-0.9, 0.9), rand(-0.9, 0.9)],

staticOffset + kOffsetOffset

);

objectInfos.push({

scale: rand(0.2, 0.5)

});

}

device.queue.writeBuffer(staticStorageBuffer, 0, staticStorageValues);

const bindGroup = device.createBindGroup({

label: "bind group for objects",

layout: renderPipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticStorageBuffer } },

{ binding: 1, resource: { buffer: storageBuffer } }

]

});

function frame() {

const aspect = canvas.width / canvas.height;

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

label: "our basic canvas renderPass",

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

objectInfos.forEach(({ scale }, ndx) => {

const offset = ndx * (storageUnitSzie / 4);

storageValues.set([scale / aspect, scale], offset + kScaleOffset); // set the scale

});

device.queue.writeBuffer(storageBuffer, 0, storageValues);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(3, kNumObjects);

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

offset: vec2f

};

struct OtherStruct {

scale: vec2f

};

struct VSOutput {

@builtin(position) position: vec4f,

@location(0) color: vec4f

};

@group(0) @binding(0) var<storage,read> ourStructs: array<OurStruct>;

@group(0) @binding(1) var<storage,read> otherStructs: array<OtherStruct>;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32,@builtin(instance_index) instanceIndex: u32) -> VSOutput {

let pos = array<vec2f, 3>(

vec2f(0.0, 0.5), // top center

vec2f(-0.5, -0.5), // bottom left

vec2f(0.5, -0.5) // bottom right

);

let otherStruct = otherStructs[instanceIndex];

let ourStruct = ourStructs[instanceIndex];

var vsOut: VSOutput;

vsOut.position = vec4f(pos[vertexIndex] * otherStruct.scale + ourStruct.offset,0.0,1.0);

vsOut.color = ourStruct.color;

return vsOut;

}

@fragment

fn fs(vsOut: VSOutput) -> @location(0) vec4f {

return vsOut.color;

}

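Using storage buffers for vertex data

Until this point we've used a triangle hard-coded directly in our shader. One use case of storage buffers is to store vertex data. Just as we indexed the current storage buffers by instance_index in our example above, we can index another storage buffer with vertex_index to get vertex data.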

struct OurStruct {

color: vec4f,

offset: vec2f,

};

struct OtherStruct {

scale: vec2f,

};

struct Vertex {

position: vec2f,

};

struct VSOutput {

@builtin(position) position: vec4f,

@location(0) color: vec4f,

};

@group(0) @binding(0) var<storage, read> ourStructs: array<OurStruct>;

@group(0) @binding(1) var<storage, read> otherStructs: array<OtherStruct>;

@group(0) @binding(2) var<storage, read> pos: array<Vertex>;

@vertex fn vs(

@builtin(vertex_index) vertexIndex : u32,

@builtin(instance_index) instanceIndex: u32

) -> VSOutput {

let otherStruct = otherStructs[instanceIndex];

let ourStruct = ourStructs[instanceIndex];

var vsOut: VSOutput;

vsOut.position = vec4f(

pos[vertexIndex].position * otherStruct.scale + ourStruct.offset, 0.0, 1.0);

vsOut.color = ourStruct.color;

return vsOut;

}

@fragment fn fs(vsOut: VSOutput) -> @location(0) vec4f {

return vsOut.color;

}
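To check the indexing logic without a GPU, the vertex shader above can be emulated on the CPU. This is a rough sketch under stated assumptions: the function name emulateVertexShader is hypothetical, and the typed arrays are assumed to use the same layouts as the buffers in this article (2 floats per Vertex, 8 floats per OurStruct with the offset at float index 4, 2 floats per OtherStruct).

```typescript
// CPU emulation of:
//   pos[vertexIndex].position * otherStruct.scale + ourStruct.offset
function emulateVertexShader(
  vertexData: Float32Array, // pos: array<Vertex>, 2 floats per vertex
  staticData: Float32Array, // ourStructs, 8 floats per element
  changingData: Float32Array, // otherStructs, 2 floats per element
  vertexIndex: number,
  instanceIndex: number
): [number, number, number, number] {
  const px = vertexData[vertexIndex * 2];
  const py = vertexData[vertexIndex * 2 + 1];
  const sx = changingData[instanceIndex * 2];
  const sy = changingData[instanceIndex * 2 + 1];
  const ox = staticData[instanceIndex * 8 + 4];
  const oy = staticData[instanceIndex * 8 + 5];
  return [px * sx + ox, py * sy + oy, 0, 1];
}

// The hard-coded triangle from earlier, now stored as vertex data.
const exampleVertices = new Float32Array([0, 0.5, -0.5, -0.5, 0.5, -0.5]);
// Two instances; only the offset fields (float indices 4 and 5) are filled in here.
const exampleStatic = new Float32Array(16);
exampleStatic.set([0.25, -0.5], 8 + 4);
const exampleChanging = new Float32Array([1, 1, 0.5, 0.25]);

console.log(emulateVertexShader(exampleVertices, exampleStatic, exampleChanging, 2, 1));
```

The vertex data is shared by every instance, while the scale and offset are selected per instance, which is the same split the shader makes between vertex_index and instance_index.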

Now we need to set up one more storage buffer with some vertex data. First let's make a function to generate some vertex data. Let's make a circle.

function createCircleVertices({

radius = 1,

numSubdivisions = 24,

innerRadius = 0,

startAngle = 0,

endAngle = Math.PI * 2,

} = {}) {

// 2 triangles per subdivision, 3 verts per tri, 2 values (xy) each.

const numVertices = numSubdivisions * 3 * 2;

const vertexData = new Float32Array(numSubdivisions * 2 * 3 * 2);

let offset = 0;

const addVertex = (x, y) => {

vertexData[offset++] = x;

vertexData[offset++] = y;

};

// 2 triangles per subdivision

//

// 0--1 4

// | / /|

// |/ / |

// 2 3--5

for (let i = 0; i < numSubdivisions; ++i) {

const angle1 = startAngle + (i + 0) * (endAngle - startAngle) / numSubdivisions;

const angle2 = startAngle + (i + 1) * (endAngle - startAngle) / numSubdivisions;

const c1 = Math.cos(angle1);

const s1 = Math.sin(angle1);

const c2 = Math.cos(angle2);

const s2 = Math.sin(angle2);

// first triangle

addVertex(c1 * radius, s1 * radius);

addVertex(c2 * radius, s2 * radius);

addVertex(c1 * innerRadius, s1 * innerRadius);

// second triangle

addVertex(c1 * innerRadius, s1 * innerRadius);

addVertex(c2 * radius, s2 * radius);

addVertex(c2 * innerRadius, s2 * innerRadius);

}

return {

vertexData,

numVertices,

};

}

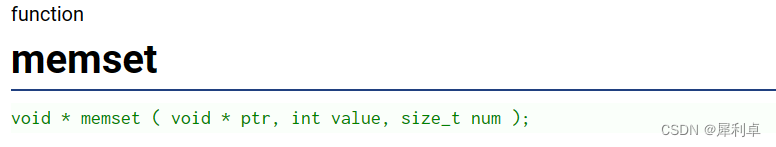

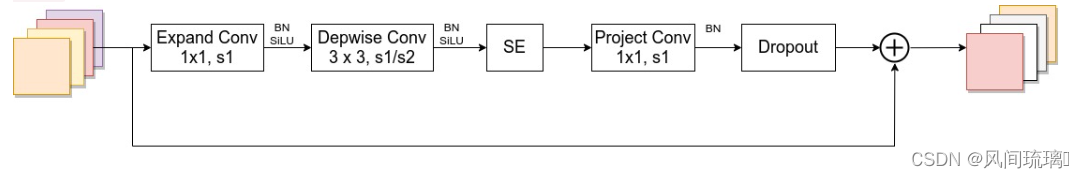

The code above builds a circle out of triangles like those pictured above.
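As a sanity check on the buffer size, the vertex and byte counts produced by createCircleVertices can be worked out ahead of time. This is a small sketch; the helper name circleVertexStats is hypothetical and it only reproduces the arithmetic, not the vertex generation itself.

```typescript
// Each subdivision contributes 2 triangles of 3 vertices, and each vertex is an (x, y) pair.
function circleVertexStats(numSubdivisions: number) {
  const numVertices = numSubdivisions * 2 * 3;
  const numFloats = numVertices * 2;
  const byteLength = numFloats * Float32Array.BYTES_PER_ELEMENT;
  return { numVertices, numFloats, byteLength };
}

// Default of 24 subdivisions.
console.log(circleVertexStats(24)); // { numVertices: 144, numFloats: 288, byteLength: 1152 }
```

Because the Vertex struct holds a single vec2f, each array element occupies 8 bytes in the storage buffer, so the tightly packed Float32Array can be uploaded as-is with no padding.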

We can use it to fill a storage buffer with the vertices of a circle:

// setup a storage buffer with vertex data

const { vertexData, numVertices } = createCircleVertices({

radius: 0.5,

innerRadius: 0.25,

});

const vertexStorageBuffer = device.createBuffer({

label: 'storage buffer vertices',

size: vertexData.byteLength,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

device.queue.writeBuffer(vertexStorageBuffer, 0, vertexData);

Then we need to add it to our bind group.

const bindGroup = device.createBindGroup({

label: 'bind group for objects',

layout: pipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticStorageBuffer }},

{ binding: 1, resource: { buffer: changingStorageBuffer }},

{ binding: 2, resource: { buffer: vertexStorageBuffer }},

],

});

Finally, at render time, we need to ask to render all the vertices in the circle.

pass.draw(numVertices, kNumObjects);

The full code and its result are as follows:

HTML:

<!--

* @Description:

* @Author: tianyw

* @Date: 2022-11-11 12:50:23

* @LastEditTime: 2023-09-19 21:12:49

* @LastEditors: tianyw

-->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>001hello-triangle</title>

<style>

html,

body {

margin: 0;

width: 100%;

height: 100%;

background: #000;

color: #fff;

display: flex;

text-align: center;

flex-direction: column;

justify-content: center;

}

div,

canvas {

height: 100%;

width: 100%;

}

</style>

</head>

<body>

<div id="006storage-srandom-circle">

<canvas id="gpucanvas"></canvas>

</div>

<script type="module" src="./006storage-srandom-circle.ts"></script>

</body>

</html>

TS:

/*

* @Description:

* @Author: tianyw

* @Date: 2023-04-08 20:03:35

* @LastEditTime: 2023-09-19 21:28:58

* @LastEditors: tianyw

*/

export type SampleInit = (params: {

canvas: HTMLCanvasElement;

}) => void | Promise<void>;

import shaderWGSL from "./shaders/shader.wgsl?raw";

const rand = (

min: undefined | number = undefined,

max: undefined | number = undefined

) => {

if (min === undefined) {

min = 0;

max = 1;

} else if (max === undefined) {

max = min;

min = 0;

}

return min + Math.random() * (max - min);

};

function createCircleVertices({

radius = 1,

numSubdivisions = 24,

innerRadius = 0,

startAngle = 0,

endAngle = Math.PI * 2

} = {}) {

// 2 triangles per subdivision, 3 verts per tri, 2 values(xy) each

const numVertices = numSubdivisions * 3 * 2;

const vertexData = new Float32Array(numSubdivisions * 2 * 3 * 2);

let offset = 0;

const addVertex = (x: number, y: number) => {

vertexData[offset++] = x;

vertexData[offset++] = y;

};

// 2 triangles per subdivision

for (let i = 0; i < numSubdivisions; ++i) {

const angle1 =

startAngle + ((i + 0) * (endAngle - startAngle)) / numSubdivisions;

const angle2 =

startAngle + ((i + 1) * (endAngle - startAngle)) / numSubdivisions;

const c1 = Math.cos(angle1);

const s1 = Math.sin(angle1);

const c2 = Math.cos(angle2);

const s2 = Math.sin(angle2);

// first triangle

addVertex(c1 * radius, s1 * radius);

addVertex(c2 * radius, s2 * radius);

addVertex(c1 * innerRadius, s1 * innerRadius);

// second triangle

addVertex(c1 * innerRadius, s1 * innerRadius);

addVertex(c2 * radius, s2 * radius);

addVertex(c2 * innerRadius, s2 * innerRadius);

}

return {

vertexData,

numVertices

};

}

const init: SampleInit = async ({ canvas }) => {

const adapter = await navigator.gpu?.requestAdapter();

if (!adapter) return;

const device = await adapter?.requestDevice();

if (!device) {

console.error("need a browser that supports WebGPU");

return;

}

const context = canvas.getContext("webgpu");

if (!context) return;

const devicePixelRatio = window.devicePixelRatio || 1;

canvas.width = canvas.clientWidth * devicePixelRatio;

canvas.height = canvas.clientHeight * devicePixelRatio;

const presentationFormat = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format: presentationFormat,

alphaMode: "premultiplied"

});

const shaderModule = device.createShaderModule({

label: "our hardcoded rgb triangle shaders",

code: shaderWGSL

});

const renderPipeline = device.createRenderPipeline({

label: "hardcoded rgb triangle pipeline",

layout: "auto",

vertex: {

module: shaderModule,

entryPoint: "vs"

},

fragment: {

module: shaderModule,

entryPoint: "fs",

targets: [

{

format: presentationFormat

}

]

},

primitive: {

// topology: "line-list"

// topology: "line-strip"

// topology: "point-list"

topology: "triangle-list"

// topology: "triangle-strip"

}

});

const kNumObjects = 100;

const staticStorageUnitSize =

4 * 4 + // color is 4 32bit floats (4bytes each)

2 * 4 + // offset is 2 32bit floats (4bytes each)

2 * 4; // padding

const storageUnitSzie = 2 * 4; // scale is 2 32 bit floats

const staticStorageBufferSize = staticStorageUnitSize * kNumObjects;

const storageBufferSize = storageUnitSzie * kNumObjects;

const staticStorageBuffer = device.createBuffer({

label: "static storage for objects",

size: staticStorageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

const storageBuffer = device.createBuffer({

label: "changing storage for objects",

size: storageBufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

const staticStorageValues = new Float32Array(staticStorageBufferSize / 4);

const storageValues = new Float32Array(storageBufferSize / 4);

const kColorOffset = 0;

const kOffsetOffset = 4;

const kScaleOffset = 0;

const objectInfos: {

scale: number;

}[] = [];

for (let i = 0; i < kNumObjects; ++i) {

const staticOffset = i * (staticStorageUnitSize / 4);

staticStorageValues.set(

[rand(), rand(), rand(), 1],

staticOffset + kColorOffset

);

staticStorageValues.set(

[rand(-0.9, 0.9), rand(-0.9, 0.9)],

staticOffset + kOffsetOffset

);

objectInfos.push({

scale: rand(0.2, 0.5)

});

}

device.queue.writeBuffer(staticStorageBuffer, 0, staticStorageValues);

const { vertexData, numVertices } = createCircleVertices({

radius: 0.5,

innerRadius: 0.25

});

const vertexStorageBuffer = device.createBuffer({

label: "storage buffer vertices",

size: vertexData.byteLength,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST

});

device.queue.writeBuffer(vertexStorageBuffer, 0, vertexData);

const bindGroup = device.createBindGroup({

label: "bind group for objects",

layout: renderPipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: staticStorageBuffer } },

{ binding: 1, resource: { buffer: storageBuffer } },

{ binding: 2, resource: { buffer: vertexStorageBuffer } }

]

});

function frame() {

const aspect = canvas.width / canvas.height;

const renderCommandEncoder = device.createCommandEncoder({

label: "render vert frag"

});

if (!context) return;

const textureView = context.getCurrentTexture().createView();

const renderPassDescriptor: GPURenderPassDescriptor = {

label: "our basic canvas renderPass",

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.0, g: 0.0, b: 0.0, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

]

};

const renderPass =

renderCommandEncoder.beginRenderPass(renderPassDescriptor);

renderPass.setPipeline(renderPipeline);

objectInfos.forEach(({ scale }, ndx) => {

const offset = ndx * (storageUnitSzie / 4);

storageValues.set([scale / aspect, scale], offset + kScaleOffset); // set the scale

});

device.queue.writeBuffer(storageBuffer, 0, storageValues);

renderPass.setBindGroup(0, bindGroup);

renderPass.draw(numVertices, kNumObjects);

renderPass.end();

const renderBuffer = renderCommandEncoder.finish();

device.queue.submit([renderBuffer]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

};

const canvas = document.getElementById("gpucanvas") as HTMLCanvasElement;

init({ canvas: canvas });

Shaders:

shader:

struct OurStruct {

color: vec4f,

offset: vec2f

};

struct OtherStruct {

scale: vec2f

};

struct Vertex {

position: vec2f

}

struct VSOutput {

@builtin(position) position: vec4f,

@location(0) color: vec4f

};

@group(0) @binding(0) var<storage,read> ourStructs: array<OurStruct>;

@group(0) @binding(1) var<storage,read> otherStructs: array<OtherStruct>;

@group(0) @binding(2) var<storage,read> pos: array<Vertex>;

@vertex

fn vs(@builtin(vertex_index) vertexIndex: u32, @builtin(instance_index) instanceIndex: u32) -> VSOutput {

let otherStruct = otherStructs[instanceIndex];

let ourStruct = ourStructs[instanceIndex];

var vsOut: VSOutput;

vsOut.position = vec4f(pos[vertexIndex].position * otherStruct.scale + ourStruct.offset, 0.0, 1.0);

vsOut.color = ourStruct.color;

return vsOut;

}

@fragment

fn fs(vsOut: VSOutput) -> @location(0) vec4f {

return vsOut.color;

}

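Passing vertices via storage buffers is gaining popularity. I'm told, though, that on some older devices it is slower than the classic way, which we'll cover in the next article on vertex buffers.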