Introduction

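Q-learning is a value-based reinforcement learning algorithm. The "Q" stands for quality: the Q function Q(state, action) gives the quality of taking action `action` in state `state`, that is, the Q value (the expected cumulative reward) that can be obtained from there. The algorithm aims to maximize the expected reward by choosing, in each state, the action with the highest Q value among all possible actions.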

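Q-learning uses a Q table to record the estimated Q value of every action in every state. Before the agent explores the environment, the Q table is initialized arbitrarily (randomly, or to all zeros as in the code in this article). As the agent explores, it iteratively updates Q(s, a) with an update rule derived from the Bellman equation. With more iterations the agent knows the environment better and the Q function is fitted better, until it converges or the preset maximum number of iterations is reached.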
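To make the update concrete, here is a minimal sketch of a single tabular update, Q(s, a) += alpha * (r + gamma * max Q(s', ·) - Q(s, a)). The toy Q table, the reward, and the values of `ALPHA` and `GAMMA` are made up for illustration; `q_update` is a hypothetical helper, not part of the code in this article.

```python
# One tabular Q-learning update on a toy Q table (all numbers are made up).
ALPHA = 0.1   # learning rate (assumed value)
GAMMA = 0.9   # discount factor (assumed value)

# Q table as a list of rows: q_table[state][action]
q_table = [
    [0.0, 0.0],   # state 0
    [2.0, 5.0],   # state 1
]


def q_update(q_table, state, action, reward, next_state, done):
    """Apply Q(s,a) += alpha * (target - Q(s,a)) in place and return the target."""
    # No bootstrapping from next_state once the episode has ended
    future = 0.0 if done else GAMMA * max(q_table[next_state])
    target = reward + future
    q_table[state][action] += ALPHA * (target - q_table[state][action])
    return target


target = q_update(q_table, state=0, action=1, reward=-1.0, next_state=1, done=False)
print(target)          # target = -1 + 0.9 * 5
print(q_table[0][1])   # new estimate = 0 + 0.1 * (target - 0)
```

Only the visited entry Q(0, 1) changes; it moves a fraction `ALPHA` of the way from its old value toward the target.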

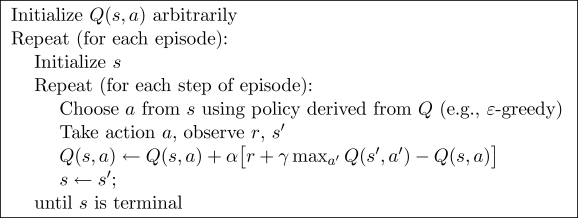

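The pseudocode is as follows:

The whole algorithm keeps updating the values in the Q table and then uses the new values to decide which action to take in a given state. Q-learning is an off-policy algorithm: because of the max over actions in the update, the Q table can be updated from experience other than what the agent is currently going through (experience from long ago, or even someone else's experience). In this example, however, we do not make use of that off-policy property. We use Q-learning in an on-policy-like way: the agent learns from each transition on the spot and applies it immediately. We will see the difference between on-policy and off-policy later in the [Deep Q network (off-policy)] tutorial. A later tutorial also covers an on-policy method (Sarsa), and we will compare the two then.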
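The role of the max can be sketched by putting the two update targets side by side. This is an illustrative sketch only: the function names, the Q values, and `GAMMA` are assumptions, and the Sarsa target is given in its standard textbook form rather than taken from this article.

```python
GAMMA = 0.9  # discount factor (assumed value)


def q_learning_target(reward, next_q_values, gamma=GAMMA):
    """Off-policy target: bootstrap from the best next action, whatever is taken."""
    return reward + gamma * max(next_q_values)


def sarsa_target(reward, next_q_values, next_action, gamma=GAMMA):
    """On-policy target: bootstrap from the action the agent really takes next."""
    return reward + gamma * next_q_values[next_action]


next_q_values = [1.0, 3.0]   # made-up Q values of the next state
next_action = 0              # suppose exploration picked the worse action

ql = q_learning_target(-1.0, next_q_values)            # -1 + 0.9 * 3.0
sa = sarsa_target(-1.0, next_q_values, next_action)    # -1 + 0.9 * 1.0
print(ql, sa)
```

The Q-learning target ignores which action is executed next, which is why its updates do not have to come from the policy currently being followed.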

Hands-on implementation

We use CliffWalking-v0 from OpenAI's gym as the environment.

#!/usr/bin/env python
# -*- coding:utf-8 -*-
import time
import gym
import numpy as np
import gridworld  # visualization helper, listed at the end of this article

# Q-learning algorithm
class QLearning():
    def __init__(self, num_states, num_actions, e_greed=0.1, lr=0.9, gamma=0.8):
        # Build the Q table: one row per state, one column per action
        self.Q = np.zeros((num_states, num_actions))
        self.e_greed = e_greed  # exploration probability (epsilon)
        self.num_states = num_states
        self.num_actions = num_actions
        self.lr = lr  # learning rate
        self.gamma = gamma  # discount factor

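    def predict(self, state):
        """
        Pick the greedy action for the current state.
        :param state: index of the current state
        :return: index of an action with the largest Q value
        """
        # Slice out the Q values of all actions in the current state
        Q_list = self.Q[state, :]
        # Choose at random among the maxima, so that ties are not always
        # resolved in favor of the first action
        action = np.random.choice(np.flatnonzero(Q_list == Q_list.max()))
        return action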

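    def action(self, state):
        """
        Select an action with the epsilon-greedy rule.
        :param state: index of the current state
        :return: index of the selected action
        """
        # Explore: pick a random action with probability e_greed
        if np.random.uniform(0, 1) < self.e_greed:
            action = np.random.choice(self.num_actions)
        else:  # Exploit: pick the action with the largest Q value
            action = self.predict(state)
        return action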

    def learn(self, state, action, reward, next_state, done):
        cur_Q = self.Q[state, action]
        # When the episode has ended there is no next state to bootstrap from,
        # so the (1 - done) factor removes the discounted future term
        target_Q = reward + (1 - float(done)) * self.gamma * self.Q[next_state, :].max()
        self.Q[state, action] += self.lr * (target_Q - cur_Q)

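# Training: run one episode and learn from every step
def train_episode(env, agent, is_render):
    total_reward = 0
    # Reset the environment (gym >= 0.26 returns (observation, info))
    state, _ = env.reset()
    while True:
        action = agent.action(state)
        # Take the action; gym >= 0.26 returns
        # (observation, reward, terminated, truncated, info)
        next_state, reward, terminated, truncated, _ = env.step(action)
        # Update the Q table; only a true terminal state stops bootstrapping
        agent.learn(state, action, reward, next_state, terminated)
        # Move on to the next step
        state = next_state
        total_reward += reward
        if is_render:
            env.render()
        # Stop on termination or on a time-limit truncation
        if terminated or truncated:
            break
    return total_reward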

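# Testing: run one episode with the greedy policy (no exploration)
# Note: is_render is not used; the test run is always rendered
def test_episode(env, agent, is_render=False):
    total_reward = 0
    # Reset the environment
    state, _ = env.reset()
    while True:
        action = agent.predict(state)
        next_state, reward, terminated, truncated, _ = env.step(action)
        state = next_state
        total_reward += reward
        env.render()
        time.sleep(0.5)
        if terminated or truncated:
            break
    return total_reward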

# Training driver
def train(env, episodes=500, lr=0.1, gamma=0.9, e_greed=0.1):
    agent = QLearning(
        num_states=env.observation_space.n,
        num_actions=env.action_space.n,
        lr=lr,
        gamma=gamma,
        e_greed=e_greed
    )
    # Train for `episodes` episodes
    for e in range(episodes):
        # Render one episode out of every 50
        is_render = (e % 50 == 0)
        ep_reward = train_episode(env, agent, is_render)
        print('Episode %s : reward= %.1f' % (e, ep_reward))
    # After training, test the learned policy
    test_reward = test_episode(env, agent)
    print('test_reward= %.1f' % (test_reward))

if __name__ == '__main__':
    env = gym.make("CliffWalking-v0")
    env = gridworld.CliffWalkingWapper(env)
    train(env)

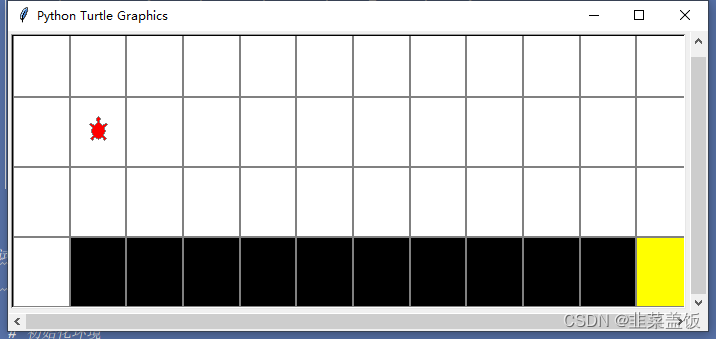

Run result
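The random tie-breaking in `predict` matters most early in training, when the Q table is all zeros and every action ties. The following dependency-free sketch compares "first maximum" with "random among maxima"; the helper names are hypothetical and the standard `random` module stands in for the NumPy calls used in the article.

```python
import random


def greedy_first(q_row):
    """Index of the first maximum (what a plain argmax would return)."""
    return q_row.index(max(q_row))


def greedy_random_tie(q_row, rng):
    """Index of a maximum chosen uniformly at random among all maxima."""
    best = max(q_row)
    candidates = [i for i, q in enumerate(q_row) if q == best]
    return rng.choice(candidates)


rng = random.Random(0)              # fixed seed so the run is repeatable
fresh_row = [0.0, 0.0, 0.0, 0.0]    # an untrained state: all actions tie

first_picks = {greedy_first(fresh_row) for _ in range(200)}
random_picks = {greedy_random_tie(fresh_row, rng) for _ in range(200)}
print(first_picks)    # a single action, always index 0
print(random_picks)   # more than one distinct action
```

With first-maximum selection, an untrained greedy agent would keep repeating action 0 in every unseen state; random tie-breaking spreads those early choices across all tied actions.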

Appendix: helper module

This is the gridworld module imported above; it is used to visualize the game screen.

# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# -*- coding: utf-8 -*-
import gym
import turtle
import numpy as np

# turtle tutorial : https://docs.python.org/3.3/library/turtle.html

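def GridWorld(gridmap=None, is_slippery=False):
    if gridmap is None:
        gridmap = ['SFFF', 'FHFH', 'FFFH', 'HFFG']
    # "FrozenLake-v1" is the id in gym releases that use the 5-value step API;
    # is_slippery is passed through instead of being hard-coded to False
    env = gym.make("FrozenLake-v1", desc=gridmap, is_slippery=is_slippery)
    env = FrozenLakeWapper(env)
    return env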

class FrozenLakeWapper(gym.Wrapper):
    def __init__(self, env):
        gym.Wrapper.__init__(self, env)
        # Read the map from the base environment, below any other wrappers
        self.max_y = env.unwrapped.desc.shape[0]
        self.max_x = env.unwrapped.desc.shape[1]
        self.t = None
        self.unit = 50

    def draw_box(self, x, y, fillcolor='', line_color='gray'):
        # Draw one filled grid cell whose lower-left corner is (x, y)
        self.t.up()
        self.t.goto(x * self.unit, y * self.unit)
        self.t.color(line_color)
        self.t.fillcolor(fillcolor)
        self.t.setheading(90)
        self.t.down()
        self.t.begin_fill()
        for _ in range(4):
            self.t.forward(self.unit)
            self.t.right(90)
        self.t.end_fill()

    def move_player(self, x, y):
        # Move the player marker to the center of cell (x, y)
        self.t.up()
        self.t.setheading(90)
        self.t.fillcolor('red')
        self.t.goto((x + 0.5) * self.unit, (y + 0.5) * self.unit)

    def render(self):
        # Draw the static map once, on the first call
        if self.t is None:
            self.t = turtle.Turtle()
            self.wn = turtle.Screen()
            self.wn.setup(self.unit * self.max_x + 100,
                          self.unit * self.max_y + 100)
            self.wn.setworldcoordinates(0, 0, self.unit * self.max_x,
                                        self.unit * self.max_y)
            self.t.shape('circle')
            self.t.width(2)
            self.t.speed(0)
            self.t.color('gray')
            desc = self.unwrapped.desc
            for i in range(desc.shape[0]):
                for j in range(desc.shape[1]):
                    x = j
                    y = self.max_y - 1 - i
                    if desc[i][j] == b'S':  # Start
                        self.draw_box(x, y, 'white')
                    elif desc[i][j] == b'F':  # Frozen ice
                        self.draw_box(x, y, 'white')
                    elif desc[i][j] == b'G':  # Goal
                        self.draw_box(x, y, 'yellow')
                    elif desc[i][j] == b'H':  # Hole
                        self.draw_box(x, y, 'black')
                    else:
                        self.draw_box(x, y, 'white')
            self.t.shape('turtle')

        # Convert the current state index to grid coordinates (y = 0 is the bottom row)
        state = self.unwrapped.s
        x_pos = state % self.max_x
        y_pos = self.max_y - 1 - state // self.max_x
        self.move_player(x_pos, y_pos)

class CliffWalkingWapper(gym.Wrapper):
    def __init__(self, env):
        gym.Wrapper.__init__(self, env)
        self.t = None
        self.unit = 50
        self.max_x = 12
        self.max_y = 4

    def draw_x_line(self, y, x0, x1, color='gray'):
        # Draw a horizontal line from (x0, y) to (x1, y)
        assert x1 > x0
        self.t.color(color)
        self.t.setheading(0)
        self.t.up()
        self.t.goto(x0, y)
        self.t.down()
        self.t.forward(x1 - x0)

    def draw_y_line(self, x, y0, y1, color='gray'):
        # Draw a vertical line from (x, y0) to (x, y1)
        assert y1 > y0
        self.t.color(color)
        self.t.setheading(90)
        self.t.up()
        self.t.goto(x, y0)
        self.t.down()
        self.t.forward(y1 - y0)

    def draw_box(self, x, y, fillcolor='', line_color='gray'):
        # Draw one filled grid cell whose lower-left corner is (x, y)
        self.t.up()
        self.t.goto(x * self.unit, y * self.unit)
        self.t.color(line_color)
        self.t.fillcolor(fillcolor)
        self.t.setheading(90)
        self.t.down()
        self.t.begin_fill()
        for _ in range(4):
            self.t.forward(self.unit)
            self.t.right(90)
        self.t.end_fill()

    def move_player(self, x, y):
        # Move the player marker to the center of cell (x, y)
        self.t.up()
        self.t.setheading(90)
        self.t.fillcolor('red')
        self.t.goto((x + 0.5) * self.unit, (y + 0.5) * self.unit)

    def render(self):
        # Draw the static grid once, on the first call
        if self.t is None:
            self.t = turtle.Turtle()
            self.wn = turtle.Screen()
            self.wn.setup(self.unit * self.max_x + 100,
                          self.unit * self.max_y + 100)
            self.wn.setworldcoordinates(0, 0, self.unit * self.max_x,
                                        self.unit * self.max_y)
            self.t.shape('circle')
            self.t.width(2)
            self.t.speed(0)
            self.t.color('gray')
            # Outer border
            for _ in range(2):
                self.t.forward(self.max_x * self.unit)
                self.t.left(90)
                self.t.forward(self.max_y * self.unit)
                self.t.left(90)
            # Inner grid lines
            for i in range(1, self.max_y):
                self.draw_x_line(
                    y=i * self.unit, x0=0, x1=self.max_x * self.unit)
            for i in range(1, self.max_x):
                self.draw_y_line(
                    x=i * self.unit, y0=0, y1=self.max_y * self.unit)
            # Cliff cells (black) along the bottom row and the goal (yellow)
            for i in range(1, self.max_x - 1):
                self.draw_box(i, 0, 'black')
            self.draw_box(self.max_x - 1, 0, 'yellow')
            self.t.shape('turtle')

        # Convert the current state index to grid coordinates (y = 0 is the bottom row)
        state = self.unwrapped.s
        x_pos = state % self.max_x
        y_pos = self.max_y - 1 - state // self.max_x
        self.move_player(x_pos, y_pos)

if __name__ == '__main__':
    # Environment 1: FrozenLake; the ice can be configured as slippery or not
    # 0 left, 1 down, 2 right, 3 up
    env = gym.make("FrozenLake-v1", is_slippery=False)
    env = FrozenLakeWapper(env)

    # Environment 2: CliffWalking
    # env = gym.make("CliffWalking-v0")  # 0 up, 1 right, 2 down, 3 left
    # env = CliffWalkingWapper(env)

    # Environment 3: custom grid world with a configurable map.
    # S is the Start, F is Floor, H is a Hole, G is the Goal
    # gridmap = [
    #     'SFFF',
    #     'FHFF',
    #     'FFFF',
    #     'HFGF']
    # env = GridWorld(gridmap)

    env.reset()
    for step in range(10):
        action = np.random.randint(0, 4)
        # gym >= 0.26 returns (observation, reward, terminated, truncated, info)
        obs, reward, terminated, truncated, info = env.step(action)
        done = terminated or truncated
        print('step {}: action {}, obs {}, reward {}, done {}, info {}'.format(
            step, action, obs, reward, done, info))
        env.render()  # render one frame
        if done:
            env.reset()  # start a new episode before stepping again
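Both wrappers turn the integer state into drawing coordinates in the same way. The sketch below isolates that conversion; `state_to_xy` is a hypothetical helper written for illustration, with defaults taken from the 12 x 4 grid size set in `CliffWalkingWapper`.

```python
def state_to_xy(state, max_x=12, max_y=4):
    """Map a row-major state index to (x, y) with y = 0 at the bottom row."""
    x = state % max_x                 # column, counted from the left
    y = max_y - 1 - state // max_x    # row, flipped so the top row has the largest y
    return x, y


print(state_to_xy(0))    # (0, 3): top-left cell
print(state_to_xy(36))   # (0, 0): bottom-left cell
print(state_to_xy(47))   # (11, 0): bottom-right cell
```

The flip in `y` is needed because state indices count rows from the top, while the turtle world coordinates set by `setworldcoordinates` place the origin at the bottom left. State 47 lands on (11, 0), the cell the wrapper paints yellow as the goal.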