1. Garbled Text

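To fix garbled Chinese text, use chardet.detect() to detect the encoding of the raw response bytes, then decode the response with that encoding.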

For details, see: "Python爬虫解决中文乱码" (Fixing garbled Chinese text in Python crawlers), from 脑子不好真君's blog on CSDN.
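As a minimal sketch of why the garbling appears, the snippet below uses only the standard library. The sample string and the choice of ISO-8859-1 as the wrong codec are assumptions for illustration. That codec is what requests falls back to for text responses whose headers declare no charset, which is one common cause of garbled text, though not necessarily what this site does.

```python
# Illustrative only: the sample text and the "wrong" codec are assumptions.
text = "三国演义"
raw = text.encode("utf-8")  # bytes as a server might send them

# Decoding with the wrong codec does not raise; it silently yields mojibake.
garbled = raw.decode("iso-8859-1")

# Decoding with the codec that matches the bytes restores the text.
restored = raw.decode("utf-8")

print(repr(garbled))
print(restored)
```

Detecting the encoding from the bytes themselves, as chardet.detect() does, avoids depending on what the response headers declare.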

2. Code

# Scrape "Romance of the Three Kingdoms" (三国演义)
import requests
import chardet
from bs4 import BeautifulSoup

url = 'https://www.shicimingju.com/book/sanguoyanyi.html'
headers = {
    'User-Agent':
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36 Edg/117.0.2045.47'
}

resp = requests.get(url=url, headers=headers)
# Detect the encoding from the raw bytes; chardet may report None, so fall back to UTF-8.
encoding = chardet.detect(resp.content)["encoding"] or 'utf-8'
# print(encoding)
resp.encoding = encoding
page_text = resp.text
# print(page_text)

# Each <li> in the table of contents holds the link to one chapter.
soup = BeautifulSoup(page_text, 'lxml')
li_list = soup.select('.book-mulu > ul > li')
# print(li_list)

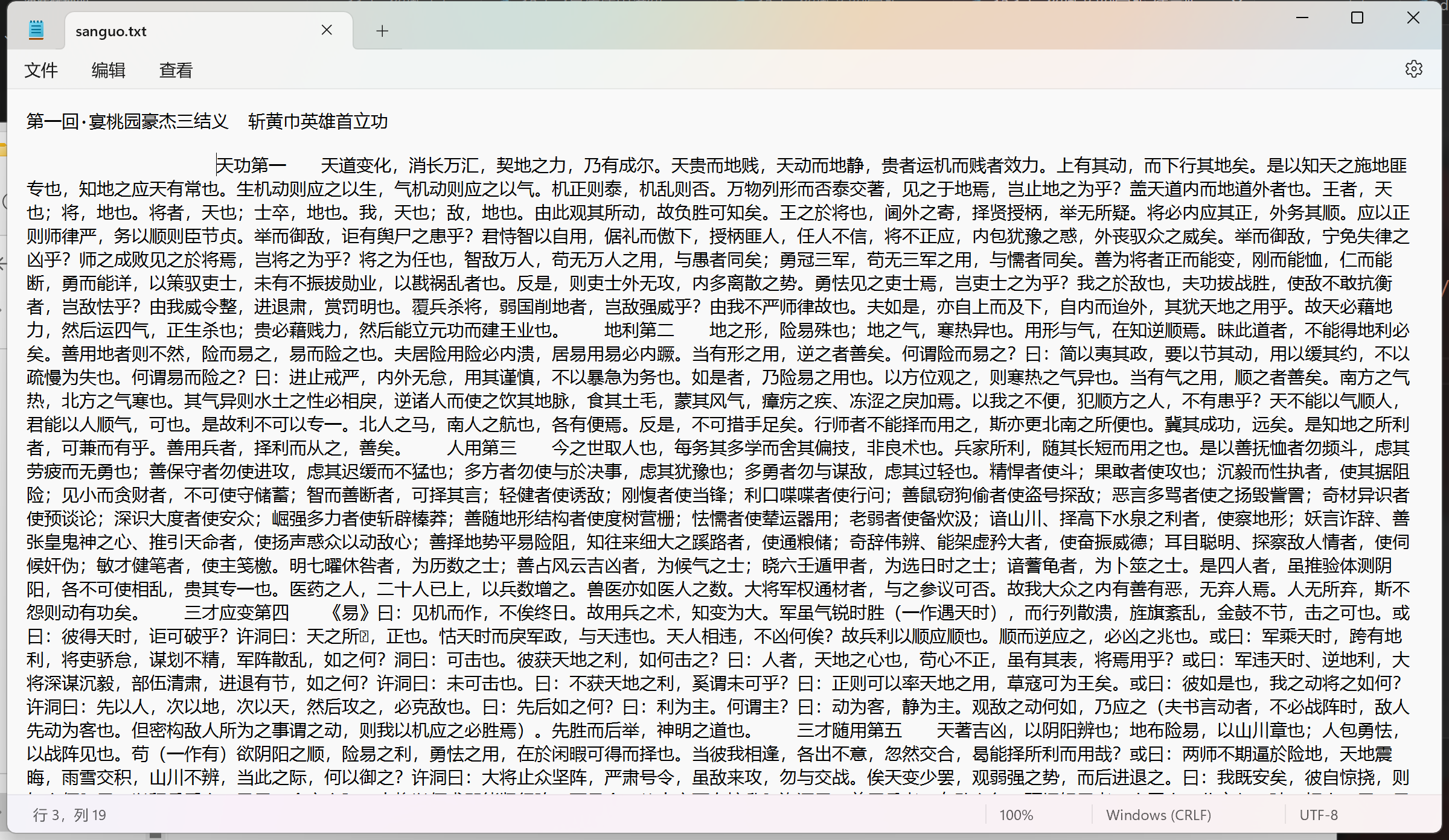

# Write the output file as UTF-8, independent of the encoding detected for each page.
with open('D:\\Programming\\Microsoft VS Code Data\\WebCrawler\\data\\sanguo\\sanguo.txt',
          'a+',
          encoding='utf-8',
          ) as fp:
    for li in li_list:
        title = li.a.string
        zhangjie_url = 'https://www.shicimingju.com' + li.a['href']
        zhangjie_page = requests.get(url=zhangjie_url, headers=headers)
        # Detect each chapter page's encoding separately before decoding it.
        encoding = chardet.detect(zhangjie_page.content)['encoding'] or 'utf-8'
        zhangjie_page.encoding = encoding
        # print(encoding)
        zhangjie_page_text = zhangjie_page.text
        zhangjie_soup = BeautifulSoup(zhangjie_page_text, 'lxml')
        div_content = zhangjie_soup.find('div', class_='chapter_content')
        content = div_content.text
        fp.write(title + '\n' + content + '\n')
        print(title, 'scraped successfully!')
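The script depends on two details that are worth isolating: the encoding reported by chardet.detect(), which can be None when detection fails, and the chapter URL built from li.a['href']. The sketch below is one possible way to handle both with the standard library. The helper names pick_encoding and chapter_url are hypothetical, and the sample href is an assumed format, not taken from the site.

```python
import codecs
from urllib.parse import urljoin

# Index page URL from the script above.
BASE_URL = "https://www.shicimingju.com/book/sanguoyanyi.html"


def pick_encoding(detected, default="utf-8"):
    """Return `detected` if Python knows that codec, otherwise `default`."""
    if not detected:
        # Detection failed (None or empty string).
        return default
    try:
        codecs.lookup(detected)
    except LookupError:
        # The detector named a codec Python cannot decode with.
        return default
    return detected


def chapter_url(href, base=BASE_URL):
    """Resolve a chapter link against the index page URL."""
    return urljoin(base, href)


print(pick_encoding(None))
print(pick_encoding("GB2312"))
print(chapter_url("/book/sanguoyanyi/1.html"))  # sample href, assumed format
```

In the script, pick_encoding could wrap each chardet.detect(...)['encoding'] lookup, and chapter_url could replace the string concatenation, so that both relative and absolute href values resolve correctly.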