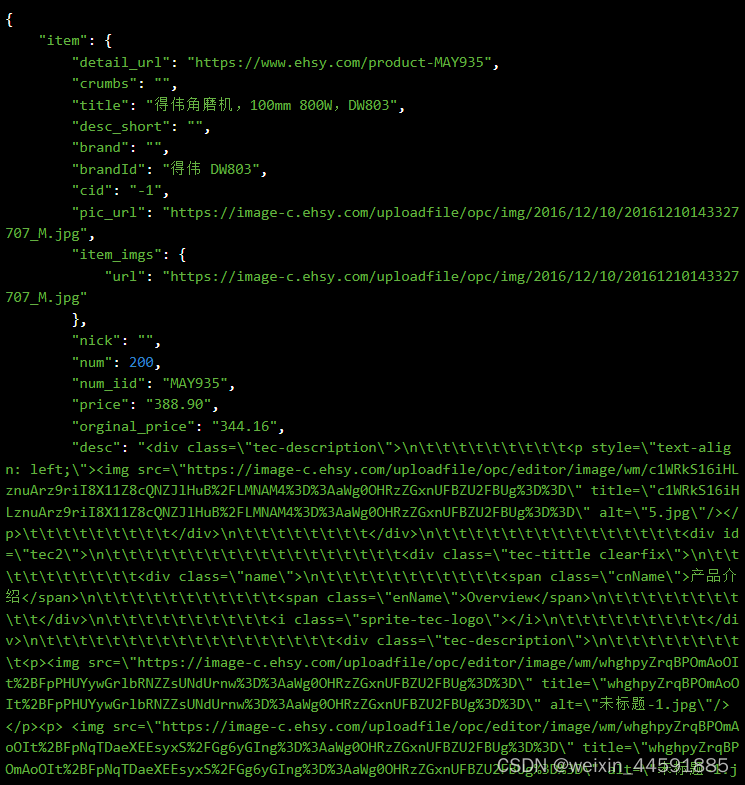

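In the previous section, convolving the HDR environment map produced the irradiance map, which represents the integral of the indirect diffuse light arriving from the surrounding environment.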
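For reference, the integral being approximated can be written out. This is a sketch in the usual image-based-lighting notation, which the text itself does not spell out, so the symbols are assumptions: $L_i$ is the incoming radiance read from the environment map, $N$ is the surface normal (the cube map lookup direction), $\theta$ and $\phi$ are the polar and azimuthal angles around $N$, and $n_\phi$, $n_\theta$ are the numbers of steps taken in each angle.

$$
E(N) = \int_{0}^{2\pi}\int_{0}^{\pi/2} L_i(\phi,\theta)\,\cos\theta\,\sin\theta\,d\theta\,d\phi
$$

$$
\frac{E(N)}{\pi} \approx \frac{\pi}{n_\phi\, n_\theta}\sum_{\phi}\sum_{\theta} L_i(\phi,\theta)\,\cos\theta\,\sin\theta
$$

The second line matches the expression `PI * irradiance * (1.0 / float(nrSamples))` in the fragment shader of the listing: the two step sizes contribute $\pi^2/(n_\phi n_\theta)$, and dividing by $\pi$ (the Lambertian normalization) leaves a factor of $\pi$ over the sample count.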

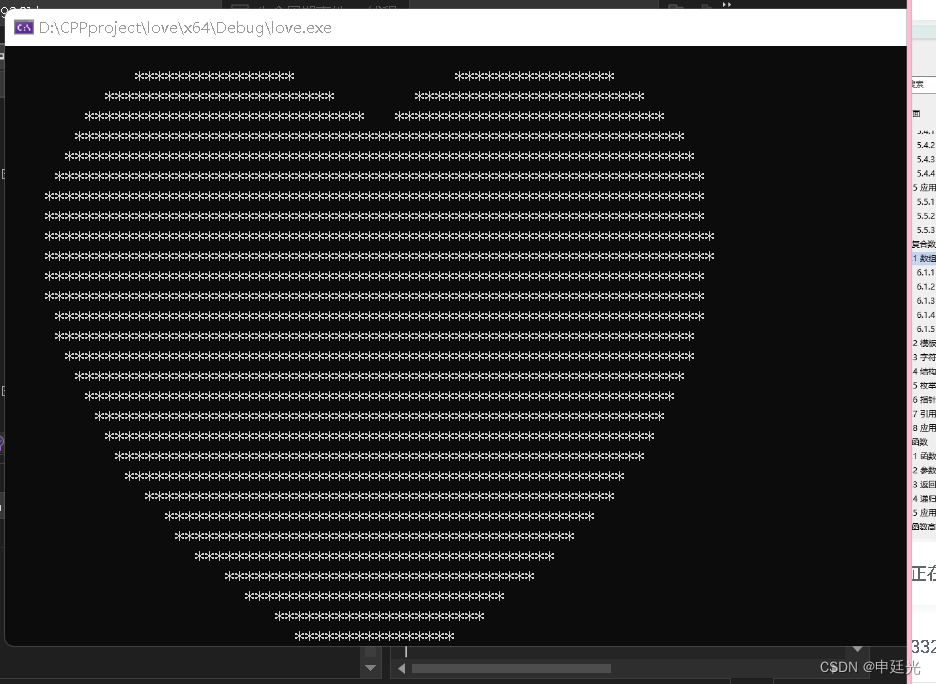

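Now we write it out to image files as well, in the same way the HDR environment map was written out earlier. The only difference is that the irradiance map is an average, so most of the high-frequency detail is gone and it can be stored at a low resolution of 32x32.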
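To put rough numbers on the low resolution, the sketch below counts cube map texels and the samples taken per texel. The helper names (`countSteps`, `cubeMapTexels`, `samplesPerTexel`) are hypothetical and only for illustration; the 32 and 512 face sizes and the step of 0.025 are the values used in the listing.

```cpp
#include <cstdint>

// Hypothetical helper: number of iterations of
//   for (x = 0; x < limit; x += delta)
// which is the loop shape the fragment shader uses for phi and theta.
int countSteps(double limit, double delta)
{
    int n = 0;
    for (double x = 0.0; x < limit; x += delta)
        ++n;
    return n;
}

// Hypothetical helper: total texels in a cube map with square faces.
std::int64_t cubeMapTexels(int faceSize)
{
    return 6LL * faceSize * faceSize;
}

// Hypothetical helper: hemisphere samples accumulated for one output texel,
// assuming phi runs over [0, 2*PI) and theta over [0, PI/2).
std::int64_t samplesPerTexel(double sampleDelta)
{
    const double PI = 3.14159265359;
    return static_cast<std::int64_t>(countSteps(2.0 * PI, sampleDelta)) *
           countSteps(0.5 * PI, sampleDelta);
}
```

With these definitions, `cubeMapTexels(32)` is 6144 while `cubeMapTexels(512)` is 256 times larger, and `samplesPerTexel(0.025)` is 252 x 63 = 15876. Because every output texel costs that many environment map lookups, a small output size keeps the convolution pass cheap.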

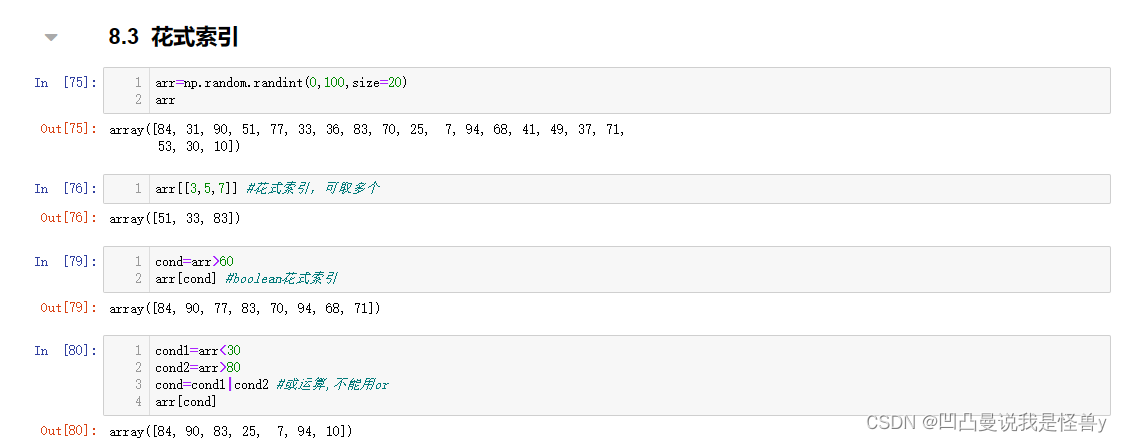

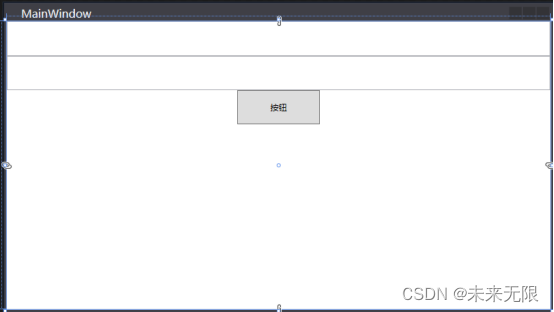

The result of running the program:

The code is as follows:

#include <osg/TextureCubeMap>

#include <osg/TexGen>

#include <osg/TexEnvCombine>

#include <osgUtil/ReflectionMapGenerator>

#include <osgDB/ReadFile>

#include <osgViewer/Viewer>

#include <osg/NodeVisitor>

#include <osg/ShapeDrawable>

#include <osgGA/TrackballManipulator>

#include <osgDB/WriteFile>

#include <osg/Geometry>

#include <osg/Program>

#include <osg/Notify>

static const char * vertexShader =

{

"attribute vec3 aPos;\n"

"varying vec3 WorldPos;\n"

"void main(void)\n"

"{\n"

"    WorldPos = aPos;\n"

"    gl_Position = ftransform();\n"

"}\n"

};

static const char *psShader =

{

"varying vec3 WorldPos; "

"uniform samplerCube environmentMap; "

"const float PI = 3.14159265359; "

"void main() "

"{ "

" vec3 N = normalize(WorldPos); "

" vec3 irradiance = vec3(0.0); "

" vec3 up = vec3(0.0, 1.0, 0.0); "

" vec3 right = normalize(cross(up, N)); "

" up = normalize(cross(N, right)); "

" float sampleDelta = 0.025; "

" float nrSamples = 0.0; "

" for (float phi = 0.0; phi < 2.0 * PI; phi += sampleDelta) "

" { "

" for (float theta = 0.0; theta < 0.5 * PI; theta += sampleDelta) "

" { "

" vec3 tangentSample = vec3(sin(theta) * cos(phi), sin(theta) * sin(phi), cos(theta)); "

" vec3 sampleVec = tangentSample.x * right + tangentSample.y * up + tangentSample.z * N; "

" irradiance += textureCube(environmentMap, sampleVec).rgb * cos(theta) * sin(theta); "

" nrSamples++; "

" } "

" } "

" irradiance = PI * irradiance * (1.0 / float(nrSamples)); "

" gl_FragColor = vec4(irradiance, 1.0); "

"}"

};

class MyNodeVisitor : public osg::NodeVisitor

{

public:

MyNodeVisitor() : osg::NodeVisitor(osg::NodeVisitor::TRAVERSE_ALL_CHILDREN)

{

}

void apply(osg::Geode& geode)

{

int count = geode.getNumDrawables();

for (int i = 0; i < count; i++)

{

osg::ref_ptr<osg::Geometry> geometry = geode.getDrawable(i)->asGeometry();

if (!geometry.valid())

{

continue;

}

osg::Array* vertexArray = geometry->getVertexArray();

geometry->setVertexAttribArray(1, vertexArray);

}

traverse(geode);

}

};

osg::ref_ptr<osg::TextureCubeMap> getTextureCubeMap(osgViewer::Viewer& viewer)

{

unsigned int screenWidth, screenHeight;

osg::GraphicsContext::WindowingSystemInterface * wsInterface = osg::GraphicsContext::getWindowingSystemInterface();

wsInterface->getScreenResolution(osg::GraphicsContext::ScreenIdentifier(0), screenWidth, screenHeight);

osg::ref_ptr<osg::GraphicsContext::Traits> traits = new osg::GraphicsContext::Traits;

traits->x = 0;

traits->y = 0;

traits->width = screenWidth;

traits->height = screenHeight;

traits->windowDecoration = false;

traits->doubleBuffer = true;

traits->sharedContext = 0;

traits->readDISPLAY();

traits->setUndefinedScreenDetailsToDefaultScreen();

osg::ref_ptr<osg::GraphicsContext> gc = osg::GraphicsContext::createGraphicsContext(traits.get());

if (!gc)

{

osg::notify(osg::NOTICE) << "GraphicsWindow has not been created successfully." << std::endl;

return NULL;

}

int textureWidth = 32;

int textureHeight = 32;

osg::ref_ptr<osg::TextureCubeMap> texture = new osg::TextureCubeMap;

texture->setTextureSize(textureWidth, textureHeight);

texture->setInternalFormat(GL_RGB);

texture->setFilter(osg::Texture::MIN_FILTER, osg::Texture::LINEAR);

texture->setFilter(osg::Texture::MAG_FILTER, osg::Texture::LINEAR);

texture->setWrap(osg::Texture::WRAP_S, osg::Texture::CLAMP_TO_EDGE);

texture->setWrap(osg::Texture::WRAP_T, osg::Texture::CLAMP_TO_EDGE);

texture->setWrap(osg::Texture::WRAP_R, osg::Texture::CLAMP_TO_EDGE);

osg::Camera::RenderTargetImplementation renderTargetImplementation = osg::Camera::FRAME_BUFFER_OBJECT;

// front face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setName("Front face camera");

camera->setGraphicsContext(gc.get());

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::POSITIVE_Y);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(0, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd());

}

// top face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setName("Top face camera");

camera->setGraphicsContext(gc.get());

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::POSITIVE_Z);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(1, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd::rotate(osg::inDegrees(-90.0f), 1.0, 0.0, 0.0));

}

// left face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setName("Left face camera");

camera->setGraphicsContext(gc.get());

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::NEGATIVE_X);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(2, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd::rotate(osg::inDegrees(-90.0f), 0.0, 1.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(-90.0f), 0.0, 0.0, 1.0));

}

// right face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setName("Right face camera");

camera->setGraphicsContext(gc.get());

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::POSITIVE_X);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(3, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd::rotate(osg::inDegrees(90.0f), 0.0, 1.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(90.0f), 0.0, 0.0, 1.0));

}

// bottom face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setGraphicsContext(gc.get());

camera->setName("Bottom face camera");

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::NEGATIVE_Z);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(4, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd::rotate(osg::inDegrees(90.0f), 1.0, 0.0, 0.0) * osg::Matrixd::rotate(osg::inDegrees(180.0f), 0.0, 0.0, 1.0));

}

// back face

{

osg::ref_ptr<osg::Camera> camera = new osg::Camera;

camera->setName("Back face camera");

camera->setGraphicsContext(gc.get());

camera->setViewport(new osg::Viewport(0, 0, textureWidth, textureHeight));

camera->setAllowEventFocus(false);

camera->setRenderTargetImplementation(renderTargetImplementation);

camera->setRenderOrder(osg::Camera::PRE_RENDER);

// attach the cube map face to render into

camera->attach(osg::Camera::COLOR_BUFFER, texture, 0, osg::TextureCubeMap::NEGATIVE_Y);

osg::ref_ptr<osg::Image> printImage = new osg::Image;

printImage->setFileName(camera->getName());

printImage->allocateImage(textureWidth, textureHeight, 1, GL_RGBA, GL_UNSIGNED_BYTE);

texture->setImage(5, printImage);

camera->attach(osg::Camera::COLOR_BUFFER, printImage);

viewer.addSlave(camera.get(), osg::Matrixd(), osg::Matrixd::rotate(osg::inDegrees(180.0f), 1.0, 0.0, 0.0));

}

viewer.getCamera()->setProjectionMatrixAsPerspective(90.0f, 1.0, 0.1, 10);

//viewer.getCamera()->setNearFarRatio(0.0001f);

return texture;

}

int main()

{

osg::ref_ptr<osg::TextureCubeMap> tcm = new osg::TextureCubeMap;

tcm->setTextureSize(512, 512);

tcm->setFilter(osg::Texture::MIN_FILTER, osg::Texture::LINEAR);

tcm->setFilter(osg::Texture::MAG_FILTER, osg::Texture::LINEAR);

tcm->setWrap(osg::Texture::WRAP_S, osg::Texture::CLAMP_TO_EDGE);

tcm->setWrap(osg::Texture::WRAP_T, osg::Texture::CLAMP_TO_EDGE);

tcm->setWrap(osg::Texture::WRAP_R, osg::Texture::CLAMP_TO_EDGE);

std::string strImagePosX = "D:/delete/Right face camera.bmp";

osg::ref_ptr<osg::Image> imagePosX = osgDB::readImageFile(strImagePosX);

tcm->setImage(osg::TextureCubeMap::POSITIVE_X, imagePosX);

std::string strImageNegX = "D:/delete/Left face camera.bmp";

osg::ref_ptr<osg::Image> imageNegX = osgDB::readImageFile(strImageNegX);

tcm->setImage(osg::TextureCubeMap::NEGATIVE_X, imageNegX);

std::string strImagePosY = "D:/delete/Front face camera.bmp";

osg::ref_ptr<osg::Image> imagePosY = osgDB::readImageFile(strImagePosY);

tcm->setImage(osg::TextureCubeMap::POSITIVE_Y, imagePosY);

std::string strImageNegY = "D:/delete/Back face camera.bmp";

osg::ref_ptr<osg::Image> imageNegY = osgDB::readImageFile(strImageNegY);

tcm->setImage(osg::TextureCubeMap::NEGATIVE_Y, imageNegY);

std::string strImagePosZ = "D:/delete/Top face camera.bmp";

osg::ref_ptr<osg::Image> imagePosZ = osgDB::readImageFile(strImagePosZ);

tcm->setImage(osg::TextureCubeMap::POSITIVE_Z, imagePosZ);

std::string strImageNegZ = "D:/delete/Bottom face camera.bmp";

osg::ref_ptr<osg::Image> imageNegZ = osgDB::readImageFile(strImageNegZ);

tcm->setImage(osg::TextureCubeMap::NEGATIVE_Z, imageNegZ);

osg::ref_ptr<osg::Box> box = new osg::Box(osg::Vec3(0, 0, 0), 1);

osg::ref_ptr<osg::ShapeDrawable> drawable = new osg::ShapeDrawable(box);

osg::ref_ptr<osg::Geode> geode = new osg::Geode;

geode->addDrawable(drawable);

MyNodeVisitor nv;

geode->accept(nv);

osg::ref_ptr<osg::StateSet> stateset = geode->getOrCreateStateSet();

stateset->setTextureAttributeAndModes(0, tcm, osg::StateAttribute::OVERRIDE | osg::StateAttribute::ON);

//shader

osg::ref_ptr<osg::Shader> vs1 = new osg::Shader(osg::Shader::VERTEX, vertexShader);

osg::ref_ptr<osg::Shader> ps1 = new osg::Shader(osg::Shader::FRAGMENT, psShader);

osg::ref_ptr<osg::Program> program1 = new osg::Program;

program1->addShader(vs1);

program1->addShader(ps1);

program1->addBindAttribLocation("aPos", 1);

osg::ref_ptr<osg::Uniform> tex0Uniform = new osg::Uniform("environmentMap", 0);

stateset->addUniform(tex0Uniform);

stateset->setAttribute(program1, osg::StateAttribute::ON);

osgViewer::Viewer viewer;

osg::ref_ptr<osgGA::TrackballManipulator> manipulator = new osgGA::TrackballManipulator();

viewer.setCameraManipulator(manipulator);

osg::Vec3d newEye(0, 0, 0);

osg::Vec3 newCenter(0, 0, 0);

osg::Vec3 newUp(0, 1, 0);

manipulator->setHomePosition(newEye, newCenter, newUp);

osg::ref_ptr<osg::TextureCubeMap> textureCubeMap = getTextureCubeMap(viewer);

viewer.setSceneData(geode.get());

bool bPrinted = false;

while (!viewer.done())

{

viewer.frame();

if (!bPrinted)

{

bPrinted = true;

int imageNumber = textureCubeMap->getNumImages();

for (int i = 0; i < imageNumber; i++)

{

osg::ref_ptr<osg::Image> theImage = textureCubeMap->getImage(i);

std::string strPrintName = "E:/irradiance/" + theImage->getFileName() + ".bmp";

osgDB::writeImageFile(*theImage, strPrintName);

}

}

}

return 0;

}
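
As a sanity check on the fragment shader's normalization, the same double loop can be run on the CPU. The sketch below is not part of the original program: `Vec3`, `cross`, `normalize` and `convolveIrradiance` are hypothetical helpers, and the environment is passed in as a plain function instead of a cube map. It mirrors the shader's tangent basis, loops and final `PI * sum / nrSamples` scaling.

```cpp
#include <cmath>
#include <functional>

struct Vec3 { double x, y, z; };

static Vec3 cross(const Vec3& a, const Vec3& b)
{
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

static Vec3 normalize(const Vec3& v)
{
    const double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Hypothetical CPU mirror of the shader's main(): integrates a scalar
// radiance function over the hemisphere around `normal`.
// Like the shader, it is undefined when `normal` is parallel to (0, 1, 0),
// because the first cross product is then the zero vector.
double convolveIrradiance(const Vec3& normal,
                          const std::function<double(const Vec3&)>& radiance,
                          double sampleDelta = 0.025)
{
    const double PI = 3.14159265359;
    const Vec3 N = normalize(normal);
    Vec3 up = { 0.0, 1.0, 0.0 };
    const Vec3 right = normalize(cross(up, N));
    up = normalize(cross(N, right));

    double sum = 0.0;
    int nrSamples = 0;
    for (double phi = 0.0; phi < 2.0 * PI; phi += sampleDelta)
    {
        for (double theta = 0.0; theta < 0.5 * PI; theta += sampleDelta)
        {
            // Direction in tangent space, then rotated into world space.
            const double tx = std::sin(theta) * std::cos(phi);
            const double ty = std::sin(theta) * std::sin(phi);
            const double tz = std::cos(theta);
            const Vec3 dir = { tx * right.x + ty * up.x + tz * N.x,
                               tx * right.y + ty * up.y + tz * N.y,
                               tx * right.z + ty * up.z + tz * N.z };
            sum += radiance(dir) * std::cos(theta) * std::sin(theta);
            ++nrSamples;
        }
    }
    return PI * sum / nrSamples;
}
```

For an environment of constant radiance 1, `convolveIrradiance({0, 0, 1}, [](const Vec3&) { return 1.0; })` comes out close to 1, which suggests the `PI / nrSamples` factor keeps a uniformly lit environment at its original brightness. For a light that only covers the +Z hemisphere, a normal of `{0, 0, -1}` returns 0, since every sample direction points away from the light.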