Contents

- 01 Tutorial

- Deconstruct a basic pipeline

- Deconstruct the Stable Diffusion pipeline

- Autopipeline

- Train a diffusion model

Related links:

GitHub: https://github.com/huggingface/diffusers

Official tutorials: https://huggingface.co/docs/diffusers/tutorials/tutorial_overview

Stable Diffusion: https://huggingface.co/blog/stable_diffusion#how-does-stable-diffusion-work

01 Tutorial

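Diffusers is designed as a user-friendly, flexible toolbox for building diffusion systems tailored to your use case. At its core are models and schedulers. For convenience, DiffusionPipeline bundles these components together, but you can also unbundle a pipeline and use the models and schedulers separately to create new diffusion systems.

In this tutorial, you will learn how to use models and schedulers to assemble a diffusion system for inference, starting with a basic pipeline and then progressing to the Stable Diffusion pipeline.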

Deconstruct a basic pipeline

from diffusers import DDPMPipeline

ddpm = DDPMPipeline.from_pretrained("google/ddpm-cat-256", use_safetensors=True).to("cuda")

image = ddpm(num_inference_steps=25).images[0]

image

To recreate the pipeline from the model and scheduler separately, the denoising process is written out by hand below.

# Load the model and scheduler

from diffusers import DDPMScheduler, UNet2DModel

scheduler = DDPMScheduler.from_pretrained("google/ddpm-cat-256")

model = UNet2DModel.from_pretrained("google/ddpm-cat-256", use_safetensors=True).to("cuda")

# Set the number of timesteps to run the denoising process for

scheduler.set_timesteps(50)
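To make the effect of set_timesteps(50) more concrete, here is a minimal sketch of how 50 inference timesteps could be picked out of the training timesteps. It assumes 1000 training timesteps and evenly spaced steps that start from the noisy end; the helper name leading_timesteps is hypothetical, and the real scheduler may space its timesteps differently depending on its configuration.

```python
def leading_timesteps(num_train_timesteps: int, num_inference_steps: int) -> list:
    """Pick evenly spaced timesteps, ordered from most noisy to least noisy."""
    step_ratio = num_train_timesteps // num_inference_steps
    ascending = [i * step_ratio for i in range(num_inference_steps)]
    return ascending[::-1]


timesteps = leading_timesteps(num_train_timesteps=1000, num_inference_steps=50)
print(timesteps[:3], "...", timesteps[-3:])
```

Fewer inference steps mean larger jumps between consecutive timesteps, which is why sampling gets faster as the step count drops.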

# Create some random noise with the same shape as the desired output:

import torch

sample_size = model.config.sample_size

noise = torch.randn((1, 3, sample_size, sample_size)).to("cuda")

# Write a loop to iterate over the timesteps.

input = noise

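for t in scheduler.timesteps:

    # Predict the noise residual at timestep t

    with torch.no_grad():

        noisy_residual = model(input, t).sample

    # Let the scheduler compute the less noisy sample for the previous timestep

    previous_noisy_sample = scheduler.step(noisy_residual, t, input).prev_sample

    input = previous_noisy_sample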

# This is the entire denoising process, and you can use this same pattern to write any diffusion system.

# The last step is to convert the denoised output into an image:

from PIL import Image

import numpy as np

image = (input / 2 + 0.5).clamp(0, 1).squeeze()

image = (image.permute(1, 2, 0) * 255).round().to(torch.uint8).cpu().numpy()

image = Image.fromarray(image)
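The conversion lines above map model outputs from the range [-1, 1] to 8-bit pixel values. As a small sketch of that arithmetic on single values (plain Python, with the hypothetical helper to_pixel standing in for the tensor operations):

```python
def to_pixel(value: float) -> int:
    """Map a model output in [-1, 1] to an integer pixel value in [0, 255]."""
    unit = min(max(value / 2 + 0.5, 0.0), 1.0)  # shift to [0, 1] and clamp
    return int(round(unit * 255))


for value in (-1.0, 0.5, 1.0, 1.7):
    print(value, "->", to_pixel(value))
```

Values outside [-1, 1] are clamped, so an occasional overshoot from the model cannot wrap around when it is cast to an unsigned 8-bit integer.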

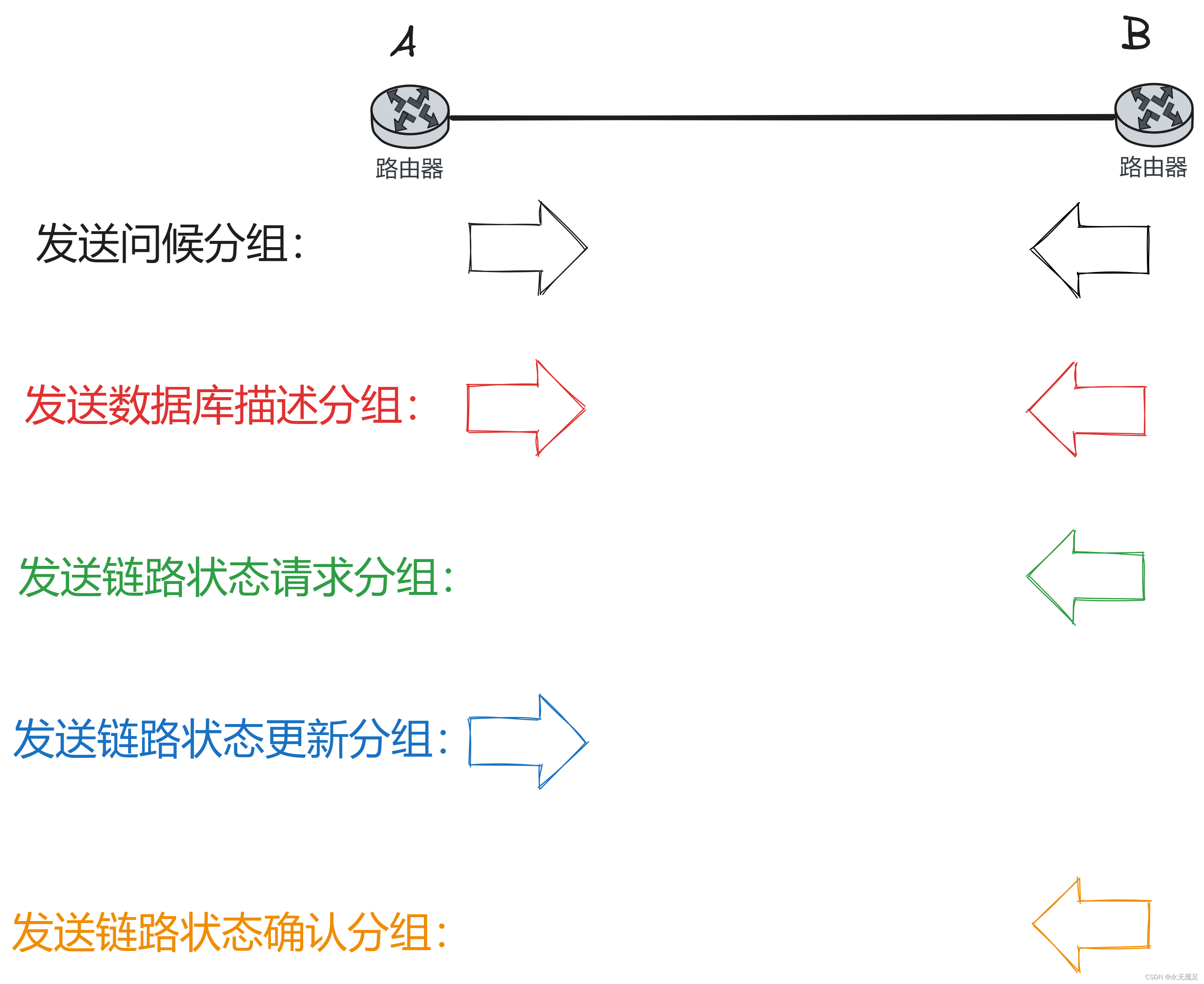

Deconstruct the Stable Diffusion pipeline

Stable Diffusion is a text-to-image latent diffusion model. It is called a latent diffusion model because it works with a lower-dimensional representation of the image instead of the actual pixel space, which makes it more memory efficient.

An encoder compresses the image into a smaller representation, and a decoder converts the compressed representation back into an image.
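To see why working in latent space saves memory, the sketch below compares the number of values in a 512x512 RGB image with the number in its latent representation. It assumes the VAE downsamples each spatial side by a factor of 8 and uses 4 latent channels, which matches Stable Diffusion v1 checkpoints; other models may differ.

```python
def num_values(channels: int, height: int, width: int) -> int:
    """Number of scalar values in a (channels, height, width) tensor."""
    return channels * height * width


height, width = 512, 512
downsample_factor = 8  # assumption: the VAE shrinks each spatial side by 8
latent_channels = 4    # assumption: 4 latent channels, as in Stable Diffusion v1

pixel_values = num_values(3, height, width)
latent_values = num_values(latent_channels, height // downsample_factor, width // downsample_factor)
print(pixel_values, latent_values, pixel_values / latent_values)
```

Under these assumptions the UNet operates on 48 times fewer values than it would in pixel space.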

For text-to-image models, you’ll need a tokenizer and an encoder to generate text embeddings. From the previous example, you already know you need a UNet model and a scheduler.

Stable Diffusion has three separate pretrained models (the VAE, the CLIP text encoder, and the UNet), which are loaded here together with the tokenizer:

from PIL import Image

import torch

from transformers import CLIPTextModel, CLIPTokenizer

from diffusers import AutoencoderKL, UNet2DConditionModel, PNDMScheduler

vae = AutoencoderKL.from_pretrained("CompVis/stable-diffusion-v1-4", subfolder="vae", use_safetensors=True)

tokenizer = CLIPTokenizer.from_pretrained("CompVis/stable-diffusion-v1-4", subfolder="tokenizer")

text_encoder = CLIPTextModel.from_pretrained(

"CompVis/stable-diffusion-v1-4", subfolder="text_encoder", use_safetensors=True

)

unet = UNet2DConditionModel.from_pretrained(

"CompVis/stable-diffusion-v1-4", subfolder="unet", use_safetensors=True

)

Choose a scheduler:

from diffusers import UniPCMultistepScheduler

scheduler = UniPCMultistepScheduler.from_pretrained("CompVis/stable-diffusion-v1-4", subfolder="scheduler")

To speed up inference, move the models to the GPU; unlike the scheduler, they have trainable weights:

torch_device = "cuda"

vae.to(torch_device)

text_encoder.to(torch_device)

unet.to(torch_device)

Set the parameters:

prompt = ["a photograph of an astronaut riding a horse"]

height = 512 # default height of Stable Diffusion

width = 512 # default width of Stable Diffusion

num_inference_steps = 25 # Number of denoising steps

guidance_scale = 7.5 # Scale for classifier-free guidance

generator = torch.manual_seed(0) # Seed generator to create the initial latent noise

batch_size = len(prompt)

Tokenize the text and generate the embeddings from the prompt:

text_input = tokenizer(

prompt, padding="max_length", max_length=tokenizer.model_max_length, truncation=True, return_tensors="pt"

)

with torch.no_grad():

    text_embeddings = text_encoder(text_input.input_ids.to(torch_device))[0]

max_length = text_input.input_ids.shape[-1]

uncond_input = tokenizer([""] * batch_size, padding="max_length", max_length=max_length, return_tensors="pt")

uncond_embeddings = text_encoder(uncond_input.input_ids.to(torch_device))[0]

text_embeddings = torch.cat([uncond_embeddings, text_embeddings])

Create the initial random noise:

latents = torch.randn(

    (batch_size, unet.config.in_channels, height // 8, width // 8),

    generator=generator,

)

latents = latents.to(torch_device)

latents = latents * scheduler.init_noise_sigma

The denoising loop consists of three steps:

- Set the scheduler’s timesteps to use during denoising.

- Iterate over the timesteps.

- At each timestep, call the UNet model to predict the noise residual and pass it to the scheduler to compute the previous noisy sample.

from tqdm.auto import tqdm

scheduler.set_timesteps(num_inference_steps)

for t in tqdm(scheduler.timesteps):

    # expand the latents if we are doing classifier-free guidance to avoid doing two forward passes.

    latent_model_input = torch.cat([latents] * 2)

    latent_model_input = scheduler.scale_model_input(latent_model_input, timestep=t)

    # predict the noise residual

    with torch.no_grad():

        noise_pred = unet(latent_model_input, t, encoder_hidden_states=text_embeddings).sample

    # perform guidance

    noise_pred_uncond, noise_pred_text = noise_pred.chunk(2)

    noise_pred = noise_pred_uncond + guidance_scale * (noise_pred_text - noise_pred_uncond)

    # compute the previous noisy sample x_t -> x_t-1

    latents = scheduler.step(noise_pred, t, latents).prev_sample
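The guidance step in the loop can be illustrated on plain numbers. This sketch applies the same formula to two short lists that stand in for the unconditional and text-conditioned noise predictions; the function name apply_guidance and the sample values are made up for illustration.

```python
def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Move from the unconditional prediction toward (and past) the text-conditioned one."""
    return [u + guidance_scale * (t - u) for u, t in zip(noise_uncond, noise_text)]


noise_uncond = [0.0, 1.0, -2.0]
noise_text = [1.0, 1.0, 0.0]

for scale in (0.0, 1.0, 7.5):
    print(scale, apply_guidance(noise_uncond, noise_text, scale))
```

A scale of 0 reproduces the unconditional prediction and a scale of 1 reproduces the text-conditioned one; larger values such as 7.5 extrapolate further in the direction of the prompt.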

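The final step is to decode the latent representation into an image with the VAE and read the decoded output from its sample attribute:

# scale and decode the image latents with vae

latents = 1 / 0.18215 * latents

with torch.no_grad():

    image = vae.decode(latents).sample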

Convert the image to a PIL.Image to see your generated image:

image = (image / 2 + 0.5).clamp(0, 1).squeeze()

image = (image.permute(1, 2, 0) * 255).round().to(torch.uint8).cpu().numpy()

image = Image.fromarray(image)

Autopipeline

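AutoPipeline is designed to simplify working with the many pipelines in Diffusers. It is a generic, task-first pipeline that lets you focus on the task. AutoPipeline automatically detects the correct pipeline class to use, which makes it easier to load a checkpoint for a task without knowing the specific pipeline class name. It supports text-to-image, image-to-image, and inpainting.

This tutorial shows how to use an AutoPipeline to automatically infer the pipeline class to load for a specific task, given the pretrained weights.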
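Conceptually, this kind of task-first loading can be thought of as a lookup from a task and a model family to a concrete pipeline class. The sketch below is a simplified, hypothetical illustration of that idea and not the library's actual implementation: the dictionary PIPELINE_TABLE and the function resolve_pipeline_name are invented for this example, while the class names are existing Diffusers pipelines.

```python
# Hypothetical lookup table: task -> model family -> pipeline class name
PIPELINE_TABLE = {
    "text2image": {
        "stable-diffusion": "StableDiffusionPipeline",
        "stable-diffusion-xl": "StableDiffusionXLPipeline",
    },
    "image2image": {
        "stable-diffusion": "StableDiffusionImg2ImgPipeline",
        "stable-diffusion-xl": "StableDiffusionXLImg2ImgPipeline",
    },
    "inpainting": {
        "stable-diffusion": "StableDiffusionInpaintPipeline",
        "stable-diffusion-xl": "StableDiffusionXLInpaintPipeline",
    },
}


def resolve_pipeline_name(task: str, model_family: str) -> str:
    """Return the pipeline class name registered for a task and model family."""
    try:
        return PIPELINE_TABLE[task][model_family]
    except KeyError:
        raise ValueError(f"No pipeline registered for {task!r} with {model_family!r}") from None


print(resolve_pipeline_name("text2image", "stable-diffusion"))
print(resolve_pipeline_name("inpainting", "stable-diffusion-xl"))
```

The point of the design is that the caller names the task, and the checkpoint determines which concrete class gets used.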

Text-to-image

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained(

"runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True

).to("cuda")

prompt = "peasant and dragon combat, wood cutting style, viking era, bevel with rune"

image = pipeline(prompt, num_inference_steps=25).images[0]

Image-to-image

from io import BytesIO

import requests

import torch

from PIL import Image

from diffusers import AutoPipelineForImage2Image

pipeline = AutoPipelineForImage2Image.from_pretrained(

"runwayml/stable-diffusion-v1-5",

torch_dtype=torch.float16,

use_safetensors=True,

).to("cuda")

prompt = "a portrait of a dog wearing a pearl earring"

url = "https://upload.wikimedia.org/wikipedia/commons/thumb/0/0f/1665_Girl_with_a_Pearl_Earring.jpg/800px-1665_Girl_with_a_Pearl_Earring.jpg"

response = requests.get(url)

image = Image.open(BytesIO(response.content)).convert("RGB")

image.thumbnail((768, 768))

image = pipeline(prompt, image, num_inference_steps=200, strength=0.75, guidance_scale=10.5).images[0]
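The strength argument controls how much of the denoising schedule is applied to the input image. As a rough sketch, assuming the image-to-image pipeline runs about num_inference_steps * strength denoising steps (this reflects how Stable Diffusion image-to-image pipelines are generally understood to trim their timesteps, but treat it as an approximation; the helper name is hypothetical):

```python
def effective_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Approximate number of denoising steps actually run for a given strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength is expected to lie in [0, 1]")
    return min(int(num_inference_steps * strength), num_inference_steps)


print(effective_denoising_steps(200, 0.75))
print(effective_denoising_steps(200, 1.0))
```

Under this assumption, a lower strength keeps the result closer to the input image because fewer denoising steps are run on it.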

Inpainting

from diffusers import AutoPipelineForInpainting

from diffusers.utils import load_image

pipeline = AutoPipelineForInpainting.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16, use_safetensors=True

).to("cuda")

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

init_image = load_image(img_url).convert("RGB")

mask_image = load_image(mask_url).convert("RGB")

prompt = "A majestic tiger sitting on a bench"

image = pipeline(prompt, image=init_image, mask_image=mask_image, num_inference_steps=50, strength=0.80).images[0]

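If you passed an optional argument to the original pipeline, such as disabling the safety checker, that argument is also passed on to the new pipeline: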

from diffusers import AutoPipelineForText2Image, AutoPipelineForImage2Image

pipeline_text2img = AutoPipelineForText2Image.from_pretrained(

"runwayml/stable-diffusion-v1-5",

torch_dtype=torch.float16,

use_safetensors=True,

requires_safety_checker=False,

).to("cuda")

pipeline_img2img = AutoPipelineForImage2Image.from_pipe(pipeline_text2img)

print(pipeline_img2img.config.requires_safety_checker)

# prints: False

You can also overwrite the original arguments when creating the new pipeline:

pipeline_img2img = AutoPipelineForImage2Image.from_pipe(pipeline_text2img, requires_safety_checker=True, strength=0.3)

Train a diffusion model

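This tutorial teaches you how to train a UNet2DModel from scratch on a subset of the Smithsonian Butterflies dataset to generate your own butterflies.

Define the training configuration: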

from dataclasses import dataclass

@dataclass
class TrainingConfig:

    image_size = 128 # the generated image resolution

    train_batch_size = 16

    eval_batch_size = 16 # how many images to sample during evaluation

    num_epochs = 50

    gradient_accumulation_steps = 1

    learning_rate = 1e-4

    lr_warmup_steps = 500

    save_image_epochs = 10

    save_model_epochs = 30

    mixed_precision = "fp16" # `no` for float32, `fp16` for automatic mixed precision

    output_dir = "ddpm-butterflies-128" # the model name locally and on the HF Hub

    push_to_hub = True # whether to upload the saved model to the HF Hub

    hub_private_repo = False

    overwrite_output_dir = True # overwrite the old model when re-running the notebook

    seed = 0

config = TrainingConfig()
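One detail worth knowing about the class above: the dataclass decorator only treats annotated attributes as fields, so unannotated values behave as ordinary class attributes and cannot be overridden through the constructor. If you want to change settings per run, a variant with type annotations is one option. The sketch below uses a hypothetical, shortened AnnotatedTrainingConfig to show the difference.

```python
from dataclasses import dataclass, fields


@dataclass
class AnnotatedTrainingConfig:
    # Annotated attributes become real dataclass fields with defaults
    image_size: int = 128
    train_batch_size: int = 16
    learning_rate: float = 1e-4
    mixed_precision: str = "fp16"


# Fields can now be overridden when the config is created
small_config = AnnotatedTrainingConfig(image_size=64, train_batch_size=8)
print([field.name for field in fields(small_config)])
print(small_config)
```

The generated repr also lists every field, which is handy when logging the configuration of a run.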

Load the dataset:

from datasets import load_dataset

config.dataset_name = "huggan/smithsonian_butterflies_subset"

dataset = load_dataset(config.dataset_name, split="train")

Datasets uses the Image feature to automatically decode the image data and load it as a PIL.Image, which you can visualize:

import matplotlib.pyplot as plt

fig, axs = plt.subplots(1, 4, figsize=(16, 4))

for i, image in enumerate(dataset[:4]["image"]):

    axs[i].imshow(image)

    axs[i].set_axis_off()

fig.show()

The images are all different sizes, so you need to preprocess them first:

from torchvision import transforms

preprocess = transforms.Compose(

[

transforms.Resize((config.image_size, config.image_size)),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.5], [0.5]),

]

)

def transform(examples):

    images = [preprocess(image.convert("RGB")) for image in examples["image"]]

    return {"images": images}

dataset.set_transform(transform)

import torch

train_dataloader = torch.utils.data.DataLoader(dataset, batch_size=config.train_batch_size, shuffle=True)
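Normalize([0.5], [0.5]) in the preprocessing step rescales pixel values from [0, 1] to [-1, 1], the range the model is trained on. A minimal sketch of that mapping and its inverse on single values (the helper names are hypothetical):

```python
def normalize(pixel: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Map a pixel value in [0, 1] to [-1, 1]."""
    return (pixel - mean) / std


def denormalize(value: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Map a model-space value in [-1, 1] back to [0, 1]."""
    return value * std + mean


for pixel in (0.0, 0.25, 0.5, 1.0):
    scaled = normalize(pixel)
    print(pixel, "->", scaled, "->", denormalize(scaled))
```

The inverse mapping is the same operation that is needed when generated samples are turned back into viewable images.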

Create a UNet2DModel

from diffusers import UNet2DModel

model = UNet2DModel(

sample_size=config.image_size, # the target image resolution

in_channels=3, # the number of input channels, 3 for RGB images

out_channels=3, # the number of output channels

layers_per_block=2, # how many ResNet layers to use per UNet block

block_out_channels=(128, 128, 256, 256, 512, 512), # the number of output channels for each UNet block

down_block_types=(

"DownBlock2D", # a regular ResNet downsampling block

"DownBlock2D",

"DownBlock2D",

"DownBlock2D",

"AttnDownBlock2D", # a ResNet downsampling block with spatial self-attention

"DownBlock2D",

),

up_block_types=(

"UpBlock2D", # a regular ResNet upsampling block

"AttnUpBlock2D", # a ResNet upsampling block with spatial self-attention

"UpBlock2D",

"UpBlock2D",

"UpBlock2D",

"UpBlock2D",

),

)

Quickly check that the sample image shape matches the model output shape:

sample_image = dataset[0]["images"].unsqueeze(0)

print("Input shape:", sample_image.shape)

print("Output shape:", model(sample_image, timestep=0).sample.shape)

Create a scheduler

Take a look at DDPMScheduler and use its add_noise method to add some random noise to the sample_image from before:

import torch

from PIL import Image

from diffusers import DDPMScheduler

noise_scheduler = DDPMScheduler(num_train_timesteps=1000)

noise = torch.randn(sample_image.shape)

timesteps = torch.LongTensor([50])

noisy_image = noise_scheduler.add_noise(sample_image, noise, timesteps)

Image.fromarray(((noisy_image.permute(0, 2, 3, 1) + 1.0) * 127.5).type(torch.uint8).numpy()[0])
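How much noise add_noise mixes in depends on the timestep. The sketch below reproduces the forward-process weighting in plain Python, assuming a linear beta schedule from 1e-4 to 0.02 over 1000 training timesteps (believed to be the DDPMScheduler defaults; check your scheduler's config). Under that assumption the noisy sample is sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise, where alpha_bar_t is the cumulative product of 1 - beta up to timestep t.

```python
import math


def linear_betas(num_train_timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances, one per training timestep."""
    step = (beta_end - beta_start) / (num_train_timesteps - 1)
    return [beta_start + i * step for i in range(num_train_timesteps)]


def noise_weights(timestep, betas):
    """Return (signal weight, noise weight) applied to the clean image and the noise."""
    alpha_bar = 1.0
    for beta in betas[: timestep + 1]:
        alpha_bar *= 1.0 - beta
    return math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)


betas = linear_betas()
for t in (50, 500, 999):
    signal_weight, noise_weight = noise_weights(t, betas)
    print(f"t={t}: signal weight {signal_weight:.3f}, noise weight {noise_weight:.3f}")
```

At small timesteps such as 50 the sample is still dominated by the original image; near the last timestep it is almost pure noise.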

The training objective of the model is to predict the noise added to the image. The loss at this step can be computed as:

import torch.nn.functional as F

noise_pred = model(noisy_image, timesteps).sample

loss = F.mse_loss(noise_pred, noise)

Train the model

By now you have most of the pieces needed to start training the model; all that remains is to put everything together.

from diffusers.optimization import get_cosine_schedule_with_warmup

optimizer = torch.optim.AdamW(model.parameters(), lr=config.learning_rate)

lr_scheduler = get_cosine_schedule_with_warmup(

optimizer=optimizer,

num_warmup_steps=config.lr_warmup_steps,

num_training_steps=(len(train_dataloader) * config.num_epochs),

)
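For intuition, here is a sketch of the learning-rate multiplier such a schedule produces: a linear warmup followed by a half-cosine decay to zero. It is written from the usual definition of a cosine schedule with warmup and is meant as an illustration, not a copy of the library function; the total step count below is an example value.

```python
import math


def cosine_with_warmup(step: int, num_warmup_steps: int, num_training_steps: int) -> float:
    """Multiplier applied to the base learning rate at a given optimizer step."""
    if step < num_warmup_steps:
        # Linear warmup from 0 to 1
        return step / max(1, num_warmup_steps)
    # Half-cosine decay from 1 to 0 over the remaining steps
    progress = (step - num_warmup_steps) / max(1, num_training_steps - num_warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


base_lr = 1e-4
num_warmup_steps = 500
num_training_steps = 3000  # example value; in the tutorial this is len(train_dataloader) * num_epochs

for step in (0, 250, 500, 1750, 3000):
    lr = base_lr * cosine_with_warmup(step, num_warmup_steps, num_training_steps)
    print(step, f"{lr:.2e}")
```

The warmup avoids large updates while the optimizer statistics are still unreliable, and the cosine decay lowers the learning rate smoothly toward the end of training.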

# evaluation

# For evaluation, you can use the DDPMPipeline to generate a batch of sample images and save it as a grid:

from diffusers import DDPMPipeline

from diffusers.utils import make_image_grid

import math

import os

def evaluate(config, epoch, pipeline):

    # Sample some images from random noise (this is the backward diffusion process).

    # The default pipeline output type is `List[PIL.Image]`

    images = pipeline(

        batch_size=config.eval_batch_size,

        generator=torch.manual_seed(config.seed),

    ).images

    # Make a grid out of the images

    image_grid = make_image_grid(images, rows=4, cols=4)

    # Save the images

    test_dir = os.path.join(config.output_dir, "samples")

    os.makedirs(test_dir, exist_ok=True)

    image_grid.save(f"{test_dir}/{epoch:04d}.png")

Training loop:

from pathlib import Path

import torch.nn.functional as F

from accelerate import Accelerator

# Repository and get_full_repo_name come from huggingface_hub; Repository is deprecated

# and may be unavailable in newer releases.

from huggingface_hub import Repository, get_full_repo_name

from tqdm.auto import tqdm

def train_loop(config, model, noise_scheduler, optimizer, train_dataloader, lr_scheduler):

    # Initialize accelerator and tensorboard logging

    accelerator = Accelerator(

        mixed_precision=config.mixed_precision,

        gradient_accumulation_steps=config.gradient_accumulation_steps,

        log_with="tensorboard",

        project_dir=os.path.join(config.output_dir, "logs"),

    )

    if accelerator.is_main_process:

        if config.push_to_hub:

            repo_name = get_full_repo_name(Path(config.output_dir).name)

            repo = Repository(config.output_dir, clone_from=repo_name)

        elif config.output_dir is not None:

            os.makedirs(config.output_dir, exist_ok=True)

        accelerator.init_trackers("train_example")

    # Prepare everything

    # There is no specific order to remember, you just need to unpack the

    # objects in the same order you gave them to the prepare method.

    model, optimizer, train_dataloader, lr_scheduler = accelerator.prepare(

        model, optimizer, train_dataloader, lr_scheduler

    )

    global_step = 0

    # Now you train the model

    for epoch in range(config.num_epochs):

        progress_bar = tqdm(total=len(train_dataloader), disable=not accelerator.is_local_main_process)

        progress_bar.set_description(f"Epoch {epoch}")

        for step, batch in enumerate(train_dataloader):

            clean_images = batch["images"]

            # Sample noise to add to the images

            noise = torch.randn(clean_images.shape).to(clean_images.device)

            bs = clean_images.shape[0]

            # Sample a random timestep for each image

            timesteps = torch.randint(

                0, noise_scheduler.config.num_train_timesteps, (bs,), device=clean_images.device

            ).long()

            # Add noise to the clean images according to the noise magnitude at each timestep

            # (this is the forward diffusion process)

            noisy_images = noise_scheduler.add_noise(clean_images, noise, timesteps)

            with accelerator.accumulate(model):

                # Predict the noise residual

                noise_pred = model(noisy_images, timesteps, return_dict=False)[0]

                loss = F.mse_loss(noise_pred, noise)

                accelerator.backward(loss)

                accelerator.clip_grad_norm_(model.parameters(), 1.0)

                optimizer.step()

                lr_scheduler.step()

                optimizer.zero_grad()

            progress_bar.update(1)

            logs = {"loss": loss.detach().item(), "lr": lr_scheduler.get_last_lr()[0], "step": global_step}

            progress_bar.set_postfix(**logs)

            accelerator.log(logs, step=global_step)

            global_step += 1

        # After each epoch you optionally sample some demo images with evaluate() and save the model

        if accelerator.is_main_process:

            pipeline = DDPMPipeline(unet=accelerator.unwrap_model(model), scheduler=noise_scheduler)

            if (epoch + 1) % config.save_image_epochs == 0 or epoch == config.num_epochs - 1:

                evaluate(config, epoch, pipeline)

            if (epoch + 1) % config.save_model_epochs == 0 or epoch == config.num_epochs - 1:

                # Save locally first so that there is something to push to the Hub

                pipeline.save_pretrained(config.output_dir)

                if config.push_to_hub:

                    repo.push_to_hub(commit_message=f"Epoch {epoch}", blocking=True)
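The two modulo checks at the end of each epoch decide when sample images are generated and when the model is saved. With the configuration values used earlier (50 epochs, images every 10 epochs, model every 30 epochs), the sketch below lists the zero-based epochs on which each action fires; the helper name trigger_epochs is hypothetical.

```python
def trigger_epochs(num_epochs: int, every: int) -> list:
    """Zero-based epochs where (epoch + 1) is a multiple of `every`, plus the final epoch."""
    return [
        epoch
        for epoch in range(num_epochs)
        if (epoch + 1) % every == 0 or epoch == num_epochs - 1
    ]


image_epochs = trigger_epochs(num_epochs=50, every=10)
model_epochs = trigger_epochs(num_epochs=50, every=30)
print("sample images at epochs:", image_epochs)
print("save model at epochs:", model_epochs)
```

The extra check for the final epoch guarantees that the last state of the model is always evaluated and saved, even when the epoch count is not a multiple of the interval.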