文章目录

- 一、建图

- 1.1 点云地图

- 1.2 高精地图

- 二、参数配置

- 三、传感器数据通信接口

- 3.1 雷达点云

- 3.2 图像

- 3.3 IMU

- 3.4 GNSS RTK

- 四、实车调试

- 4.1 编写启动

- 4.2 修改传感器外参

- 4.3 修改车身参数

- 4.4 实车调试

本文介绍了 Autoware.universe 在实车上的部署,本系列其他文章:

Autoware.universe部署01:Ubuntu20.04安装Autoware.universe并与Awsim联调

Autoware.universe部署02:高精Lanelet2地图的绘制

Autoware.universe部署03:与Carla(二进制版)联调

Autoware.universe部署04:universe传感器ROS2驱动

一、建图

1.1 点云地图

不同于AI,官方在universe中并未提供建图功能,官方推荐其他建图算法:Autoware-documentation

录制点云bag,使用其他SLAM算法建图(最好使用能与GNSS组合的SLAM算法,这样才能建出绝对坐标系的点云地图,方便后面使用RTK),例如LIO-SAM

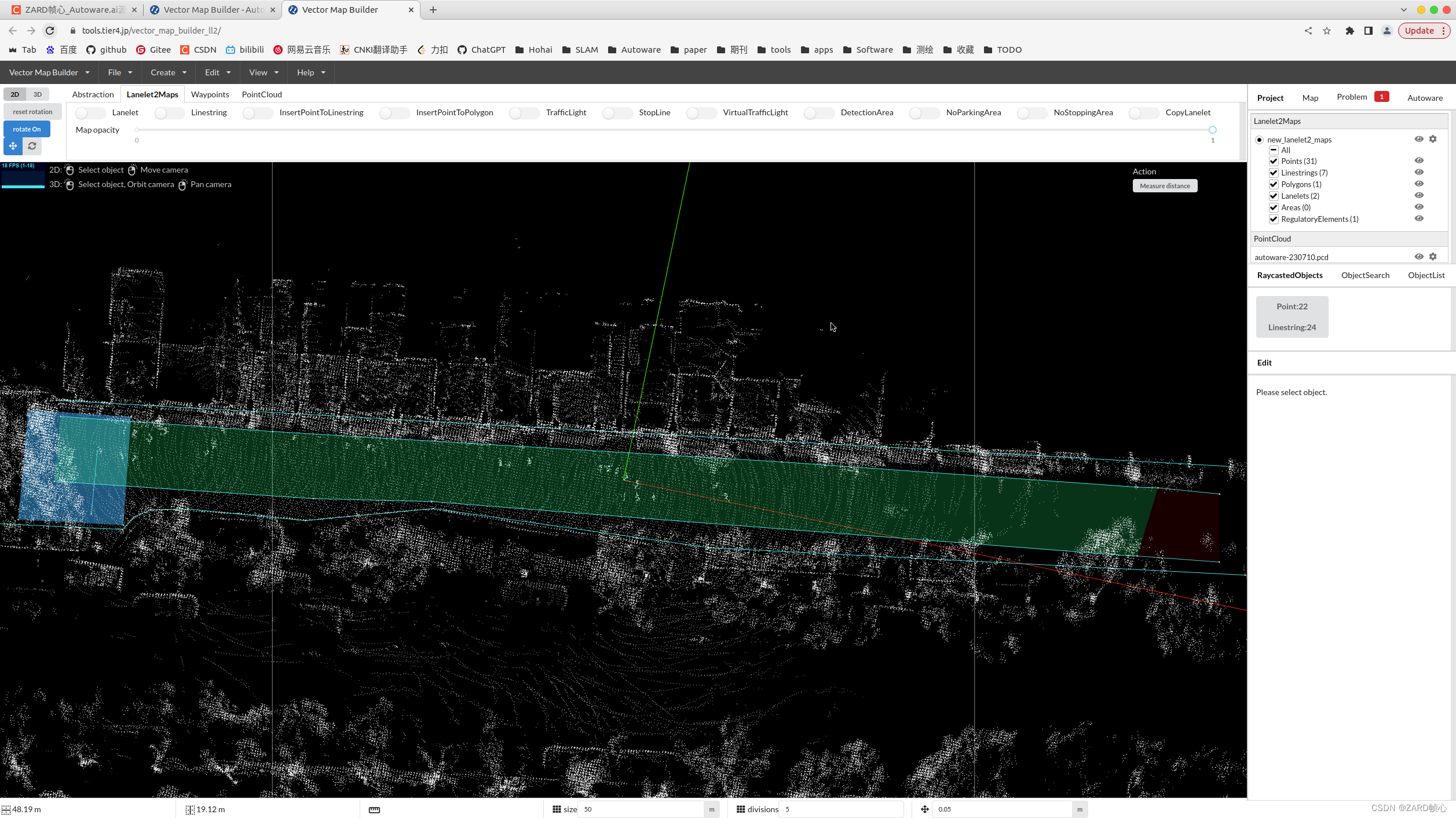

1.2 高精地图

使用Autoware.universe部署02:高精Lanelet2地图的绘制的方法绘制高精地图,由于场地有限,仅仅绘制了一条单向车道线,一个小的停车场和一个小的停车位

二、参数配置

(1)修改 autoware.launch.xml 文件(这里不改也行,后面可以外部传参)

在src/launcher/autoware_launch/autoware_launch/launch/autoware.launch.xml第17行将launch_sensing_driver中的true改成false,不使用Autoware自带的驱动程序,可以直接复制以下替换第17行。

<arg name="launch_sensing_driver" default="false" description="launch sensing driver"/>

(2)修改 gnss.launch.xml 文件

在src/sensor_kit/sample_sensor_kit_launch/sample_sensor_kit_launch/launch/gnss.launch.xml第4行将coordinate_system中的1改成2,可以直接复制以下替换第4行。

<arg name="coordinate_system" default="2" description="0:UTM, 1:MGRS, 2:PLANE"/>

在第5行添加如下:

<arg name="plane_zone" default="0"/>

(3)如果不使用GNSS,则在/src/universe/autoware.universe/launch/tier4_localization_launch/launch/util/util.launch.xml将GNSS设置为不可用

<arg name="gnss_enabled" value="false"/>

<!-- <arg name="gnss_enabled" value="true"/> -->

(4)预处理参数修改

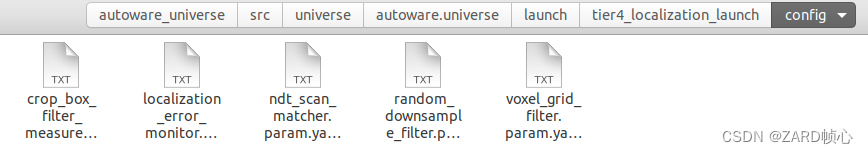

可能需要修改点云预处理的参数,可以在下面找到autoware_universe/src/universe/autoware.universe/launch/tier4_localization_launch/config,尤其是体素滤波参数:

三、传感器数据通信接口

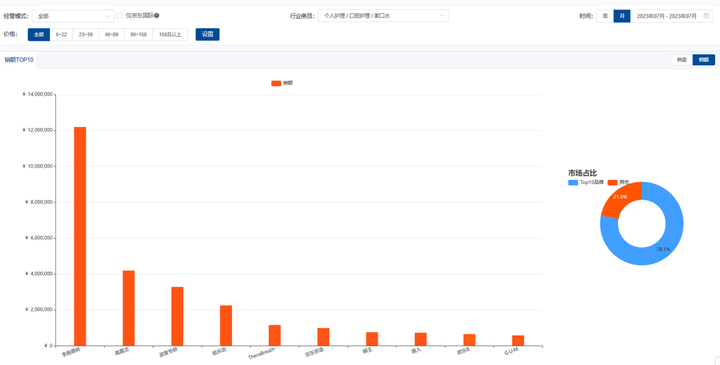

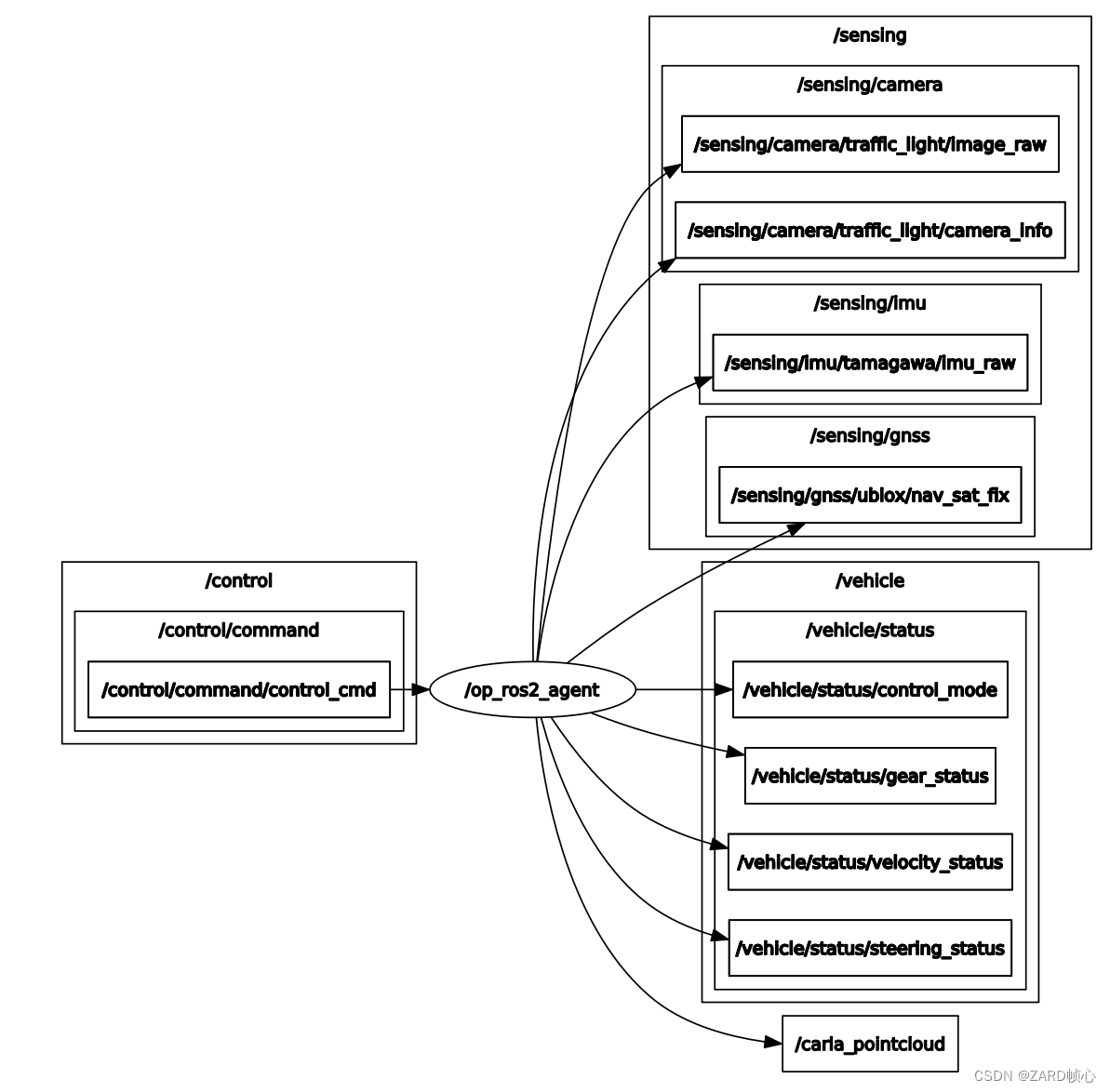

根据官方提供的流程图,可以看到各个节点之间的数据通信:https://autowarefoundation.github.io/autoware-documentation/galactic/design/autoware-architecture/node-diagram/

从Carla仿真中也可以得知,Carla输出以及接收以下消息:

其中:

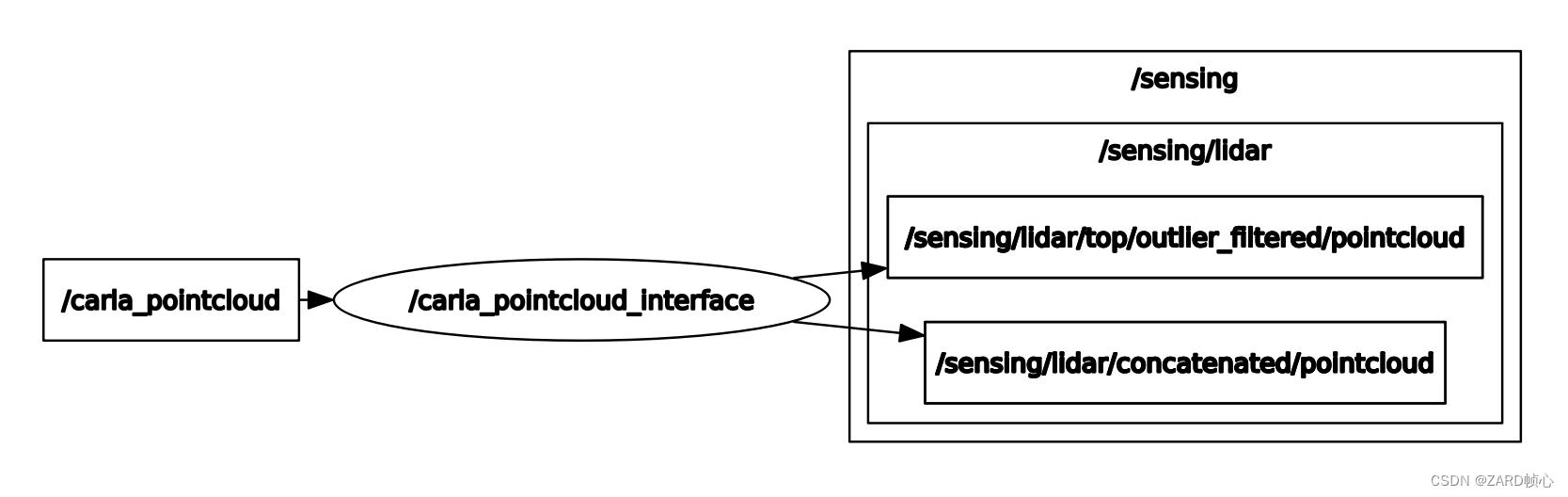

/control/command/contral_cmd为Autoware发出的用于控制小车底盘的消息,/op_ros2_agent会将其转化为CAN消息,进而控制底盘;/sensing/imu/tamagawa/imu_raw为IMU消息,用于获取里程计,frame_id为tamagawa/imu_link;/sensing/gnss/ublox/nav_sat_fix为GNSS消息,用于位姿初始化(可选),frame_id为gnss_link;/sensing/camera/traffic_light/image_raw为图像消息,用于识别红绿灯,frame_id为camera4/camera_link;/vehicla/status为车反馈的速度等状态消息(具体可以看CAN代码);/Carla_pointcloud为Caral输出的点云,Autoware输入的点云为/sensing/lidar/top/outlier_filtered/pointcloud和/sensing/lidar/concatenated/pointcloud(frame_id均为base_link),下面的/carla_pointcloud_interface节点会将/Carla_pointcloud转化,/sensing/lidar/top/outlier_filtered/pointcloud用于定位,/sensing/lidar/concatenated/pointcloud用于感知;

3.1 雷达点云

编写了如下的节点,将/points_raw(frame_id为velodyne)(或者是你的雷达驱动输出)坐标转换到base_link(需要雷达外参),然后发布 /sensing/lidar/top/outlier_filtered/pointcloud和/sensing/lidar/concatenated/pointcloud

#include <rclcpp/rclcpp.hpp>

#include <sensor_msgs/msg/point_cloud2.hpp>

#include <tf2_geometry_msgs/tf2_geometry_msgs.h>

#include <pcl_ros/transforms.hpp>

class LidarTransformNode : public rclcpp::Node

{

public:

// 构造函数

LidarTransformNode() : Node("points_raw_transform_node")

{

// 初始化坐标转换参数

// this->declare_parameter<double>("transform_x", 0.0);

// this->get_parameter("transform_x", transform_x);

transform_x = this->declare_parameter("transform_x", 0.0);

transform_y = this->declare_parameter("transform_y", 0.0);

transform_z = this->declare_parameter("transform_z", 0.0);

transform_roll = this->declare_parameter("transform_roll", 0.0);

transform_pitch = this->declare_parameter("transform_pitch", 0.0);

transform_yaw = this->declare_parameter("transform_yaw", 0.0);

std::cout << "velodyne to base_link:" << std::endl

<< " transform_x: " << transform_x << std::endl

<< " transform_y: " << transform_y << std::endl

<< " transform_z: " << transform_z << std::endl

<< " transform_roll: " << transform_roll << std::endl

<< " transform_pitch:" << transform_pitch << std::endl

<< " transform_yaw: " << transform_yaw << std::endl;

// Initialize translation

transform_stamp.transform.translation.x = transform_x;

transform_stamp.transform.translation.y = transform_y;

transform_stamp.transform.translation.z = transform_z;

// Initialize rotation (quaternion)

tf2::Quaternion quaternion;

quaternion.setRPY(transform_roll, transform_pitch, transform_yaw);

transform_stamp.transform.rotation.x = quaternion.x();

transform_stamp.transform.rotation.y = quaternion.y();

transform_stamp.transform.rotation.z = quaternion.z();

transform_stamp.transform.rotation.w = quaternion.w();

// 创建发布者

publisher_1 = this->create_publisher<sensor_msgs::msg::PointCloud2>(

"/sensing/lidar/top/outlier_filtered/pointcloud",

10);

publisher_2 = this->create_publisher<sensor_msgs::msg::PointCloud2>(

"/sensing/lidar/concatenated/pointcloud",

10);

// 订阅原始点云消息

subscription_ = this->create_subscription<sensor_msgs::msg::PointCloud2>(

"/points_raw",

10,

std::bind(&LidarTransformNode::pointCloudCallback, this, std::placeholders::_1));

}

private:

void pointCloudCallback(const sensor_msgs::msg::PointCloud2::SharedPtr msg)

{

// 过滤掉距离传感器较近的点

pcl::PointCloud<pcl::PointXYZI>::Ptr xyz_cloud(new pcl::PointCloud<pcl::PointXYZI>);

pcl::PointCloud<pcl::PointXYZI>::Ptr pcl_output(new pcl::PointCloud<pcl::PointXYZI>);

pcl::fromROSMsg(*msg, *xyz_cloud);

for (size_t i = 0; i < xyz_cloud->points.size(); ++i)

{

if (sqrt(xyz_cloud->points[i].x * xyz_cloud->points[i].x + xyz_cloud->points[i].y * xyz_cloud->points[i].y +

xyz_cloud->points[i].z * xyz_cloud->points[i].z) >= RadiusOutlierFilter && !isnan(xyz_cloud->points[i].z))

{

pcl_output->points.push_back(xyz_cloud->points.at(i));

}

}

sensor_msgs::msg::PointCloud2 output;

pcl::toROSMsg(*pcl_output, output);

output.header = msg->header;

// 执行坐标转换

sensor_msgs::msg::PointCloud2 transformed_cloud;

pcl_ros::transformPointCloud("base_link", transform_stamp, output, transformed_cloud);

// 发布转换后的点云消息

publisher_1->publish(transformed_cloud);

publisher_2->publish(transformed_cloud);

}

rclcpp::Subscription<sensor_msgs::msg::PointCloud2>::SharedPtr subscription_;

rclcpp::Publisher<sensor_msgs::msg::PointCloud2>::SharedPtr publisher_1, publisher_2;

double transform_x, transform_y, transform_z, transform_roll, transform_pitch, transform_yaw;

geometry_msgs::msg::TransformStamped transform_stamp;

};

int main(int argc, char **argv)

{

// 初始化节点

rclcpp::init(argc, argv);

// 创建实例

auto node = std::make_shared<LidarTransformNode>();

rclcpp::spin(node);

rclcpp::shutdown();

return 0;

}

3.2 图像

由于Autoware接收/sensing/camera/traffic_light/image_raw图像消息,用于交通灯识别(融合感知可以使用其他7个相机,但是是可选,因此图像不用于融合感知),frame_id为camera4/camera_link,因此再参数文件中修改frame_id:

/**:

ros__parameters:

# 设备挂载点

video_device: "/dev/video2"

# 帧率

framerate: 30.0

io_method: "mmap"

# 坐标系

frame_id: "camera4/camera_link"

# 像素格式

pixel_format: "mjpeg2rgb" # see usb_cam/supported_formats for list of supported formats

# 像素尺寸

image_width: 1920

image_height: 1080

# 相机名称

camera_name: "JR_HF868"

# 标定参数

camera_info_url: "package://usb_cam/config/camera_info.yaml"

# 亮度

brightness: 50

# 对比度

contrast: 50

# 饱和度

saturation: 40

# 清晰度

sharpness: 70

# 增益

gain: -1

# 白平衡

auto_white_balance: true

white_balance: 4000

# 曝光

autoexposure: true

exposure: 100

# 聚焦

autofocus: false

focus: -1

在节点代码中修改发布话题:

UsbCamNode::UsbCamNode(const rclcpp::NodeOptions &node_options)

: Node("usb_cam", node_options),

m_camera(new usb_cam::UsbCam()),

m_image_msg(new sensor_msgs::msg::Image()),

m_image_publisher(std::make_shared<image_transport::CameraPublisher>(

image_transport::create_camera_publisher(this, "/sensing/camera/traffic_light/image_raw",

rclcpp::QoS{100}.get_rmw_qos_profile()))),

m_parameters(),

m_camera_info_msg(new sensor_msgs::msg::CameraInfo()),

m_service_capture(

this->create_service<std_srvs::srv::SetBool>(

"set_capture",

std::bind(

&UsbCamNode::service_capture,

this,

std::placeholders::_1,

std::placeholders::_2,

std::placeholders::_3)))

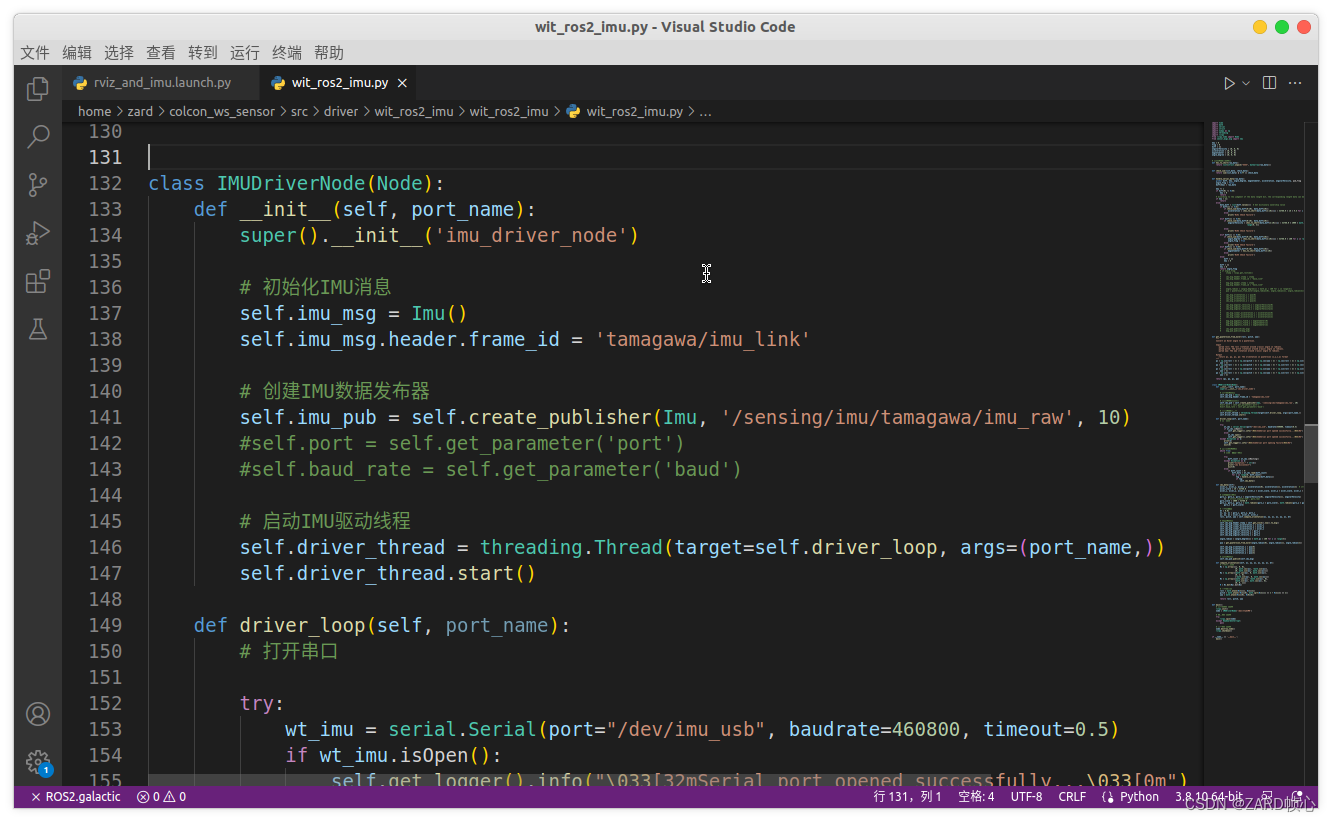

3.3 IMU

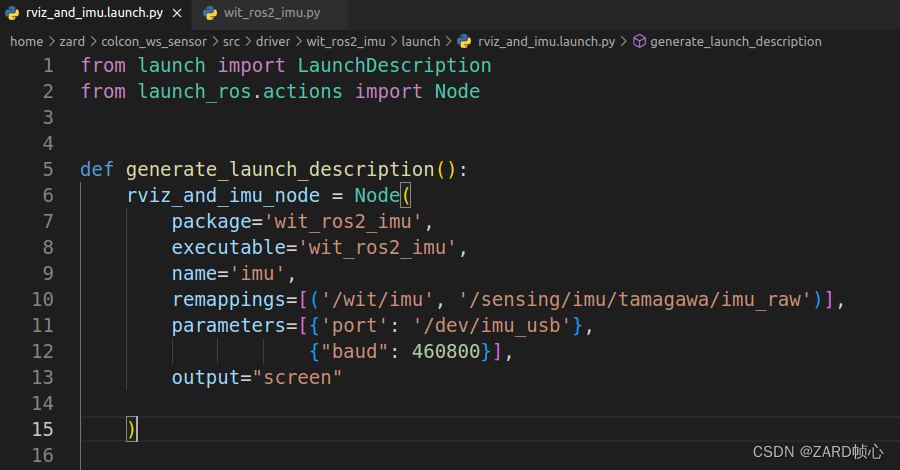

修改发布话题名称为/sensing/imu/tamagawa/imu_raw以及坐标系为tamagawa/imu_link

launch也修改一下:

3.4 GNSS RTK

四、实车调试

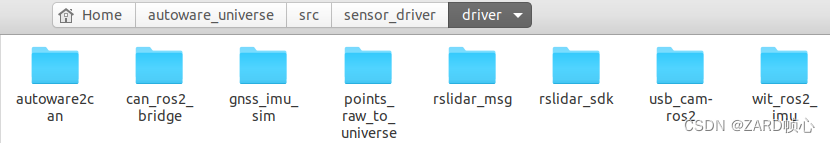

4.1 编写启动

将之前写的各个传感器驱动放入Autoware的工作空间,为他们写一个统一的启动:

<launch>

<!-- 雷达驱动 -->

<include file="$(find-pkg-share rslidar_sdk)/launch/start.py" />

<!-- 转换为Autoware需要的 -->

<include file="$(find-pkg-share points_raw_transform)/launch/points_raw_transform.launch.xml" >

<!-- LiDAR to base link -->

<arg name="transform_x" value="0.75"/>

<arg name="transform_y" value="0.35"/>

<arg name="transform_z" value="0.6"/>

<arg name="transform_roll" value="0.0"/>

<arg name="transform_pitch" value="0.0"/>

<arg name="transform_yaw" value="0.0"/>

<arg name="RadiusOutlierFilter" value="2.5"/>

</include>

<!-- 相机驱动 -->

<include file="$(find-pkg-share usb_cam)/launch/JR_HF868.launch.py" />

<!-- 生成假的GNSS和IMU数据,用于调试 -->

<!-- <include file="$(find-pkg-share gnss_imu_sim)/launch/both.launch.py" /> -->

<!-- GNSS模块驱动(可选,这里未使用) -->

<!-- <include file="$(find-pkg-share gnss_imu_sim)/launch/M620.launch.py" /> -->

<!-- IMU模块驱动 -->

<include file="$(find-pkg-share wit_ros2_imu)/rviz_and_imu.launch.py" />

<!-- CAN驱动 -->

<include file="$(find-pkg-share can_ros2_bridge)/can.launch.py" />

<!-- 启动Autoware -->

<include file="$(find-pkg-share autoware_launch)/launch/autoware.launch.xml">

<!-- 地图路径 -->

<arg name="map_path" value="/home/car/Autoware/QinHang"/>

<!-- 车辆和传感器模型 -->

<arg name="vehicle_model" value="sample_vehicle"/>

<arg name="sensor_model" value="sample_sensor_kit"/>

<!-- 不使用仿真时间 -->

<arg name="use_sim_time" value="false"/>

<!-- 不使用Autoware自带的传感器驱动 -->

<arg name="launch_sensing_driver" value="false"/>

</include>

</launch>

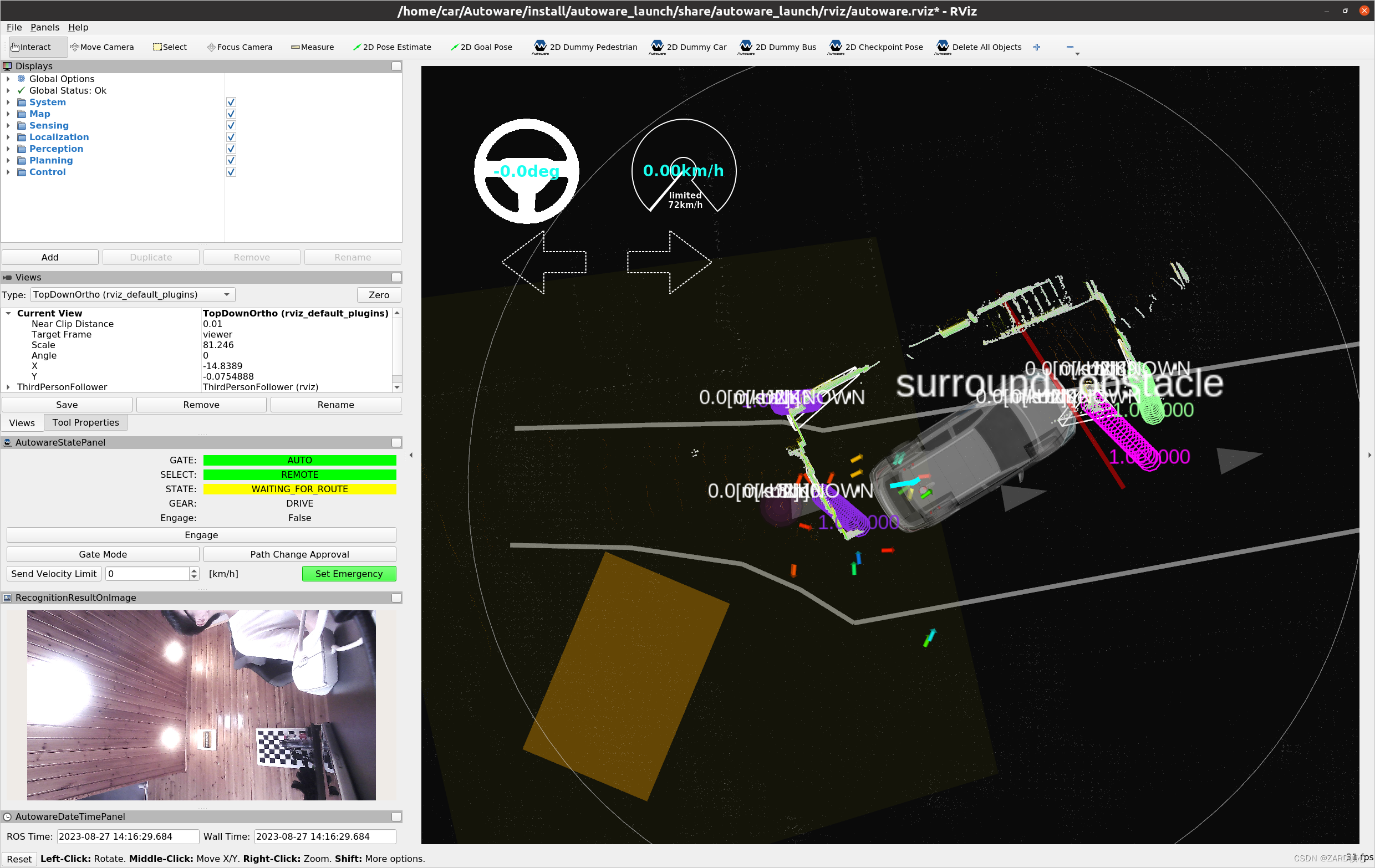

以上各个传感器调试好之后,简单测试,可以定位,可以进行红绿灯识别:

# 编译添加的几个功能包

# colcon build --symlink-install --cmake-args -DCMAKE_BUILD_TYPE=Release --packages-select colcon build --symlink-install --cmake-args -DCMAKE_BUILD_TYPE=Release --packages-select rslidar_msg rslidar_sdk points_raw_transform usb_cam wit_ros2_imu can_ros2_bridge gnss_imu_sim sensor_driver

source install/setup.bash

ros2 launch sensor_driver senser_driver.launch.xml

4.2 修改传感器外参

安装几个使用的传感器,然后修改其外参(主要是修改IMU和用于识别红绿灯的相机,雷达我们已经在转换节点将其转换到base_link坐标系),外参需要标定(也可以直接拿尺子测量,但不准确),标定方法参考我的文章:SLAM各传感器的标定总结:Camera/IMU/LiDAR

src/sensor_kit/sample_sensor_kit_launch/sample_sensor_kit_description/config/sensor_kit_calibration.yaml:

sensor_kit_base_link:

camera0/camera_link:

x: 0.10731

y: 0.56343

z: -0.27697

roll: -0.025

pitch: 0.315

yaw: 1.035

camera1/camera_link:

x: -0.10731

y: -0.56343

z: -0.27697

roll: -0.025

pitch: 0.32

yaw: -2.12

camera2/camera_link:

x: 0.10731

y: -0.56343

z: -0.27697

roll: -0.00

pitch: 0.335

yaw: -1.04

camera3/camera_link:

x: -0.10731

y: 0.56343

z: -0.27697

roll: 0.0

pitch: 0.325

yaw: 2.0943951

camera4/camera_link:

x: 0.07356

y: 0.0

z: -0.0525

roll: 0.0

pitch: -0.03

yaw: -0.005

camera5/camera_link:

x: -0.07356

y: 0.0

z: -0.0525

roll: 0.0

pitch: -0.01

yaw: 3.125

traffic_light_right_camera/camera_link:

x: 0.75

y: 0.13

z: 0.60

roll: 1.57

pitch: 0.0

yaw: 1.57

traffic_light_left_camera/camera_link:

x: 0.57

y: 0.56

z: 0.60

roll: 1.57

pitch: 0.0

yaw: 1.57

velodyne_top_base_link:

x: 0.0

y: 0.0

z: 0.0

roll: 0.0

pitch: 0.0

yaw: 1.575

velodyne_left_base_link:

x: 0.0

y: 0.56362

z: -0.30555

roll: -0.02

pitch: 0.71

yaw: 1.575

velodyne_right_base_link:

x: 0.0

y: -0.56362

z: -0.30555

roll: -0.01

pitch: 0.71

yaw: -1.580

gnss_link:

x: -0.1

y: 0.0

z: -0.2

roll: 0.0

pitch: 0.0

yaw: 0.0

tamagawa/imu_link:

x: 0.75

y: 0.35

z: 0.60

roll: 0.0

pitch: 0.0

yaw: 0.0

4.3 修改车身参数

为了适配我们的车辆,需要修改车身参数(下面这些参数可以自己测量一下你的车子)src/vehicle/sample_vehicle_launch/sample_vehicle_description/config/vehicle_info.param.yaml:

/**:

ros__parameters:

wheel_radius: 0.18 # The radius of the wheel, primarily used for dead reckoning.车轮半径,用于航位推算

wheel_width: 0.12 # The lateral width of a wheel tire, primarily used for dead reckoning.车轮横向宽度,用于航位推算

wheel_base: 1.03 # between front wheel center and rear wheel center 轮距,前后轮中心距离

wheel_tread: 0.7 # between left wheel center and right wheel center 轴距,左右轮中心距离

front_overhang: 0.18 # between front wheel center and vehicle front,前悬架,前轮中心与车头距离

rear_overhang: 0.22 # between rear wheel center and vehicle rear 后悬架

left_overhang: 0.0 # between left wheel center and vehicle left 左悬伸,左轮中心与车左侧距离

right_overhang: 0.0 # between right wheel center and vehicle right 又悬伸

vehicle_height: 1.0 # 车高

max_steer_angle: 0.45 # [rad] 最大转弯半径

以上内容修改之后重新编译:

# 更新传感器外参,更新汽车车身参数(轮距等)

colcon build --symlink-install --cmake-args -DCMAKE_BUILD_TYPE=Release --packages-select sample_sensor_kit_description sample_vehicle_description vehicle_info_util

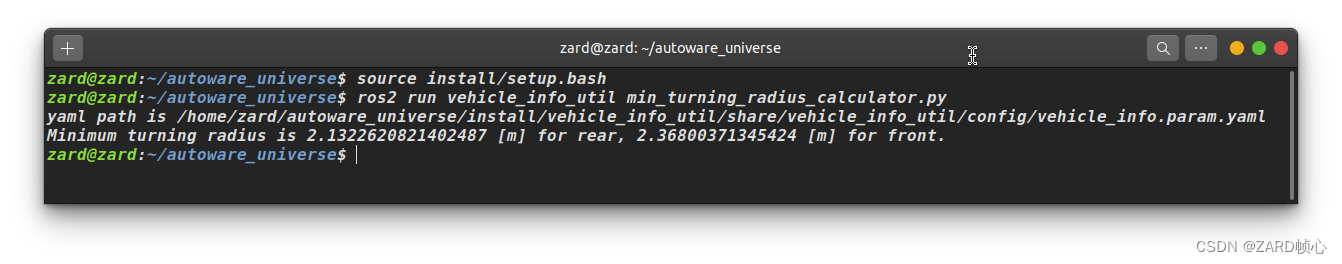

可以通过下列命令查看根据上述参数计算的车子最小转弯半径:

ros2 run vehicle_info_util min_turning_radius_calculator.py

4.4 实车调试

启动:

source install/setup.bash

ros2 launch sensor_driver senser_driver.launch.xml

电脑不插电性能太差,导致跑不动…未完待续