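Building on the basic introduction to btree in the previous two posts, this post walks through the btree insertion flow at the source-code level. For the related material, see:

postgres source code analysis 41: creating the btree index file (1)

postgres source code analysis 42: creating the btree index file (2)

Data structures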

/*
 * BTStackData -- As we descend a tree, we push the location of pivot
 * tuples whose downlink we are about to follow onto a private stack. If
 * we split a leaf, we use this stack to walk back up the tree and insert
 * data into its parent page at the correct location. We also have to
 * recursively insert into the grandparent page if and when the parent page
 * splits. Our private stack can become stale due to concurrent page
 * splits and page deletions, but it should never give us an irredeemably
 * bad picture.
 */
typedef struct BTStackData
{
    BlockNumber bts_blkno;          // block number of the page holding the pivot tuple
    OffsetNumber bts_offset;        // offset of the pivot tuple within that page
    struct BTStackData *bts_parent; // stack entry for the parent level
} BTStackData;

typedef BTStackData *BTStack;       // used like a stack

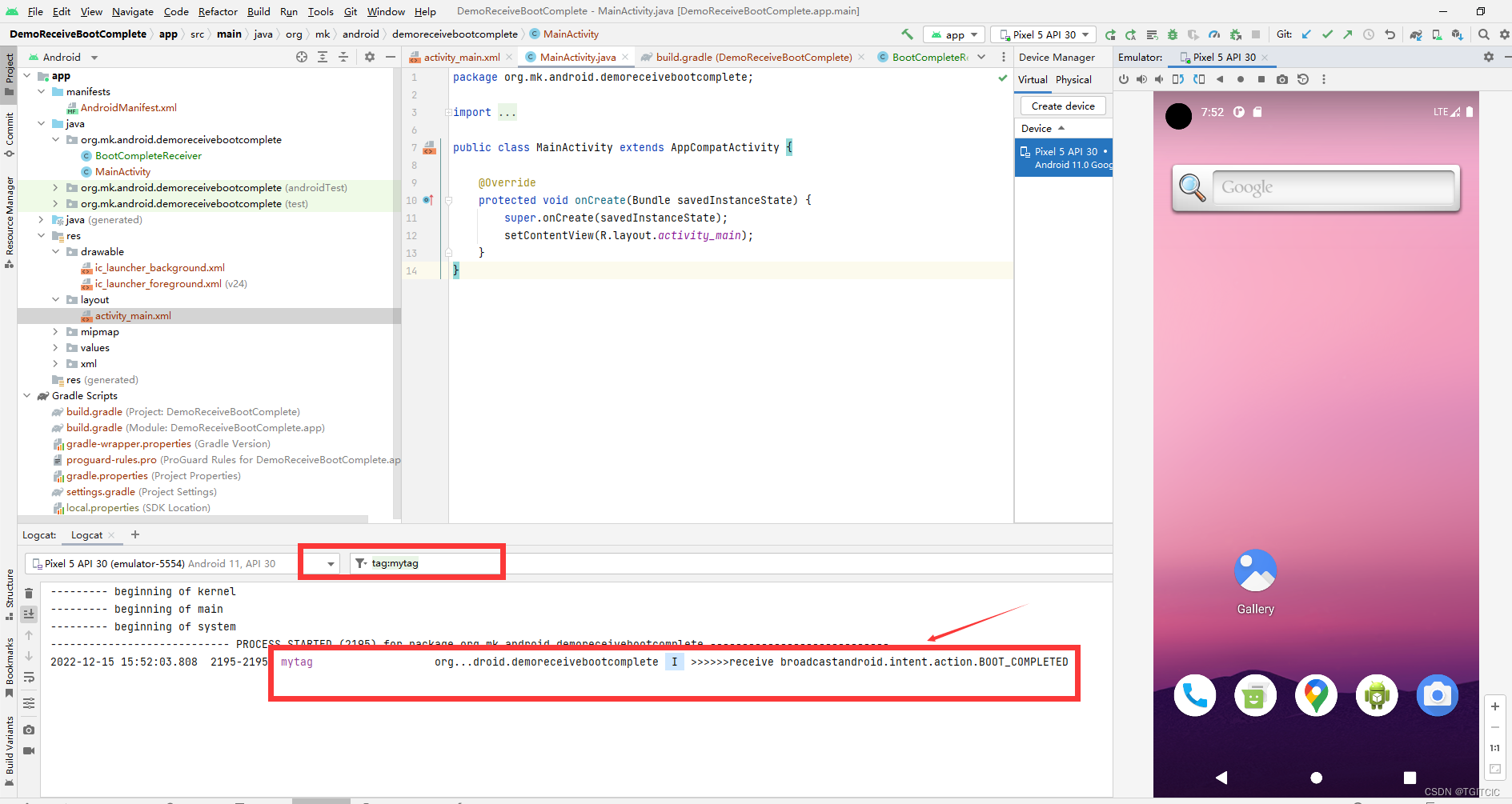

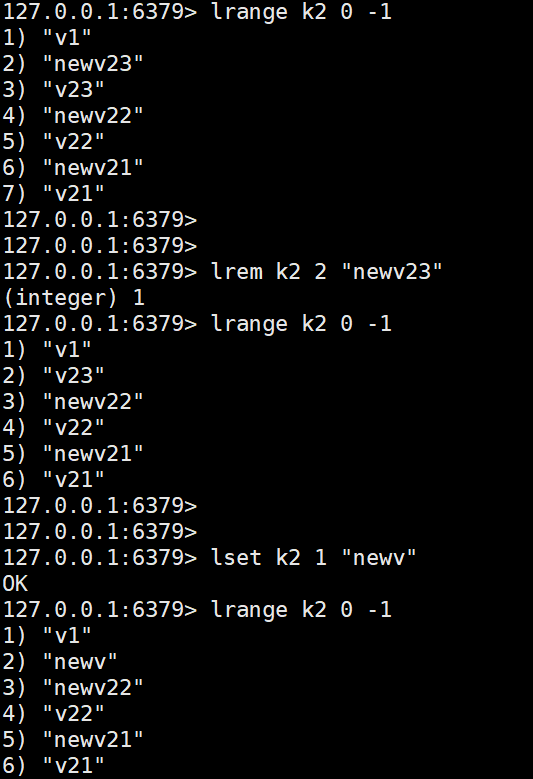
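To make the role of the stack concrete, here is a minimal standalone sketch of the same idea. It is not PostgreSQL code: `ToyStack`, `toy_stack_push`, `toy_stack_depth` and `toy_stack_free` are hypothetical names, and plain integers stand in for `BlockNumber` and `OffsetNumber`. Each entry records the page and offset of the downlink that was followed, and points at the entry for the level above.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Toy counterpart of BTStackData; all names here are illustrative only. */
typedef struct ToyStack
{
    uint32_t         blkno;   /* page that held the downlink we followed */
    uint16_t         offset;  /* position of that downlink within the page */
    struct ToyStack *parent;  /* entry for the level above, NULL at the root */
} ToyStack;

/* Push a new entry on top of the stack while descending one level. */
static ToyStack *
toy_stack_push(ToyStack *parent, uint32_t blkno, uint16_t offset)
{
    ToyStack *entry = malloc(sizeof(ToyStack));

    if (entry == NULL)
        abort();
    entry->blkno = blkno;
    entry->offset = offset;
    entry->parent = parent;
    return entry;
}

/* Number of entries recorded, i.e. how many internal pages were visited. */
static int
toy_stack_depth(const ToyStack *stack)
{
    int depth = 0;

    for (; stack != NULL; stack = stack->parent)
        depth++;
    return depth;
}

/* Free every entry, walking from the lowest internal level up to the root. */
static void
toy_stack_free(ToyStack *stack)
{
    while (stack != NULL)
    {
        ToyStack *parent = stack->parent;

        free(stack);
        stack = parent;
    }
}
```

After a leaf split, the top entry says where in the parent page the new downlink should go; if the parent splits too, following `parent` gives the grandparent, and so on up to the root.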

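btinsert execution flow

This function first packs the indexed columns of the heap tuple into an index tuple, then inserts that index tuple into an index page, and finally returns the result to the caller.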

/*
 * btinsert() -- insert an index tuple into a btree.
 *
 * Descend the tree recursively, find the appropriate location for our
 * new tuple, and put it there.
 */
bool
btinsert(Relation rel, Datum *values, bool *isnull,
         ItemPointer ht_ctid, Relation heapRel,
         IndexUniqueCheck checkUnique,
         bool indexUnchanged,
         IndexInfo *indexInfo)
{
    bool        result;
    IndexTuple  itup;

    /* generate an index tuple */
    itup = index_form_tuple(RelationGetDescr(rel), values, isnull);
    itup->t_tid = *ht_ctid;

    result = _bt_doinsert(rel, itup, checkUnique, indexUnchanged, heapRel);

    pfree(itup);

    return result;
}

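_bt_doinsert execution flow

1)Build the insertion scan key itup_key from the index tuple, i.e. construct a BTScanInsert structure;

2)Declare and initialize a BTInsertStateData structure holding the index tuple, its size, and the scan key built above;

3)Call _bt_search_insert to find the leaf page the new index tuple should go on;

4)If a uniqueness check is required, call _bt_check_unique. If an index tuple with the same key exists and the transaction that inserted it has not finished yet, release the lock on the leaf buffer, wait for that transaction to finish, free the stack built during the search, and go back to step 3 to search again. Once uniqueness is established, the heap TID is restored as the tiebreaker on heapkeyspace indexes: itup_key->scantid = &itup->t_tid;

5)If checkUnique is UNIQUE_CHECK_EXISTING, nothing is inserted and the buffer lock is simply released. Otherwise:

1. Perform the serializable (SSI) conflict check;

2. Determine the insert position of the tuple;

3. Insert the index tuple into the chosen page, splitting the leaf page if necessary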
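The interplay between the uniqueness check and scantid in the steps above can be sketched with a toy model. This is only an illustration under strong assumptions: the "index" is a single sorted array of (key, tid) pairs, and there are no pages, locks, transactions or visibility checks, so the wait-and-retry path of step 4 is not modeled. All names (`ToyIndex`, `toy_search`, `toy_doinsert`) are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define TOY_CAPACITY 16

typedef struct ToyEntry { int key; int tid; } ToyEntry;
typedef struct ToyIndex { ToyEntry items[TOY_CAPACITY]; size_t nitems; } ToyIndex;
typedef enum { TOY_INSERTED, TOY_DUPLICATE, TOY_FULL } ToyResult;

/*
 * Lower-bound binary search. With use_tid == false the TID is treated as
 * "minus infinity", so the result is the first slot where the key could be.
 */
static size_t
toy_search(const ToyIndex *idx, int key, int tid, bool use_tid)
{
    size_t low = 0;
    size_t high = idx->nitems;

    while (low < high)
    {
        size_t          mid = low + (high - low) / 2;
        const ToyEntry *e = &idx->items[mid];
        bool            less = e->key < key ||
                               (use_tid && e->key == key && e->tid < tid);

        if (less)
            low = mid + 1;
        else
            high = mid;
    }
    return low;
}

static ToyResult
toy_doinsert(ToyIndex *idx, int key, int tid, bool check_unique)
{
    size_t pos;

    if (idx->nitems == TOY_CAPACITY)
        return TOY_FULL;

    if (check_unique)
    {
        /* Search with the TID omitted, then look for an equal key there. */
        pos = toy_search(idx, key, 0, false);
        if (pos < idx->nitems && idx->items[pos].key == key)
            return TOY_DUPLICATE;
    }

    /* Uniqueness settled (or not required): search on the full (key, tid). */
    pos = toy_search(idx, key, tid, true);
    memmove(&idx->items[pos + 1], &idx->items[pos],
            (idx->nitems - pos) * sizeof(ToyEntry));
    idx->items[pos].key = key;
    idx->items[pos].tid = tid;
    idx->nitems++;
    return TOY_INSERTED;
}
```

The point of the two searches is that the first one must land on the first place a duplicate could be, regardless of TID, while the second one needs the TID to pick the exact slot among equal keys.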

bool

_bt_doinsert(Relation rel, IndexTuple itup,

IndexUniqueCheck checkUnique, bool indexUnchanged,

Relation heapRel)

{

bool is_unique = false;

BTInsertStateData insertstate;

BTScanInsert itup_key;

BTStack stack;

bool checkingunique = (checkUnique != UNIQUE_CHECK_NO);

/* we need an insertion scan key to do our search, so build one */

itup_key = _bt_mkscankey(rel, itup);

if (checkingunique)

{

if (!itup_key->anynullkeys)

{

/* No (heapkeyspace) scantid until uniqueness established */

itup_key->scantid = NULL;

}

else

{

/*

* Scan key for new tuple contains NULL key values. Bypass

* checkingunique steps. They are unnecessary because core code

* considers NULL unequal to every value, including NULL.

*

* This optimization avoids O(N^2) behavior within the

* _bt_findinsertloc() heapkeyspace path when a unique index has a

* large number of "duplicates" with NULL key values.

*/

checkingunique = false;

/* Tuple is unique in the sense that core code cares about */

Assert(checkUnique != UNIQUE_CHECK_EXISTING);

is_unique = true;

}

}

/*

* Fill in the BTInsertState working area, to track the current page and

* position within the page to insert on.

*

* Note that itemsz is passed down to lower level code that deals with

* inserting the item. It must be MAXALIGN()'d. This ensures that space

* accounting code consistently considers the alignment overhead that we

* expect PageAddItem() will add later. (Actually, index_form_tuple() is

* already conservative about alignment, but we don't rely on that from

* this distance. Besides, preserving the "true" tuple size in index

* tuple headers for the benefit of nbtsplitloc.c might happen someday.

* Note that heapam does not MAXALIGN() each heap tuple's lp_len field.)

*/

insertstate.itup = itup;

insertstate.itemsz = MAXALIGN(IndexTupleSize(itup));

insertstate.itup_key = itup_key;

insertstate.bounds_valid = false;

insertstate.buf = InvalidBuffer;

insertstate.postingoff = 0;

search:

/*

* Find and lock the leaf page that the tuple should be added to by

* searching from the root page. insertstate.buf will hold a buffer that

* is locked in exclusive mode afterwards.

*/

stack = _bt_search_insert(rel, &insertstate);

/*

* checkingunique inserts are not allowed to go ahead when two tuples with

* equal key attribute values would be visible to new MVCC snapshots once

* the xact commits. Check for conflicts in the locked page/buffer (if

* needed) here.

*

* It might be necessary to check a page to the right in _bt_check_unique,

* though that should be very rare. In practice the first page the value

* could be on (with scantid omitted) is almost always also the only page

* that a matching tuple might be found on. This is due to the behavior

* of _bt_findsplitloc with duplicate tuples -- a group of duplicates can

* only be allowed to cross a page boundary when there is no candidate

* leaf page split point that avoids it. Also, _bt_check_unique can use

* the leaf page high key to determine that there will be no duplicates on

* the right sibling without actually visiting it (it uses the high key in

* cases where the new item happens to belong at the far right of the leaf

* page).

*

* NOTE: obviously, _bt_check_unique can only detect keys that are already

* in the index; so it cannot defend against concurrent insertions of the

* same key. We protect against that by means of holding a write lock on

* the first page the value could be on, with omitted/-inf value for the

* implicit heap TID tiebreaker attribute. Any other would-be inserter of

* the same key must acquire a write lock on the same page, so only one

* would-be inserter can be making the check at one time. Furthermore,

* once we are past the check we hold write locks continuously until we

* have performed our insertion, so no later inserter can fail to see our

* insertion. (This requires some care in _bt_findinsertloc.)

*

* If we must wait for another xact, we release the lock while waiting,

* and then must perform a new search.

*

* For a partial uniqueness check, we don't wait for the other xact. Just

* let the tuple in and return false for possibly non-unique, or true for

* definitely unique.

*/

if (checkingunique)

{

TransactionId xwait;

uint32 speculativeToken;

xwait = _bt_check_unique(rel, &insertstate, heapRel, checkUnique,

&is_unique, &speculativeToken);

if (unlikely(TransactionIdIsValid(xwait)))

{

/* Have to wait for the other guy ... */

_bt_relbuf(rel, insertstate.buf);

insertstate.buf = InvalidBuffer;

/*

* If it's a speculative insertion, wait for it to finish (ie. to

* go ahead with the insertion, or kill the tuple). Otherwise

* wait for the transaction to finish as usual.

*/

if (speculativeToken)

SpeculativeInsertionWait(xwait, speculativeToken);

else

XactLockTableWait(xwait, rel, &itup->t_tid, XLTW_InsertIndex);

/* start over... */

if (stack)

_bt_freestack(stack);

goto search;

}

/* Uniqueness is established -- restore heap tid as scantid */

if (itup_key->heapkeyspace)

itup_key->scantid = &itup->t_tid;

}

if (checkUnique != UNIQUE_CHECK_EXISTING)

{

OffsetNumber newitemoff;

/*

* The only conflict predicate locking cares about for indexes is when

* an index tuple insert conflicts with an existing lock. We don't

* know the actual page we're going to insert on for sure just yet in

* checkingunique and !heapkeyspace cases, but it's okay to use the

* first page the value could be on (with scantid omitted) instead.

*/

CheckForSerializableConflictIn(rel, NULL, BufferGetBlockNumber(insertstate.buf));

/*

* Do the insertion. Note that insertstate contains cached binary

* search bounds established within _bt_check_unique when insertion is

* checkingunique.

*/

newitemoff = _bt_findinsertloc(rel, &insertstate, checkingunique,

indexUnchanged, stack, heapRel);

_bt_insertonpg(rel, itup_key, insertstate.buf, InvalidBuffer, stack,

itup, insertstate.itemsz, newitemoff,

insertstate.postingoff, false);

}

else

{

/* just release the buffer */

_bt_relbuf(rel, insertstate.buf);

}

/* be tidy */

if (stack)

_bt_freestack(stack);

pfree(itup_key);

return is_unique;

}

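_bt_search execution flow

When successive inserts keep landing on the same page, which in practice means the rightmost leaf page (see the block caching at the end of _bt_insertonpg), PostgreSQL caches that page's block number so that later inserts can skip the descent from the root. This fast path is wrapped by _bt_search_insert. The rest of this section covers the flow of _bt_search itself:

1)Fetch the root page with a read lock; when called for an insert, a root page is created and initialized if none exists yet;

2)Because a concurrent page split may have moved the target key range to the right, call _bt_moveright to step to a right sibling on the same level when necessary;

3)If the current page is a leaf, stop descending and go to step 6;

4)Otherwise, binary-search the internal page for the pivot tuple whose downlink the new tuple falls under, and record the current block number, the tuple's offset and so on in the stack;

5)Release the lock and pin on the current page, then read and pin the buffer of the child page the pivot tuple points to. Take a write lock if the child is a leaf and a read lock otherwise, then continue from step 2;

6)Return the stack holding the search path collected on the way down; it is needed later, for example when a page splits.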
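The descent described above can be sketched on a toy in-memory tree. The following is an assumption-laden illustration, not the nbtree code: pages are array slots, keys are plain ints, locking and pinning are left out, and the path is kept in a small array rather than a linked BTStack. `ToyPage`, `ToyPath`, `toy_moveright`, `toy_binsrch_downlink` and `toy_search` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

#define TOY_MAX_KEYS  4
#define TOY_MAX_DEPTH 8
#define TOY_NO_PAGE   (-1)

typedef struct ToyPage
{
    bool is_leaf;
    int  right;                   /* right sibling, or TOY_NO_PAGE if rightmost */
    int  high_key;                /* inclusive upper bound; unused when rightmost */
    int  nkeys;                   /* internal pages only */
    int  keys[TOY_MAX_KEYS];      /* separators; keys[0] acts as minus infinity */
    int  children[TOY_MAX_KEYS];  /* child page for each separator */
} ToyPage;

typedef struct ToyPath
{
    int depth;
    int blkno[TOY_MAX_DEPTH];     /* internal pages visited, root first */
    int offset[TOY_MAX_DEPTH];    /* downlink followed on each of them */
} ToyPath;

/* Step right while the key lies beyond this page's high key. */
static int
toy_moveright(const ToyPage *pages, int blkno, int key)
{
    while (pages[blkno].right != TOY_NO_PAGE && key > pages[blkno].high_key)
        blkno = pages[blkno].right;
    return blkno;
}

/* Offset of the last separator that is strictly less than key. */
static int
toy_binsrch_downlink(const ToyPage *page, int key)
{
    int low = 1;
    int high = page->nkeys;

    while (low < high)
    {
        int mid = low + (high - low) / 2;

        if (page->keys[mid] < key)
            low = mid + 1;
        else
            high = mid;
    }
    return low - 1;
}

/* Descend from root to the leaf for key, recording the path taken. */
static int
toy_search(const ToyPage *pages, int root, int key, ToyPath *path)
{
    int blkno = root;
    int off;

    path->depth = 0;
    for (;;)
    {
        blkno = toy_moveright(pages, blkno, key);
        if (pages[blkno].is_leaf)
            return blkno;

        off = toy_binsrch_downlink(&pages[blkno], key);
        assert(path->depth < TOY_MAX_DEPTH);
        path->blkno[path->depth] = blkno;
        path->offset[path->depth] = off;
        path->depth++;
        blkno = pages[blkno].children[off];
    }
}
```

As a usage example, take a root with separators {minus infinity, 50} pointing at leaves 1 and 2, where leaf 1 has just been split into leaf 1 (high key 20) and a new leaf 3 (high key 50) whose downlink has not reached the root yet. Searching for 30 follows the first downlink to leaf 1, and the move-right step then carries it to leaf 3.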

_bt_findinsertloc execution flow

static OffsetNumber

_bt_findinsertloc(Relation rel,

BTInsertState insertstate,

bool checkingunique,

bool indexUnchanged,

BTStack stack,

Relation heapRel)

{

BTScanInsert itup_key = insertstate->itup_key;

Page page = BufferGetPage(insertstate->buf);

BTPageOpaque opaque;

OffsetNumber newitemoff;

opaque = BTPageGetOpaque(page);

/* Check 1/3 of a page restriction */

if (unlikely(insertstate->itemsz > BTMaxItemSize(page)))

_bt_check_third_page(rel, heapRel, itup_key->heapkeyspace, page,

insertstate->itup);

Assert(P_ISLEAF(opaque) && !P_INCOMPLETE_SPLIT(opaque));

Assert(!insertstate->bounds_valid || checkingunique);

Assert(!itup_key->heapkeyspace || itup_key->scantid != NULL);

Assert(itup_key->heapkeyspace || itup_key->scantid == NULL);

Assert(!itup_key->allequalimage || itup_key->heapkeyspace);

if (itup_key->heapkeyspace)

{

/* Keep track of whether checkingunique duplicate seen */

bool uniquedup = indexUnchanged;

/*

* If we're inserting into a unique index, we may have to walk right

* through leaf pages to find the one leaf page that we must insert on

* to.

*

* This is needed for checkingunique callers because a scantid was not

* used when we called _bt_search(). scantid can only be set after

* _bt_check_unique() has checked for duplicates. The buffer

* initially stored in insertstate->buf has the page where the first

* duplicate key might be found, which isn't always the page that new

* tuple belongs on. The heap TID attribute for new tuple (scantid)

* could force us to insert on a sibling page, though that should be

* very rare in practice.

*/

if (checkingunique)

{

if (insertstate->low < insertstate->stricthigh)

{

/* Encountered a duplicate in _bt_check_unique() */

Assert(insertstate->bounds_valid);

uniquedup = true;

}

for (;;)

{

/*

* Does the new tuple belong on this page?

*

* The earlier _bt_check_unique() call may well have

* established a strict upper bound on the offset for the new

* item. If it's not the last item of the page (i.e. if there

* is at least one tuple on the page that goes after the tuple

* we're inserting) then we know that the tuple belongs on

* this page. We can skip the high key check.

*/

if (insertstate->bounds_valid &&

insertstate->low <= insertstate->stricthigh &&

insertstate->stricthigh <= PageGetMaxOffsetNumber(page))

break;

/* Test '<=', not '!=', since scantid is set now */

if (P_RIGHTMOST(opaque) ||

_bt_compare(rel, itup_key, page, P_HIKEY) <= 0)

break;

_bt_stepright(rel, insertstate, stack);

/* Update local state after stepping right */

page = BufferGetPage(insertstate->buf);

opaque = BTPageGetOpaque(page);

/* Assume duplicates (if checkingunique) */

uniquedup = true;

}

}

/*

* If the target page cannot fit newitem, try to avoid splitting the

* page on insert by performing deletion or deduplication now

*/

if (PageGetFreeSpace(page) < insertstate->itemsz)

_bt_delete_or_dedup_one_page(rel, heapRel, insertstate, false,

checkingunique, uniquedup,

indexUnchanged);

}

else

{

/*----------

* This is a !heapkeyspace (version 2 or 3) index. The current page

* is the first page that we could insert the new tuple to, but there

* may be other pages to the right that we could opt to use instead.

*

* If the new key is equal to one or more existing keys, we can

* legitimately place it anywhere in the series of equal keys. In

* fact, if the new key is equal to the page's "high key" we can place

* it on the next page. If it is equal to the high key, and there's

* not room to insert the new tuple on the current page without

* splitting, then we move right hoping to find more free space and

* avoid a split.

*

* Keep scanning right until we

* (a) find a page with enough free space,

* (b) reach the last page where the tuple can legally go, or

* (c) get tired of searching.

* (c) is not flippant; it is important because if there are many

* pages' worth of equal keys, it's better to split one of the early

* pages than to scan all the way to the end of the run of equal keys

* on every insert. We implement "get tired" as a random choice,

* since stopping after scanning a fixed number of pages wouldn't work

* well (we'd never reach the right-hand side of previously split

* pages). The probability of moving right is set at 0.99, which may

* seem too high to change the behavior much, but it does an excellent

* job of preventing O(N^2) behavior with many equal keys.

*----------

*/

while (PageGetFreeSpace(page) < insertstate->itemsz)

{

/*

* Before considering moving right, see if we can obtain enough

* space by erasing LP_DEAD items

*/

if (P_HAS_GARBAGE(opaque))

{

/* Perform simple deletion */

_bt_delete_or_dedup_one_page(rel, heapRel, insertstate, true,

false, false, false);

if (PageGetFreeSpace(page) >= insertstate->itemsz)

break; /* OK, now we have enough space */

}

/*

* Nope, so check conditions (b) and (c) enumerated above

*

* The earlier _bt_check_unique() call may well have established a

* strict upper bound on the offset for the new item. If it's not

* the last item of the page (i.e. if there is at least one tuple

* on the page that's greater than the tuple we're inserting to)

* then we know that the tuple belongs on this page. We can skip

* the high key check.

*/

if (insertstate->bounds_valid &&

insertstate->low <= insertstate->stricthigh &&

insertstate->stricthigh <= PageGetMaxOffsetNumber(page))

break;

if (P_RIGHTMOST(opaque) ||

_bt_compare(rel, itup_key, page, P_HIKEY) != 0 ||

pg_prng_uint32(&pg_global_prng_state) <= (PG_UINT32_MAX / 100))

break;

_bt_stepright(rel, insertstate, stack);

/* Update local state after stepping right */

page = BufferGetPage(insertstate->buf);

opaque = BTPageGetOpaque(page);

}

}

/*

* We should now be on the correct page. Find the offset within the page

* for the new tuple. (Possibly reusing earlier search bounds.)

*/

Assert(P_RIGHTMOST(opaque) ||

_bt_compare(rel, itup_key, page, P_HIKEY) <= 0);

newitemoff = _bt_binsrch_insert(rel, insertstate);

if (insertstate->postingoff == -1)

{

/*

* There is an overlapping posting list tuple with its LP_DEAD bit

* set. We don't want to unnecessarily unset its LP_DEAD bit while

* performing a posting list split, so perform simple index tuple

* deletion early.

*/

_bt_delete_or_dedup_one_page(rel, heapRel, insertstate, true,

false, false, false);

/*

* Do new binary search. New insert location cannot overlap with any

* posting list now.

*/

Assert(!insertstate->bounds_valid);

insertstate->postingoff = 0;

newitemoff = _bt_binsrch_insert(rel, insertstate);

Assert(insertstate->postingoff == 0);

}

return newitemoff;

}

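1)Check that the new tuple has a legal size: PostgreSQL does not allow an index tuple to exceed roughly 1/3 of a page;

2)Find the leaf page the tuple really belongs on. A run of equal keys can span several pages, so the insert may have to move right;

3)If the cached bounds from _bt_check_unique show that the new tuple would not be the last item on the page (at least one tuple on the page sorts after it), this page is the insert target;

4)If the page is the rightmost page on its level, or the scan key is less than or equal to the high key, this page is also the insert target;

5)If neither 3) nor 4) holds, step right and repeat 3) and 4);

6)If the target page cannot fit the new tuple, first try to free space on it by deleting dead tuples or deduplicating, so that a page split is avoided where possible;

7)Finally, call _bt_binsrch_insert to determine the offset within the page for the new tuple.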
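For the size limit in step 1), the arithmetic can be worked through in a few lines. The sketch below follows the shape of the BTMaxItemSize macro as I understand it; the constants are assumptions for a default build (8 kB pages, 8-byte maximum alignment, and the struct sizes named in the comments), and every `toy_`/`TOY_` name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values for a default build; not read from PostgreSQL headers. */
#define TOY_BLCKSZ            ((size_t) 8192)
#define TOY_MAXIMUM_ALIGNOF   ((size_t) 8)
#define TOY_PAGE_HEADER_SIZE  ((size_t) 24)  /* SizeOfPageHeaderData */
#define TOY_ITEMID_SIZE       ((size_t) 4)   /* sizeof(ItemIdData) */
#define TOY_BT_OPAQUE_SIZE    ((size_t) 16)  /* sizeof(BTPageOpaqueData) */
#define TOY_HEAP_TID_SIZE     ((size_t) 6)   /* sizeof(ItemPointerData) */

/* Round up to the next multiple of the maximum alignment. */
static size_t
toy_maxalign(size_t len)
{
    return (len + TOY_MAXIMUM_ALIGNOF - 1) & ~(TOY_MAXIMUM_ALIGNOF - 1);
}

/* Round down to a multiple of the maximum alignment. */
static size_t
toy_maxalign_down(size_t len)
{
    return len & ~(TOY_MAXIMUM_ALIGNOF - 1);
}

/*
 * One third of the space left after the page header, three line pointers
 * and the btree special area, minus room reserved for a heap TID.
 */
static size_t
toy_bt_max_item_size(void)
{
    size_t usable = TOY_BLCKSZ
        - toy_maxalign(TOY_PAGE_HEADER_SIZE + 3 * TOY_ITEMID_SIZE)
        - toy_maxalign(TOY_BT_OPAQUE_SIZE);

    return toy_maxalign_down(usable / 3) - toy_maxalign(TOY_HEAP_TID_SIZE);
}

/* True when an already MAXALIGN()'d item size passes the limit. */
static int
toy_item_size_ok(size_t itemsz)
{
    return itemsz <= toy_bt_max_item_size();
}
```

Under these assumptions the usable space is 8192 - 40 - 16 = 8136 bytes, a third of that is 2712, and reserving 8 bytes for the heap TID leaves 2704 bytes as the largest item size that passes the check.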

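_bt_insertonpg

_bt_findinsertloc only determines the offset of the new tuple within the page; it does not write the tuple into the index page. That work is done by _bt_insertonpg, whose execution flow is as follows: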

static void

_bt_insertonpg(Relation rel,

BTScanInsert itup_key,

Buffer buf,

Buffer cbuf,

BTStack stack,

IndexTuple itup,

Size itemsz,

OffsetNumber newitemoff,

int postingoff,

bool split_only_page)

{

Page page;

BTPageOpaque opaque;

bool isleaf,

isroot,

isrightmost,

isonly;

IndexTuple oposting = NULL;

IndexTuple origitup = NULL;

IndexTuple nposting = NULL;

page = BufferGetPage(buf);

opaque = BTPageGetOpaque(page);

isleaf = P_ISLEAF(opaque);

isroot = P_ISROOT(opaque);

isrightmost = P_RIGHTMOST(opaque);

isonly = P_LEFTMOST(opaque) && P_RIGHTMOST(opaque);

/* child buffer must be given iff inserting on an internal page */

Assert(isleaf == !BufferIsValid(cbuf));

/* tuple must have appropriate number of attributes */

Assert(!isleaf ||

BTreeTupleGetNAtts(itup, rel) ==

IndexRelationGetNumberOfAttributes(rel));

Assert(isleaf ||

BTreeTupleGetNAtts(itup, rel) <=

IndexRelationGetNumberOfKeyAttributes(rel));

Assert(!BTreeTupleIsPosting(itup));

Assert(MAXALIGN(IndexTupleSize(itup)) == itemsz);

/* Caller must always finish incomplete split for us */

Assert(!P_INCOMPLETE_SPLIT(opaque));

/*

* Every internal page should have exactly one negative infinity item at

* all times. Only _bt_split() and _bt_newroot() should add items that

* become negative infinity items through truncation, since they're the

* only routines that allocate new internal pages.

*/

Assert(isleaf || newitemoff > P_FIRSTDATAKEY(opaque));

/*

* Do we need to split an existing posting list item?

*/

if (postingoff != 0)

{

ItemId itemid = PageGetItemId(page, newitemoff);

/*

* The new tuple is a duplicate with a heap TID that falls inside the

* range of an existing posting list tuple on a leaf page. Prepare to

* split an existing posting list. Overwriting the posting list with

* its post-split version is treated as an extra step in either the

* insert or page split critical section.

*/

Assert(isleaf && itup_key->heapkeyspace && itup_key->allequalimage);

oposting = (IndexTuple) PageGetItem(page, itemid);

/*

* postingoff value comes from earlier call to _bt_binsrch_posting().

* Its binary search might think that a plain tuple must be a posting

* list tuple that needs to be split. This can happen with corruption

* involving an existing plain tuple that is a duplicate of the new

* item, up to and including its table TID. Check for that here in

* passing.

*

* Also verify that our caller has made sure that the existing posting

* list tuple does not have its LP_DEAD bit set.

*/

if (!BTreeTupleIsPosting(oposting) || ItemIdIsDead(itemid))

ereport(ERROR,

(errcode(ERRCODE_INDEX_CORRUPTED),

errmsg_internal("table tid from new index tuple (%u,%u) overlaps with invalid duplicate tuple at offset %u of block %u in index \"%s\"",

ItemPointerGetBlockNumber(&itup->t_tid),

ItemPointerGetOffsetNumber(&itup->t_tid),

newitemoff, BufferGetBlockNumber(buf),

RelationGetRelationName(rel))));

/* use a mutable copy of itup as our itup from here on */

origitup = itup;

itup = CopyIndexTuple(origitup);

nposting = _bt_swap_posting(itup, oposting, postingoff);

/* itup now contains rightmost/max TID from oposting */

/* Alter offset so that newitem goes after posting list */

newitemoff = OffsetNumberNext(newitemoff);

}

/*

* Do we need to split the page to fit the item on it?

*

* Note: PageGetFreeSpace() subtracts sizeof(ItemIdData) from its result,

* so this comparison is correct even though we appear to be accounting

* only for the item and not for its line pointer.

*/

// not enough room for the item, so the current page is split

if (PageGetFreeSpace(page) < itemsz)

{

Buffer rbuf;

Assert(!split_only_page);

/* split the buffer into left and right halves */

rbuf = _bt_split(rel, itup_key, buf, cbuf, newitemoff, itemsz, itup,

origitup, nposting, postingoff);

PredicateLockPageSplit(rel,

BufferGetBlockNumber(buf),

BufferGetBlockNumber(rbuf));

/*----------

* By here,

*

* + our target page has been split;

* + the original tuple has been inserted;

* + we have write locks on both the old (left half)

* and new (right half) buffers, after the split; and

* + we know the key we want to insert into the parent

* (it's the "high key" on the left child page).

*

* We're ready to do the parent insertion. We need to hold onto the

* locks for the child pages until we locate the parent, but we can

* at least release the lock on the right child before doing the

* actual insertion. The lock on the left child will be released

* last of all by parent insertion, where it is the 'cbuf' of parent

* page.

*----------

*/

_bt_insert_parent(rel, buf, rbuf, stack, isroot, isonly);

}

else

{

Buffer metabuf = InvalidBuffer;

Page metapg = NULL;

BTMetaPageData *metad = NULL;

BlockNumber blockcache;

/*

* If we are doing this insert because we split a page that was the

* only one on its tree level, but was not the root, it may have been

* the "fast root". We need to ensure that the fast root link points

* at or above the current page. We can safely acquire a lock on the

* metapage here --- see comments for _bt_newroot().

*/

if (unlikely(split_only_page))

{

Assert(!isleaf);

Assert(BufferIsValid(cbuf));

metabuf = _bt_getbuf(rel, BTREE_METAPAGE, BT_WRITE);

metapg = BufferGetPage(metabuf);

metad = BTPageGetMeta(metapg);

if (metad->btm_fastlevel >= opaque->btpo_level)

{

/* no update wanted */

_bt_relbuf(rel, metabuf);

metabuf = InvalidBuffer;

}

}

/* Do the update. No ereport(ERROR) until changes are logged */

START_CRIT_SECTION();

if (postingoff != 0)

memcpy(oposting, nposting, MAXALIGN(IndexTupleSize(nposting)));

if (PageAddItem(page, (Item) itup, itemsz, newitemoff, false,

false) == InvalidOffsetNumber)

elog(PANIC, "failed to add new item to block %u in index \"%s\"",

BufferGetBlockNumber(buf), RelationGetRelationName(rel));

MarkBufferDirty(buf);

if (BufferIsValid(metabuf))

{

/* upgrade meta-page if needed */

if (metad->btm_version < BTREE_NOVAC_VERSION)

_bt_upgrademetapage(metapg);

metad->btm_fastroot = BufferGetBlockNumber(buf);

metad->btm_fastlevel = opaque->btpo_level;

MarkBufferDirty(metabuf);

}

/*

* Clear INCOMPLETE_SPLIT flag on child if inserting the new item

* finishes a split

*/

if (!isleaf)

{

Page cpage = BufferGetPage(cbuf);

BTPageOpaque cpageop = BTPageGetOpaque(cpage);

Assert(P_INCOMPLETE_SPLIT(cpageop));

cpageop->btpo_flags &= ~BTP_INCOMPLETE_SPLIT;

MarkBufferDirty(cbuf);

}

/* XLOG stuff */

if (RelationNeedsWAL(rel))

{

xl_btree_insert xlrec;

xl_btree_metadata xlmeta;

uint8 xlinfo;

XLogRecPtr recptr;

uint16 upostingoff;

xlrec.offnum = newitemoff;

XLogBeginInsert();

XLogRegisterData((char *) &xlrec, SizeOfBtreeInsert);

if (isleaf && postingoff == 0)

{

/* Simple leaf insert */

xlinfo = XLOG_BTREE_INSERT_LEAF;

}

else if (postingoff != 0)

{

/*

* Leaf insert with posting list split. Must include

* postingoff field before newitem/orignewitem.

*/

Assert(isleaf);

xlinfo = XLOG_BTREE_INSERT_POST;

}

else

{

/* Internal page insert, which finishes a split on cbuf */

xlinfo = XLOG_BTREE_INSERT_UPPER;

XLogRegisterBuffer(1, cbuf, REGBUF_STANDARD);

if (BufferIsValid(metabuf))

{

/* Actually, it's an internal page insert + meta update */

xlinfo = XLOG_BTREE_INSERT_META;

Assert(metad->btm_version >= BTREE_NOVAC_VERSION);

xlmeta.version = metad->btm_version;

xlmeta.root = metad->btm_root;

xlmeta.level = metad->btm_level;

xlmeta.fastroot = metad->btm_fastroot;

xlmeta.fastlevel = metad->btm_fastlevel;

xlmeta.last_cleanup_num_delpages = metad->btm_last_cleanup_num_delpages;

xlmeta.allequalimage = metad->btm_allequalimage;

XLogRegisterBuffer(2, metabuf,

REGBUF_WILL_INIT | REGBUF_STANDARD);

XLogRegisterBufData(2, (char *) &xlmeta,

sizeof(xl_btree_metadata));

}

}

XLogRegisterBuffer(0, buf, REGBUF_STANDARD);

if (postingoff == 0)

{

/* Just log itup from caller */

XLogRegisterBufData(0, (char *) itup, IndexTupleSize(itup));

}

else

{

/*

* Insert with posting list split (XLOG_BTREE_INSERT_POST

* record) case.

*

* Log postingoff. Also log origitup, not itup. REDO routine

* must reconstruct final itup (as well as nposting) using

* _bt_swap_posting().

*/

upostingoff = postingoff;

XLogRegisterBufData(0, (char *) &upostingoff, sizeof(uint16));

XLogRegisterBufData(0, (char *) origitup,

IndexTupleSize(origitup));

}

recptr = XLogInsert(RM_BTREE_ID, xlinfo);

if (BufferIsValid(metabuf))

PageSetLSN(metapg, recptr);

if (!isleaf)

PageSetLSN(BufferGetPage(cbuf), recptr);

PageSetLSN(page, recptr);

}

END_CRIT_SECTION();

/* Release subsidiary buffers */

if (BufferIsValid(metabuf))

_bt_relbuf(rel, metabuf);

if (!isleaf)

_bt_relbuf(rel, cbuf);

/*

* Cache the block number if this is the rightmost leaf page. Cache

* may be used by a future inserter within _bt_search_insert().

*/

blockcache = InvalidBlockNumber;

if (isrightmost && isleaf && !isroot)

blockcache = BufferGetBlockNumber(buf);

/* Release buffer for insertion target block */

_bt_relbuf(rel, buf);

/*

* If we decided to cache the insertion target block before releasing

* its buffer lock, then cache it now. Check the height of the tree

* first, though. We don't go for the optimization with small

* indexes. Defer final check to this point to ensure that we don't

* call _bt_getrootheight while holding a buffer lock.

*/

if (BlockNumberIsValid(blockcache) &&

_bt_getrootheight(rel) >= BTREE_FASTPATH_MIN_LEVEL)

RelationSetTargetBlock(rel, blockcache);

}

/* be tidy */

if (postingoff != 0)

{

/* itup is actually a modified copy of caller's original */

pfree(nposting);

pfree(itup);

}

}
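The central decision in _bt_insertonpg, add the item in place if it fits and split the page otherwise, can be sketched on a toy leaf page. This is a simplified illustration under stated assumptions: the page holds at most four int keys, the split point is simply the middle, and the "high key" handed to the parent is the last key kept on the left page, whereas the real code picks the split point in _bt_findsplitloc and also deals with WAL, locks, posting lists and the metapage. `ToyLeaf`, `toy_page_add_item` and `toy_insertonpg` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define TOY_PAGE_CAPACITY 4

typedef struct ToyLeaf
{
    int nitems;
    int items[TOY_PAGE_CAPACITY];   /* keys in sorted order */
} ToyLeaf;

/* Place key at offset off, shifting later items right; page must have room. */
static void
toy_page_add_item(ToyLeaf *page, int off, int key)
{
    assert(page->nitems < TOY_PAGE_CAPACITY);
    assert(off >= 0 && off <= page->nitems);
    memmove(&page->items[off + 1], &page->items[off],
            (size_t) (page->nitems - off) * sizeof(int));
    page->items[off] = key;
    page->nitems++;
}

/*
 * Insert key at offset off. If the page is full, split it: the lower half
 * stays on *page, the upper half moves to *right, and *highkey receives the
 * separator the caller would insert into the parent. Returns true on split.
 */
static bool
toy_insertonpg(ToyLeaf *page, int off, int key, ToyLeaf *right, int *highkey)
{
    int all[TOY_PAGE_CAPACITY + 1];
    int nleft;

    if (page->nitems < TOY_PAGE_CAPACITY)
    {
        toy_page_add_item(page, off, key);
        return false;
    }

    assert(off >= 0 && off <= page->nitems);

    /* Lay out the existing items plus the new one in key order. */
    memcpy(all, page->items, (size_t) off * sizeof(int));
    all[off] = key;
    memcpy(&all[off + 1], &page->items[off],
           (size_t) (TOY_PAGE_CAPACITY - off) * sizeof(int));

    /* Keep the lower half on the left page, move the rest to the right. */
    nleft = (TOY_PAGE_CAPACITY + 1) / 2;
    page->nitems = nleft;
    memcpy(page->items, all, (size_t) nleft * sizeof(int));
    right->nitems = TOY_PAGE_CAPACITY + 1 - nleft;
    memcpy(right->items, &all[nleft], (size_t) right->nitems * sizeof(int));

    *highkey = page->items[nleft - 1];
    return true;
}
```

When the function reports a split, the caller plays the role of _bt_insert_parent: it uses the stack saved during the descent to find the parent page and inserts the returned separator together with a downlink to the new right page, which may in turn split the parent.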
