Scraping data with urllib

import urllib.request as request

# Define the URL

url = "https://www.baidu.com"

# Send the request and get the response object

response = request.urlopen(url)

"""

read() returns the body as bytes (binary data)

bytes --> str is decoding: decode(encoding)

"""

content = response.read().decode('utf-8')

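# One type and six methods: response is an HTTPResponse object

# The six methods: read, readline, readlines, getcode, geturl, getheaders (read bytes, read one line, read all lines, get the status code, get the URL, get the response headers)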

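print(content)

- read: reads the body as bytes; read(5) reads only the first 5 bytes
- readline: reads one line
- readlines: reads all remaining lines into a list
- getcode: gets the HTTP status code
- geturl: gets the URL of the response
- getheaders: gets the response headers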
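To try the six methods without depending on baidu.com, the sketch below starts a tiny local HTTP server on 127.0.0.1 (the server, its `/demo` path and the three-line body are assumptions made only for this demo) and calls each method on the returned HTTPResponse. read(), readline() and readlines() all consume the same body stream, so each call continues where the previous one stopped. Recent Python docs also suggest the `status` and `url` attributes as the modern spelling of getcode() and geturl().

```python
import threading
import urllib.request as request
from http.server import BaseHTTPRequestHandler, HTTPServer

BODY = b"line one\nline two\nline three\n"


class DemoHandler(BaseHTTPRequestHandler):
    """Local stand-in for a real web page (demo assumption)."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(BODY)))
        self.end_headers()
        self.wfile.write(BODY)

    def log_message(self, format, *args):
        pass  # silence the default per-request log line


def demo():
    # Port 0 asks the OS for any free port
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/demo"
    try:
        with request.urlopen(url) as response:
            status = response.getcode()            # HTTP status code, e.g. 200
            final_url = response.geturl()          # URL of the response (after redirects)
            headers = dict(response.getheaders())  # response headers as (name, value) pairs
            first5 = response.read(5)              # only the first 5 bytes
            rest_of_line = response.readline()     # up to and including the next b"\n"
            remaining = response.readlines()       # every remaining line, as a list of bytes
    finally:
        server.shutdown()
        server.server_close()
    return status, final_url, headers, first5, rest_of_line, remaining
```

Calling `demo()` returns the six results in order, so you can print them one by one and compare with the descriptions above.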

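Downloading with urllib: urlretrieve

The first argument is the resource URL and the second is the file name to save it under. The signature in the source is:

def urlretrieve(url, filename=None, reporthook=None, data=None):

Downloading an image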

import urllib.request as request

# Download an image

url_img = "https://img1.baidu.com/it/u=1187129814,1675470074&fm=253&fmt=auto&app=138&f=JPEG?w=889&h=500"

request.urlretrieve(url_img,"test.jpg")
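urlretrieve also takes a `reporthook` callback, which it calls as `reporthook(block_number, block_size, total_size)`: once before the first block and once after each block it reads. The sketch below uses it to print download progress; `download` and `demo` are hypothetical helper names, and `total_size` is -1 when the server sends no Content-Length. Because urlretrieve also accepts file:// URLs, `demo()` copies a local temp file so the sketch can be tried offline.

```python
import tempfile
import urllib.request as request
from pathlib import Path


def download(url, filename):
    """Save url to filename and report progress through urlretrieve's reporthook."""
    progress = []

    def reporthook(block_num, block_size, total_size):
        # Called once before reading (block_num == 0), then once per block read.
        # total_size is -1 if the server did not send a Content-Length header.
        progress.append((block_num, block_size, total_size))
        if total_size > 0:
            done = min(block_num * block_size, total_size)
            print(f"\r{done}/{total_size} bytes", end="")

    path, headers = request.urlretrieve(url, filename, reporthook=reporthook)
    print()  # finish the progress line
    return path, progress


def demo(size=20000):
    """Offline check: 'download' a local temp file through a file:// URL."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "src.bin"
        src.write_bytes(b"x" * size)
        dest = Path(tmp) / "copy.bin"
        path, progress = download(src.as_uri(), str(dest))
        copied_ok = dest.read_bytes() == src.read_bytes()
    return path.endswith("copy.bin"), copied_ok, progress
```

With the image above, the call would be `download(url_img, "test.jpg")`.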

Customizing the urllib request object

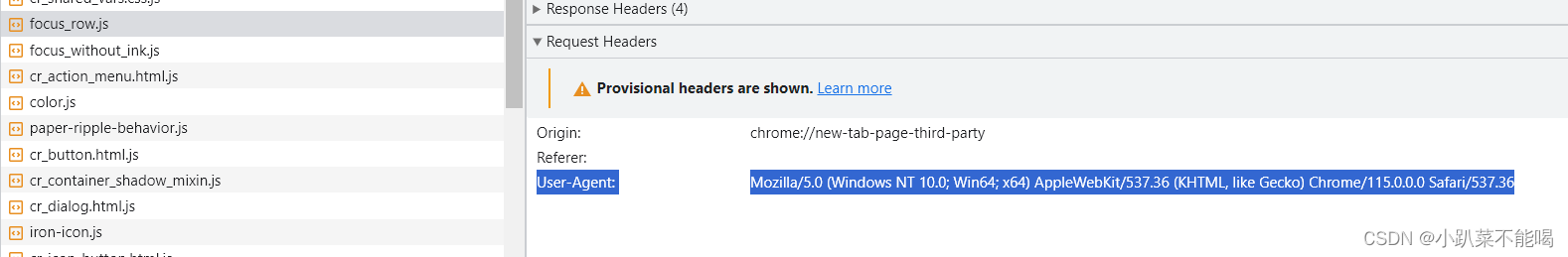

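The User-Agent (UA) is a special request header string that lets the server identify the client: its operating system and version, CPU type, browser and version, browser engine, rendering engine, browser language, browser plugins, and so on.

Some sites, Baidu included, return an incomplete page to an HTTPS request that carries urllib's default User-Agent, so the request is disguised with a browser UA. (Default ports: HTTP 80, HTTPS 443.)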
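The sketch below shows where that default comes from: an opener built by build_opener() carries a `User-agent` of the form `Python-urllib/3.x` in its `addheaders`, and urllib only adds it when the Request does not already set one. Nothing is sent over the network; `BROWSER_UA`, `default_user_agent` and `browser_request` are names made up for this sketch. Note that Request normalizes header names with `str.capitalize()`, so the lookup key is `"User-agent"`.

```python
import urllib.request as request

BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36")


def default_user_agent():
    """The User-Agent urllib adds when the request does not set one."""
    opener = request.build_opener()
    # addheaders is [('User-agent', 'Python-urllib/3.x')] by default
    return dict(opener.addheaders)["User-agent"]


def browser_request(url):
    """A Request carrying a browser User-Agent, so urllib skips its default one."""
    return request.Request(url=url, headers={"User-Agent": BROWSER_UA})


print(default_user_agent())                 # e.g. Python-urllib/3.11
req = browser_request("https://www.baidu.com/")
print(req.get_header("User-agent"))         # the browser string above
```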

import urllib.request as request

# Target URL

url = "https://www.baidu.com/"

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

}

# Build the request object

geneRequest=request.Request(url=url,headers = header)

# Send the request with the browser headers

response = request.urlopen(geneRequest)

# Read the content

content = response.read().decode('utf-8')

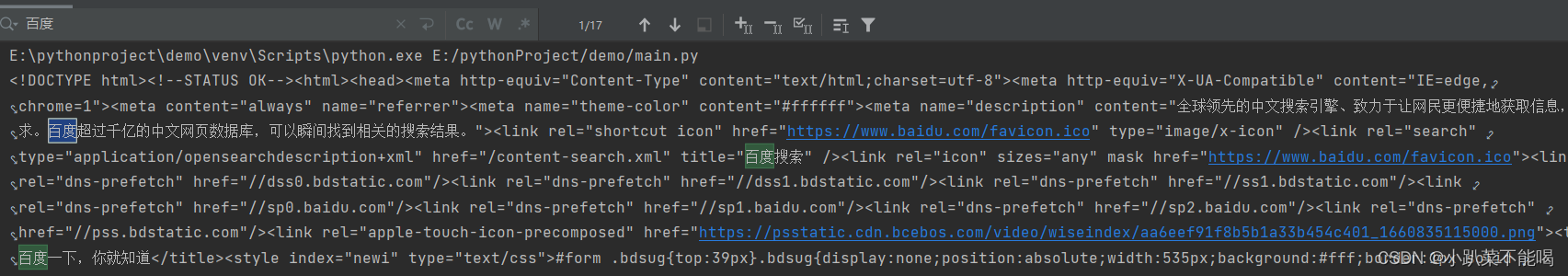

print(content)

The printed output is shown in the screenshot above.

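The quote and urlencode methods

When you copy a GET URL with Chinese parameters out of the browser, the Chinese characters come out percent-encoded (each UTF-8 byte written as %XX). A Baidu search for 陈奕迅 (Eason Chan), for example, becomes the URL below. urllib provides the quote and urlencode methods to produce this encoding.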

https://www.baidu.com/s?wd=%E9%99%88%E5%A5%95%E8%BF%85

quote

Encoding a single parameter

import urllib.request as request

import urllib.parse as parse

# Search Baidu for 陈奕迅; the Chinese keyword has to be percent-encoded

url = "https://www.baidu.com/s?wd="

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

'Cookie': 'BIDUPSID=F5D1153D001F7BA92AFCBFF6B6995913; PSTM=1674736839; BD_UPN=12314753; BDUSS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BDUSS_BFESS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BAIDUID=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; BAIDUID_BFESS=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; channel=baidusearch; baikeVisitId=5ef65414-3e3e-44a2-9b90-6b842c55e2b7; BD_HOME=1; BA_HECTOR=ag0k2g8g8k2l2ka1252h04ai1idf2ef1o; ZFY=ar3QXfOOpNBISLowT0W9l3txojdtsgY2xonzVcZtFl8:C; delPer=0; BD_CK_SAM=1; PSINO=2; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; B64_BOT=1; BDRCVFR[tox4WRQ4-Km]=mk3SLVN4HKm; ab_sr=1.0.1_NjZlMTM5ZjY1OTQ5YzA5YmY2MmFhOTE2YTY1MGYzMmM5YTA1ZDBhMzY2Y2NiYjdhMTU1NWU1MzE3OWM4MWI3NThiY2JiYTczNDJhNWY3N2FiOWVjNDU5MWVlOTExM2UzMDRjODE4MWZmNDg1MWExNWY1NzY5ZGVhOThkZDFmNTJmYTZlODA3YTg0Y2IxNTI4NmFlODg0ZmE3MzY2ODhkZA==; BDRCVFR[-pGxjrCMryR]=mk3SLVN4HKm; BDRCVFR[feWj1Vr5u3D]=I67x6TjHwwYf0; H_PS_PSSID=36552_39109_38831_38880_39115_39118_39040_38917_26350_39138_39137_39101; COOKIE_SESSION=1858_0_7_9_1_6_1_0_7_6_33_1_0_0_0_0_1690964160_0_1691849533%7C9%23187206_15_1690528560%7C9; sug=3; sugstore=0; ORIGIN=0; bdime=0; H_PS_645EC=ad5fGs4ULmE01SpZnyJOET%2F2Sji4OEtA4J0bW6WTOQkhh3KutG2uM%2F3Ryak'

}

name = parse.quote("陈奕迅")

# Build the request object

geneRequest=request.Request(url=url+name,headers = header)

# Send the request

response = request.urlopen(geneRequest)

# Read the content

content = response.read().decode('utf-8')

print(content)

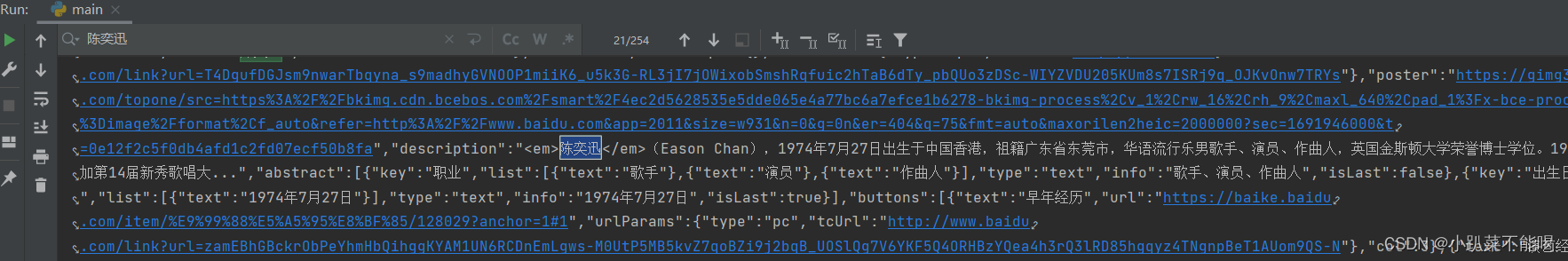

The printed output is shown in the screenshot above.
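quote() encodes a single value, which is why it fits the single-parameter case. The snippet below checks that it produces exactly the encoding in the Baidu URL shown earlier, and contrasts it with quote_plus(). Passing the raw Chinese URL to urlopen instead typically fails with a UnicodeEncodeError, because http.client sends the request line as ASCII.

```python
from urllib.parse import quote, quote_plus, unquote

keyword = "陈奕迅"
encoded = quote(keyword)            # UTF-8 bytes of each character written as %XX
search_url = "https://www.baidu.com/s?wd=" + encoded

print(encoded)                      # %E9%99%88%E5%A5%95%E8%BF%85
print(unquote(encoded))             # 陈奕迅
print(quote("a b/c"))               # a%20b/c   ("/" is left alone by default)
print(quote_plus("a b/c"))          # a+b%2Fc   (form style: space becomes "+")
```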

urlencode


Encoding multiple parameters

import urllib.request as request

import urllib.parse as parse

# Search Baidu with several parameters; each Chinese value has to be percent-encoded

url = "https://www.baidu.com/s?"

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

'Cookie': 'BIDUPSID=F5D1153D001F7BA92AFCBFF6B6995913; PSTM=1674736839; BD_UPN=12314753; BDUSS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BDUSS_BFESS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BAIDUID=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; BAIDUID_BFESS=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; channel=baidusearch; baikeVisitId=5ef65414-3e3e-44a2-9b90-6b842c55e2b7; BD_HOME=1; BA_HECTOR=ag0k2g8g8k2l2ka1252h04ai1idf2ef1o; ZFY=ar3QXfOOpNBISLowT0W9l3txojdtsgY2xonzVcZtFl8:C; delPer=0; BD_CK_SAM=1; PSINO=2; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; B64_BOT=1; BDRCVFR[tox4WRQ4-Km]=mk3SLVN4HKm; ab_sr=1.0.1_NjZlMTM5ZjY1OTQ5YzA5YmY2MmFhOTE2YTY1MGYzMmM5YTA1ZDBhMzY2Y2NiYjdhMTU1NWU1MzE3OWM4MWI3NThiY2JiYTczNDJhNWY3N2FiOWVjNDU5MWVlOTExM2UzMDRjODE4MWZmNDg1MWExNWY1NzY5ZGVhOThkZDFmNTJmYTZlODA3YTg0Y2IxNTI4NmFlODg0ZmE3MzY2ODhkZA==; BDRCVFR[-pGxjrCMryR]=mk3SLVN4HKm; BDRCVFR[feWj1Vr5u3D]=I67x6TjHwwYf0; H_PS_PSSID=36552_39109_38831_38880_39115_39118_39040_38917_26350_39138_39137_39101; COOKIE_SESSION=1858_0_7_9_1_6_1_0_7_6_33_1_0_0_0_0_1690964160_0_1691849533%7C9%23187206_15_1690528560%7C9; sug=3; sugstore=0; ORIGIN=0; bdime=0; H_PS_645EC=ad5fGs4ULmE01SpZnyJOET%2F2Sji4OEtA4J0bW6WTOQkhh3KutG2uM%2F3Ryak'

}

data={

'wd':'陈奕迅',

'sex':'男',

'location':"中国香港"

}

name = parse.urlencode(data)

# Build the request object

geneRequest=request.Request(url=url+name,headers = header)

# Send the request

response = request.urlopen(geneRequest)

# Read the content

content = response.read().decode('utf-8')

print(content)
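urlencode() takes a dict and builds the whole `key=value&key=value` query string, percent-encoding each value. The snippet below shows the string produced for two of the parameters above, decodes it back with parse_qs() as a round-trip check, and shows `doseq=True`, which turns a list value into repeated keys.

```python
from urllib.parse import parse_qs, urlencode

data = {"wd": "陈奕迅", "sex": "男"}
query = urlencode(data)          # "key=value" pairs joined with "&", values percent-encoded
url = "https://www.baidu.com/s?" + query

print(query)                     # wd=%E9%99%88%E5%A5%95%E8%BF%85&sex=%E7%94%B7
print(parse_qs(query))           # {'wd': ['陈奕迅'], 'sex': ['男']}

# With doseq=True a list value becomes repeated keys
print(urlencode({"tag": ["a", "b"]}, doseq=True))   # tag=a&tag=b
```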

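Sending a POST request with urllib

- The POST parameters must be encoded (urlencode them, then encode the string to bytes)
- The POST parameters go into the request object's data argument, i.e. the request body, not the URL
- This endpoint returns JSON, so the response text is converted with json.loads before printing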

import urllib.request as request

import urllib.parse as parse

import json

# Baidu Translate suggestion endpoint

url = "https://fanyi.baidu.com/sug"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

'Cookie': 'BIDUPSID=F5D1153D001F7BA92AFCBFF6B6995913; PSTM=1674736839; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; BDUSS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BDUSS_BFESS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BAIDUID=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; H_PS_PSSID=36552_39109_38831_38880_39115_39118_39040_38917_26350_39138_39137_39101; BAIDUID_BFESS=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; delPer=0; PSINO=2; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1691554432,1691567796,1691658560,1691850659; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1691850659; ab_sr=1.0.1_NzlhYWEzMDAyMWUzZTBhNGI1NTFkNDdiZThjNjA4YTVkMmZmMTM4YThkNDZjMzQ5ZWNmNDFmMmMxMzlmYjczMTllM2I0ZTM2ZjM4YzcwNzY3N2MzZjJjMjE1NDk2ODBlNTFlZWFmYTUzZjcyYTc4NjY1MmVmNDRlM2Y1ZTdhYjQ1MDhhODNiZGI2NDk0ZWVlNTBkYTJjMjZjNTUwNmFiOTk1OWY2YTdiYWI1MjY0Zjg4ZGExNmQ4YjA5MzBiNWI4'

}

data={

'kw': 'result'

}

# POST parameters must be encoded to bytes

data = parse.urlencode(data).encode('utf-8')

# POST parameters are not appended to the URL; they go into the request object's data argument

geneRequest = request.Request(url=url,data=data,headers=headers)

# Send the request to the server

response = request.urlopen(geneRequest)

content = response.read().decode('utf-8')

# JSON string -> Python object

obj= json.loads(content)

print(obj)

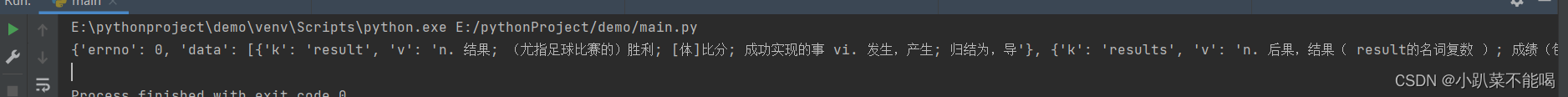

The printed output is shown in the screenshot above.
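The three POST rules can be checked end to end without Baidu. In the sketch below a local server stands in for an endpoint like fanyi.baidu.com/sug (an assumption for the demo): it parses the form body and echoes back, as JSON, the method and Content-Type it received. This also shows that urllib switches the method to POST and adds `Content-Type: application/x-www-form-urlencoded` by itself once `data` is set. `post_form` and `demo` are hypothetical helper names.

```python
import json
import threading
import urllib.parse as parse
import urllib.request as request
from http.server import BaseHTTPRequestHandler, HTTPServer


class JSONEchoHandler(BaseHTTPRequestHandler):
    """Local stand-in for a JSON endpoint such as fanyi.baidu.com/sug (demo assumption)."""

    def do_POST(self):
        length = int(self.headers["Content-Length"])
        form = parse.parse_qs(self.rfile.read(length).decode("utf-8"))
        body = json.dumps({
            "method": self.command,
            "content_type": self.headers["Content-Type"],
            "form": form,
        }).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


def post_form(url, fields):
    data = parse.urlencode(fields).encode("utf-8")   # rule 1: encode the parameters to bytes
    req = request.Request(url=url, data=data)         # rule 2: they go in the body, not the URL
    with request.urlopen(req) as response:
        return json.loads(response.read().decode("utf-8"))   # rule 3: JSON text -> dict


def demo():
    server = HTTPServer(("127.0.0.1", 0), JSONEchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return post_form(f"http://127.0.0.1:{server.server_port}/sug", {"kw": "result"})
    finally:
        server.shutdown()
        server.server_close()
```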

urllib exceptions: URLError and HTTPError


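1. HTTPError is a subclass of URLError.

2. Both are imported from the urllib.error package: urllib.error.HTTPError and urllib.error.URLError.

3. HTTP errors: an HTTPError means the server was reached but answered with an error status code (for example 404 or 500), which tells the visitor what went wrong with the page. A URLError covers failures where no usable HTTP response arrives, such as a DNS lookup failure or a refused connection.

4. A request sent through urllib can fail. To make the code more robust, catch the failure with try-except; there are two exception types, URLError and HTTPError.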

import urllib.request as request

import urllib.error as error

url = "https://teshi.lcds.com"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

'Cookie': 'BIDUPSID=F5D1153D001F7BA92AFCBFF6B6995913; PSTM=1674736839; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; BDUSS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BDUSS_BFESS=WdodDZGaVk0flJIYjkzNHMtZWtYTUpwaE1HNEc3VGU1bHEtQUhmQXNia0c4TlZrRVFBQUFBJCQAAAAAAAAAAAEAAACvXzmo0-DJ-sfrtuDLr771AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAZjrmQGY65kU; BAIDUID=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; H_PS_PSSID=36552_39109_38831_38880_39115_39118_39040_38917_26350_39138_39137_39101; BAIDUID_BFESS=F5D1153D001F7BA93B20A6BAB8379B5E:SL=0:NR=10:FG=1; delPer=0; PSINO=2; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1691554432,1691567796,1691658560,1691850659; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1691850659; ab_sr=1.0.1_NzlhYWEzMDAyMWUzZTBhNGI1NTFkNDdiZThjNjA4YTVkMmZmMTM4YThkNDZjMzQ5ZWNmNDFmMmMxMzlmYjczMTllM2I0ZTM2ZjM4YzcwNzY3N2MzZjJjMjE1NDk2ODBlNTFlZWFmYTUzZjcyYTc4NjY1MmVmNDRlM2Y1ZTdhYjQ1MDhhODNiZGI2NDk0ZWVlNTBkYTJjMjZjNTUwNmFiOTk1OWY2YTdiYWI1MjY0Zjg4ZGExNmQ4YjA5MzBiNWI4'

}

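try:

    geneRequest = request.Request(url=url, headers=headers)

    response = request.urlopen(geneRequest)

    content = response.read().decode('utf-8')

    print(content)

except error.URLError:

    # HTTPError is a subclass of URLError, so this branch catches both
    print('The system is being upgraded...')

Output: The system is being upgraded...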
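Because HTTPError is a subclass of URLError, code that wants to tell the two apart must catch HTTPError first. The sketch below (the `fetch` helper and the local 404 server are assumptions for the demo) reports which kind of failure happened: a 404 from a local server raises HTTPError, whose `code` holds the status, while a URL with an unknown scheme fails before any HTTP exchange and raises a plain URLError, whose `reason` explains why.

```python
import threading
import urllib.error as error
import urllib.request as request
from http.server import BaseHTTPRequestHandler, HTTPServer


def fetch(url, timeout=5):
    """Return ("ok", text), ("http", status_code) or ("url", reason)."""
    try:
        with request.urlopen(url, timeout=timeout) as response:
            return "ok", response.read().decode("utf-8")
    except error.HTTPError as e:
        # Catch the subclass first: the server answered, but with an error status
        return "http", e.code
    except error.URLError as e:
        # No usable HTTP response: DNS failure, refused connection, unknown scheme, ...
        return "url", str(e.reason)


class NotFoundHandler(BaseHTTPRequestHandler):
    """Local server that answers every GET with 404 (demo assumption)."""

    def do_GET(self):
        self.send_error(404, "no such page")

    def log_message(self, format, *args):
        pass


def demo():
    server = HTTPServer(("127.0.0.1", 0), NotFoundHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        http_case = fetch(f"http://127.0.0.1:{server.server_port}/missing")
    finally:
        server.shutdown()
        server.server_close()
    url_case = fetch("nosuchscheme://example")
    return http_case, url_case
```

If the order of the two `except` clauses were swapped, the URLError branch would also swallow the 404 and the status code would be lost.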

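Logging in with Cookies in urllib

Some scraping jobs need a logged-in session before the target page can be fetched. Zhihu is used as the example below.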

import urllib.request as request

url = "https://zhuanlan.zhihu.com/write"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

}

geneRequest = request.Request(url=url, headers=headers)

response = request.urlopen(geneRequest)

content = response.read().decode('utf-8')

with open("zhihu.html","w",encoding="utf-8") as fp:

    fp.write(content)

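The file saved locally contains the login page: the request was intercepted by the login check. Sending the logged-in Cookie fixes this; with the headers below, the article editor page can be accessed.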

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

# Copy this value from the browser's developer tools (Network tab) after logging in; it stops working when the session expires
'Cookie': '<your logged-in Zhihu Cookie string>'

}

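urllib Handler objects

Handler: for building more advanced requests. As the logic gets more complex, customizing the request object is no longer enough; dynamic cookies and proxies, for example, cannot be set up through request-object customization alone.

- Step 1: get a handler object
- Step 2: get an opener object
- Step 3: call the open method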

import urllib.request as request

url = "http://www.baidu.com"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

}

geneRequest = request.Request(url=url, headers=headers)

# handler -> build_opener -> open

# Get the handler object

handler = request.HTTPHandler()

# Get the opener object

opener = request.build_opener(handler)

# Call the open method

response = opener.open(geneRequest)

content = response.read().decode('utf-8')

print(content)
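Dynamic cookies, one of the cases handlers exist for, follow the same three steps with `http.cookiejar.CookieJar` and `request.HTTPCookieProcessor` in place of HTTPHandler. The sketch uses a hypothetical local site: `/login` sets a session cookie, and `/private` returns the real page only when that cookie comes back, otherwise a login prompt, much like the Zhihu example above. The jar stores the cookie from the login response and sends it automatically on later requests through the same opener.

```python
import threading
import urllib.request as request
from http.cookiejar import CookieJar
from http.server import BaseHTTPRequestHandler, HTTPServer


class LoginSite(BaseHTTPRequestHandler):
    """Hypothetical site: /login sets a cookie, other pages check for it."""

    def do_GET(self):
        if self.path == "/login":
            body = b"logged in"
            self.send_response(200)
            self.send_header("Set-Cookie", "session=abc123; Path=/")
        elif "session=abc123" in (self.headers.get("Cookie") or ""):
            body = b"private page"
            self.send_response(200)
        else:
            body = b"please log in"  # a login page served instead of the content
            self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


def demo():
    server = HTTPServer(("127.0.0.1", 0), LoginSite)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"

    jar = CookieJar()                            # stores cookies between requests
    handler = request.HTTPCookieProcessor(jar)   # step 1: handler object
    opener = request.build_opener(handler)       # step 2: opener object

    def get(path):
        with opener.open(base + path) as response:   # step 3: open()
            return response.read().decode("utf-8")

    try:
        before = get("/private")
        get("/login")             # the Set-Cookie response is saved into jar
        after = get("/private")   # the jar sends the cookie back automatically
    finally:
        server.shutdown()
        server.server_close()
    return before, after, [cookie.name for cookie in jar]
```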

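Proxies with urllib

What a proxy does (you access the site through someone else's IP):

- Get around IP-based access restrictions
- Access internal resources
- Speed up access
- Hide your real IP

Usage is the same as with a handler, plus the proxy configuration: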

import urllib.request as request

url = "http://www.baidu.com/s?wd=ip"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

}

geneRequest = request.Request(url=url, headers=headers)

# Proxy dictionary: {scheme: 'host:port'}

proxies={

'http':'189.127.90.85:8080'

}

handler = request.ProxyHandler(proxies=proxies)

opener = request.build_opener(handler)

# Call the open method

response = opener.open(geneRequest)

content = response.read().decode('utf-8')

print(content)

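The proxy address in proxies above was found online. Most free proxies don't work: if a request hangs for a long time or raises an error, the proxy is dead, and you may want to buy one instead.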
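ProxyHandler picks a proxy by the URL's scheme, so a dictionary with only an `'http'` key is used for http:// URLs and ignored for https:// ones (those need an `'https'` entry). The sketch below makes the proxy hop visible without a working public proxy: a local server plays the proxy (an assumption for the demo) and simply echoes the request path it receives. A client talking to a proxy sends the absolute URL in the request line, so the echoed path is the full Baidu URL, and Baidu itself is never contacted.

```python
import threading
import urllib.request as request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeProxyHandler(BaseHTTPRequestHandler):
    """Stand-in proxy: never contacts the target, just echoes the requested URL."""

    def do_GET(self):
        # Through a proxy the request line is "GET http://www.baidu.com/s?wd=ip HTTP/1.1",
        # so self.path holds the absolute URL rather than just "/s?wd=ip"
        body = self.path.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


def demo():
    proxy = HTTPServer(("127.0.0.1", 0), FakeProxyHandler)
    threading.Thread(target=proxy.serve_forever, daemon=True).start()
    proxies = {"http": f"127.0.0.1:{proxy.server_port}"}   # same shape as the dict above
    opener = request.build_opener(request.ProxyHandler(proxies=proxies))
    try:
        with opener.open("http://www.baidu.com/s?wd=ip", timeout=5) as response:
            return response.read().decode("utf-8")
    finally:
        proxy.shutdown()
        proxy.server_close()
```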

Proxy pool

In production you would keep a large pool of high-anonymity proxies. A simple implementation:

import urllib.request as request

import random

url = "http://www.baidu.com/s?wd=ip"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36',

}

geneRequest = request.Request(url=url, headers=headers)

# A simple proxy pool

proxies_pool = [

{'http': '189.127.90.85:8080'},

{'http': '36.88.170.170:8089'},

]

proxies = random.choice(proxies_pool)

handler = request.ProxyHandler(proxies=proxies)

opener = request.build_opener(handler)

# Call the open method

response = opener.open(geneRequest)

content = response.read().decode('utf-8')

print(content)
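random.choice() picks one proxy and the request simply fails if that proxy is dead. Assuming free proxies die often, a slightly sturdier sketch tries the whole pool in random order and moves on to the next entry on any OSError (URLError, HTTPError, refused connections and socket timeouts are all OSError subclasses). `open_via_pool` is a hypothetical helper; the demo pool holds one local port with nothing listening on it and one local stand-in proxy, so it runs without real proxies or network access.

```python
import random
import socket
import threading
import urllib.request as request
from http.server import BaseHTTPRequestHandler, HTTPServer


def open_via_pool(url, proxies_pool, timeout=5):
    """Try each proxy dict in random order; return (proxies, body) of the first success."""
    candidates = list(proxies_pool)
    random.shuffle(candidates)
    last_error = None
    for proxies in candidates:
        opener = request.build_opener(request.ProxyHandler(proxies=proxies))
        try:
            with opener.open(url, timeout=timeout) as response:
                return proxies, response.read().decode("utf-8")
        except OSError as exc:  # URLError, HTTPError and timeouts are all OSErrors
            last_error = exc
    raise RuntimeError("every proxy in the pool failed") from last_error


class StandInProxy(BaseHTTPRequestHandler):
    """Local stand-in for a working proxy (demo assumption)."""

    def do_GET(self):
        body = b"via proxy"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass


def unused_port():
    """Best effort: a local port with nothing listening on it."""
    with socket.socket() as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def demo():
    live = HTTPServer(("127.0.0.1", 0), StandInProxy)
    threading.Thread(target=live.serve_forever, daemon=True).start()
    working = {"http": f"127.0.0.1:{live.server_port}"}
    pool = [{"http": f"127.0.0.1:{unused_port()}"}, working]  # first entry is dead
    try:
        return open_via_pool("http://www.baidu.com/s?wd=ip", pool), working
    finally:
        live.shutdown()
        live.server_close()
```

With the pool from the article, the call would be `open_via_pool(url, proxies_pool)`.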
