In the previous post, I converted the ONNX model to an RKNN model:

Converting a yolov5 ONNX model to an RKNN model (爱钓鱼的歪猴's blog, CSDN)

This post covers running the RKNN model on an RV1126 development board.

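Contents

1. Run inference with the RKNN model on the PC and evaluate its accuracy

2. Precompile the model

3. Deploy the RKNN model to the RV1126 board (cross-compilation)

Download the cross-compilation environment

Download the C++ inference code for the RKNN model

Deploy the build output to the board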

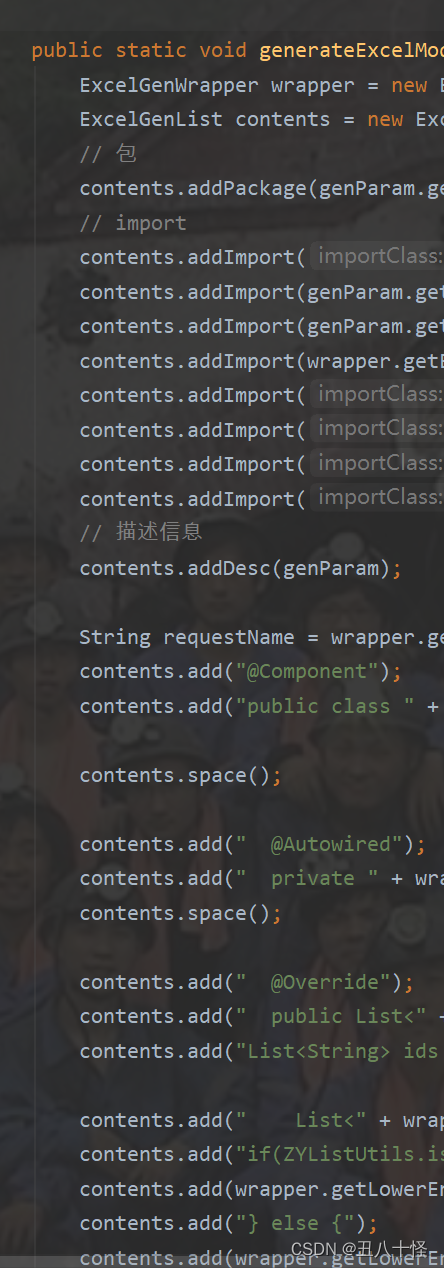

RKNN model inference code for the PC (Python)

RKNN model precompilation code (Python)

RKNN model C++ inference code: yolov5_detect.h

yolov5_detect.cpp

yolov5_detect_postprocess.h

yolov5_detect_postprocess.cpp

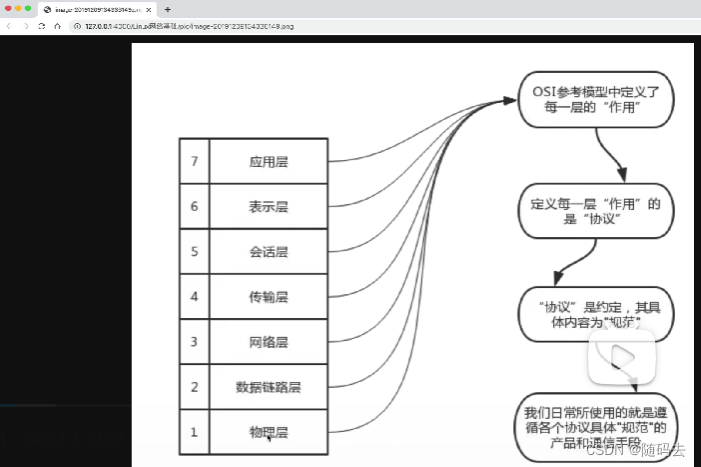

1. Run inference with the RKNN model on the PC and evaluate its accuracy

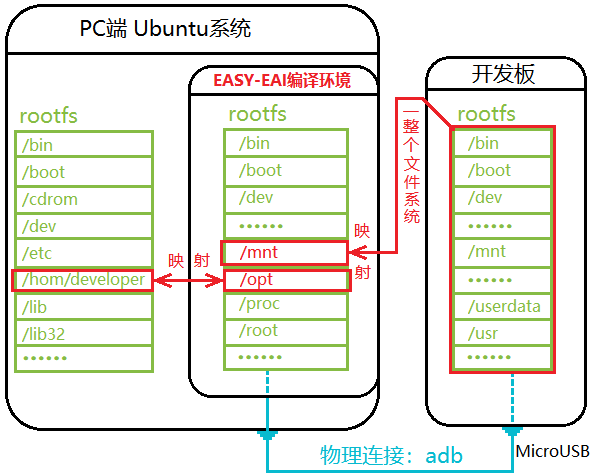

This step runs inside the Docker environment set up in the blog post linked above.

2. Precompile the model

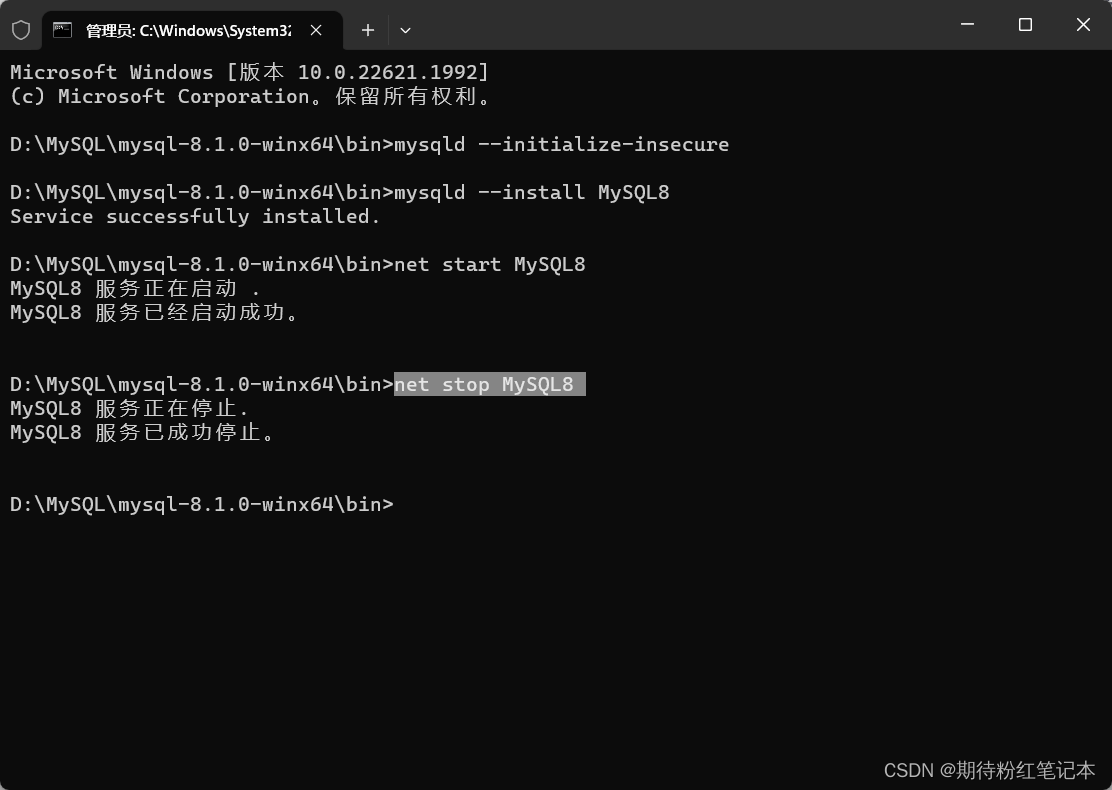

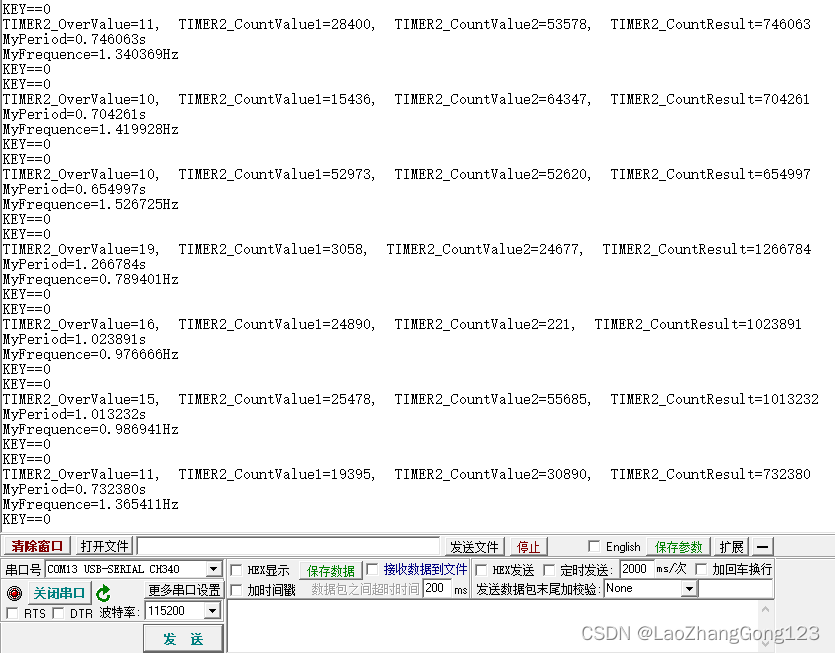

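After step 1, you will notice that running the RKNN model is slow, so the model should be precompiled. Precompilation goes through the runtime on the EASY EAI Nano board, so the adb connection between the board and the Ubuntu host must be stable.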

However, the Ubuntu host and the Docker container compete for the adb device, so stop the adb server on the Ubuntu host and install adb inside the Docker container with apt-get.
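Because precompilation only works while the board is visible to adb, it can help to check the output of `adb devices` from inside the container before running the script. The sketch below is a hypothetical helper, not part of the original tooling: it parses the text that `adb devices` prints (a header line followed by `serial<TAB>state` lines) and reports whether exactly one device is attached in the `device` state.

```python
from typing import List, Tuple


def parse_adb_devices(output: str) -> List[Tuple[str, str]]:
    """Turn the text printed by `adb devices` into (serial, state) pairs."""
    devices = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the header, and daemon start-up messages ("* daemon ...")
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split()
        if len(parts) >= 2:
            devices.append((parts[0], parts[1]))
    return devices


def board_ready(output: str) -> bool:
    """True when exactly one device is listed and its state is 'device'."""
    devices = parse_adb_devices(output)
    return len(devices) == 1 and devices[0][1] == "device"
```

To use it, pass in captured output such as `subprocess.run(["adb", "devices"], capture_output=True, text=True).stdout`. Any other state (for example `offline` or `unauthorized`) is treated as not ready.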

Stop the adb server on the Ubuntu host:

```shell
adb kill-server
```

Install adb inside the Docker container:

```shell
apt-get install adb
```

adb service

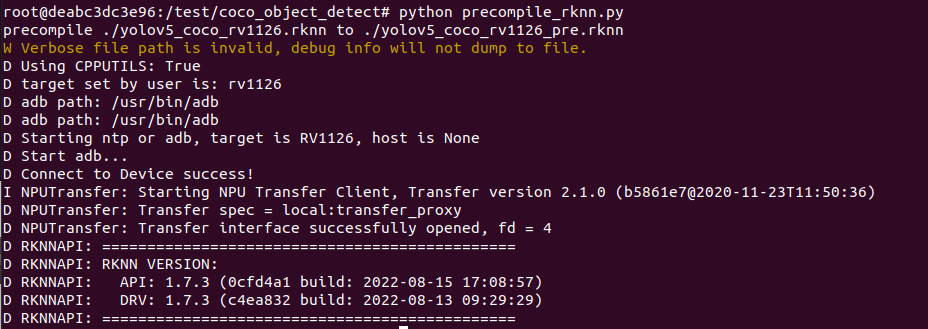

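```shell
adb devices
```

Run the precompile_rknn.py script to precompile the model:

```shell
python precompile_rknn.py
```

The output is shown below; the script produces the precompiled model yolov5_coco_rv1126_pre.rknn.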

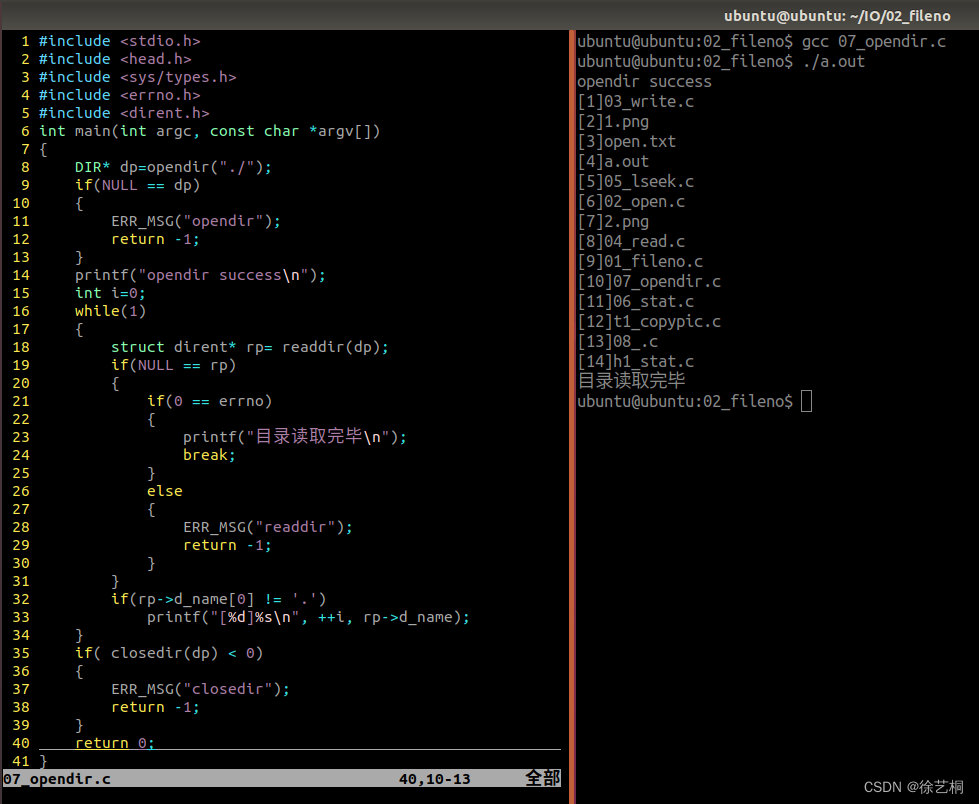

3. Deploy the RKNN model to the RV1126 board (cross-compilation)

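Download the cross-compilation environment:

Netdisk link: Baidu Netdisk (extraction code required)

Extraction code: i1ii

Remember to edit run.sh: set `VOL_SRC` to the directory where you stored the files above (here, `you`), and use `--user=root`. This maps the `you` directory into the container, which is very convenient.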

Note:

* Because the dependency libraries are deployed on the board, the adb connection must stay up during cross-compilation.

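Download the C++ inference code for the RKNN model:

Baidu Netdisk link: (Baidu Netdisk, extraction code: 1jfb).

After extracting it, build it inside the cross-compilation environment:

```shell
./build.sh
```

Deploy the build output to the board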

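In the cross-compilation environment, copy the build output to /mnt/userdata; this directory is mapped to the board:

```shell
cp yolov5_detect_demo_release/ /mnt/userdata/ -rf
```

Press Ctrl+Shift+T to open a new terminal tab, then run adb shell to enter the board's environment:

```shell
adb shell
```

Once on the board, change to the directory where the demo was uploaded: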

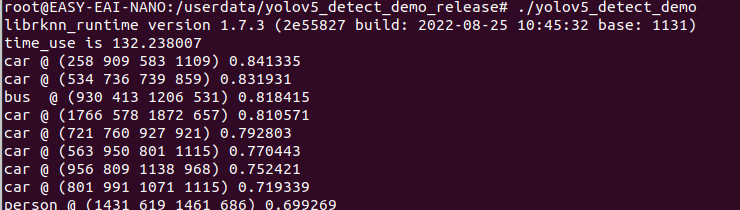

```shell
cd /userdata/yolov5_detect_demo_release/
```

Run the demo with:

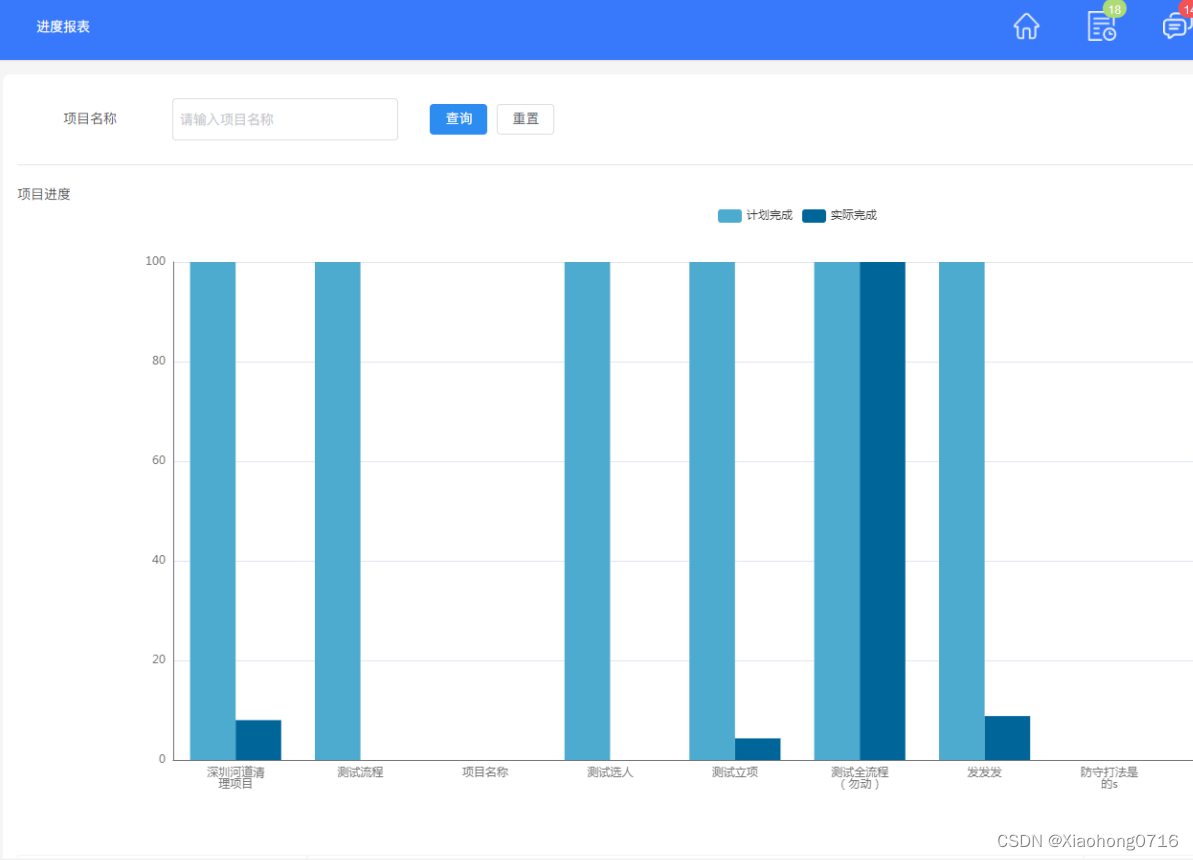

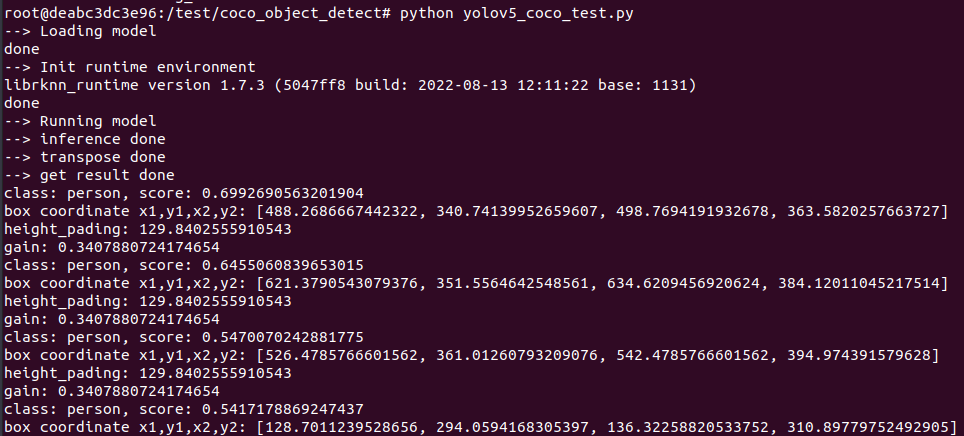

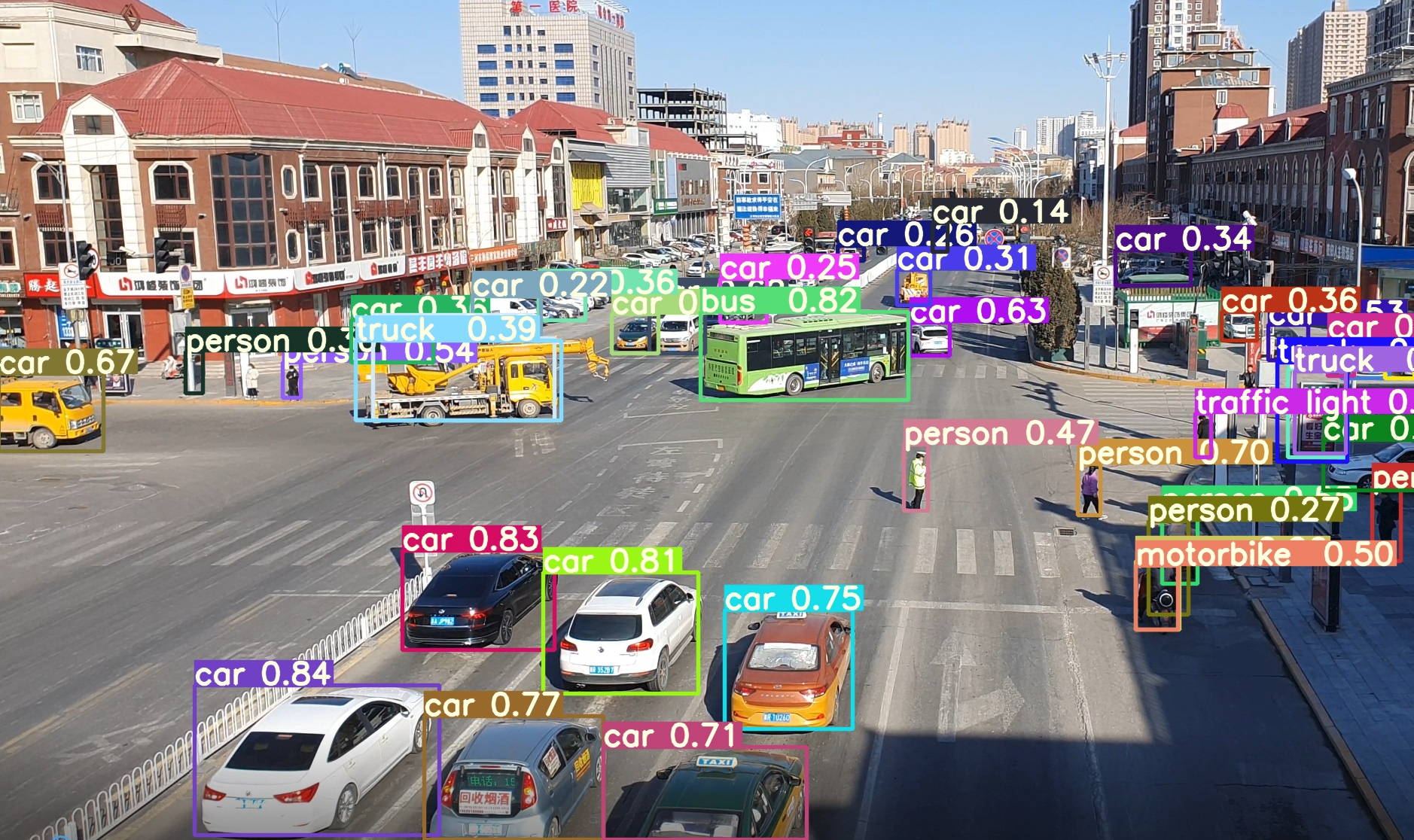

```shell
./yolov5_detect_demo
```

The execution result is shown in the screenshots; the algorithm takes about 50 ms.

Exit the board's shell and pull the result image back to the host:

```shell
exit
adb pull /userdata/yolov5_detect_demo_release/result.jpg .
```
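The run-and-retrieve steps above can also be scripted from the host. The sketch below is an illustration under assumptions: it presumes the build output is already on the board at `/userdata/yolov5_detect_demo_release` (the copy itself runs inside the cross-compilation container and is not included), and that the demo writes `result.jpg` into its working directory, as the `adb pull` step implies. The helper names `deploy_commands` and `run_all` are hypothetical. It builds the adb command lines and executes them with `subprocess.run` only when `dry_run` is False.

```python
import shlex
import subprocess
from typing import List

# Assumed board-side location of the demo, taken from the steps above.
REMOTE_DIR = "/userdata/yolov5_detect_demo_release"


def deploy_commands(remote_dir: str = REMOTE_DIR) -> List[List[str]]:
    """Hypothetical helper: adb commands that run the demo and fetch result.jpg."""
    run_demo = "cd {} && ./yolov5_detect_demo".format(shlex.quote(remote_dir))
    return [
        ["adb", "shell", run_demo],                        # runs the demo in the board's shell
        ["adb", "pull", remote_dir + "/result.jpg", "."],  # copies the result to the host
    ]


def run_all(cmds: List[List[str]], dry_run: bool = True) -> List[str]:
    """Print each command; execute it only when dry_run is False. Returns the printed lines."""
    lines = []
    for cmd in cmds:
        line = " ".join(shlex.quote(part) for part in cmd)
        print(line)
        lines.append(line)
        if not dry_run:
            subprocess.run(cmd, check=True)
    return lines
```

Keeping the demo invocation inside a single `adb shell` argument means the `cd` and the launch happen in the same board-side shell, matching the manual steps.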

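Compared with the ONNX model's results, the RKNN model is less sensitive to distant cars and less conspicuous people, but it detects nearby objects well, matching the ONNX model.

The full code follows.

RKNN model inference code for the PC (Python):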

```python
import sys
import random

import numpy as np
import cv2
from rknn.api import RKNN

RKNN_MODEL = 'yolov5_coco_rv1126.rknn'
IMG_PATH = './test.jpg'
DATASET = './dataset.txt'

BOX_THRESH = 0.25
NMS_THRESH = 0.6
IMG_SIZE = 640

CLASSES = ("person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat", "traffic light",
           "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant",
           "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",
           "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife",
           "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa",
           "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",
           "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush")


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def xywh2xyxy(x):
    # Convert [x, y, w, h] to [x1, y1, x2, y2]
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y


def process(input, mask, anchors):
    # input has shape (grid_h, grid_w, 3, 85)
    anchors = [anchors[i] for i in mask]
    grid_h, grid_w = map(int, input.shape[0:2])

    box_confidence = sigmoid(input[..., 4])
    box_confidence = np.expand_dims(box_confidence, axis=-1)
    box_class_probs = sigmoid(input[..., 5:])

    box_xy = sigmoid(input[..., :2]) * 2 - 0.5

    # Cell offsets (column, row) for every grid position; also correct for non-square grids
    col = np.tile(np.arange(0, grid_w), grid_h).reshape(-1, grid_w)
    row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_w)
    col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    grid = np.concatenate((col, row), axis=-1)

    box_xy += grid
    box_xy *= int(IMG_SIZE / grid_h)  # stride: 8, 16 or 32

    box_wh = pow(sigmoid(input[..., 2:4]) * 2, 2)
    box_wh = box_wh * anchors

    box = np.concatenate((box_xy, box_wh), axis=-1)
    return box, box_confidence, box_class_probs


def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with box threshold. It's a bit different from the original yolov5 post process!
    # Arguments
        boxes: ndarray, boxes of objects.
        box_confidences: ndarray, confidences of objects.
        box_class_probs: ndarray, class_probs of objects.
    # Returns
        boxes: ndarray, filtered boxes.
        classes: ndarray, classes for boxes.
        scores: ndarray, scores for boxes.
    """
    box_scores = box_confidences * box_class_probs
    box_classes = np.argmax(box_class_probs, axis=-1)
    box_class_scores = np.max(box_scores, axis=-1)
    pos = np.where(box_confidences[..., 0] >= BOX_THRESH)

    boxes = boxes[pos]
    classes = box_classes[pos]
    scores = box_class_scores[pos]
    return boxes, classes, scores


def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.
    # Arguments
        boxes: ndarray, boxes of objects (x1, y1, x2, y2).
        scores: ndarray, scores of objects.
    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep


def yolov5_post_process(input_data):
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    # NMS per class
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]

        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)
    return boxes, classes, scores


def scale_coords(x1, y1, x2, y2, dst_width, dst_height):
    # Undo the letterbox: remove the padding, then divide by the resize gain.
    # Handles landscape, portrait and square images.
    gain = IMG_SIZE / max(dst_width, dst_height)
    pad_x = (IMG_SIZE - dst_width * gain) / 2
    pad_y = (IMG_SIZE - dst_height * gain) / 2

    dst_x1 = int((x1 - pad_x) / gain)
    dst_y1 = int((y1 - pad_y) / gain)
    dst_x2 = int((x2 - pad_x) / gain)
    dst_y2 = int((y2 - pad_y) / gain)
    return dst_x1, dst_y1, dst_x2, dst_y2


def plot_one_box(x, img, color=None, label=None, line_thickness=None):
    tl = line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1  # line/font thickness
    color = color or [random.randint(0, 255) for _ in range(3)]
    c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))
    cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)
    if label:
        tf = max(tl - 1, 1)  # font thickness
        t_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]
        c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3
        cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA)  # filled
        cv2.putText(img, label, (c1[0], c1[1] - 2), 0, tl / 3, [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)


def draw(image, boxes, scores, classes):
    """Draw the boxes on the image.
    # Arguments
        image: original image.
        boxes: ndarray, boxes of objects.
        scores: ndarray, scores of objects.
        classes: ndarray, classes of objects.
    """
    for box, score, cl in zip(boxes, scores, classes):
        x1, y1, x2, y2 = box
        print('class: {}, score: {}'.format(CLASSES[cl], score))
        print('box coordinate x1,y1,x2,y2: [{}, {}, {}, {}]'.format(x1, y1, x2, y2))
        x1, y1, x2, y2 = int(x1), int(y1), int(x2), int(y2)

        dst_x1, dst_y1, dst_x2, dst_y2 = scale_coords(x1, y1, x2, y2, image.shape[1], image.shape[0])
        plot_one_box((dst_x1, dst_y1, dst_x2, dst_y2), image, label='{0} {1:.2f}'.format(CLASSES[cl], score))
        # Alternative without plot_one_box:
        # cv2.rectangle(image, (dst_x1, dst_y1), (dst_x2, dst_y2), (255, 0, 0), 2)
        # cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score), (dst_x1, dst_y1 - 6),
        #             cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)


def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
    # Resize to fit new_shape while keeping the aspect ratio, then pad the rest
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    # Scale ratio (new / old)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding
    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)


if __name__ == '__main__':
    # Create RKNN object
    rknn = RKNN()

    print('--> Loading model')
    ret = rknn.load_rknn(RKNN_MODEL)
    if ret != 0:
        print('load rknn model failed')
        sys.exit(ret)
    print('done')

    # Init runtime environment (no target given: runs on the PC simulator)
    print('--> Init runtime environment')
    ret = rknn.init_runtime()
    # ret = rknn.init_runtime('rv1126', device_id='1126')
    if ret != 0:
        print('Init runtime environment failed')
        sys.exit(ret)
    print('done')

    # Set inputs: letterbox to IMG_SIZE x IMG_SIZE and convert BGR -> RGB
    img = cv2.imread(IMG_PATH)
    letter_img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))
    letter_img = cv2.cvtColor(letter_img, cv2.COLOR_BGR2RGB)

    # Inference
    print('--> Running model')
    outputs = rknn.inference(inputs=[letter_img])
    print('--> inference done')

    # Post process: reshape each output to (3, 85, H, W), then transpose to (H, W, 3, 85)
    input_data = []
    for out in outputs[:3]:
        out = out.reshape([3, -1] + list(out.shape[-2:]))
        input_data.append(np.transpose(out, (2, 3, 0, 1)))
    print('--> transpose done')

    boxes, classes, scores = yolov5_post_process(input_data)
    print('--> get result done')

    if boxes is not None:
        draw(img, boxes, scores, classes)
    cv2.imwrite('./result.jpg', img)
    # img is already BGR, so show it directly (with the boxes drawn on it)
    cv2.imshow("post process result", img)
    cv2.waitKey(0)

    rknn.release()
```
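The `letterbox()` and `scale_coords()` functions above are inverses of each other: boxes predicted on the 640x640 letterboxed image must have the padding removed and then be divided by the resize gain to land on the original image. The stdlib-only sketch below (the function names are hypothetical) shows that mapping for a single point. It uses the exact half-padding; the script's `letterbox()` rounds the padding to whole pixels, so real results can differ by up to a pixel.

```python
def letterbox_params(img_w, img_h, size=640):
    """Resize gain and per-side left/top padding used to fit img_w x img_h into size x size."""
    r = min(size / img_w, size / img_h)
    new_w, new_h = round(img_w * r), round(img_h * r)
    pad_x = (size - new_w) / 2  # padding on each of left/right
    pad_y = (size - new_h) / 2  # padding on each of top/bottom
    return r, pad_x, pad_y


def to_model(x, y, img_w, img_h, size=640):
    """Original-image point -> letterboxed model-input point."""
    r, pad_x, pad_y = letterbox_params(img_w, img_h, size)
    return x * r + pad_x, y * r + pad_y


def to_original(x, y, img_w, img_h, size=640):
    """Model-input point -> original-image point (what scale_coords() does per corner)."""
    r, pad_x, pad_y = letterbox_params(img_w, img_h, size)
    return (x - pad_x) / r, (y - pad_y) / r
```

For a 1280x720 frame the gain is 0.5 and 140 rows of padding go above (and below) the image, so the point (100, 200) lands at (50, 240) in the model input, and mapping it back recovers (100, 200). For a portrait 720x1280 frame the padding moves to the left and right instead, which is the case the C++ `scale_coords()` handles in its `else` branch.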

RKNN model precompilation code (Python):

```python
import os

from rknn.api import RKNN


def precompile_file(fi, fo, target):
    """Load an RKNN model, precompile it on the connected board, and export it."""
    print("precompile {} to {}".format(fi, fo))
    rknn = RKNN(verbose=True)
    ret = rknn.load_rknn(fi)
    if ret != 0:
        print('load rknn model failed')
        rknn.release()
        return ret
    # rknn2precompile=True needs the target board reachable over adb
    ret = rknn.init_runtime(target=target, rknn2precompile=True)
    if ret != 0:
        print('init runtime failed')
        rknn.release()
        return ret
    rknn.export_rknn_precompile_model(export_path=fo)
    rknn.release()
    return 0


def precompile_dir(d, out_dir, target):
    """Precompile every model file in directory <d> into <out_dir>."""
    file_list = os.listdir(d)
    file_count = len(file_list)
    for i, name in enumerate(file_list):
        precompile_file(os.path.join(d, name), os.path.join(out_dir, name), target)
        print('Progress:%d/%d' % (i + 1, file_count))
    print('Directory <%s> has been precompiled.' % d)


if __name__ == '__main__':
    precompile_file('./yolov5_coco_rv1126.rknn', './yolov5_coco_rv1126_pre.rknn', 'rv1126')
```
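Both `process()` in the PC script above and `process_u8()`/`process_fp()` in the C++ post-processing below decode each raw prediction the same way: the center offset is `sigmoid(t) * 2 - 0.5`, added to the grid cell index and multiplied by the stride; the size is `(sigmoid(t) * 2)^2` times the anchor. The sketch below restates that decoding for a single prediction in plain Python. The function names are hypothetical; the anchors and strides are the ones used in the code.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(tx, ty, tw, th, col, row, stride, anchor_w, anchor_h):
    """Decode one raw YOLOv5 prediction into (cx, cy, w, h) in model-input pixels."""
    cx = (sigmoid(tx) * 2.0 - 0.5 + col) * stride  # center offset ranges from -0.5 to 1.5 cells
    cy = (sigmoid(ty) * 2.0 - 0.5 + row) * stride
    w = (sigmoid(tw) * 2.0) ** 2 * anchor_w        # size ranges from 0 to 4x the anchor
    h = (sigmoid(th) * 2.0) ** 2 * anchor_h
    return cx, cy, w, h


def to_xyxy(cx, cy, w, h):
    """Center/size -> corner coordinates (x1, y1, x2, y2)."""
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2
```

With all raw values at 0 (sigmoid = 0.5) the box sits at the center of its cell with exactly the anchor's size: cell (row 5, column 10) on the stride-8 grid with anchor (10, 13) decodes to center (84, 44) and size 10x13.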

RKNN model C++ inference code: yolov5_detect.h

```cpp
#ifndef _YOLOV5_DETECT_H_
#define _YOLOV5_DETECT_H_

#include "yolov5_detect_postprocess.h"
#include "rknn_api.h"
#include <opencv2/opencv.hpp>

/*
 * COCO detection: initialization
 * ctx:  [out] rknn_context handle, filled in by this function
 * path: [in]  path to the model file
 */
int coco_detect_init(rknn_context *ctx, const char *path);

/*
 * COCO detection: run
 * ctx:                 [in]  rknn_context handle
 * input_image:         [in]  input image (cv::Mat is OpenCV's image type)
 * detect_result_group: [out] detected boxes
 */
int coco_detect_run(rknn_context ctx, cv::Mat input_image, coco_detect_result_group_t *detect_result_group);

/*
 * COCO detection: release
 * ctx: [in] rknn_context handle
 */
int coco_detect_release(rknn_context ctx);

#endif
```

yolov5_detect.cpp

```cpp
#include <iostream>
#include <fstream>
#include <vector>
#include <cstdint>
#include <cstring>
#include <stdio.h>
#include <stdlib.h>
#include <math.h>
#include <unistd.h>
#include <time.h>
#include <fcntl.h>
#include <sys/time.h>
#include <opencv2/opencv.hpp>
#include "yolov5_detect.h"
#include "rknn_api.h"

using namespace std;
using namespace cv;

// Debug helper: print one tensor's attributes (dims printed from dims[3] down to dims[0])
static void printRKNNTensor(rknn_tensor_attr *attr)
{
    printf("index=%d name=%s n_dims=%d dims=[%d %d %d %d] n_elems=%d size=%d "
           "fmt=%d type=%d qnt_type=%d fl=%d zp=%d scale=%f\n",
           attr->index, attr->name, attr->n_dims, attr->dims[3], attr->dims[2],
           attr->dims[1], attr->dims[0], attr->n_elems, attr->size, attr->fmt, attr->type,
           attr->qnt_type, attr->fl, attr->zp, attr->scale);
}

// Resize and pad the image to the model's (square) input size
static int letter_box(cv::Mat input_image, cv::Mat *output_image, int model_input_size)
{
    // Scale ratio: take the smaller one so the whole image fits
    int input_width = input_image.cols;
    int input_height = input_image.rows;
    float ratio = min((float)model_input_size / input_width, (float)model_input_size / input_height);

    // Size after scaling
    int new_width = round(ratio * input_width);
    int new_height = round(ratio * input_height);

    // After scaling, at least one side already matches the model input; pad the other.
    // With an odd amount of padding the extra pixel goes to the bottom/right.
    int top = 0, bottom = 0, left = 0, right = 0;
    if (new_width >= new_height) // width fits, pad the height
    {
        int height_padding = new_width - new_height;
        top = height_padding / 2;
        bottom = height_padding - top;
    }
    else // height fits, pad the width
    {
        int width_padding = new_height - new_width;
        left = width_padding / 2;
        right = width_padding - left;
    }

    // Resize, then pad with black
    cv::Mat resize_img;
    cv::resize(input_image, resize_img, cv::Size(new_width, new_height));
    cv::copyMakeBorder(resize_img, *output_image, top, bottom, left, right, cv::BORDER_CONSTANT, cv::Scalar(0, 0, 0));
    return 0;
}

// The model is stored as a binary file; load all of it into memory and initialize the context
int coco_detect_init(rknn_context *ctx, const char *path)
{
    // Open the model file
    FILE *fp = fopen(path, "rb");
    if (fp == NULL)
    {
        printf("fopen %s fail!\n", path);
        return -1;
    }

    // Get the file length: seek to the end, read the offset, seek back to the start
    fseek(fp, 0, SEEK_END);
    long model_len = ftell(fp);
    fseek(fp, 0, SEEK_SET);

    // Allocate a buffer as large as the model and read the file into it
    unsigned char *model_data = (unsigned char *)malloc(model_len);
    if (model_data == NULL)
    {
        fclose(fp);
        return -1;
    }
    if ((size_t)model_len != fread(model_data, 1, model_len, fp))
    {
        printf("fread %s fail!\n", path);
        free(model_data);
        fclose(fp);
        return -1;
    }
    fclose(fp);

    // Initialize the RKNN context from the model data
    int ret = rknn_init(ctx, model_data, model_len, RKNN_FLAG_PRIOR_MEDIUM);
    free(model_data);
    if (ret < 0)
    {
        printf("rknn_init fail! ret=%d\n", ret);
        return -1;
    }
    return 0;
}

// Map box coordinates from the letterboxed model input back to the original image
static int scale_coords(coco_detect_result_group_t *detect_result_group, int img_width, int img_height, int model_size)
{
    for (int i = 0; i < detect_result_group->count; i++)
    {
        coco_detect_result_t *det_result = &(detect_result_group->results[i]);
        int x1 = det_result->box.left;
        int y1 = det_result->box.top;
        int x2 = det_result->box.right;
        int y2 = det_result->box.bottom;

        if (img_width >= img_height)
        {
            // Landscape: the padding was added above and below
            float gain = (float)model_size / img_width;
            int resized_height = img_height * gain;
            int height_padding = (model_size - resized_height) / 2;
            y1 = y1 - height_padding;
            y2 = y2 - height_padding;
            x1 = int(x1 / gain);
            y1 = int(y1 / gain);
            x2 = int(x2 / gain);
            y2 = int(y2 / gain);
        }
        else
        {
            // Portrait: the padding was added left and right
            float gain = (float)model_size / img_height;
            int resized_width = img_width * gain;
            int width_padding = (model_size - resized_width) / 2;
            x1 = x1 - width_padding;
            x2 = x2 - width_padding;
            x1 = int(x1 / gain);
            y1 = int(y1 / gain);
            x2 = int(x2 / gain);
            y2 = int(y2 / gain);
        }

        det_result->box.left = x1;
        det_result->box.top = y1;
        det_result->box.right = x2;
        det_result->box.bottom = y2;
    }
    return 0;
}

int coco_detect_run(rknn_context ctx, cv::Mat input_image, coco_detect_result_group_t *detect_result_group)
{
    const float nms_threshold = 0.5;
    const float conf_threshold = 0.2;
    int ret;

    int img_width = input_image.cols;
    int img_height = input_image.rows;

    // Query the SDK version
    rknn_sdk_version version;
    ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));
    if (ret < 0)
    {
        printf("rknn_query(SDK_VERSION) error ret=%d\n", ret);
        return -1;
    }
    // printf("sdk version: %s driver version: %s\n", version.api_version, version.drv_version);

    // Query the number of input and output tensors
    rknn_input_output_num io_num;
    ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
    if (ret < 0)
    {
        printf("rknn_query(IN_OUT_NUM) error ret=%d\n", ret);
        return -1;
    }
    // printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

    // Query and store each input tensor's attributes:
    // index, format (fmt), data type, and dims (width, height, channels)
    std::vector<rknn_tensor_attr> input_attrs(io_num.n_input); // value-initialized (zeroed)
    for (uint32_t i = 0; i < io_num.n_input; i++)
    {
        input_attrs[i].index = i;
        ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &input_attrs[i], sizeof(rknn_tensor_attr));
        if (ret < 0)
        {
            printf("rknn_query(INPUT_ATTR) error ret=%d\n", ret);
            return -1;
        }
        // printRKNNTensor(&input_attrs[i]);
    }

    // Same for the output tensors (their scale and zero point are needed for post-processing)
    std::vector<rknn_tensor_attr> output_attrs(io_num.n_output);
    for (uint32_t i = 0; i < io_num.n_output; i++)
    {
        output_attrs[i].index = i;
        ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &output_attrs[i], sizeof(rknn_tensor_attr));
        if (ret < 0)
        {
            printf("rknn_query(OUTPUT_ATTR) error ret=%d\n", ret);
            return -1;
        }
        // printRKNNTensor(&output_attrs[i]);
    }

    // Read the input width and height from the input tensor attributes
    int input_channel = 3;
    int input_width = 0;
    int input_height = 0;
    if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) // check the tensor layout
    {
        // printf("model is NCHW input fmt\n");
        input_width = input_attrs[0].dims[0];
        input_height = input_attrs[0].dims[1];
    }
    else
    {
        // printf("model is NHWC input fmt\n");
        input_width = input_attrs[0].dims[1];
        input_height = input_attrs[0].dims[2];
    }
    // printf("model input height=%d, width=%d, channel=%d\n", input_height, input_width, input_channel);

    // Init input tensor: one uint8 NHWC image
    rknn_input inputs[1];
    memset(inputs, 0, sizeof(inputs));
    inputs[0].index = 0;
    inputs[0].type = RKNN_TENSOR_UINT8;
    inputs[0].size = input_width * input_height * input_channel;
    inputs[0].fmt = RKNN_TENSOR_NHWC;
    inputs[0].pass_through = 0;

    // Init output tensors: keep the raw quantized values (no conversion to float)
    std::vector<rknn_output> outputs(io_num.n_output);
    for (uint32_t i = 0; i < io_num.n_output; i++)
    {
        outputs[i].want_float = 0;
    }

    // Letterbox the input image to the model's input size
    cv::Mat letter_image;
    letter_box(input_image, &letter_image, input_width);
    inputs[0].buf = letter_image.data; // the preprocessed image becomes the input buffer

    // Bind the input to the context, run inference, and fetch the outputs
    ret = rknn_inputs_set(ctx, io_num.n_input, inputs);
    if (ret < 0)
    {
        printf("rknn_inputs_set error ret=%d\n", ret);
        return -1;
    }
    ret = rknn_run(ctx, NULL); // forward pass on the input data
    if (ret < 0)
    {
        printf("rknn_run error ret=%d\n", ret);
        return -1;
    }
    ret = rknn_outputs_get(ctx, io_num.n_output, outputs.data(), NULL);
    if (ret < 0)
    {
        printf("rknn_outputs_get error ret=%d\n", ret);
        return -1;
    }

    // Per-output quantization parameters for post-processing.
    // Quantization maps a float f to an integer q = f / scale + zp:
    // scale is the float step per integer step, and the zero point zp is the integer that represents 0.0.
    std::vector<float> out_scales;
    std::vector<uint8_t> out_zps;
    for (uint32_t i = 0; i < io_num.n_output; ++i)
    {
        out_scales.push_back(output_attrs[i].scale);
        out_zps.push_back(output_attrs[i].zp);
    }

    // Post-process: decode, threshold, NMS
    yolov5_post_process_u8((uint8_t *)outputs[0].buf, (uint8_t *)outputs[1].buf, (uint8_t *)outputs[2].buf, input_height, input_width,
                           conf_threshold, nms_threshold, out_zps, out_scales, detect_result_group);
    // Float variant, if want_float = 1 is used instead:
    // yolov5_post_process_fp((float *)outputs[0].buf, (float *)outputs[1].buf, (float *)outputs[2].buf, input_height, input_width,
    //                        conf_threshold, nms_threshold, detect_result_group);

    // Release the output buffers
    rknn_outputs_release(ctx, io_num.n_output, outputs.data());

    // Map the boxes back to the original image size
    scale_coords(detect_result_group, img_width, img_height, input_width);
    return 0;
}

int coco_detect_release(rknn_context ctx)
{
    rknn_destroy(ctx);
    return 0;
}
```
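The line in `process_u8()` (in yolov5_detect_postprocess.cpp) that reads `box_confidence` is easier to follow once the output layout and the quantization are written out. Assuming each uint8 output is laid out as `[3 * 85, grid_h, grid_w]` (the same layout the PC script reshapes to `(3, 85, H, W)`), attribute `c` of anchor `a` at cell `(i, j)` sits at `(85 * a + c) * grid_h * grid_w + i * grid_w + j`. Because dequantization (with a positive scale) and sigmoid are both increasing, the threshold can be mapped once into the quantized domain (`unsigmoid`, then quantize) and compared with raw bytes. The sketch below reproduces these helpers in Python; the zero point and scale in the example are made-up values, not ones read from the model.

```python
import math

NUM_ATTRS = 85  # x, y, w, h, objectness + 80 COCO class scores


def flat_index(anchor, attr, row, col, grid_h, grid_w):
    """Offset of (anchor, attribute, row, col) in a flat [3*85, grid_h, grid_w] tensor."""
    grid_len = grid_h * grid_w
    return (NUM_ATTRS * anchor + attr) * grid_len + row * grid_w + col


def quantize(f, zp, scale):
    """Affine float -> uint8: f / scale + zp, clipped to 0..255 and truncated like the C++ code."""
    return int(min(max(f / scale + zp, 0.0), 255.0))


def dequantize(q, zp, scale):
    """Affine uint8 -> float: (q - zp) * scale."""
    return (q - zp) * scale


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def logit(p):
    """Inverse of sigmoid; the C++ code calls this unsigmoid()."""
    return -math.log(1.0 / p - 1.0)
```

With the hypothetical zp = 200 and scale = 0.1, the 0.2 objectness threshold used by `coco_detect_run()` becomes the byte 186. Because of the truncation, the byte 186 itself decodes to a score slightly below 0.2, so the quantized comparison is a close approximation rather than an exact one.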

yolov5_detect_postprocess.h

```cpp
#ifndef _YOLOV5_DETECT_POSTPROCESS_H_
#define _YOLOV5_DETECT_POSTPROCESS_H_

#include <stdint.h>
#include <vector>

#define COCO_NAME_MAX_SIZE 16
#define COCO_NUMB_MAX_SIZE 200
#define COCO_CLASS_NUM 80
#define COCO_PROP_BOX_SIZE (5 + COCO_CLASS_NUM) // x, y, w, h, objectness + class scores

typedef struct _COCO_BOX_RECT
{
    int left;
    int right;
    int top;
    int bottom;
} COCO_BOX_RECT;

typedef struct __coco_detect_result_t
{
    char name[COCO_NAME_MAX_SIZE];
    int class_index;
    COCO_BOX_RECT box;
    float prop;
} coco_detect_result_t;

typedef struct _detect_result_group_t
{
    int id;
    int count;
    coco_detect_result_t results[COCO_NUMB_MAX_SIZE];
} coco_detect_result_group_t;

int yolov5_post_process_u8(uint8_t *input0, uint8_t *input1, uint8_t *input2, int model_in_h, int model_in_w,
                           float conf_threshold, float nms_threshold,
                           std::vector<uint8_t> &qnt_zps, std::vector<float> &qnt_scales,
                           coco_detect_result_group_t *group);

int yolov5_post_process_fp(float *input0, float *input1, float *input2, int model_in_h, int model_in_w,
                           float conf_threshold, float nms_threshold,
                           coco_detect_result_group_t *group);

#endif // _YOLOV5_DETECT_POSTPROCESS_H_
```

yolov5_detect_postprocess.cpp

// Copyright (c) 2021 by Rockchip Electronics Co., Ltd. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <string.h>

#include <sys/time.h>

#include <vector>

#include "yolov5_detect_postprocess.h"

#include <stdint.h>

static char labels[COCO_CLASS_NUM][30] = {"person", "bicycle", "car","motorbike ","aeroplane ","bus ","train","truck ","boat","traffic light",

"fire hydrant","stop sign ","parking meter","bench","bird","cat","dog ","horse ","sheep","cow","elephant",

"bear","zebra ","giraffe","backpack","umbrella","handbag","tie","suitcase","frisbee","skis","snowboard","sports ball","kite",

"baseball bat","baseball glove","skateboard","surfboard","tennis racket","bottle","wine glass","cup","fork","knife",

"spoon","bowl","banana","apple","sandwich","orange","broccoli","carrot","hot dog","pizza ","donut","cake","chair","sofa",

"pottedplant","bed","diningtable","toilet ","tvmonitor","laptop","mouse","remote ","keyboard ","cell phone","microwave ",

"oven ","toaster","sink","refrigerator ","book","clock","vase","scissors ","teddy bear ","hair drier", "toothbrush"};

const int anchor0[6] = {10, 13, 16, 30, 33, 23};

const int anchor1[6] = {30, 61, 62, 45, 59, 119};

const int anchor2[6] = {116, 90, 156, 198, 373, 326};

// 将一个浮点数val限制在一个指定的最小值min和最大值max的范围内

inline static int clamp(float val, int min, int max)

{

return val > min ? (val < max ? val : max) : min;

}

// 计算两个矩形的重叠度

// 在计算之前需要对x轴进行排序,左边那个矩形是0,右边那个是1

// yolov5输出结果,检测框已经经过排序处理,按照从左到右,从上到下的顺序排列

static float CalculateOverlap(float xmin0, float ymin0, float xmax0, float ymax0, float xmin1, float ymin1, float xmax1, float ymax1)

{

float w = fmax(0.f, fmin(xmax0, xmax1) - fmax(xmin0, xmin1) + 1.0); // 重叠部分的宽

float h = fmax(0.f, fmin(ymax0, ymax1) - fmax(ymin0, ymin1) + 1.0); // 重叠部分的高

float i = w * h; // 重叠部分的面积,即两矩形交集

float u = (xmax0 - xmin0 + 1.0) * (ymax0 - ymin0 + 1.0) + (xmax1 - xmin1 + 1.0) * (ymax1 - ymin1 + 1.0) - i; // 两矩形并集面积

return u <= 0.f ? 0.f : (i / u); // 交并比

}

//

static int nms(int validCount, std::vector<float> &outputLocations, std::vector<int> &order, float threshold)

{

for (int i = 0; i < validCount; ++i) // 遍历所有检测框

{

if (order[i] == -1)

{

continue;

}

int n = order[i]; // 检测框的索引顺序,即对应于outputLocations中检测框的顺序

for (int j = i + 1; j < validCount; ++j) // 从 n+1 开始遍历剩余的检测框

{

int m = order[j];

if (m == -1)

{

continue;

}

// 当前检测框

float xmin0 = outputLocations[n * 4 + 0]; // xmin

float ymin0 = outputLocations[n * 4 + 1]; // ymin

float xmax0 = outputLocations[n * 4 + 0] + outputLocations[n * 4 + 2]; // xmin + w = xmax

float ymax0 = outputLocations[n * 4 + 1] + outputLocations[n * 4 + 3]; // ymin + h = ymax

// 剩余检测框

float xmin1 = outputLocations[m * 4 + 0];

float ymin1 = outputLocations[m * 4 + 1];

float xmax1 = outputLocations[m * 4 + 0] + outputLocations[m * 4 + 2];

float ymax1 = outputLocations[m * 4 + 1] + outputLocations[m * 4 + 3];

float iou = CalculateOverlap(xmin0, ymin0, xmax0, ymax0, xmin1, ymin1, xmax1, ymax1); // 计算交并比

if (iou > threshold) // 交并比大于阈值,即认为是同一个目标的检测框

{

order[j] = -1;

}

}

}

return 0;

}

static int quick_sort_indice_inverse(

std::vector<float> &input, // 待排序的向量

int left, // 排序范围的左右边界

int right,

std::vector<int> &indices) // 与input相对应的索引向量

{

float key;

int key_index;

int low = left;

int high = right;

if (left < right)

{

key_index = indices[left];

key = input[left];

while (low < high)

{

while (low < high && input[high] <= key)

{

high--;

}

input[low] = input[high];

indices[low] = indices[high];

while (low < high && input[low] >= key)

{

low++;

}

input[high] = input[low];

indices[high] = indices[low];

}

input[low] = key;

indices[low] = key_index;

quick_sort_indice_inverse(input, left, low - 1, indices);

quick_sort_indice_inverse(input, low + 1, right, indices);

}

return low;

}

static float sigmoid(float x)

{

return 1.0 / (1.0 + expf(-x));

}

static float unsigmoid(float y)

{

return -1.0 * logf((1.0 / y) - 1.0);

}

inline static int32_t __clip(float val, float min, float max)

{

float f = val <= min ? min : (val >= max ? max : val);

return f;

}

// 将一个浮点数(32)进行量化转换为一个固定范围内的整数值(uint8_t),并添加零点偏移(zp)和缩放因子(scale)的调整

static uint8_t qnt_f32_to_affine(float f32, uint8_t zp, float scale)

{

float dst_val = (f32 / scale) + zp;

uint8_t res = (uint8_t)__clip(dst_val, 0, 255); // 将结果限制到0~255之间,并转化为uint8_t

return res;

}

// 一个经过量化转换和调整的整数值(qnt)反量化回浮点数

static float deqnt_affine_to_f32(uint8_t qnt, uint8_t zp, float scale)

{

return ((float)qnt - (float)zp) * scale;

}

// 从输入数据中提取边界框,对边界框进行解码和筛选,并将结果存储到相应的向量中

static int process_u8(uint8_t *input, int *anchor, int grid_h, int grid_w, int height, int width, int stride,

std::vector<float> &boxes, std::vector<float> &boxScores, std::vector<int> &classId,

float threshold, uint8_t zp, float scale)

{

int validCount = 0;

int grid_len = grid_h * grid_w; // 网格的高度*宽度

float thres = unsigmoid(threshold);

uint8_t thres_u8 = qnt_f32_to_affine(thres, zp, scale); // 置信度量化为整数

for (int a = 0; a < 3; a++) // 每个网格位置有三个锚框

{

for (int i = 0; i < grid_h; i++) // 当前网格的列索引,可以理解为图像的行数

{

for (int j = 0; j < grid_w; j++) // 当前网格的行索引,可以理解为图像的列数

{

uint8_t box_confidence = input[(COCO_PROP_BOX_SIZE * a + 4) * grid_len + i * grid_w + j]; // dan

// COCO_PROP_BOX_SIZE为常量,每个边界框的属性数量,这里应该是85;

// +4是为了跳过边界框信息,以便直接获取边界框的置信度

// (COCO_PROP_BOX_SIZE * a + 4) * grid_len

// grid_len表示网格的总长度;

// 不过这里我也看不懂rknn的输出,毕竟这句代码我看得太困惑,与onnx模型后处理的时候不一样

if (box_confidence >= thres_u8) // 如果置信度大于阈值

{

int offset = (COCO_PROP_BOX_SIZE * a) * grid_len + i * grid_w + j; // 计算偏移量

uint8_t *in_ptr = input + offset; // 获取输入指针

// 计算bounding box的x坐标

float box_x = sigmoid(deqnt_affine_to_f32(*in_ptr, zp, scale)) * 2.0 - 0.5;

float box_y = sigmoid(deqnt_affine_to_f32(in_ptr[grid_len], zp, scale)) * 2.0 - 0.5;

float box_w = sigmoid(deqnt_affine_to_f32(in_ptr[2 * grid_len], zp, scale)) * 2.0;

float box_h = sigmoid(deqnt_affine_to_f32(in_ptr[3 * grid_len], zp, scale)) * 2.0;

// 根据当前点的位置和步长,缩放box的x和y坐标

box_x = (box_x + j) * (float)stride;

box_y = (box_y + i) * (float)stride;

// 根据anchor的尺寸缩放box的宽度和高度

box_w = box_w * box_w * (float)anchor[a * 2];

box_h = box_h * box_h * (float)anchor[a * 2 + 1];

// 将box的坐标转换为左上角坐标和宽度、高度,并存储在boxes数组中

box_x -= (box_w / 2.0);

box_y -= (box_h / 2.0);

boxes.push_back(box_x);

boxes.push_back(box_y);

boxes.push_back(box_w);

boxes.push_back(box_h);

// 获取最大类别概率值和对应的类别ID

uint8_t maxClassProbs = in_ptr[5 * grid_len];

int maxClassId = 0;

for (int k = 1; k < COCO_CLASS_NUM; ++k)

{

uint8_t prob = in_ptr[(5 + k) * grid_len];

if (prob > maxClassProbs)

{

maxClassId = k;

maxClassProbs = prob;

}

}

// 将box_confidence和类别概率值进行逆量化并转换位浮点数

float box_conf_f32 = sigmoid(deqnt_affine_to_f32(box_confidence, zp, scale));

float class_prob_f32 = sigmoid(deqnt_affine_to_f32(maxClassProbs, zp, scale));

// 计算Box_scores,并存储在boxScores中

boxScores.push_back(box_conf_f32* class_prob_f32);

// 将最大类别的ID存储在classId数组中

classId.push_back(maxClassId);

// 增加有效目标框的数量

validCount++;

}

}

}

}

return validCount;

}

static int process_fp(float *input, int *anchor, int grid_h, int grid_w, int height, int width, int stride,
                      std::vector<float> &boxes, std::vector<float> &boxScores, std::vector<int> &classId,
                      float threshold)
{
    int validCount = 0;
    int grid_len = grid_h * grid_w;
    // Compare raw logits with the inverse sigmoid of the threshold, so no sigmoid is needed per cell
    float thres_sigmoid = unsigmoid(threshold);
    for (int a = 0; a < 3; a++)
    {
        for (int i = 0; i < grid_h; i++)
        {
            for (int j = 0; j < grid_w; j++)
            {
                float box_confidence = input[(COCO_PROP_BOX_SIZE * a + 4) * grid_len + i * grid_w + j];
                if (box_confidence >= thres_sigmoid)
                {
                    int offset = (COCO_PROP_BOX_SIZE * a) * grid_len + i * grid_w + j;
                    float *in_ptr = input + offset;
                    // YOLOv5 decoding: center offset in [-0.5, 1.5] cells, size = (2 * sigmoid)^2 * anchor
                    float box_x = sigmoid(*in_ptr) * 2.0 - 0.5;
                    float box_y = sigmoid(in_ptr[grid_len]) * 2.0 - 0.5;
                    float box_w = sigmoid(in_ptr[2 * grid_len]) * 2.0;
                    float box_h = sigmoid(in_ptr[3 * grid_len]) * 2.0;
                    box_x = (box_x + j) * (float)stride;
                    box_y = (box_y + i) * (float)stride;
                    box_w = box_w * box_w * (float)anchor[a * 2];
                    box_h = box_h * box_h * (float)anchor[a * 2 + 1];
                    // Convert the center point to the top-left corner
                    box_x -= (box_w / 2.0);
                    box_y -= (box_h / 2.0);
                    boxes.push_back(box_x);
                    boxes.push_back(box_y);
                    boxes.push_back(box_w);
                    boxes.push_back(box_h);
                    // Find the highest class logit and its class ID
                    float maxClassProbs = in_ptr[5 * grid_len];
                    int maxClassId = 0;
                    for (int k = 1; k < COCO_CLASS_NUM; ++k)
                    {
                        float prob = in_ptr[(5 + k) * grid_len];
                        if (prob > maxClassProbs)
                        {
                            maxClassId = k;
                            maxClassProbs = prob;
                        }
                    }
                    float box_conf_f32 = sigmoid(box_confidence);
                    float class_prob_f32 = sigmoid(maxClassProbs);
                    boxScores.push_back(box_conf_f32 * class_prob_f32);
                    classId.push_back(maxClassId);
                    validCount++;
                }
            }
        }
    }
    return validCount;
}

int yolov5_post_process_u8(uint8_t *input0, uint8_t *input1, uint8_t *input2, int model_in_h, int model_in_w,
                           float conf_threshold, float nms_threshold,
                           std::vector<uint8_t> &qnt_zps, std::vector<float> &qnt_scales,
                           coco_detect_result_group_t *group)
{
    static int init = -1;
    if (init == -1)
    {
        /*
        int ret = 0;
        ret = loadLabelName(LABEL_NALE_TXT_PATH, labels);
        if (ret < 0)
        {
            return -1;
        }
        */
        init = 0;
    }
    // Initialize the output structure
    memset(group, 0, sizeof(coco_detect_result_group_t));
    // Vectors that collect the candidate boxes, scores and class IDs
    std::vector<float> filterBoxes;
    std::vector<float> boxesScore;
    std::vector<int> classId;
    // Stride and grid size of the first output (stride 8)
    int stride0 = 8;
    int grid_h0 = model_in_h / stride0;
    int grid_w0 = model_in_w / stride0;
    int validCount0 = 0;
    // Process the first output: extract bounding boxes and class information
    validCount0 = process_u8(input0, (int *)anchor0, grid_h0, grid_w0, model_in_h, model_in_w,
                             stride0, filterBoxes, boxesScore, classId, conf_threshold, qnt_zps[0], qnt_scales[0]);
    // Stride and grid size of the second output (stride 16)
    int stride1 = 16;
    int grid_h1 = model_in_h / stride1;
    int grid_w1 = model_in_w / stride1;
    int validCount1 = 0;
    // Process the second output: extract bounding boxes and class information
    validCount1 = process_u8(input1, (int *)anchor1, grid_h1, grid_w1, model_in_h, model_in_w,
                             stride1, filterBoxes, boxesScore, classId, conf_threshold, qnt_zps[1], qnt_scales[1]);
    // Stride and grid size of the third output (stride 32)
    int stride2 = 32;
    int grid_h2 = model_in_h / stride2;
    int grid_w2 = model_in_w / stride2;
    int validCount2 = 0;
    // Process the third output: extract bounding boxes and class information
    validCount2 = process_u8(input2, (int *)anchor2, grid_h2, grid_w2, model_in_h, model_in_w,
                             stride2, filterBoxes, boxesScore, classId, conf_threshold, qnt_zps[2], qnt_scales[2]);
    // Total number of valid boxes
    int validCount = validCount0 + validCount1 + validCount2;
    // No objects detected
    if (validCount <= 0)
    {
        return 0;
    }
    // Build the index array
    std::vector<int> indexArray;
    for (int i = 0; i < validCount; ++i)
    {
        indexArray.push_back(i);
    }
    // Sort the scores in descending order and permute the index array accordingly
    quick_sort_indice_inverse(boxesScore, 0, validCount - 1, indexArray);
    // Non-maximum suppression: suppressed boxes are marked with index -1
    nms(validCount, filterBoxes, indexArray, nms_threshold);
    int last_count = 0;
    group->count = 0;
    /* Process the valid detection boxes */
    for (int i = 0; i < validCount; ++i)
    {
        if (indexArray[i] == -1 || boxesScore[i] < conf_threshold || last_count >= COCO_NUMB_MAX_SIZE)
        {
            continue;
        }
        int n = indexArray[i];
        // Corner coordinates (x1, y1, x2, y2) of each box
        float x1 = filterBoxes[n * 4 + 0];
        float y1 = filterBoxes[n * 4 + 1];
        float x2 = x1 + filterBoxes[n * 4 + 2];
        float y2 = y1 + filterBoxes[n * 4 + 3];
        int id = classId[n];
        /*
        group->results[last_count].box.left = (int)((clamp(x1, 0, model_in_w) - w_offset) / resize_scale);
        group->results[last_count].box.top = (int)((clamp(y1, 0, model_in_h) - h_offset) / resize_scale);
        group->results[last_count].box.right = (int)((clamp(x2, 0, model_in_w) - w_offset) / resize_scale);
        group->results[last_count].box.bottom = (int)((clamp(y2, 0, model_in_h) - h_offset) / resize_scale);
        */
        // Fill in the box (clamped to the model input size), its score and its class
        group->results[last_count].box.left = (int) clamp(x1, 0, model_in_w);
        group->results[last_count].box.top = (int) clamp(y1, 0, model_in_h);
        group->results[last_count].box.right = (int) clamp(x2, 0, model_in_w);
        group->results[last_count].box.bottom = (int) clamp(y2, 0, model_in_h);
        group->results[last_count].prop = boxesScore[i];
        group->results[last_count].class_index = id;
        char *label = labels[id];
        strncpy(group->results[last_count].name, label, COCO_NAME_MAX_SIZE);
        // printf("result %2d: (%4d, %4d, %4d, %4d), %s\n", i, group->results[last_count].box.left, group->results[last_count].box.top,
        //        group->results[last_count].box.right, group->results[last_count].box.bottom, label);
        last_count++;
    }
    // Update the number of detection results
    group->count = last_count;
    return 0;
}

int yolov5_post_process_fp(float *input0, float *input1, float *input2, int model_in_h, int model_in_w,
                           float conf_threshold, float nms_threshold,
                           coco_detect_result_group_t *group)
{
    static int init = -1;
    if (init == -1)
    {
        /*
        int ret = 0;
        ret = loadLabelName(LABEL_NALE_TXT_PATH, labels);
        if (ret < 0)
        {
            return -1;
        }
        */
        init = 0;
    }
    memset(group, 0, sizeof(coco_detect_result_group_t));
    std::vector<float> filterBoxes;
    std::vector<float> boxesScore;
    std::vector<int> classId;
    int stride0 = 8;
    int grid_h0 = model_in_h / stride0;
    int grid_w0 = model_in_w / stride0;
    int validCount0 = 0;
    validCount0 = process_fp(input0, (int *)anchor0, grid_h0, grid_w0, model_in_h, model_in_w,
                             stride0, filterBoxes, boxesScore, classId, conf_threshold);
    int stride1 = 16;
    int grid_h1 = model_in_h / stride1;
    int grid_w1 = model_in_w / stride1;
    int validCount1 = 0;
    validCount1 = process_fp(input1, (int *)anchor1, grid_h1, grid_w1, model_in_h, model_in_w,
                             stride1, filterBoxes, boxesScore, classId, conf_threshold);
    int stride2 = 32;
    int grid_h2 = model_in_h / stride2;
    int grid_w2 = model_in_w / stride2;
    int validCount2 = 0;
    validCount2 = process_fp(input2, (int *)anchor2, grid_h2, grid_w2, model_in_h, model_in_w,
                             stride2, filterBoxes, boxesScore, classId, conf_threshold);
    int validCount = validCount0 + validCount1 + validCount2;
    // No objects detected
    if (validCount <= 0)
    {
        return 0;
    }
    std::vector<int> indexArray;
    for (int i = 0; i < validCount; ++i)
    {
        indexArray.push_back(i);
    }
    quick_sort_indice_inverse(boxesScore, 0, validCount - 1, indexArray);
    nms(validCount, filterBoxes, indexArray, nms_threshold);
    int last_count = 0;
    group->count = 0;
    /* Collect the valid detection boxes */
    for (int i = 0; i < validCount; ++i)
    {
        if (indexArray[i] == -1 || boxesScore[i] < conf_threshold || last_count >= COCO_NUMB_MAX_SIZE)
        {
            continue;
        }
        int n = indexArray[i];
        float x1 = filterBoxes[n * 4 + 0];
        float y1 = filterBoxes[n * 4 + 1];
        float x2 = x1 + filterBoxes[n * 4 + 2];
        float y2 = y1 + filterBoxes[n * 4 + 3];
        int id = classId[n];
        /*
        group->results[last_count].box.left = (int)((clamp(x1, 0, model_in_w) - w_offset) / resize_scale);
        group->results[last_count].box.top = (int)((clamp(y1, 0, model_in_h) - h_offset) / resize_scale);
        group->results[last_count].box.right = (int)((clamp(x2, 0, model_in_w) - w_offset) / resize_scale);
        group->results[last_count].box.bottom = (int)((clamp(y2, 0, model_in_h) - h_offset) / resize_scale);
        */
        group->results[last_count].box.left = (int) clamp(x1, 0, model_in_w);
        group->results[last_count].box.top = (int) clamp(y1, 0, model_in_h);
        group->results[last_count].box.right = (int) clamp(x2, 0, model_in_w);
        group->results[last_count].box.bottom = (int) clamp(y2, 0, model_in_h);
        group->results[last_count].prop = boxesScore[i];
        group->results[last_count].class_index = id;
        char *label = labels[id];
        strncpy(group->results[last_count].name, label, COCO_NAME_MAX_SIZE);
        // printf("result %2d: (%4d, %4d, %4d, %4d), %s\n", i, group->results[last_count].box.left, group->results[last_count].box.top,
        //        group->results[last_count].box.right, group->results[last_count].box.bottom, label);
        last_count++;
    }
    group->count = last_count;
    return 0;
}

CMakeLists.txt

cmake_minimum_required(VERSION 2.8.4)
STRING(REGEX REPLACE ".*/(.*)" "\\1" CURRENT_FOLDER ${CMAKE_CURRENT_SOURCE_DIR} )
MESSAGE("current project: " ${CURRENT_FOLDER})
set(CMAKE_SYSTEM_NAME Linux)
set(CMAKE_CROSSCOMPILING TRUE)
# Note: QUERY OS_PLATFORM is only available in CMake 3.10 and later
cmake_host_system_information(RESULT arch_value QUERY OS_PLATFORM)
# Unless building natively on the armv7l board, load the cross-compilation toolchain file
if(NOT "${arch_value}" STREQUAL "armv7l")
    include ($ENV{HOME}/configs/cross.cmake)
endif()
project(yolov5_detect_demo)
## Algorithm header files
set(sdk_inc include/)
## Algorithm source files
file(GLOB file_source lib/*.cpp *.cpp)
set(source ${file_source})
find_package(OpenCV REQUIRED)
add_executable(yolov5_detect_demo ${source})
target_include_directories(yolov5_detect_demo PUBLIC ${sdk_inc} ${OpenCV_INCLUDE_DIRS})
target_link_libraries(yolov5_detect_demo pthread rknn_api ${OpenCV_LIBS})
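To experiment with the post-processing in yolov5_detect_postprocess.cpp above without the board or the RKNN runtime, here is a minimal standalone C++11 sketch of its main steps: affine dequantization, the sigmoid / inverse-sigmoid threshold comparison used by process_fp, YOLOv5 box decoding, and greedy NMS. It is a sketch under stated assumptions, not the EASY EAI implementation: Box, dequant_u8, quant_u8, decode_cell, iou and nms_keep are hypothetical names, the NMS is class-agnostic (matching the nms call above, which receives no class IDs), and std::sort with a lambda stands in for the demo's quick_sort_indice_inverse.

```cpp
// Hypothetical standalone sketch of the YOLOv5 post-processing steps.
// None of these helpers are part of the RKNN API or the EASY EAI demo.
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <numeric>
#include <vector>

// Affine (asymmetric) dequantization: real = (q - zero_point) * scale
static float dequant_u8(std::uint8_t q, std::uint8_t zp, float scale) {
    return (static_cast<float>(q) - static_cast<float>(zp)) * scale;
}

// Inverse mapping: round, add the zero point, saturate to [0, 255]
static std::uint8_t quant_u8(float x, std::uint8_t zp, float scale) {
    float q = std::round(x / scale) + static_cast<float>(zp);
    return static_cast<std::uint8_t>(std::min(255.0f, std::max(0.0f, q)));
}

static float sigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)); }

// Inverse sigmoid (logit), defined for 0 < y < 1. Comparing a raw output with
// unsigmoid(threshold) gives the same result as comparing sigmoid(output) with threshold.
static float unsigmoid(float y) { return -std::log(1.0f / y - 1.0f); }

struct Box { float x, y, w, h; };  // top-left corner and size, in model-input pixels

// Decode one anchor of one grid cell from raw logits (tx, ty, tw, th)
static Box decode_cell(float tx, float ty, float tw, float th, int col, int row,
                       int stride, float anchor_w, float anchor_h) {
    float cx = (sigmoid(tx) * 2.0f - 0.5f + col) * stride;  // center x
    float cy = (sigmoid(ty) * 2.0f - 0.5f + row) * stride;  // center y
    float sw = sigmoid(tw) * 2.0f;
    float sh = sigmoid(th) * 2.0f;
    float bw = sw * sw * anchor_w;  // width = (2 * sigmoid(tw))^2 * anchor width
    float bh = sh * sh * anchor_h;
    return {cx - bw / 2.0f, cy - bh / 2.0f, bw, bh};
}

// Intersection over union of two boxes
static float iou(const Box& a, const Box& b) {
    float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w), y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Class-agnostic greedy NMS: visit boxes by descending score and keep a box
// only if its IoU with every box kept so far is <= nms_threshold.
// Returns the indices of the kept boxes, highest score first.
static std::vector<int> nms_keep(const std::vector<Box>& boxes,
                                 const std::vector<float>& scores, float nms_threshold) {
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&scores](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> keep;
    for (int idx : order) {
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[k], boxes[idx]) > nms_threshold) { suppressed = true; break; }
        }
        if (!suppressed) keep.push_back(idx);
    }
    return keep;
}
```

Because dequantization with a positive scale is monotonic, comparing quantized values gives the same ordering as comparing the dequantized floats; this is why process_u8 can pick the highest class (maxClassProbs) directly on the uint8 outputs and only dequantize the winner.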

References:

EASY EAI 灵眸科技 (Lingmou Technology) | Making edge AI deployment easier