1. Environment

Ceph: octopus

OS: Kylin-Server-V10_U1-Release-Build02-20210824-GFB-x86_64, CentOS Linux release 7.9.2009

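2. Ceph and cephadm

2.1 Introduction to Ceph

Ceph can provide object storage, block device services, and file systems to cloud platforms. Every Ceph storage cluster deployment starts with setting up each Ceph node and then setting up the network.

Ceph storage cluster requirements: at least one Ceph Monitor and one Ceph Manager, plus at least as many Ceph OSDs as there are replicas of an object stored in the cluster (for example, if three replicas of a given object are stored in the cluster, the cluster must contain at least three OSDs).

Monitors: A Ceph Monitor (ceph-mon) maintains maps of the cluster state, including the monitor map, manager map, OSD map, MDS map, and CRUSH map. These maps are the critical cluster state that Ceph daemons need in order to coordinate with each other. Monitors are also responsible for managing authentication between daemons and clients. At least three monitors are normally required for redundancy and high availability.

Managers: The Ceph Manager daemon (ceph-mgr) keeps track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. The Ceph Manager daemons also host Python-based modules that manage and expose Ceph cluster information, including the web-based Ceph Dashboard and a REST API. At least two managers are normally required for high availability.

Ceph OSD: An object storage daemon (Ceph OSD, ceph-osd) stores data, handles data replication, recovery, and rebalancing, and provides some monitoring information to Ceph Monitors and Managers by checking other Ceph OSD daemons for a heartbeat. At least three Ceph OSDs are normally required for redundancy and high availability.

MDSs: A Ceph Metadata Server (MDS, ceph-mds) stores metadata on behalf of the Ceph File System (Ceph Block Devices and Ceph Object Storage do not use MDS). Ceph Metadata Servers allow POSIX file system users to run basic commands (such as ls and find) without placing a heavy load on the Ceph storage cluster.

See the official Ceph documentation.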

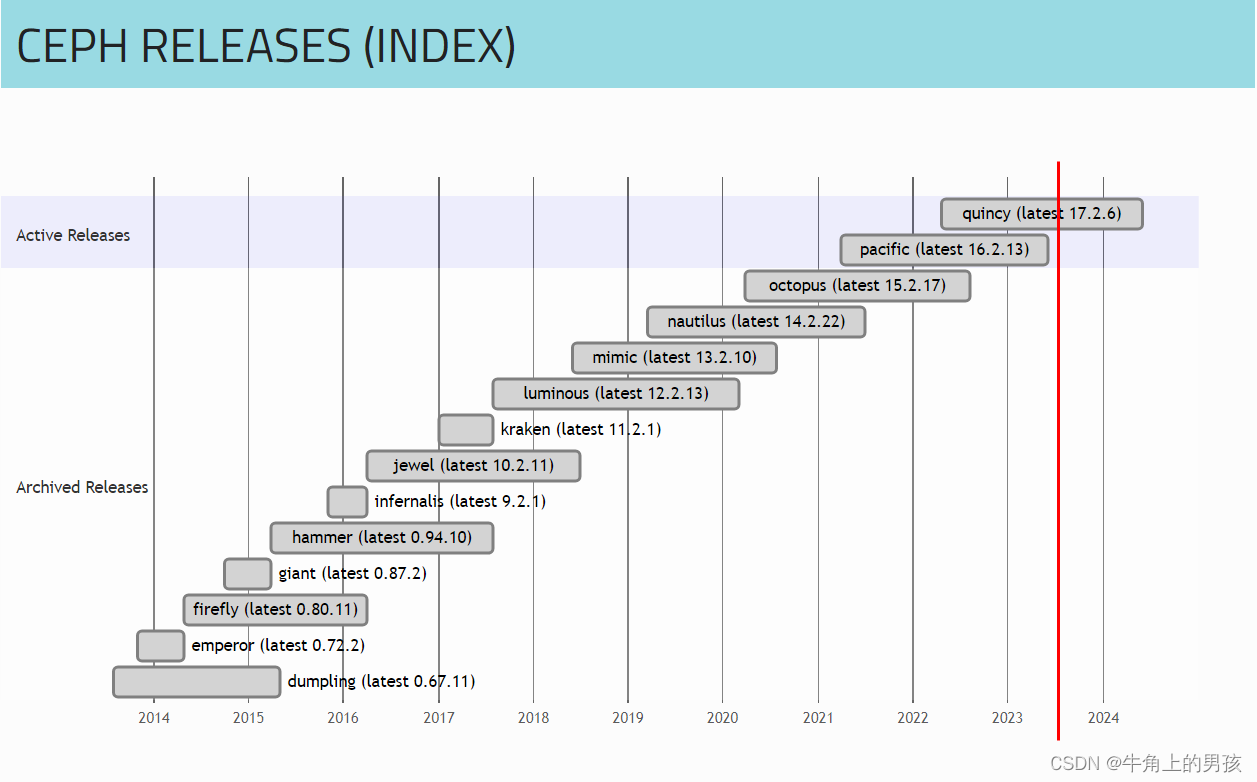

2.2 ceph releases

2.3 cephadm

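cephadm is a utility for managing a Ceph cluster.

- cephadm can add Ceph containers to the cluster.

- cephadm can remove Ceph containers from the cluster.

- cephadm can update Ceph containers.

cephadm does not depend on external configuration tools such as Ansible, Rook, or Salt. Those tools can, however, be used to automate operations that cephadm itself does not perform.

cephadm manages the full lifecycle of a Ceph cluster. The lifecycle starts with the bootstrap process, in which cephadm creates a small Ceph cluster on a single node, consisting of one monitor and one manager. cephadm then uses the orchestration interface to expand the cluster, adding hosts and provisioning Ceph daemons and services. This lifecycle can be managed through the Ceph command-line interface (CLI) or the dashboard (GUI).

See the official cephadm documentation.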

3. Node planning

| Hostname | Address | Roles |
|---|---|---|
| ceph1 | 172.25.0.141 | mon, osd, mds, mgr, iscsi, cephadm |
| ceph2 | 172.25.0.142 | mon, osd, mds, mgr, iscsi |
| ceph3 | 172.25.0.143 | mon, osd, mds, mgr, iscsi |

4. Basic environment configuration

4.1 Address configuration

Configure the address on ceph1

nmcli connection modify ens33 ipv4.method manual ipv4.addresses 172.25.0.141/24 ipv4.gateway 172.25.0.2 connection.autoconnect yes

Configure the address on ceph2

nmcli connection modify ens33 ipv4.method manual ipv4.addresses 172.25.0.142/24 ipv4.gateway 172.25.0.2 connection.autoconnect yes

Configure the address on ceph3

nmcli connection modify ens33 ipv4.method manual ipv4.addresses 172.25.0.143/24 ipv4.gateway 172.25.0.2 connection.autoconnect yes

4.2 Hostname configuration

Set the hostname on ceph1

hostnamectl set-hostname ceph1

Set the hostname on ceph2

hostnamectl set-hostname ceph2

Set the hostname on ceph3

hostnamectl set-hostname ceph3

Configure /etc/hosts on ceph1, ceph2, and ceph3

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.0.141 ceph1

172.25.0.142 ceph2

172.25.0.143 ceph3
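The hosts entries above follow directly from the node plan in section 3. A minimal sketch, assuming the same three nodes, that prints them from a single list so that every node receives identical lines (the `NODES` variable and the `hosts_entries` function are illustrative names, not part of any tool):

```shell
#!/usr/bin/env bash
# Node list taken from the planning table in section 3 (hostname=address).
NODES="ceph1=172.25.0.141 ceph2=172.25.0.142 ceph3=172.25.0.143"

# Print one "address hostname" line per node, in /etc/hosts format.
hosts_entries() {
    local pair
    for pair in $NODES; do
        # ${pair#*=} is the part after "=", ${pair%%=*} the part before it.
        printf '%s %s\n' "${pair#*=}" "${pair%%=*}"
    done
}

# Only prints the lines; nothing is written to /etc/hosts here.
hosts_entries
```

After reviewing the output, it could be appended on each node with `hosts_entries >> /etc/hosts`.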

Add a DNS resolver; any reachable server will do

vim /etc/resolv.conf

nameserver 223.5.5.5

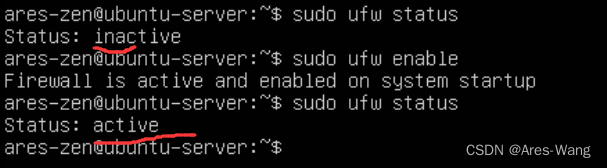

4.3 Firewall

Required on ceph1, ceph2, and ceph3

Disable the firewall and SELinux

systemctl disable --now firewalld

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

4.4 Configure passwordless SSH

ssh-keygen -f /root/.ssh/id_rsa -P ''

ssh-copy-id -o StrictHostKeyChecking=no 172.25.0.141

ssh-copy-id -o StrictHostKeyChecking=no 172.25.0.142

ssh-copy-id -o StrictHostKeyChecking=no 172.25.0.143

4.5 Configure time synchronization

Install the package on ceph1, ceph2, and ceph3

yum -y install chrony

systemctl enable chronyd

ceph1 acts as the server

vim /etc/chrony.conf

pool pool.ntp.org iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

allow 172.25.0.0/24

local stratum 10

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

Restart the service

systemctl restart chronyd

ceph2, ceph3, and any nodes added later act as clients

vim /etc/chrony.conf

pool 172.25.0.141 iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

Restart the service

systemctl restart chronyd

Verify from a client

chronyc sources -v

210 Number of sources = 1

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current synced, '+' = combined , '-' = not combined,

| / '?' = unreachable, 'x' = time may be in error, '~' = time too variable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* ceph1 11 6 17 10 -68us[ -78us] +/- 2281us
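In the output above, the `^*` prefix marks the server that chronyd is currently synchronized to. A small sketch, assuming that output format, of a check that could be scripted on each client (`has_synced_source` is an illustrative helper name):

```shell
#!/usr/bin/env bash
# Succeeds when the `chronyc sources` output on stdin contains a line
# starting with "^*", i.e. a server that is the current sync source.
has_synced_source() {
    grep -q '^\^\*'
}

# Demonstration with the sample line from the output above.
sample='^* ceph1 11 6 17 10 -68us[ -78us] +/- 2281us'
if printf '%s\n' "$sample" | has_synced_source; then
    echo "synchronized"
else
    echo "not synchronized"
fi
```

On a live client the same helper would be fed real data: `chronyc sources | has_synced_source`.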

4.6 Install python3

4.6.1 Kylin V10

Python 3.7.4 is installed by default. If it is missing, configure the repositories and install it with YUM:

yum -y install python3

4.6.2 CentOS 7

Install python3

yum -y install epel-release

yum -y install python3

4.7 Install and configure docker

4.7.1 Kylin V10

docker-engine is installed by default. If it is missing, configure the repositories and install it with YUM:

yum -y install docker-engine

4.7.2 CentOS 7

Configure the docker repo

yum -y install yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum -y install docker-ce

Enable start at boot

systemctl enable docker

5. Install cephadm

5.1 Kylin V10

Download the cephadm package

wget http://mirrors.163.com/ceph/rpm-octopus/el7/noarch/cephadm-15.2.17-0.el7.noarch.rpm

Install cephadm

chmod 600 /var/log/tallylog

rpm -ivh cephadm-15.2.17-0.el7.noarch.rpm

5.2 CentOS 7

Download the cephadm script and make it executable

curl https://raw.githubusercontent.com/ceph/ceph/v15.2.1/src/cephadm/cephadm -o cephadm

chmod +x cephadm

Alternatively:

wget http://mirrors.163.com/ceph/rpm-octopus/el7/noarch/cephadm

Configure the Ceph repository for the release name

./cephadm add-repo --release octopus

Run the cephadm installer

./cephadm install

Install the ceph-common package

cephadm install ceph-common

Octopus package mirrors:

https://repo.huaweicloud.com/ceph/rpm-octopus/

http://mirrors.163.com/ceph/rpm-octopus/

http://mirrors.aliyun.com/ceph/rpm-octopus/

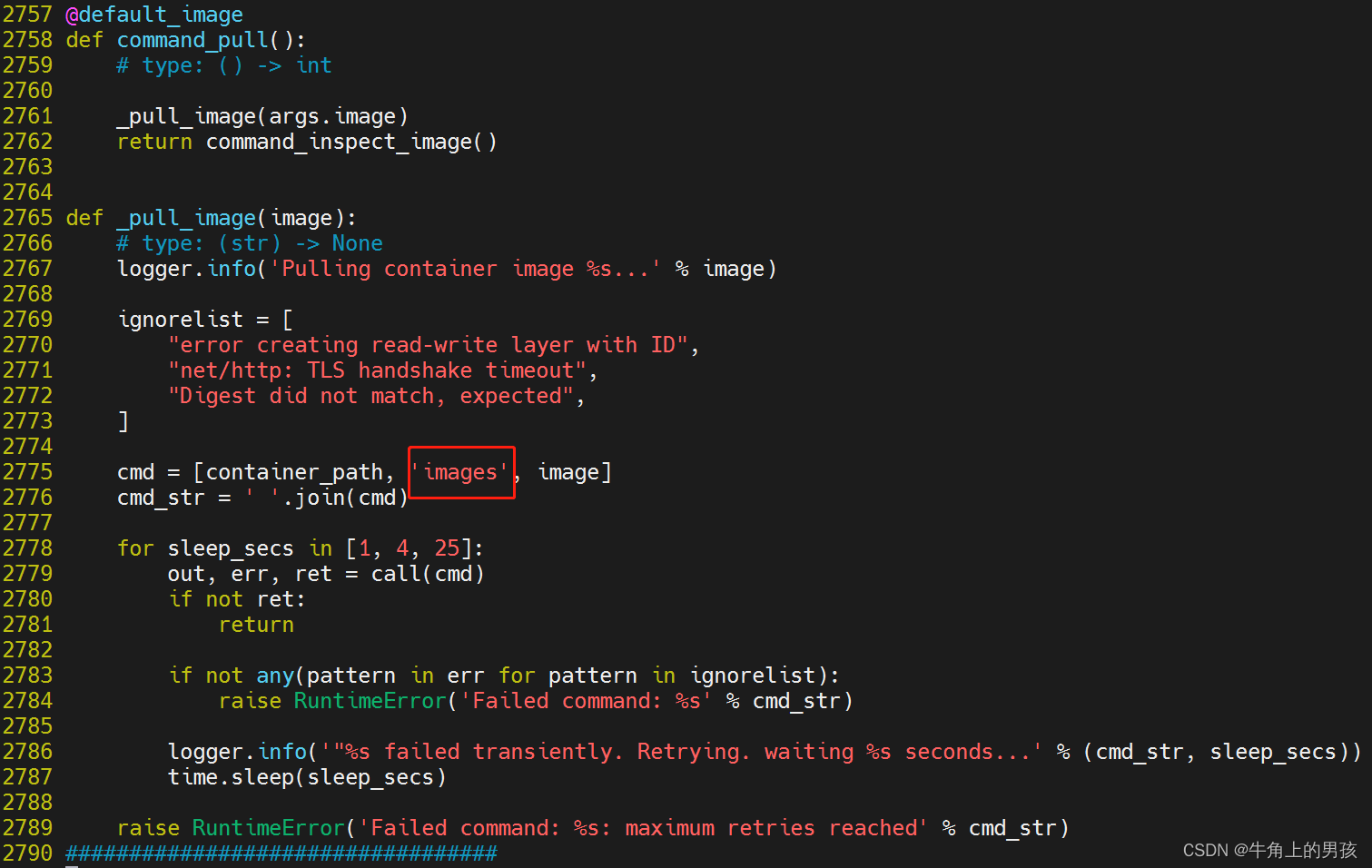

5.3 Modify cephadm

To use local images offline, edit the _pull_image function in the cephadm file and change pull to images in its cmd list.

vim /usr/sbin/cephadm

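6. Install Ceph

6.1 Prepare the images

6.1.1 Import the images

The offline images are available on Baidu Netdisk:

Link: https://pan.baidu.com/s/1UEkQo0XrwuCDI5u9H8sGkQ?pwd=zsd4

Extraction code: zsd4

Import the images offline with docker load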

docker load < ceph-v15.img

docker load < prometheus-v2.18.1.img

docker load < node-exporter-v0.18.1.img

docker load < ceph-grafana-6.7.4.img

docker load < alertmanager-v0.20.0.img

Pull the images online (octopus)

docker pull quay.io/ceph/ceph:v15

docker pull quay.io/prometheus/prometheus:v2.18.1

docker pull quay.io/prometheus/node-exporter:v0.18.1

docker pull quay.io/ceph/ceph-grafana:6.7.4

docker pull quay.io/prometheus/alertmanager:v0.20.0

quincy

docker pull quay.io/ceph/ceph:v17

docker pull quay.io/ceph/ceph-grafana:8.3.5

docker pull quay.io/prometheus/prometheus:v2.33.4

docker pull quay.io/prometheus/node-exporter:v1.3.1

docker pull quay.io/prometheus/alertmanager:v0.23.0

6.1.2 Set up the registry server

docker load < registry-2.img

mkdir -p /data/registry/

docker run -d -p 4000:5000 -v /data/registry/:/var/lib/registry/ --restart=always --name registry registry:2

Add host name resolution

vim /etc/hosts

172.25.0.141 registryserver

Note: this host name entry must be placed on the first line of the file so that cephadm shell can use it

6.1.3 Tag the images and push them to the registry

docker tag quay.io/ceph/ceph:v15 registryserver:4000/ceph/ceph:v15

docker tag quay.io/prometheus/prometheus:v2.18.1 registryserver:4000/prometheus/prometheus:v2.18.1

docker tag quay.io/prometheus/node-exporter:v0.18.1 registryserver:4000/prometheus/node-exporter:v0.18.1

docker tag quay.io/ceph/ceph-grafana:6.7.4 registryserver:4000/ceph/ceph-grafana:6.7.4

docker tag quay.io/prometheus/alertmanager:v0.20.0 registryserver:4000/prometheus/alertmanager:v0.20.0

docker push registryserver:4000/ceph/ceph:v15

docker push registryserver:4000/prometheus/prometheus:v2.18.1

docker push registryserver:4000/prometheus/node-exporter:v0.18.1

docker push registryserver:4000/ceph/ceph-grafana:6.7.4

docker push registryserver:4000/prometheus/alertmanager:v0.20.0
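Every tag and push command above applies the same rule: replace the `quay.io` host with `registryserver:4000` and keep the rest of the reference. A hedged sketch of that rule as a loop, which only prints the commands for review (`REGISTRY`, `IMAGES`, and `mirror_ref` are illustrative names):

```shell
#!/usr/bin/env bash
# Local registry from section 6.1.2 and the octopus image list from 6.1.1.
REGISTRY="registryserver:4000"
IMAGES="quay.io/ceph/ceph:v15
quay.io/prometheus/prometheus:v2.18.1
quay.io/prometheus/node-exporter:v0.18.1
quay.io/ceph/ceph-grafana:6.7.4
quay.io/prometheus/alertmanager:v0.20.0"

# Map <host>/<path>:<tag> to <REGISTRY>/<path>:<tag> by dropping
# everything up to and including the first "/".
mirror_ref() {
    printf '%s/%s\n' "$REGISTRY" "${1#*/}"
}

# Dry run: print the docker commands instead of executing them.
for img in $IMAGES; do
    echo "docker tag $img $(mirror_ref "$img")"
    echo "docker push $(mirror_ref "$img")"
done
```

Piping the printed lines to `sh` would execute them once the list looks right.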

6.2 Bootstrap deployment

mkdir -p /etc/ceph

cephadm bootstrap --mon-ip 172.25.0.141

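This command does the following:

- Creates the monitor and manager daemons for the new cluster on the local host.

- Generates a new SSH key for the Ceph cluster and adds it to the root user's /root/.ssh/authorized_keys file.

- Saves the minimal configuration file needed to communicate with the new cluster to /etc/ceph/ceph.conf.

- Writes a copy of the client.admin administrative (privileged!) secret key to /etc/ceph/ceph.client.admin.keyring.

- Writes a copy of the public key to /etc/ceph/ceph.pub.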
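The three files named in the list above give a quick way to confirm that bootstrap finished writing its output. A minimal sketch, assuming the default /etc/ceph location (`missing_bootstrap_files` is an illustrative helper, not a cephadm command):

```shell
#!/usr/bin/env bash
# Print the path of every expected bootstrap file that is missing
# from the given directory (default: /etc/ceph).
missing_bootstrap_files() {
    local dir="${1:-/etc/ceph}" f
    for f in ceph.conf ceph.client.admin.keyring ceph.pub; do
        [ -f "$dir/$f" ] || echo "$dir/$f"
    done
}

# Prints nothing when all three files are present.
missing_bootstrap_files /etc/ceph
```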

[root@ceph1 ~]# cephadm bootstrap --mon-ip 172.25.0.141

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: d20e3700-2d2f-11ee-9166-000c29aa07d2

Verifying IP 172.25.0.141 port 3300 ...

Verifying IP 172.25.0.141 port 6789 ...

Mon IP 172.25.0.141 is in CIDR network 172.25.0.0/24

Pulling container image quay.io/ceph/ceph:v15...

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network...

Creating mgr...

Verifying port 9283 ...

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Wrote config to /etc/ceph/ceph.conf

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/10)...

mgr not available, waiting (2/10)...

mgr not available, waiting (3/10)...

mgr not available, waiting (4/10)...

mgr not available, waiting (5/10)...

mgr not available, waiting (6/10)...

mgr not available, waiting (7/10)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 5...

Mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to to /etc/ceph/ceph.pub

Adding key to root@localhost's authorized_keys...

Adding host ceph1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 13...

Mgr epoch 13 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

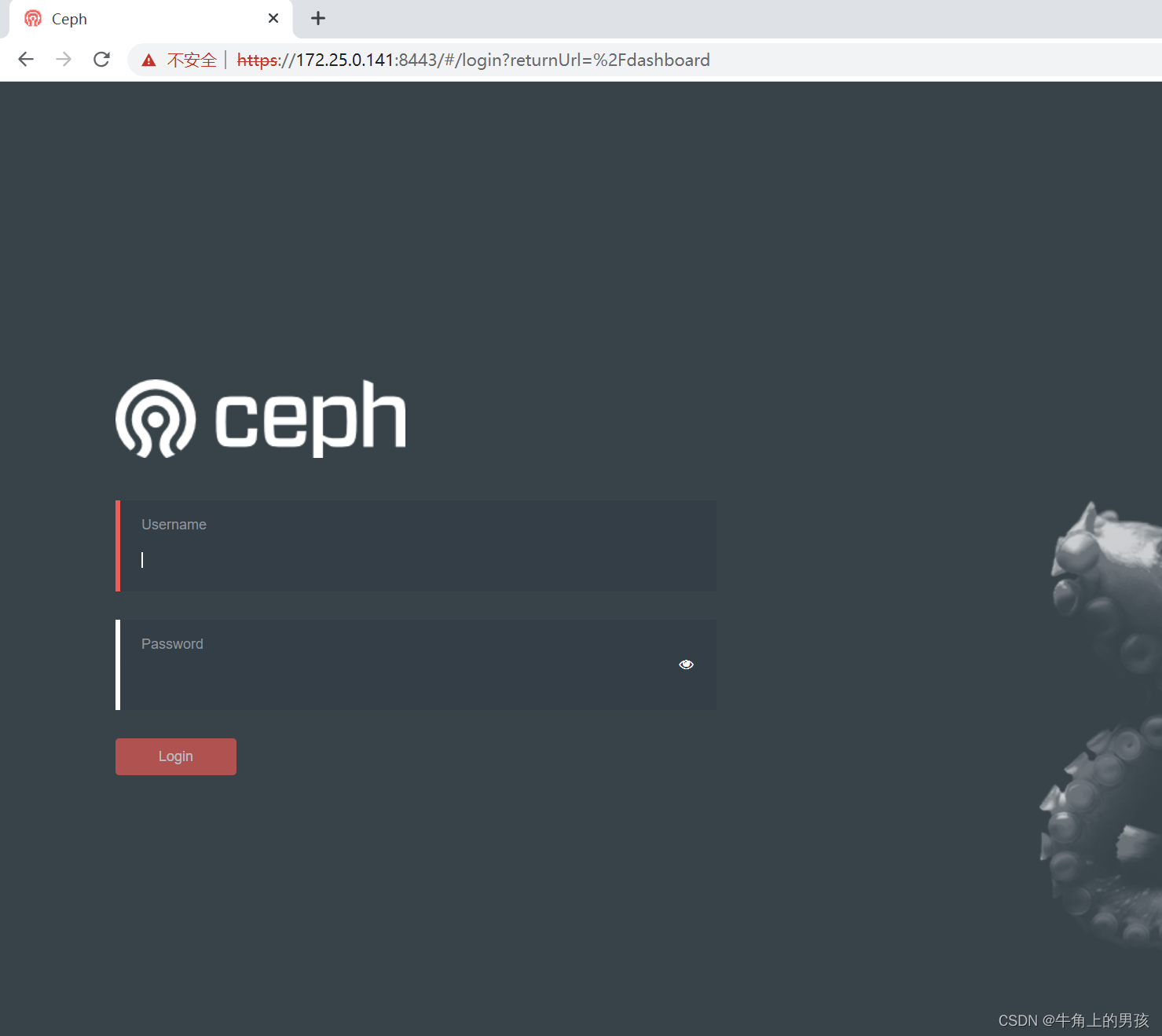

Ceph Dashboard is now available at:

URL: https://ceph1:8443/

User: admin

Password: cx763mtlk7

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid d20e3700-2d2f-11ee-9166-000c29aa07d2 -c /etc/ceph/ceph.conf -k /etc/c

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

Bootstrap complete.

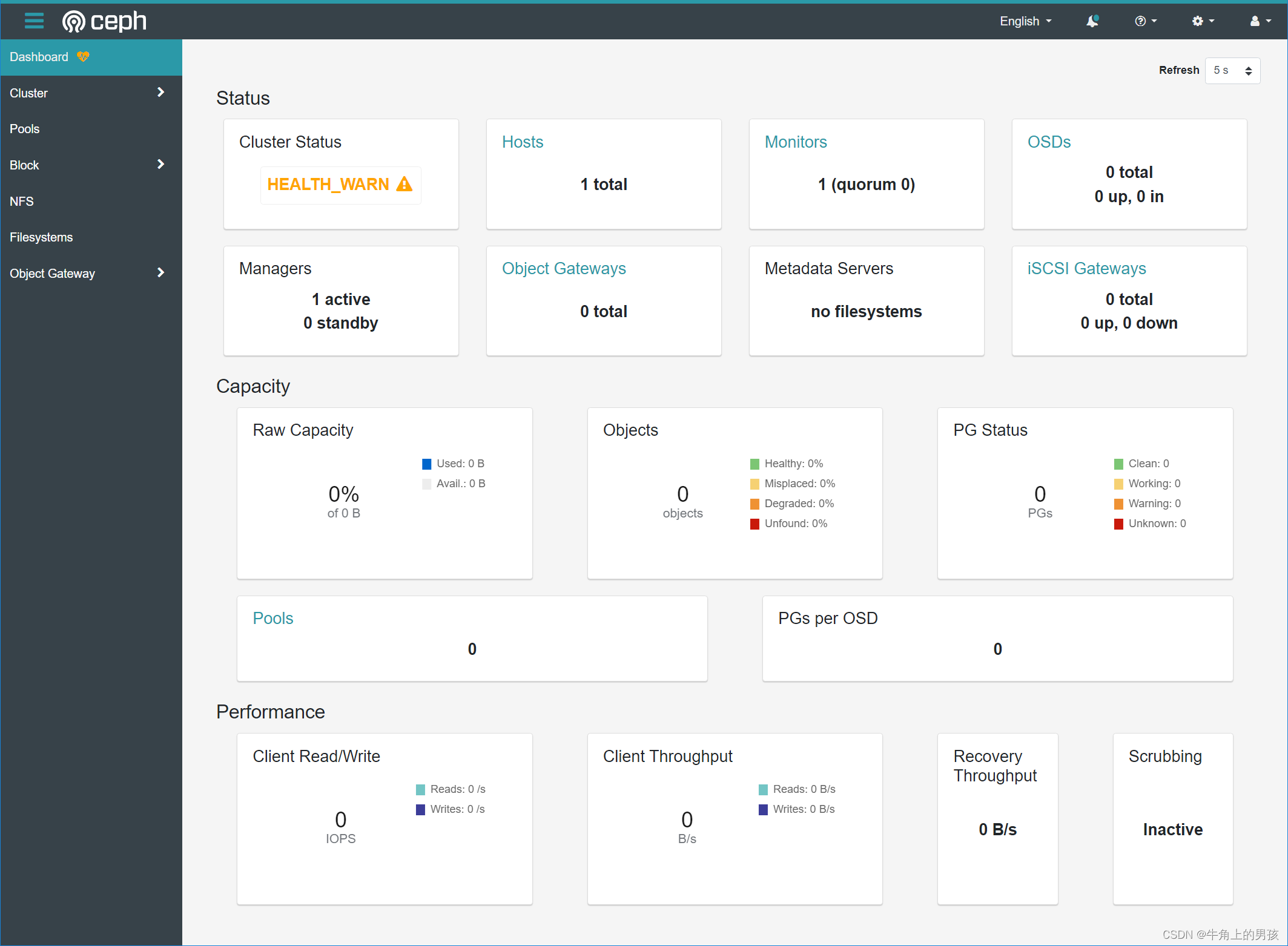

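Log in to the web page with the URL, User, and Password printed above.

The password must be changed at first login; log in again after changing it.

The ceph command is available through cephadm shell; an alias can also be created:

alias ceph='cephadm shell -- ceph'

After that, ceph -s can be run directly on the physical host.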

6.2 Host management

6.2.1 List available hosts

ceph orch host ls

[ceph: root@ceph1 /]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph1 ceph1

6.2.2 Add hosts

Add the hosts to the cluster

ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph2

ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph3

scp -r /etc/ceph root@ceph2:/etc/

scp -r /etc/ceph root@ceph3:/etc/

ceph orch host add ceph1 172.25.0.141

ceph orch host add ceph2 172.25.0.142

ceph orch host add ceph3 172.25.0.143

ceph orch host ls

[ceph: root@ceph1 /]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph1 172.25.0.141

ceph2 172.25.0.142

ceph3 172.25.0.143

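6.2.3 Remove a host

To remove a host such as ceph3 from the environment, first make sure that all services running on it have been stopped and removed: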

ceph orch host rm ceph3

6.3 Deploy MONs and MGRs

6.3.1 Restrict mons to a specific subnet

ceph config set mon public_network 172.25.0.0/24

6.3.2 Change the default number of mons

ceph orch ls shows the state of each service, such as mon:

[ceph: root@ceph1 /]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 9m ago 6h count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 2/3 9m ago 6h * quay.io/ceph/ceph:v15 93146564743f

grafana 1/1 9m ago 6h count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

mgr 1/2 9m ago 6h count:2 quay.io/ceph/ceph:v15 93146564743f

mon 2/5 9m ago 6h count:5 quay.io/ceph/ceph:v15 93146564743f

node-exporter 1/3 10m ago 6h * quay.io/prometheus/node-exporter:v0.18.1 mix

osd.None 3/0 9m ago - <unmanaged> quay.io/ceph/ceph:v15 93146564743f

prometheus 1/1 9m ago 6h count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

By default a Ceph cluster allows up to 5 mons and 2 mgrs. To set the number of mons to 3, run:

ceph orch apply mon 3

[ceph: root@ceph1 /]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 35s ago 6h count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 39s ago 6h * quay.io/ceph/ceph:v15 93146564743f

grafana 1/1 35s ago 6h count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

mgr 2/2 39s ago 6h count:2 quay.io/ceph/ceph:v15 93146564743f

mon 3/3 39s ago 11m ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

node-exporter 1/3 39s ago 6h * quay.io/prometheus/node-exporter:v0.18.1 mix

osd.None 3/0 35s ago - <unmanaged> quay.io/ceph/ceph:v15 93146564743f

prometheus 1/1 35s ago 6h count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

6.3.3 Deploy mon and mgr

Deploy mons on specific hosts

ceph orch apply mon --placement="3 ceph1 ceph2 ceph3"

Deploy mgrs on specific hosts

ceph orch apply mgr --placement="3 ceph1 ceph2 ceph3"

6.4 OSD deployment

6.4.1 List devices

Run ceph orch device ls to list the storage devices on the cluster hosts; the output looks like this:

[ceph: root@ceph1 /]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

ceph1 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph1 /dev/sdc hdd 21.4G Unknown N/A N/A Yes

ceph1 /dev/sdd hdd 21.4G Unknown N/A N/A Yes

ceph2 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph2 /dev/sdc hdd 21.4G Unknown N/A N/A Yes

ceph2 /dev/sdd hdd 21.4G Unknown N/A N/A Yes

ceph3 /dev/sdb hdd 21.4G Unknown N/A N/A Yes

ceph3 /dev/sdc hdd 21.4G Unknown N/A N/A Yes

ceph3 /dev/sdd hdd 21.4G Unknown N/A N/A Yes

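6.4.2 Create OSDs

A storage device is considered available if all of the following conditions are met:

- The device must have no partitions.

- The device must not have any LVM state.

- The device must not be mounted.

- The device must not contain a file system.

- The device must not contain a Ceph BlueStore OSD.

- The device must be larger than 5 GB.

Ceph refuses to provision an OSD on a device that is not available.

To have Ceph consume any available and unused storage device: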

ceph orch apply osd --all-available-devices

To create an OSD on a specific device on a specific host:

Add the devices on ceph1 as OSDs

ceph orch daemon add osd ceph1:/dev/sdb

ceph orch daemon add osd ceph1:/dev/sdc

ceph orch daemon add osd ceph1:/dev/sdd

Add the devices on ceph2 as OSDs

ceph orch daemon add osd ceph2:/dev/sdb

ceph orch daemon add osd ceph2:/dev/sdc

ceph orch daemon add osd ceph2:/dev/sdd

Add the devices on ceph3 as OSDs

ceph orch daemon add osd ceph3:/dev/sdb

ceph orch daemon add osd ceph3:/dev/sdc

ceph orch daemon add osd ceph3:/dev/sdd
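The nine commands above match the rows of the device list in 6.4.1 whose Available column is Yes. A sketch that derives them from that listing, assuming the Octopus column layout shown there, where the last column is Available (`osd_add_cmds` is an illustrative helper name):

```shell
#!/usr/bin/env bash
# Read `ceph orch device ls` output on stdin and print one
# "ceph orch daemon add osd host:device" command for each row whose
# last column is "Yes". The header row is skipped because its second
# field is not a /dev path.
osd_add_cmds() {
    awk '$NF == "Yes" && $2 ~ /^\/dev\// {
        printf "ceph orch daemon add osd %s:%s\n", $1, $2
    }'
}

# Demonstration with rows copied from the listing in 6.4.1.
osd_add_cmds <<'EOF'
Hostname Path Type Serial Size Health Ident Fault Available
ceph1 /dev/sdb hdd 21.4G Unknown N/A N/A Yes
ceph2 /dev/sdb hdd 21.4G Unknown N/A N/A Yes
EOF
```

Inside cephadm shell the helper would read live data with `ceph orch device ls | osd_add_cmds`; the printed commands are meant to be reviewed before they are run.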

6.4.3 Remove an OSD

ceph orch osd rm 3

6.4.4 Start and stop an OSD service

Use start, stop, or restart as the action, for example:

ceph orch daemon restart osd.3

6.5 RGW deployment

6.5.1 Create a realm

First create a realm

radosgw-admin realm create --rgw-realm=radosgw --default

The output is:

[ceph: root@ceph1 /]# radosgw-admin realm create --rgw-realm=radosgw --default

{

"id": "c4b75303-5ef6-4d82-a60c-efa4ceea2bc2",

"name": "radosgw",

"current_period": "d4267c01-b762-473b-bcf5-f278b1ea608a",

"epoch": 1

}

6.5.2 Create a zone group

Create a zone group

radosgw-admin zonegroup create --rgw-zonegroup=default --master --default

The output is:

[ceph: root@ceph1 /]# radosgw-admin zonegroup create --rgw-zonegroup=default --master --default

{

"id": "6a3dfbbf-3c09-4cc2-9644-1c17524ee4d1",

"name": "default",

"api_name": "default",

"is_master": "true",

"endpoints": [],

"hostnames": [],

"hostnames_s3website": [],

"master_zone": "",

"zones": [],

"placement_targets": [],

"default_placement": "",

"realm_id": "c4b75303-5ef6-4d82-a60c-efa4ceea2bc2",

"sync_policy": {

"groups": []

}

}

6.5.3 Create a zone

Create a zone

radosgw-admin zone create --rgw-zonegroup=default --rgw-zone=cn --master --default

The output is:

[ceph: root@ceph1 /]# radosgw-admin zone create --rgw-zonegroup=default --rgw-zone=cn --master --default

{

"id": "4531574f-a84b-464f-962b-7a29aad20080",

"name": "cn",

"domain_root": "cn.rgw.meta:root",

"control_pool": "cn.rgw.control",

"gc_pool": "cn.rgw.log:gc",

"lc_pool": "cn.rgw.log:lc",

"log_pool": "cn.rgw.log",

"intent_log_pool": "cn.rgw.log:intent",

"usage_log_pool": "cn.rgw.log:usage",

"roles_pool": "cn.rgw.meta:roles",

"reshard_pool": "cn.rgw.log:reshard",

"user_keys_pool": "cn.rgw.meta:users.keys",

"user_email_pool": "cn.rgw.meta:users.email",

"user_swift_pool": "cn.rgw.meta:users.swift",

"user_uid_pool": "cn.rgw.meta:users.uid",

"otp_pool": "cn.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "cn.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "cn.rgw.buckets.data"

}

},

"data_extra_pool": "cn.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"realm_id": "c4b75303-5ef6-4d82-a60c-efa4ceea2bc2"

}

6.5.4 Deploy radosgw

Deploy radosgw daemons for a specific realm and zone

ceph orch apply rgw radosgw cn --placement="3 ceph1 ceph2 ceph3"

6.5.5 Verify that the rgw container has started on each node

ceph orch ps --daemon-type rgw

The output is:

[ceph: root@ceph1 /]# ceph orch ps --daemon-type rgw

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

rgw.radosgw.cn.ceph1.jzxfhf ceph1 running (48s) 38s ago 48s 15.2.17 quay.io/ceph/ceph:v15 93146564743f f2b23eac68b0

rgw.radosgw.cn.ceph2.hdbbtx ceph2 running (55s) 42s ago 55s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 1a96776d7b0f

rgw.radosgw.cn.ceph3.arlfch ceph3 running (52s) 41s ago 52s 15.2.17 quay.io/ceph/ceph:v15 93146564743f ab23d7f7a46c

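6.6 MDS deployment

The Ceph file system, CephFS, is POSIX-compatible and is built on top of RADOS, Ceph's object store. See the CephFS documentation for background.

6.6.1 Create the data pool

Create a pool for CephFS data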

ceph osd pool create cephfs_data

6.6.2 Create the metadata pool

Create a pool for CephFS metadata

ceph osd pool create cephfs_metadata

6.6.3 Create the file system

Create a file system named cephfs:

ceph fs new cephfs cephfs_metadata cephfs_data

6.6.4 View the file system

List the file systems

ceph fs ls

Set the maximum number of MDS daemons for cephfs to 3

ceph fs set cephfs max_mds 3

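If there is only one MDS, set max_mds to 1. If the cluster cannot hold all the replicas, set the pool size to 1 (a single copy, with no redundancy)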

ceph fs set cephfs max_mds 1

ceph osd pool set cephfs_metadata size 1

ceph osd pool set cephfs_data size 1

6.6.5 Deploy three MDS daemons

Deploy MDS daemons on specific hosts

ceph orch apply mds cephfs --placement="3 ceph1 ceph2 ceph3"

6.6.6 Check that the MDS daemons were deployed

ceph orch ps --daemon-type mds

The output is:

[ceph: root@ceph1 /]# ceph orch ps --daemon-type mds

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

mds.cephfs.ceph1.gfvgda ceph1 running (10s) 4s ago 10s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 7f216bfe781f

mds.cephfs.ceph2.ytkxqc ceph2 running (15s) 6s ago 15s 15.2.17 quay.io/ceph/ceph:v15 93146564743f dec713c46919

mds.cephfs.ceph3.tszetu ceph3 running (13s) 6s ago 13s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 70ee41d1a81b

6.6.7 Check the file system status

ceph mds stat

The output is:

cephfs:3 {0=cephfs.ceph2.ytkxqc=up:active,1=cephfs.ceph3.tszetu=up:active,2=cephfs.ceph1.gfvgda=up:active}

Check the Ceph status

[ceph: root@ceph1 /]# ceph -s

cluster:

id: d20e3700-2d2f-11ee-9166-000c29aa07d2

health: HEALTH_WARN

insufficient standby MDS daemons available

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 24h)

mgr: ceph1.kxhbab(active, since 45m), standbys: ceph2.rovomy, ceph3.aseinm

mds: cephfs:3 {0=cephfs.ceph2.ytkxqc=up:active,1=cephfs.ceph3.tszetu=up:active,2=cephfs.ceph1.gfvgda=up:active}

osd: 9 osds: 9 up (since 22h), 9 in (since 22h)

rgw: 3 daemons active (radowgw.cn.ceph1.jzxfhf, radowgw.cn.ceph2.hdbbtx, radowgw.cn.ceph3.arlfch)

task status:

data:

pools: 9 pools, 233 pgs

objects: 269 objects, 14 KiB

usage: 9.2 GiB used, 171 GiB / 180 GiB avail

pgs: 233 active+clean

io:

client: 1.2 KiB/s rd, 1 op/s rd, 0 op/s wr

6.6.8 Mount the file system

Mount the file system as admin

mount.ceph ceph1:6789,ceph2:6789,ceph3:6789:/ /mnt/cephfs -o name=admin

Create a user cephfs for client access to CephFS

ceph auth get-or-create client.cephfs mon 'allow r' mds 'allow r, allow rw path=/' osd 'allow rw pool=cephfs_data' -o ceph.client.cephfs.keyring

Inspect the generated ceph.client.cephfs.keyring key file, or print the key with the following command:

ceph auth get-key client.cephfs

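Mount CephFS on a local directory on each node

Run on every node:

mkdir -p /mnt/cephfs/

mount -t ceph ceph1:6789,ceph2:6789,ceph3:6789:/ /mnt/cephfs/ -o name=cephfs,secret=<key of the cephfs user>

To mount at boot, edit /etc/fstab on each node and add the following line (secretfile takes the path of a file that contains the key, not the key itself):

ceph1:6789,ceph2:6789,ceph3:6789:/ /mnt/cephfs ceph name=cephfs,secretfile=<path to a file containing the cephfs user's key>,noatime,_netdev 0 2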
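The fstab entry is built from four values: the monitor list, the mount point, the client name, and the path of a secret file. A sketch that assembles the line from those values; the secret file path /etc/ceph/cephfs.secret is an assumption made for illustration, and `fstab_line` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Build a CephFS /etc/fstab entry.
# Arguments: monitor list, mount point, client name, secret file path.
fstab_line() {
    printf '%s:/ %s ceph name=%s,secretfile=%s,noatime,_netdev 0 2\n' \
        "$1" "$2" "$3" "$4"
}

# /etc/ceph/cephfs.secret is an assumed location for the key file.
fstab_line ceph1:6789,ceph2:6789,ceph3:6789 /mnt/cephfs cephfs /etc/ceph/cephfs.secret
```

The secret file itself could be produced with `ceph auth get-key client.cephfs > /etc/ceph/cephfs.secret` followed by `chmod 600 /etc/ceph/cephfs.secret`, so that the key does not appear in /etc/fstab.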

6.7 Deploy the iSCSI gateway

6.7.1 Create a pool

ceph osd pool create iscsi_pool

ceph osd pool application enable iscsi_pool rbd

6.7.2 Deploy the iSCSI gateway

ceph orch apply iscsi iscsi_pool admin admin --placement="1 ceph1"

6.7.3 List hosts and daemons

ceph orch ps --daemon_type=iscsi

The output is:

[ceph: root@ceph1 /]# ceph orch ps --daemon_type=iscsi

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

iscsi.iscsi.ceph1.dszfku ceph1 running (11s) 2s ago 12s 3.5 quay.io/ceph/ceph:v15 93146564743f b4395ebd49b6

6.7.4 Remove the iSCSI gateway

List the services

[ceph: root@ceph1 /]# ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 4m ago 5h count:1 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f

crash 3/3 12m ago 5h * quay.io/ceph/ceph:v15 93146564743f

grafana 1/1 4m ago 5h count:1 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646

iscsi.iscsi 1/1 4m ago 4m ceph1;count:1 quay.io/ceph/ceph:v15 93146564743f

mds.cephfs 3/3 12m ago 4h ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

mgr 3/3 12m ago 5h ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

mon 3/3 12m ago 23h ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

node-exporter 3/3 12m ago 5h * quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf

osd.None 9/0 12m ago - <unmanaged> quay.io/ceph/ceph:v15 93146564743f

prometheus 1/1 4m ago 5h count:1 quay.io/prometheus/prometheus:v2.18.1 de242295e225

rgw.radowgw.cn 3/3 12m ago 5h ceph1;ceph2;ceph3;count:3 quay.io/ceph/ceph:v15 93146564743f

Remove the iSCSI gateway

ceph orch rm iscsi.iscsi

7. Troubleshooting notes

7.1 The file system does not start

Checking the file system status with ceph mds stat and ceph health detail gives:

HEALTH_ERR 1 filesystem is offline; 1 filesystem is online with fewer MDS than max_mds

[ERR] MDS_ALL_DOWN: 1 filesystem is offline

fs cephfs is offline because no MDS is active for it.

[WRN] MDS_UP_LESS_THAN_MAX: 1 filesystem is online with fewer MDS than max_mds

fs cephfs has 0 MDS online, but wants 1

Use ceph orch ls to check whether the MDS has been deployed and has started. If it has not been deployed, deploy it with:

ceph orch apply mds cephfs --placement="1 ceph1"

7.2 File system mount fails with mount error 2 = No such file or directory

ceph mds stat shows that the file system is still being created:

cephfs:1 {0=cephfs.ceph1.npyywh=up:creating}

ceph -s shows:

cluster:

id: dd6e5410-221f-11ee-b47c-000c29fd771a

health: HEALTH_WARN

1 MDSs report slow metadata IOs

Reduced data availability: 64 pgs inactive

Degraded data redundancy: 65 pgs undersized

services:

mon: 1 daemons, quorum ceph1 (age 15h)

mgr: ceph1.vtottx(active, since 15h)

mds: cephfs:1 {0=cephfs.ceph1.npyywh=up:creating}

osd: 3 osds: 3 up (since 15h), 3 in (since 15h); 1 remapped pgs

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs: 98.462% pgs not active

64 undersized+peered

1 active+undersized+remapped

The following problems are reported:

1 MDSs report slow metadata IOs

Reduced data availability: 64 pgs inactive

Degraded data redundancy: 65 pgs undersized

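This is a single-node cluster, so the PGs cannot satisfy the three-replica requirement. Set max_mds to 1 and set the size of cephfs_metadata and cephfs_data to 1; apply the same size to the other pools.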

ceph fs set cephfs max_mds 1

ceph osd pool set cephfs_metadata size 1

ceph osd pool set cephfs_data size 1

ceph mds stat now returns:

cephfs:1 {0=cephfs.ceph1.npyywh=up:active}

The state is active and the file system can be mounted normally.

7.3 /var/log/tallylog is either world writable or not a normal file

Installing the cephadm-15.2.17-0.el7.noarch.rpm package fails with:

pam_tally2: /var/log/tallylog is either world writable or not a normal file

pam_tally2: Authentication error

Run the following commands to fix it:

chmod 600 /var/log/tallylog

pam_tally2 --user root --reset

7.4 RuntimeError: uid/gid not found

Running cephadm shell fails with:

Inferring fsid c8fb20bc-247c-11ee-a39c-000c29aa07d2

Inferring config /var/lib/ceph/c8fb20bc-247c-11ee-a39c-000c29aa07d2/mon.node1/config

Using recent ceph image quay.io/ceph/ceph@<none>

Non-zero exit code 125 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint stat -e CONTAINER_IMAGE=quay.io/ceph/ceph@<none> -e NODE_NAME=node1 quay.io/ceph/ceph@<none> -c %u %g /var/lib/ceph

stat: stderr /usr/bin/docker: invalid reference format.

stat: stderr See '/usr/bin/docker run --help'.

Traceback (most recent call last):

File "/usr/sbin/cephadm", line 6250, in <module>

r = args.func()

File "/usr/sbin/cephadm", line 1381, in _infer_fsid

return func()

File "/usr/sbin/cephadm", line 1412, in _infer_config

return func()

File "/usr/sbin/cephadm", line 1440, in _infer_image

return func()

File "/usr/sbin/cephadm", line 3573, in command_shell

make_log_dir(args.fsid)

File "/usr/sbin/cephadm", line 1538, in make_log_dir

uid, gid = extract_uid_gid()

File "/usr/sbin/cephadm", line 2155, in extract_uid_gid

raise RuntimeError('uid/gid not found')

RuntimeError: uid/gid not found

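Analysis showed that the cephadm shell command starts a Ceph container. Because the installation is offline and the image was imported with docker load, the image has lost its RepoDigest information, so the container cannot be started.

The fix is to create a local registry with docker registry, push the image to it, and then pull the image back so that the RepoDigest information is filled in (on all hosts). The host name entry for the registry must also be placed first in /etc/hosts so that it always resolves.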
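Whether an image still lacks its digest can be checked before running cephadm shell. The sketch below assumes the digest list is read with `docker image inspect --format '{{json .RepoDigests}}' <image>` and treats an empty list or null as missing (`has_repo_digest` is an illustrative helper name):

```shell
#!/usr/bin/env bash
# Argument: the JSON text printed by
#   docker image inspect --format '{{json .RepoDigests}}' <image>
# Succeeds only when at least one digest is listed.
has_repo_digest() {
    case "$1" in
        ''|null|'[]') return 1 ;;
        *) return 0 ;;
    esac
}

# Demonstration with the value seen for an image that has no digests.
if has_repo_digest '[]'; then
    echo "digest present"
else
    echo "digest missing: push to the local registry and pull again"
fi
```

On a host with Docker the argument would come from the inspect command itself, for example `has_repo_digest "$(docker image inspect --format '{{json .RepoDigests}}' quay.io/ceph/ceph:v15)"`.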

7.5 iptables: No chain/target/match by that name.

Running docker restart registry fails with:

Error response from daemon: Cannot restart container registry: driver failed programming external connectivity on endpoint registry (dd9a9c15a451daa6abd2b85e840d7856c5c5f98c1fb1ae35897d3fbb28e2997c): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 4000 -j DNAT --to-destination 172.17.0.2:5000 ! -i docker0: iptables: No chain/target/match by that name.

(exit status 1))

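Kylin uses firewalld in place of iptables. firewalld was running when the container was first started, so the iptables rules were in use; firewalld was stopped afterwards, and the iptables entries could no longer be found. The fix is to restart Docker so that it refreshes the containers and their rules: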

systemctl restart docker

7.6 /usr/bin/ceph: timeout after 60 seconds

Running cephadm bootstrap

fails as follows:

cephadm bootstrap --mon-ip 172.25.0.141

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: 65ae4594-2d2c-11ee-b17b-000c29aa07d2

Verifying IP 172.25.0.141 port 3300 ...

Verifying IP 172.25.0.141 port 6789 ...

Mon IP 172.25.0.141 is in CIDR network 172.25.0.0/24

Pulling container image quay.io/ceph/ceph:v15...

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

/usr/bin/ceph: timeout after 60 seconds

Non-zero exit code -9 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint /usr/bin/ceph -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 status

mon not available, waiting (1/10)...

/usr/bin/ceph: timeout after 60 seconds

Non-zero exit code -9 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint /usr/bin/ceph -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 status

mon not available, waiting (2/10)...

/usr/bin/ceph: timeout after 60 seconds

Non-zero exit code -9 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint /usr/bin/ceph -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 status

mon not available, waiting (3/10)...

/usr/bin/ceph: timeout after 60 seconds

Non-zero exit code -9 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint /usr/bin/ceph -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 status

mon not available, waiting (4/10)...

/usr/bin/ceph: timeout after 60 seconds

Non-zero exit code -9 from /usr/bin/docker run --rm --ipc=host --net=host --entrypoint /usr/bin/ceph -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 status

mon not available, waiting (5/10)...

Then run the container manually:

docker run -it --ipc=host --net=host -e CONTAINER_IMAGE=quay.io/ceph/ceph:v15 -e NODE_NAME=ceph1 -v /var/lib/ceph/65ae4594-2d2c-11ee-b17b-000c29aa07d2/mon.ceph1:/var/lib/ceph/mon/ceph-ceph1:z -v /tmp/ceph-tmpuylyqrau:/etc/ceph/ceph.client.admin.keyring:z -v /tmp/ceph-tmp85t1j6sg:/etc/ceph/ceph.conf:z quay.io/ceph/ceph:v15 bash

Inside the bash session, run ceph -s, which shows the following errors:

[root@ceph1 /]# ceph -s

2023-07-28T10:01:34.083+0000 7f0dc4f41700 0 monclient(hunting): authenticate timed out after 300

2023-07-28T10:06:34.087+0000 7f0dc4f41700 0 monclient(hunting): authenticate timed out after 300

2023-07-28T10:11:34.087+0000 7f0dc4f41700 0 monclient(hunting): authenticate timed out after 300

According to related material, this is a DNS resolution problem. Edit /etc/resolv.conf and add a DNS server:

vim /etc/resolv.conf

nameserver 223.5.5.5

This resolved the problem.

7.7 ERROR: Cannot infer an fsid, one must be specified

Running ceph -s fails with:

ERROR: Cannot infer an fsid, one must be specified: ['65ae4594-2d2c-11ee-b17b-000c29aa07d2', '70eb8f7c-2d2f-11ee-8265-000c29aa07d2', 'd20e3700-2d2f-11ee-9166-000c29aa07d2']

This is caused by several failed deployments. Remove the fsids of the failed clusters:

cephadm rm-cluster --fsid 65ae4594-2d2c-11ee-b17b-000c29aa07d2 --force

cephadm rm-cluster --fsid 70eb8f7c-2d2f-11ee-8265-000c29aa07d2 --force
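cephadm keeps each cluster's data under /var/lib/ceph/<fsid>, which is where the fsids in the error message come from. A sketch that lists every fsid except the one to keep and prints the matching cleanup commands for review (`stale_fsids` is an illustrative helper name):

```shell
#!/usr/bin/env bash
# Print the fsid directories found under a cephadm data directory,
# except the fsid that should be kept.
# Arguments: fsid to keep, data directory (default: /var/lib/ceph).
stale_fsids() {
    local keep="$1" dir="${2:-/var/lib/ceph}" path
    for path in "$dir"/*-*-*-*-*; do
        [ -d "$path" ] || continue
        [ "${path##*/}" = "$keep" ] || echo "${path##*/}"
    done
}

# Dry run: only print the rm-cluster commands; the fsid kept here is
# the one of the working cluster from section 6.2.
for fsid in $(stale_fsids d20e3700-2d2f-11ee-9166-000c29aa07d2); do
    echo "cephadm rm-cluster --fsid $fsid --force"
done
```

Since rm-cluster deletes the cluster's data on that host, the printed commands are worth checking against `ceph -s` output from the working cluster before they are run.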

7.8 2 failed cephadm daemon(s), daemon node-exporter.ceph2 on ceph2 is in error state

ceph -s reports 2 failed cephadm daemon(s):

[ceph: root@ceph1 /]# ceph -s

cluster:

id: d20e3700-2d2f-11ee-9166-000c29aa07d2

health: HEALTH_WARN

2 failed cephadm daemon(s)

Degraded data redundancy: 1 pg undersized

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 9m)

mgr: ceph1.kxhbab(active, since 6h), standbys: ceph3.aseinm

osd: 3 osds: 3 up (since 42m), 3 in (since 42m); 1 remapped pgs

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs: 1 active+undersized+remapped

Check with ceph health detail:

HEALTH_WARN 2 failed cephadm daemon(s); Degraded data redundancy: 1 pg undersized

[WRN] CEPHADM_FAILED_DAEMON: 2 failed cephadm daemon(s)

daemon node-exporter.ceph2 on ceph2 is in error state

daemon node-exporter.ceph3 on ceph3 is in error state

[WRN] PG_DEGRADED: Degraded data redundancy: 1 pg undersized

pg 1.0 is stuck undersized for 44m, current state active+undersized+remapped, last acting [1,0]

The problem is with node-exporter on ceph2 and ceph3. Check again with ceph orch ps:

[ceph: root@ceph1 /]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.ceph1 ceph1 running (17m) 6m ago 6h 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f f640d60309a8

crash.ceph1 ceph1 running (6h) 6m ago 6h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 167923df16d6

crash.ceph2 ceph2 running (45m) 6m ago 45m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 36930ffad980

crash.ceph3 ceph3 running (7h) 6m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 3cf33326be8f

grafana.ceph1 ceph1 running (66m) 6m ago 6h 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 a9f1cd6dd382

mgr.ceph1.kxhbab ceph1 running (6h) 6m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f c738e894f955

mgr.ceph3.aseinm ceph3 running (7h) 6m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 2657cef61946

mon.ceph1 ceph1 running (6h) 6m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f ac8d2bf766d9

mon.ceph2 ceph2 running (45m) 6m ago 45m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 30fc64339e92

mon.ceph3 ceph3 running (7h) 6m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f b98335e1b0e1

node-exporter.ceph1 ceph1 running (66m) 6m ago 6h 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 7bb7cc89e4bf

node-exporter.ceph2 ceph2 error 6m ago 7h <unknown> quay.io/prometheus/node-exporter:v0.18.1 <unknown> <unknown>

node-exporter.ceph3 ceph3 error 6m ago 7h <unknown> quay.io/prometheus/node-exporter:v0.18.1 <unknown> <unknown>

osd.0 ceph1 running (45m) 6m ago 45m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 56418e7d64a2

osd.1 ceph1 running (45m) 6m ago 45m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 04be93b74209

osd.2 ceph1 running (45m) 6m ago 45m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 15ca4a2a1acc

prometheus.ceph1 ceph1 running (17m) 6m ago 6h 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 8dfaf3f5b9c2

node-exporter on ceph2 and ceph3 is in the error state, so restart both daemons:

ceph orch daemon restart node-exporter.ceph2

ceph orch daemon restart node-exporter.ceph3
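With more than a couple of failed daemons, picking the names out of ceph orch ps by eye gets tedious. The following is a minimal sketch, assuming the column layout shown in the output above (NAME first, STATUS third). The function names failed_daemons and restart_cmds are hypothetical helpers, not part of Ceph, and restart_cmds only prints the restart commands rather than running them.

```shell
#!/bin/sh
# failed_daemons: read `ceph orch ps` output on stdin and print the NAME of
# every daemon whose STATUS column (third field) is "error" or "unknown".
# Assumes the whitespace-separated layout shown in this article.
failed_daemons() {
    awk '$3 == "error" || $3 == "unknown" { print $1 }'
}

# restart_cmds: turn the failed daemon names into restart commands.
# The commands are only printed, so they can be reviewed before running.
restart_cmds() {
    failed_daemons | while read -r name; do
        printf 'ceph orch daemon restart %s\n' "$name"
    done
}
```

Usage: run ceph orch ps | restart_cmds inside the cephadm shell, review the printed commands, then pipe the same output to sh to execute them.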

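After the restart the failed-daemon warning is gone. The cluster still reports HEALTH_WARN, but only for the undersized PG, which is a separate issue from node-exporter: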

[ceph: root@ceph1 /]# ceph -s

cluster:

id: d20e3700-2d2f-11ee-9166-000c29aa07d2

health: HEALTH_WARN

Degraded data redundancy: 1 pg undersized

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 22m)

mgr: ceph1.kxhbab(active, since 6h), standbys: ceph3.aseinm

osd: 3 osds: 3 up (since 56m), 3 in (since 56m); 1 remapped pgs

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs: 1 active+undersized+remapped

7.8 1 failed cephadm daemon(s), daemon osd.3 on ceph2 is in unknown state

Running ceph -s reports 1 failed cephadm daemon(s):

[ceph: root@ceph1 /]# ceph -s

cluster:

id: d20e3700-2d2f-11ee-9166-000c29aa07d2

health: HEALTH_WARN

1 failed cephadm daemon(s)

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 49m)

mgr: ceph1.kxhbab(active, since 6h), standbys: ceph3.aseinm

osd: 9 osds: 8 up (since 116s), 8 in (since 116s)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 8.0 GiB used, 152 GiB / 160 GiB avail

pgs: 1 active+clean

Check the details with ceph health detail:

[ceph: root@ceph1 /]# ceph health detail

HEALTH_WARN 1 failed cephadm daemon(s)

[WRN] CEPHADM_FAILED_DAEMON: 1 failed cephadm daemon(s)

daemon osd.3 on ceph2 is in unknown state

The failing daemon is osd.3 on ceph2. Check again with ceph orch ps:

[ceph: root@ceph1 /]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.ceph1 ceph1 running (53m) 2m ago 7h 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f f640d60309a8

crash.ceph1 ceph1 running (6h) 2m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 167923df16d6

crash.ceph2 ceph2 running (82m) 77s ago 82m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 36930ffad980

crash.ceph3 ceph3 running (7h) 31s ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 3cf33326be8f

grafana.ceph1 ceph1 running (103m) 2m ago 7h 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 a9f1cd6dd382

mgr.ceph1.kxhbab ceph1 running (6h) 2m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f c738e894f955

mgr.ceph3.aseinm ceph3 running (7h) 31s ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 2657cef61946

mon.ceph1 ceph1 running (6h) 2m ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f ac8d2bf766d9

mon.ceph2 ceph2 running (82m) 77s ago 82m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 30fc64339e92

mon.ceph3 ceph3 running (7h) 31s ago 7h 15.2.17 quay.io/ceph/ceph:v15 93146564743f b98335e1b0e1

node-exporter.ceph1 ceph1 running (103m) 2m ago 7h 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 7bb7cc89e4bf

node-exporter.ceph2 ceph2 running (34m) 77s ago 8h 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 9cde6dd53b22

node-exporter.ceph3 ceph3 running (33m) 31s ago 8h 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf 106d248979bc

osd.0 ceph1 running (82m) 2m ago 82m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 56418e7d64a2

osd.1 ceph1 running (81m) 2m ago 81m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 04be93b74209

osd.2 ceph1 running (81m) 2m ago 81m 15.2.17 quay.io/ceph/ceph:v15 93146564743f 15ca4a2a1acc

osd.3 ceph2 unknown 77s ago 2m <unknown> quay.io/ceph/ceph:v15 <unknown> <unknown>

osd.4 ceph2 running (98s) 77s ago 100s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 02800b38df89

osd.5 ceph2 running (82s) 77s ago 84s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 05ce4a9c9588

osd.6 ceph3 running (60s) 31s ago 61s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 0d22f79c41ab

osd.7 ceph3 running (45s) 31s ago 47s 15.2.17 quay.io/ceph/ceph:v15 93146564743f a80a852550e3

osd.8 ceph3 running (32s) 31s ago 33s 15.2.17 quay.io/ceph/ceph:v15 93146564743f 51ebec72bb3f

prometheus.ceph1 ceph1 running (53m) 2m ago 7h 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 8dfaf3f5b9c2

osd.3 on ceph2 is in the unknown state.

Check the OSD status with ceph osd tree:

[ceph: root@ceph1 /]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.15588 root default

-3 0.05846 host ceph1

0 hdd 0.01949 osd.0 up 1.00000 1.00000

1 hdd 0.01949 osd.1 up 1.00000 1.00000

2 hdd 0.01949 osd.2 up 1.00000 1.00000

-5 0.03897 host ceph2

4 hdd 0.01949 osd.4 up 1.00000 1.00000

5 hdd 0.01949 osd.5 up 1.00000 1.00000

-7 0.05846 host ceph3

6 hdd 0.01949 osd.6 up 1.00000 1.00000

7 hdd 0.01949 osd.7 up 1.00000 1.00000

8 hdd 0.01949 osd.8 up 1.00000 1.00000

3 0 osd.3 down 0 1.00000
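On a larger cluster the down entry is easy to miss in the tree. A small sketch for filtering it out, assuming the ceph osd tree layout shown above, where the STATUS column directly follows the osd.N name. The helper name down_osds is hypothetical.

```shell
#!/bin/sh
# down_osds: read `ceph osd tree` output on stdin and print every OSD name
# whose STATUS is "down". It looks for a field of the form osd.<number>
# immediately followed by the word "down", so it does not depend on how many
# columns precede the name (osd.3 above has no CLASS column, for example).
down_osds() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) == "down")
                print $i
    }'
}
```

Usage: ceph osd tree | down_osds prints osd.3 for the output shown above.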

osd.3 is down with a CRUSH weight of 0, so restart the daemon:

ceph orch daemon restart osd.3

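After waiting a while, ceph orch ps shows osd.3 in the error state.

The restart did not help, so remove the OSD and redeploy it. First log in to ceph2 and reinitialize the disk so that it can be used for an OSD again: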

dmsetup rm ceph--89a94f3b--e5ef--4ec9--b828--d86ea84d6540-osd--block--e4a8e9c8--20e6--4847--8176--510533616844

vgremove ceph-89a94f3b-e5ef-4ec9-b828-d86ea84d6540

pvremove /dev/sdb

mkfs.xfs -f /dev/sdb
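The long name passed to dmsetup rm above is not arbitrary. Device-mapper names for LVM volumes have the form <vg>-<lv>, with every hyphen inside the VG name or LV name doubled so that the single separating hyphen stays unambiguous. The sketch below rebuilds the mapping name from the two parts. The helper name lvm_dm_name is hypothetical, and the LV name used in the usage note is inferred from the mapping name in this article rather than shown in the original output.

```shell
#!/bin/sh
# lvm_dm_name VG LV: print the device-mapper name LVM uses for the logical
# volume LV in volume group VG. Hyphens inside each name are doubled, then
# the two escaped names are joined with a single hyphen.
lvm_dm_name() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '%s-%s\n' "$vg" "$lv"
}
```

Usage: lvm_dm_name ceph-89a94f3b-e5ef-4ec9-b828-d86ea84d6540 osd-block-e4a8e9c8-20e6-4847-8176-510533616844 prints the mapping name removed above. On another host, the VG and LV names can be listed with lvs -o vg_name,lv_name.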

Then, on ceph1, remove the old OSD and add it again:

ceph osd rm 3

ceph orch daemon rm osd.3 --force

ceph orch daemon add osd ceph2:/dev/sdb

After the redeployment the cluster is healthy. The disk probably ran into some problem during the first deployment:

[ceph: root@ceph1 /]# ceph -s

cluster:

id: d20e3700-2d2f-11ee-9166-000c29aa07d2

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 16h)

mgr: ceph1.kxhbab(active, since 22h), standbys: ceph3.aseinm

osd: 9 osds: 9 up (since 15h), 9 in (since 15h)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 9.1 GiB used, 171 GiB / 180 GiB avail

pgs: 1 active+clean
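To confirm a redeployment like this from a script rather than by reading the status by hand, the osd line of ceph -s can be checked. This is a minimal sketch, assuming the line format shown in the outputs above ("osd: N osds: U up (...), I in (...)"). The helper name osds_all_up is hypothetical.

```shell
#!/bin/sh
# osds_all_up: read `ceph -s` output on stdin and succeed (exit status 0)
# only if the "osd:" line reports every OSD as both up and in.
osds_all_up() {
    awk '$1 == "osd:" {
        total = $2                      # "osd: 9 osds: ..." -> 9
        up = $4                         # "... osds: 8 up"   -> 8
        for (i = 5; i <= NF; i++)       # number right before the word "in"
            if ($i == "in") nin = $(i - 1)
        found = 1
        exit                            # jump to END after the first osd line
    }
    END { exit !(found && up == total && nin == total) }'
}
```

Usage: ceph -s | osds_all_up && echo "all OSDs up and in". For the 7.8 output with 8 of 9 OSDs up the function fails, and for the final output above it succeeds.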

8. References:

https://docs.ceph.com/en/latest/cephadm/

https://docs.ceph.com/en/latest/cephfs/

https://blog.csdn.net/DoloresOOO/article/details/106855093

https://blog.csdn.net/XU_sun_/article/details/119909860

https://blog.csdn.net/networken/article/details/106870859

https://zhuanlan.zhihu.com/p/598832268

https://www.jianshu.com/p/b2aab379d7ec

https://blog.csdn.net/JineD/article/details/113886368

http://dbaselife.com/doc/752/

http://www.chenlianfu.com/?p=3388

http://mirrors.163.com/ceph/rpm-octopus/el7/noarch/

https://blog.csdn.net/qq_27979109/article/details/120345676#3mondocker_runcephconf_718

https://cloud-atlas.readthedocs.io/zh_CN/latest/ceph/deploy/install_mobile_cloud_ceph/debug_ceph_authenticate_time_out.html

https://access.redhat.com/documentation/zh-cn/red_hat_ceph_storage/5/html/operations_guide/introduction-to-the-ceph-orchestrator