文章目录

- 实验要求

- 数据集定义

- 1 手动实现前馈神经网络解决上述回归、二分类、多分类任务

- 1.1手动实现前馈网络-回归任务

- 1.2 手动实现前馈网络-二分类任务

- 1.3 手动实现前馈网络-多分类

- 1.4 实验结果分析

- 2 利用torch.nn实现前馈神经网络解决上述回归、二分类、多分类任务

- 2.1 torch.nn实现前馈网络-回归任务

- 2.2 torch.nn实现前馈网络-二分类

- 2.3 torch.nn实现前馈网络-多分类任务

- 2.4 实验结果分析

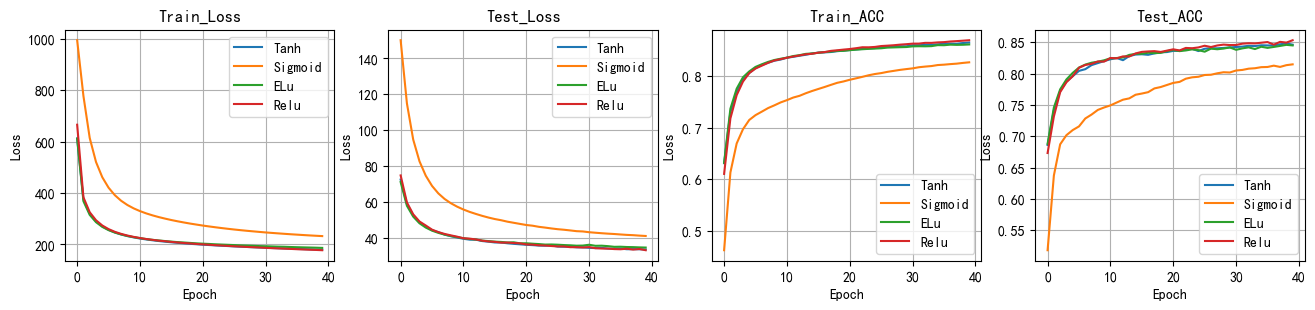

- 3 在多分类任务中使用至少三种不同的激活函数

- 3.1 使用Tanh激活函数

- 3.2 使用Sigmoid激活函数

- 3.3 使用ELU激活函数

- 3.4 实验结果分析

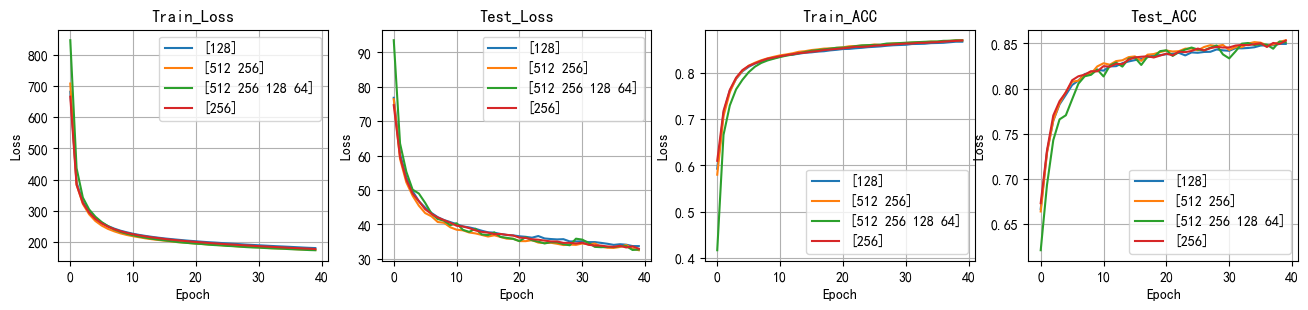

- 4 多分类任务中的模型评估隐藏层层数和隐藏单元个数对实验结果的影响

- 4.1 一个隐藏层,神经元个数为[128]

- 4.2 两个隐藏层,神经元个数分别为[512,256]

- 4.3 四个隐藏层,神经元个数分别为[512,256,128,64]

- 4.4 实验结果分析

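Experiment requirements

Implement feedforward neural networks by hand to solve the regression, binary classification, and multi-class classification tasks defined below

- Analyze the results and plot the training and test loss curves

Implement feedforward neural networks with torch.nn to solve the same regression, binary classification, and multi-class classification tasks

- Analyze the results and plot the training and test loss curves

Building on the multi-class experiment, use at least three different activation functions

- Compare the results obtained with the different activation functions

In the multi-class experiment, evaluate how the number of hidden layers and the number of hidden units affect the results

- Run comparison experiments with different numbers of hidden layers and hidden units, and analyze the results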

Dataset definition

import time
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torchvision
from torch.nn.functional import cross_entropy, binary_cross_entropy
from torch.nn import CrossEntropyLoss
from torchvision import transforms
from sklearn import metrics

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # use the GPU when available to speed up computation
print(f'Current device: {device}')

# Dataset definition

# Regression dataset: traindataloader1, testdataloader1
data_num, train_num, test_num = 10000, 7000, 3000  # total samples, training samples, test samples
true_w, true_b = 0.0056 * torch.ones(500, 1), 0.028
features = torch.randn(data_num, 500)  # standard-normal features
labels = torch.matmul(features, true_w) + true_b
labels += torch.tensor(np.random.normal(0, 0.01, size=labels.size()), dtype=torch.float32)  # add Gaussian noise
# Split into training and test sets
train_features, test_features = features[:train_num, :], features[train_num:, :]
train_labels, test_labels = labels[:train_num], labels[train_num:]
batch_size = 128
traindataset1 = torch.utils.data.TensorDataset(train_features, train_labels)
testdataset1 = torch.utils.data.TensorDataset(test_features, test_labels)
traindataloader1 = torch.utils.data.DataLoader(dataset=traindataset1, batch_size=batch_size, shuffle=True)
testdataloader1 = torch.utils.data.DataLoader(dataset=testdataset1, batch_size=batch_size, shuffle=False)

# Binary classification dataset: traindataloader2, testdataloader2
data_num, train_num, test_num = 10000, 7000, 3000  # samples per class, training samples per class, test samples per class
# Class 1: Gaussian with mean 0.2 and standard deviation 2
features1 = torch.normal(mean=0.2, std=2, size=(data_num, 200), dtype=torch.float32)
labels1 = torch.ones(data_num)
# Class 0: Gaussian with mean -0.2 and standard deviation 2
features2 = torch.normal(mean=-0.2, std=2, size=(data_num, 200), dtype=torch.float32)
labels2 = torch.zeros(data_num)
# Training set
train_features2 = torch.cat((features1[:train_num], features2[:train_num]), dim=0)  # torch.Size([14000, 200])
train_labels2 = torch.cat((labels1[:train_num], labels2[:train_num]), dim=-1)  # torch.Size([14000])
# Test set
test_features2 = torch.cat((features1[train_num:], features2[train_num:]), dim=0)  # torch.Size([6000, 200])
test_labels2 = torch.cat((labels1[train_num:], labels2[train_num:]), dim=-1)  # torch.Size([6000])
batch_size = 128
# Build the training and test datasets
traindataset2 = torch.utils.data.TensorDataset(train_features2, train_labels2)
testdataset2 = torch.utils.data.TensorDataset(test_features2, test_labels2)
traindataloader2 = torch.utils.data.DataLoader(dataset=traindataset2, batch_size=batch_size, shuffle=True)
testdataloader2 = torch.utils.data.DataLoader(dataset=testdataset2, batch_size=batch_size, shuffle=False)

# Multi-class dataset (FashionMNIST): traindataloader3, testdataloader3
batch_size = 128
# Build the training and test datasets
traindataset3 = torchvision.datasets.FashionMNIST(root="../data",
                                                  train=True,
                                                  download=True,
                                                  transform=transforms.ToTensor())
testdataset3 = torchvision.datasets.FashionMNIST(root="../data",
                                                 train=False,
                                                 download=True,
                                                 transform=transforms.ToTensor())
traindataloader3 = torch.utils.data.DataLoader(traindataset3, batch_size=batch_size, shuffle=True)
testdataloader3 = torch.utils.data.DataLoader(testdataset3, batch_size=batch_size, shuffle=False)

# Plotting helper
def picture(name, trainl, testl, type='Loss'):
    plt.rcParams["font.sans-serif"] = ["SimHei"]  # font setting (only needed for CJK labels)
    plt.rcParams["axes.unicode_minus"] = False  # render the minus sign correctly with this font
    plt.title(name)  # subplot title
    plt.plot(trainl, c='g', label='Train ' + type)
    plt.plot(testl, c='r', label='Test ' + type)
    plt.xlabel('Epoch')
    plt.ylabel(type)  # 'Loss' or 'ACC', matching the plotted curves
    plt.legend()
    plt.grid(True)

print(f'Regression dataset: {len(traindataset1) + len(testdataset1)} samples in total, {len(traindataset1)} training, {len(testdataset1)} test')
print(f'Binary classification dataset: {len(traindataset2) + len(testdataset2)} samples in total, {len(traindataset2)} training, {len(testdataset2)} test')
print(f'Multi-class dataset: {len(traindataset3) + len(testdataset3)} samples in total, {len(traindataset3)} training, {len(testdataset3)} test')

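Current device: cuda

Regression dataset: 10000 samples in total, 7000 training, 3000 test

Binary classification dataset: 20000 samples in total, 14000 training, 6000 test

Multi-class dataset: 70000 samples in total, 60000 training, 10000 test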
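The regression loss values reported later can be sanity-checked against the way the labels were generated. Each label is y = Σ 0.0056·x_j + 0.028 + ε over 500 standard-normal features, with noise standard deviation 0.01, so Var(y) = 500·0.0056² + 0.01² ≈ 0.0158. A freshly initialized network has small random weights and outputs values close to 0. Its MSE should therefore be roughly E[y²] ≈ 0.0166 per batch, or about 0.91 summed over the 55 training batches, which is in line with the 0.90 logged at epoch 1 of section 1.1. The arithmetic is sketched below. Treating the initial output as exactly 0 is a simplifying assumption.

```python
import math

# Values copied from the regression dataset definition above
num_features, w, b, noise_std = 500, 0.0056, 0.028, 0.01
train_num, batch_size = 7000, 128

# The 500 terms w * x_j and the noise are independent, so their variances add
label_var = num_features * w ** 2 + noise_std ** 2
# MSE of always predicting 0 is E[y^2] = Var(y) + E[y]^2 (simplifying assumption)
mse_of_zero = label_var + b ** 2
# A DataLoader without drop_last yields ceil(N / batch_size) batches per epoch
num_batches = math.ceil(train_num / batch_size)

print(f"Var(y) = {label_var:.5f}")
print(f"per-batch MSE of predicting 0 = {mse_of_zero:.6f}")
print(f"summed over {num_batches} batches = {num_batches * mse_of_zero:.3f}")
```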

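1 Implementing feedforward neural networks by hand for the regression, binary classification, and multi-class classification tasks

1.1 Manual feedforward network: regression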

# Define a hand-written feedforward neural network
class MyNet1():
    def __init__(self):
        # Numbers of input, hidden, and output units
        num_inputs, num_hiddens, num_outputs = 500, 256, 1
        w_1 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_inputs)), dtype=torch.float32, requires_grad=True)
        b_1 = torch.zeros(num_hiddens, dtype=torch.float32, requires_grad=True)
        w_2 = torch.tensor(np.random.normal(0, 0.01, (num_outputs, num_hiddens)), dtype=torch.float32, requires_grad=True)
        b_2 = torch.zeros(num_outputs, dtype=torch.float32, requires_grad=True)
        self.params = [w_1, b_1, w_2, b_2]
        # Model structure
        self.input_layer = lambda x: x.view(x.shape[0], -1)
        self.hidden_layer = lambda x: self.my_relu(torch.matmul(x, w_1.t()) + b_1)  # ReLU is applied here
        self.output_layer = lambda x: torch.matmul(x, w_2.t()) + b_2

    def my_relu(self, x):
        # Element-wise max(x, 0)
        return torch.max(input=x, other=torch.tensor(0.0))

    def forward(self, x):
        x = self.input_layer(x)
        x = self.hidden_layer(x)  # hidden_layer already applies ReLU, so no second call is needed
        x = self.output_layer(x)
        return x

def mySGD(params, lr, batchsize):
    # Note: mse() already averages over the batch, so dividing by batchsize again
    # makes the effective learning rate lr / batchsize
    for param in params:
        param.data -= lr * param.grad / batchsize

def mse(pred, true):
    # Mean squared error over the batch
    return torch.sum((true - pred) ** 2) / len(pred)

# Training
model1 = MyNet1()  # hand-written feedforward network
lr = 0.05  # learning rate
batchsize = 128
epochs = 40  # number of epochs
train_all_loss1 = []  # training loss per epoch (sum of the batch losses)
test_all_loss1 = []  # test loss per epoch (sum of the batch losses)
begintime1 = time.time()
for epoch in range(epochs):
    train_l = 0
    for data, labels in traindataloader1:
        pred = model1.forward(data)
        train_each_loss = mse(pred.view(-1, 1), labels.view(-1, 1))  # loss of this batch
        train_each_loss.backward()  # backpropagation
        mySGD(model1.params, lr, batchsize)  # mini-batch SGD update
        train_l += train_each_loss.item()
        # Reset the gradients
        for param in model1.params:
            param.grad.data.zero_()
    train_all_loss1.append(train_l)  # record this epoch's training loss
    with torch.no_grad():
        test_loss = 0
        for data, labels in testdataloader1:  # evaluate on the test set
            pred = model1.forward(data)
            test_each_loss = mse(pred.view(-1, 1), labels.view(-1, 1))
            test_loss += test_each_loss.item()
        test_all_loss1.append(test_loss)
    if epoch == 0 or (epoch + 1) % 4 == 0:
        print('epoch: %d | train loss:%.5f | test loss:%.5f' % (epoch + 1, train_all_loss1[-1], test_all_loss1[-1]))
endtime1 = time.time()
print("Manual feedforward network, regression: %d epochs, total time: %.3fs" % (epochs, endtime1 - begintime1))

epoch: 1 | train loss:0.90351 | test loss:0.89862

epoch: 4 | train loss:0.88280 | test loss:0.88232

epoch: 8 | train loss:0.86953 | test loss:0.86965

epoch: 12 | train loss:0.86391 | test loss:0.86379

epoch: 16 | train loss:0.85774 | test loss:0.85766

epoch: 20 | train loss:0.85422 | test loss:0.85323

epoch: 24 | train loss:0.84861 | test loss:0.84816

epoch: 28 | train loss:0.84434 | test loss:0.84480

epoch: 32 | train loss:0.84073 | test loss:0.84144

epoch: 36 | train loss:0.83734 | test loss:0.83681

epoch: 40 | train loss:0.83258 | test loss:0.83313

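Manual feedforward network, regression: 40 epochs, total time: 6.407s

Note: in the run logged above, the evaluation loop iterated over traindataloader1 instead of testdataloader1. The "test loss" column was therefore measured on the training set, which is why it is nearly identical to the training loss.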
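The mse function already divides by the batch length, and mySGD then divides the gradient by batchsize a second time. Every update is therefore 1/128 of a standard SGD step on the mean loss. With lr = 0.05, the effective learning rate is about 0.0004, which may explain why the loss falls only from about 0.90 to 0.83 in 40 epochs. The same pattern appears in section 1.3, where cross_entropy also averages over the batch. The toy example below is not the network above. It is a hypothetical one-parameter model fitted to four made-up points, and it applies the mySGD update rule with and without the extra division.

```python
# Hypothetical toy problem: fit y = w * x to four points whose true slope is 2
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mean_mse_grad(w):
    """d/dw of mean((w*x - y)^2), i.e. the gradient of a batch-averaged loss."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def my_sgd_step(w, grad, lr, batchsize):
    """Same rule as mySGD above: param -= lr * grad / batchsize."""
    return w - lr * grad / batchsize

def fit(batchsize, lr=0.05, steps=20):
    w = 0.0
    for _ in range(steps):
        w = my_sgd_step(w, mean_mse_grad(w), lr, batchsize)
    return w

w_standard = fit(batchsize=1)   # standard SGD on a mean loss
w_divided = fit(batchsize=128)  # extra division by the batch size, as in mySGD
print(f"standard SGD: w = {w_standard:.4f}, extra division by 128: w = {w_divided:.4f}")
```

After the same 20 steps, the standard update has essentially reached the true slope, while the doubly averaged update has moved only a small fraction of the way.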

1.2 Manual feedforward network: binary classification

# Define a hand-written feedforward neural network
class MyNet2():
    def __init__(self):
        # Numbers of input, hidden, and output units
        num_inputs, num_hiddens, num_outputs = 200, 256, 1
        w_1 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_inputs)), dtype=torch.float32,
                           requires_grad=True)
        b_1 = torch.zeros(num_hiddens, dtype=torch.float32, requires_grad=True)
        w_2 = torch.tensor(np.random.normal(0, 0.01, (num_outputs, num_hiddens)), dtype=torch.float32,
                           requires_grad=True)
        b_2 = torch.zeros(num_outputs, dtype=torch.float32, requires_grad=True)
        self.params = [w_1, b_1, w_2, b_2]
        # Model structure
        self.input_layer = lambda x: x.view(x.shape[0], -1)
        self.hidden_layer = lambda x: self.my_relu(torch.matmul(x, w_1.t()) + b_1)  # ReLU is applied here
        self.output_layer = lambda x: torch.matmul(x, w_2.t()) + b_2
        self.fn_logistic = self.logistic

    def my_relu(self, x):
        return torch.max(input=x, other=torch.tensor(0.0))

    def logistic(self, x):  # logistic (sigmoid) function
        x = 1.0 / (1.0 + torch.exp(-x))
        return x

    # Forward pass
    def forward(self, x):
        x = self.input_layer(x)
        x = self.hidden_layer(x)  # ReLU is already applied inside hidden_layer
        x = self.fn_logistic(self.output_layer(x))  # probability of class 1
        return x

def mySGD(params, lr):
    # Plain SGD step; binary_cross_entropy already averages over the batch
    for param in params:
        param.data -= lr * param.grad

# Training
model2 = MyNet2()
lr = 0.01  # learning rate
epochs = 40  # number of epochs
train_all_loss2 = []  # training loss per epoch
test_all_loss2 = []  # test loss per epoch
train_Acc12, test_Acc12 = [], []  # training and test accuracy per epoch
begintime2 = time.time()
for epoch in range(epochs):
    train_l, train_epoch_count = 0, 0
    for data, labels in traindataloader2:
        pred = model2.forward(data)
        train_each_loss = binary_cross_entropy(pred.view(-1), labels.view(-1))  # loss of this batch
        train_l += train_each_loss.item()
        train_each_loss.backward()  # backpropagation
        mySGD(model2.params, lr)  # SGD update
        # Reset the gradients
        for param in model2.params:
            param.grad.data.zero_()
        # Predict class 1 when the probability exceeds 0.5, then count correct predictions
        train_epoch_count += ((pred.view(-1) > 0.5).float() == labels).sum().item()
    train_Acc12.append(train_epoch_count / len(traindataset2))
    train_all_loss2.append(train_l)  # record this epoch's training loss
    with torch.no_grad():
        test_l, test_epoch_count = 0, 0
        for data, labels in testdataloader2:
            pred = model2.forward(data)
            test_each_loss = binary_cross_entropy(pred.view(-1), labels.view(-1))
            test_l += test_each_loss.item()
            test_epoch_count += ((pred.view(-1) > 0.5).float() == labels.view(-1)).sum().item()
        test_Acc12.append(test_epoch_count / len(testdataset2))
        test_all_loss2.append(test_l)
    if epoch == 0 or (epoch + 1) % 4 == 0:
        print('epoch: %d | train loss:%.5f | test loss:%.5f | train acc:%.5f | test acc:%.5f' % (epoch + 1, train_all_loss2[-1], test_all_loss2[-1], train_Acc12[-1], test_Acc12[-1]))
endtime2 = time.time()
print("Manual feedforward network, binary classification: %d epochs, total time: %.3fs" % (epochs, endtime2 - begintime2))

epoch: 1 | train loss:74.47765 | test loss:30.79666 | train acc:0.75679 | test acc:0.87617

epoch: 4 | train loss:36.82598 | test loss:13.83257 | train acc:0.92143 | test acc:0.91883

epoch: 8 | train loss:21.74566 | test loss:9.70713 | train acc:0.92743 | test acc:0.91833

epoch: 12 | train loss:19.73010 | test loss:9.35242 | train acc:0.93014 | test acc:0.91900

epoch: 16 | train loss:18.79038 | test loss:9.32585 | train acc:0.93264 | test acc:0.91883

epoch: 20 | train loss:18.04211 | test loss:9.37476 | train acc:0.93586 | test acc:0.91817

epoch: 24 | train loss:17.39948 | test loss:9.40694 | train acc:0.93886 | test acc:0.91750

epoch: 28 | train loss:16.80431 | test loss:9.45879 | train acc:0.94221 | test acc:0.91667

epoch: 32 | train loss:16.15831 | test loss:9.49294 | train acc:0.94557 | test acc:0.91700

epoch: 36 | train loss:15.56557 | test loss:9.53963 | train acc:0.94829 | test acc:0.91683

epoch: 40 | train loss:14.91204 | test loss:9.57870 | train acc:0.95136 | test acc:0.91683

Manual feedforward network, binary classification: 40 epochs, total time: 10.233s
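In the log above, test accuracy levels off at about 0.917 to 0.919 from epoch 4 onwards. Over the same period, training accuracy keeps rising to 0.951, and the test loss starts to increase after epoch 16.

The classes are two Gaussians with means ±0.2 in all 200 dimensions, standard deviation 2, and equal sizes. For this setup the Bayes-optimal classifier thresholds the sum of the features at zero. Its accuracy is Φ(0.2·√200 / 2) = Φ(√2) ≈ 0.921, where Φ is the standard normal CDF. The test accuracy is therefore already close to the best achievable, and the further gains on the training set most likely reflect overfitting. The short standard-library check below computes this bound, assuming the dataset parameters given in the dataset definition.

```python
import math

def normal_cdf(z):
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Taken from the binary dataset definition: means +0.2 / -0.2 in each of
# 200 dimensions, isotropic standard deviation 2, equal class sizes
mean, std, dim = 0.2, 2.0, 200

# Distance from each class mean to the boundary along the unit vector (1, ..., 1) / sqrt(dim)
half_gap = mean * math.sqrt(dim)
bayes_acc = normal_cdf(half_gap / std)
print(f"Bayes-optimal accuracy ~ {bayes_acc:.4f}")
```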

1.3 Manual feedforward network: multi-class classification

# Define a hand-written feedforward neural network
class MyNet3():
    def __init__(self):
        # Numbers of input, hidden, and output units
        num_inputs, num_hiddens, num_outputs = 28 * 28, 256, 10  # 10-class problem
        w_1 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_inputs)), dtype=torch.float32,
                           requires_grad=True)
        b_1 = torch.zeros(num_hiddens, dtype=torch.float32, requires_grad=True)
        w_2 = torch.tensor(np.random.normal(0, 0.01, (num_outputs, num_hiddens)), dtype=torch.float32,
                           requires_grad=True)
        b_2 = torch.zeros(num_outputs, dtype=torch.float32, requires_grad=True)
        self.params = [w_1, b_1, w_2, b_2]
        # Model structure
        self.input_layer = lambda x: x.view(x.shape[0], -1)  # flatten 1x28x28 images into 784-dimensional vectors
        self.hidden_layer = lambda x: self.my_relu(torch.matmul(x, w_1.t()) + b_1)
        self.output_layer = lambda x: torch.matmul(x, w_2.t()) + b_2

    def my_relu(self, x):
        return torch.max(input=x, other=torch.tensor(0.0))

    # Forward pass
    def forward(self, x):
        x = self.input_layer(x)
        x = self.hidden_layer(x)
        x = self.output_layer(x)  # raw logits; cross_entropy applies log-softmax internally
        return x

def mySGD(params, lr, batchsize):
    # As in 1.1: cross_entropy already averages over the batch, so this extra
    # division makes the effective learning rate lr / batchsize
    for param in params:
        param.data -= lr * param.grad / batchsize

# Training
model3 = MyNet3()  # hand-written feedforward network
criterion = cross_entropy  # loss function
lr = 0.15  # learning rate
epochs = 40  # number of epochs
train_all_loss3 = []  # training loss per epoch
test_all_loss3 = []  # test loss per epoch
train_ACC13, test_ACC13 = [], []  # training and test accuracy per epoch
begintime3 = time.time()
for epoch in range(epochs):
    train_l, train_acc_num = 0, 0
    for data, labels in traindataloader3:
        pred = model3.forward(data)
        train_each_loss = criterion(pred, labels)  # loss of this batch
        train_l += train_each_loss.item()
        train_each_loss.backward()  # backpropagation
        mySGD(model3.params, lr, 128)  # mini-batch SGD update (128 = batch_size)
        train_acc_num += (pred.argmax(dim=1) == labels).sum().item()  # number of correct predictions
        # Reset the gradients
        for param in model3.params:
            param.grad.data.zero_()
    train_all_loss3.append(train_l)  # record this epoch's training loss
    train_ACC13.append(train_acc_num / len(traindataset3))  # record this epoch's training accuracy
    with torch.no_grad():
        test_l, test_acc_num = 0, 0
        for data, labels in testdataloader3:
            pred = model3.forward(data)
            test_each_loss = criterion(pred, labels)
            test_l += test_each_loss.item()
            test_acc_num += (pred.argmax(dim=1) == labels).sum().item()
        test_all_loss3.append(test_l)
        test_ACC13.append(test_acc_num / len(testdataset3))  # record this epoch's test accuracy
    if epoch == 0 or (epoch + 1) % 4 == 0:
        print('epoch: %d | train loss:%.5f | test loss:%.5f | train acc: %.2f | test acc: %.2f'
              % (epoch + 1, train_l, test_l, train_ACC13[-1], test_ACC13[-1]))
endtime3 = time.time()
print("Manual feedforward network, multi-class classification: %d epochs, total time: %.3fs" % (epochs, endtime3 - begintime3))

epoch: 1 | train loss:1069.12612 | test loss:178.37273 | train acc: 0.34 | test acc: 0.44

epoch: 4 | train loss:924.58558 | test loss:148.63074 | train acc: 0.54 | test acc: 0.53

epoch: 8 | train loss:632.67137 | test loss:103.01818 | train acc: 0.61 | test acc: 0.62

epoch: 12 | train loss:494.95427 | test loss:82.15779 | train acc: 0.65 | test acc: 0.64

epoch: 16 | train loss:426.93544 | test loss:71.76805 | train acc: 0.67 | test acc: 0.66

epoch: 20 | train loss:389.04691 | test loss:65.90453 | train acc: 0.69 | test acc: 0.68

epoch: 24 | train loss:364.42420 | test loss:62.03389 | train acc: 0.71 | test acc: 0.70

epoch: 28 | train loss:346.08173 | test loss:59.08922 | train acc: 0.73 | test acc: 0.72

epoch: 32 | train loss:330.94492 | test loss:56.66035 | train acc: 0.75 | test acc: 0.74

epoch: 36 | train loss:318.06814 | test loss:54.59195 | train acc: 0.76 | test acc: 0.75

epoch: 40 | train loss:306.78502 | test loss:52.74187 | train acc: 0.77 | test acc: 0.77

Manual feedforward network, multi-class classification: 40 epochs, total time: 147.901s
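MyNet3.forward returns raw scores (logits) rather than probabilities, and that is what torch.nn.functional.cross_entropy expects. The function applies log-softmax internally and then takes the mean negative log-likelihood of the target class over the batch. Below is a standard-library sketch of that computation. It subtracts the largest logit before exponentiating, the usual trick for avoiding overflow. It illustrates the formula and is not PyTorch's implementation.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax: shift by the max before exponentiating."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def cross_entropy(batch_logits, targets):
    """Mean negative log-likelihood of the target classes over a batch."""
    losses = [-log_softmax(logits)[t] for logits, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

# Equal logits over 10 classes give a loss of log(10)
print(cross_entropy([[0.0] * 10], [3]))
# Very large logits do not overflow thanks to the max shift; the result is log(1 + e)
print(cross_entropy([[1000.0, 999.0]], [1]))
```

The first value is the loss an untrained 10-class model with near-equal logits starts from, about 2.30 per batch.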

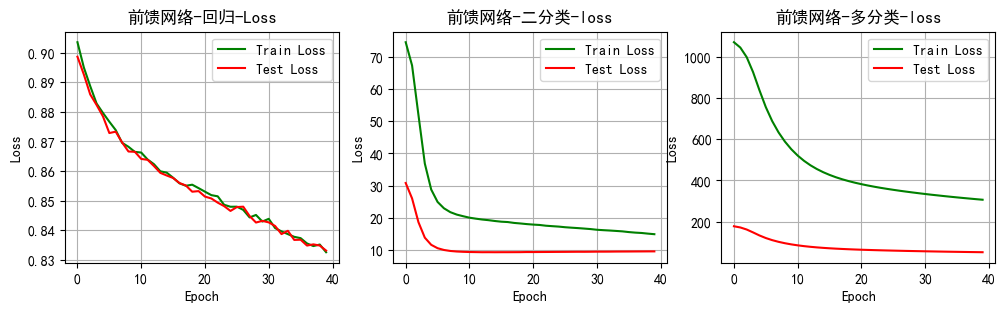

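1.4 Analysis of results

plt.figure(figsize=(12, 3))
# Figure-level title. A plain plt.title('Loss') at this point creates a full-figure axes that
# plt.subplot(131) then removes, which is what triggers the MatplotlibDeprecationWarning shown below
plt.suptitle('Loss')
plt.subplot(131)
picture('FFN - regression - Loss', train_all_loss1, test_all_loss1)
plt.subplot(132)
picture('FFN - binary classification - Loss', train_all_loss2, test_all_loss2)
plt.subplot(133)
picture('FFN - multi-class - Loss', train_all_loss3, test_all_loss3)
plt.show()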

C:\Users\20919\AppData\Local\Temp\ipykernel_9328\3819980460.py:3: MatplotlibDeprecationWarning: Auto-removal of overlapping axes is deprecated since 3.6 and will be removed two minor releases later; explicitly call ax.remove() as needed.

plt.subplot(131)

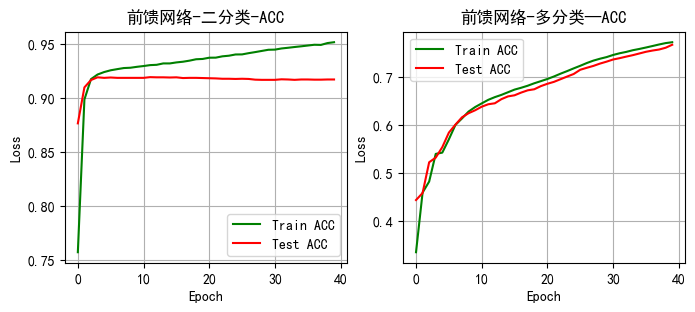

plt.figure(figsize=(8, 3))
plt.subplot(121)
picture('FFN - binary classification - ACC', train_Acc12, test_Acc12, type='ACC')
plt.subplot(122)
picture('FFN - multi-class - ACC', train_ACC13, test_ACC13, type='ACC')
plt.show()
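The loss curves plotted above sum the mean loss of every mini-batch in an epoch, so they scale with the number of batches. With batch_size = 128, the training loaders yield several times more batches than the test loaders. That makes the test curves sit far lower even when the per-sample losses are similar.

For example, dividing the epoch-40 values from section 1.3 by their batch counts gives 306.785 / 469 ≈ 0.654 for training and 52.742 / 79 ≈ 0.668 for test. The helpers below are a sketch with hypothetical names. They count the batches per loader and convert per-batch means into a per-sample average weighted by batch size.

```python
import math

def batches_per_epoch(num_samples, batch_size=128):
    """A DataLoader with drop_last=False yields ceil(N / batch_size) batches."""
    return math.ceil(num_samples / batch_size)

def epoch_mean_loss(batch_losses, batch_sizes):
    """Per-sample mean loss from per-batch mean losses, weighted by batch size."""
    total = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)

# (training, test) sizes taken from the dataset summary printed earlier
splits = {"regression": (7000, 3000), "binary": (14000, 6000), "multi-class": (60000, 10000)}
counts = {name: (batches_per_epoch(tr), batches_per_epoch(te)) for name, (tr, te) in splits.items()}
for name, (n_train_batches, n_test_batches) in counts.items():
    print(f"{name}: {n_train_batches} training batches, {n_test_batches} test batches")
```

One way to apply this in the training loops is to accumulate `train_each_loss.item() * len(labels)` and divide by the dataset length at the end of each epoch. The test loop can be handled the same way, so both curves are on the same per-sample scale.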

2 Implementing feedforward neural networks with torch.nn for the regression, binary classification, and multi-class classification tasks

2.1 torch.nn feedforward network: regression

from torch.optim import SGD
from torch.nn import MSELoss

# Feedforward network for the regression task, built with torch.nn
class MyNet21(nn.Module):
    def __init__(self):
        super(MyNet21, self).__init__()
        # Numbers of input, hidden, and output units
        num_inputs, num_hiddens, num_outputs = 500, 256, 1
        # Model structure
        self.input_layer = nn.Flatten()
        self.hidden_layer = nn.Linear(num_inputs, num_hiddens)
        self.output_layer = nn.Linear(num_hiddens, num_outputs)
        self.relu = nn.ReLU()

    # Forward pass
    def forward(self, x):
        x = self.input_layer(x)
        x = self.relu(self.hidden_layer(x))
        x = self.output_layer(x)
        return x

# Training
model21 = MyNet21()  # feedforward network
model21 = model21.to(device)
print(model21)
criterion = MSELoss()  # loss function
criterion = criterion.to(device)
optimizer = SGD(model21.parameters(), lr=0.1)  # optimizer
epochs = 40  # number of epochs
train_all_loss21 = []  # training loss per epoch
test_all_loss21 = []  # test loss per epoch
begintime21 = time.time()
for epoch in range(epochs):
    train_l = 0
    for data, labels in traindataloader1:
        data, labels = data.to(device), labels.to(device)
        pred = model21(data)
        train_each_loss = criterion(pred.view(-1, 1), labels.view(-1, 1))  # loss of this batch
        optimizer.zero_grad()  # reset the gradients
        train_each_loss.backward()  # backpropagation
        optimizer.step()  # parameter update
        train_l += train_each_loss.item()
    train_all_loss21.append(train_l)  # record this epoch's training loss
    with torch.no_grad():
        test_loss = 0
        for data, labels in testdataloader1:
            data, labels = data.to(device), labels.to(device)
            pred = model21(data)
            test_each_loss = criterion(pred, labels)
            test_loss += test_each_loss.item()
        test_all_loss21.append(test_loss)
    if epoch == 0 or (epoch + 1) % 10 == 0:
        print('epoch: %d | train loss:%.5f | test loss:%.5f' % (epoch + 1, train_all_loss21[-1], test_all_loss21[-1]))
endtime21 = time.time()
print("torch.nn feedforward network, regression: %d epochs, total time: %.3fs" % (epochs, endtime21 - begintime21))

MyNet21(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layer): Linear(in_features=500, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=1, bias=True)

(relu): ReLU()

)

epoch: 1 | train loss:161.37046 | test loss:0.49419

epoch: 10 | train loss:0.22988 | test loss:0.16238

epoch: 20 | train loss:0.14379 | test loss:0.13796

epoch: 30 | train loss:0.09954 | test loss:0.13368

epoch: 40 | train loss:0.07101 | test loss:0.13177

torch.nn feedforward network, regression: 40 epochs, total time: 5.138s

2.2 torch.nn feedforward network: binary classification

# Feedforward network for the binary classification task, built with torch.nn
import time
from torch.optim import SGD
from torch.nn.functional import binary_cross_entropy

class MyNet22(nn.Module):
    def __init__(self):
        super(MyNet22, self).__init__()
        # Numbers of input, hidden, and output units
        num_inputs, num_hiddens, num_outputs = 200, 256, 1
        # Model structure
        self.input_layer = nn.Flatten()
        self.hidden_layer = nn.Linear(num_inputs, num_hiddens)
        self.output_layer = nn.Linear(num_hiddens, num_outputs)
        self.relu = nn.ReLU()

    def logistic(self, x):  # logistic (sigmoid) function, equivalent to torch.sigmoid
        x = 1.0 / (1.0 + torch.exp(-x))
        return x

    # Forward pass
    def forward(self, x):
        x = self.input_layer(x)
        x = self.relu(self.hidden_layer(x))
        x = self.logistic(self.output_layer(x))  # probability of class 1
        return x

# Training
model22 = MyNet22()  # feedforward network with a logistic output
model22 = model22.to(device)
print(model22)
optimizer = SGD(model22.parameters(), lr=0.001)  # optimizer
epochs = 40  # number of epochs
train_all_loss22 = []  # training loss per epoch
test_all_loss22 = []  # test loss per epoch
train_ACC22, test_ACC22 = [], []
begintime22 = time.time()
for epoch in range(epochs):
    train_l, train_epoch_count, test_epoch_count = 0, 0, 0  # epoch training loss, correct training predictions, correct test predictions
    for data, labels in traindataloader2:
        data, labels = data.to(device), labels.to(device)
        pred = model22(data)
        train_each_loss = binary_cross_entropy(pred.view(-1), labels.view(-1))  # loss of this batch
        optimizer.zero_grad()  # reset the gradients
        train_each_loss.backward()  # backpropagation
        optimizer.step()  # parameter update
        train_l += train_each_loss.item()
        # Predict label 1 when the probability exceeds 0.5, otherwise 0
        each_count = ((pred.view(-1) > 0.5).float() == labels).sum().item()  # correct predictions in this batch
        train_epoch_count += each_count  # correct predictions in this epoch
    train_ACC22.append(train_epoch_count / len(traindataset2))
    train_all_loss22.append(train_l)  # record this epoch's training loss
    with torch.no_grad():
        test_loss = 0
        for data, labels in testdataloader2:
            data, labels = data.to(device), labels.to(device)
            pred = model22(data)
            test_each_loss = binary_cross_entropy(pred.view(-1), labels)
            test_loss += test_each_loss.item()
            each_count = ((pred.view(-1) > 0.5).float() == labels.view(-1)).sum().item()
            test_epoch_count += each_count
        test_all_loss22.append(test_loss)
        test_ACC22.append(test_epoch_count / len(testdataset2))
    if epoch == 0 or (epoch + 1) % 4 == 0:
        print('epoch: %d | train loss:%.5f test loss:%.5f | train acc:%.5f | test acc:%.5f' % (epoch + 1, train_all_loss22[-1],
              test_all_loss22[-1], train_ACC22[-1], test_ACC22[-1]))
endtime22 = time.time()
print("torch.nn feedforward network, binary classification: %d epochs, total time: %.3fs" % (epochs, endtime22 - begintime22))

MyNet22(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layer): Linear(in_features=200, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=1, bias=True)

(relu): ReLU()

)

epoch: 1 | train loss:78.15685 test loss:32.32316 | train acc:0.51057 | test acc:0.54433

epoch: 4 | train loss:66.09958 test loss:27.78668 | train acc:0.72586 | test acc:0.74033

epoch: 8 | train loss:55.14796 test loss:23.36940 | train acc:0.83400 | test acc:0.83217

epoch: 12 | train loss:46.88042 test loss:20.06482 | train acc:0.87007 | test acc:0.86933

epoch: 16 | train loss:40.74989 test loss:17.58444 | train acc:0.88650 | test acc:0.88467

epoch: 20 | train loss:36.21955 test loss:15.75817 | train acc:0.89471 | test acc:0.89200

epoch: 24 | train loss:32.89339 test loss:14.41664 | train acc:0.90129 | test acc:0.89933

epoch: 28 | train loss:30.42755 test loss:13.42485 | train acc:0.90643 | test acc:0.90250

epoch: 32 | train loss:28.52416 test loss:12.66894 | train acc:0.91050 | test acc:0.90417

epoch: 36 | train loss:27.04887 test loss:12.09332 | train acc:0.91364 | test acc:0.90617

epoch: 40 | train loss:25.96526 test loss:11.65501 | train acc:0.91529 | test acc:0.90783

torch.nn feedforward network, binary classification: 40 epochs, total time: 9.211s
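MyNet22, like MyNet2, applies the logistic function itself and then calls binary_cross_entropy on the resulting probability. When a logit is large, the probability rounds to exactly 0 or 1 in floating point and the logarithm becomes undefined. PyTorch's BCELoss documentation states that it clamps its log outputs to be at least -100 for this reason.

torch.nn.functional.binary_cross_entropy_with_logits (or nn.BCEWithLogitsLoss) avoids the problem by taking the raw logit and using a numerically stable formula. The standard-library sketch below compares a naive version with the stable identity max(z, 0) - z·y + log(1 + e^(-|z|)). It illustrates the math and is not PyTorch's code.

```python
import math

def naive_bce(z, y):
    """Logistic followed by BCE, mirroring logistic() + binary_cross_entropy."""
    p = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def bce_with_logits(z, y):
    """Stable BCE on a raw logit z: max(z, 0) - z*y + log(1 + exp(-|z|))."""
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))

# Both formulas agree for moderate logits
for z in (-3.0, 0.0, 2.5):
    for y in (0.0, 1.0):
        assert abs(naive_bce(z, y) - bce_with_logits(z, y)) < 1e-9

# For a large logit the probability rounds to 1.0, so log(1 - p) is undefined
try:
    naive_bce(40.0, 0.0)
except ValueError:
    print("naive BCE failed: log(0) is undefined")
print(bce_with_logits(40.0, 0.0))  # finite, about 40.0
```

With this approach, the network would return `self.output_layer(x)` without the logistic, and the logistic would be applied only when converting logits into predicted labels.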

2.3 torch.nn feedforward network: multi-class classification

# Feedforward network for the multi-class task, built with torch.nn
from collections import OrderedDict
from torch.nn import CrossEntropyLoss
from torch.optim import SGD

class MyNet23(nn.Module):
    """
    Parameters:
        num_hiddenlayer: number of hidden layers
        num_inputs: number of input features (28*28 for FashionMNIST)
        num_hiddens: list with the number of units in each hidden layer
        num_outs: number of output units (classes)
        act: activation function, one of 'relu', 'sigmoid', 'tanh', 'elu'
    """
    def __init__(self, num_hiddenlayer=1, num_inputs=28*28, num_hiddens=[256], num_outs=10, act='relu'):
        super(MyNet23, self).__init__()
        # Numbers of input, hidden, and output units
        self.num_inputs, self.num_hiddens, self.num_outputs = num_inputs, num_hiddens, num_outs  # 10-class problem
        # Model structure
        self.input_layer = nn.Flatten()
        if num_hiddenlayer == 1:  # a single hidden layer
            self.hidden_layers = nn.Linear(self.num_inputs, self.num_hiddens[-1])
        else:  # several hidden layers
            # Note: no activation is inserted between these Linear layers; self.act is applied
            # only after the last one in forward(), so the whole stack remains an affine map
            self.hidden_layers = nn.Sequential()
            self.hidden_layers.add_module("hidden_layer1", nn.Linear(self.num_inputs, self.num_hiddens[0]))
            for i in range(0, num_hiddenlayer - 1):
                name = 'hidden_layer' + str(i + 2)
                self.hidden_layers.add_module(name, nn.Linear(self.num_hiddens[i], self.num_hiddens[i + 1]))
        self.output_layer = nn.Linear(self.num_hiddens[-1], self.num_outputs)
        # Select the activation function
        if act == 'relu':
            self.act = nn.ReLU()
        elif act == 'sigmoid':
            self.act = nn.Sigmoid()
        elif act == 'tanh':
            self.act = nn.Tanh()
        elif act == 'elu':
            self.act = nn.ELU()
        else:
            raise ValueError(f'Unsupported activation function: {act}')
        print(f'Activation function used in this run: {act}')

    def logistic(self, x):  # logistic function (not used in forward)
        x = 1.0 / (1.0 + torch.exp(-x))
        return x

    # Forward pass
    def forward(self, x):
        x = self.input_layer(x)
        x = self.act(self.hidden_layers(x))
        x = self.output_layer(x)  # raw logits for CrossEntropyLoss
        return x

# Training
# Default arguments: num_hiddenlayer=1, num_inputs=28*28, num_hiddens=[256], num_outs=10, act='relu'
model23 = MyNet23()
model23 = model23.to(device)

# Wrap training and evaluation in a function so that experiments 3 and 4 can reuse it
def train_and_test(model=model23):
    MyModel = model
    print(MyModel)
    optimizer = SGD(MyModel.parameters(), lr=0.01)  # optimizer
    epochs = 40  # number of epochs
    criterion = CrossEntropyLoss()  # loss function
    train_all_loss23 = []  # training loss per epoch
    test_all_loss23 = []  # test loss per epoch
    train_ACC23, test_ACC23 = [], []
    begintime23 = time.time()
    for epoch in range(epochs):
        train_l, train_epoch_count, test_epoch_count = 0, 0, 0
        for data, labels in traindataloader3:
            data, labels = data.to(device), labels.to(device)
            pred = MyModel(data)
            train_each_loss = criterion(pred, labels.view(-1))  # loss of this batch
            optimizer.zero_grad()  # reset the gradients
            train_each_loss.backward()  # backpropagation
            optimizer.step()  # parameter update
            train_l += train_each_loss.item()
            train_epoch_count += (pred.argmax(dim=1) == labels).sum().item()
        train_ACC23.append(train_epoch_count / len(traindataset3))
        train_all_loss23.append(train_l)  # record this epoch's training loss
        with torch.no_grad():
            test_loss, test_epoch_count = 0, 0
            for data, labels in testdataloader3:
                data, labels = data.to(device), labels.to(device)
                pred = MyModel(data)
                test_each_loss = criterion(pred, labels)
                test_loss += test_each_loss.item()
                test_epoch_count += (pred.argmax(dim=1) == labels).sum().item()
            test_all_loss23.append(test_loss)
            test_ACC23.append(test_epoch_count / len(testdataset3))
        if epoch == 0 or (epoch + 1) % 4 == 0:
            print('epoch: %d | train loss:%.5f | test loss:%.5f | train acc:%5f test acc:%.5f:' % (epoch + 1, train_all_loss23[-1], test_all_loss23[-1],
                  train_ACC23[-1], test_ACC23[-1]))
    endtime23 = time.time()
    print("torch.nn feedforward network, multi-class classification: %d epochs, total time: %.3fs" % (epochs, endtime23 - begintime23))
    # Return the training and test losses and accuracies
    return train_all_loss23, test_all_loss23, train_ACC23, test_ACC23

train_all_loss23, test_all_loss23, train_ACC23, test_ACC23 = train_and_test(model=model23)

Activation function used in this run: relu

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Linear(in_features=784, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=10, bias=True)

(act): ReLU()

)

epoch: 1 | train loss:666.05922 | test loss:74.66159 | train acc:0.610050 test acc:0.67320:

epoch: 4 | train loss:294.27026 | test loss:48.96038 | train acc:0.789233 test acc:0.78620:

epoch: 8 | train loss:241.19507 | test loss:42.01461 | train acc:0.825667 test acc:0.81550:

epoch: 12 | train loss:221.16666 | test loss:39.38746 | train acc:0.837933 test acc:0.82430:

epoch: 16 | train loss:209.92536 | test loss:37.60138 | train acc:0.845417 test acc:0.83460:

epoch: 20 | train loss:201.97468 | test loss:36.77319 | train acc:0.851333 test acc:0.83660:

epoch: 24 | train loss:195.75960 | test loss:35.54864 | train acc:0.855767 test acc:0.84040:

epoch: 28 | train loss:190.39485 | test loss:34.62530 | train acc:0.859800 test acc:0.84480:

epoch: 32 | train loss:185.73421 | test loss:34.05905 | train acc:0.862967 test acc:0.84770:

epoch: 36 | train loss:181.50727 | test loss:33.46693 | train acc:0.865850 test acc:0.85030:

epoch: 40 | train loss:177.61171 | test loss:32.92881 | train acc:0.869550 test acc:0.85320:

torch.nn feedforward network, multi-class classification: 40 epochs, total time: 172.190s
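When num_hiddenlayer > 1, MyNet23 stacks its Linear layers inside nn.Sequential without any activation between them. self.act is applied only once, after the last hidden layer. A composition of affine maps is itself affine, since W2(W1·x + b1) + b2 = (W2·W1)·x + (W2·b1 + b2). Such a stack can therefore represent no more functions than a single Linear layer with the same input and output sizes, although its training dynamics differ.

The plain-Python check below verifies the identity numerically with two hypothetical 2×2 layers. If a genuinely deeper network is intended, one possible change is to add an activation module after each Linear layer inside the loop that builds hidden_layers. As written, the code runs the multi-layer configurations of section 4 without one.

```python
def linear(w, b, x):
    """Affine layer y = W x + b, with W as nested lists and x, b as flat lists."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(w, b)]

def matmul(a, b):
    """Matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# Two hypothetical layers with no activation between them
w1, b1 = [[1.0, 2.0], [3.0, -1.0]], [0.5, -0.5]
w2, b2 = [[2.0, 0.0], [1.0, 1.0]], [1.0, 0.0]

# Equivalent single layer: W = W2 W1, b = W2 b1 + b2
w_single = matmul(w2, w1)
b_single = linear(w2, b2, b1)

x = [0.3, -1.2]
stacked = linear(w2, b2, linear(w1, b1, x))
single = linear(w_single, b_single, x)
print(stacked, single)  # the two outputs agree up to floating-point rounding
```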

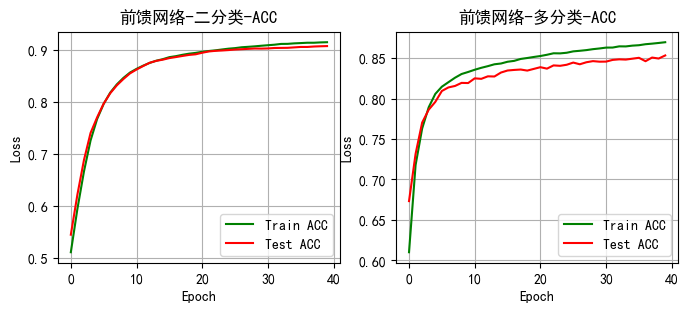

2.4 Analysis of results

plt.figure(figsize=(12, 3))
plt.subplot(131)
picture('FFN - regression - Loss', train_all_loss21, test_all_loss21)
plt.subplot(132)
picture('FFN - binary classification - Loss', train_all_loss22, test_all_loss22)
plt.subplot(133)
picture('FFN - multi-class - Loss', train_all_loss23, test_all_loss23)
plt.show()

plt.figure(figsize=(8, 3))
plt.subplot(121)
picture('FFN - binary classification - ACC', train_ACC22, test_ACC22, type='ACC')
plt.subplot(122)
picture('FFN - multi-class - ACC', train_ACC23, test_ACC23, type='ACC')
plt.show()

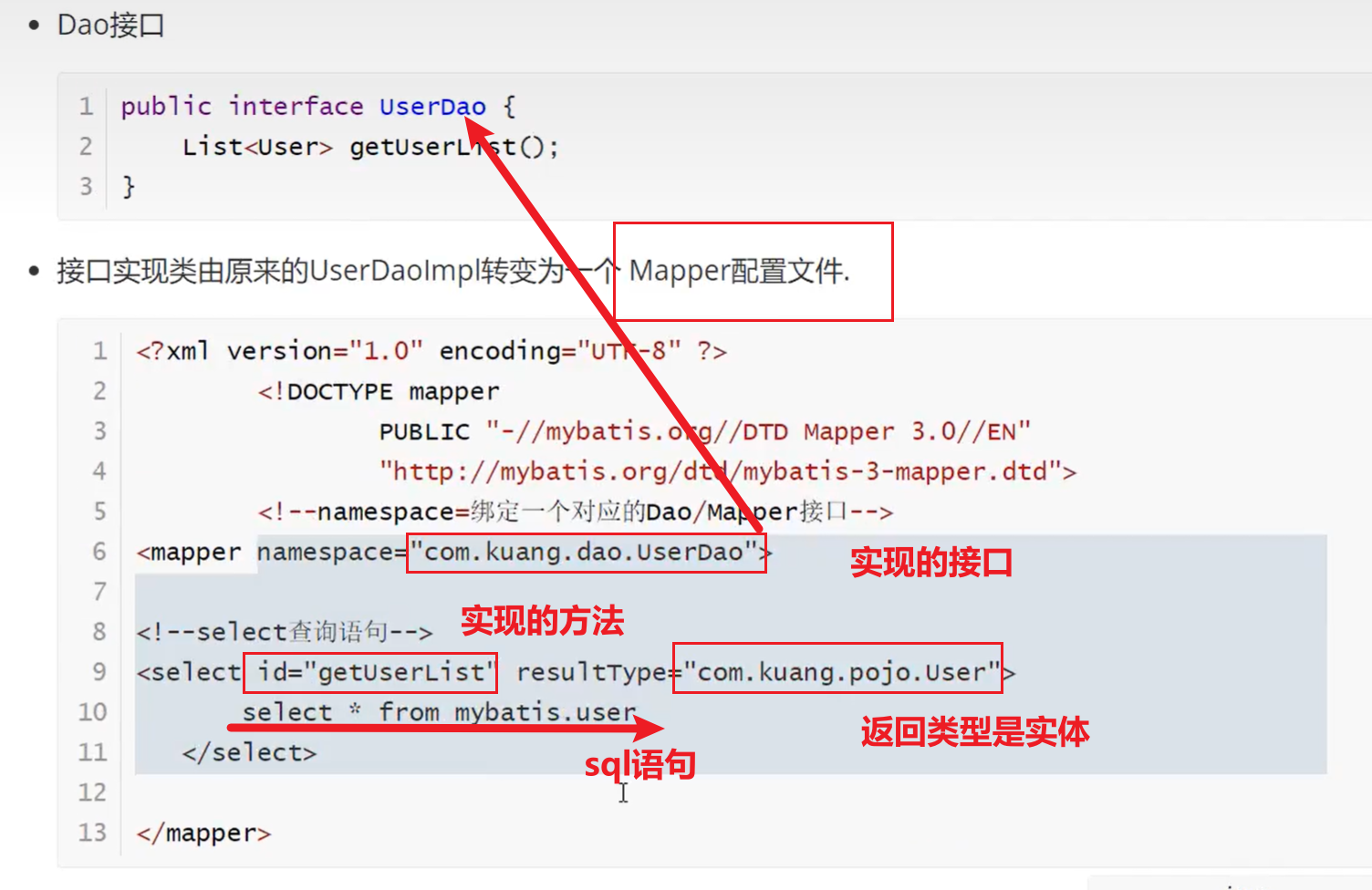

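3 Using at least three different activation functions in the multi-class task

The default network has one hidden layer with [256] units and uses the ReLU activation function.

# Plotting helper for the comparison experiments
def ComPlot(datalist, title='1', ylabel='Loss', flag='act'):
    # flag='act' labels the curves by activation function (section 3);
    # any other value labels them by hidden-layer sizes (section 4)
    plt.rcParams["font.sans-serif"] = ["SimHei"]  # font setting (only needed for CJK labels)
    plt.rcParams["axes.unicode_minus"] = False  # render the minus sign correctly with this font
    plt.title(title)
    plt.xlabel('Epoch')
    plt.ylabel(ylabel)
    plt.plot(datalist[0], label='Tanh' if flag == 'act' else '[128]')
    plt.plot(datalist[1], label='Sigmoid' if flag == 'act' else '[512 256]')
    plt.plot(datalist[2], label='ELU' if flag == 'act' else '[512 256 128 64]')
    plt.plot(datalist[3], label='ReLU' if flag == 'act' else '[256]')
    plt.legend()
    plt.grid(True)

3.1 Using the Tanh activation function

# Reuse the multi-class model from experiment 2, with Tanh as the activation function
model31 = MyNet23(1, 28*28, [256], 10, act='tanh')
model31 = model31.to(device)  # train on the GPU if one is available
# Reuse the training function from experiment 2 to avoid duplicated code
train_all_loss31, test_all_loss31, train_ACC31, test_ACC31 = train_and_test(model=model31)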

Activation function used in this run: tanh

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Linear(in_features=784, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=10, bias=True)

(act): Tanh()

)

epoch: 1 | train loss:612.87620 | test loss:72.35193 | train acc:0.630767 test acc:0.68600:

epoch: 4 | train loss:289.93537 | test loss:48.48531 | train acc:0.792533 test acc:0.78730:

epoch: 8 | train loss:238.87227 | test loss:41.62686 | train acc:0.825883 test acc:0.81350:

epoch: 12 | train loss:219.53323 | test loss:38.82388 | train acc:0.837317 test acc:0.82480:

epoch: 16 | train loss:208.42022 | test loss:37.25787 | train acc:0.845517 test acc:0.83080:

epoch: 20 | train loss:200.89565 | test loss:36.22413 | train acc:0.849383 test acc:0.83430:

epoch: 24 | train loss:194.97263 | test loss:35.44775 | train acc:0.853683 test acc:0.83950:

epoch: 28 | train loss:190.27495 | test loss:34.88980 | train acc:0.857500 test acc:0.84030:

epoch: 32 | train loss:186.15559 | test loss:34.11328 | train acc:0.859867 test acc:0.84300:

epoch: 36 | train loss:182.57721 | test loss:33.86363 | train acc:0.862733 test acc:0.84490:

epoch: 40 | train loss:179.35559 | test loss:33.31250 | train acc:0.865117 test acc:0.84610:

torch.nn feedforward network, multi-class classification: 40 epochs, total time: 155.164s

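3.2 Using the Sigmoid activation function

# Reuse the multi-class model from experiment 2, with Sigmoid as the activation function
model32 = MyNet23(1, 28*28, [256], 10, act='sigmoid')
model32 = model32.to(device)  # train on the GPU if one is available
# Reuse the training function from experiment 2 to avoid duplicated code
train_all_loss32, test_all_loss32, train_ACC32, test_ACC32 = train_and_test(model=model32)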

Activation function used in this run: sigmoid

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Linear(in_features=784, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=10, bias=True)

(act): Sigmoid()

)

epoch: 1 | train loss:994.90819 | test loss:150.09833 | train acc:0.461850 test acc:0.51810:

epoch: 4 | train loss:521.03565 | test loss:82.54247 | train acc:0.696717 test acc:0.70170:

epoch: 8 | train loss:370.07181 | test loss:61.52568 | train acc:0.737817 test acc:0.73460:

epoch: 12 | train loss:320.86844 | test loss:54.29318 | train acc:0.758250 test acc:0.75380:

epoch: 16 | train loss:295.04981 | test loss:50.31758 | train acc:0.774917 test acc:0.76810:

epoch: 20 | train loss:277.36302 | test loss:47.61036 | train acc:0.789500 test acc:0.78180:

epoch: 24 | train loss:263.83344 | test loss:45.55588 | train acc:0.801517 test acc:0.79400:

epoch: 28 | train loss:253.27264 | test loss:43.96876 | train acc:0.810067 test acc:0.80040:

epoch: 32 | train loss:244.95116 | test loss:42.68511 | train acc:0.817117 test acc:0.80580:

epoch: 36 | train loss:238.21141 | test loss:41.71643 | train acc:0.822100 test acc:0.81050:

epoch: 40 | train loss:232.57444 | test loss:40.87718 | train acc:0.826650 test acc:0.81470:

torch.nn feedforward network, multi-class classification: 40 epochs, total time: 134.940s

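3.3 Using the ELU activation function

# Reuse the multi-class model from experiment 2, with ELU as the activation function
model33 = MyNet23(1, 28*28, [256], 10, act='elu')
model33 = model33.to(device)  # train on the GPU if one is available
# Reuse the training function from experiment 2 to avoid duplicated code
train_all_loss33, test_all_loss33, train_ACC33, test_ACC33 = train_and_test(model=model33)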

Activation function used in this run: elu

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Linear(in_features=784, out_features=256, bias=True)

(output_layer): Linear(in_features=256, out_features=10, bias=True)

(act): ELU(alpha=1.0)

)

epoch: 1 | train loss:611.57683 | test loss:70.98758 | train acc:0.631517 test acc:0.68780:

epoch: 4 | train loss:286.80579 | test loss:47.96181 | train acc:0.797550 test acc:0.79060:

epoch: 8 | train loss:239.13265 | test loss:41.64160 | train acc:0.827467 test acc:0.81730:

epoch: 12 | train loss:220.97003 | test loss:39.17409 | train acc:0.838800 test acc:0.82480:

epoch: 16 | train loss:211.10187 | test loss:37.71396 | train acc:0.845150 test acc:0.83160:

epoch: 20 | train loss:204.37014 | test loss:36.77280 | train acc:0.849000 test acc:0.83580:

epoch: 24 | train loss:199.58872 | test loss:36.07494 | train acc:0.852783 test acc:0.83860:

epoch: 28 | train loss:195.67441 | test loss:35.67245 | train acc:0.855633 test acc:0.83880:

epoch: 32 | train loss:192.35206 | test loss:35.39273 | train acc:0.857883 test acc:0.84020:

epoch: 36 | train loss:189.34681 | test loss:34.88209 | train acc:0.859850 test acc:0.84100:

epoch: 40 | train loss:186.65965 | test loss:34.41847 | train acc:0.861350 test acc:0.84490:

torch.nn feedforward network (multiclass task), 40 epochs, total time: 180.760s
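ELU (exponential linear unit) is the identity for positive inputs and saturates smoothly toward -α for negative inputs: ELU(x) = x for x > 0 and α(eˣ - 1) otherwise, with α = 1.0 as in the printed `ELU(alpha=1.0)` module. The sketch below evaluates that formula, plus ReLU and Sigmoid and their derivatives, in plain Python so the activations can be compared on the same inputs. It mirrors the textbook definitions rather than calling `torch.nn.ELU`, and the helper names are illustrative only.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative of ELU: 1 for x > 0, alpha * exp(x) otherwise."""
    return 1.0 if x > 0 else alpha * math.exp(x)


def relu(x: float) -> float:
    """ReLU: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid, squashing inputs into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), at most 0.25 (reached at x = 0)."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  elu={elu(x):+.4f} (grad {elu_grad(x):.4f})  "
              f"sigmoid={sigmoid(x):.4f} (grad {sigmoid_grad(x):.4f})")
```

The derivative columns show the practical difference: the sigmoid's gradient never exceeds 0.25, whereas ELU passes a gradient of 1 for positive inputs and a nonzero αeˣ for negative ones. This is one common explanation for the ELU run converging faster than the Sigmoid run in the logs (test accuracy 0.6878 vs. 0.5181 after the first epoch), although these logs alone do not isolate the cause.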

3.4 Analysis of results

# Compare Tanh (31), Sigmoid (32), ELU (33) and the Experiment 2 baseline model (23)
plt.figure(figsize=(16,3))

plt.subplot(141)

ComPlot([train_all_loss31,train_all_loss32,train_all_loss33,train_all_loss23],title='Train_Loss')

plt.subplot(142)

ComPlot([test_all_loss31,test_all_loss32,test_all_loss33,test_all_loss23],title='Test_Loss')

plt.subplot(143)

ComPlot([train_ACC31,train_ACC32,train_ACC33,train_ACC23],title='Train_ACC')

plt.subplot(144)

ComPlot([test_ACC31,test_ACC32,test_ACC33,test_ACC23],title='Test_ACC')

plt.show()
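The four panels compare the runs visually. To quote exact numbers in the analysis, the per-epoch lines printed by `train_and_test` can be parsed directly. Below is a minimal sketch, assuming every log line follows the `epoch: N | train loss:X | test loss:Y | train acc:A test acc:B:` format shown above. The two sample lines are copied from the final epochs of the Sigmoid (3.2) and ELU (3.3) runs; `parse_log_line` is a hypothetical helper, not part of the original code.

```python
import re

# Pattern for the per-epoch lines printed by train_and_test (format assumed from the logs above)
LOG_PATTERN = re.compile(
    r"epoch:\s*(?P<epoch>\d+)\s*\|\s*train loss:(?P<train_loss>[\d.]+)\s*\|\s*"
    r"test loss:(?P<test_loss>[\d.]+)\s*\|\s*train acc:(?P<train_acc>[\d.]+)\s*"
    r"test acc:(?P<test_acc>[\d.]+)"
)


def parse_log_line(line: str) -> dict:
    """Extract the epoch number, losses and accuracies from one log line."""
    match = LOG_PATTERN.search(line)
    if match is None:
        raise ValueError(f"unrecognized log line: {line!r}")
    return {key: (int(v) if key == "epoch" else float(v))
            for key, v in match.groupdict().items()}


# Final-epoch lines copied from the Sigmoid (3.2) and ELU (3.3) runs
final_lines = {
    "sigmoid": "epoch: 40 | train loss:232.57444 | test loss:40.87718 | train acc:0.826650 test acc:0.81470:",
    "elu": "epoch: 40 | train loss:186.65965 | test loss:34.41847 | train acc:0.861350 test acc:0.84490:",
}

if __name__ == "__main__":
    for name, line in final_lines.items():
        r = parse_log_line(line)
        print(f"{name:8s} epoch {r['epoch']}: test acc {r['test_acc']:.4f}, "
              f"train-test gap {r['train_acc'] - r['test_acc']:.4f}")
```

On these two lines, ELU finishes about 3 percentage points above Sigmoid in test accuracy after 40 epochs (0.8449 vs. 0.8147). Both runs are still improving at epoch 40, so a longer schedule could change the margin.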

4 Effect of the number of hidden layers and hidden units on the multiclass model

The default network has one hidden layer with 256 neurons and uses ReLU as its activation function.
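Changing depth and width changes the model's capacity, which is easiest to compare through the number of trainable parameters. Each `Linear(in, out)` layer holds `in*out` weights plus `out` biases, and activations add none, so the architectures printed in this section (784 inputs, 10 outputs) can be counted directly. Below is a small sketch in plain Python. `count_linear_params` is a hypothetical helper, not part of the original code.

```python
from typing import Sequence


def count_linear_params(in_dim: int, hidden: Sequence[int], out_dim: int) -> int:
    """Total weights + biases of a stack of fully connected layers.

    Layer sizes run in_dim -> hidden[0] -> ... -> hidden[-1] -> out_dim;
    activation functions such as ReLU add no parameters.
    """
    dims = [in_dim, *hidden, out_dim]
    return sum(n_in * n_out + n_out for n_in, n_out in zip(dims, dims[1:]))


if __name__ == "__main__":
    configs = {
        "[128]": [128],
        "[256] (default)": [256],
        "[512, 256]": [512, 256],
        "[512, 256, 128]": [512, 256, 128],
    }
    for name, hidden in configs.items():
        print(f"{name:16s} {count_linear_params(28 * 28, hidden, 10):>8,d} parameters")
```

This gives 101,770, 203,530, 535,818 and 567,434 parameters respectively. The [512, 256, 128] network therefore has roughly 5.6 times as many parameters as the single 128-unit layer.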

4.1 One hidden layer with [128] neurons

# Multiclass model from Experiment 2 with one hidden layer of 128 neurons

model41 = MyNet23(1,28*28,[128],10,act='relu')

model41 = model41.to(device) # train on the GPU if one is available

# Reuse the training function defined in Experiment 2 to avoid duplicating code

train_all_loss41,test_all_loss41,train_ACC41,test_ACC41 = train_and_test(model=model41)

Activation function used in this run: relu

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Linear(in_features=784, out_features=128, bias=True)

(output_layer): Linear(in_features=128, out_features=10, bias=True)

(act): ReLU()

)

epoch: 1 | train loss:681.93590 | test loss:76.80434 | train acc:0.593033 test acc:0.66690:

epoch: 4 | train loss:297.45348 | test loss:49.63937 | train acc:0.786683 test acc:0.78260:

epoch: 8 | train loss:243.03557 | test loss:42.09247 | train acc:0.824183 test acc:0.81620:

epoch: 12 | train loss:222.75402 | test loss:39.42511 | train acc:0.837817 test acc:0.82460:

epoch: 16 | train loss:211.59478 | test loss:37.69665 | train acc:0.843933 test acc:0.83160:

epoch: 20 | train loss:203.98900 | test loss:36.79594 | train acc:0.849383 test acc:0.83710:

epoch: 24 | train loss:198.33328 | test loss:36.61095 | train acc:0.853650 test acc:0.83680:

epoch: 28 | train loss:193.15562 | test loss:35.70038 | train acc:0.858150 test acc:0.84080:

epoch: 32 | train loss:188.59352 | test loss:34.84765 | train acc:0.861850 test acc:0.84480:

epoch: 36 | train loss:184.53490 | test loss:34.03674 | train acc:0.864050 test acc:0.84770:

epoch: 40 | train loss:180.67314 | test loss:33.68400 | train acc:0.866833 test acc:0.84950:

torch.nn feedforward network (multiclass task), 40 epochs, total time: 240.728s

4.2 Two hidden layers with [512, 256] neurons

# Multiclass model from Experiment 2 with two hidden layers of 512 and 256 neurons

model42 = MyNet23(2,28*28,[512,256],10,act='relu')

model42 = model42.to(device) # train on the GPU if one is available

# Reuse the training function defined in Experiment 2 to avoid duplicating code

train_all_loss42,test_all_loss42,train_ACC42,test_ACC42 = train_and_test(model=model42)

Activation function used in this run: relu

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Sequential(

(hidden_layer1): Linear(in_features=784, out_features=512, bias=True)

(hidden_layer2): Linear(in_features=512, out_features=256, bias=True)

)

(output_layer): Linear(in_features=256, out_features=10, bias=True)

(act): ReLU()

)

epoch: 1 | train loss:708.09641 | test loss:76.40485 | train acc:0.580183 test acc:0.66400:

epoch: 4 | train loss:288.94949 | test loss:48.32733 | train acc:0.787650 test acc:0.78370:

epoch: 8 | train loss:234.28474 | test loss:40.69212 | train acc:0.826850 test acc:0.81540:

epoch: 12 | train loss:215.26348 | test loss:38.27233 | train acc:0.839433 test acc:0.82650:

epoch: 16 | train loss:204.36354 | test loss:36.46978 | train acc:0.848867 test acc:0.83570:

epoch: 20 | train loss:196.70722 | test loss:35.85004 | train acc:0.853600 test acc:0.84040:

epoch: 24 | train loss:190.98923 | test loss:34.71030 | train acc:0.858500 test acc:0.84430:

epoch: 28 | train loss:185.85833 | test loss:33.99075 | train acc:0.861583 test acc:0.84810:

epoch: 32 | train loss:181.47207 | test loss:34.23866 | train acc:0.864783 test acc:0.84600:

epoch: 36 | train loss:177.98572 | test loss:33.15456 | train acc:0.866683 test acc:0.85090:

epoch: 40 | train loss:174.48177 | test loss:32.48847 | train acc:0.870033 test acc:0.85360:

torch.nn feedforward network (multiclass task), 40 epochs, total time: 329.830s

4.3 Three hidden layers with [512, 256, 128] neurons

# Multiclass model from Experiment 2 with three hidden layers of 512, 256 and 128 neurons

model43 = MyNet23(3,28*28,[512,256,128],10,act='relu')

model43 = model43.to(device) # train on the GPU if one is available

# Reuse the training function defined in Experiment 2 to avoid duplicating code

train_all_loss43,test_all_loss43,train_ACC43,test_ACC43 = train_and_test(model=model43)

Activation function used in this run: relu

MyNet23(

(input_layer): Flatten(start_dim=1, end_dim=-1)

(hidden_layers): Sequential(

(hidden_layer1): Linear(in_features=784, out_features=512, bias=True)

(hidden_layer2): Linear(in_features=512, out_features=256, bias=True)

(hidden_layer3): Linear(in_features=256, out_features=128, bias=True)

)

(output_layer): Linear(in_features=128, out_features=10, bias=True)

(act): ReLU()

)

epoch: 1 | train loss:847.01465 | test loss:93.53785 | train acc:0.417367 test acc:0.62110:

epoch: 4 | train loss:304.53037 | test loss:50.09736 | train acc:0.764167 test acc:0.76600:

epoch: 8 | train loss:240.31909 | test loss:41.55618 | train acc:0.821067 test acc:0.81370:

epoch: 12 | train loss:217.33034 | test loss:38.41716 | train acc:0.836800 test acc:0.82540:

epoch: 16 | train loss:205.49700 | test loss:36.88623 | train acc:0.847167 test acc:0.83430:

epoch: 20 | train loss:196.54471 | test loss:35.78100 | train acc:0.853717 test acc:0.84150:

epoch: 24 | train loss:190.24209 | test loss:34.97678 | train acc:0.858850 test acc:0.84320:

epoch: 28 | train loss:185.20985 | test loss:34.24367 | train acc:0.862883 test acc:0.84530:

epoch: 32 | train loss:180.86948 | test loss:34.61108 | train acc:0.865383 test acc:0.84120:

epoch: 36 | train loss:177.17262 | test loss:33.39929 | train acc:0.867300 test acc:0.84920:

epoch: 40 | train loss:173.78181 | test loss:32.69894 | train acc:0.869633 test acc:0.85120:

torch.nn feedforward network (multiclass task), 40 epochs, total time: 346.591s
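In the calls above, the first argument of `MyNet23` (the number of hidden layers) is passed separately from the list of widths, so the two can drift apart. Below is a hypothetical plain-Python helper that derives the layer shapes from the width list alone, together with a guard that rejects a mismatched count. Neither function is part of the original code. For [512, 256, 128] the shapes reproduce the `Linear` layers printed for this run.

```python
from typing import List, Sequence, Tuple


def linear_shapes(in_dim: int, hidden: Sequence[int], out_dim: int) -> List[Tuple[int, int]]:
    """(in_features, out_features) for each Linear layer: hidden layers first, output layer last."""
    dims = [in_dim, *hidden, out_dim]
    return list(zip(dims[:-1], dims[1:]))


def checked_num_hidden(num_hidden: int, hidden: Sequence[int]) -> int:
    """Hypothetical guard: raise if the declared depth disagrees with the width list."""
    if num_hidden != len(hidden):
        raise ValueError(f"num_hidden={num_hidden} but {len(hidden)} widths given: {list(hidden)}")
    return num_hidden


if __name__ == "__main__":
    widths = [512, 256, 128]
    print(checked_num_hidden(3, widths))    # matches the MyNet23(3, ...) call above
    print(linear_shapes(28 * 28, widths, 10))
```

With `torch.nn`, each pair would become one `nn.Linear(n_in, n_out)` in order. The printed `hidden_layer1` to `hidden_layer3` and `output_layer` correspond to the four pairs returned here.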

4.4 Analysis of results

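# Compare hidden sizes [128] (41), [512,256] (42), [512,256,128] (43) and the default [256] model (23)
plt.figure(figsize=(16,3))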

plt.subplot(141)

ComPlot([train_all_loss41,train_all_loss42,train_all_loss43,train_all_loss23],title='Train_Loss',flag='hidden')

plt.subplot(142)

ComPlot([test_all_loss41,test_all_loss42,test_all_loss43,test_all_loss23],title='Test_Loss',flag='hidden')

plt.subplot(143)

ComPlot([train_ACC41,train_ACC42,train_ACC43,train_ACC23],title='Train_ACC',flag='hidden')

plt.subplot(144)

ComPlot([test_ACC41,test_ACC42,test_ACC43,test_ACC23],title='Test_ACC', flag='hidden')

plt.show()
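To set the curves against their cost, the final-epoch numbers and total training times printed for 4.1 to 4.3 can be tabulated. The values below are copied from the logs above. The per-epoch time simply divides the reported total by 40 and includes whatever evaluation `train_and_test` performs, so it is only a rough cost indicator. Timings also vary between runs: the 4.1 run with 128 units took longer than the 3.3 ELU run with 256 units.

```python
# Final-epoch metrics and total wall-clock times copied from the 4.1-4.3 logs
runs = {
    "[128]":           {"train_acc": 0.866833, "test_acc": 0.84950, "total_s": 240.728},
    "[512, 256]":      {"train_acc": 0.870033, "test_acc": 0.85360, "total_s": 329.830},
    "[512, 256, 128]": {"train_acc": 0.869633, "test_acc": 0.85120, "total_s": 346.591},
}
EPOCHS = 40


def summarize(runs: dict, epochs: int) -> list:
    """Return (name, test_acc, train-test gap, seconds per epoch) rows, best test accuracy first."""
    rows = [
        (name, r["test_acc"], r["train_acc"] - r["test_acc"], r["total_s"] / epochs)
        for name, r in runs.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for name, acc, gap, sec in summarize(runs, EPOCHS):
        print(f"{name:16s} test acc {acc:.4f}  gap {gap:.4f}  {sec:.2f} s/epoch")
```

On these single runs, the two-hidden-layer network finishes highest (0.8536), the three-layer network slightly lower (0.8512) and the single 128-unit layer lowest (0.8495). The spread is under half a percentage point while total training time grows by roughly 37% to 44%. With one run per configuration, these differences should be read as tentative.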
