系统环境:centos7

软件版本:jdk1.8、zookeeper3.4.8、hadoop2.8.5、hive1.1.0

一、安装

hive官网

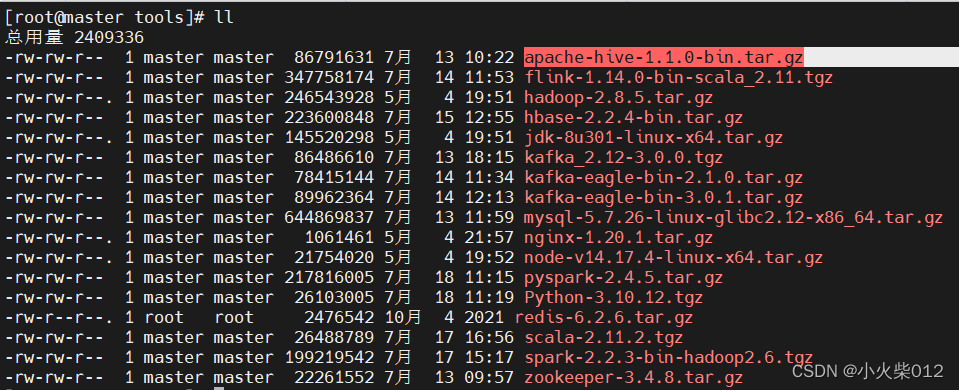

下载hive安装包,上传到linux服务器上,

解压安装包

tar -zxvf apache-hive-1.1.0-bin.tar.gz -C /home/local/

重命名文件

mv apache-hive-1.1.0-bin/ hive

二、Hive部署

配置Hive环境

vi /etc/profile

添加如下配置

#hive

export HIVE_HOME=/home/local/hive

export PATH=$PATH:${HIVE_HOME}/bin

数据库设置

mysql -uroot -p

#创建数据库

create database metastore;

#关闭只读

set global read_only=0;

#设置密码安全策略

set global validate_password_policy=0;

#设置密码长度

set global validate_password_length=4;

#更改密码

ALTER USER 'root'@'localhost' IDENTIFIED BY 'root';

#数据库授权

grant all on metastore.* to hive@'%' identified by 'hive';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'root';

#刷新权限

flush privileges;

#退出mysql

exit

上传mysql-connector到Hive库目录

cp mysql-connector-java-5.1.49.jar /home/local/hive/lib/

修改hive配置文件

复制hive-env.sh

cp /home/local/hive/conf/hive-env.sh.template /home/local/hive/conf/hive-env.sh

修改hive-env.sh文件,参考如下内容:

vim /home/local/hive/conf/hive-env.sh

export JAVA_HOME=/home/local/java

export HADOOP_HOME=/home/local/hadoop

export HIVE_HOME=/home/local/hive

export HIVE_CONF_DIR=/home/local/hive/conf

复制hive-site.xml

cp /home/local/hive/conf/hive-default.xml.template /home/local/hive/conf/hive-site.xml

修改hive-site.xml

property>

<name>hive.exec.scratchdir</name>

<value>/user/hive/tmp</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/user/hive/log</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/metastore?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

初始化hive元数据库

schematool -dbType mysql -initSchema root root

分发hive至每个节点服务器

for i in {1..2};do scp -r /home/local/hive/ root@slave${i}:/home/local/;done

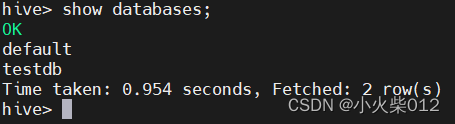

三、Hive测试

启动Hive

启动mysql

systemctl start mysql

hive

hive创建数据库

create database testdb;

创建表

drop table id exists testdb.score;

create table testdb.score

(name string comment "名称",

gender string comment "性别",

score int comment "分数")

row format delimited fields terminated by '\t'

lines terminated by '\n'

stored as textfile;

删除表

drop table if exists table;

本地文件导入hive

load data local inpath '' into table ''

hdfs文件导入hive

在/opt/hive/data/目录下新建stu_score.txt文件,添加如下内容

jone male 30

mike male 40

sunny female 50

文件上传到hdfs上

hdfs dfs -put /opt/hive/data/stu_score.txt /hive/warehouse

hdfs上传到hive表中

load data inpath '/hive/warehouse/stu_score.txt' into table score;

exit;

Java程序将hdfs文件上传到hive

导入依赖

<!--hadoop-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.8.5</version>

</dependency>

<!--hive-->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>1.1.0</version>

</dependency>

编写测试用例

public class HiveTest {

public static void main(String[] args) throws SQLException {

HdfsFileUploader("input/stu_score.txt", "/hive/warehouse");

HdfsToHiveUploader("/hive/warehouse/stu_score.txt", "score");

}

/**

* 使用sql查询数据库 统计时间 测试性能

*

* @param sql

* @return

*/

public static List getDataList(String sql) {

long start = System.currentTimeMillis();

List list = new ArrayList();

Connection connection = getConnection();

try {

Statement statement = connection.createStatement();

ResultSet resultSet = statement.executeQuery(sql);

ResultSetMetaData metaData = resultSet.getMetaData();

int columnCount = metaData.getColumnCount();

while (resultSet.next()) {

Map map = new HashMap<>();

for (int i = 1; i <= columnCount; i++) {

// 获取字段名称 metaData.getColumnName(i)

map.put(metaData.getColumnName(i), resultSet.getObject(i));

}

list.add(map);

// Process the result set

}

resultSet.close();

statement.close();

connection.close();

} catch (Exception e) {

e.printStackTrace();

}

long end = System.currentTimeMillis();

System.out.println("本次查询耗时" + (end - start) / 1000 + "秒");

return list;

}

/**

* 获取数据库连接对象

*

* @return

*/

public static Connection getConnection() {

Connection connection = null;

try {

// Load Hive JDBC driver

Class.forName("org.apache.hive.jdbc.HiveDriver");

// Establish connection to Hive

connection = DriverManager.getConnection("jdbc:hive2://192.168.245.200:10000/testdb", "root", "root");

} catch (Exception e) {

e.printStackTrace();

}

return connection;

}

/**

* 本地文件上传到hdfs

*/

public static void HdfsFileUploader(String localFilePath, String hdfsFilePath) {

Configuration configuration = new Configuration();

configuration.set("fs.defaultFS", "hdfs://192.168.245.200:9000"); // 设置HDFS的URL

try {

FileSystem fileSystem = FileSystem.get(configuration);

fileSystem.copyFromLocalFile(new Path(localFilePath), new Path(hdfsFilePath));

System.out.println("File uploaded to HDFS successfully.");

} catch (IOException e) {

e.printStackTrace();

}

}

/**

* hdfs上传到hive

*/

public static void HdfsToHiveUploader(String hdfsFilePath, String tableName) {

try {

Connection connection = getConnection();

Statement statement = connection.createStatement();

// Create temporary external table in Hive

String createTableQuery = "CREATE EXTERNAL TABLE IF NOT EXISTS " + tableName + " (City STRING, City_Admaster STRING,City_EN STRING,Province STRING,Province_EN STRING,Region STRING,Tier STRING) " + "ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' " + "STORED AS TEXTFILE " + "LOCATION '/hive/warehouse/china_city_list'";

statement.execute(createTableQuery);

// Load data from HDFS to Hive table

String loadDataQuery = "LOAD DATA INPATH '" + hdfsFilePath + "' OVERWRITE INTO TABLE " + tableName;

statement.execute(loadDataQuery);

System.out.println("File uploaded from HDFS to Hive successfully.");

// Close connection and statement

statement.close();

connection.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

四、总结

本次实验中,Hive采用MySQL数据库保存Hive的元数据,而不是采用Hive自带的derby来存储元数据。

Hive常用的HiveQL操作命令主要包括:数据定义、数据操作。

Hive实现最大的优势是,对于非程序员,不用学习编写Java MapReduce代码,也可以完成MapReduce任务。