Without further ado, straight to the official docs:

Overview | Apache Flink

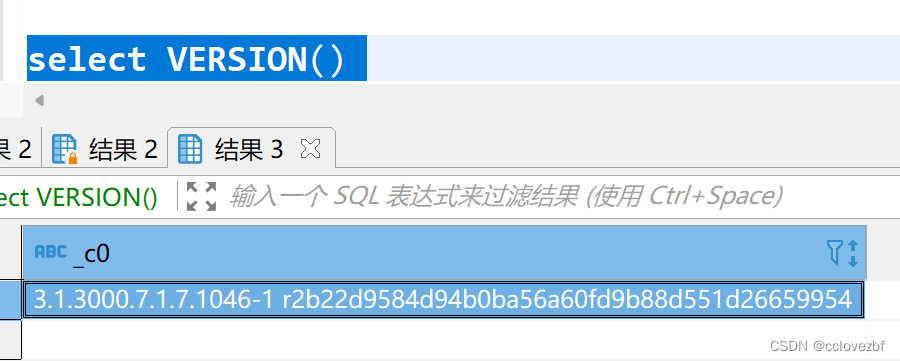

Hive version: 3.1.3000

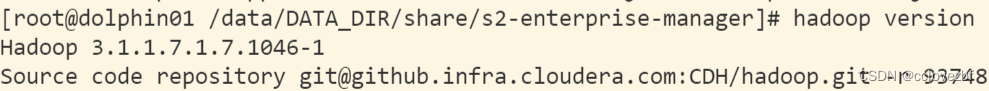

Hadoop version: 3.1.1.7.1.7

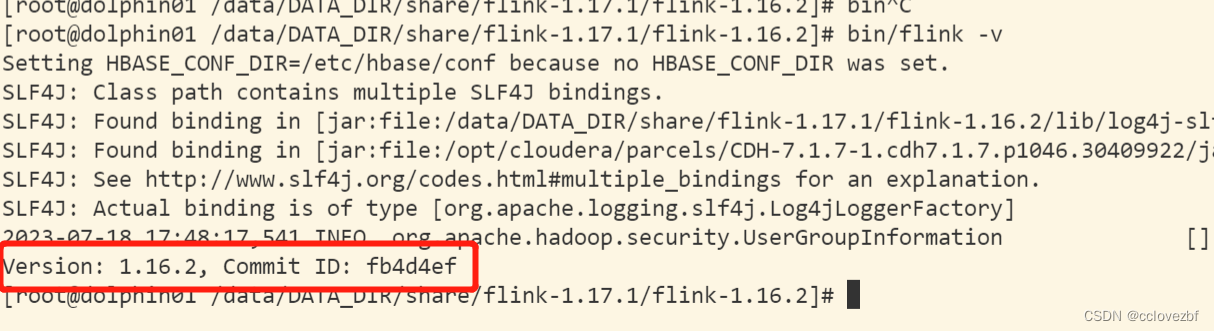

Flink version: 1.16.2

The code is simple, but I'll paste it anyway:

```scala
import com.fasterxml.jackson.databind.ObjectMapper
import com.typesafe.config.{Config, ConfigFactory}
import org.apache.flink.api.common.functions.MapFunction
import org.apache.flink.api.connector.sink2.SinkWriter
import org.apache.flink.api.java.utils.ParameterTool
import org.apache.flink.connector.elasticsearch.sink.{Elasticsearch7SinkBuilder, ElasticsearchSink, RequestIndexer}
import org.apache.flink.streaming.api.datastream.{DataStream, SingleOutputStreamOperator}
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment
import org.apache.flink.table.api.{SqlDialect, Table}
import org.apache.flink.table.catalog.hive.HiveCatalog
import org.apache.flink.types.Row
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.security.UserGroupInformation
import org.apache.http.HttpHost
import org.elasticsearch.action.update.UpdateRequest
import org.elasticsearch.common.xcontent.XContentType
import org.slf4j.{Logger, LoggerFactory}

import java.util
import java.util.Base64
import scala.collection.mutable

object Hive2ESProcess {

  private val logger: Logger = LoggerFactory.getLogger(this.getClass.getName)

  // val path = "C:\\Users\\coder\\Desktop\\kerberos\\cdp\\hive-conf"
  // val hiveConfDir = "C:\\Users\\coder\\Desktop\\kerberos\\cdp\\hive-conf"
  // Point to the Hadoop config location; otherwise you may get an unknown-hosts error:
  // SHOW DATABASES / TABLES / FUNCTIONS works, but SELECT does not.
  // val hadoopConfDir = "C:\\Users\\coder\\Desktop\\kerberos\\cdp\\hive-conf"

  val name = "s2cluster"
  val defaultDatabase = "dwintdata"

  def main(args: Array[String]): Unit = {
    val config: Config = ConfigFactory.load("hive2es.conf")
    val hiveConfig: Config = config.getConfig("hive")
    val esConfig: Config = config.getConfig("es")

    // Kerberos authentication
    kbsAuth(hiveConfig)

    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val tEnv: StreamTableEnvironment = StreamTableEnvironment.create(env)

    // Connect to Hive
    createHiveCatalog(tEnv, hiveConfig)

    // Query the Hive data
    val params: ParameterTool = ParameterTool.fromArgs(args)
    val hiveTable: String = params.get("source", "dwintdata.dw_f_da_enterprise_business_info")
    val index: String = params.get("target", "i_dw_f_da_enterprise_business_info")
    val eid: String = params.get("es.id", "eid")
    logger.info(s"hiveTable=${hiveTable},esIndex=${index}, es.id=${eid}")

    // Add the source
    val table: Table = tEnv.sqlQuery("select * from " + hiveTable)
    val value: DataStream[Row] = tEnv.toDataStream(table)

    import collection.JavaConverters._

    // Convert every Row into a column-name -> string-value map
    val result: SingleOutputStreamOperator[mutable.Map[String, Object]] = value
      .map(new MapFunction[Row, mutable.Map[String, Object]] {
        override def map(r: Row): mutable.Map[String, Object] = {
          val fieldNames: util.Set[String] = r.getFieldNames(true)
          val fields: Set[String] = fieldNames.asScala.toSet[String]
          val map: mutable.Map[String, Object] = mutable.Map[String, Object]()
          for (columnName <- fields) {
            val columnValue: AnyRef = r.getField(columnName)
            map.put(columnName, if (columnValue == null) null else columnValue.toString)
          }
          map
        }
      })

    // Build the ES upsert request for one record
    def createIndex(element: mutable.Map[String, Object]): UpdateRequest = {
      import collection.JavaConverters._
      var esId = ""
      // A single column may not be unique on its own, so the id can be built from several columns
      val columns: Array[String] = eid.split(",")
      for (column <- columns) {
        if (!element.contains(column)) throw new RuntimeException(s"column $column not exists")
        esId = esId + element(column)
      }
      val json: String = new ObjectMapper().writeValueAsString(element.asJava)
      // Problem with generating the id as below: on a rerun elt_create_time differs, so the
      // encoded result differs too, and a single rerun inserts every record twice.
      // val eid: String = Base64.getEncoder.encodeToString(json.getBytes)
      val request = new UpdateRequest
      request.index(index)
        .id(esId)
        .doc(json, XContentType.JSON)
        .docAsUpsert(true) // upsert
    }

    // Add the sink
    val esSink: ElasticsearchSink[mutable.Map[String, Object]] = new Elasticsearch7SinkBuilder[mutable.Map[String, Object]]
      .setBulkFlushMaxActions(1)
      .setHosts(new HttpHost(esConfig.getString("host"), esConfig.getInt("port"), "http"))
      .setConnectionUsername(esConfig.getString("username"))
      .setConnectionPassword(esConfig.getString("password"))
      // .setBulkFlushMaxActions() // maximum number of buffered actions per bulk request, default 1000
      .setBulkFlushInterval(1000) // flush interval in ms, default -1
      .setEmitter((element: mutable.Map[String, Object], context: SinkWriter.Context, indexer: RequestIndexer) => {
        indexer.add(createIndex(element))
      }).build()

    result.sinkTo(esSink)
    env.execute()
  }

  def kbsAuth(config: Config): Unit = {
    val keytab: String = config.getString("keytab")
    val principal: String = config.getString("principal")
    val krbPath: String = config.getString("krb5Conf")
    logger.info(s"login as user: $principal with keytab: $keytab")
    System.setProperty("java.security.krb5.conf", krbPath)
    val hadoopConfig: Configuration = new Configuration
    hadoopConfig.set("hadoop.security.authentication", "kerberos")
    // Hand the configuration to UGI; without this call hadoopConfig is never used
    UserGroupInformation.setConfiguration(hadoopConfig)
    try {
      UserGroupInformation.loginUserFromKeytab(principal, keytab)
    } catch {
      case ex: Exception =>
        logger.error("Kerberos login failure, error:", ex)
    }
  }

  def createHiveCatalog(tEnv: StreamTableEnvironment, hiveConfig: Config): Unit = {
    val hiveConf: String = hiveConfig.getString("conf")
    // val hadoopConf: String = hiveConfig.getString("hadoopConf")
    val hiveVersion: String = hiveConfig.getString("version")
    val hive = new HiveCatalog(name, defaultDatabase, hiveConf, hiveVersion)
    tEnv.registerCatalog(name, hive)
    tEnv.useCatalog(name)
    tEnv.getConfig.setSqlDialect(SqlDialect.HIVE)
  }
}
```
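The document id logic in `createIndex` can be tried in isolation. Below is a minimal sketch using only the Scala standard library, assuming the row has already been turned into a string map. `EsIdSketch`, `buildEsId`, the `dt` column and the `_` separator are illustrative names and choices of mine, not part of the job above (the job concatenates the values without a separator).

```scala
object EsIdSketch {
  /** Joins the values of the configured id columns into one document id.
    * `idColumns` is a comma-separated list such as "eid,dt" (hypothetical column names).
    * The separator is an assumption of this sketch; it keeps ("ab","c") and ("a","bc") apart. */
  def buildEsId(row: Map[String, String], idColumns: String, sep: String = "_"): String = {
    val columns = idColumns.split(",").map(_.trim).filter(_.nonEmpty)
    require(columns.nonEmpty, "at least one id column is required")
    columns.map { c =>
      row.get(c) match {
        case Some(v) if v != null => v
        case Some(_)              => throw new IllegalArgumentException(s"id column $c is null")
        case None                 => throw new IllegalArgumentException(s"column $c does not exist")
      }
    }.mkString(sep)
  }
}
```

Because the id depends only on the chosen columns, a rerun whose `elt_create_time` differs still maps to the same id, so the upsert overwrites the document instead of inserting a second copy.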

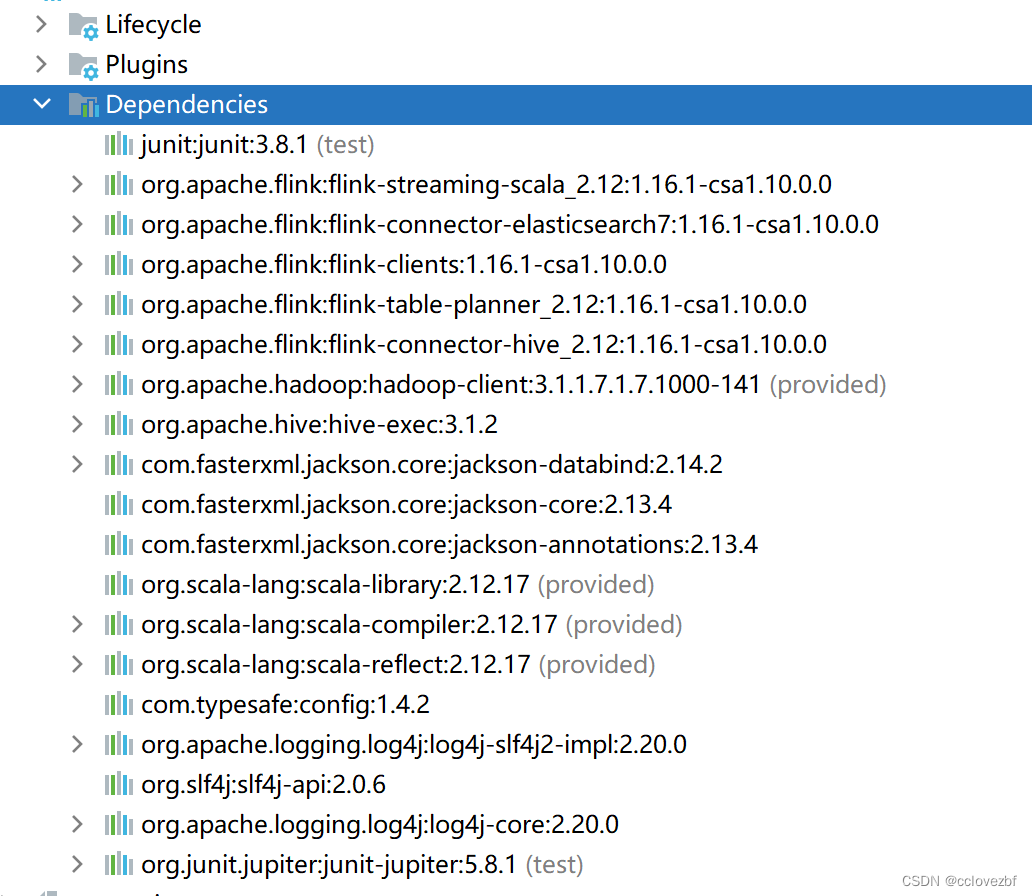

Dependencies:

```xml
<hadoop.version>3.1.1.7.1.7.1000-141</hadoop.version>
<flink.version>1.16.1-csa1.10.0.0</flink.version>

<dependencies>
  <dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>3.8.1</version>
    <scope>test</scope>
  </dependency>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-scala_2.12</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-elasticsearch7</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-planner_${scala.binary}</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <!-- <dependency>-->
  <!--   <groupId>org.apache.flink</groupId>-->
  <!--   <artifactId>flink-table-planner-blink_${scala.binary}</artifactId>-->
  <!--   <version>${flink.version}</version>-->
  <!-- </dependency>-->
  <!-- <dependency>-->
  <!--   <groupId>org.apache.flink</groupId>-->
  <!--   <artifactId>flink-table-api-java-bridge_2.12</artifactId>-->
  <!--   <version>${flink.version}</version>-->
  <!-- </dependency>-->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-hive_${scala.binary}</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <!-- Hadoop does not need to be bundled because the server's HADOOP_HOME provides it,
       but hadoop-mapreduce-client-core-3.1.1.7.1.7.1046-1.jar was missing when Flink started -->
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>${hadoop.version}</version>
    <scope>${compile.scope}</scope>
  </dependency>
  <!-- Use the 3.1.2 jar; the server's 3.1.3 version causes an error -->
  <dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>3.1.2</version>
    <!-- <scope>${compile.scope}</scope>-->
  </dependency>
  <dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.14.2</version>
  </dependency>
  <dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-core</artifactId>
    <version>2.13.4</version>
  </dependency>
  <dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-annotations</artifactId>
    <version>2.13.4</version>
  </dependency>
</dependencies>
```

Problems I ran into

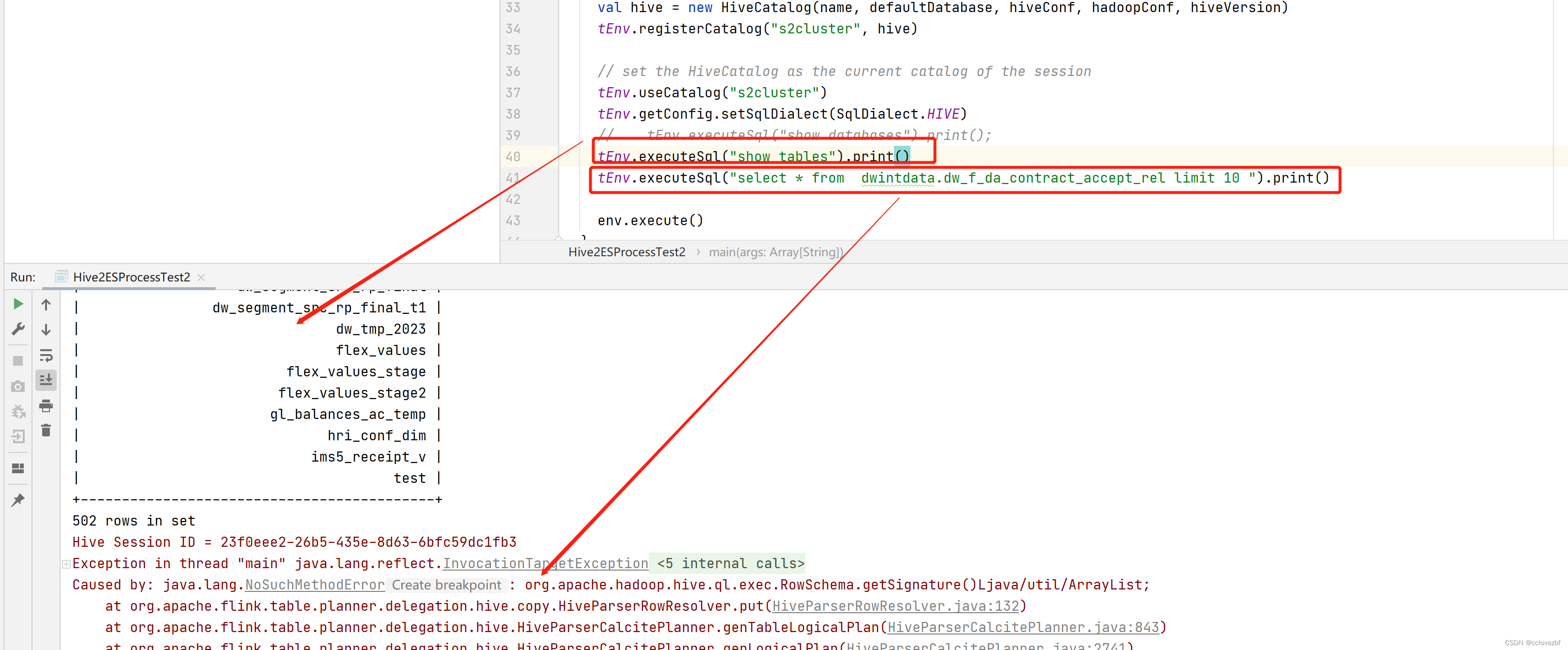

1. At first I ran the job locally with <hive.version>3.1.3000.7.1.7.1000-141</hive.version>,

that is, the same Hive version as the cluster, and it failed.

The error: Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.exec.RowSchema.getSignature()Ljava/util/ArrayList;

Let's look at where the problem is.

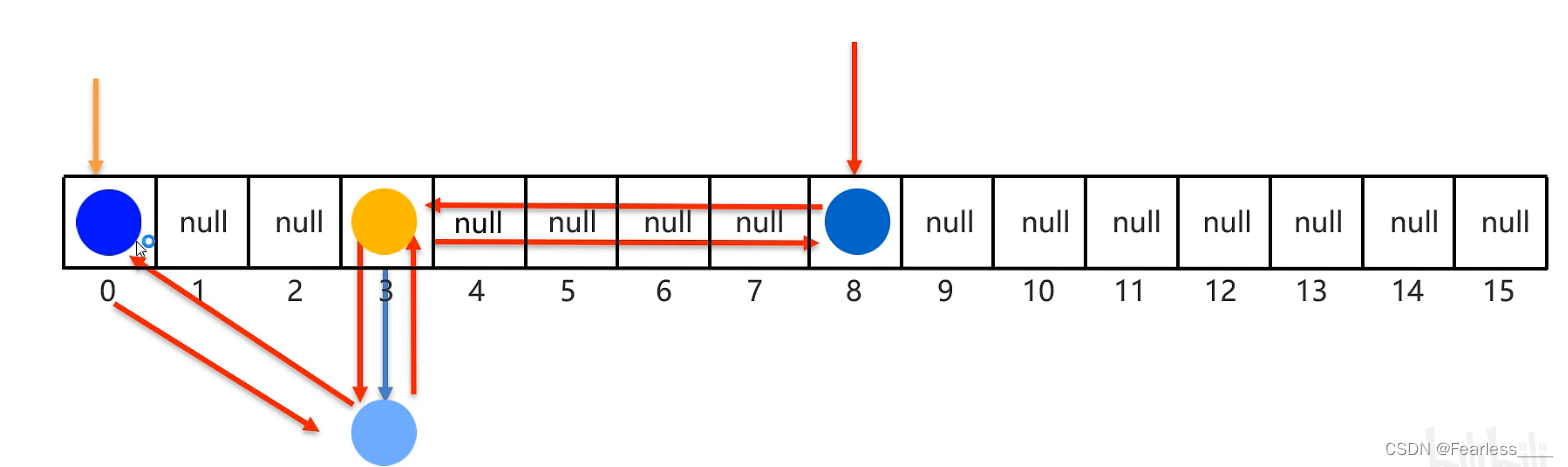

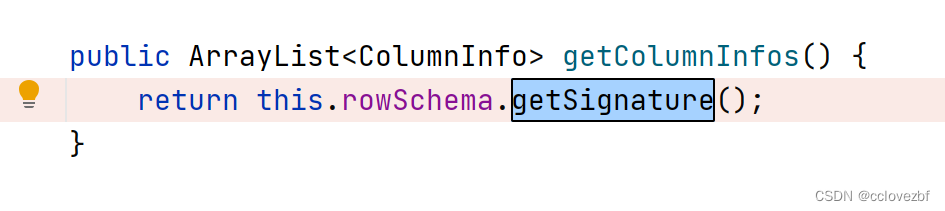

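In the Flink code, note that the call expects an ArrayList as the return type.

Hive 3.1.3 returns List.

Hive 3.1.2 returns ArrayList.

So the problem is simple: Flink 1.16 does not work with Hive 3.1.3, so I just downgraded the Hive dependency.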
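The `NoSuchMethodError` above follows from how the JVM links calls: a method is looked up by name plus its full descriptor, and the descriptor includes the return type. The small sketch below (standard library only; `DescriptorSketch` is a name I made up) prints the descriptors of a no-argument method returning `ArrayList` versus `List`, which shows why code compiled against one cannot find the other at runtime.

```scala
import java.lang.invoke.MethodType

object DescriptorSketch {
  /** JVM descriptor of a no-argument method with the given return type. */
  def descriptor(returnType: Class[_]): String =
    MethodType.methodType(returnType).toMethodDescriptorString

  def main(args: Array[String]): Unit = {
    // What the caller was compiled against, as seen in the error message
    println(descriptor(classOf[java.util.ArrayList[_]])) // ()Ljava/util/ArrayList;
    // What a class offers once the return type is widened to List
    println(descriptor(classOf[java.util.List[_]]))      // ()Ljava/util/List;
  }
}
```

The two strings differ, so a change that is harmless at the source level still breaks already-compiled callers.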

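I did not spot this right away. The official docs say 3.1.3 is supported in one place, but further down only 3.1.2 is listed.

After fixing this, the job ran normally on my local machine.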

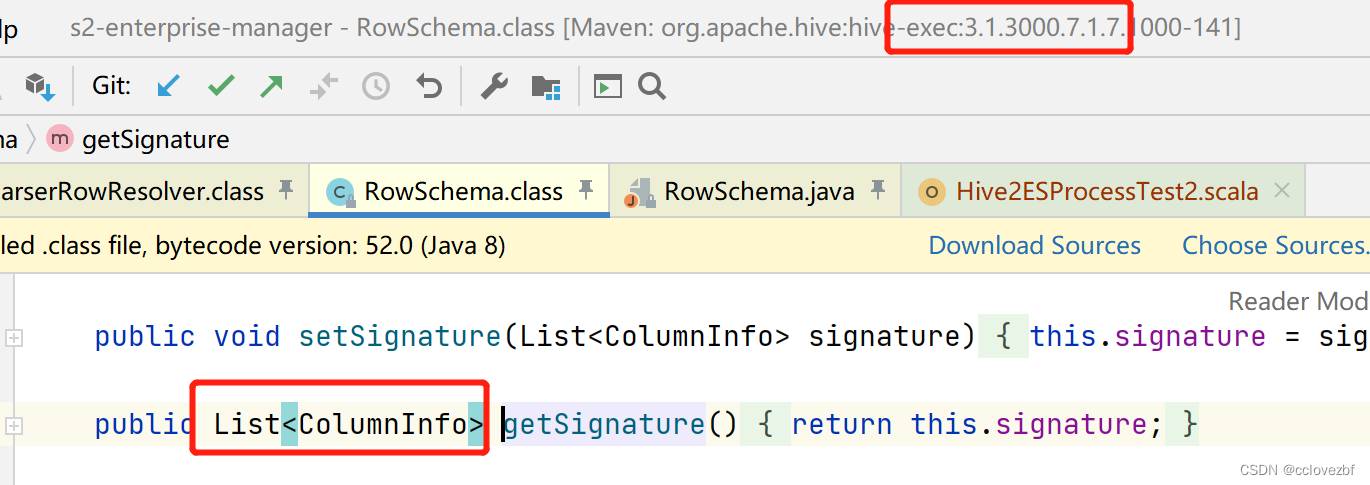

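Then I uploaded it to the server. At first I built a fat jar with every dependency bundled (Hadoop, Hive, Flink), and it failed. I had authenticated dozens of times locally and on the server, and suddenly this error appeared.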

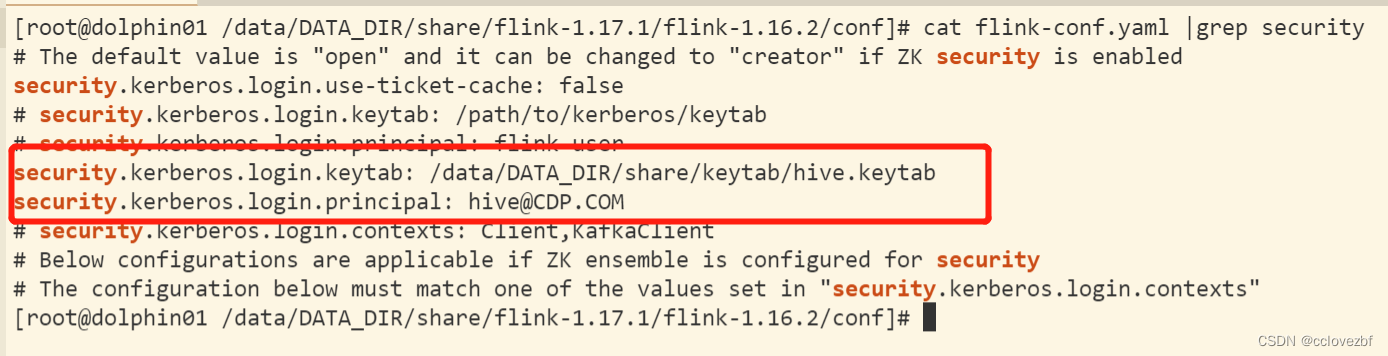

This is the authentication configured for the Flink program in the yml.

That authentication succeeded, while the one in my code failed. The path is correct, and `kinit -kt` succeeds.

org.apache.hadoop.security.KerberosAuthException: failure to login: for principal: hive@CDP.COM from keytab /data/DATA_DIR/share/keytab/hive.keytab javax.security.auth.login.LoginException: Unable to obtain password from user

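I later asked someone to help look at it. At first we suspected a jar problem, but that turned out not to be the cause. I'll skip this issue for now because it does not block the rest.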
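The cause of this login failure is not established here. One commonly reported trigger for `Unable to obtain password from user` is that the JVM performing the login cannot read the keytab, for example because it runs on a different host or as a different OS user than the shell where `kinit` was tested. A minimal pre-flight check for that hypothesis, using only the standard library (`KeytabCheckSketch` and `checkKeytab` are hypothetical names):

```scala
import java.nio.file.{Files, Path, Paths}

object KeytabCheckSketch {
  /** Right(path) if the keytab exists, is a regular file and is readable by the
    * OS user running this JVM; otherwise Left with a human-readable reason. */
  def checkKeytab(keytab: String): Either[String, Path] = {
    val p = Paths.get(keytab)
    val user = System.getProperty("user.name")
    if (!Files.exists(p)) Left(s"keytab not found on this host: $p")
    else if (!Files.isRegularFile(p)) Left(s"keytab path is not a regular file: $p")
    else if (!Files.isReadable(p)) Left(s"keytab not readable by OS user $user: $p")
    else Right(p)
  }
}
```

Logging the result of such a check right before `loginUserFromKeytab` would at least show whether the file is visible from the process that actually runs the login.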

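After hitting this problem on the server, someone suggested that hadoop-client and hive-exec did not need to be bundled, so I changed both to provided.

That failed as well.

The error was: org.apache.hadoop.hive.metastore.api.NoSuchObjectException

Isn't that just the Hive jar missing?

At the time I assumed the Hive jar was simply missing and even tried `ln -s` to link the jars from CDH/hive/conf into flink_home/lib, which turned out to be a mistake.

The hive-exec 3.1.2 dependency still has to be bundled into the jar.

So now hive-exec 3.1.2 was compile scope and hadoop-client was provided. After building the jar, yet another error showed up.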

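This time it was a ClassNotFoundException for org.apache.hadoop.mapred.JobConf. Unbelievable.

Locally the job runs with all jars bundled.

On the server, Flink's own export of `hadoop classpath` plus the hive-exec 3.1.2 I bundled should have been enough.

Nothing for it but to keep digging. Locally I found that this class lives in hadoop-mapreduce-client-core-3.1.1.7.1.7.1046-1.jar.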
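Finding which jar provides a class can also be done from inside the JVM. The sketch below uses only the standard library; `ClassLocatorSketch` and `locate` are illustrative names. It returns `None` when the class is not on the classpath and otherwise the location it was loaded from.

```scala
import scala.util.Try

object ClassLocatorSketch {
  /** Some(location) if the class can be loaded, None if it is not on the classpath.
    * Classes from the JDK itself may have no code source; a placeholder is returned then. */
  def locate(className: String): Option[String] =
    Try(Class.forName(className, false, getClass.getClassLoader)).toOption.map { cls =>
      Option(cls.getProtectionDomain.getCodeSource)
        .flatMap(cs => Option(cs.getLocation))
        .map(_.toString)
        .getOrElse("<bootstrap or unknown>")
    }
}
```

Calling `ClassLocatorSketch.locate("org.apache.hadoop.mapred.JobConf")` in the local project and again on the server would show whether, and from which jar, the class resolves in each environment.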

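So why was it missing on the server? I checked with ls -l /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/*core*

`hadoop classpath` includes this directory, and the directory contains the jar, so in theory there should be no problem.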
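To check a long classpath string without reading it by eye, the entries can be split and filtered. A minimal sketch with the standard library only; `ClasspathFilterSketch` and `entriesContaining` are names of my own, and the `:` separator assumes a Linux-style classpath such as the output of `hadoop classpath`.

```scala
object ClasspathFilterSketch {
  /** Splits a classpath string and keeps the non-empty entries that contain `needle`. */
  def entriesContaining(classpath: String, needle: String, sep: String = ":"): List[String] =
    classpath.split(sep).toList.map(_.trim).filter(e => e.nonEmpty && e.contains(needle))

  def main(args: Array[String]): Unit = {
    // Pass the classpath string as the first argument
    args.headOption.foreach { cp =>
      entriesContaining(cp, "hadoop-mapreduce").foreach(println)
    }
  }
}
```

An empty result for `hadoop-mapreduce` means the process would not see `JobConf` from the cluster installation, regardless of what is on disk.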

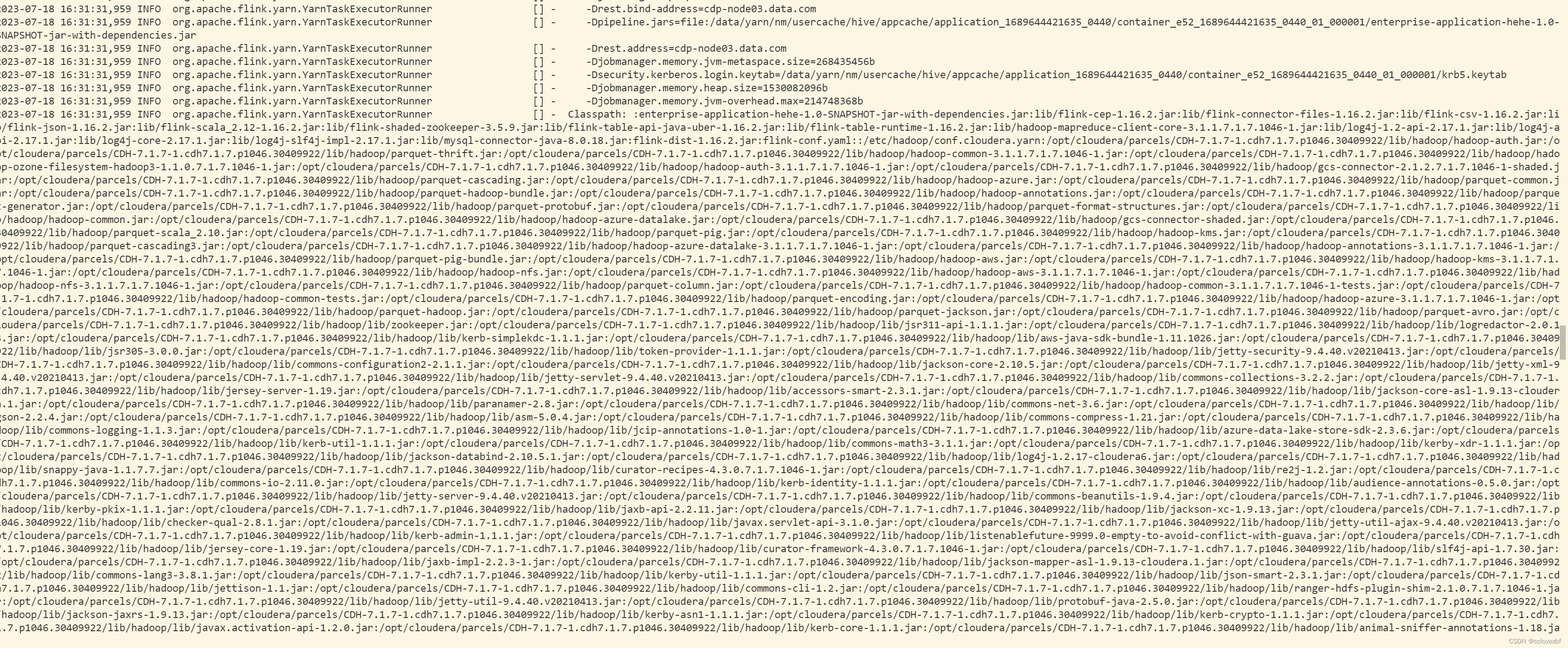

So next we need to check which jars Flink actually loaded at startup, using the Flink startup log.

After formatting it a little, we find that Flink indeed did not load anything from the hadoop-mapreduce directory.

Classpath:

:

enterprise-application-hehe-1.0-SNAPSHOT-jar-with-dependencies.jar:

lib/flink-cep-1.16.2.jar:

lib/flink-connector-files-1.16.2.jar:

lib/flink-csv-1.16.2.jar:

lib/flink-json-1.16.2.jar:

lib/flink-scala_2.12-1.16.2.jar:

lib/flink-shaded-zookeeper-3.5.9.jar:

lib/flink-table-api-java-uber-1.16.2.jar:

lib/flink-table-runtime-1.16.2.jar:

lib/hadoop-mapreduce-client-core-3.1.1.7.1.7.1046-1.jar: -- I put this one into the directory myself

lib/log4j-1.2-api-2.17.1.jar:

lib/log4j-api-2.17.1.jar:

lib/log4j-core-2.17.1.jar:

lib/log4j-slf4j-impl-2.17.1.jar:

lib/mysql-connector-java-8.0.18.jar:

flink-dist-1.16.2.jar:

flink-conf.yaml:

:

/etc/hadoop/conf.cloudera.yarn:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-auth.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-thrift.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-common-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-ozone-filesystem-hadoop3-1.1.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-auth-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/gcs-connector-2.1.2.7.1.7.1046-1-shaded.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-cascading.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-azure.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-common.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-hadoop-bundle.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-annotations.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-generator.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-protobuf.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-format-structures.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-common.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-azure-datalake.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/gcs-connector-shaded.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-scala_2.10.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-pig.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-kms.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-cascading3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-azure-datalake-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-annotations-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-pig-bundle.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-aws.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-kms-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-nfs.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-aws-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-nfs-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-column.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-common-3.1.1.7.1.7.1046-1-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-common-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-encoding.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/hadoop-azure-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-hadoop.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-jackson.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/parquet-avro.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/zookeeper.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jsr311-api-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/logredactor-2.0.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-simplekdc-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/aws-java-sdk-bundle-1.11.1026.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jsr305-3.0.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/token-provider-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-security-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-configuration2-2.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-core-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-xml-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-servlet-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-collections-3.2.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jersey-server-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/accessors-smart-2.3.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-core-asl-1.9.13-cloudera.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/paranamer-2.8.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-net-3.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/gson-2.2.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/asm-5.0.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-compress-1.21.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-logging-1.1.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jcip-annotations-1.0-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/azure-data-lake-store-sdk-2.3.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-util-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-math3-3.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerby-xdr-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-databind-2.10.5.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/log4j-1.2.17-cloudera6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/snappy-java-1.1.7.7.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/curator-recipes-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/re2j-1.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-io-2.11.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-identity-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/audience-annotations-0.5.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-server-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-beanutils-1.9.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerby-pkix-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jaxb-api-2.2.11.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-xc-1.9.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/checker-qual-2.8.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/javax.servlet-api-3.1.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-util-ajax-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-admin-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jersey-core-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/curator-framework-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/slf4j-api-1.7.30.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jaxb-impl-2.2.3-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-mapper-asl-1.9.13-cloudera.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-lang3-3.8.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerby-util-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/json-smart-2.3.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jettison-1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-cli-1.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/ranger-hdfs-plugin-shim-2.1.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-util-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/protobuf-java-2.5.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-jaxrs-1.9.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerby-asn1-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-crypto-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/javax.activation-api-1.2.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-core-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/animal-sniffer-annotations-1.18.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/nimbus-jose-jwt-7.9.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-codec-1.14.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/httpclient-4.5.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-webapp-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/log4j-api-2.17.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/wildfly-openssl-1.0.7.Final.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jackson-annotations-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/ranger-plugin-classloader-2.1.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-server-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-client-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/slf4j-log4j12.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/stax2-api-3.1.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/curator-client-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jsch-0.1.54.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/guava-28.1-jre.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/ranger-yarn-plugin-shim-2.1.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/j2objc-annotations-1.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jersey-servlet-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jersey-json-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/failureaccess-1.0.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/log4j-core-2.17.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/zookeeper-jute.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-io-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/xz-1.8.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/httpcore-4.4.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jetty-http-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jsp-api-2.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/jul-to-slf4j-1.7.30.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/netty-all-4.1.68.Final.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/woodstox-core-5.0.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerby-config-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/metrics-core-3.2.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/kerb-common-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/commons-lang-2.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop/lib/avro.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-3.1.1.7.1.7.1046-1-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-rbf.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-httpfs-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-native-client-3.1.1.7.1.7.1046-1-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-native-client.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-client-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-client-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-rbf-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-nfs.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-httpfs.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-client.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-native-client-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-native-client-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-rbf-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-rbf-3.1.1.7.1.7.1046-1-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-nfs-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs-client-3.1.1.7.1.7.1046-1-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/hadoop-hdfs.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jsr311-api-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-simplekdc-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jsr305-3.0.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/token-provider-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-security-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-configuration2-2.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-core-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-xml-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-servlet-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/okhttp-2.7.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-collections-3.2.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jersey-server-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/accessors-smart-2.3.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-core-asl-1.9.13-cloudera.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/paranamer-2.8.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-net-3.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/gson-2.2.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/asm-5.0.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-compress-1.21.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-logging-1.1.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jcip-annotations-1.0-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-util-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-math3-3.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerby-xdr-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-databind-2.10.5.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/log4j-1.2.17-cloudera6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/snappy-java-1.1.7.7.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/curator-recipes-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/re2j-1.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-io-2.11.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-identity-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/audience-annotations-0.5.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-server-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-beanutils-1.9.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerby-pkix-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jaxb-api-2.2.11.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-xc-1.9.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/checker-qual-2.8.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/javax.servlet-api-3.1.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-util-ajax-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-admin-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/netty-3.10.6.Final.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jersey-core-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/leveldbjni-all-1.8.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/curator-framework-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jaxb-impl-2.2.3-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-mapper-asl-1.9.13-cloudera.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-lang3-3.8.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerby-util-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/json-smart-2.3.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jettison-1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-cli-1.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-util-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/protobuf-java-2.5.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-jaxrs-1.9.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerby-asn1-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-crypto-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/javax.activation-api-1.2.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-core-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/animal-sniffer-annotations-1.18.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/nimbus-jose-jwt-7.9.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-codec-1.14.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/httpclient-4.5.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-webapp-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jackson-annotations-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-server-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-client-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/avro-1.8.2.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/stax2-api-3.1.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/json-simple-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/curator-client-4.3.0.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/zookeeper-3.5.5.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jsch-0.1.54.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/guava-28.1-jre.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/j2objc-annotations-1.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jersey-servlet-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/zookeeper-jute-3.5.5.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-daemon-1.0.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jersey-json-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/failureaccess-1.0.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-io-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/xz-1.8.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/httpcore-4.4.13.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/jetty-http-9.4.40.v20210413.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/netty-all-4.1.68.Final.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/woodstox-core-5.0.3.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerby-config-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/okio-1.6.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/kerb-common-1.1.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-hdfs/lib/commons-lang-2.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-services-core.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-nodemanager-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-timeline-pluginstorage-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-nodemanager.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-applicationhistoryservice-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-api.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-tests-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-web-proxy-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-applicationhistoryservice.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-common.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-services-api-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-timeline-pluginstorage.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-client-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-services-api.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-tests.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-resourcemanager-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-common.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-services-core-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-resourcemanager.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-router-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-router.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-sharedcachemanager.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-common-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-registry.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-applications-unmanaged-am-launcher.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-api-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-web-proxy.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-common-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-client.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-registry-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/hadoop-yarn-server-sharedcachemanager-3.1.1.7.1.7.1046-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jackson-dataformat-yaml-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/swagger-annotations-1.5.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jersey-client-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/objenesis-1.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/spark-2.4.7.7.1.7.1046-1-yarn-shuffle.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/ehcache-3.3.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/bcpkix-jdk15on-1.60.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jakarta.activation-api-1.2.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/mssql-jdbc-6.2.1.jre7.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/snakeyaml-1.26.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/dnsjava-2.1.7.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/aopalliance-1.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/HikariCP-java7-2.4.12.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/guice-servlet-4.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/joda-time-2.10.6.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jackson-jaxrs-base-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jersey-guice-1.19.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jackson-jaxrs-json-provider-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/javax.inject-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/json-io-2.5.1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/guice-4.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/fst-2.50.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/bcprov-jdk15on-1.60.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jna-5.2.0.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jakarta.xml.bind-api-2.3.2.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/jackson-module-jaxb-annotations-2.10.5.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/metrics-core-3.2.4.jar:

/opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p1046.30409922/lib/hadoop-yarn/lib/java-util-1.9.0.jar

There was no other way, so I symlinked the missing jar into Flink's lib directory myself:

ln -s /opt/cloudera/parcels/CDH/jars/hadoop-mapreduce-client-core-3.1.1.7.1.7.1046-1.jar flink_home/lib/
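The symlink step can also be wrapped so it is safe to run more than once. This is only a minimal sketch: `link_jar_if_missing` is a hypothetical helper name of my own, not part of Flink or CDP, and it assumes a shell that supports `local` (bash, dash, and similar).

```shell
# Hypothetical helper: symlink a jar into a lib directory unless an entry
# with the same file name is already there.
# Prints what it did; returns non-zero if the source jar does not exist.
link_jar_if_missing() {
  local jar lib_dir target
  jar=$1
  lib_dir=$2
  target="$lib_dir/$(basename "$jar")"

  # Refuse to create a dangling link.
  if [ ! -f "$jar" ]; then
    echo "source jar not found: $jar" >&2
    return 1
  fi

  # -e misses dangling symlinks, so check -L as well.
  if [ -e "$target" ] || [ -L "$target" ]; then
    echo "already present: $target"
    return 0
  fi

  ln -s "$jar" "$target" && echo "linked: $target"
}
```

With the paths from this post, the call would be `link_jar_if_missing /opt/cloudera/parcels/CDH/jars/hadoop-mapreduce-client-core-3.1.1.7.1.7.1046-1.jar flink_home/lib`. A symlink rather than a copy means the jar in Flink's lib directory always points at the one shipped with the parcel.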

With that, the problem was solved and the job ran normally on the server.

What I learned from solving this

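1. Pulling in a different jar version causes errors (3.1.3 -> 3.1.2). Don't assume the source code is too hard: even if you couldn't write it yourself, you can still read it, and something as simple as an ArrayList list is easy enough to follow.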

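2. Flink's classpath only contains its own lib directory plus some of the Hadoop directories, so any missing jar has to be added yourself, preferably with ln -s.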

3. Understand the differences between developing locally and running on the server.

4. The authentication problem is still unsolved... which bothers me.
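Point 2 can be checked mechanically before reaching for ln -s: take the classpath string printed in the log and look for the jar in it. This is a rough sketch under my own assumptions: `classpath_has_jar` is a hypothetical helper, the classpath is a single colon-separated string, and the jar name prefix is passed to grep as a regular expression.

```shell
# Hypothetical helper: succeed if any entry of a colon-separated classpath
# is a .jar whose file name starts with the given prefix.
# Note: the prefix is used inside a grep pattern, so regex characters in it
# keep their special meaning.
classpath_has_jar() {
  local classpath prefix
  classpath=$1
  prefix=$2
  # One entry per line, then match "<dir>/<prefix>...jar" at end of line.
  printf '%s\n' "$classpath" | tr ':' '\n' | grep -q "/${prefix}[^/]*\.jar$"
}
```

For example, `classpath_has_jar "$cp" hadoop-mapreduce-client-core || echo "missing"` would flag the jar that had to be linked here, where `$cp` holds the classpath copied from the log.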