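In audio and video communication, network jitter and delay are common problems that degrade media quality and hurt the user experience. To address them, WebRTC includes an important component, the Jitter Buffer. The Jitter Buffer receives and buffers the audio and video data arriving from the network and smooths out jitter and delay so that playback is more stable and fluid. It does this by adjusting when packets are released for decoding and playback, so that media data is decoded and played in the correct order and with the correct timing.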

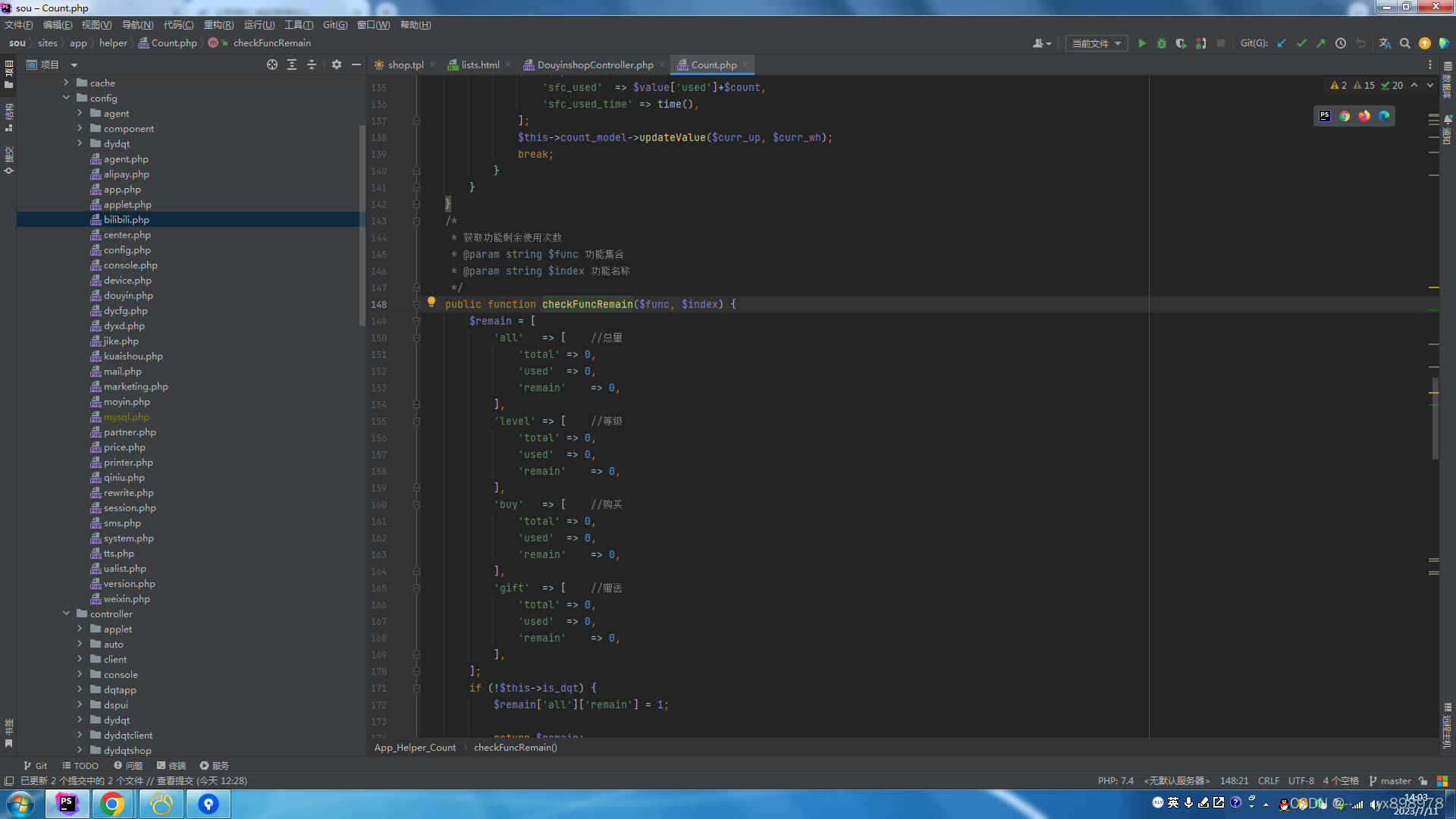

This article walks through the Jitter Buffer implementation in the WebRTC source code, based on version m98.

I. Receiving and Parsing RTP Packets

1. RTP packet receive path

- Much like the P2P flow, data is read from the underlying socket and travels up to UDPPort::OnReadPacket:

PhysicalSocketServer::WaitSelect > ProcessEvents > SocketDispatcher::OnEvent > SignalReadEvent > AsyncUDPSocket::OnReadEvent > SignalReadPacket > AllocationSequence::OnReadPacket > UDPPort::HandleIncomingPacket > UDPPort::OnReadPacket

- From UDPPort::OnReadPacket it then travels up to the Call module:

UDPPort::OnReadPacket > Connection::OnReadPacket > Connection::SignalReadPacket > P2PTransportChannel::OnReadPacket > P2PTransportChannel::SignalReadPacket > DtlsTransport::OnReadPacket > DtlsTransport::SignalReadPacket > RtpTransport::OnReadPacket > RtpTransport::OnRtpPacketReceived > RtpTransport::DemuxPacket > RtpDemuxer::OnRtpPacket > BaseChannel::OnRtpPacket > WebRtcVideoChannel::OnPacketReceived > Call::DeliverPacket > Call::DeliverRtp

2. RTP packet parsing

- In Call::DeliverRtp, the RTP packet is parsed by calling the Parse function of RtpPacketReceived.

if (!parsed_packet.Parse(std::move(packet)))

return DELIVERY_PACKET_ERROR;

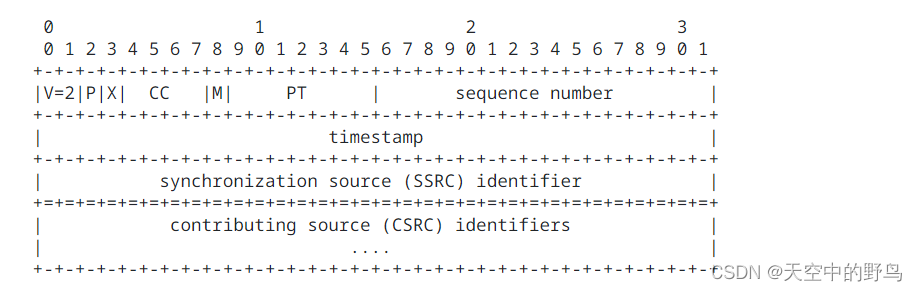

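- RtpPacketReceived inherits from RtpPacket, so the call goes through RtpPacket::Parse > RtpPacket::ParseBuffer.

- The RTP header layout was covered in the earlier article "webrtc源码阅读之h264 RTP打包" (WebRTC source reading: H264 RTP packetization) and is shown in the figure. The key fields of the RTP header are parsed according to that layout.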
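To make the header fields concrete, here is a minimal sketch of parsing the 12-byte fixed RTP header as laid out in RFC 3550. It is not the code of RtpPacket::ParseBuffer: the struct `RtpFixedHeader` and the function `ParseRtpFixedHeader` are names made up for this illustration, and header extensions and the CSRC list contents are deliberately ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical struct for illustration only; not WebRTC's RtpPacket.
struct RtpFixedHeader {
  uint8_t version;
  bool padding;
  bool extension;
  uint8_t csrc_count;
  bool marker;
  uint8_t payload_type;
  uint16_t sequence_number;
  uint32_t timestamp;
  uint32_t ssrc;
};

// Reads a 32-bit big-endian (network order) value.
static uint32_t ReadBigEndian32(const uint8_t* p) {
  return (static_cast<uint32_t>(p[0]) << 24) |
         (static_cast<uint32_t>(p[1]) << 16) |
         (static_cast<uint32_t>(p[2]) << 8) | static_cast<uint32_t>(p[3]);
}

// Parses the fixed RTP header (RFC 3550, section 5.1).
// Returns false if the buffer is too short or the version is not 2.
bool ParseRtpFixedHeader(const uint8_t* data, size_t size,
                         RtpFixedHeader* out) {
  assert(out != nullptr);
  constexpr size_t kFixedHeaderSize = 12;
  if (data == nullptr || size < kFixedHeaderSize) return false;

  const uint8_t version = static_cast<uint8_t>(data[0] >> 6);
  if (version != 2) return false;

  // Each CSRC entry is 4 bytes and follows the fixed header.
  const uint8_t csrc_count = static_cast<uint8_t>(data[0] & 0x0F);
  if (size < kFixedHeaderSize + 4u * csrc_count) return false;

  out->version = version;
  out->padding = (data[0] & 0x20) != 0;
  out->extension = (data[0] & 0x10) != 0;
  out->csrc_count = csrc_count;
  out->marker = (data[1] & 0x80) != 0;
  out->payload_type = static_cast<uint8_t>(data[1] & 0x7F);
  out->sequence_number = static_cast<uint16_t>((data[2] << 8) | data[3]);
  out->timestamp = ReadBigEndian32(data + 4);
  out->ssrc = ReadBigEndian32(data + 8);
  return true;
}
```

The sequence number, timestamp and marker bit read here are exactly the fields the jitter buffer relies on later for ordering packets and finding frame boundaries.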

3. H264 depacketization of RTP packets

Once Call::DeliverRtp has parsed the RTP packet, it calls RtpStreamReceiverController::OnRtpPacket to process the parsed packet.

if (video_receiver_controller_.OnRtpPacket(parsed_packet)) {

receive_stats_.AddReceivedVideoBytes(length,

parsed_packet.arrival_time());

event_log_->Log(

std::make_unique<RtcEventRtpPacketIncoming>(parsed_packet));

return DELIVERY_OK;

}

RtpStreamReceiverController::OnRtpPacket > RtpDemuxer::OnRtpPacket > RtpVideoStreamReceiver2::OnRtpPacket > RtpVideoStreamReceiver2::ReceivePacket

- RtpVideoStreamReceiver2::ReceivePacket then calls a subclass of VideoRtpDepacketizer to parse the RTP payload. For H264, that is VideoRtpDepacketizerH264::Parse.

absl::optional<VideoRtpDepacketizer::ParsedRtpPayload> parsed_payload =

type_it->second->Parse(packet.PayloadBuffer());

Depacketization is simply the reverse of packetization. See the H264 RTP packetization article for the details; they are not repeated here.

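II. How the Video JitterBuffer Works

- PacketBuffer sorts the received RTP packets and assembles them into complete frames.

- RtpFrameReferenceFinder fills in the reference frame information of each assembled frame.

- FrameBuffer checks whether a complete frame with its reference information filled in is continuous, and caches it.

- FrameBuffer decides that a frame is decodable once all the frames it depends on have been decoded, and hands it to the decoder.

These steps guarantee that every frame is complete and that every frame it references is complete as well, so a frame can be decoded as soon as its reference frames have been decoded.

1. Detecting complete frames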

PacketBuffer::InsertResult PacketBuffer::InsertPacket(

std::unique_ptr<PacketBuffer::Packet> packet) {

PacketBuffer::InsertResult result;

uint16_t seq_num = packet->seq_num;

size_t index = seq_num % buffer_.size();

if (!first_packet_received_) {

first_seq_num_ = seq_num;

first_packet_received_ = true;

} else if (AheadOf(first_seq_num_, seq_num)) { // seq_num is older than first_seq_num_

// If we have explicitly cleared past this packet then it's old,

// don't insert it, just silently ignore it.

if (is_cleared_to_first_seq_num_) {

return result;

}

first_seq_num_ = seq_num;

}

if (buffer_[index] != nullptr) { // the slot for this index is already occupied

// Duplicate packet, just delete the payload.

if (buffer_[index]->seq_num == packet->seq_num) {

return result;

}

// The packet buffer is full, try to expand the buffer.

// Each expansion doubles the buffer size.

while (ExpandBufferSize() && buffer_[seq_num % buffer_.size()] != nullptr) {

}

index = seq_num % buffer_.size(); // recompute the index after expansion

// Packet buffer is still full since we were unable to expand the buffer.

if (buffer_[index] != nullptr) { // the buffer is already at its maximum size: clear it and request a new key frame

// Clear the buffer, delete payload, and return false to signal that a

// new keyframe is needed.

RTC_LOG(LS_WARNING) << "Clear PacketBuffer and request key frame.";

ClearInternal();

result.buffer_cleared = true;

return result;

}

}

packet->continuous = false;

buffer_[index] = std::move(packet);

UpdateMissingPackets(seq_num); // update the missing-packet bookkeeping, used for NACK and similar

result.packets = FindFrames(seq_num); // look for complete frames

return result;

}

InsertPacket inserts one packet into the buffer and then calls FindFrames to check whether a complete frame is now available.
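The slot handling in InsertPacket (index by `seq_num % size`, ignore duplicates, double the buffer on a collision, clear everything once the maximum size is reached) can be sketched in isolation. The class below is a simplified stand-in, not WebRTC's PacketBuffer: `SketchPacket`, `SketchPacketBuffer` and `InsertOutcome` are hypothetical names, and the sketch assumes the maximum size is the start size times a power of two so that re-indexing after doubling cannot collide.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical, simplified stand-ins for illustration only.
struct SketchPacket {
  uint16_t seq_num;
};

enum class InsertOutcome { kInserted, kDuplicate, kBufferCleared };

class SketchPacketBuffer {
 public:
  // Assumption: max_size equals start_size times a power of two.
  SketchPacketBuffer(size_t start_size, size_t max_size)
      : max_size_(max_size), buffer_(start_size) {
    assert(start_size > 0 && start_size <= max_size);
  }

  InsertOutcome Insert(uint16_t seq_num) {
    size_t index = seq_num % buffer_.size();
    if (buffer_[index] != nullptr) {
      // Same sequence number in the slot: a duplicate, nothing to do.
      if (buffer_[index]->seq_num == seq_num) return InsertOutcome::kDuplicate;
      // A different packet owns the slot: keep doubling until the slot for
      // seq_num is free or the buffer cannot grow any further.
      while (Expand() && buffer_[seq_num % buffer_.size()] != nullptr) {
      }
      index = seq_num % buffer_.size();
      if (buffer_[index] != nullptr) {
        // Still colliding at maximum size: drop everything. A real receiver
        // would now ask the sender for a key frame.
        for (auto& slot : buffer_) slot.reset();
        return InsertOutcome::kBufferCleared;
      }
    }
    buffer_[index].reset(new SketchPacket{seq_num});
    return InsertOutcome::kInserted;
  }

  size_t size() const { return buffer_.size(); }

 private:
  // Doubles the buffer and moves every stored packet to its new slot.
  bool Expand() {
    if (buffer_.size() >= max_size_) return false;
    const size_t new_size = 2 * buffer_.size();
    std::vector<std::unique_ptr<SketchPacket>> new_buffer(new_size);
    for (auto& entry : buffer_) {
      if (entry != nullptr) {
        new_buffer[entry->seq_num % new_size] = std::move(entry);
      }
    }
    buffer_ = std::move(new_buffer);
    return true;
  }

  size_t max_size_;
  std::vector<std::unique_ptr<SketchPacket>> buffer_;
};
```

With a start size of 4 and a maximum of 8, inserting sequence numbers 1 and then 5 triggers one expansion (both map to slot 1 at size 4), while a later 9 collides with 1 again at size 8 and clears the buffer.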

std::vector<std::unique_ptr<PacketBuffer::Packet>> PacketBuffer::FindFrames(

uint16_t seq_num) {

std::vector<std::unique_ptr<PacketBuffer::Packet>> found_frames;

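for (size_t i = 0; i < buffer_.size() && PotentialNewFrame(seq_num); ++i) { // the packet may belong to a new, complete frame

size_t index = seq_num % buffer_.size();

buffer_[index]->continuous = true; // mark the packet as continuous

// Once the last packet of a frame is found, walk backward to its first packet to get the complete frame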

if (buffer_[index]->is_last_packet_in_frame()) {

uint16_t start_seq_num = seq_num;

// Find the start index by searching backward until the packet with

// the `frame_begin` flag is set.

int start_index = index;

size_t tested_packets = 0;

int64_t frame_timestamp = buffer_[start_index]->timestamp;

// Identify H.264 keyframes by means of SPS, PPS, and IDR.

bool is_h264 = buffer_[start_index]->codec() == kVideoCodecH264;

bool has_h264_sps = false;

bool has_h264_pps = false;

bool has_h264_idr = false;

bool is_h264_keyframe = false;

int idr_width = -1;

int idr_height = -1;

while (true) {

++tested_packets;

if (!is_h264 && buffer_[start_index]->is_first_packet_in_frame()) // for VP8 and VP9, simply look for the first-packet flag

break;

if (is_h264) {

const auto* h264_header = absl::get_if<RTPVideoHeaderH264>(

&buffer_[start_index]->video_header.video_type_header);

if (!h264_header || h264_header->nalus_length >= kMaxNalusPerPacket)

return found_frames;

for (size_t j = 0; j < h264_header->nalus_length; ++j) {

if (h264_header->nalus[j].type == H264::NaluType::kSps) {

has_h264_sps = true;

} else if (h264_header->nalus[j].type == H264::NaluType::kPps) {

has_h264_pps = true;

} else if (h264_header->nalus[j].type == H264::NaluType::kIdr) {

has_h264_idr = true;

}

}

if ((sps_pps_idr_is_h264_keyframe_ && has_h264_idr && has_h264_sps &&

has_h264_pps) ||

(!sps_pps_idr_is_h264_keyframe_ && has_h264_idr)) {

is_h264_keyframe = true;

// Store the resolution of key frame which is the packet with

// smallest index and valid resolution; typically its IDR or SPS

// packet; there may be packet preceeding this packet, IDR's

// resolution will be applied to them.

if (buffer_[start_index]->width() > 0 &&

buffer_[start_index]->height() > 0) {

idr_width = buffer_[start_index]->width();

idr_height = buffer_[start_index]->height();

}

}

}

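if (tested_packets == buffer_.size()) // every slot in the buffer has been visited without finding a packet whose timestamp differs from this frame's,

// which means the frames before this one are no longer in the buffer, or this is the very first frame; the earliest packet reached is the start of the frame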

break;

start_index = start_index > 0 ? start_index - 1 : buffer_.size() - 1;

// In the case of H264 we don't have a frame_begin bit (yes,

// `frame_begin` might be set to true but that is a lie). So instead

// we traverese backwards as long as we have a previous packet and

// the timestamp of that packet is the same as this one. This may cause

// the PacketBuffer to hand out incomplete frames.

// See: https://bugs.chromium.org/p/webrtc/issues/detail?id=7106

if (is_h264 && (buffer_[start_index] == nullptr ||

buffer_[start_index]->timestamp != frame_timestamp)) {

break;

}

--start_seq_num;

}

if (is_h264) {

// Warn if this is an unsafe frame.

if (has_h264_idr && (!has_h264_sps || !has_h264_pps)) {

RTC_LOG(LS_WARNING)

<< "Received H.264-IDR frame "

"(SPS: "

<< has_h264_sps << ", PPS: " << has_h264_pps << "). Treating as "

<< (sps_pps_idr_is_h264_keyframe_ ? "delta" : "key")

<< " frame since WebRTC-SpsPpsIdrIsH264Keyframe is "

<< (sps_pps_idr_is_h264_keyframe_ ? "enabled." : "disabled");

}

// Now that we have decided whether to treat this frame as a key frame

// or delta frame in the frame buffer, we update the field that

// determines if the RtpFrameObject is a key frame or delta frame.

// Update the frame type

const size_t first_packet_index = start_seq_num % buffer_.size();

if (is_h264_keyframe) {

buffer_[first_packet_index]->video_header.frame_type =

VideoFrameType::kVideoFrameKey;

if (idr_width > 0 && idr_height > 0) {

// IDR frame was finalized and we have the correct resolution for

// IDR; update first packet to have same resolution as IDR.

buffer_[first_packet_index]->video_header.width = idr_width;

buffer_[first_packet_index]->video_header.height = idr_height;

}

} else {

buffer_[first_packet_index]->video_header.frame_type =

VideoFrameType::kVideoFrameDelta;

}

// If this is not a keyframe, make sure there are no gaps in the packet

// sequence numbers up until this point.

// If this is a delta (P) frame and packets before it are still missing, an earlier frame is incomplete, so keep buffering

if (!is_h264_keyframe && missing_packets_.upper_bound(start_seq_num) !=

missing_packets_.begin()) {

return found_frames;

}

}

const uint16_t end_seq_num = seq_num + 1;

// Move all packets of the complete frame into found_frames

uint16_t num_packets = end_seq_num - start_seq_num;

found_frames.reserve(found_frames.size() + num_packets);

for (uint16_t i = start_seq_num; i != end_seq_num; ++i) {

std::unique_ptr<Packet>& packet = buffer_[i % buffer_.size()];

RTC_DCHECK(packet);

RTC_DCHECK_EQ(i, packet->seq_num);

// Ensure frame boundary flags are properly set.

packet->video_header.is_first_packet_in_frame = (i == start_seq_num);

packet->video_header.is_last_packet_in_frame = (i == seq_num);

found_frames.push_back(std::move(packet));

}

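// A delta frame with gaps before it has already returned above, so for a delta frame there is nothing older left to erase here; for a key frame, all missing-packet entries before it are discarded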

missing_packets_.erase(missing_packets_.begin(),

missing_packets_.upper_bound(seq_num));

}

++seq_num;

}

return found_frames;

}

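FindFrames first checks whether the packet is a potential new frame. Roughly, a packet qualifies if it is the first packet of a frame, or if the packet before it is continuous. If the packet is also the last packet of a frame, a complete frame has been found. For VPx, the stream carries flags for both the first and the last packet of a frame, so these can be checked directly. For H264, the last packet is identified by the RTP marker bit, which is also reliable. The first-packet flag, however, cannot be trusted for H264 (see the bug report linked in the code comment; I have not fully worked out why, though one plausible reason is that the H264 depacketizer sets the flag per packet or NAL unit, and a single frame can span several NAL units). The start of an H264 frame is therefore found by looking for a change in timestamp.

2. Filling in reference frame information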
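The H264 rule (walk backward from the last packet for as long as the previous slot holds a packet with the same timestamp) can be isolated into a small function. This is a sketch under simplifying assumptions, not the FindFrames code: `SketchSlot` and `FindFrameStartByTimestamp` are hypothetical names, slots are plain structs rather than owned packets, and the buffer size is assumed to divide 65536 so that ring indices stay consistent across sequence number wraparound.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical slot type for illustration only.
struct SketchSlot {
  bool used;           // whether a packet is stored in this slot
  uint16_t seq_num;    // RTP sequence number of the stored packet
  uint32_t timestamp;  // RTP timestamp of the stored packet
};

// Returns the sequence number of the first packet of the frame whose last
// packet is `last_seq_num`, using only timestamps to find the boundary.
// Assumption: buffer.size() divides 65536.
uint16_t FindFrameStartByTimestamp(const std::vector<SketchSlot>& buffer,
                                   uint16_t last_seq_num) {
  assert(!buffer.empty());
  size_t index = last_seq_num % buffer.size();
  assert(buffer[index].used);

  const uint32_t frame_timestamp = buffer[index].timestamp;
  uint16_t start_seq_num = last_seq_num;

  // Visit at most buffer.size() slots in total, so the walk always ends.
  for (size_t tested = 1; tested < buffer.size(); ++tested) {
    const size_t prev = index > 0 ? index - 1 : buffer.size() - 1;
    // Stop at a hole or at a packet that belongs to another frame.
    if (!buffer[prev].used || buffer[prev].timestamp != frame_timestamp) break;
    index = prev;
    --start_seq_num;  // wraps from 0 to 65535, like RTP sequence numbers
  }
  return start_seq_num;
}
```

As the comment in the original source warns, a boundary found this way can be wrong when the true first packet of the frame has not arrived yet: the walk stops at the hole and an incomplete frame is handed out.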

RtpFrameReferenceFinder::ReturnVector RtpSeqNumOnlyRefFinder::ManageFrame(

std::unique_ptr<RtpFrameObject> frame) {

FrameDecision decision = ManageFrameInternal(frame.get());

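// Act on the result of ManageFrameInternal: on kStash, cache the frame;

// on kHandOff, the frame is continuous and may be the reference of previously stashed frames, so retry the stashed frames to see whether continuity can be propagated to them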

RtpFrameReferenceFinder::ReturnVector res;

switch (decision) {

case kStash:

if (stashed_frames_.size() > kMaxStashedFrames)

stashed_frames_.pop_back();

stashed_frames_.push_front(std::move(frame));

return res;

case kHandOff:

res.push_back(std::move(frame));

RetryStashedFrames(res);

return res;

case kDrop:

return res;

}

return res;

}

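ManageFrame acts on the result of ManageFrameInternal. On kStash the frame is cached. On kHandOff the frame is continuous, and since it may be the reference of frames that were stashed earlier, the stashed frames are retried to see whether continuity can now be propagated to them.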
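The stash-and-retry idea can be shown with a much smaller model. The sketch below is an illustration under simplifying assumptions, not RtpSeqNumOnlyRefFinder: it tracks a single GOP, never drops frames, has no stash size limit, and ignores padding packets. `SketchFrame` and `SketchRefFinder` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical frame description for illustration only.
struct SketchFrame {
  uint16_t first_seq_num;
  uint16_t last_seq_num;
  bool is_key;
};

class SketchRefFinder {
 public:
  // Returns the frames that became continuous because of `frame`,
  // in the order they can be handed off.
  std::vector<SketchFrame> ManageFrame(const SketchFrame& frame) {
    std::vector<SketchFrame> ready;
    if (!TryHandOff(frame)) {
      stashed_.push_front(frame);  // not continuous yet: keep it for later
      return ready;
    }
    ready.push_back(frame);
    // A newly continuous frame may unblock stashed frames, which in turn
    // may unblock others, so loop until a full pass makes no progress.
    bool progress = true;
    while (progress) {
      progress = false;
      for (auto it = stashed_.begin(); it != stashed_.end();) {
        if (TryHandOff(*it)) {
          ready.push_back(*it);
          it = stashed_.erase(it);
          progress = true;
        } else {
          ++it;
        }
      }
    }
    return ready;
  }

 private:
  // A key frame always starts the GOP; a delta frame is continuous only if
  // it starts right after the last sequence number seen in the GOP.
  bool TryHandOff(const SketchFrame& frame) {
    if (frame.is_key) {
      has_gop_ = true;
      last_seq_in_gop_ = frame.last_seq_num;
      return true;
    }
    if (!has_gop_) return false;
    if (static_cast<uint16_t>(frame.first_seq_num - 1) != last_seq_in_gop_) {
      return false;
    }
    last_seq_in_gop_ = frame.last_seq_num;
    return true;
  }

  bool has_gop_ = false;
  uint16_t last_seq_in_gop_ = 0;
  std::deque<SketchFrame> stashed_;
};

// Usage: two delta frames arrive before their key frame and are released
// together once the key frame shows up.
void StashRetryExample() {
  SketchRefFinder finder;
  assert(finder.ManageFrame(SketchFrame{13, 14, false}).empty());
  assert(finder.ManageFrame(SketchFrame{11, 12, false}).empty());
  std::vector<SketchFrame> ready = finder.ManageFrame(SketchFrame{10, 10, true});
  assert(ready.size() == 3);
}
```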

RtpSeqNumOnlyRefFinder::FrameDecision

RtpSeqNumOnlyRefFinder::ManageFrameInternal(RtpFrameObject* frame) {

if (frame->frame_type() == VideoFrameType::kVideoFrameKey) { // key frame: add a new GOP entry

last_seq_num_gop_.insert(std::make_pair(

frame->last_seq_num(),

std::make_pair(frame->last_seq_num(), frame->last_seq_num())));

}

// We have received a frame but not yet a keyframe, stash this frame.

if (last_seq_num_gop_.empty()) // no key frame has been received so far, so stash the frame

return kStash;

// Clean up info for old keyframes but make sure to keep info

// for the last keyframe.

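auto clean_to = last_seq_num_gop_.lower_bound(frame->last_seq_num() - 100); // drop GOP entries whose key frame is more than 100 sequence numbers older than this frame (the loop below always keeps at least one entry)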

for (auto it = last_seq_num_gop_.begin();

it != clean_to && last_seq_num_gop_.size() > 1;) {

it = last_seq_num_gop_.erase(it);

}

// Find the last sequence number of the last frame for the keyframe

// that this frame indirectly references.

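// Find the first key frame whose sequence number is newer than this frame's; the GOP of the key frame just before it is the GOP this frame belongs to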

auto seq_num_it = last_seq_num_gop_.upper_bound(frame->last_seq_num());

if (seq_num_it == last_seq_num_gop_.begin()) {

RTC_LOG(LS_WARNING) << "Generic frame with packet range ["

<< frame->first_seq_num() << ", "

<< frame->last_seq_num()

<< "] has no GoP, dropping frame.";

return kDrop;

}

seq_num_it--;

// Make sure the packet sequence numbers are continuous, otherwise stash

// this frame.

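// The RTP stream may contain padding packets: last_picture_id_gop is the sequence number of the last real encoded data,

// while last_picture_id_with_padding_gop is the sequence number that may also include padding packets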

uint16_t last_picture_id_gop = seq_num_it->second.first;

uint16_t last_picture_id_with_padding_gop = seq_num_it->second.second;

if (frame->frame_type() == VideoFrameType::kVideoFrameDelta) {

uint16_t prev_seq_num = frame->first_seq_num() - 1;

// Check whether the frame is continuous, i.e. whether the frame right before it has already been recorded in the GOP

if (prev_seq_num != last_picture_id_with_padding_gop)

return kStash;

}

RTC_DCHECK(AheadOrAt(frame->last_seq_num(), seq_num_it->first));

// Since keyframes can cause reordering we can't simply assign the

// picture id according to some incrementing counter.

frame->SetId(frame->last_seq_num());

frame->num_references =

frame->frame_type() == VideoFrameType::kVideoFrameDelta; // number of references: 0 for a key frame, otherwise 1; here WebRTC treats the preceding frame as the reference of each delta frame

frame->references[0] = rtp_seq_num_unwrapper_.Unwrap(last_picture_id_gop);

if (AheadOf<uint16_t>(frame->Id(), last_picture_id_gop)) {

seq_num_it->second.first = frame->Id();

seq_num_it->second.second = frame->Id();

}

UpdateLastPictureIdWithPadding(frame->Id());

frame->SetSpatialIndex(0);

frame->SetId(rtp_seq_num_unwrapper_.Unwrap(frame->Id()));

return kHandOff;

}

RtpFrameReferenceFinder::ManageFrame fills in the reference information of each frame and returns the continuous frames to RtpVideoStreamReceiver2.

3. Inserting frames into FrameBuffer
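The last step of ManageFrameInternal turns the 16-bit sequence number into a frame id through rtp_seq_num_unwrapper_.Unwrap. The idea behind unwrapping is to extend a wrapping 16-bit counter into a 64-bit value that keeps increasing across wraparound. Below is a minimal sketch of that idea. `SketchSeqNumUnwrapper` is a hypothetical class, not WebRTC's unwrapper, and it treats a distance of exactly 2^15 as a backward step, which may differ from how the real implementation breaks that tie.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper for illustration only.
class SketchSeqNumUnwrapper {
 public:
  // Maps a 16-bit sequence number to a 64-bit value that stays monotonic
  // across wraparound, relative to the previously unwrapped value.
  int64_t Unwrap(uint16_t value) {
    if (!has_last_) {
      has_last_ = true;
      last_unwrapped_ = value;
    } else {
      // Distance from the previous value, modulo 2^16.
      const uint16_t diff = static_cast<uint16_t>(value - last_value_);
      // Distances below 2^15 count as forward steps, the rest as backward.
      const int64_t delta =
          diff < 0x8000 ? int64_t{diff} : int64_t{diff} - 0x10000;
      last_unwrapped_ += delta;
    }
    last_value_ = value;
    return last_unwrapped_;
  }

 private:
  bool has_last_ = false;
  uint16_t last_value_ = 0;
  int64_t last_unwrapped_ = 0;
};

// Usage: the counter wraps from 65535 to 1, then a late packet arrives.
void UnwrapExample() {
  SketchSeqNumUnwrapper unwrapper;
  assert(unwrapper.Unwrap(65534) == 65534);
  assert(unwrapper.Unwrap(65535) == 65535);
  assert(unwrapper.Unwrap(1) == 65537);      // wrapped forward past 65535
  assert(unwrapper.Unwrap(65535) == 65535);  // late, reordered packet
}
```

Because the unwrapped ids no longer wrap, FrameBuffer can compare them with ordinary integer comparisons such as `frame->Id() <= *last_decoded_frame`.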

int64_t FrameBuffer::InsertFrame(std::unique_ptr<EncodedFrame> frame) {

TRACE_EVENT0("webrtc", "FrameBuffer::InsertFrame");

RTC_DCHECK(frame);

MutexLock lock(&mutex_);

int64_t last_continuous_frame_id = last_continuous_frame_.value_or(-1);

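if (!ValidReferences(*frame)) { // check that the frame's references are valid: (1) every reference must precede the frame itself;

// (2) a frame must not list the same reference twice (in practice WebRTC here gives each delta frame the preceding frame as its reference)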

RTC_LOG(LS_WARNING) << "Frame " << frame->Id()

<< " has invalid frame references, dropping frame.";

return last_continuous_frame_id;

}

if (frames_.size() >= kMaxFramesBuffered) {

if (frame->is_keyframe()) {

RTC_LOG(LS_WARNING) << "Inserting keyframe " << frame->Id()

<< " but buffer is full, clearing"

" buffer and inserting the frame.";

ClearFramesAndHistory();

} else {

RTC_LOG(LS_WARNING) << "Frame " << frame->Id()

<< " could not be inserted due to the frame "

"buffer being full, dropping frame.";

return last_continuous_frame_id;

}

}

auto last_decoded_frame = decoded_frames_history_.GetLastDecodedFrameId();

auto last_decoded_frame_timestamp =

decoded_frames_history_.GetLastDecodedFrameTimestamp();

if (last_decoded_frame && frame->Id() <= *last_decoded_frame) {

if (AheadOf(frame->Timestamp(), *last_decoded_frame_timestamp) &&

frame->is_keyframe()) {

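// The frame id is not newer than the last decoded frame, but the timestamp is newer and this is a key frame; the encoder may have restarted, so treat it as a new frame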

// If this frame has a newer timestamp but an earlier frame id then we

// assume there has been a jump in the frame id due to some encoder

// reconfiguration or some other reason. Even though this is not according

// to spec we can still continue to decode from this frame if it is a

// keyframe.

RTC_LOG(LS_WARNING)

<< "A jump in frame id was detected, clearing buffer.";

ClearFramesAndHistory();

last_continuous_frame_id = -1;

} else {

RTC_LOG(LS_WARNING) << "Frame " << frame->Id() << " inserted after frame "

<< *last_decoded_frame

<< " was handed off for decoding, dropping frame.";

return last_continuous_frame_id;

}

}

// Test if inserting this frame would cause the order of the frames to become

// ambiguous (covering more than half the interval of 2^16). This can happen

// when the frame id make large jumps mid stream.

if (!frames_.empty() && frame->Id() < frames_.begin()->first &&

frames_.rbegin()->first < frame->Id()) {

RTC_LOG(LS_WARNING) << "A jump in frame id was detected, clearing buffer.";

ClearFramesAndHistory();

last_continuous_frame_id = -1;

}

auto info = frames_.emplace(frame->Id(), FrameInfo()).first; // insert an entry for this frame id, creating its FrameInfo if none exists yet

if (info->second.frame) {

return last_continuous_frame_id;

}

if (!UpdateFrameInfoWithIncomingFrame(*frame, info)) // update the FrameInfo that belongs to this frame

return last_continuous_frame_id;

if (!frame->delayed_by_retransmission())

timing_->IncomingTimestamp(frame->Timestamp(), frame->ReceivedTime());

// It can happen that a frame will be reported as fully received even if a

// lower spatial layer frame is missing.

if (stats_callback_ && frame->is_last_spatial_layer) {

stats_callback_->OnCompleteFrame(frame->is_keyframe(), frame->size(),

frame->contentType());

}

info->second.frame = std::move(frame);

if (info->second.num_missing_continuous == 0) {

info->second.continuous = true;

PropagateContinuity(info);

last_continuous_frame_id = *last_continuous_frame_;

// Since we now have new continuous frames there might be a better frame

// to return from NextFrame.

// A new continuous frame is available: restart the wait so that FindNextFrame runs again

if (callback_queue_) {

callback_queue_->PostTask([this] {

MutexLock lock(&mutex_);

if (!callback_task_.Running())

return;

RTC_CHECK(frame_handler_);

callback_task_.Stop();

StartWaitForNextFrameOnQueue();

});

}

}

return last_continuous_frame_id;

}

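UpdateFrameInfoWithIncomingFrame mainly updates two things: the continuity of the frame and the number of reference frames it is still missing. A frame that is continuous and is not missing any reference frame is decodable. When a newly inserted frame turns out to be continuous, the task that searches for decodable frames is restarted so that decodable frames are handed to the decoder.

4. Finding decodable frames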
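The bookkeeping behind num_missing_continuous and PropagateContinuity can be modelled as a small dependency graph: each frame counts how many of its references are not yet continuous, and a frame that becomes continuous decrements that counter in every frame that depends on it. The sketch below only illustrates this idea. `SketchFrameInfo` and `SketchFrameGraph` are hypothetical names, decoded-frame history and num_missing_decodable are left out, and duplicate inserts are simply ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <queue>
#include <vector>

// Hypothetical bookkeeping record for illustration only.
struct SketchFrameInfo {
  bool present = false;                   // the frame itself has arrived
  bool continuous = false;                // all references are continuous
  size_t num_missing_continuous = 0;      // references not yet continuous
  std::vector<int64_t> dependent_frames;  // frames waiting on this one
};

class SketchFrameGraph {
 public:
  // Inserts a frame and returns true if it is continuous afterwards.
  bool Insert(int64_t id, const std::vector<int64_t>& references) {
    SketchFrameInfo& info = frames_[id];
    if (info.present) return info.continuous;  // ignore duplicate inserts
    info.present = true;
    for (int64_t ref : references) {
      assert(ref != id);  // a frame cannot reference itself
      // Creates a placeholder entry if the reference has not arrived yet.
      SketchFrameInfo& ref_info = frames_[ref];
      if (!ref_info.continuous) {
        ++info.num_missing_continuous;
        ref_info.dependent_frames.push_back(id);
      }
    }
    if (info.num_missing_continuous == 0) {
      info.continuous = true;
      Propagate(id);
    }
    return info.continuous;
  }

  bool IsContinuous(int64_t id) const {
    auto it = frames_.find(id);
    return it != frames_.end() && it->second.continuous;
  }

 private:
  // Breadth-first walk: every frame that just became continuous may
  // complete the set of references of the frames that depend on it.
  void Propagate(int64_t start) {
    std::queue<int64_t> pending;
    pending.push(start);
    while (!pending.empty()) {
      const int64_t id = pending.front();
      pending.pop();
      for (int64_t dep : frames_[id].dependent_frames) {
        SketchFrameInfo& dep_info = frames_[dep];
        if (--dep_info.num_missing_continuous == 0) {
          dep_info.continuous = true;
          pending.push(dep);
        }
      }
    }
  }

  std::map<int64_t, SketchFrameInfo> frames_;
};
```

For example, if frames 2 and 3 arrive before frame 1, with 3 referencing 2 and 2 referencing 1, neither is continuous until the key frame 1 is inserted, at which point both become continuous in one propagation pass.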

int64_t FrameBuffer::FindNextFrame(int64_t now_ms) {

int64_t wait_ms = latest_return_time_ms_ - now_ms;

frames_to_decode_.clear();

// `last_continuous_frame_` may be empty below, but nullopt is smaller

// than everything else and loop will immediately terminate as expected.

for (auto frame_it = frames_.begin();

frame_it != frames_.end() && frame_it->first <= last_continuous_frame_;

++frame_it) {

if (!frame_it->second.continuous ||

frame_it->second.num_missing_decodable > 0) { // skip frames that are not continuous or that still have undecoded references

continue;

}

EncodedFrame* frame = frame_it->second.frame.get();

if (keyframe_required_ && !frame->is_keyframe()) // a key frame is required and this frame is not one, so it cannot be decoded

continue;

auto last_decoded_frame_timestamp =

decoded_frames_history_.GetLastDecodedFrameTimestamp();

// TODO(https://bugs.webrtc.org/9974): consider removing this check

// as it may make a stream undecodable after a very long delay between

// frames.

// The timestamp is older than that of the last decoded frame, so the frame is not decodable

if (last_decoded_frame_timestamp &&

AheadOf(*last_decoded_frame_timestamp, frame->Timestamp())) {

continue;

}

// Gather all remaining frames for the same superframe.

std::vector<FrameMap::iterator> current_superframe;

current_superframe.push_back(frame_it);

bool last_layer_completed = frame_it->second.frame->is_last_spatial_layer;

FrameMap::iterator next_frame_it = frame_it;

while (!last_layer_completed) { // spatial-layer handling, which applies to VPx streams

++next_frame_it;

if (next_frame_it == frames_.end() || !next_frame_it->second.frame) {

break;

}

if (next_frame_it->second.frame->Timestamp() != frame->Timestamp() ||

!next_frame_it->second.continuous) {

break;

}

if (next_frame_it->second.num_missing_decodable > 0) {

bool has_inter_layer_dependency = false;

for (size_t i = 0; i < EncodedFrame::kMaxFrameReferences &&

i < next_frame_it->second.frame->num_references;

++i) {

if (next_frame_it->second.frame->references[i] >= frame_it->first) {

has_inter_layer_dependency = true;

break;

}

}

// If the frame has an undecoded dependency that is not within the same

// temporal unit then this frame is not yet ready to be decoded. If it

// is within the same temporal unit then the not yet decoded dependency

// is just a lower spatial frame, which is ok.

if (!has_inter_layer_dependency ||

next_frame_it->second.num_missing_decodable > 1) {

break;

}

}

current_superframe.push_back(next_frame_it);

last_layer_completed = next_frame_it->second.frame->is_last_spatial_layer;

}

// Check if the current superframe is complete.

// TODO(bugs.webrtc.org/10064): consider returning all available to

// decode frames even if the superframe is not complete yet.

if (!last_layer_completed) {

continue;

}

frames_to_decode_ = std::move(current_superframe); // put the decodable frames into frames_to_decode_

if (frame->RenderTime() == -1) { // set the render time

frame->SetRenderTime(timing_->RenderTimeMs(frame->Timestamp(), now_ms));

}

bool too_many_frames_queued =

frames_.size() > zero_playout_delay_max_decode_queue_size_ ? true

: false;

wait_ms = timing_->MaxWaitingTime(frame->RenderTime(), now_ms,

too_many_frames_queued); // compute how long to wait for the next frame

// This will cause the frame buffer to prefer high framerate rather

// than high resolution in the case of the decoder not decoding fast

// enough and the stream has multiple spatial and temporal layers.

// For multiple temporal layers it may cause non-base layer frames to be

// skipped if they are late.

if (wait_ms < -kMaxAllowedFrameDelayMs)

continue;

break;

}

wait_ms = std::min<int64_t>(wait_ms, latest_return_time_ms_ - now_ms);

wait_ms = std::max<int64_t>(wait_ms, 0);

return wait_ms;

}

FrameBuffer finds decodable frames with FindNextFrame and retrieves them with GetNextFrame, then hands them to the decoder.
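The selection and clamping at the end of FindNextFrame can be summarised with a simplified model. In the sketch below, the list passed in is assumed to contain only frames that already passed the continuity and decodability checks, and the wait time is modelled as `render_time_ms - now_ms`, which is an assumption of mine and leaves out whatever else timing_->MaxWaitingTime takes into account. `SketchCandidate` and `PickNextFrame` are hypothetical names, and the allowed delay is a parameter rather than the real constant.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical candidate record for illustration only.
struct SketchCandidate {
  int64_t id;
  int64_t render_time_ms;
};

// Picks the frame to decode next and computes how long to wait for it.
// Returns the index of the chosen candidate, or -1 if there is none.
// `decodable` is assumed to be ordered by frame id.
int PickNextFrame(const std::vector<SketchCandidate>& decodable,
                  int64_t now_ms, int64_t latest_return_time_ms,
                  int64_t max_allowed_delay_ms, int64_t* wait_ms) {
  assert(wait_ms != nullptr);
  int chosen = -1;
  int64_t wait = latest_return_time_ms - now_ms;
  for (size_t i = 0; i < decodable.size(); ++i) {
    chosen = static_cast<int>(i);
    // Simplified stand-in for the real wait time computation.
    wait = decodable[i].render_time_ms - now_ms;
    // A frame that is already too late is skipped when a newer decodable
    // frame exists; the last candidate is kept even if it is late.
    if (wait < -max_allowed_delay_ms) continue;
    break;
  }
  // Never wait past the caller's deadline, and never return a negative wait.
  wait = std::min<int64_t>(wait, latest_return_time_ms - now_ms);
  wait = std::max<int64_t>(wait, 0);
  *wait_ms = wait;
  return chosen;
}
```

Skipping late frames in favour of newer ones is what the comment in the original code describes as preferring a high frame rate over high resolution when the decoder cannot keep up.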