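TensorFlow version: 2.6.0

A CNN is used; the network structure is given at the end.

A binary classification network for satellite images of airplanes and lakes.

Dataset: https://www.kaggle.com/datasets/yo7oyo/lake-plane-binaryclass

Dataset composition: airplane: 700 images, lake: 700 images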

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import glob

%matplotlib inline

# Collect the image paths

# Path format: 'D:\\datasets\\dset\\dataset1\\卫星图像识别数据\\2_class\\airplane\\airplane_427.jpg'

img_path = glob.glob(r'D:\datasets\dset\dataset1\卫星图像识别数据\2_class/*/*.jpg')

import random

random.shuffle(img_path)

img_path[1].split('\\')[-2]

output: 'airplane'

# Derive each label from the name of the image's parent directory

dict_img = {'lake':1, 'airplane':0}

dict_label = dict((v, k) for k, v in dict_img.items())

label = [dict_img.get(img.split('\\')[-2]) for img in img_path]

print(dict_label)
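The label lookup above splits on a backslash, so it only works with Windows-style paths. Below is a minimal sketch of a more portable variant, assuming the same `<class>/<file>.jpg` directory layout. `label_from_path` is a hypothetical helper name, not part of the original code, and `PureWindowsPath` is used here only because it accepts both `\` and `/` as separators on any operating system.

```python
from pathlib import PureWindowsPath

dict_img = {'lake': 1, 'airplane': 0}

def label_from_path(path):
    """Return the integer label encoded by the parent directory name."""
    # PureWindowsPath treats both '\\' and '/' as separators
    class_name = PureWindowsPath(path).parent.name
    return dict_img[class_name]

print(label_from_path(r'D:\datasets\2_class\airplane\airplane_427.jpg'))
print(label_from_path('datasets/2_class/lake/lake_001.jpg'))
```

When the script only ever runs on the machine that holds the data, `pathlib.Path(path).parent.name` would likely be the simpler choice.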

Reading images:

"""
Read:    x = tf.io.read_file(path)     reads the raw bytes
Decode:  x = tf.image.decode_jpeg(x)   returns a Tensor; several image formats can be decoded
Cast:    tf.cast(x, tf.float32)
"""

def load_image(path):
    # Read the file as raw bytes
    img = tf.io.read_file(path)
    # Decode into a 3-channel (RGB) tensor
    img = tf.image.decode_jpeg(img, channels=3)
    # Resize every image to 256 x 256
    img = tf.image.resize(img, [256, 256])
    # Convert to float32
    img = tf.cast(img, tf.float32)
    # Scale pixel values to [0, 1]
    img /= 255.0
    return img

#%%

p, l = img_path[20], label[20]

img_tensor = load_image(p)

plt.title(dict_label.get(l))

plt.imshow(img_tensor)

# Build the image dataset

ds_img = tf.data.Dataset.from_tensor_slices(img_path)

ds_img = ds_img.map(load_image)

# Build the label dataset

ds_label = tf.data.Dataset.from_tensor_slices(label)

print(ds_img, '\n', ds_label)

# Combine images and labels with Dataset.zip

datas = tf.data.Dataset.zip((ds_img, ds_label))

print(datas)

all_count = len(img_path)

BATCH_SIZE = 16

# Split into training and test sets (80% / 20%)

train_ds = datas.take(int(all_count * 0.8))

test_ds = datas.skip(int(all_count * 0.8))

# Configure the training and test sets

"""
The test set needs neither shuffling nor repetition
"""

train_ds = train_ds.repeat().shuffle(100).batch(BATCH_SIZE)

test_ds = test_ds.batch(BATCH_SIZE)

print(train_ds, test_ds)

Build the model

model = tf.keras.Sequential([
    layers.Conv2D(64, (3, 3), input_shape=(256, 256, 3), activation='relu'),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(128, (3, 3), activation='relu'),
    layers.Conv2D(128, (3, 3), activation='relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(256, (3, 3), activation='relu'),
    layers.Conv2D(256, (3, 3), activation='relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(512, (3, 3), activation='relu'),
    layers.Conv2D(512, (3, 3), activation='relu'),
    layers.MaxPool2D(),
    layers.Conv2D(512, (3, 3), activation='relu'),
    layers.Conv2D(512, (3, 3), activation='relu'),
    layers.Conv2D(512, (3, 3), activation='relu'),
    layers.MaxPool2D(),
    layers.GlobalAveragePooling2D(),
    layers.Dense(1024, activation='relu'),
    layers.Dense(256, activation='relu'),
    layers.Dense(1, activation='sigmoid')
])

model.summary()

model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.0001),
    loss=tf.keras.losses.BinaryCrossentropy(),
    metrics=['acc']
)

# Number of full batches per epoch for training and validation
steps_per_epoch = int(all_count * 0.8) // BATCH_SIZE
val_steps = int(all_count * 0.2) // BATCH_SIZE

history = model.fit(
    train_ds,
    epochs=10,
    steps_per_epoch=steps_per_epoch,
    validation_data=test_ds,
    validation_steps=val_steps
)
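The split sizes and step counts used in the training call can be checked with plain arithmetic. The sketch below assumes the dataset holds exactly 1400 images, as stated at the top, and introduces `train_count`, `test_count`, and `leftover_val` only for illustration.

```python
all_count = 1400          # 700 airplane + 700 lake images
BATCH_SIZE = 16

train_count = int(all_count * 0.8)        # samples kept by datas.take(...)
test_count = all_count - train_count      # samples left after datas.skip(...)

steps_per_epoch = train_count // BATCH_SIZE
val_steps = int(all_count * 0.2) // BATCH_SIZE
# Test samples that do not fit into a full validation batch
leftover_val = test_count - val_steps * BATCH_SIZE

print(train_count, test_count)       # 1120 280
print(steps_per_epoch, val_steps)    # 70 17
print(leftover_val)                  # 8
```

Because 280 is not a multiple of 16, the floor division leaves the last 8 test images out of each validation pass. Omitting `validation_steps` would let Keras run through the whole test set instead.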

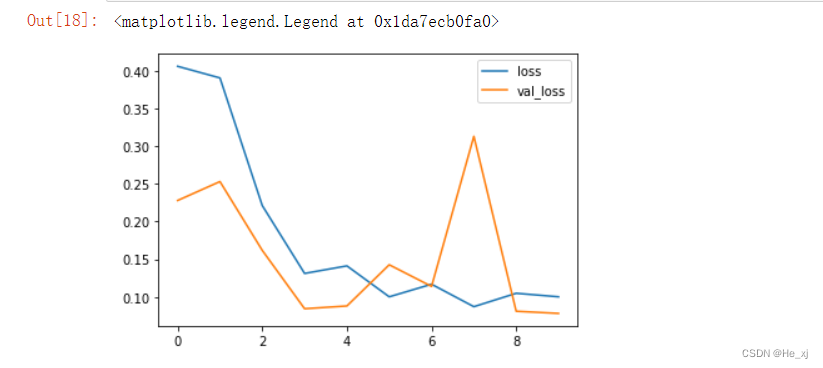

plt.plot(history.epoch, history.history.get('loss'), label='loss')

plt.plot(history.epoch, history.history.get('val_loss'), label='val_loss')

plt.legend()

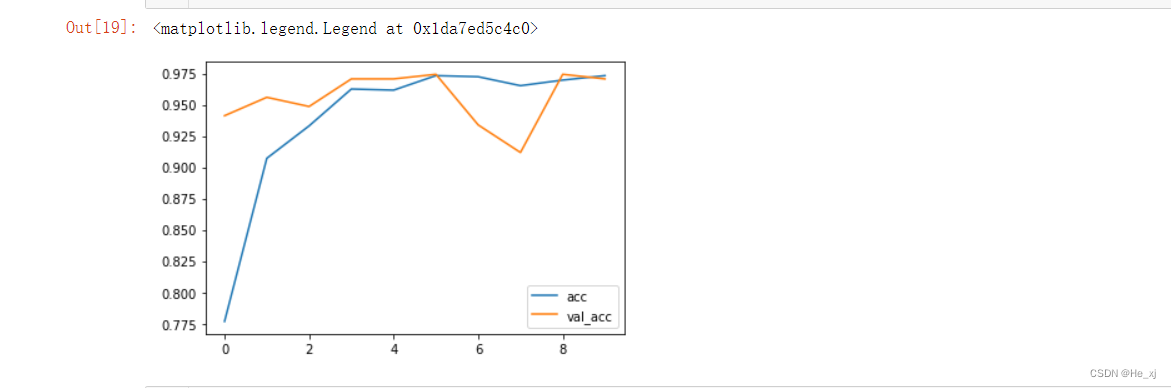

plt.plot(history.epoch, history.history.get('acc'), label='acc')

plt.plot(history.epoch, history.history.get('val_acc'), label='val_acc')

plt.legend()

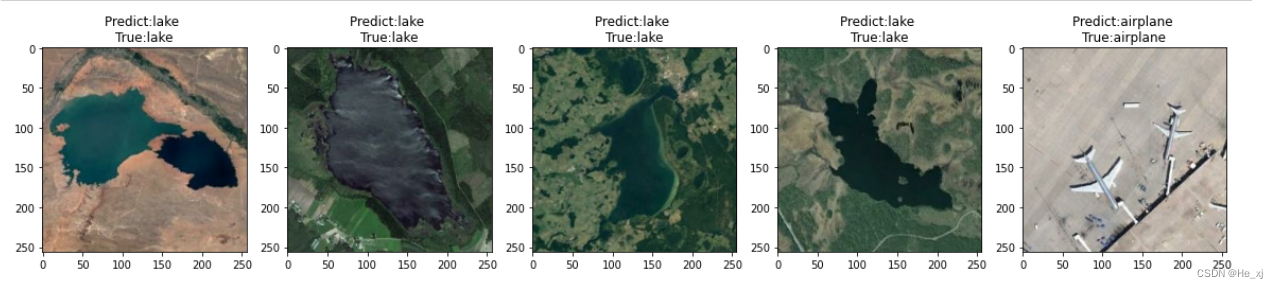

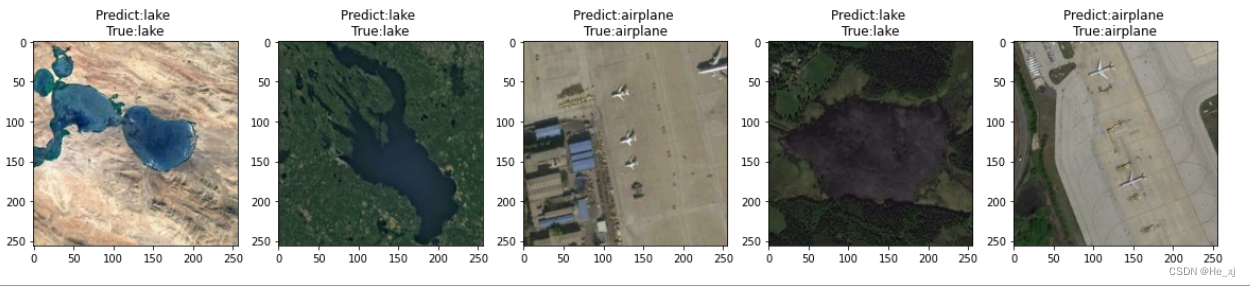

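# Use the model to make predictions
plt.figure(figsize=(20, 20))
for i in range(10):
    plt.subplot(2, 5, i + 1)
    # randrange excludes the upper bound, so the index is always valid
    index = random.randrange(len(img_path))
    img = load_image(img_path[index])
    plt.imshow(img)
    # Sigmoid output above 0.5 means class 1 (lake), otherwise class 0 (airplane)
    prob = float(model.predict(tf.expand_dims(img, axis=0))[0][0])
    plt.title(f'Predict:{dict_label.get(int(prob > 0.5))} \nTrue:{dict_label.get(label[index])}')
plt.show()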
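The title in the loop above turns the sigmoid output into a class name by thresholding at 0.5. Here is a minimal sketch of that mapping in isolation. `probability_to_name` is a hypothetical helper, and the probabilities passed to it are made-up values, not model outputs.

```python
dict_label = {1: 'lake', 0: 'airplane'}

def probability_to_name(prob, threshold=0.5):
    """Map a sigmoid probability to a class name."""
    # Strictly greater than the threshold counts as class 1 (lake)
    class_id = int(prob > threshold)
    return dict_label[class_id]

print(probability_to_name(0.93))   # lake
print(probability_to_name(0.08))   # airplane
```

Converting the comparison result to `int` makes the dictionary lookup explicit instead of relying on `True` and `False` hashing like `1` and `0`.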

Adding BatchNormalization() layers helps mitigate vanishing and exploding gradients and speeds up training.
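To make the idea concrete, here is a rough pure-Python sketch of what batch normalization computes for a single feature during training: subtract the batch mean, divide by the batch standard deviation, then apply a learned scale and shift. `batch_norm_1d` is a hypothetical name. `eps=1e-3` mirrors the Keras default, and the moving averages that Keras uses at inference time are left out.

```python
import math

def batch_norm_1d(values, gamma=1.0, beta=0.0, eps=1e-3):
    """Normalize one feature across a batch, then scale and shift."""
    mean = sum(values) / len(values)
    # Biased (population) variance of the batch
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm_1d(activations)
print(normalized)
```

With `gamma=1` and `beta=0`, the output has a mean of zero and a variance close to one, which keeps the inputs to the following activation in a well-behaved range.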

Build the model

model = tf.keras.Sequential([
    layers.Conv2D(64, (3, 3), input_shape=(256, 256, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(64, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(128, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(128, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(256, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(256, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.MaxPool2D(),
    # layers.Dropout(0.5),
    layers.Conv2D(512, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(512, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.MaxPool2D(),
    layers.Conv2D(512, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(512, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Conv2D(512, (3, 3)),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.MaxPool2D(),
    layers.GlobalAveragePooling2D(),
    layers.Dense(1024),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Dense(256),
    layers.BatchNormalization(),
    layers.Activation('relu'),
    layers.Dense(1, activation='sigmoid')
])

model.summary()

# Train the model
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.0001),
    loss=tf.keras.losses.BinaryCrossentropy(),
    metrics=['acc']
)

# Number of full batches per epoch for training and validation
steps_per_epoch = int(all_count * 0.8) // BATCH_SIZE
val_steps = int(all_count * 0.2) // BATCH_SIZE

history = model.fit(
    train_ds,
    epochs=10,
    steps_per_epoch=steps_per_epoch,
    validation_data=test_ds,
    validation_steps=val_steps
)

Network structure without batch normalization

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 254, 254, 64)      1792
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 252, 252, 64)      36928
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 126, 126, 64)      0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 124, 124, 128)     73856
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 122, 122, 128)     147584
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 61, 61, 128)       0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 59, 59, 256)       295168
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 57, 57, 256)       590080
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 28, 28, 256)       0
_________________________________________________________________
conv2d_6 (Conv2D)            (None, 26, 26, 512)       1180160
_________________________________________________________________
conv2d_7 (Conv2D)            (None, 24, 24, 512)       2359808
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 12, 12, 512)       0
_________________________________________________________________
conv2d_8 (Conv2D)            (None, 10, 10, 512)       2359808
_________________________________________________________________
conv2d_9 (Conv2D)            (None, 8, 8, 512)         2359808
_________________________________________________________________
conv2d_10 (Conv2D)           (None, 6, 6, 512)         2359808
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 3, 3, 512)         0
_________________________________________________________________
global_average_pooling2d (Gl (None, 512)               0
_________________________________________________________________
dense (Dense)                (None, 1024)              525312
_________________________________________________________________
dense_1 (Dense)              (None, 256)               262400
_________________________________________________________________
dense_2 (Dense)              (None, 1)                 257
=================================================================
Total params: 12,552,769
Trainable params: 12,552,769
Non-trainable params: 0
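The output shapes and parameter counts in this summary can be reproduced by hand. The sketch below assumes the Keras defaults used by the model: 3x3 kernels with stride 1 and 'valid' padding for Conv2D, and 2x2 pooling with stride 2 for MaxPool2D. The helper names `conv3x3`, `max_pool2x2`, and `dense` are hypothetical.

```python
def conv3x3(shape, filters):
    """3x3 convolution, stride 1, 'valid' padding."""
    h, w, c = shape
    params = (3 * 3 * c + 1) * filters    # kernel weights plus one bias per filter
    return (h - 2, w - 2, filters), params

def max_pool2x2(shape):
    """2x2 max pooling with stride 2; odd sizes are floored."""
    h, w, c = shape
    return (h // 2, w // 2, c), 0

def dense(units_in, units_out):
    """Fully connected layer: weights plus one bias per output unit."""
    return units_out, (units_in + 1) * units_out

shape, total = (256, 256, 3), 0
# 'P' marks a pooling layer; integers are Conv2D filter counts
stack = [64, 64, 'P', 128, 128, 'P', 256, 256, 'P',
         512, 512, 'P', 512, 512, 512, 'P']
for layer in stack:
    shape, params = max_pool2x2(shape) if layer == 'P' else conv3x3(shape, layer)
    total += params

features = shape[2]    # GlobalAveragePooling2D keeps one value per channel
for units in [1024, 256, 1]:
    features, params = dense(features, units)
    total += params

print(shape)   # (3, 3, 512)
print(total)   # 12552769
```

Each unpadded 3x3 convolution trims two pixels from the height and width, which is why the feature map shrinks from 256 x 256 to 3 x 3 before global average pooling.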