前言:之前学了挺多卷积神经网络模型,但是都只停留在概念。代码都没自己敲过,肯定不行,而且这代码也很难很多都看不懂。所以想着先从最先较简单的AlexNet开始敲。不过还是好多没搞明白,之后逐一搞清楚。

文章目录

- AlexNet 实战

- Alex.net

- train.py

- test.py

AlexNet 实战

-

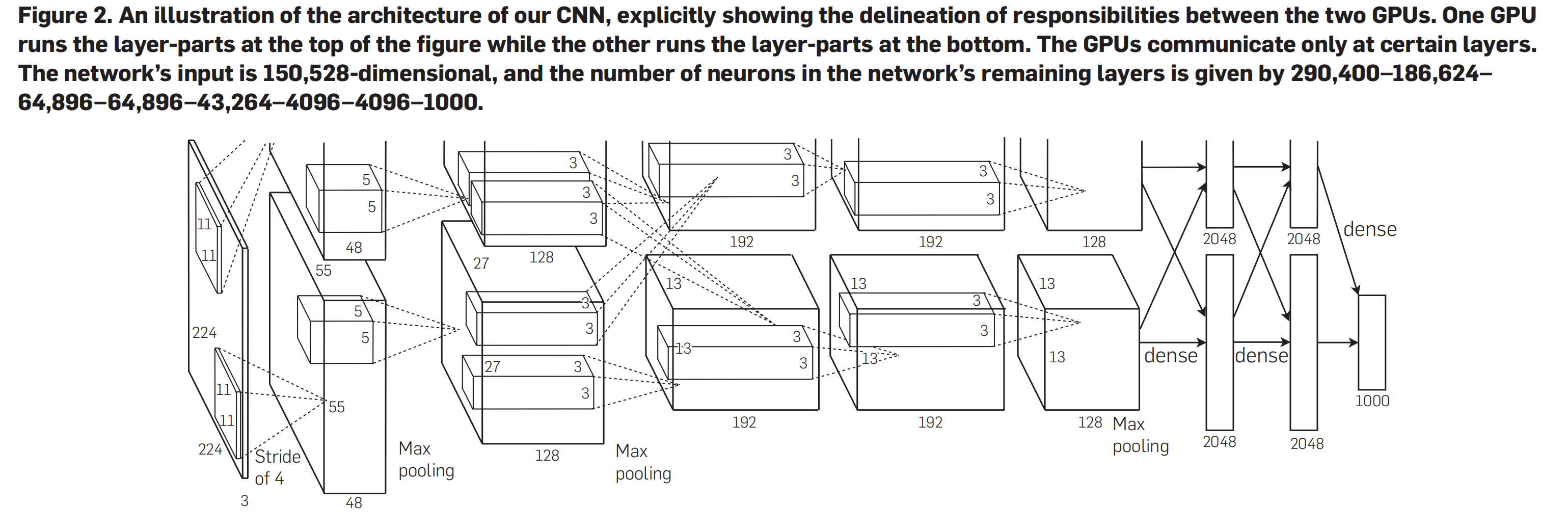

The first convolutional layer filters the 224 × 224 × 3 input image with 96 kernels of size 11 × 11 × 3 with a stride of 4 pixels (this is the distance between the receptive field centers of neighboring neurons in a kernel map).

-

第一个卷积层用96个大小为11 × 11 × 3的核对224 × 224 × 3输入图像进行过滤,步幅为4像素(这是核图中相邻神经元的感受野中心之间的距离)。

-

The second convolutional layer takes as input the (response-normalized and pooled) output of the first convolutional layer and filters it with 256 kernels of size 5 × 5 × 48.

-

第二个卷积层将第一个卷积层的(响应归一化和池化)输出作为输入,并用 256 个大小为 5 × 5 × 48 的内核对其进行过滤。

-

The third, fourth, and fifth convolutional layers are connected to one another without any intervening pooling or normalization layers.

-

第三个、第四个和第五个卷积层相互连接,没有任何中间池化或归一化层。

-

The third convolutional layer has 384 kernels of size 3 × 3 × 256

-

第三个卷积层有384个大小为3 × 3 × 256的核

-

The fourth convolutional layer has 384 kernels of size 3 × 3 × 192, and the fifth convolutional layer has 256 kernels of size 3 × 3 × 192. The fully connected layers have 4096 neurons each.

-

第四卷积层有384个大小为3 × 3 × 192的核,第五卷积层有256个大小为3 × 3 × 192的核。全连接层各有4096个神经元。

-

This is what we use throughout our network, with s = 2 and z = 3.

-

这是我们在整个网络中使用的,s = 2 和 z = 3。(即池化层,stride=2,kernel_size = 3)

Alex.net

搭建AlexNet就按照论文中的一步一步搭

import torch

from torch import nn

import torch.nn.functional as F

class MyAlexNet(nn.Module):

def __init__(self):

super(MyAlexNet,self).__init__()

## input [3,224,224]

self.c1 = nn.Conv2d(in_channels=3,out_channels=96,kernel_size=11,stride=4,padding=2) ##output [96,55,55]

self.s1 = nn.MaxPool2d(kernel_size=3, stride=2) ##output [96,27,27]

self.Relu = nn.ReLU()

self.c2 = nn.Conv2d(in_channels=96,out_channels=256,kernel_size=5,padding=2) ##output [256,27,27]

self.s2 = nn.MaxPool2d(kernel_size=3, stride=2) ##output [256,13,13]

self.c3 = nn.Conv2d(in_channels=256,out_channels=384,kernel_size=3,padding=1) ##output [384,13,13]

self.c4 = nn.Conv2d(in_channels=384,out_channels=384,kernel_size=3,padding=1) ##output [384,13,13]

self.c5 = nn.Conv2d(in_channels=384,out_channels=256,kernel_size=3,padding=1) ##output [256,13,13]

self.s5 = nn.MaxPool2d(kernel_size=3, stride=2) ##output [256,6,6]

self.flatten = nn.Flatten()

self.f6 = nn.Linear(256*6*6,4096)

self.f7 = nn.Linear(4096,4096)

self.f8 = nn.Linear(4096,2)

def forward(self,x):

x = self.Relu(self.c1(x))

x = self.s1(x)

x = self.Relu(self.c2(x))

x = self.s2(x)

x = self.Relu(self.c3(x))

x = self.Relu(self.c4(x))

x = self.Relu(self.c5(x))

x = self.s5(x)

x = self.flatten(x)

x = self.f6(x)

x = F.dropout(x,p=0.5)

x = self.f7(x)

x = F.dropout(x,p=0.5)

x = self.f8(x)

return x

if __name__ == '__main__':

x = torch.rand([1,3,224,224])

model = MyAlexNet()

y = model(x)

train.py

import torch

from torch import nn

from net import MyAlexNet

import numpy as np

from torch.optim import lr_scheduler

import os

from torchvision import transforms

from torchvision.datasets import ImageFolder

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

##解决中文显示问题

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

Root_train = r"F:/python/AlexNet/data/train"

Root_test = r"F:/python/AlexNet/data/val"

normalize = transforms.Normalize([0.5,0.5,0.5],[0.5,0.5,0.5])

train_transform = transforms.Compose([

transforms.Resize((224,224)),

transforms.RandomVerticalFlip(),##数据增强

transforms.ToTensor(),#转换为张量

normalize

])

val_transform = transforms.Compose([

transforms.Resize((224,224)),

transforms.ToTensor(),#转换为张量

normalize

])

train_dataset = ImageFolder(Root_train,transform=train_transform)

val_dataset = ImageFolder(Root_test,transform=val_transform)

train_dataloader = DataLoader(train_dataset,batch_size=32,shuffle=True)

val_dataloader = DataLoader(val_dataset,batch_size=32,shuffle=True) ##分批次,打乱

device = 'cuda' if torch.cuda.is_available else 'cpu'

model = MyAlexNet().to(device)

##定义损失函数

loss_fn = nn.CrossEntropyLoss()

##定义优化器

optimizer = torch.optim.SGD(model.parameters(),lr=0.01,momentum=0.9)

##学习率每隔10轮变为原来的0.5

lr_scheduler = lr_scheduler.StepLR(optimizer,step_size=10,gamma=0.5)

##定义训练函数

def train(dataloader,model,loss_fn,optimizer):

loss, current, n = 0.0, 0.0, 0

for batch,(x,y) in enumerate(dataloader):

image ,y = x.to(device), y.to(device)

output = model(image)

cur_loss = loss_fn(output,y)

_, pred = torch.max(output,axis=1)

cur_acc = torch.sum(y==pred)/output.shape[0]

##反向传播

optimizer.zero_grad()

cur_loss.backward()

optimizer.step()

loss +=cur_loss.item()

current +=cur_acc.item()

n += 1

train_loss = loss / n

train_acc = current / n

print("train_loss" + str(train_loss))

print("train_acc" + str(train_acc))

return train_loss, train_acc

##定义验证函数

def val(dataloader,model,loss_fn):

model.eval()

loss, current, n = 0.0, 0.0, 0

with torch.no_grad():

for batch,(x,y) in enumerate(dataloader):

image ,y = x.to(device), y.to(device)

output = model(image)

cur_loss = loss_fn(output,y)

_, pred = torch.max(output,axis=1)

cur_acc = torch.sum(y==pred)/output.shape[0]

loss += cur_loss.item()

current += cur_acc.item()

n += 1

val_loss = loss / n

val_acc = current / n

print("val_loss" + str(val_loss))

print("val_acc" + str(val_acc))

return val_loss, val_acc

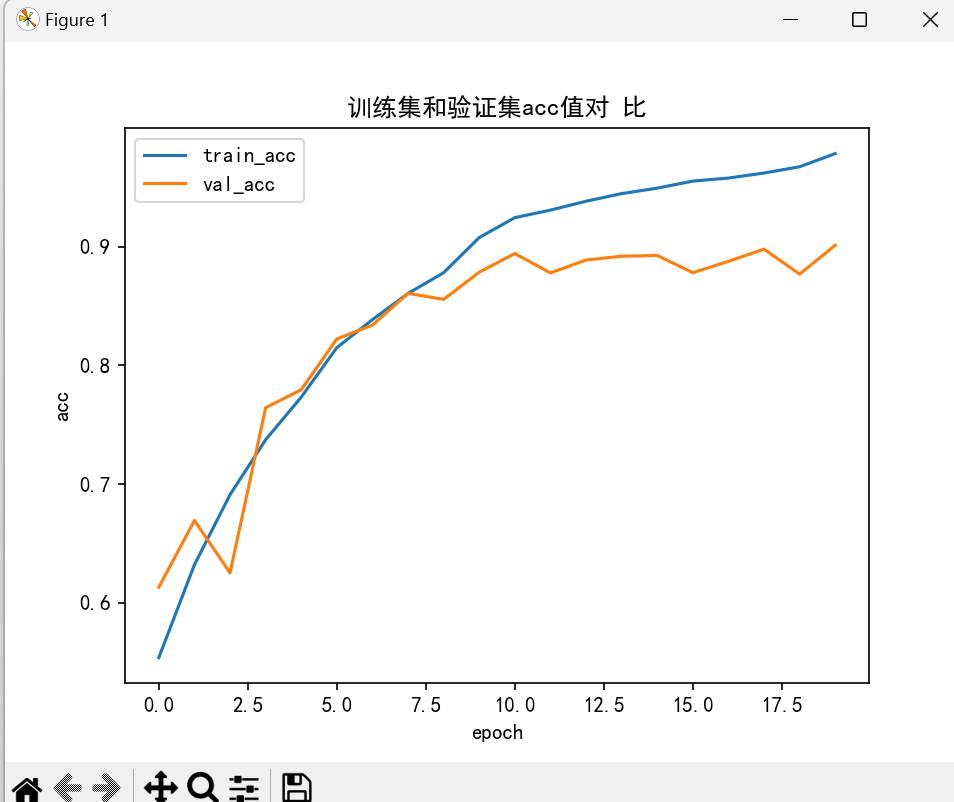

##定义画图函数

def matplot_loss(train_loss,val_loss):

plt.plot(train_loss,label='train_loss')

plt.plot(val_loss,label="val_loss")

plt.legend(loc='best')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.title("训练集和验证集loss值对比")

plt.show()

def matplot_acc(train_loss,val_loss):

plt.plot(train_loss,label='train_acc')

plt.plot(val_loss,label="val_acc")

plt.legend(loc='best')

plt.ylabel('acc')

plt.xlabel('epoch')

plt.title("训练集和验证集acc值对 比")

plt.show()

##开始训练

loss_train = []

acc_train = []

loss_val = []

acc_val = []

epoch = 20

min_acc = 0

for t in range(epoch):

lr_scheduler.step()

print(f"epoch{t+1}\n-----------")

train_loss,train_acc = train(train_dataloader,model,loss_fn,optimizer)

val_loss,val_acc = val(val_dataloader,model,loss_fn)

loss_train.append(train_loss)

acc_train.append(train_acc)

loss_val.append(val_loss)

acc_val.append(val_acc)

##保存最好的模型权重

if val_acc > min_acc:

folder='save_model'

if not os.path.exists(folder):

os.mkdir('save_model')

min_acc = val_acc

print(f"save bset model,第{t+1}轮")

torch.save(model.state_dict(),'save_model/best_model.pth')

##保存最后一轮的权重文件

if t == epoch-1:

torch.save(model.state_dict(),'save_model/last_model.pth')

matplot_loss(loss_train,loss_val)

matplot_acc(acc_train,acc_val)

print("Done")

test.py

import torch

from net import MyAlexNet

from torch.autograd import Variable

from torchvision import datasets, transforms

from torchvision.transforms import ToTensor

from torchvision.transforms import ToPILImage

from torchvision.datasets import ImageFolder

from torch.utils.data import DataLoader

Root_train = r"F:/python/AlexNet/data/train"

Root_test = r"F:/python/AlexNet/data/val"

normalize = transforms.Normalize([0.5,0.5,0.5],[0.5,0.5,0.5])

train_transform = transforms.Compose([

transforms.Resize((224,224)),

transforms.RandomVerticalFlip(),##数据增强

transforms.ToTensor(),#转换为张量

normalize

])

val_transform = transforms.Compose([

transforms.Resize((224,224)),

transforms.ToTensor(),#转换为张量

normalize

])

train_dataset = ImageFolder(Root_train,transform=train_transform)

val_dataset = ImageFolder(Root_test,transform=val_transform)

train_dataloader = DataLoader(train_dataset,batch_size=32,shuffle=True)

val_dataloader = DataLoader(val_dataset,batch_size=32,shuffle=True) ##分批次,打乱

device = 'cuda' if torch.cuda.is_available else 'cpu'

model = MyAlexNet().to(device)

## 加载模型

model.load_state_dict(torch.load("F:/python/AlexNet/save_model/best_model.pth"))

classes = [

"cat",

"dog",

]

##把张量转为照片格式

show = ToPILImage()

##进入验证阶段

model.eval()

for i in range(50):

x, y = val_dataset[i][0],val_dataset[i][1]

show(x).show()

x = Variable(torch.unsqueeze(x,dim=0).float(),requires_grad=True).to(device)

x = torch.tensor(x).to(device)

with torch.no_grad():

pred = model(x)

predicted,actual = classes[torch.argmax(pred[0])],classes[y]

print(f'predicted:"{predicted}",Actual:"{actual}"')

如果归一化的话,就会出现这种效果,但是如果把normalize = transforms.Normalize([0.5,0.5,0.5],[0.5,0.5,0.5])去掉就能显示正常图片

![[网络] ifconfig down掉的网口,插上网线网口灯依然亮?](https://img-blog.csdnimg.cn/d57c31d7236b42b3922a41368c2d9fd4.png)