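How do you actually put a trained model to use? That is the job of what we usually call the inference module. In a previous post I showed how to run inference with DETR; this time I walk through the inference process for DINO.

The DINO codebase already ships an inference implementation. Here I lay out its workflow step by step and give solutions to the problems that come up along the way.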

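First, the required imports:

```python
import torch, json

# main, util and datasets are modules of the DINO repository,
# so this script must be run from inside the DINO project
from main import build_model_main
from util.slconfig import SLConfig
from util.visualizer import COCOVisualizer
from util import box_ops
```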

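Next, build the model and load the weight file. Note that DINO inference only works if the inference code lives inside the DINO project, since it imports the repository's own `main`, `util` and `datasets` modules. My goal is to decouple the model from the project code so that it can be deployed in the cloud and exposed as an object-detection API.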

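```python
model_config_path = "config/DINO/DINO_4scale.py"  # change the path of the model config file
model_checkpoint_path = "checkpoint_best_regular.pth"  # change the path of the model checkpoint

args = SLConfig.fromfile(model_config_path)
args.device = 'cuda'
model, criterion, postprocessors = build_model_main(args)

# map_location='cpu' loads the weights regardless of the device they were saved on.
# On PyTorch >= 2.6 torch.load defaults to weights_only=True; if loading a trusted
# checkpoint fails for that reason, pass weights_only=False explicitly.
checkpoint = torch.load(model_checkpoint_path, map_location='cpu')
model.load_state_dict(checkpoint['model'])
_ = model.eval()  # switch to inference (eval) mode
```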

Next, load the category names of the COCO annotations. I am using a reduced COCO dataset; you can build your own name file in the same format.

```python
with open('util/coco_name.json') as f:
    id2name = json.load(f)
# JSON keys are strings; convert them to int to match the predicted label ids
id2name = {int(k): v for k, v in id2name.items()}
```

The name file has the following format:

```json
{"1": "car", "2": "truck", "3": "bus"}
```
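JSON object keys are always strings, which is why the loading code converts them with `int(k)`: the model predicts integer label ids, so a lookup with the raw string keys would raise a `KeyError`. A minimal, standard-library-only sketch using the sample content above (the `predicted_labels` values are made up for illustration):

```python
import json

# Sample content of util/coco_name.json (the reduced three-class format shown above)
raw = '{"1": "car", "2": "truck", "3": "bus"}'

id2name = json.loads(raw)
print(list(id2name.keys()))  # ['1', '2', '3']: JSON keys are always strings

# Convert keys to int so they match the integer class ids predicted by the model
id2name = {int(k): v for k, v in id2name.items()}

predicted_labels = [3, 1, 1]  # hypothetical label ids, as the model would return them
print([id2name[label] for label in predicted_labels])  # ['bus', 'car', 'car']
```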

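Next, read an image and run inference. Be sure to wrap inference in `torch.no_grad()`, which turns off gradient tracking: inference needs no gradient updates, and without it PyTorch keeps the intermediate activations required for backpropagation, which can exhaust GPU memory.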

```python
from PIL import Image
import datasets.transforms as T

with torch.no_grad():
    # load the image for inference
    image = Image.open("figs/1.jpg").convert("RGB")

    # transform images: shorter side to 800 (longer side capped at 1333),
    # convert to tensor, normalize with ImageNet mean/std
    transform = T.Compose([
        T.RandomResize([800], max_size=1333),
        T.ToTensor(),
        T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])
    image, _ = transform(image, None)

    # predict images
    output = model.cuda()(image[None].cuda())
    # a target size of (1.0, 1.0) keeps the boxes normalized to [0, 1]
    output = postprocessors['bbox'](output, torch.Tensor([[1.0, 1.0]]).cuda())[0]

    # visualize outputs
    threshold = 0.3  # confidence threshold
    vslzr = COCOVisualizer()
    scores = output['scores']
    labels = output['labels']
    boxes = box_ops.box_xyxy_to_cxcywh(output['boxes'])  # visualizer expects (cx, cy, w, h)
    select_mask = scores > threshold
    box_label = [id2name[int(item)] for item in labels[select_mask]]
    pred_dict = {
        'boxes': boxes[select_mask],
        'size': torch.Tensor([image.shape[1], image.shape[2]]),  # (H, W) of the transformed image
        'box_label': box_label
    }
    vslzr.visualize(image, pred_dict, savedir=None, dpi=100)
```
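With a single candidate size, `T.RandomResize([800], max_size=1333)` is effectively a deterministic resize: the shorter side is scaled to 800 pixels unless that would push the longer side past 1333, in which case the longer side is capped instead. The plain-Python sketch below reproduces this rule so you can predict the input size fed to the model. It mirrors my understanding of the size helper in DETR-style `datasets/transforms.py`; the function name `resized_hw` is hypothetical.

```python
def resized_hw(width, height, size=800, max_size=1333):
    """Return (new_h, new_w) after a shorter-side resize with a cap on the longer side.

    Hypothetical helper mirroring the rule used by DETR-style RandomResize.
    """
    short_side, long_side = float(min(width, height)), float(max(width, height))
    # If scaling the short side to `size` would make the long side exceed max_size,
    # shrink the target so that the long side lands at (about) max_size instead.
    if long_side / short_side * size > max_size:
        size = int(round(max_size * short_side / long_side))
    # The short side already equals the target: keep the original size.
    if (width <= height and width == size) or (height <= width and height == size):
        return height, width
    if width < height:
        return int(size * height / width), size
    return size, int(size * width / height)


print(resized_hw(640, 480))    # (800, 1066)
print(resized_hw(1920, 1080))  # (750, 1333)
```

The transformed `image` tensor therefore has shape `(3, new_h, new_w)`, which is why the visualizer's `size` entry is read from `image.shape[1]` and `image.shape[2]` rather than from the original picture.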

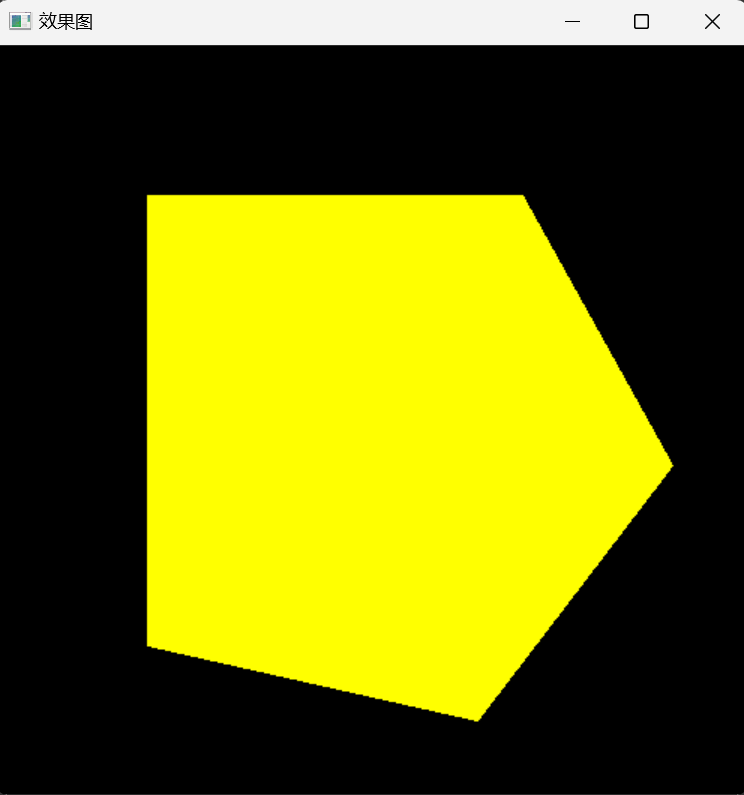

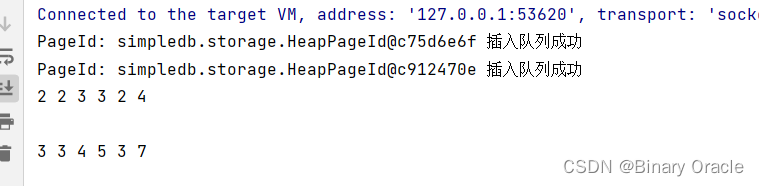

推理展示图如下:
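Because the code passes `torch.Tensor([[1.0, 1.0]])` as the target size, the postprocessor returns boxes as normalized `[x0, y0, x1, y1]` values between 0 and 1. For the object-detection API mentioned earlier you will probably want pixel coordinates in the original image. One option is to pass the original `(height, width)` as the target size, since DETR-style postprocessors scale the boxes by `[w, h, w, h]` built from that pair; another is to scale the normalized boxes yourself. The plain-Python sketch below takes the second route with a hypothetical helper `to_detections`: it filters by score, maps label ids to names and scales the boxes. It works on plain lists (for example `output['scores'].tolist()`), so the result can be JSON-serialized directly.

```python
import json


def to_detections(scores, labels, boxes, id2name, image_hw, threshold=0.3):
    """Turn normalized postprocessor output into JSON-friendly detections.

    Hypothetical helper: scores/labels/boxes are plain Python lists, boxes are
    normalized [x0, y0, x1, y1], and image_hw is the original (height, width).
    """
    height, width = image_hw
    detections = []
    for score, label, (x0, y0, x1, y1) in zip(scores, labels, boxes):
        if score <= threshold:  # keep only scores > threshold, as in the post
            continue
        detections.append({
            "label": id2name.get(int(label), str(label)),  # fall back to the raw id
            "score": round(float(score), 3),
            # scale normalized coordinates back to original-image pixels
            "box": [round(x0 * width, 1), round(y0 * height, 1),
                    round(x1 * width, 1), round(y1 * height, 1)],
        })
    return detections


id2name = {1: "car", 2: "truck", 3: "bus"}
dets = to_detections(
    scores=[0.92, 0.15, 0.48],
    labels=[1, 2, 3],
    boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0], [0.5, 0.5, 0.75, 1.0]],
    id2name=id2name,
    image_hw=(480, 640),
)
print(json.dumps(dets))
```

Using `id2name.get` instead of indexing is a deliberate choice here: if the detection head predicts an id that is missing from a reduced name file, the API returns the raw id instead of crashing with a `KeyError`.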