数值分析算法 MATLAB 实践 数值优化算法

黄金分割法

function [x,y,k_cnt ]= Goldensection(fun, a, b, eps)

%fun为优化函数,a为区间左侧值,b为区间右侧值,eps为精度

% 黄金分割法

k_cnt=0;

while(b - a > eps)

x1 = a + 0.382 * (b - a);

x2 = a + 0.618 * (b - a); % lamda = 0.618,该方法也称为0.618分割法

if fun(x1) < fun(x2)

b = x2;

else

a = x1;

end

k_cnt = k_cnt+1;

end

x = (a + b) / 2;%最优解x

y = fun(x);%%最优解x对应的y

end

% 黄金分割法[x,y,k_cnt ]= Goldensection(fun, a, b, eps)

%fun为优化函数func,a为区间左侧值,b为区间右侧值,eps为精度

disp('******************黄金分割法******************')

a=-1;b=1;maxstep = 1000;eps = 1e-3;

[x0,y0,k0_cnt]= Goldensection(@fun1,a,b,eps);

disp(['迭代次数:k_cnt=',num2str(k0_cnt)])

disp(['最优解:x = ',num2str(x0)])

disp(['此时: Y = f(x) = ',num2str(y0)])

****黄金分割法******************

迭代次数:k_cnt=16

最优解:x = 0.24987

此时: Y = f(x) = -1.125

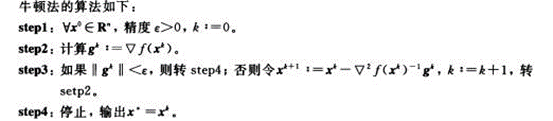

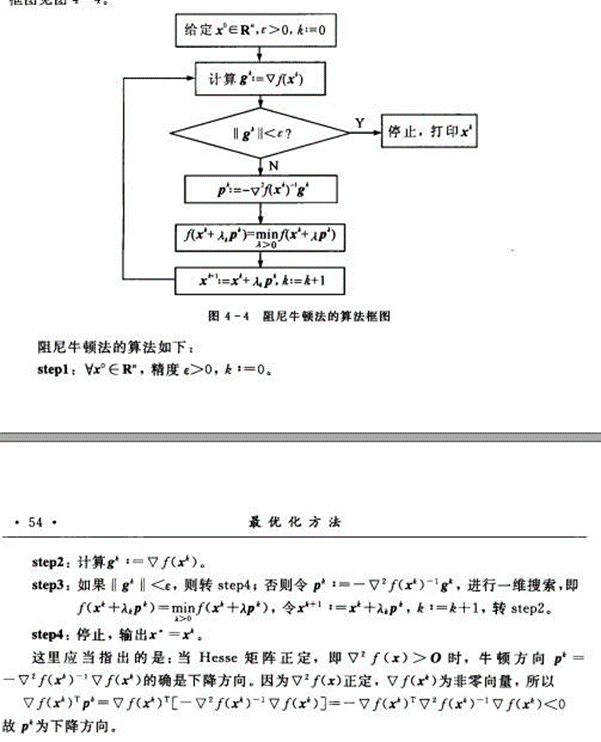

牛顿优化法(阻尼牛顿法)

function [x,val,k,flag] = NewtonMethod(fun,gfun,Hess,x0,k_max,epsilon)

%abs:使用NewtonMethod法求解无约束优化问题mini f(x)

%input:目标函数fun,梯度函数gfun,黑塞矩阵Hess,初始点x0

%output:最优解x,最优值val,迭代次数iter

%设置初始参数

if nargin<5||isempty(k_max)

k_max=500;

end

if nargin<6||isempty(epsilon)

epsilon=1e-6;

end

k=0;

xk=x0;

while(k < k_max)

gk=gfun(xk); %计算梯度

Gk=Hess(xk);

dk=-Gk\gk; %计算搜索方向

if(norm(dk) < epsilon)

flag=1;

break;

end

xk=xk+dk;

k=k+1;

end

x=xk;

val=fun(xk);

end

%function [x,val,k,flag] = NewtonMethod(fun,gfun,Hess,x0,k_max,epsilon)

%abs:使用NewtonMethod法求解无约束优化问题

%input:目标函数fun,梯度函数gfun,黑塞矩阵Hess,初始点x0

%output:最优解x,最优值val,迭代次数iter

it_max=10000;

eps=1e-6;

X3_0=[0 2]';%初值

[x3,y3,k3_cnt,flag] = NewtonMethod(@fun3,@gfun3,@Hessfun3,X3_0,it_max,eps);

disp(['迭代次数:k_cnt='])

disp(k3_cnt)

disp(['最优解:x = '])

disp(x3)

disp(['此时: Y = f(x) = '])

disp(y3)

******************Newton法******************

迭代次数:k_cnt=

1

最优解:x =

-2.2857

-0.2857

此时: Y = f(x) =

0.4286

%用 阻尼牛顿法 求单变量函数在单峰区间[a,b]上的近似极小点

%在命令窗口输入函数: [newx,newfk,k]=QNewton('fun','gfun','Hess',xk)

function [newx,newfk,k]=DNewtonMethod(fun,gfun,Hessfun,xk,maxk,epsilon)

%功能: 阻尼牛顿法 Armijo法非精确线搜索mini f(x)

%输入: xk是初始点,fun是目标函数, gfun是目标函数的梯度,Hess是Hesse矩阵的函数

%输出: newx, newfk分别是近似极小点和极小值

% k表示迭代次数

if nargin<5||isempty(maxk)

maxk=500;

end

if nargin<6||isempty(epsilon)

epsilon=1e-6;

end

rho=0.5; % rho取值范围为[0,0.5]

sigma=0.4; % sigma取值范围为[rho,1]

k=0;

while(k<=maxk)

gk=gfun(xk);

Gk=Hessfun(xk);

dk=-Gk\gk; % 解方程Gk*dk=-gk,计算搜索方向

if(norm(dk)<epsilon)

break;

end % 检验终止准则

m=0; mk=0;

while(m<20) % Armijo法非精确线搜索

if (fun(xk+rho^m*dk) <= fun(xk)+sigma*rho^m*gk'*dk)

mk=m; break;

end

m=m+1;

end

xk=xk+rho^mk*dk;

k=k+1;

end

newx=xk;

newfk=fun(newx);

end

%function [Newx,Newfk,kcnt]=DNewtonMethod(fun,gfun,Hessfun,xk,maxk,epsilon)

%功能: 阻尼牛顿法 Armijo法非精确线搜索

%输入: xk是初始点,fun是目标函数, gfun是目标函数的梯度,Hess是Hesse矩阵的函数

%输出: newx, newfk分别是近似极小点和极小值 kcnt迭代次数

disp('******************阻尼牛顿法******************')

X_0=[0 0]';

it_max=10000;

eps=1e-6;

[x1,y1,k1_cnt]=DNewtonMethod(@fun,@gfun,@Hessfun,X_0,it_max,eps);

disp(['迭代次数:k_cnt='])

disp(k1_cnt)

disp(['最优解:x = '])

disp(x1)

disp(['此时: Y = f(x) = '])

disp(y1)

X2_0=[1 1]';

[x2,y2,k2_cnt]=DNewtonMethod(@fun2,@gfun2,@Hessfun2,X2_0,it_max,eps);

disp(['迭代次数:k_cnt='])

disp(k2_cnt)

disp(['最优解:x = '])

disp(x2)

disp(['此时: Y = f(x) = '])

disp(y2)

****阻尼牛顿法******************

迭代次数:k_cnt=

1

最优解:x =

1

2

此时: Y = f(x) =

-8

迭代次数:k_cnt=

2

最优解:x =

4.0000

2.0000

此时: Y = f(x) =

4.0000

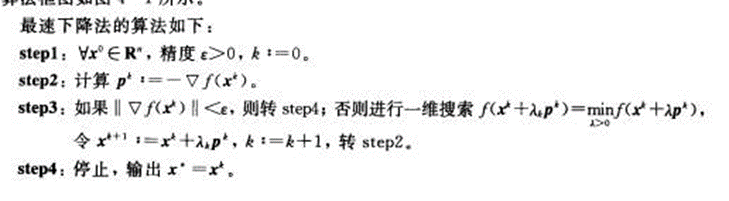

最速下降法

function [xo,fo,k_cnt] = Opt_Steepest(f,grad,x0,TolX,TolFun,dist0,MaxIter)

% 用最速下降法求最优化解mini f(x)

%输入:f为函数名 grad为梯度函数

%x0为解的初值 TolX,TolFun分别为变量和函数的误差阈值

%dist0为初始步长 MaxIter为最大迭代次数

%输出: xo为取最小值的点 fo为最小的函数值

% f0 = f(x(0))

k_cnt =0;

%%%%%%判断输入的变量数,设定一些变量为默认值

if nargin < 7

MaxIter = 100; %最大迭代次数默认为100

end

if nargin < 6

dist0 = 10; %初始步长默认为10

end

if nargin < 5

TolFun = 1e-8; %函数值误差为1e-8

end

if nargin < 4

TolX = 1e-6; %自变量距离误差

end

%%%%%第一步,求解的初值的函数值

x = x0;

fx0 = feval(f,x0);

fx = fx0;

dist = dist0;

kmax1 = 25; %线性搜索法确定步长的最大搜索次数

warning = 0;

%%%%%迭代计算求最优解

for k = 1: MaxIter

g = feval(grad,x);

g = g/norm(g); %求在x处的梯度方向

%%线性搜索方法确定步长

dist = dist*2; %令步长为原步长的二倍

fx1 = feval(f,x-dist*2*g);

for k1 = 1:kmax1

fx2 = fx1;

fx1 = feval(f,x-dist*g);

if fx0 > fx1+TolFun & fx1 < fx2 - TolFun %fx0 > fx1 < fx2,

den = 4*fx1 - 2*fx0 - 2*fx2;num = den - fx0 + fx2; %二次逼近法

dist = dist*num/den;

x = x - dist*g; fx = feval(f,x); %确定下一点

break;

else

dist = dist/2;

end

end

if k1 >= kmax1

warning = warning + 1; %无法确定最优步长

else

warning = 0;

end

if warning >= 2|(norm(x - x0) < TolX&abs(fx - fx0) < TolFun)

break;

end

x0 = x;

fx0 = fx;

end

xo = x; fo = fx;

k_cnt = k_cnt+1;

if k == MaxIter

fprintf('Just best in %d iterations',MaxIter);

end

%function [xo,fo,k_cnt] = Opt_Steepest(f,grad,x0,TolX,TolFun,dist0,MaxIter)

% 用最速下降法求最优化解mini f(x)

%输入:f为函数名 grad为梯度函数

%x0为解的初值 TolX,TolFun分别为变量和函数的误差阈值

%dist0为初始步长 MaxIter为最大迭代次数

%输出: xo为取最小值的点 fo为最小的函数值

disp('******************最速下降法******************')

X4_0 = [1 4]'; %初值是列向量

dist0 =1; %dist0为初始步长

it_max=10000;

eps=1e-6;

[x4,y4,k4_cnt] = Opt_Steepest(@fun4,@gfun4,X4_0,eps,eps,dist0,it_max);

disp('迭代次数:k_cnt=')

disp(k4_cnt)

disp(['最优解:xo = '])

disp(x4)

disp(['此时: Y = f(x) = '])

disp(y4)

X5_0 = [1 1]'; %初值是列向量

dist0 =1; %dist0为初始步长

it_max=10000;

eps=1e-6;

[x5,y5,k5_cnt] = Opt_Steepest(@fun5,@gfun5,X5_0,eps,eps,dist0,it_max);

disp('迭代次数:k_cnt=')

disp(k5_cnt)

disp(['最优解:xo = '])

disp(x5)

disp(['此时: Y = f(x) = '])

disp(y5)

****最速下降法******************

迭代次数:k_cnt=

1

最优解:xo =

4.6663

4.3328

此时: Y = f(x) =

-20.3333

迭代次数:k_cnt=

1

最优解:xo =

3

2

此时: Y = f(x) =

0

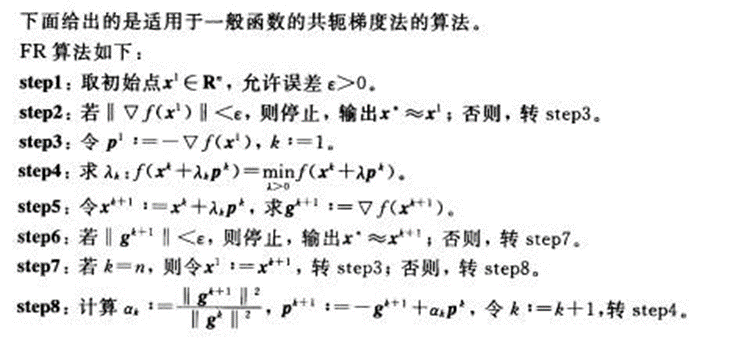

共轭梯度法

function[x,val,k]=ConJgradMethod(fun,gfun,x0)

%功能:用共轭梯度法求无约束问题 mini f(x)

%输入:fun,gfun分别是目标函数和梯度,x0是初始点

%输出:x,val分别是近似最优点和最优值,k表示迭代次数

k=0;

maxk=5000;

rho=0.6;

sigma=0.4;

e=1e-5;%精度

n=length(x0);

while(k<maxk)

g=feval(gfun,x0);%求梯度

itern=k-(n+1)*floor(k/(n+1));%用于重新开始

itern=itern+1;

%计算搜索方向

if(itern==1)

d=-g;

else

beta=(g'*g)/(g0'*g0);

d=-g+beta*d0;

gd=g'*d; %当搜索方向不是下降方向时,插入负梯度方向作为搜索方向

if(gd>=0.0)

d=-g;

end

end

if(norm(g)<=e)

break;

end

m=0;

mk=0;

while(m<20)

if(feval(fun,x0+rho^m*d)<feval(fun,x0)+sigma*rho^m*g'*d)

mk=m;

break;

end

m=m+1;

end

x0=x0+d*rho^mk;

val=feval(fun,x0);

g0=g;

d0=d;

k=k+1;

end

x=x0;

val=fun(x);%就是f在x处的函数值

end

function[x,val,k]=ConJgradMethod(fun,gfun,x0)

%功能:用共轭梯度法求无约束问题 mini f(x)

%输入:fun,gfun分别是目标函数和梯度,x0是初始点

%输出:x,val分别是近似最优点和最优值,k表示迭代次数

k=0;

maxk=5000;

rho=0.6;

sigma=0.4;

e=1e-5;%精度

n=length(x0);

while(k<maxk)

g=feval(gfun,x0);%求梯度

itern=k-(n+1)*floor(k/(n+1));%用于重新开始

itern=itern+1;

%计算搜索方向

if(itern==1)

d=-g;

else

beta=(g'*g)/(g0'*g0);

d=-g+beta*d0;

gd=g'*d; %当搜索方向不是下降方向时,插入负梯度方向作为搜索方向

if(gd>=0.0)

d=-g;

end

end

if(norm(g)<=e)

break;

end

m=0;

mk=0;

while(m<20)

if(feval(fun,x0+rho^m*d)<feval(fun,x0)+sigma*rho^m*g'*d)

mk=m;

break;

end

m=m+1;

end

x0=x0+d*rho^mk;

val=feval(fun,x0);

g0=g;

d0=d;

k=k+1;

end

x=x0;

val=fun(x);%就是f在x处的函数值

end

非梯度搜索算法Nelder_Mead方法

function [x,fval,flag,kcnt]=Nelder_Mead(fun,x0, max_time,eps)

% realization of Nelder-Mead Simplex

% [x fval flag] = nm_min(f , x0 , max_time , eps)

% max_time:最大迭代次数,默认10000

% eps:精度,默认1e-5

%参数检查

kcnt =0;

if nargin < 2

error('请至少传入函数和初始点');

end

% 默认值设置

if nargin < 3

max_time = 10000 ;

end

if nargin < 4

eps = 1e-5 ;

end

n = length(x0) ;%变量个数

x0 = x0(:) ; %把x0变成列向量

% vx是单纯形矩阵,n行n+1列

% 即n维空间中的n+1个点

vx = x0 ;

% vf是函数值,对应每个列向量

vf = feval(fun , x0) ;

% 构造初始单纯形

% 分别对每个维度按一定比例扩张,以形成一个高维体

for i = 1:n

x = x0 ;

if abs(x(i)) < eps

x(i) = x(i) + 0.005 ;%该维度上为0时,人工指定加上一定值

else

x(i) = x(i) * 1.05 ;

end

vx = [vx , x] ;

vf = [vf , feval(fun,x)] ;

end

%排序,做迭代准备

[vf,index] = sort(vf) ;

vx = vx(:,index) ;

%开始迭代

while max_time > 0

%各个点在各维度上的差值足够小

if (max(max(abs(vx(:,2:n+1)-vx(:,1:n)))) < eps)

break

end

%三个考察点,最优,次差,最差

best = vx(: , 1) ;

fbest = vf(1) ;

soso = vx(: , n) ;

fsoso = vf(n) ;

worst = vx(: , n+1) ;

fworst = vf(n+1) ;

center = sum(vx(:, 1:n) , 2) ./ n ;

r = 2 * center - worst ;%反射点

fr = feval(fun , r) ;

if fr < fbest

% 比最好的结果还好,说明方向正确,考察扩展点,

% 以期望更多的下降

e = 2 * r - center ; %扩展点

fe = feval(fun , e) ;

% 在扩展点和反射点中选择较优者去替换最差点

if fe < fr

vx(: , n+1) = e ;

f(: , n+1) = fe ;

else

vx(: , n+1) = r ;

vf(: , n+1) = fr ;

end

else

if fr < fsoso

%比次差结果好,能够改进

vx(: , n+1) = r ;

vf(: , n+1) = fr ;

else

% 比次差结果坏,应该考察压缩点当压缩点无法

% 得到更优值的时候,考虑收缩

shrink = 0 ;

if fr < fworst

%由于r点更优所以向r点的方向找压缩点

c = ( r + center ) ./ 2 ;

fc = feval(fun , c) ;

if fc < fr %确定从r压缩向c可以改进

vx(: , n+1) = c ;

vf(: , n+1) = fc ;

else

%否则的话,准备进行收缩

shrink = true ;

end

else

c = (worst + center) ./ 2 ;

fc = feval(fun , c) ;

if fc < fr %确定从r压缩向c可以改进

vx(: , n+1) = c ;

vf(: , n+1) = fc ;

else %否则的话,准备进行收缩

shrink = 1 ;

end

end %fr < fworst

if shrink %压缩点并非更优,考虑所有点向best收缩

for i = 2:n+1

vx(: , i) = ( vx(i) + best ) ./ 2 ;

vf(: , i) = feval(fun , vx(: , i)) ;

end

end %shrink

end %fr < fsoso

end %fr < fbest

[vf,index] = sort(vf) ;

vx = vx(:,index) ;

max_time = max_time - 1 ;

kcnt = kcnt+1;

end %while max_time > 0

x = vx(: , 1) ;

fval = vf(: , 1);

if max_time > 0

flag = 1 ;

else

flag = 0 ;

end

end

%Nelder-Mead方法实现代码

% [x fval flag] = Nelder_Mead(f , x0 , max_time , eps)

% max_time:最大迭代次数 eps:精度

f1 =@(x) (x(1).^2+(x(2)-2).^2);

numx1 = [0,0];

[nmx1, fval_1, flag,kcnt1] = Nelder_Mead(f1, numx1);

disp('迭代次数:kcnt1=')

disp(kcnt1)

disp(['最优解:nmx1 = '])

disp(nmx1)

disp(['此时: Y = f(x) = '])

disp(fval_1)

f2 =@(x) (2*x(1).^2+x(2).^2-10*x(1)*x(2)+4*x(1)*(x(2)^3));

numx2 = [1,1];

[nmx2, fval_2, flag,kcnt2] = Nelder_Mead(f2, numx2);

disp('迭代次数:kcnt2=')

disp(kcnt2)

disp(['最优解:nmx2 = '])

disp(nmx2)

disp(['此时: Y = f(x) = '])

disp(fval_2)

%-----------单形替换法---主函数-----------%

function [DanXingTiHuanFa_x, DanXingTiHuanFa_xf, DanXingTiHuanFa_n] = DanXingTiHuanFa(E, x0)

n = 0; %迭代次数统计

N = length(x0); %获取x0的维数

%将x0存储到X中的第n+1列

X(:, N+1) = x0;

%x0的函数值,存储在F_X的第n+1位中

F_X(N+1) = func(x0);

for i = 1:N

d = 2*rand([N,1])-1; %根据x0的维数生成[-1,1]之间的随机向量d

d = d / norm(d); %单位化

X(:, i) = x0 + 1.3 * d; %求xi,存到X的第i列

F_X(i) = func(X(:, i)); %求n个顶点对应函数值,存储在FX中的对应位置

end

while 1

%设求和初值为第N+1个

Xsum = X(:, N+1);

%给最大最小值一个初值用于比较,初值为x0的函数值

F_Xmin = F_X(N+1);

F_Xmax = F_X(N+1);

F_Xmin_N = N+1;

F_Xmax_N = N+1;

for i = 1:N

%找函数值的最大、最小值

if F_X(i) < F_Xmin

F_Xmin = F_X(i);

F_Xmin_N = i; %记录最小函数值的索引位置

end

if F_X(i) > F_Xmax

F_Xmax = F_X(i);

F_Xmax_N = i; %记录最大函数值的索引位置

end

%所有x(n+1个)求和

Xsum = Xsum + X(:, i);

end

%得到最值点

Xmin = X(:, F_Xmin_N);

Xmax = X(:, F_Xmax_N);

%求形心

Xsum = Xsum - Xmax; %在n+1个的求和中,剔除最大点

Xavg = Xsum / N; %求出形心点

%收敛条件

%if 1/3 * sqrt((F_Xmin - F_Xmax)^2 + (F_Xmin - func(Xavg))^2 + (func(Xavg) - F_Xmax)^2) <= E

if 1/3 * (norm(Xmin-Xmax) + norm(Xavg-Xmax) + norm(Xmin-Xavg)) <= E

break

end

n = n+1;

%反射计算

xr = Xavg + 1.3*(Xavg - Xmax); %反射计算,反射计算取1.3(或取1)

%延伸计算

if func(xr) < F_Xmax

xe = xr + 1.3*(xr-Xavg); %延伸系数这里取1.3(1-2之间)

if func(xe) < func(xr)

X(:, F_Xmax_N) = xe;

F_X(F_Xmax_N) = func(xe);

continue %比较函数值大小

else

X(:, F_Xmax_N) = xr;

F_X(F_Xmax_N) = func(xr);

continue

end

else

%收缩计算

xs = Xmax + 0.5*(Xavg - Xmax); %收缩系数这里取0.5(0.5-0.7)

if func(xs) < F_Xmax

X(:, F_Xmax_N) = xs;

F_X(F_Xmax_N) = func(xs);

continue

else

%缩边计算

for i=1:N+1

X(:, i) = Xmin + 0.5*(X(:, i)-Xmax);

F_X(i) = func(X(:, i));

end

end

end

end

%-----------返回计算结果-----------%

DanXingTiHuanFa_x = Xmin;

DanXingTiHuanFa_xf = func(Xmin);

DanXingTiHuanFa_n = n;

end

%-----------调用单形替换法2-----------%

x0 = [2;1];

E = 1e-8;

[DanXingTiHuanFa_x, DanXingTiHuanFa_xf, DanXingTiHuanFa_n] = DanXingTiHuanFa(E, x0);

fprintf('单形替换法:\n')

fprintf('极值点为:[%f, %f, %f, %f] \n',DanXingTiHuanFa_x)

fprintf('极值为:%f\n',DanXingTiHuanFa_xf)

fprintf('迭代次数为:%d\n\n',DanXingTiHuanFa_n)

function f=func(x)

f = 2*x(1).^3+4*x(1)*x(2).^3-10*x(1)*x(2)+x(2).^2;

end

单形替换法:

极值点为:[1.001558, 0.833451, 极值为:-3.324089

迭代次数为:69

Powell法

function [x_min,f_min] = PowellImprovedMethod(func,x0,options)

%Powell

if nargin<3

options.tol = 1e-12;

options.iterNum = 1000;

options.bracketMethod = '';

options.linearSrcMethod = '';

options.plot2.Flag = 0;

options.plot2.x = [];

options.plot2.y = [];

options.plot2.z = [];

end

tol = options.tol;

iterNum = options.iterNum;

plot2 = options.plot2;

if length(x0)~=2

plot2.Flag = 0;

end

x_min = x0;

f_min = func(x0);

E = eye(length(x0));

xk = x0;

f_pre = func(xk);

f = f_pre+1e10;

if plot2.Flag == 1

figure,subplot(1,2,1),axis equal, hold on;

contourf(plot2.x,plot2.y,plot2.z,30,'linestyle','-')

colormap('jet');

tempf =f_pre;

end

while(iterNum)

x1k = xk;

deltaM_max = -inf;

di = 1;

for i=1:1:length(x0)

d = E(:,i);

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

deltaM = func(xk) - f;

if deltaM_max<deltaM

deltaM_max = deltaM;

di = i;

end

xk = xk1;

iterNum = iterNum - 1;

end

x2k = 2.*xk-x1k;

f0 = f_pre;f2 = f;f3 = func(x2k);

if (f3<f0&&(f0-2*f2+f3)*(f0-f2-deltaM_max)^2<0.5*deltaM_max*(f0-f3)^2 )

d = xk-x1k;

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

E(:,di) = [];

E = [E,d];

else

if f2<f3

xk1 = xk;

f =func(xk1);

else

xk1 = x2k;

f =func(xk1);

end

end

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

xk = xk1;

iterNum = iterNum - 1;

if abs(f-f_pre)<tol||iterNum == 0

x_min = xk;

f_min = f;

break;

else

f_pre = f;

end

E;

end

functionx_min=GoldSection(func,a,b,toll,plotFlag)

%确保[a,b]为单峰区间

if nargin < 5

plotFlag = 0;

end

if nargin < 4

toll = 10^(-8);

end

if (a>b)

[a,b]=swap(a,b);

end

GOLD = (sqrt(5)-1)/2;

%step 1 初始化x1 x2

x1 = a+(1-GOLD)*(b-a);

x2 = a+GOLD*(b-a);

fx1 = func(x1);

fx2 = func(x2);

if plotFlag == 1

figH = figure();

abInt = (b-a)/100;

xx = a:abInt:b;

for i = 1:1:length(xx)

yy(i) = func(xx(i));

end

plot(xx,yy,'-b',a,func(a),'-*r',b,func(b),'-*g');

hold on;

end

%step 2 ,迭代缩短区间

while abs(a-b)>toll

if fx1<fx2 %如果fx1<fx2,则说明极小点在a x2区间内

b = x2;

x2 = x1;

fx2 = fx1;

x1 = a + (1-GOLD)*(b-a);

fx1 = func(x1);

elseif fx1 == fx2

a = x1;

b = x2;

x1 = a+(1-GOLD)*(b-a);

x2 = a+GOLD*(b-a);

fx1 = func(x1);

fx2 = func(x2);

else%反之,则说明极小点在x1 b之间

a = x1;

x1 = x2;

fx1 = fx2;

x2 = a + GOLD*(b-a);

fx2 = func(x2);

end

if plotFlag == 1

pause(0.15);

plot(a,func(a),'-*r',b,func(b),'-*g');

end

end

x_min = (a+b)/2;

end

function[a,b]=swap(a,b)

t=a;

a=b;

b=t;

end

functiona=SignFunc(a,x)

a = abs(a);

if x<0

a = -a;

end

end

XX7_1 = [1 1]'; %初值是列向量

[x_min1,f_min1] = PowellImprovedMethod(@fun6,XX7_1);

disp(['最优解:x_min1 = '])

disp(x_min1)

disp(['此时: Y = f(x) =f_min1 '])

disp(f_min1)

function [x_min,f_min]=ConjugateDirectionMethod(func,x0,options)

%Powell

if nargin<3

options.tol = 1e-12;

options.iterNum = 1000;

options.bracketMethod = '';

options.linearSrcMethod = '';

options.plot2.Flag = 0;

options.plot2.x = [];

options.plot2.y = [];

options.plot2.z = [];

end

tol = options.tol;

iterNum = options.iterNum;

plot2 = options.plot2;

if length(x0)~=2

plot2.Flag = 0;

end

x_min = x0;

f_min = func(x0);

E = eye(length(x0));

%E = [1,0;-1,1];

xk = x0;

f_pre = func(xk);

f = f_pre+1e10;

if plot2.Flag == 1

figure,subplot(1,2,1),axis equal, hold on;

contourf(plot2.x,plot2.y,plot2.z,30,'linestyle','-')

colormap('jet');

tempf =f_pre;

end

while(iterNum)

x1k = xk;

for i=1:1:length(x0)

d = E(:,i);

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

xk = xk1;

iterNum = iterNum - 1;

end

d = xk-x1k;

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

xk = xk1;

iterNum = iterNum - 1;

if abs(f-f_pre)<tol||iterNum == 0

x_min = xk;

f_min = f;

break;

else

f_pre = f;

end

E(:,1) = [];

E = [E,d];

end

function [x_min,f_min]=ConjugateDirectionMethod(func,x0,options)

%Powell

if nargin<3

options.tol = 1e-12;

options.iterNum = 1000;

options.bracketMethod = '';

options.linearSrcMethod = '';

options.plot2.Flag = 0;

options.plot2.x = [];

options.plot2.y = [];

options.plot2.z = [];

end

tol = options.tol;

iterNum = options.iterNum;

plot2 = options.plot2;

if length(x0)~=2

plot2.Flag = 0;

end

x_min = x0;

f_min = func(x0);

E = eye(length(x0));

%E = [1,0;-1,1];

xk = x0;

f_pre = func(xk);

f = f_pre+1e10;

if plot2.Flag == 1

figure,subplot(1,2,1),axis equal, hold on;

contourf(plot2.x,plot2.y,plot2.z,30,'linestyle','-')

colormap('jet');

tempf =f_pre;

end

while(iterNum)

x1k = xk;

for i=1:1:length(x0)

d = E(:,i);

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

xk = xk1;

iterNum = iterNum - 1;

end

d = xk-x1k;

lamdaFuncH = @(lamda)(func(xk+lamda.*d));

[a,b,c] = bracketAdvanceBack(lamdaFuncH,0,0.01);

lamda = GoldSection(lamdaFuncH,a,c,1e-12);

xk1 = xk + lamda.*d;

f =func(xk1);

if plot2.Flag == 1

tempf = [tempf,f];

subplot(1,2,1),plot([xk(1),xk1(1)],[xk(2),xk1(2)],'-o','LineWidth',2);

subplot(1,2,2),plot(tempf,'-b.','LineWidth',2); grid on;

axis([0,60,-10,func(x0)]);

xlabel('Step');

ylabel('Objective Function Value');

end

xk = xk1;

iterNum = iterNum - 1;

if abs(f-f_pre)<tol||iterNum == 0

x_min = xk;

f_min = f;

break;

else

f_pre = f;

end

E(:,1) = [];

E = [E,d];

end

XX7_0 = [1 1]'; %初值是列向量

[x_min,f_min] = ConjugateDirectionMethod(@fun6,XX7_0);

disp(['最优解:x_min = '])

disp(x_min)

disp(['此时: Y = f(x) =f_min1 '])

disp(f_min)

推荐使用该方法

%-----------鲍威尔法---主函数-----------%

function [BaoWeiErFa_x,BaoWeiErFa_xf,BaoWeiErFa_n]=BaoWeiErFa(E, X0)

n = length(X0); %获取x0的维数

m = 0; %迭代次数统计

%定义方向作为列向量存储的矩阵

D = zeros(n,n); %根据维数n定义一个1行n列的方向向量基础

for i = 1:n

D(i, i) = 1; %把第n位置为1

end

%求X0的函数值

F_X0 = func(X0);

while 1

m = m + 1;

%从X0经过n次坐标轮换一维搜索得到X1

X0_s = X0;

FDiff_max = 0;

for i = 1:n

[X1, F_X1] = YiWeiSouSuo(X0_s, D(:,i), E);

FDiff = func(X0_s) - func(X1); %计算相邻两点函数值的差

if FDiff > FDiff_max %找出函数值最大下降量

FDiff_max = FDiff;

FDiff_max_n = i;

end

X0_s = X1;

end

%求反射点

Xr = 2 * X1 - X0;

F_Xr = func(Xr);

if (F_Xr < F_X0) && ((F_X0 + F_Xr - 2*F_X1) * (F_X0 - F_X1 - FDiff_max)^2 < 0.5 * (F_X0 - F_Xr))

%改变方向

%将FDiff_max_n后面的数整体往前一位移动一个

for i = FDiff_max_n+1:n

D(:,i-1) = D(:,i);

end

%将新方向放到最后一位

D(:, n) = X1 - X0;

%求下一轮的起始点

[X0_1, F_X0_1] = YiWeiSouSuo(X1, X1 - X0, E);

else

%不改变方向,取Xr与X1中函数值较小的为下一轮起点

if F_Xr < F_X1

X0_1 = Xr;

F_X0_1 = F_Xr;

else

X0_1 = X1;

F_X0_1 = F_X1;

end

end

if norm(X1-X0) < E

break

else

X0 = X0_1;

F_X0 = F_X0_1;

end

end

BaoWeiErFa_x = X0_1;%最优点

BaoWeiErFa_xf = F_X0_1;%最优点对应函数值

BaoWeiErFa_n = m; %迭代次数

end

%-----------黄金分割法---主函数-----------%

function [HJFGF_x, HJFGF_xf, HJFGF_n] = HuangJinFenGeFa(a,b,w)

k=(5^(1/2)-1)/2; %(5^(1/2)-1)/2约等于0.618

c = a + (1-k) * (b-a);

d = a + k * (b-a);

n = 0; %迭代次数统计

%缩小区间到精度要求

while norm(a-b) > w

n = n + 1;

if func(c) < func(d)

a = a;

b = d;

d = c;

c = a + (1-k) * (b-a);

elseif func(c) > func(d)

a = c;

c = d;

b = b;

d = a + k * (b-a);

else

a = c;

b = d;

c = a + (1-k) * (b-a);

d = a + k * (b-a);

end

end

%比较区间端点值大小

if func(a) < func(b)

HJFGF_x = a;

HJFGF_xf= func(a);

else

HJFGF_x = b;

HJFGF_xf = func(b);

end

HJFGF_n = n;

%-----------进退法---主函数-----------%

function [JT_a, JT_b, JT_c] = JinTuiFa(x0,a,d) %x0为初值,a为初始步长,d为方向

%计算x1

x1 = x0 + a * d;

%前进后退标志符,n=1意味着上一次是前进

n=1;

%判断前进还是后退运算

if func(x1) > func(x0)

JT_c = x1;

[JT_a, JT_b]=Back(x0,x1,a,n,d);

else

JT_a = x0;

[JT_b, JT_c]=Front(x0,x1,a,n,d);

end

%-----------后退运算---子函数-----------%

function [B_a, B_b] = Back(x0,x1,a,n,d)

if n == 1

n = 0;

else

a = 2 * a;

end

x2 = x0 - a * d;

if func(x2) < func(x0)

x1 = x0;

x0 = x2;

[B_a, B_b] = Back(x0,x1,a,n,d); %递归

else

B_a = x2;

B_b = x1;

end

%-----------前进运算---子函数-----------%

function [F_a, F_b] = Front(x0,x1,a,n,d)

if n == 0

n = 1;

else

a = 2 * a;

end

x2 = x1 + a * d;

if func(x2) < func(x1)

x0 = x1;

x1 = x2;

[F_a, F_b] = Front(x0,x1,a,n,d);

else

F_a = x0;

F_b = x2;

end

%-----------整合一维搜索---子函数-----------%

function[YiWeiSouSuo_x,YiWeiSouSuo_fx]= YiWeiSouSuo(Start,Dir,E)

[JT_a, JT_b, JT_c] = JinTuiFa(Start, 0.01, Dir);

[HJFGF_x, HJFGF_f, HJFGF_n] = HuangJinFenGeFa(JT_a, JT_c, E);

YiWeiSouSuo_x = HJFGF_x;

YiWeiSouSuo_fx = HJFGF_f;

end

function f=func(x)

f = 2*x(1).^3+4*x(1)*x(2).^3-10*x(1)*x(2)+x(2).^2;

end

%-----------调用鲍威尔法-----------%

x0 = [2;1];%初值

E = 1e-8;

[BaoWeiErFa_x, BaoWeiErFa_xf, BaoWeiErFa_n] = BaoWeiErFa(E, x0);

fprintf('鲍威尔法:\n')

fprintf('极值点为:[%f, %f]\n',BaoWeiErFa_x)

fprintf('极值为:%f\n',BaoWeiErFa_xf)

fprintf('迭代次数为:%d\n\n',BaoWeiErFa_n)

鲍威尔法:

极值点为:[1.001558, 0.833451]

极值为:-3.324089

迭代次数为:4