scratch lenet(7): Computing the number of trainable parameters and connections in C

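1. Purpose

Following the network structure described in LeCun-98.pdf, the original LeNet-5 paper, compute the number of trainable parameters and the number of connections of every layer, and check that they match the figures reported in the paper.

Many LeNet-5 implementations found online merely copy someone else's existing project and lack the spirit of reproducing a paper by implementing it by hand. For a classic paper like LeNet-5, matching the paper's numbers exactly is achievable.

The implementation is written in C. It does not depend on third-party libraries such as PyTorch or Caffe, and it uses no C++ features.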

2. Layer C1

Formulas

C1 is a convolutional layer.

Number of trainable parameters

$ \text{nk} * (\text{kh} * \text{kw} * \text{kc} + 1) $

where:

- $\text{nk}$: number of kernels
- $\text{kh}$, $\text{kw}$: kernel height and width
- $\text{kc}$: number of channels of each kernel
- 1: bias

Number of connections

$ (\text{kh} * \text{kw} * \text{kc} + 1) * \text{out}_h * \text{out}_w * \text{out}_c $

where:

- $\text{kh}$, $\text{kw}$: kernel height and width
- $\text{kc}$: number of channels of each kernel
- 1: bias
- $\text{out}_h$, $\text{out}_w$: height and width of the output feature map
- $\text{out}_c$: number of channels of the output feature map, which is the number of kernels $\text{nk}$
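As a quick check of both formulas, the C1 values (nk = 6, kh = kw = 5, kc = 1, out_h = out_w = 28, out_c = 6) can be plugged in by hand. The output size 28 is taken here to be 32 - 5 + 1, which assumes a stride of 1 and no padding:

$$ 6 * (5 * 5 * 1 + 1) = 6 * 26 = 156 $$

$$ (5 * 5 * 1 + 1) * 28 * 28 * 6 = 26 * 4704 = 122304 $$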

Code

    typedef struct ConvHyper
    {
        int in_h;
        int in_w;
        int in_c;
        int kh;
        int kw;
        int number_of_kernel;
        int out_h;
        int out_w;
        int out_c;
    } ConvHyper;

    ConvHyper C1;
    double* C1_kernel[6];
    double C1_bias[6];
    double* C1_output[6];

    void init_lenet()
    {
        // C1
        {
            C1.in_h = 32;
            C1.in_w = 32;
            C1.in_c = 1;
            C1.kh = 5;
            C1.kw = 5;
            C1.number_of_kernel = 6;
            C1.out_h = 28;
            C1.out_w = 28;
            C1.out_c = C1.number_of_kernel;

            // unpack
            int kh = C1.kh;
            int kw = C1.kw;
            int in_channel = C1.in_c;
            int number_of_kernel = C1.number_of_kernel;
            double** kernel = C1_kernel;
            int out_h = C1.out_h;
            int out_w = C1.out_w;
            double* bias = C1_bias;
            int in_c = C1.in_c;
            int out_c = C1.out_c;

            int fan_in = get_fan_in(in_channel, kh, kw);
            int fan_out = get_fan_out(number_of_kernel, kh, kw);

            // allocate and initialize each kernel, its output map and its bias
            for (int k = 0; k < number_of_kernel; k++)
            {
                kernel[k] = (double*)malloc(kh * kw * sizeof(double));
                init_kernel(kernel[k], kh, kw, fan_in, fan_out);
                C1_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
                bias[k] = 0.0;
            }

            int num_of_train_param = number_of_kernel * (kh * kw * in_c + 1);
            int num_of_conn = (kh * kw * in_c + 1) * out_h * out_w * out_c;

            // values reported in the paper
            int expected_num_of_train_param = 156;
            int expected_num_of_conn = 122304;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer C1 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer C1 wrong\n");
            }
        }

        ...
    }

3. Layer S2

Formulas

Number of trainable parameters

$ \text{nk} * 2 $

where:

- $\text{nk}$: number of kernels
- 2: one bias and one coefficient (coeff)

The bias is straightforward: each kernel has one bias. coeff is a multiplicative coefficient, and each kernel has one of those as well. In the paper's words:

"The four inputs to a unit in S2 are added, then multiplied by a trainable coefficient, and added to a trainable bias."

Number of connections

$ (\text{kh} * \text{kw} + 1) * \text{kc} * \text{out}_h * \text{out}_w $

where:

- $\text{kh}$, $\text{kw}$: kernel height and width
- 1: bias
- $\text{kc}$: number of kernel channels, which also equals the number of output channels
- $\text{out}_h$, $\text{out}_w$: height and width of the output feature map
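To make the $(\text{kh} * \text{kw} + 1)$ term concrete, here is a minimal sketch of one S2 output unit, following the sentence quoted from the paper. The function name `subsample_unit`, its parameters, and the row-major layout are assumptions made for illustration; they are not taken from the original code, and the activation function is left out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: computes one S2 output unit at (oy, ox).
 * `in` is a row-major feature map that is `in_w` values wide.
 * The window is 2x2 with stride 2, as in S2. */
double subsample_unit(const double *in, int in_w, int oy, int ox,
                      double coeff, double bias)
{
    const int kh = 2, kw = 2, stride = 2;
    double sum = 0.0;

    assert(in != NULL && in_w >= kw);

    /* kh * kw = 4 connections to the input window */
    for (int dy = 0; dy < kh; dy++)
    {
        for (int dx = 0; dx < kw; dx++)
        {
            sum += in[(oy * stride + dy) * in_w + (ox * stride + dx)];
        }
    }

    /* one coefficient shared by the whole map, plus 1 bias connection */
    return sum * coeff + bias;
}
```

Each output unit therefore touches 4 inputs plus the bias, which gives 5 connections per unit, while the whole feature map shares a single `coeff` and a single `bias`.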

Code

    typedef struct SubsampleHyper
    {
        int in_h;
        int in_w;
        int in_c;
        int kh;
        int kw;
        int number_of_kernel;
        int stride_h;
        int stride_w;
        int out_h;
        int out_w;
        int out_c;
    } SubsampleHyper;

    SubsampleHyper S2;
    double* S2_output[6];
    double S2_coeff[6];
    double S2_bias[6];

    void init_lenet()
    {
        // S2
        {
            S2.in_h = C1.out_h;
            S2.in_w = C1.out_w;
            S2.in_c = C1.out_c;
            S2.kh = 2;
            S2.kw = 2;
            S2.number_of_kernel = C1.number_of_kernel;
            S2.stride_h = 2;
            S2.stride_w = 2;
            S2.out_h = 14;
            S2.out_w = 14;
            S2.out_c = S2.in_c;

            // unpack
            int number_of_kernel = S2.number_of_kernel;
            int out_h = S2.out_h;
            int out_w = S2.out_w;
            int kh = S2.kh;
            int kw = S2.kw;
            int in_c = S2.in_c;

            // allocate one output map per kernel
            for (int k = 0; k < number_of_kernel; k++)
            {
                S2_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
            }

            int num_of_train_param = number_of_kernel * 2; // #bias + #coeff
            int num_of_conn = (kh * kw + 1) * in_c * out_h * out_w;

            // values reported in the paper
            int expected_num_of_train_param = 12;
            int expected_num_of_conn = 5880;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer S2 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer S2 wrong\n");
            }
        }

        ...
    }

4. C3 层

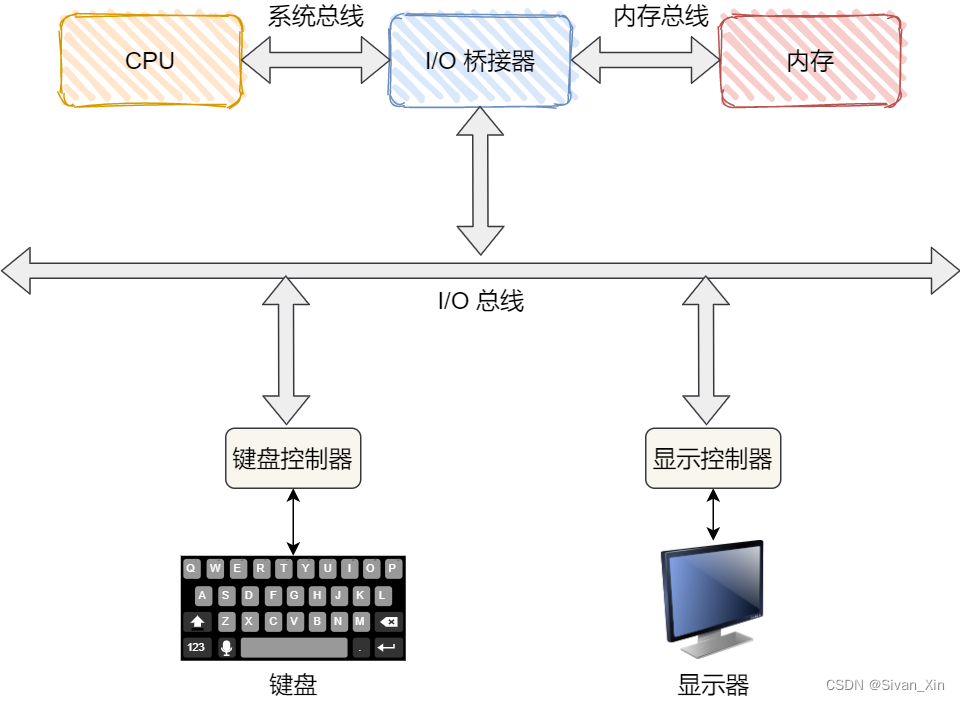

4.1 连接表

这一层虽然是“卷积层”,但是和C1层又不太一样。对于C1层,每个 kernel 的通道数量等于 input feature map 的通道数量;对于C3层, 每个 kernel 的数量小于等于 input feature map 的通道数量, 具体是多少, 展示在了如下的表格中:

图中 X 表示有连接。

C3 这层有16个 kernel:

- 对于第0个kernel, 它有3个通道,和 input feature map 的0、1、2通道做卷积。

- 对于第6个kernel, 它有4个通道,和 input feature map 的第0、1、2、3通道做卷积。

- 对于第15个kernel,它有6个通道,和 input feature map 的所有通道做卷积。

或者也可以这样理解: 每个 kernel 有6个通道,但是做卷积时, 第0个kernel只允许用0、1、2通道,第6个 kernel 只允许用0、1、2、3通道,以此类推。

换言之,我们需要根据论文的这张表, 确定出 C3 层的16个 kernel 各自的通道数量

    bool X = true;
    bool O = false;
    // rows: input channels 0..5, columns: kernels 0..15
    bool connection_table[6 * 16] =
    {
    //  0  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15
        X, O, O, O, X, X, X, O, O, X, X, X, X, O, X, X,
        X, X, O, O, O, X, X, X, O, O, X, X, X, X, O, X,
        X, X, X, O, O, O, X, X, X, O, O, X, O, X, X, X,
        O, X, X, X, O, O, X, X, X, X, O, O, X, O, X, X,
        O, O, X, X, X, O, O, X, X, X, X, O, X, X, O, X,
        O, O, O, X, X, X, O, O, X, X, X, X, O, X, X, X,
    };

    int num_of_train_param = 0;

    // kc[j]: number of input channels connected to kernel j
    int kc[16] = { 0 };
    for (int i = 0; i < in_c; i++)
    {
        for (int j = 0; j < out_c; j++)
        {
            int idx = i * out_c + j;
            kc[j] += connection_table[idx];
        }
    }

    // Equivalent to the following result:
    //int kc[16] = {
    //    3, 3, 3, 3,
    //    3, 3, 4, 4,
    //    4, 4, 4, 4,
    //    4, 4, 4, 6
    //};
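The per-kernel channel lists described in the bullets (kernel 0 uses channels 0, 1, 2, and so on) can be read off the same table. The sketch below is one possible way to do that. The names `c3_table` and `c3_input_channels` are hypothetical and do not appear in the original code, and the table is declared at file scope with enum constants so that it can be `static const`.

```c
#include <assert.h>
#include <stdbool.h>

#define C3_IN_C  6
#define C3_OUT_C 16

enum { O = 0, X = 1 };

/* Same table as above: rows are input channels, columns are kernels. */
static const bool c3_table[C3_IN_C * C3_OUT_C] = {
    X, O, O, O, X, X, X, O, O, X, X, X, X, O, X, X,
    X, X, O, O, O, X, X, X, O, O, X, X, X, X, O, X,
    X, X, X, O, O, O, X, X, X, O, O, X, O, X, X, X,
    O, X, X, X, O, O, X, X, X, X, O, O, X, O, X, X,
    O, O, X, X, X, O, O, X, X, X, X, O, X, X, O, X,
    O, O, O, X, X, X, O, O, X, X, X, X, O, X, X, X,
};

/* Hypothetical helper: writes the input channels used by kernel k into
 * `channels` (in ascending order) and returns how many there are. */
int c3_input_channels(int k, int channels[C3_IN_C])
{
    int n = 0;

    assert(k >= 0 && k < C3_OUT_C);

    for (int i = 0; i < C3_IN_C; i++)
    {
        if (c3_table[i * C3_OUT_C + k])
        {
            channels[n++] = i;
        }
    }
    return n;
}
```

A forward pass for C3 could loop over only the channels returned for each kernel, which is the "kernel 0 may only use channels 0, 1, 2" reading of the table.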

4.2 Formulas

Number of trainable parameters

$ \sum_{k}(\text{kh} * \text{kw} * \text{kc}[k] + 1) $

where:

- $\text{kh}$, $\text{kw}$: kernel height and width
- $\text{kc}[k]$: number of channels of kernel $k$
- $\sum_{k}$: sum over all kernels $k$
- 1: bias

Number of connections

$ \sum_{k}(\text{kh} * \text{kw} * \text{kc}[k] + 1) * (\text{out}_h * \text{out}_w) $

where:

- $\text{kh}$, $\text{kw}$: kernel height and width
- $\text{kc}[k]$: number of channels of kernel $k$
- $\sum_{k}$: sum over all kernels $k$
- 1: bias
- $\text{out}_h$, $\text{out}_w$: height and width of the output feature map
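Worked out by hand with the channel counts from the connection table (kernels 0 to 5 have 3 channels, kernels 6 to 14 have 4, kernel 15 has 6) and kh = kw = 5, out_h = out_w = 10:

$$ \sum_{k}(5 * 5 * \text{kc}[k] + 1) = 6 * (75 + 1) + 9 * (100 + 1) + 1 * (150 + 1) = 456 + 909 + 151 = 1516 $$

$$ 1516 * (10 * 10) = 151600 $$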

4.3 Code

    ConvHyper C3;
    double* C3_kernel[16];
    double C3_bias[16];
    double* C3_output[16];

    void init_lenet()
    {
        ...

        // C3
        {
            C3.in_h = S2.out_h;
            C3.in_w = S2.out_w;
            C3.in_c = S2.out_c;
            C3.kh = 5;
            C3.kw = 5;
            C3.number_of_kernel = 16;
            C3.out_h = 10;
            C3.out_w = 10;
            C3.out_c = C3.number_of_kernel;

            // unpack
            int kh = C3.kh;
            int kw = C3.kw;
            int in_channel = C3.in_c;
            int number_of_kernel = C3.number_of_kernel;
            double** kernel = C3_kernel;
            int out_h = C3.out_h;
            int out_w = C3.out_w;
            double* bias = C3_bias;
            int in_c = C3.in_c;
            int out_c = C3.out_c;

            int fan_in = get_fan_in(in_channel, kh, kw);
            int fan_out = get_fan_out(number_of_kernel, kh, kw);

            // NOTE: this allocates kh * kw weights per kernel, while the
            // parameter count below assumes kh * kw * kc[k] weights per kernel.
            for (int k = 0; k < number_of_kernel; k++)
            {
                kernel[k] = (double*)malloc(kh * kw * sizeof(double));
                init_kernel(kernel[k], kh, kw, fan_in, fan_out);
                C3_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
                bias[k] = 0.0;
            }

            bool X = true;
            bool O = false;
            // rows: input channels 0..5, columns: kernels 0..15
            bool connection_table[6 * 16] =
            {
            //  0  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15
                X, O, O, O, X, X, X, O, O, X, X, X, X, O, X, X,
                X, X, O, O, O, X, X, X, O, O, X, X, X, X, O, X,
                X, X, X, O, O, O, X, X, X, O, O, X, O, X, X, X,
                O, X, X, X, O, O, X, X, X, X, O, O, X, O, X, X,
                O, O, X, X, X, O, O, X, X, X, X, O, X, X, O, X,
                O, O, O, X, X, X, O, O, X, X, X, X, O, X, X, X,
            };

            int num_of_train_param = 0;

            // kc[j]: number of input channels connected to kernel j
            int kc[16] = { 0 };
            for (int i = 0; i < in_c; i++)
            {
                for (int j = 0; j < out_c; j++)
                {
                    int idx = i * out_c + j;
                    kc[j] += connection_table[idx];
                }
            }
            //int kc[16] = {
            //    3, 3, 3, 3,
            //    3, 3, 4, 4,
            //    4, 4, 4, 4,
            //    4, 4, 4, 6
            //};

            for (int k = 0; k < out_c; k++)
            {
                num_of_train_param += (kh * kw * kc[k] + 1);
            }

            int num_of_conn = 0;
            for (int k = 0; k < out_c; k++)
            {
                num_of_conn += (kh * kw * kc[k] + 1) * out_h * out_w;
            }

            // values reported in the paper
            int expected_num_of_train_param = 1516;
            int expected_num_of_conn = 151600;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer C3 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer C3 wrong\n");
            }
        }

        ...
    }

5. Layer S4

Formulas

Same as the formulas for layer S2; omitted.
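For reference, plugging the S4 values (nk = kc = 16, kh = kw = 2, out_h = out_w = 5) into the S2 formulas gives:

$$ 16 * 2 = 32 $$

$$ (2 * 2 + 1) * 16 * 5 * 5 = 5 * 400 = 2000 $$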

Code

    // S4
    {
        S4.in_h = C3.out_h;
        S4.in_w = C3.out_w;
        S4.in_c = C3.out_c;
        S4.kh = 2;
        S4.kw = 2;
        S4.number_of_kernel = C3.number_of_kernel;
        S4.stride_h = 2;
        S4.stride_w = 2;
        S4.out_h = 5;
        S4.out_w = 5;
        S4.out_c = S4.in_c;

        // unpack
        int number_of_kernel = S4.number_of_kernel;
        int out_h = S4.out_h;
        int out_w = S4.out_w;
        int kh = S4.kh;
        int kw = S4.kw;
        int in_c = S4.in_c;

        // allocate one output map per kernel
        for (int k = 0; k < number_of_kernel; k++)
        {
            S4_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
        }

        int num_of_train_param = number_of_kernel * 2; // #bias + #coeff
        int num_of_conn = (kh * kw + 1) * in_c * out_h * out_w;

        // values reported in the paper
        int expected_num_of_train_param = 32;
        int expected_num_of_conn = 2000;
        if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
        {
            printf("Layer S4 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
        }
        else
        {
            printf("Layer S4 wrong\n");
        }
    }

6. Layer C5

Formulas

Same as the formulas for layer C1; omitted.
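With the C5 values (nk = out_c = 120, kh = kw = 5, kc = 16, out_h = out_w = 1), the two C1 formulas give the same number, because each kernel produces a single 1x1 output:

$$ 120 * (5 * 5 * 16 + 1) = 120 * 401 = 48120 $$

$$ (5 * 5 * 16 + 1) * 1 * 1 * 120 = 48120 $$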

Code

    // C5
    {
        C5.in_h = S4.out_h;
        C5.in_w = S4.out_w;
        C5.in_c = S4.out_c;
        C5.kh = 5;
        C5.kw = 5;
        C5.number_of_kernel = 120;
        C5.out_h = 1;
        C5.out_w = 1;
        C5.out_c = C5.number_of_kernel;

        // unpack
        int kh = C5.kh;
        int kw = C5.kw;
        int in_channel = C5.in_c;
        int number_of_kernel = C5.number_of_kernel;
        double** kernel = C5_kernel;
        int out_h = C5.out_h;
        int out_w = C5.out_w;
        double* bias = C5_bias;
        int in_c = C5.in_c;
        int out_c = C5.out_c;

        int fan_in = get_fan_in(in_channel, kh, kw);
        int fan_out = get_fan_out(number_of_kernel, kh, kw);

        // NOTE: this allocates kh * kw weights per kernel, while the
        // count below assumes kh * kw * in_c weights per kernel.
        for (int k = 0; k < number_of_kernel; k++)
        {
            kernel[k] = (double*)malloc(kh * kw * sizeof(double));
            init_kernel(kernel[k], kh, kw, fan_in, fan_out);
            C5_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
            bias[k] = 0.0;
        }

        int num_of_trainable_conn = (kh * kw * in_c + 1) * out_h * out_w * out_c;

        // value reported in the paper
        int expected_num_of_trainable_conn = 48120;
        if (expected_num_of_trainable_conn == num_of_trainable_conn)
        {
            printf("Layer C5 has %d trainable connections\n", num_of_trainable_conn);
        }
        else
        {
            printf("Layer C5 wrong\n");
        }
    }

7. Layer F6

Formulas

F6 is a fully connected layer. Its number of trainable parameters equals its number of connections:

$ \text{nk} * (\text{feat}_{in} + 1) $

where:

- $\text{nk}$: number of kernels
- $\text{feat}_{in}$: number of input features (the input is viewed as a 1-D vector)
- 1: bias
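The closed-form counts used in this post (C1 and C5, S2 and S4, F6) can be gathered into a few small helpers, as sketched below. The function names are hypothetical and are not part of the original code. C3 is left out because its count depends on the connection table.

```c
#include <assert.h>

/* Hypothetical helpers; the names are illustrative only. */

/* Convolution layer in which every kernel sees all kc input channels. */
int conv_param_count(int nk, int kh, int kw, int kc)
{
    assert(nk > 0 && kh > 0 && kw > 0 && kc > 0);
    return nk * (kh * kw * kc + 1);
}

int conv_conn_count(int kh, int kw, int kc, int out_h, int out_w, int out_c)
{
    assert(kh > 0 && kw > 0 && kc > 0);
    return (kh * kw * kc + 1) * out_h * out_w * out_c;
}

/* Subsampling layer: one coeff and one bias per kernel. */
int subsample_param_count(int nk)
{
    return nk * 2;
}

int subsample_conn_count(int kh, int kw, int kc, int out_h, int out_w)
{
    return (kh * kw + 1) * kc * out_h * out_w;
}

/* Fully connected layer: parameters and connections coincide. */
int fc_param_count(int nk, int feat_in)
{
    return nk * (feat_in + 1);
}
```

For F6, `fc_param_count(84, 120)` evaluates to 84 * 121 = 10164.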

Code

    // F6
    {
        F6.in_num = C5.out_h * C5.out_w * C5.out_c;
        F6.out_num = 84;

        // unpack
        int in_num = F6.in_num;
        int out_num = F6.out_num;

        // one weight vector of length in_num and one bias per output unit
        int num_of_kernel = out_num;
        for (int k = 0; k < num_of_kernel; k++)
        {
            F6_kernel[k] = (double*)malloc(in_num * sizeof(double));
            F6_bias[k] = 0;
        }

        int num_of_train_param = num_of_kernel * (in_num + 1);

        // value reported in the paper
        int expected_num_of_train_param = 10164;
        if (expected_num_of_train_param == num_of_train_param)
        {
            printf("Layer F6 has %d trainable parameters\n", num_of_train_param);
        }
        else
        {
            printf("Layer F6 wrong\n");
        }
    }

8. Complete initialization code for layers C1 through F6

    #include <stdbool.h>
    #include <stdio.h>
    #include <stdlib.h>

    // get_fan_in(), get_fan_out() and init_kernel() are not defined in this
    // listing; they are assumed to be provided elsewhere in the project.

    typedef struct ConvHyper
    {
        int in_h;
        int in_w;
        int in_c;
        int kh;
        int kw;
        int number_of_kernel;
        int out_h;
        int out_w;
        int out_c;
    } ConvHyper;

    ConvHyper C1;
    double* C1_kernel[6];
    double C1_bias[6];
    double* C1_output[6];

    typedef struct SubsampleHyper
    {
        int in_h;
        int in_w;
        int in_c;
        int kh;
        int kw;
        int number_of_kernel;
        int stride_h;
        int stride_w;
        int out_h;
        int out_w;
        int out_c;
    } SubsampleHyper;

    SubsampleHyper S2;
    double* S2_output[6];
    double S2_coeff[6];
    double S2_bias[6];

    ConvHyper C3;
    double* C3_kernel[16];
    double C3_bias[16];
    double* C3_output[16];

    SubsampleHyper S4;
    double* S4_output[16];

    ConvHyper C5;
    double* C5_kernel[120];
    double C5_bias[120];
    double* C5_output[120];

    typedef struct FullyConnectedHyper
    {
        int in_num;
        int out_num;
    } FullyConnectedHyper;

    FullyConnectedHyper F6;
    double F6_output[84];
    double* F6_kernel[84];
    double F6_bias[84];

    void init_lenet()
    {
        // C1
        {
            C1.in_h = 32;
            C1.in_w = 32;
            C1.in_c = 1;
            C1.kh = 5;
            C1.kw = 5;
            C1.number_of_kernel = 6;
            C1.out_h = 28;
            C1.out_w = 28;
            C1.out_c = C1.number_of_kernel;

            // unpack
            int kh = C1.kh;
            int kw = C1.kw;
            int in_channel = C1.in_c;
            int number_of_kernel = C1.number_of_kernel;
            double** kernel = C1_kernel;
            int out_h = C1.out_h;
            int out_w = C1.out_w;
            double* bias = C1_bias;
            int in_c = C1.in_c;
            int out_c = C1.out_c;

            int fan_in = get_fan_in(in_channel, kh, kw);
            int fan_out = get_fan_out(number_of_kernel, kh, kw);

            for (int k = 0; k < number_of_kernel; k++)
            {
                kernel[k] = (double*)malloc(kh * kw * sizeof(double));
                init_kernel(kernel[k], kh, kw, fan_in, fan_out);
                C1_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
                bias[k] = 0.0;
            }

            int num_of_train_param = number_of_kernel * (kh * kw * in_c + 1);
            int num_of_conn = (kh * kw * in_c + 1) * out_h * out_w * out_c;

            int expected_num_of_train_param = 156;
            int expected_num_of_conn = 122304;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer C1 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer C1 wrong\n");
            }
        }

        // S2
        {
            S2.in_h = C1.out_h;
            S2.in_w = C1.out_w;
            S2.in_c = C1.out_c;
            S2.kh = 2;
            S2.kw = 2;
            S2.number_of_kernel = C1.number_of_kernel;
            S2.stride_h = 2;
            S2.stride_w = 2;
            S2.out_h = 14;
            S2.out_w = 14;
            S2.out_c = S2.in_c;

            // unpack
            int number_of_kernel = S2.number_of_kernel;
            int out_h = S2.out_h;
            int out_w = S2.out_w;
            int kh = S2.kh;
            int kw = S2.kw;
            int in_c = S2.in_c;

            for (int k = 0; k < number_of_kernel; k++)
            {
                S2_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
            }

            int num_of_train_param = number_of_kernel * 2; // #bias + #coeff
            int num_of_conn = (kh * kw + 1) * in_c * out_h * out_w;

            int expected_num_of_train_param = 12;
            int expected_num_of_conn = 5880;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer S2 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer S2 wrong\n");
            }
        }

        // C3
        {
            C3.in_h = S2.out_h;
            C3.in_w = S2.out_w;
            C3.in_c = S2.out_c;
            C3.kh = 5;
            C3.kw = 5;
            C3.number_of_kernel = 16;
            C3.out_h = 10;
            C3.out_w = 10;
            C3.out_c = C3.number_of_kernel;

            // unpack
            int kh = C3.kh;
            int kw = C3.kw;
            int in_channel = C3.in_c;
            int number_of_kernel = C3.number_of_kernel;
            double** kernel = C3_kernel;
            int out_h = C3.out_h;
            int out_w = C3.out_w;
            double* bias = C3_bias;
            int in_c = C3.in_c;
            int out_c = C3.out_c;

            int fan_in = get_fan_in(in_channel, kh, kw);
            int fan_out = get_fan_out(number_of_kernel, kh, kw);

            // NOTE: this allocates kh * kw weights per kernel, while the
            // parameter count below assumes kh * kw * kc[k] weights per kernel.
            for (int k = 0; k < number_of_kernel; k++)
            {
                kernel[k] = (double*)malloc(kh * kw * sizeof(double));
                init_kernel(kernel[k], kh, kw, fan_in, fan_out);
                C3_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
                bias[k] = 0.0;
            }

            bool X = true;
            bool O = false;
            // rows: input channels 0..5, columns: kernels 0..15
            bool connection_table[6 * 16] =
            {
            //  0  1  2  3  4  5  6  7  8  9 10 11 12 13 14 15
                X, O, O, O, X, X, X, O, O, X, X, X, X, O, X, X,
                X, X, O, O, O, X, X, X, O, O, X, X, X, X, O, X,
                X, X, X, O, O, O, X, X, X, O, O, X, O, X, X, X,
                O, X, X, X, O, O, X, X, X, X, O, O, X, O, X, X,
                O, O, X, X, X, O, O, X, X, X, X, O, X, X, O, X,
                O, O, O, X, X, X, O, O, X, X, X, X, O, X, X, X,
            };

            int num_of_train_param = 0;

            // kc[j]: number of input channels connected to kernel j
            int kc[16] = { 0 };
            for (int i = 0; i < in_c; i++)
            {
                for (int j = 0; j < out_c; j++)
                {
                    int idx = i * out_c + j;
                    kc[j] += connection_table[idx];
                }
            }
            //int kc[16] = {
            //    3, 3, 3, 3,
            //    3, 3, 4, 4,
            //    4, 4, 4, 4,
            //    4, 4, 4, 6
            //};

            for (int k = 0; k < out_c; k++)
            {
                num_of_train_param += (kh * kw * kc[k] + 1);
            }

            int num_of_conn = 0;
            for (int k = 0; k < out_c; k++)
            {
                num_of_conn += (kh * kw * kc[k] + 1) * out_h * out_w;
            }

            int expected_num_of_train_param = 1516;
            int expected_num_of_conn = 151600;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer C3 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer C3 wrong\n");
            }
        }

        // S4
        {
            S4.in_h = C3.out_h;
            S4.in_w = C3.out_w;
            S4.in_c = C3.out_c;
            S4.kh = 2;
            S4.kw = 2;
            S4.number_of_kernel = C3.number_of_kernel;
            S4.stride_h = 2;
            S4.stride_w = 2;
            S4.out_h = 5;
            S4.out_w = 5;
            S4.out_c = S4.in_c;

            // unpack
            int number_of_kernel = S4.number_of_kernel;
            int out_h = S4.out_h;
            int out_w = S4.out_w;
            int kh = S4.kh;
            int kw = S4.kw;
            int in_c = S4.in_c;

            for (int k = 0; k < number_of_kernel; k++)
            {
                S4_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
            }

            int num_of_train_param = number_of_kernel * 2; // #bias + #coeff
            int num_of_conn = (kh * kw + 1) * in_c * out_h * out_w;

            int expected_num_of_train_param = 32;
            int expected_num_of_conn = 2000;
            if (expected_num_of_train_param == num_of_train_param && expected_num_of_conn == num_of_conn)
            {
                printf("Layer S4 has %d trainable parameters, %d connections\n", num_of_train_param, num_of_conn);
            }
            else
            {
                printf("Layer S4 wrong\n");
            }
        }

        // C5
        {
            C5.in_h = S4.out_h;
            C5.in_w = S4.out_w;
            C5.in_c = S4.out_c;
            C5.kh = 5;
            C5.kw = 5;
            C5.number_of_kernel = 120;
            C5.out_h = 1;
            C5.out_w = 1;
            C5.out_c = C5.number_of_kernel;

            // unpack
            int kh = C5.kh;
            int kw = C5.kw;
            int in_channel = C5.in_c;
            int number_of_kernel = C5.number_of_kernel;
            double** kernel = C5_kernel;
            int out_h = C5.out_h;
            int out_w = C5.out_w;
            double* bias = C5_bias;
            int in_c = C5.in_c;
            int out_c = C5.out_c;

            int fan_in = get_fan_in(in_channel, kh, kw);
            int fan_out = get_fan_out(number_of_kernel, kh, kw);

            // NOTE: this allocates kh * kw weights per kernel, while the
            // count below assumes kh * kw * in_c weights per kernel.
            for (int k = 0; k < number_of_kernel; k++)
            {
                kernel[k] = (double*)malloc(kh * kw * sizeof(double));
                init_kernel(kernel[k], kh, kw, fan_in, fan_out);
                C5_output[k] = (double*)malloc(out_h * out_w * sizeof(double));
                bias[k] = 0.0;
            }

            int num_of_trainable_conn = (kh * kw * in_c + 1) * out_h * out_w * out_c;

            int expected_num_of_trainable_conn = 48120;
            if (expected_num_of_trainable_conn == num_of_trainable_conn)
            {
                printf("Layer C5 has %d trainable connections\n", num_of_trainable_conn);
            }
            else
            {
                printf("Layer C5 wrong\n");
            }
        }

        // F6
        {
            F6.in_num = C5.out_h * C5.out_w * C5.out_c;
            F6.out_num = 84;

            // unpack
            int in_num = F6.in_num;
            int out_num = F6.out_num;

            int num_of_kernel = out_num;
            for (int k = 0; k < num_of_kernel; k++)
            {
                F6_kernel[k] = (double*)malloc(in_num * sizeof(double));
                F6_bias[k] = 0;
            }

            int num_of_train_param = num_of_kernel * (in_num + 1);

            int expected_num_of_train_param = 10164;
            if (expected_num_of_train_param == num_of_train_param)
            {
                printf("Layer F6 has %d trainable parameters\n", num_of_train_param);
            }
            else
            {
                printf("Layer F6 wrong\n");
            }
        }
    }

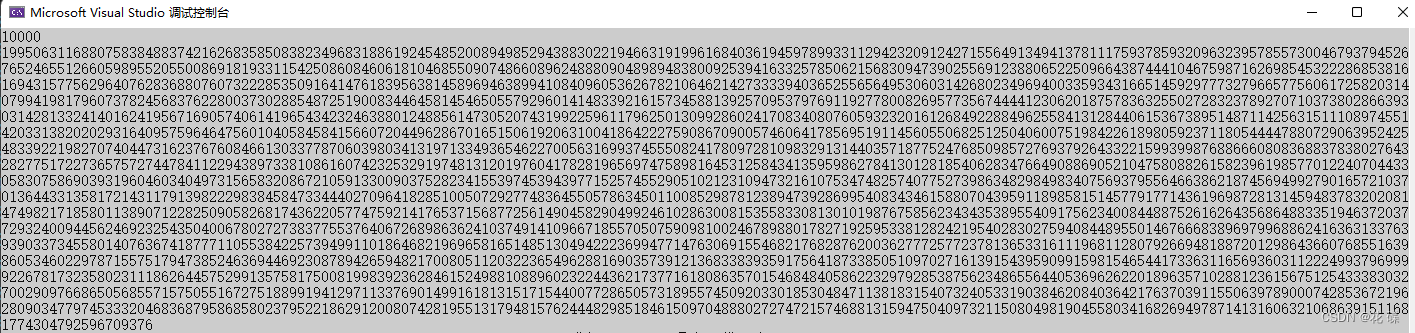

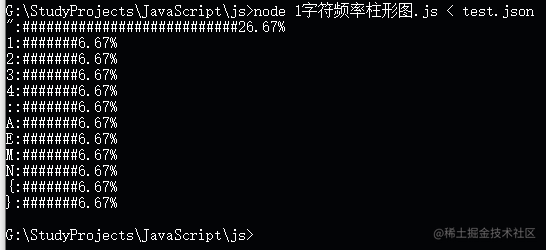

Output:

    Layer C1 has 156 trainable parameters, 122304 connections
    Layer S2 has 12 trainable parameters, 5880 connections
    Layer C3 has 1516 trainable parameters, 151600 connections
    Layer S4 has 32 trainable parameters, 2000 connections
    Layer C5 has 48120 trainable connections
    Layer F6 has 10164 trainable parameters
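As a small sanity check on these six lines, the per-layer parameter counts can be added up by hand. For C5 the printed connection count is used as its parameter count, since its output is 1x1 and the two coincide:

$$ 156 + 12 + 1516 + 32 + 48120 + 10164 = 60000 $$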

9. References

- https://vision.stanford.edu/cs598_spring07/papers/Lecun98.pdf