Author: tomxu. Original source: https://tidb.net/blog/69083bca

TiDB v7.1.0: Deployment, Online Scale-Out, and Data Migration Tests

I. Server Information and Parameters

| No. | Server model | Hostname | Configuration | IP address | Username | Password |
| -- | --------- | --------------------------- | -------------------------------------------------------------- | ------------- | ---- | --------- |
| 1 | Dell R610 | 192_168_31_50_TiDB | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.50 | root | P@ssw0rd |
| 2 | Dell R610 | 192_168_31_51_PD1 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.51 | root | P@ssw0rd |
| 3 | Dell R610 | 192_168_31_52_PD2 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.52 | root | P@ssw0rd |
| 4 | Dell R610 | 192_168_31_53_TiKV1 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.53 | root | P@ssw0rd |
| 5 | Dell R610 | 192_168_31_54_TiKV2 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.54 | root | P@ssw0rd |
| 6 | Dell R610 | 192_168_31_55_TiKV3 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.55 | root | P@ssw0rd |
| 7 | Dell R610 | 192_168_31_56_TiKV4 | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.56 | root | P@ssw0rd |
| 8 | Dell R610 | 192_168_31_57_Dashboard | E5-2609 v3 @ 1.90GHz × 2 / 32 GB RAM / 2 TB HD / 10000 × 2 network | 192.168.31.57 | root | P@ssw0rd |

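II. Downloading the TiDB v7.1.0 Offline Packages

This test uses the TiDB server offline mirror package (which includes the TiUP offline component package) for the matching version, selected on the official download page. Both the TiDB-community-server package and the TiDB-community-toolkit package need to be downloaded.

TiDB-community-server package: https://download.pingcap.org/tidb-community-server-v7.1.0-linux-amd64.tar.gz

TiDB-community-toolkit package: https://download.pingcap.org/tidb-community-toolkit-v7.1.0-linux-amd64.tar.gz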
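The two links above differ only in the package kind, so they can be generated from a version and an architecture. The following is a minimal sketch; the function name `tidb_pkg_url` is hypothetical, and the URL pattern is inferred from the two links above, so it is an assumption for any other version or architecture.

```shell
#!/bin/sh
# Build the download URL for a TiDB community offline package.
# The pattern is inferred from the two v7.1.0 links in this article (assumption).
tidb_pkg_url() {
    # $1 = package kind (server | toolkit), $2 = version, $3 = CPU architecture
    echo "https://download.pingcap.org/tidb-community-$1-$2-linux-$3.tar.gz"
}

VERSION="v7.1.0"
ARCH="amd64"

for kind in server toolkit; do
    url=$(tidb_pkg_url "$kind" "$VERSION" "$ARCH")
    echo "$url"
    # Uncomment to download on a machine with internet access:
    # wget -c "$url"
done
```

Run as is, the script only prints the two URLs; the download line is left commented out so nothing is fetched by accident.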

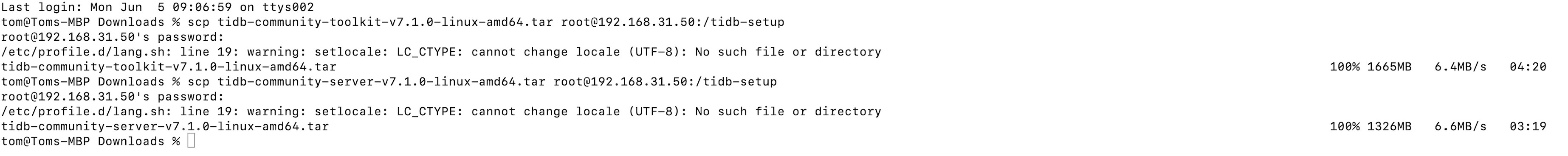

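III. Uploading the Packages to the /home Directory on Host 192_168_31_50_TiDB

Either of the following two methods works:

(1) Command line: scp <package file name> root@<target server address>:/<upload path>

(2) GUI tool: use WinSCP, xsftp, or a similar tool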
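The command-line upload, together with the extraction step described next, can be sketched as a small script. This is an illustration under assumptions: the `run` helper and the `DRY_RUN` switch are hypothetical additions for safe previewing, and `DEST_DIR` follows the /home path named in the heading (the installer log further down shows /tidb-setup, so adjust it to the directory actually used).

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

TARGET="root@192.168.31.50"   # control machine from the server table
DEST_DIR="/home"              # upload directory named in this section (assumption)

for pkg in tidb-community-server-v7.1.0-linux-amd64 \
           tidb-community-toolkit-v7.1.0-linux-amd64; do
    # Copy the archive to the control machine.
    run scp "$pkg.tar.gz" "$TARGET:$DEST_DIR/"
    # Unpack it in place on the remote host.
    run ssh "$TARGET" "tar -xzf $DEST_DIR/$pkg.tar.gz -C $DEST_DIR"
done
```

With the default `DRY_RUN=1` the script prints four commands (one scp and one ssh per package) without touching the network.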

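After the upload completes, extract both packages, then run the installer script from the server package directory:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# sh local_install.sh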

Disable telemetry success

Successfully set mirror to /tidb-setup/tidb-community-server-v7.1.0-linux-amd64

Detected shell: bash

Shell profile: /root/.bash_profile

Installed path: /root/.tiup/bin/tiup

===============================================

1. source /root/.bash_profile

2. Have a try: tiup playground

===============================================

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# source /root/.bash_profile

Initialize the cluster topology file. [The first deployment leaves out 192.168.31.56; that node is added later in the scale-out step.]

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster template > tidb_install.yaml

Edit the topology file so that it reads as follows:

# # Global variables are applied to all deployments and used as the default value of

# # the deployments if a specific deployment value is missing.

global:

  # # The user who runs the tidb cluster.

  user: "tidb"

  # # group is used to specify the group name the user belong to if it's not the same as user.

  # group: "tidb"

  # # SSH port of servers in the managed cluster.

  ssh_port: 22

  # # Storage directory for cluster deployment files, startup scripts, and configuration files.

  deploy_dir: "/tidb-deploy"

  # # TiDB Cluster data storage directory

  data_dir: "/tidb-data"

  # # Supported values: "amd64", "arm64" (default: "amd64")

  arch: "amd64"

# # Resource Control is used to limit the resource of an instance.

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html

# # Supports using instance-level `resource_control` to override global `resource_control`.

# resource_control:

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#MemoryLimit=bytes

# memory_limit: "2G"

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#CPUQuota=

# # The percentage specifies how much CPU time the unit shall get at maximum, relative to the total CPU time available on one CPU. Use values > 100% for allotting CPU time on more than one CPU.

# # Example: CPUQuota=200% ensures that the executed processes will never get more than two CPU time.

# cpu_quota: "200%"

# # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#IOReadBandwidthMax=device%20bytes

# io_read_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# io_write_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"

# # Monitored variables are applied to all the machines.

monitored:

  # # The communication port for reporting system information of each node in the TiDB cluster.

  node_exporter_port: 9100

  # # Blackbox_exporter communication port, used for TiDB cluster port monitoring.

  blackbox_exporter_port: 9115

# # Storage directory for deployment files, startup scripts, and configuration files of monitoring components.

# deploy_dir: "/tidb-deploy/monitored-9100"

# # Data storage directory of monitoring components.

# data_dir: "/tidb-data/monitored-9100"

# # Log storage directory of the monitoring component.

# log_dir: "/tidb-deploy/monitored-9100/log"

# # Server configs are used to specify the runtime configuration of TiDB components.

# # All configuration items can be found in TiDB docs:

# # - TiDB: https://pingcap.com/docs/stable/reference/configuration/tidb-server/configuration-file/

# # - TiKV: https://pingcap.com/docs/stable/reference/configuration/tikv-server/configuration-file/

# # - PD: https://pingcap.com/docs/stable/reference/configuration/pd-server/configuration-file/

# # - TiFlash: https://docs.pingcap.com/tidb/stable/tiflash-configuration

# #

# # All configuration items use points to represent the hierarchy, e.g:

# # readpool.storage.use-unified-pool

# # ^ ^

# # - example: https://github.com/pingcap/tiup/blob/master/examples/topology.example.yaml.

# # You can overwrite this configuration via the instance-level `config` field.

# server_configs:

# tidb:

# tikv:

# pd:

# tiflash:

# tiflash-learner:

# # Server configs are used to specify the configuration of PD Servers.

pd_servers:

# # The ip address of the PD Server.

- host: 192.168.31.51

# # SSH port of the server.

# ssh_port: 22

# # PD Server name

# name: "pd-1"

# # communication port for TiDB Servers to connect.

# client_port: 2379

# # Communication port among PD Server nodes.

# peer_port: 2380

# # PD Server deployment file, startup script, configuration file storage directory.

# deploy_dir: "/tidb-deploy/pd-2379"

# # PD Server data storage directory.

# data_dir: "/tidb-data/pd-2379"

# # PD Server log file storage directory.

# log_dir: "/tidb-deploy/pd-2379/log"

# # numa node bindings.

# numa_node: "0,1"

# # The following configs are used to overwrite the `server_configs.pd` values.

# config:

# schedule.max-merge-region-size: 20

# schedule.max-merge-region-keys: 200000

- host: 192.168.31.52

# ssh_port: 22

# name: "pd-1"

# client_port: 2379

# peer_port: 2380

# deploy_dir: "/tidb-deploy/pd-2379"

# data_dir: "/tidb-data/pd-2379"

# log_dir: "/tidb-deploy/pd-2379/log"

# numa_node: "0,1"

# config:

# schedule.max-merge-region-size: 20

# schedule.max-merge-region-keys: 200000

# host: 10.0.1.13

# ssh_port: 22

# name: "pd-1"

# client_port: 2379

# peer_port: 2380

# deploy_dir: "/tidb-deploy/pd-2379"

# data_dir: "/tidb-data/pd-2379"

# log_dir: "/tidb-deploy/pd-2379/log"

# numa_node: "0,1"

# config:

# schedule.max-merge-region-size: 20

# schedule.max-merge-region-keys: 200000

# # Server configs are used to specify the configuration of TiDB Servers.

tidb_servers:

# # The ip address of the TiDB Server.

- host: 192.168.31.50

# # SSH port of the server.

# ssh_port: 22

# # The port for clients to access the TiDB cluster.

# port: 4000

# # TiDB Server status API port.

# status_port: 10080

# # TiDB Server deployment file, startup script, configuration file storage directory.

# deploy_dir: "/tidb-deploy/tidb-4000"

# # TiDB Server log file storage directory.

# log_dir: "/tidb-deploy/tidb-4000/log"

# # The ip address of the TiDB Server.

#host: 10.0.1.15

# ssh_port: 22

# port: 4000

# status_port: 10080

# deploy_dir: "/tidb-deploy/tidb-4000"

# log_dir: "/tidb-deploy/tidb-4000/log"

#host: 10.0.1.16

# ssh_port: 22

# port: 4000

# status_port: 10080

# deploy_dir: "/tidb-deploy/tidb-4000"

# log_dir: "/tidb-deploy/tidb-4000/log"

# # Server configs are used to specify the configuration of TiKV Servers.

tikv_servers:

# # The ip address of the TiKV Server.

- host: 192.168.31.53

# # SSH port of the server.

# ssh_port: 22

# # TiKV Server communication port.

# port: 20160

# # TiKV Server status API port.

# status_port: 20180

# # TiKV Server deployment file, startup script, configuration file storage directory.

# deploy_dir: "/tidb-deploy/tikv-20160"

# # TiKV Server data storage directory.

# data_dir: "/tidb-data/tikv-20160"

# # TiKV Server log file storage directory.

# log_dir: "/tidb-deploy/tikv-20160/log"

# # The following configs are used to overwrite the `server_configs.tikv` values.

# config:

# log.level: warn

# # The ip address of the TiKV Server.

- host: 192.168.31.54

# ssh_port: 22

# port: 20160

# status_port: 20180

# deploy_dir: "/tidb-deploy/tikv-20160"

# data_dir: "/tidb-data/tikv-20160"

# log_dir: "/tidb-deploy/tikv-20160/log"

# config:

# log.level: warn

- host: 192.168.31.55

# ssh_port: 22

# port: 20160

# status_port: 20180

# deploy_dir: "/tidb-deploy/tikv-20160"

# data_dir: "/tidb-data/tikv-20160"

# log_dir: "/tidb-deploy/tikv-20160/log"

# config:

# log.level: warn

# # Server configs are used to specify the configuration of TiFlash Servers.

tiflash_servers:

# # The ip address of the TiFlash Server.

- host: 192.168.31.57

# # SSH port of the server.

# ssh_port: 22

# # TiFlash TCP Service port.

# tcp_port: 9000

# # TiFlash raft service and coprocessor service listening address.

# flash_service_port: 3930

# # TiFlash Proxy service port.

# flash_proxy_port: 20170

# # TiFlash Proxy metrics port.

# flash_proxy_status_port: 20292

# # TiFlash metrics port.

# metrics_port: 8234

# # TiFlash Server deployment file, startup script, configuration file storage directory.

# deploy_dir: /tidb-deploy/tiflash-9000

## With cluster version >= v4.0.9 and you want to deploy a multi-disk TiFlash node, it is recommended to

## check config.storage.* for details. The data_dir will be ignored if you defined those configurations.

## Setting data_dir to a ','-joined string is still supported but deprecated.

## Check https://docs.pingcap.com/tidb/stable/tiflash-configuration#multi-disk-deployment for more details.

# # TiFlash Server data storage directory.

# data_dir: /tidb-data/tiflash-9000

# # TiFlash Server log file storage directory.

# log_dir: /tidb-deploy/tiflash-9000/log

# # The ip address of the TiKV Server.

#host: 10.0.1.21

# ssh_port: 22

# tcp_port: 9000

# flash_service_port: 3930

# flash_proxy_port: 20170

# flash_proxy_status_port: 20292

# metrics_port: 8234

# deploy_dir: /tidb-deploy/tiflash-9000

# data_dir: /tidb-data/tiflash-9000

# log_dir: /tidb-deploy/tiflash-9000/log

# # Server configs are used to specify the configuration of Prometheus Server.

monitoring_servers:

# # The ip address of the Monitoring Server.

- host: 192.168.31.57

# # SSH port of the server.

# ssh_port: 22

# # Prometheus Service communication port.

# port: 9090

# # ng-monitoring servive communication port

# ng_port: 12020

# # Prometheus deployment file, startup script, configuration file storage directory.

# deploy_dir: "/tidb-deploy/prometheus-8249"

# # Prometheus data storage directory.

# data_dir: "/tidb-data/prometheus-8249"

# # Prometheus log file storage directory.

# log_dir: "/tidb-deploy/prometheus-8249/log"

# # Server configs are used to specify the configuration of Grafana Servers.

grafana_servers:

# # The ip address of the Grafana Server.

- host: 192.168.31.57

# # Grafana web port (browser access)

# port: 3000

# # Grafana deployment file, startup script, configuration file storage directory.

# deploy_dir: /tidb-deploy/grafana-3000

# # Server configs are used to specify the configuration of Alertmanager Servers.

alertmanager_servers:

# # The ip address of the Alertmanager Server.

- host: 192.168.31.57

# # SSH port of the server.

# ssh_port: 22

# # Alertmanager web service port.

# web_port: 9093

# # Alertmanager communication port.

# cluster_port: 9094

# # Alertmanager deployment file, startup script, configuration file storage directory.

# deploy_dir: "/tidb-deploy/alertmanager-9093"

# # Alertmanager data storage directory.

# data_dir: "/tidb-data/alertmanager-9093"

# # Alertmanager log file storage directory.

# log_dir: "/tidb-deploy/alertmanager-9093/log"
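Most of the template above is commented-out defaults. As a reading aid, the following sketch writes only the active settings to a separate file. The output name `tidb_install.min.yaml` is a hypothetical choice for this example, and the two-space nesting follows the usual TiUP template layout rather than anything shown explicitly above.

```shell
#!/bin/sh
# Write a comment-free topology containing only the active settings from the file above.
# OUT is a hypothetical file name chosen for this sketch.
OUT="${OUT:-tidb_install.min.yaml}"

cat > "$OUT" <<'EOF'
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"
  arch: "amd64"

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

pd_servers:
  - host: 192.168.31.51
  - host: 192.168.31.52

tidb_servers:
  - host: 192.168.31.50

tikv_servers:
  - host: 192.168.31.53
  - host: 192.168.31.54
  - host: 192.168.31.55

tiflash_servers:
  - host: 192.168.31.57

monitoring_servers:
  - host: 192.168.31.57

grafana_servers:
  - host: 192.168.31.57

alertmanager_servers:
  - host: 192.168.31.57
EOF

# Count the host entries as a quick sanity check (10 instances on 7 machines).
grep -c -- '- host:' "$OUT"
```

The host 192.168.31.56 is deliberately absent, matching the note that it is held back for the scale-out test.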

Check the servers against the cluster topology file:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster check ./tidb_install.yaml --user root -p

Input SSH password:

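The pre-installation check results are shown below. Some items are in Fail or Warn state; adding the --apply flag lets TiUP attempt to repair them automatically.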

Node Check Result Message

---- ----- ------ -------

192.168.31.54 disk Warn mount point / does not have 'noatime' option set

192.168.31.54 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.54 service Fail service firewalld is running but should be stopped

192.168.31.54 swap Warn swap is enabled, please disable it for best performance

192.168.31.54 thp Fail THP is enabled, please disable it for best performance

192.168.31.55 swap Warn swap is enabled, please disable it for best performance

192.168.31.55 disk Warn mount point / does not have 'noatime' option set

192.168.31.55 service Fail service firewalld is running but should be stopped

192.168.31.55 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.55 thp Fail THP is enabled, please disable it for best performance

192.168.31.50 service Fail service firewalld is running but should be stopped

192.168.31.50 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.50 swap Warn swap is enabled, please disable it for best performance

192.168.31.50 thp Fail THP is enabled, please disable it for best performance

192.168.31.57 command Fail numactl not usable, bash: numactl: command not found

192.168.31.57 disk Warn mount point / does not have 'noatime' option set

192.168.31.57 sysctl Fail net.core.somaxconn = 128, should be greater than 32768

192.168.31.57 sysctl Fail net.ipv4.tcp_syncookies = 1, should be 0

192.168.31.57 sysctl Fail vm.swappiness = 30, should be 0

192.168.31.57 thp Fail THP is enabled, please disable it for best performance

192.168.31.57 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.57 swap Warn swap is enabled, please disable it for best performance

192.168.31.57 selinux Fail SELinux is not disabled

192.168.31.57 service Fail service firewalld is running but should be stopped

192.168.31.57 limits Fail soft limit of 'nofile' for user 'tidb' is not set or too low

192.168.31.57 limits Fail hard limit of 'nofile' for user 'tidb' is not set or too low

192.168.31.57 limits Fail soft limit of 'stack' for user 'tidb' is not set or too low

192.168.31.51 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.51 disk Warn mount point / does not have 'noatime' option set

192.168.31.51 service Fail service firewalld is running but should be stopped

192.168.31.51 swap Warn swap is enabled, please disable it for best performance

192.168.31.51 thp Fail THP is enabled, please disable it for best performance

192.168.31.52 service Fail service firewalld is running but should be stopped

192.168.31.52 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.52 disk Warn mount point / does not have 'noatime' option set

192.168.31.52 swap Warn swap is enabled, please disable it for best performance

192.168.31.52 thp Fail THP is enabled, please disable it for best performance

192.168.31.53 disk Warn mount point / does not have 'noatime' option set

192.168.31.53 thp Fail THP is enabled, please disable it for best performance

192.168.31.53 service Fail service firewalld is running but should be stopped

192.168.31.53 cpu-governor Warn Unable to determine current CPU frequency governor policy

192.168.31.53 swap Warn swap is enabled, please disable it for best performance

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster check ./tidb_install.yaml --apply --user root -p
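A report this long is easier to triage when the Fail and Warn rows are tallied per node. The sketch below assumes the check output has been saved to a file or piped in, with rows shaped like `<node> <check> <result> <message>` as in the report above; `summarize_check` is a hypothetical helper name.

```shell
#!/bin/sh
# Tally Fail and Warn rows per node from `tiup cluster check` style output.
# Expects rows shaped like: <node> <check> <Fail|Warn|Pass> <message...>
summarize_check() {
    awk '
        $3 == "Fail" { fail[$1]++; nodes[$1] = 1 }
        $3 == "Warn" { warn[$1]++; nodes[$1] = 1 }
        END {
            for (n in nodes)
                printf "%s fail=%d warn=%d\n", n, fail[n], warn[n]
        }
    ' | sort
}

# Sample rows copied from the report above.
summarize_check <<'EOF'
192.168.31.54 disk Warn mount point / does not have 'noatime' option set
192.168.31.54 service Fail service firewalld is running but should be stopped
192.168.31.54 thp Fail THP is enabled, please disable it for best performance
192.168.31.57 command Fail numactl not usable, bash: numactl: command not found
EOF
```

The header row is ignored automatically because its third column is neither Fail nor Warn.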

The check results show that numactl is not installed on host 192.168.31.57. Log in to that host remotely and install it with the following command:

[root@192_168_31_57_Dashboard ~]# yum -y install numactl

Failed to set locale, defaulting to C

Loaded plugins: fastestmirror

Determining fastest mirrors

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

base | 3.6 kB 00:00

extras | 2.9 kB 00:00

updates | 2.9 kB 00:00

(1/4): extras/7/x86_64/primary_db | 249 kB 00:00

(2/4): base/7/x86_64/group_gz | 153 kB 00:00

(3/4): base/7/x86_64/primary_db | 6.1 MB 00:00

(4/4): updates/7/x86_64/primary_db | 21 MB 00:01

Resolving Dependencies

--> Running transaction check

---> Package numactl.x86_64 0:2.0.12-5.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

numactl x86_64 2.0.12-5.el7 base 66 k

Transaction Summary

================================================================================

Install 1 Package

Total download size: 66 k

Installed size: 141 k

Downloading packages:

warning: /var/cache/yum/x86_64/7/base/packages/numactl-2.0.12-5.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Public key for numactl-2.0.12-5.el7.x86_64.rpm is not installed
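If more than one host were missing numactl, the same install could be looped over every node from the control machine. This is only a sketch under assumptions: it presumes root SSH access to each host and a yum-based system as in the log above, and the `ensure_numactl` helper and `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# DRY_RUN=1 (default) prints the ssh commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"
# Hosts taken from the topology file used in this article.
HOSTS="192.168.31.50 192.168.31.51 192.168.31.52 192.168.31.53 192.168.31.54 192.168.31.55 192.168.31.57"

ensure_numactl() {
    # $1 = host; install numactl only when the command is missing.
    remote_cmd='command -v numactl >/dev/null 2>&1 || yum -y install numactl'
    if [ "$DRY_RUN" = "1" ]; then
        echo "ssh root@$1 \"$remote_cmd\""
    else
        ssh "root@$1" "$remote_cmd"
    fi
}

for h in $HOSTS; do
    ensure_numactl "$h"
done
```

Because the remote command tests for numactl first, hosts that already have it are left unchanged.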

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster check ./tidb_install.yaml --user root -p

Input SSH password:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster deploy tidb-lab v7.1.0 ./tidb_install.yaml --user root -p

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.12.2/tiup-cluster deploy tidb-lab v7.1.0 ./tidb_install.yaml --user root -p

Input SSH password:

+ Detect CPU Arch Name

- Detecting node 192.168.31.51 Arch info ... ⠹ Shell: host=192.168.31.51, sudo=false, command=`uname -m`

+ Detect CPU Arch Name

- Detecting node 192.168.31.51 Arch info ... Done

- Detecting node 192.168.31.52 Arch info ... Done

- Detecting node 192.168.31.53 Arch info ... Done

- Detecting node 192.168.31.54 Arch info ... Done

- Detecting node 192.168.31.55 Arch info ... Done

- Detecting node 192.168.31.50 Arch info ... Done

- Detecting node 192.168.31.57 Arch info ... Done

+ Detect CPU OS Name

- Detecting node 192.168.31.51 OS info ... ⠹ Shell: host=192.168.31.51, sudo=false, command=`uname -s`

+ Detect CPU OS Name

- Detecting node 192.168.31.51 OS info ... Done

- Detecting node 192.168.31.52 OS info ... Done

- Detecting node 192.168.31.53 OS info ... Done

- Detecting node 192.168.31.54 OS info ... Done

- Detecting node 192.168.31.55 OS info ... Done

- Detecting node 192.168.31.50 OS info ... Done

- Detecting node 192.168.31.57 OS info ... Done

Please confirm your topology:

Cluster type: tidb

Cluster name: tidb-lab

Cluster version: v7.1.0

Role Host Ports OS/Arch Directories

---- ---- ----- ------- -----------

pd 192.168.31.51 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

pd 192.168.31.52 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379

tikv 192.168.31.53 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tikv 192.168.31.54 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tikv 192.168.31.55 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

tidb 192.168.31.50 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000

tiflash 192.168.31.57 9000/8123/3930/20170/20292/8234 linux/x86_64 /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000

prometheus 192.168.31.57 9090/12020 linux/x86_64 /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090

grafana 192.168.31.57 3000 linux/x86_64 /tidb-deploy/grafana-3000

alertmanager 192.168.31.57 9093/9094 linux/x86_64 /tidb-deploy/alertmanager-9093,/tidb-data/alertmanager-9093

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

+ Generate SSH keys ... Done

+ Download TiDB components

- Download pd:v7.1.0 (linux/amd64) ... Done

- Download tikv:v7.1.0 (linux/amd64) ... Done

- Download tidb:v7.1.0 (linux/amd64) ... Done

- Download tiflash:v7.1.0 (linux/amd64) ... Done

- Download prometheus:v7.1.0 (linux/amd64) ... Done

- Download grafana:v7.1.0 (linux/amd64) ... Done

- Download alertmanager: (linux/amd64) ... Done

- Download node_exporter: (linux/amd64) ... Done

- Download blackbox_exporter: (linux/amd64) ... Done

+ Initialize target host environments

- Prepare 192.168.31.54:22 ... Done

- Prepare 192.168.31.55:22 ... Done

- Prepare 192.168.31.50:22 ... Done

- Prepare 192.168.31.57:22 ... Done

- Prepare 192.168.31.51:22 ... Done

- Prepare 192.168.31.52:22 ... Done

- Prepare 192.168.31.53:22 ... Done

+ Deploy TiDB instance

- Copy pd -> 192.168.31.51 ... Done

- Copy pd -> 192.168.31.52 ... Done

- Copy tikv -> 192.168.31.53 ... Done

- Copy tikv -> 192.168.31.54 ... Done

- Copy tikv -> 192.168.31.55 ... Done

- Copy tidb -> 192.168.31.50 ... Done

- Copy tiflash -> 192.168.31.57 ... Done

- Copy prometheus -> 192.168.31.57 ... Done

- Copy grafana -> 192.168.31.57 ... Done

- Copy alertmanager -> 192.168.31.57 ... Done

- Deploy node_exporter -> 192.168.31.51 ... Done

- Deploy node_exporter -> 192.168.31.52 ... Done

- Deploy node_exporter -> 192.168.31.53 ... Done

- Deploy node_exporter -> 192.168.31.54 ... Done

- Deploy node_exporter -> 192.168.31.55 ... Done

- Deploy node_exporter -> 192.168.31.50 ... Done

- Deploy node_exporter -> 192.168.31.57 ... Done

- Deploy blackbox_exporter -> 192.168.31.53 ... Done

- Deploy blackbox_exporter -> 192.168.31.54 ... Done

- Deploy blackbox_exporter -> 192.168.31.55 ... Done

- Deploy blackbox_exporter -> 192.168.31.50 ... Done

- Deploy blackbox_exporter -> 192.168.31.57 ... Done

- Deploy blackbox_exporter -> 192.168.31.51 ... Done

- Deploy blackbox_exporter -> 192.168.31.52 ... Done

+ Copy certificate to remote host

+ Init instance configs

- Generate config pd -> 192.168.31.51:2379 ... Done

- Generate config pd -> 192.168.31.52:2379 ... Done

- Generate config tikv -> 192.168.31.53:20160 ... Done

- Generate config tikv -> 192.168.31.54:20160 ... Done

- Generate config tikv -> 192.168.31.55:20160 ... Done

- Generate config tidb -> 192.168.31.50:4000 ... Done

- Generate config tiflash -> 192.168.31.57:9000 ... Done

- Generate config prometheus -> 192.168.31.57:9090 ... Done

- Generate config grafana -> 192.168.31.57:3000 ... Done

- Generate config alertmanager -> 192.168.31.57:9093 ... Done

+ Init monitor configs

- Generate config node_exporter -> 192.168.31.55 ... Done

- Generate config node_exporter -> 192.168.31.50 ... Done

- Generate config node_exporter -> 192.168.31.57 ... Done

- Generate config node_exporter -> 192.168.31.51 ... Done

- Generate config node_exporter -> 192.168.31.52 ... Done

- Generate config node_exporter -> 192.168.31.53 ... Done

- Generate config node_exporter -> 192.168.31.54 ... Done

- Generate config blackbox_exporter -> 192.168.31.57 ... Done

- Generate config blackbox_exporter -> 192.168.31.51 ... Done

- Generate config blackbox_exporter -> 192.168.31.52 ... Done

- Generate config blackbox_exporter -> 192.168.31.53 ... Done

- Generate config blackbox_exporter -> 192.168.31.54 ... Done

- Generate config blackbox_exporter -> 192.168.31.55 ... Done

- Generate config blackbox_exporter -> 192.168.31.50 ... Done

Enabling component pd

Enabling instance 192.168.31.52:2379

Enabling instance 192.168.31.51:2379

Enable instance 192.168.31.52:2379 success

Enable instance 192.168.31.51:2379 success

Enabling component tikv

Enabling instance 192.168.31.55:20160

Enabling instance 192.168.31.53:20160

Enabling instance 192.168.31.54:20160

Enable instance 192.168.31.53:20160 success

Enable instance 192.168.31.55:20160 success

Enable instance 192.168.31.54:20160 success

Enabling component tidb

Enabling instance 192.168.31.50:4000

Enable instance 192.168.31.50:4000 success

Enabling component tiflash

Enabling instance 192.168.31.57:9000

Enable instance 192.168.31.57:9000 success

Enabling component prometheus

Enabling instance 192.168.31.57:9090

Enable instance 192.168.31.57:9090 success

Enabling component grafana

Enabling instance 192.168.31.57:3000

Enable instance 192.168.31.57:3000 success

Enabling component alertmanager

Enabling instance 192.168.31.57:9093

Enable instance 192.168.31.57:9093 success

Enabling component node_exporter

Enabling instance 192.168.31.51

Enabling instance 192.168.31.52

Enabling instance 192.168.31.54

Enabling instance 192.168.31.50

Enabling instance 192.168.31.55

Enabling instance 192.168.31.57

Enabling instance 192.168.31.53

Enable 192.168.31.51 success

Enable 192.168.31.53 success

Enable 192.168.31.52 success

Enable 192.168.31.55 success

Enable 192.168.31.54 success

Enable 192.168.31.50 success

Enable 192.168.31.57 success

Enabling component blackbox_exporter

Enabling instance 192.168.31.51

Enabling instance 192.168.31.54

Enabling instance 192.168.31.55

Enabling instance 192.168.31.50

Enabling instance 192.168.31.57

Enabling instance 192.168.31.53

Enabling instance 192.168.31.52

Enable 192.168.31.51 success

Enable 192.168.31.54 success

Enable 192.168.31.55 success

Enable 192.168.31.53 success

Enable 192.168.31.52 success

Enable 192.168.31.50 success

Enable 192.168.31.57 success

Cluster `tidb-lab` deployed successfully, you can start it with command: `tiup cluster start tidb-lab --init`

Deployment is complete. Run tiup cluster start tidb-lab --init to start the cluster with secure initialization:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster start tidb-lab --init

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.12.2/tiup-cluster start tidb-lab --init

Starting cluster tidb-lab...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-lab/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-lab/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.55

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.53

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.52

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.51

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.50

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.54

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.31.52:2379

Starting instance 192.168.31.51:2379

Start instance 192.168.31.51:2379 success

Start instance 192.168.31.52:2379 success

Starting component tikv

Starting instance 192.168.31.55:20160

Starting instance 192.168.31.53:20160

Starting instance 192.168.31.54:20160

Start instance 192.168.31.53:20160 success

Start instance 192.168.31.55:20160 success

Start instance 192.168.31.54:20160 success

Starting component tidb

Starting instance 192.168.31.50:4000

Start instance 192.168.31.50:4000 success

Starting component tiflash

Starting instance 192.168.31.57:9000

Start instance 192.168.31.57:9000 success

Starting component prometheus

Starting instance 192.168.31.57:9090

Start instance 192.168.31.57:9090 success

Starting component grafana

Starting instance 192.168.31.57:3000

Start instance 192.168.31.57:3000 success

Starting component alertmanager

Starting instance 192.168.31.57:9093

Start instance 192.168.31.57:9093 success

Starting component node_exporter

Starting instance 192.168.31.52

Starting instance 192.168.31.55

Starting instance 192.168.31.57

Starting instance 192.168.31.51

Starting instance 192.168.31.50

Starting instance 192.168.31.54

Starting instance 192.168.31.53

Start 192.168.31.52 success

Start 192.168.31.54 success

Start 192.168.31.55 success

Start 192.168.31.53 success

Start 192.168.31.50 success

Start 192.168.31.51 success

Start 192.168.31.57 success

Starting component blackbox_exporter

Starting instance 192.168.31.52

Starting instance 192.168.31.55

Starting instance 192.168.31.50

Starting instance 192.168.31.51

Starting instance 192.168.31.54

Starting instance 192.168.31.53

Starting instance 192.168.31.57

Start 192.168.31.52 success

Start 192.168.31.55 success

Start 192.168.31.53 success

Start 192.168.31.54 success

Start 192.168.31.51 success

Start 192.168.31.50 success

Start 192.168.31.57 success

+ [ Serial ] - UpdateTopology: cluster=tidb-lab

Started cluster `tidb-lab` successfully

The root password of TiDB database has been changed.

The new password is: 'g@7Qa^2tbxU_w3510+'.

Copy and record it to somewhere safe, it is only displayed once, and will not be stored.

The generated password can NOT be get and shown again.

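The initial root password of the TiDB database is g@7Qa^2tbxU_w3510+. Log in with the mysql client and change it with the following commands; the new password is P@ssw0rd.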

tom@Toms-MBP ~ % mysql -h 192.168.31.50 -P 4000 -u root -pg@7Qa^2tbxU_w3510+

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 409

Server version: 5.7.25-TiDB-v7.1.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> set password = password ('P@ssw0rd');

Query OK, 0 rows affected (0.11 sec)

mysql>
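The client warns that a password on the command line can be insecure. One common alternative is a client option file readable only by its owner, passed to mysql with `--defaults-extra-file`. The sketch below is an illustration, not part of the original test: `write_client_cnf` and the file path in the usage comment are hypothetical.

```shell
#!/bin/sh
# Write a MySQL client option file so the password is not passed with -p on the command line.
write_client_cnf() {
    # $1 = file path, $2 = host, $3 = port, $4 = user, $5 = password
    (
        umask 077   # new files are created readable by the owner only
        cat > "$1" <<EOF
[client]
host=$2
port=$3
user=$4
password="$5"
EOF
    )
}

# Example usage (the path is a hypothetical choice):
#   write_client_cnf "$HOME/.tidb-lab.cnf" 192.168.31.50 4000 root 'P@ssw0rd'
#   mysql --defaults-extra-file="$HOME/.tidb-lab.cnf"
```

The password is wrapped in single quotes at the call site so that the shell does not interpret any special characters in it.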

Log in to the database again with the new password to confirm that login works:

tom@Toms-MBP ~ % mysql -h 192.168.31.50 -P 4000 -u root -pP@ssw0rd


mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 411

Server version: 5.7.25-TiDB-v7.1.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| INFORMATION_SCHEMA |

| METRICS_SCHEMA |

| PERFORMANCE_SCHEMA |

| mysql |

| test |

+--------------------+

5 rows in set (0.01 sec)

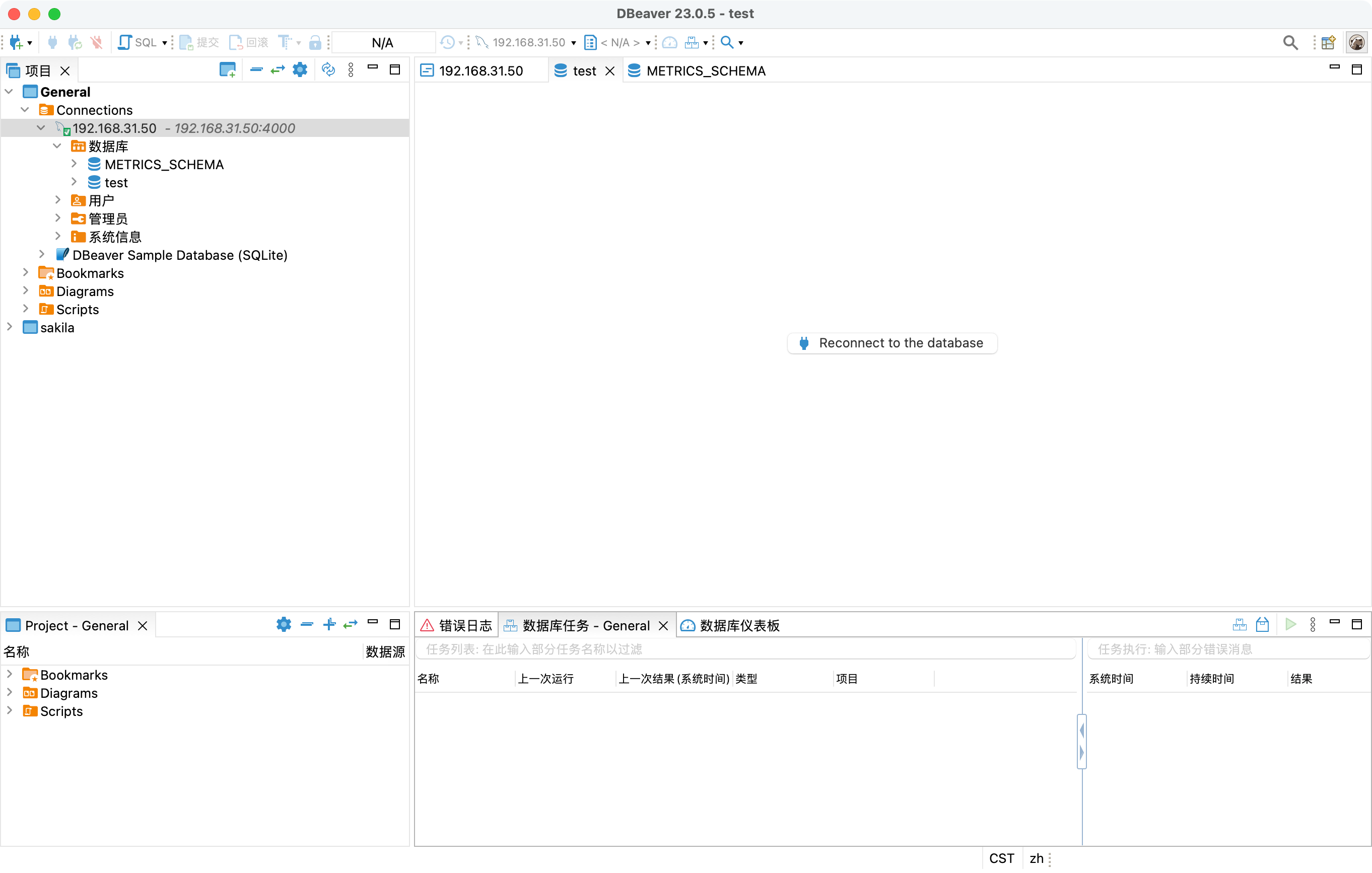

使用工具进入登录如下图

通过tiup cluster display tidb-lab 命令查看当前集群状态信息,如下图

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster display tidb-lab

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.12.2/tiup-cluster display tidb-lab

Cluster type: tidb

Cluster name: tidb-lab

Cluster version: v7.1.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.31.51:2379/dashboard

Grafana URL: http://192.168.31.57:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.31.57:9093 alertmanager 192.168.31.57 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.31.57:3000 grafana 192.168.31.57 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.31.51:2379 pd 192.168.31.51 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.31.52:2379 pd 192.168.31.52 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.31.57:9090 prometheus 192.168.31.57 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.31.50:4000 tidb 192.168.31.50 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.31.57:9000 tiflash 192.168.31.57 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.31.53:20160 tikv 192.168.31.53 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.31.54:20160 tikv 192.168.31.54 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.31.55:20160 tikv 192.168.31.55 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

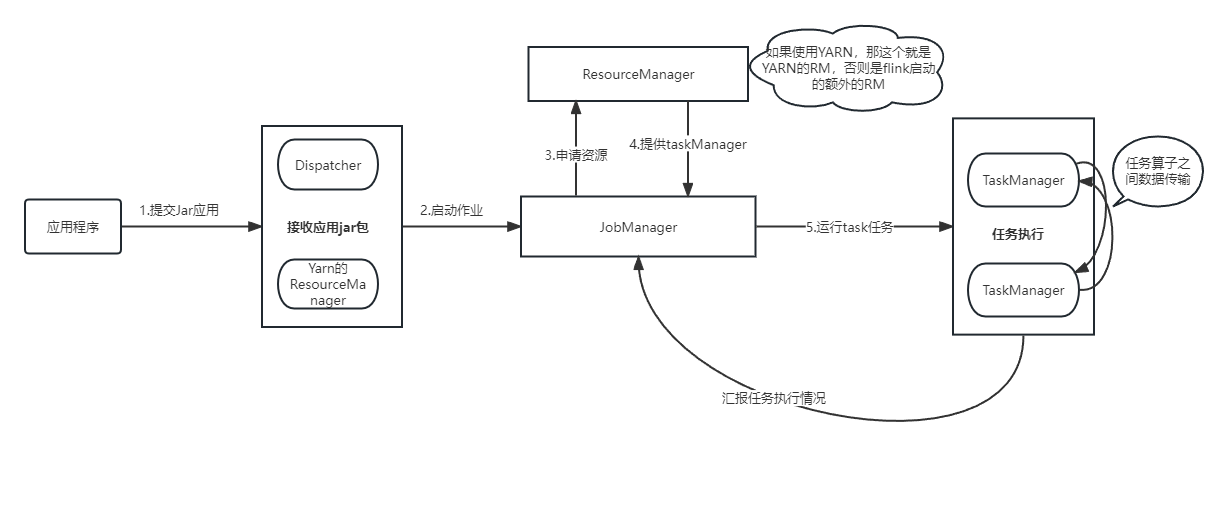

GC status, read from the mysql.tidb table:

tom@Toms-MBP ~ % mysql -h 192.168.31.50 -P 4000 -u root -pP@ssw0rd

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 521

Server version: 5.7.25-TiDB-v7.1.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> select * from mysql.tidb;

+--------------------------+-----------------------------------------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

| VARIABLE_NAME | VARIABLE_VALUE | COMMENT |

+--------------------------+-----------------------------------------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

| bootstrapped | True | Bootstrap flag. Do not delete. |

| tidb_server_version | 146 | Bootstrap version. Do not delete. |

| system_tz | Asia/Shanghai | TiDB Global System Timezone. |

| new_collation_enabled | True | If the new collations are enabled. Do not edit it. |

| tikv_gc_leader_uuid | 622259489f40009 | Current GC worker leader UUID. (DO NOT EDIT) |

| tikv_gc_leader_desc | host:192_168_31_50_TiDB, pid:8607, start at 2023-06-05 10:31:26.645091475 +0800 CST m=+41.567402185 | Host name and pid of current GC leader. (DO NOT EDIT) |

| tikv_gc_leader_lease | 20230605-11:05:26.931 +0800 | Current GC worker leader lease. (DO NOT EDIT) |

| tikv_gc_auto_concurrency | true | Let TiDB pick the concurrency automatically. If set false, tikv_gc_concurrency will be used |

| tikv_gc_enable | true | Current GC enable status |

| tikv_gc_run_interval | 10m0s | GC run interval, at least 10m, in Go format. |

| tikv_gc_life_time | 10m0s | All versions within life time will not be collected by GC, at least 10m, in Go format. |

| tikv_gc_last_run_time | 20230605-11:02:26.989 +0800 | The time when last GC starts. (DO NOT EDIT) |

| tikv_gc_safe_point | 20230605-10:52:26.989 +0800 | All versions after safe point can be accessed. (DO NOT EDIT) |

| tikv_gc_scan_lock_mode | legacy | Mode of scanning locks, "physical" or "legacy" |

| tikv_gc_mode | distributed | Mode of GC, "central" or "distributed" |

+--------------------------+-----------------------------------------------------------------------------------------------------+---------------------------------------------------------------------------------------------+

15 rows in set (0.00 sec)
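In the output above, tikv_gc_safe_point (10:52:26.989) is exactly tikv_gc_last_run_time (11:02:26.989) minus tikv_gc_life_time (10m0s). The sketch below reproduces that arithmetic. The helper names are hypothetical, and the duration parser only handles the h, m and s units seen here, not every Go duration.

```python
import re
from datetime import datetime, timedelta

# Timestamp layout used by the GC rows in mysql.tidb, e.g. "20230605-11:02:26.989 +0800".
TIDB_TIME_FORMAT = "%Y%m%d-%H:%M:%S.%f %z"


def parse_go_duration(text: str) -> timedelta:
    """Parse a simple Go-style duration such as '10m0s' (h, m, s units only)."""
    parts = re.findall(r"(\d+(?:\.\d+)?)([hms])", text)
    if not parts or "".join(number + unit for number, unit in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    seconds_per_unit = {"h": 3600, "m": 60, "s": 1}
    total = sum(float(number) * seconds_per_unit[unit] for number, unit in parts)
    return timedelta(seconds=total)


def expected_safe_point(last_run_time: str, life_time: str) -> str:
    """Return last_run_time minus life_time, formatted like the mysql.tidb values."""
    started = datetime.strptime(last_run_time, TIDB_TIME_FORMAT)
    safe_point = started - parse_go_duration(life_time)
    # Trim microseconds to milliseconds to match the table's precision.
    stamp = safe_point.strftime("%Y%m%d-%H:%M:%S.%f")[:-3]
    return f"{stamp} {safe_point.strftime('%z')}"


if __name__ == "__main__":
    print(expected_safe_point("20230605-11:02:26.989 +0800", "10m0s"))
```

With the default 10-minute life time, historical versions older than roughly ten minutes before the last GC run can no longer be read, which is worth keeping in mind before long-running exports.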

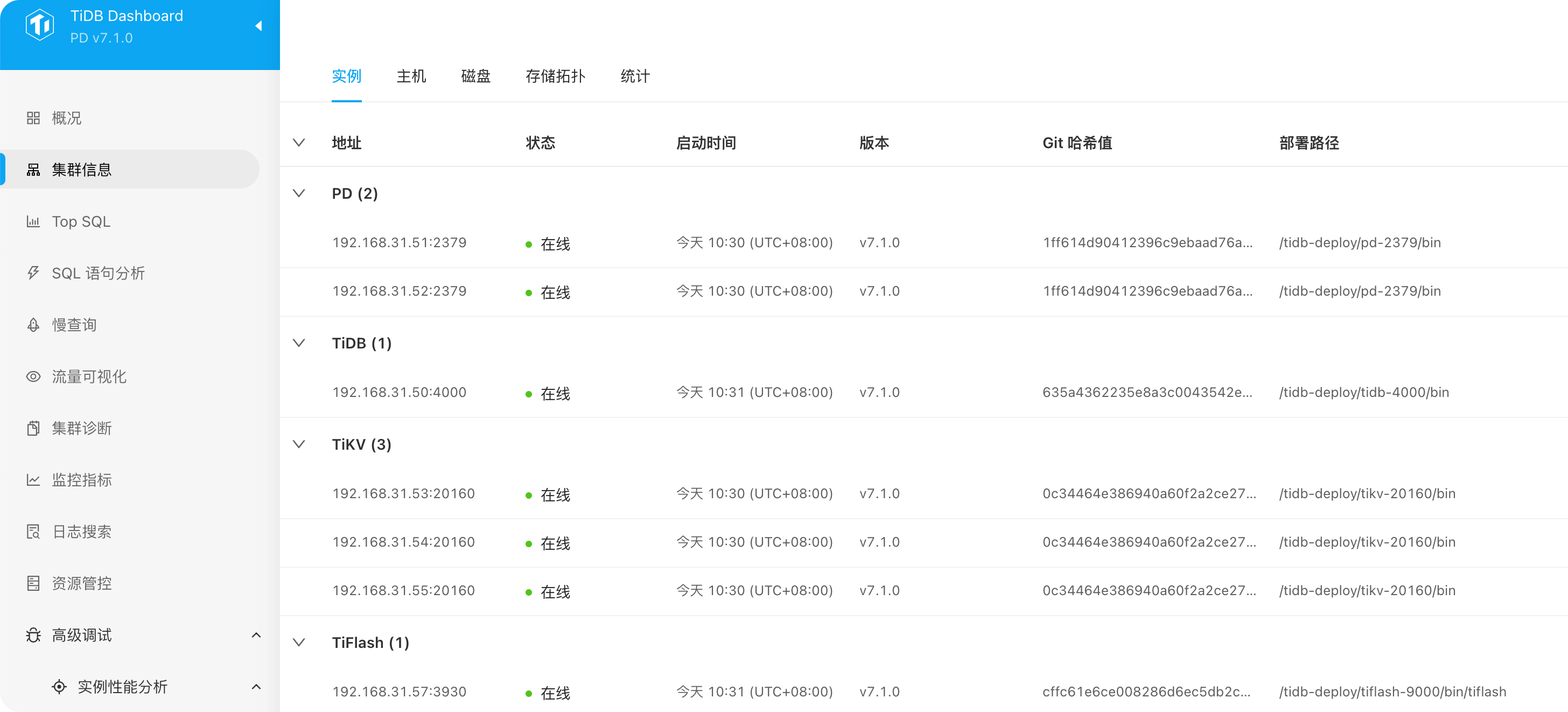

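Open the Dashboard URL http://192.168.31.51:2379/dashboard and log in with user name root and password P@ssw0rd. The figure below shows the cluster information page.

Cluster information in the Dashboard (screenshot):

Monitoring metrics in the Dashboard (screenshot of the metric items):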

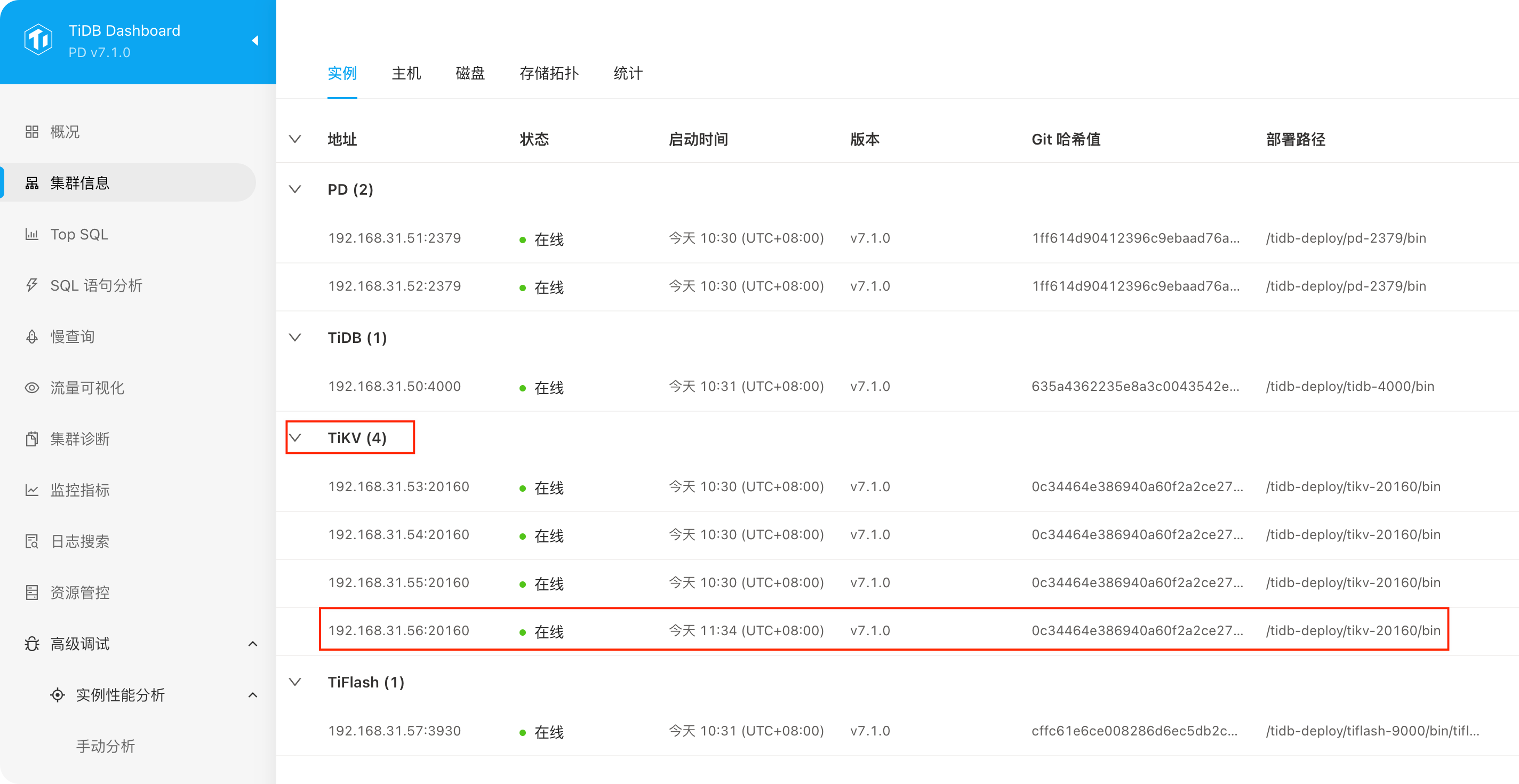

Online scale-out. This step adds 192.168.31.56 as a TiKV node, so the cluster ends up with four TiKV nodes. First, create the scale-out topology file:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# vim scale-out.yml

tikv_servers:
  - host: 192.168.31.56
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /tidb-deploy/tikv-20160
    data_dir: /tidb-data/tikv-20160
    log_dir: /tidb-deploy/tikv-20160/log
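YAML is indentation-sensitive, so the nesting of the topology file matters. As a rough sketch, the same file can be generated from a few parameters. The function name render_tikv_scale_out and its defaults are assumptions for illustration, chosen to mirror the directory layout used in this cluster.

```python
def render_tikv_scale_out(host: str, port: int = 20160, status_port: int = 20180,
                          ssh_port: int = 22, deploy_root: str = "/tidb-deploy",
                          data_root: str = "/tidb-data") -> str:
    """Render a scale-out topology with a single TiKV instance, properly indented."""
    instance = f"tikv-{port}"
    lines = [
        "tikv_servers:",
        f"  - host: {host}",
        f"    ssh_port: {ssh_port}",
        f"    port: {port}",
        f"    status_port: {status_port}",
        f"    deploy_dir: {deploy_root}/{instance}",
        f"    data_dir: {data_root}/{instance}",
        f"    log_dir: {deploy_root}/{instance}/log",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Write the topology for the new node used in this article.
    with open("scale-out.yml", "w", encoding="utf-8") as handle:
        handle.write(render_tikv_scale_out("192.168.31.56"))
```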

View the current cluster configuration:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster edit-config tidb-lab

global:
  user: tidb
  ssh_port: 22
  ssh_type: builtin
  deploy_dir: /tidb-deploy
  data_dir: /tidb-data
  os: linux
  arch: amd64
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115
  deploy_dir: /tidb-deploy/monitor-9100
  data_dir: /tidb-data/monitor-9100
  log_dir: /tidb-deploy/monitor-9100/log
tidb_servers:
  - host: 192.168.31.50
    ssh_port: 22
    port: 4000
    status_port: 10080
    deploy_dir: /tidb-deploy/tidb-4000
    log_dir: /tidb-deploy/tidb-4000/log
    arch: amd64
    os: linux
tikv_servers:
  - host: 192.168.31.53
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /tidb-deploy/tikv-20160
    data_dir: /tidb-data/tikv-20160
    log_dir: /tidb-deploy/tikv-20160/log
    arch: amd64
    os: linux
  - host: 192.168.31.54
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /tidb-deploy/tikv-20160
    data_dir: /tidb-data/tikv-20160
    log_dir: /tidb-deploy/tikv-20160/log
    arch: amd64
    os: linux
  - host: 192.168.31.55
    ssh_port: 22
    port: 20160
    status_port: 20180
    deploy_dir: /tidb-deploy/tikv-20160
    data_dir: /tidb-data/tikv-20160
    log_dir: /tidb-deploy/tikv-20160/log
    arch: amd64
    os: linux
tiflash_servers:
  - host: 192.168.31.57
    ssh_port: 22
    tcp_port: 9000
    http_port: 8123
    flash_service_port: 3930
    flash_proxy_port: 20170
    flash_proxy_status_port: 20292
    metrics_port: 8234
    deploy_dir: /tidb-deploy/tiflash-9000
    data_dir: /tidb-data/tiflash-9000
    log_dir: /tidb-deploy/tiflash-9000/log
    arch: amd64
    os: linux
pd_servers:
  - host: 192.168.31.51
    ssh_port: 22
    name: pd-192.168.31.51-2379
    client_port: 2379
    peer_port: 2380
    deploy_dir: /tidb-deploy/pd-2379
    data_dir: /tidb-data/pd-2379
    log_dir: /tidb-deploy/pd-2379/log
    arch: amd64
    os: linux
  - host: 192.168.31.52
    ssh_port: 22
    name: pd-192.168.31.52-2379
    client_port: 2379
    peer_port: 2380
    deploy_dir: /tidb-deploy/pd-2379
    data_dir: /tidb-data/pd-2379
    log_dir: /tidb-deploy/pd-2379/log
    arch: amd64
    os: linux
monitoring_servers:
  - host: 192.168.31.57
    ssh_port: 22
    port: 9090
    ng_port: 12020
    deploy_dir: /tidb-deploy/prometheus-9090
    data_dir: /tidb-data/prometheus-9090
    log_dir: /tidb-deploy/prometheus-9090/log
    external_alertmanagers: []
    arch: amd64
    os: linux
grafana_servers:
  - host: 192.168.31.57
    ssh_port: 22
    port: 3000
    deploy_dir: /tidb-deploy/grafana-3000
    arch: amd64
    os: linux
    username: admin
    password: admin
    anonymous_enable: false
    root_url: ""
    domain: ""
alertmanager_servers:
  - host: 192.168.31.57
    ssh_port: 22
    web_port: 9093
    cluster_port: 9094
    deploy_dir: /tidb-deploy/alertmanager-9093
    data_dir: /tidb-data/alertmanager-9093
    log_dir: /tidb-deploy/alertmanager-9093/log
    arch: amd64
    os: linux

Before scaling out, run the following command to check whether the new node meets the requirements:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster check tidb-lab scale-out.yml --cluster --user root -p

To run the check and automatically repair the failed items at the same time, use:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster check tidb-lab scale-out.yml --cluster --apply --user root -p

Apply the TiKV scale-out topology file with the following command:

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster scale-out tidb-lab scale-out.yml -p


tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.12.2/tiup-cluster scale-out tidb-lab scale-out.yml -p

Input SSH password:

+ Detect CPU Arch Name

- Detecting node 192.168.31.56 Arch info ... Done

+ Detect CPU OS Name

- Detecting node 192.168.31.56 OS info ... Done

Please confirm your topology:

Cluster type: tidb

Cluster name: tidb-lab

Cluster version: v7.1.0

Role Host Ports OS/Arch Directories

---- ---- ----- ------- -----------

tikv 192.168.31.56 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-lab/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-lab/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.55

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.52

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.50

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.53

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.54

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.57

+ [Parallel] - UserSSH: user=tidb, host=192.168.31.51

+ Download TiDB components

- Download tikv:v7.1.0 (linux/amd64) ... Done

- Download node_exporter: (linux/amd64) ... Done

- Download blackbox_exporter: (linux/amd64) ... Done

+ Initialize target host environments

- Initialized host 192.168.31.56 ... Done

+ Deploy TiDB instance

- Deploy instance tikv -> 192.168.31.56:20160 ... Done

- Deploy node_exporter -> 192.168.31.56 ... Done

- Deploy blackbox_exporter -> 192.168.31.56 ... Done

+ Copy certificate to remote host

+ Generate scale-out config

- Generate scale-out config tikv -> 192.168.31.56:20160 ... Done

+ Init monitor config

- Generate config node_exporter -> 192.168.31.56 ... Done

- Generate config blackbox_exporter -> 192.168.31.56 ... Done

Enabling component tikv

Enabling instance 192.168.31.56:20160

Enable instance 192.168.31.56:20160 success

Enabling component node_exporter

Enabling instance 192.168.31.56

Enable 192.168.31.56 success

Enabling component blackbox_exporter

Enabling instance 192.168.31.56

Enable 192.168.31.56 success

+ [ Serial ] - Save meta

+ [ Serial ] - Start new instances

Starting component tikv

Starting instance 192.168.31.56:20160

Start instance 192.168.31.56:20160 success

Starting component node_exporter

Starting instance 192.168.31.56

Start 192.168.31.56 success

Starting component blackbox_exporter

Starting instance 192.168.31.56

Start 192.168.31.56 success

+ Refresh components conifgs

- Generate config pd -> 192.168.31.51:2379 ... Done

- Generate config pd -> 192.168.31.52:2379 ... Done

- Generate config tikv -> 192.168.31.53:20160 ... Done

- Generate config tikv -> 192.168.31.54:20160 ... Done

- Generate config tikv -> 192.168.31.55:20160 ... Done

- Generate config tikv -> 192.168.31.56:20160 ... Done

- Generate config tidb -> 192.168.31.50:4000 ... Done

- Generate config tiflash -> 192.168.31.57:9000 ... Done

- Generate config prometheus -> 192.168.31.57:9090 ... Done

- Generate config grafana -> 192.168.31.57:3000 ... Done

- Generate config alertmanager -> 192.168.31.57:9093 ... Done

+ Reload prometheus and grafana

- Reload prometheus -> 192.168.31.57:9090 ... Done

- Reload grafana -> 192.168.31.57:3000 ... Done

+ [ Serial ] - UpdateTopology: cluster=tidb-lab

Scaled cluster `tidb-lab` out successfully
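The scale-out above is a three-step sequence: check, check with --apply, then scale-out. A small sketch of how those commands could be assembled for scripting follows. The function name scale_out_commands is hypothetical, and the flags are copied from the commands in this article rather than from a full reading of the tiup reference. The script only prints the commands, because each one prompts for an SSH password.

```python
import shlex


def scale_out_commands(cluster: str, topology_file: str, ssh_user: str = "root"):
    """Return the three tiup command lines used in this walkthrough, as argv lists."""
    check = ["tiup", "cluster", "check", cluster, topology_file,
             "--cluster", "--user", ssh_user, "-p"]
    # Same check, but with --apply inserted so failed items are repaired.
    apply_fix = check[:6] + ["--apply"] + check[6:]
    scale_out = ["tiup", "cluster", "scale-out", cluster, topology_file, "-p"]
    return [check, apply_fix, scale_out]


if __name__ == "__main__":
    for argv in scale_out_commands("tidb-lab", "scale-out.yml"):
        print(shlex.join(argv))
```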

Run tiup cluster display tidb-lab to view the result. 192.168.31.56 has been added as a TiKV node.

[root@192_168_31_50_TiDB tidb-community-server-v7.1.0-linux-amd64]# tiup cluster display tidb-lab

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.12.2/tiup-cluster display tidb-lab

Cluster type: tidb

Cluster name: tidb-lab

Cluster version: v7.1.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.31.51:2379/dashboard

Grafana URL: http://192.168.31.57:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.31.57:9093 alertmanager 192.168.31.57 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.31.57:3000 grafana 192.168.31.57 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.31.51:2379 pd 192.168.31.51 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.31.52:2379 pd 192.168.31.52 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.31.57:9090 prometheus 192.168.31.57 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.31.50:4000 tidb 192.168.31.50 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.31.57:9000 tiflash 192.168.31.57 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.31.53:20160 tikv 192.168.31.53 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.31.54:20160 tikv 192.168.31.54 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.31.55:20160 tikv 192.168.31.55 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.31.56:20160 tikv 192.168.31.56 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

Total nodes: 11
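To verify a scale-out without reading the table by eye, the display output can be parsed. This is a rough sketch that assumes the eight-column layout shown above and a single-word status such as Up or Up|L|UI. The function names are hypothetical.

```python
from collections import Counter


def parse_display_rows(text: str):
    """Extract instance rows (ID, role, host, status) from tiup cluster display output."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Instance rows have 8 columns and an ID of the form host:port.
        if len(fields) == 8 and ":" in fields[0] and fields[0].split(":")[0] == fields[2]:
            rows.append({"id": fields[0], "role": fields[1],
                         "host": fields[2], "status": fields[5]})
    return rows


def role_counts(rows):
    """Count instances per role, e.g. how many tikv nodes exist."""
    return Counter(row["role"] for row in rows)


def not_up(rows):
    """Return the IDs of instances whose status does not start with 'Up'."""
    return [row["id"] for row in rows if not row["status"].startswith("Up")]


if __name__ == "__main__":
    sample = """\
ID Role Host Ports OS/Arch Status Data Dir Deploy Dir
192.168.31.51:2379 pd 192.168.31.51 2379/2380 linux/x86_64 Up|L|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.31.56:20160 tikv 192.168.31.56 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
"""
    parsed = parse_display_rows(sample)
    print(role_counts(parsed), not_up(parsed))
```

In practice the text would come from the captured output of tiup cluster display tidb-lab. After this scale-out, the tikv count should be 4.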

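The cluster information page in TiDB Dashboard also shows that TiKV has grown from 3 nodes to 4.

Data migration test: import data from MySQL into TiDB. The environment is described below; the source data currently lives in MySQL.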

| No. | Address | Database type | Database version | Database name | Account | Password |
| -- | ------------- | ----- | ------ | -------- | -------- | --------- |
| 1 | 192.168.31.13 | MySQL | 5.7.40 | jsh_erp | jsh_erp | P@ssw0rd |
| 2 | 192.168.31.50 | TiDB | 7.1.0 | | root | P@ssw0rd |

On the TiDB host, set up the migration tools and configure the environment variables. Dumpling is used to export the data.

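| Use case | Full data export from MySQL/TiDB |
|---|---|
| Upstream | MySQL, TiDB |
| Downstream (output files) | SQL, CSV |
| Main advantages | Supports the new table-filter feature, which makes filtering data easier; supports exporting to Amazon S3 |
| Limitations | If you plan to restore the export into a non-TiDB database, Dumpling is recommended; if you plan to restore it into another TiDB cluster, BR is recommended |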

TiDB Lightning is used to import the data.

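| Use case | Full data import into TiDB |
|---|---|
| Upstream (input source files) | Files exported by Dumpling; Parquet files exported from Amazon Aurora or Apache Hive; CSV files; data read from local disk or Amazon S3 |
| Downstream | TiDB |
| Main advantages | Imports large volumes of data quickly, so specified tables of a TiDB cluster can be initialized fast; supports resuming from checkpoints; supports data filtering |
| Limitations | If you import with physical import mode, the TiDB cluster cannot serve requests normally while TiDB Lightning is running; if you do not want the cluster's service to be affected, follow the hardware requirements and deployment method of TiDB Lightning logical import mode |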

Extract the Dumpling and tidb-lightning packages with the following commands:

[root@192_168_31_50_TiDB tidb-community-toolkit-v7.1.0-linux-amd64]# tar -xvf dumpling-v7.1.0-linux-amd64.tar.gz

[root@192_168_31_50_TiDB tidb-community-toolkit-v7.1.0-linux-amd64]# tar -xvf tidb-lightning-v7.1.0-linux-amd64.tar.gz

Add the tools to PATH so that the dumpling and tidb-lightning commands can be found. The added entry is /tidb-setup/tidb-community-toolkit-v7.1.0-linux-amd64. After the change, the file looks like this:

[root@192_168_31_50_TiDB tidb-community-toolkit-v7.1.0-linux-amd64]# vim /root/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export PATH=/root/.tiup/bin:/tidb-setup/tidb-community-toolkit-v7.1.0-linux-amd64:$PATH
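After reloading the profile, it is worth confirming that both tools really resolve on PATH before starting the export. The following sketch uses only the Python standard library. The function name missing_tools is hypothetical.

```python
import os
import shutil


def missing_tools(tools, search_path=None):
    """Return the tool names that cannot be found as executables on the search path.

    search_path is a string of directories separated by os.pathsep; when it is
    None, the PATH of the current process is used.
    """
    return [tool for tool in tools if shutil.which(tool, path=search_path) is None]


if __name__ == "__main__":
    missing = missing_tools(["dumpling", "tidb-lightning"])
    if missing:
        print("not found on PATH:", ", ".join(missing))
        print("current PATH:", os.environ.get("PATH", ""))
    else:
        print("dumpling and tidb-lightning are both available")
```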

Export directory: /dumpling_erp_db

[root@192_168_31_50_TiDB /]# mkdir dumpling_erp_db

Use the dumpling command to export the jsh_erp database on 192.168.31.13 into the /dumpling_erp_db directory:

[root@192_168_31_50_TiDB /]# dumpling -h192.168.31.13 -ujsh_erp -p'P@ssw0rd' -P3306 --filetype sql -t 2 -o /dumpling_erp_db/ -r 20000 -F 256MiB -B jsh_erp
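The flags in the command above map to the configuration that Dumpling prints in its log: -t is the thread count, -r the number of rows per chunk, -F the maximum size of one output file, -B the database, and -o the output directory. As a sketch, the same command line can be built from named parameters. The function names are hypothetical, and the size helper only covers the KiB, MiB and GiB suffixes.

```python
def parse_size(text: str) -> int:
    """Convert a size such as '256MiB' to bytes (KiB, MiB, GiB suffixes only)."""
    units = {"KiB": 1024, "MiB": 1024 ** 2, "GiB": 1024 ** 3}
    for suffix, factor in units.items():
        if text.endswith(suffix):
            return int(text[:-len(suffix)]) * factor
    return int(text)


def build_dumpling_argv(host, port, user, password, database, output_dir,
                        threads=2, rows=20000, file_size="256MiB", filetype="sql"):
    """Assemble the dumpling command line used in this article as an argv list."""
    return [
        "dumpling",
        "-h", host, "-P", str(port),
        "-u", user, "-p", password,
        "--filetype", filetype,
        "-t", str(threads),      # number of export threads
        "-o", output_dir,        # directory for the exported files
        "-r", str(rows),         # rows per chunk when a table is split
        "-F", file_size,         # maximum size of a single output file
        "-B", database,          # database to export
    ]


if __name__ == "__main__":
    print(build_dumpling_argv("192.168.31.13", 3306, "jsh_erp", "P@ssw0rd",
                              "jsh_erp", "/dumpling_erp_db/"))
    print(parse_size("256MiB"))  # matches the FileSize value shown in the log
```

Passing the result to subprocess.run as a list avoids shell quoting problems with characters in the password.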

Execution result. The final log line confirms that the export succeeded:

[2023/06/05 12:20:42.298 +08:00] [INFO] [main.go:82] ["dump data successfully, dumpling will exit now"]

The full output is as follows:

Release version: v7.1.0

Git commit hash: 635a4362235e8a3c0043542e629532e3c7bb2756

Git branch: heads/refs/tags/v7.1.0

Build timestamp: 2023-05-30 10:51:41Z

Go version: go version go1.20.3 linux/amd64

[2023/06/05 12:20:42.057 +08:00] [INFO] [versions.go:54] ["Welcome to dumpling"] ["Release Version"=v7.1.0] ["Git Commit Hash"=635a4362235e8a3c0043542e629532e3c7bb2756] ["Git Branch"=heads/refs/tags/v7.1.0] ["Build timestamp"="2023-05-30 10:51:41"] ["Go Version"="go version go1.20.3 linux/amd64"]

[2023/06/05 12:20:42.066 +08:00] [WARN] [version.go:322] ["select tidb_version() failed, will fallback to 'select version();'"] [error="Error 1046 (3D000): No database selected"]

[2023/06/05 12:20:42.067 +08:00] [INFO] [version.go:429] ["detect server version"] [type=MySQL] [version=5.7.40-log]

[2023/06/05 12:20:42.067 +08:00] [WARN] [dump.go:1421] ["error when use FLUSH TABLE WITH READ LOCK, fallback to LOCK TABLES"] [error="sql: FLUSH TABLES WITH READ LOCK: Error 1227 (42000): Access denied; you need (at least one of) the RELOAD privilege(s) for this operation"] [errorVerbose="Error 1227 (42000): Access denied; you need (at least one of) the RELOAD privilege(s) for this operation\nsql: FLUSH TABLES WITH READ LOCK\ngithub.com/pingcap/tidb/dumpling/export.FlushTableWithReadLock\n\tgithub.com/pingcap/tidb/dumpling/export/sql.go:619\ngithub.com/pingcap/tidb/dumpling/export.resolveAutoConsistency\n\tgithub.com/pingcap/tidb/dumpling/export/dump.go:1416\ngithub.com/pingcap/tidb/dumpling/export.runSteps\n\tgithub.com/pingcap/tidb/dumpling/export/dump.go:1290\ngithub.com/pingcap/tidb/dumpling/export.NewDumper\n\tgithub.com/pingcap/tidb/dumpling/export/dump.go:124\nmain.main\n\t./main.go:70\nruntime.main\n\truntime/proc.go:250\nruntime.goexit\n\truntime/asm_amd64.s:1598"]

[2023/06/05 12:20:42.074 +08:00] [INFO] [dump.go:151] ["begin to run Dump"] [conf="{\"s3\":{\"endpoint\":\"\",\"region\":\"\",\"storage-class\":\"\",\"sse\":\"\",\"sse-kms-key-id\":\"\",\"acl\":\"\",\"access-key\":\"\",\"secret-access-key\":\"\",\"session-token\":\"\",\"provider\":\"\",\"force-path-style\":true,\"use-accelerate-endpoint\":false,\"role-arn\":\"\",\"external-id\":\"\",\"object-lock-enabled\":false},\"gcs\":{\"endpoint\":\"\",\"storage-class\":\"\",\"predefined-acl\":\"\",\"credentials-file\":\"\"},\"azblob\":{\"endpoint\":\"\",\"account-name\":\"\",\"account-key\":\"\",\"access-tier\":\"\"},\"AllowCleartextPasswords\":false,\"SortByPk\":true,\"NoViews\":true,\"NoSequences\":true,\"NoHeader\":false,\"NoSchemas\":false,\"NoData\":false,\"CompleteInsert\":false,\"TransactionalConsistency\":true,\"EscapeBackslash\":true,\"DumpEmptyDatabase\":true,\"PosAfterConnect\":false,\"CompressType\":0,\"Host\":\"192.168.31.13\",\"Port\":3306,\"Threads\":2,\"User\":\"jsh_erp\",\"Security\":{\"CAPath\":\"\",\"CertPath\":\"\",\"KeyPath\":\"\"},\"LogLevel\":\"info\",\"LogFile\":\"\",\"LogFormat\":\"text\",\"OutputDirPath\":\"/dumpling_erp_db/\",\"StatusAddr\":\":8281\",\"Snapshot\":\"\",\"Consistency\":\"lock\",\"CsvNullValue\":\"\\\\N\",\"SQL\":\"\",\"CsvSeparator\":\",\",\"CsvDelimiter\":\"\\\"\",\"Databases\":[\"jsh_erp\"],\"Where\":\"\",\"FileType\":\"sql\",\"ServerInfo\":{\"ServerType\":1,\"ServerVersion\":\"5.7.40-log\",\"HasTiKV\":false},\"Rows\":20000,\"ReadTimeout\":900000000000,\"TiDBMemQuotaQuery\":0,\"FileSize\":268435456,\"StatementSize\":1000000,\"SessionParams\":{},\"Tables\":{},\"CollationCompatible\":\"loose\",\"IOTotalBytes\":null,\"Net\":\"\"}"]

[2023/06/05 12:20:42.097 +08:00] [INFO] [dump.go:204] ["get global metadata failed"] [error="sql: SHOW MASTER STATUS: sql: SHOW MASTER STATUS, args: []: Error 1227 (42000): Access denied; you need (at least one of) the SUPER, REPLICATION CLIENT privilege(s) for this operation"]

[2023/06/05 12:20:42.109 +08:00] [INFO] [dump.go:275] ["All the dumping transactions have started. Start to unlock tables"]

[2023/06/05 12:20:42.115 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_account] [estimateCount=4]

[2023/06/05 12:20:42.115 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_account]

[2023/06/05 12:20:42.126 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_account_head] [estimateCount=6]

[2023/06/05 12:20:42.127 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=6] [conf.rows=20000] [database=jsh_erp] [table=jsh_account_head]

[2023/06/05 12:20:42.132 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_account_item] [estimateCount=6]

[2023/06/05 12:20:42.132 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=6] [conf.rows=20000] [database=jsh_erp] [table=jsh_account_item]

[2023/06/05 12:20:42.136 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_depot] [estimateCount=3]

[2023/06/05 12:20:42.136 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=3] [conf.rows=20000] [database=jsh_erp] [table=jsh_depot]

[2023/06/05 12:20:42.144 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_depot_head] [estimateCount=108]

[2023/06/05 12:20:42.144 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=108] [conf.rows=20000] [database=jsh_erp] [table=jsh_depot_head]

[2023/06/05 12:20:42.150 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_depot_item] [estimateCount=2232]

[2023/06/05 12:20:42.150 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=2232] [conf.rows=20000] [database=jsh_erp] [table=jsh_depot_item]

[2023/06/05 12:20:42.154 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_function] [estimateCount=62]

[2023/06/05 12:20:42.154 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=62] [conf.rows=20000] [database=jsh_erp] [table=jsh_function]

[2023/06/05 12:20:42.158 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_in_out_item] [estimateCount=3]

[2023/06/05 12:20:42.158 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=3] [conf.rows=20000] [database=jsh_erp] [table=jsh_in_out_item]

[2023/06/05 12:20:42.162 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_log] [estimateCount=823]

[2023/06/05 12:20:42.162 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=823] [conf.rows=20000] [database=jsh_erp] [table=jsh_log]

[2023/06/05 12:20:42.167 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material] [estimateCount=415]

[2023/06/05 12:20:42.167 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=415] [conf.rows=20000] [database=jsh_erp] [table=jsh_material]

[2023/06/05 12:20:42.172 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_attribute] [estimateCount=5]

[2023/06/05 12:20:42.172 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=5] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_attribute]

[2023/06/05 12:20:42.176 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_category] [estimateCount=8]

[2023/06/05 12:20:42.176 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=8] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_category]

[2023/06/05 12:20:42.180 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_current_stock] [estimateCount=418]

[2023/06/05 12:20:42.180 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=418] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_current_stock]

[2023/06/05 12:20:42.184 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_extend] [estimateCount=492]

[2023/06/05 12:20:42.185 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=492] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_extend]

[2023/06/05 12:20:42.203 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_initial_stock] [estimateCount=47]

[2023/06/05 12:20:42.203 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=47] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_initial_stock]

[2023/06/05 12:20:42.224 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_material_property] [estimateCount=4]

[2023/06/05 12:20:42.224 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_material_property]

[2023/06/05 12:20:42.233 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_msg] [estimateCount=1]

[2023/06/05 12:20:42.233 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=1] [conf.rows=20000] [database=jsh_erp] [table=jsh_msg]

[2023/06/05 12:20:42.237 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_orga_user_rel] [estimateCount=8]

[2023/06/05 12:20:42.237 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=8] [conf.rows=20000] [database=jsh_erp] [table=jsh_orga_user_rel]

[2023/06/05 12:20:42.242 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_organization] [estimateCount=4]

[2023/06/05 12:20:42.242 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_organization]

[2023/06/05 12:20:42.246 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_person] [estimateCount=4]

[2023/06/05 12:20:42.246 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_person]

[2023/06/05 12:20:42.250 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_platform_config] [estimateCount=8]

[2023/06/05 12:20:42.250 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=8] [conf.rows=20000] [database=jsh_erp] [table=jsh_platform_config]

[2023/06/05 12:20:42.256 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_role] [estimateCount=4]

[2023/06/05 12:20:42.256 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_role]

[2023/06/05 12:20:42.260 +08:00] [INFO] [dump.go:755] ["fallback to sequential dump due to no proper field. This won't influence the whole dump process"] [database=jsh_erp] [table=jsh_sequence] []

[2023/06/05 12:20:42.265 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_serial_number] [estimateCount=3]

[2023/06/05 12:20:42.266 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=3] [conf.rows=20000] [database=jsh_erp] [table=jsh_serial_number]

[2023/06/05 12:20:42.271 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_supplier] [estimateCount=31]

[2023/06/05 12:20:42.272 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=31] [conf.rows=20000] [database=jsh_erp] [table=jsh_supplier]

[2023/06/05 12:20:42.277 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_system_config] [estimateCount=1]

[2023/06/05 12:20:42.277 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=1] [conf.rows=20000] [database=jsh_erp] [table=jsh_system_config]

[2023/06/05 12:20:42.281 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_tenant] [estimateCount=1]

[2023/06/05 12:20:42.282 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=1] [conf.rows=20000] [database=jsh_erp] [table=jsh_tenant]

[2023/06/05 12:20:42.287 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_unit] [estimateCount=4]

[2023/06/05 12:20:42.287 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_unit]

[2023/06/05 12:20:42.291 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_user] [estimateCount=4]

[2023/06/05 12:20:42.291 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=4] [conf.rows=20000] [database=jsh_erp] [table=jsh_user]

[2023/06/05 12:20:42.296 +08:00] [INFO] [dump.go:761] ["get estimated rows count"] [database=jsh_erp] [table=jsh_user_business] [estimateCount=41]

[2023/06/05 12:20:42.296 +08:00] [INFO] [dump.go:767] ["fallback to sequential dump due to estimate count < rows. This won't influence the whole dump process"] ["estimate count"=41] [conf.rows=20000] [database=jsh_erp] [table=jsh_user_business]

[2023/06/05 12:20:42.297 +08:00] [INFO] [collector.go:264] ["backup success summary"] [total-ranges=61] [ranges-succeed=61] [ranges-failed=0] [total-take=187.802008ms] [total-rows=5165] [total-kv-size=666.7kB] [average-speed=3.55MB/s]

[2023/06/05 12:20:42.298 +08:00] [INFO] [main.go:82] ["dump data successfully, dumpling will exit now"]
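The "backup success summary" line near the end of the log carries the numbers that matter: 61 of 61 ranges succeeded, none failed, and 5165 rows were exported. A minimal sketch for pulling those key=value fields out of a log line follows. The function names are hypothetical, and the regular expression only targets the bracketed [key=value] form seen in this log.

```python
import re

# Matches bracketed fields such as [total-rows=5165]; quoted messages are skipped.
FIELD_RE = re.compile(r"\[([\w-]+)=([^\]]*)\]")


def parse_log_fields(line: str) -> dict:
    """Return the [key=value] fields of one log line as a dict of strings."""
    return dict(FIELD_RE.findall(line))


def dump_succeeded(summary_line: str) -> bool:
    """True when no range failed and every range succeeded."""
    fields = parse_log_fields(summary_line)
    return (fields.get("ranges-failed") == "0"
            and fields.get("total-ranges") is not None
            and fields.get("total-ranges") == fields.get("ranges-succeed"))


if __name__ == "__main__":
    summary = ('[2023/06/05 12:20:42.297 +08:00] [INFO] [collector.go:264] '
               '["backup success summary"] [total-ranges=61] [ranges-succeed=61] '
               '[ranges-failed=0] [total-take=187.802008ms] [total-rows=5165] '
               '[total-kv-size=666.7kB] [average-speed=3.55MB/s]')
    print(parse_log_fields(summary)["total-rows"], dump_succeeded(summary))
```

The total-rows value is a useful baseline to compare against row counts in TiDB once the import has finished.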

Enter the /dumpling_erp_db directory to view the exported files:

[root@192_168_31_50_TiDB /]# cd /dumpling_erp_db/

[root@192_168_31_50_TiDB dumpling_erp_db]# ls

jsh_erp-schema-create.sql jsh_erp.jsh_material_attribute-schema.sql jsh_erp.jsh_platform_config.0000000000000.sql

jsh_erp.jsh_account-schema.sql jsh_erp.jsh_material_attribute.0000000000000.sql jsh_erp.jsh_role-schema.sql

jsh_erp.jsh_account.0000000000000.sql jsh_erp.jsh_material_category-schema.sql jsh_erp.jsh_role.0000000000000.sql

jsh_erp.jsh_account_head-schema.sql jsh_erp.jsh_material_category.0000000000000.sql jsh_erp.jsh_sequence-schema.sql

jsh_erp.jsh_account_head.0000000000000.sql jsh_erp.jsh_material_current_stock-schema.sql jsh_erp.jsh_sequence.0000000000000.sql

jsh_erp.jsh_account_item-schema.sql jsh_erp.jsh_material_current_stock.0000000000000.sql jsh_erp.jsh_serial_number-schema.sql

jsh_erp.jsh_account_item.0000000000000.sql jsh_erp.jsh_material_extend-schema.sql jsh_erp.jsh_serial_number.0000000000000.sql

jsh_erp.jsh_depot-schema.sql jsh_erp.jsh_material_extend.0000000000000.sql jsh_erp.jsh_supplier-schema.sql

jsh_erp.jsh_depot.0000000000000.sql jsh_erp.jsh_material_initial_stock-schema.sql jsh_erp.jsh_supplier.0000000000000.sql

jsh_erp.jsh_depot_head-schema.sql jsh_erp.jsh_material_initial_stock.0000000000000.sql jsh_erp.jsh_system_config-schema.sql

jsh_erp.jsh_depot_head.0000000000000.sql jsh_erp.jsh_material_property-schema.sql jsh_erp.jsh_system_config.0000000000000.sql

jsh_erp.jsh_depot_item-schema.sql jsh_erp.jsh_material_property.0000000000000.sql jsh_erp.jsh_tenant-schema.sql

jsh_erp.jsh_depot_item.0000000000000.sql jsh_erp.jsh_msg-schema.sql jsh_erp.jsh_tenant.0000000000000.sql

jsh_erp.jsh_function-schema.sql jsh_erp.jsh_msg.0000000000000.sql jsh_erp.jsh_unit-schema.sql

jsh_erp.jsh_function.0000000000000.sql jsh_erp.jsh_orga_user_rel-schema.sql jsh_erp.jsh_unit.0000000000000.sql

jsh_erp.jsh_in_out_item-schema.sql jsh_erp.jsh_orga_user_rel.0000000000000.sql jsh_erp.jsh_user-schema.sql

jsh_erp.jsh_in_out_item.0000000000000.sql jsh_erp.jsh_organization-schema.sql jsh_erp.jsh_user.0000000000000.sql

jsh_erp.jsh_log-schema.sql jsh_erp.jsh_organization.0000000000000.sql jsh_erp.jsh_user_business-schema.sql

jsh_erp.jsh_log.0000000000000.sql jsh_erp.jsh_person-schema.sql jsh_erp.jsh_user_business.0000000000000.sql

jsh_erp.jsh_material-schema.sql jsh_erp.jsh_person.0000000000000.sql metadata

jsh_erp.jsh_material.0000000000000.sql jsh_erp.jsh_platform_config-schema.sql
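The listing follows a regular naming pattern: jsh_erp-schema-create.sql holds the database definition, each jsh_erp.<table>-schema.sql holds a table definition, each jsh_erp.<table>.0000000000000.sql holds table data, and metadata records export information. As a rough sketch, the pattern can be used to check that every table with a schema file also has a data file. The function name `tables_missing_data` and the regular expressions are assumptions derived from the listing above, not a Dumpling API, and a table without a data file is not necessarily an error (it may simply be empty).

```python
import re

# <db>.<table>-schema.sql  -> table definition
TABLE_SCHEMA_RE = re.compile(r"^(?P<db>[^.]+)\.(?P<table>.+)-schema\.sql$")
# <db>.<table>.<chunk index>.sql  -> table data
TABLE_DATA_RE = re.compile(r"^(?P<db>[^.]+)\.(?P<table>.+)\.(?P<chunk>\d+)\.sql$")


def tables_missing_data(file_names):
    """Return tables that have a schema file but no data file, sorted."""
    with_schema, with_data = set(), set()
    for name in file_names:
        data_match = TABLE_DATA_RE.match(name)
        schema_match = TABLE_SCHEMA_RE.match(name)
        if data_match:
            with_data.add(data_match["table"])
        elif schema_match:
            with_schema.add(schema_match["table"])
        # Anything else (metadata, <db>-schema-create.sql) is ignored.
    return sorted(with_schema - with_data)


# A few names taken from the listing, plus one schema-only table
# (jsh_example is made up) to show what a gap looks like.
sample = [
    "jsh_erp-schema-create.sql",
    "jsh_erp.jsh_account-schema.sql",
    "jsh_erp.jsh_account.0000000000000.sql",
    "jsh_erp.jsh_user_business-schema.sql",
    "jsh_erp.jsh_user_business.0000000000000.sql",
    "jsh_erp.jsh_example-schema.sql",
    "metadata",
]
print(tables_missing_data(sample))  # prints: ['jsh_example']
```

To run it against the real export, pass the file names from the directory, for example `os.listdir("/dumpling_erp_db")`.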

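Next, import the data into TiDB. This test uses TiDB Lightning's logical import mode (backend = "tidb") because the data volume is small, less than 50 GB. First create the TiDB Lightning configuration file, tidb-lightning.toml, with the following contents: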

[lightning]

level = "info"

file = "tidb-lightning.log"

max-size = 128 # MB

max-days = 28

max-backups = 14

check-requirements = true

[mydumper]

data-source-dir = "/dumpling_erp_db"

[tikv-importer]

backend = "tidb"

on-duplicate = "replace"

[tidb]

host = "192.168.31.50"

port = 4000

user = "root"

password = "P@ssw0rd"

log-level = "error"

Run the import command: tidb-lightning -config tidb-lightning.toml > tidb-lightning.log

[root@192_168_31_50_TiDB tidb-setup]# tidb-lightning -config tidb-lightning.toml > tidb-lightning.log

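After the import succeeds, connect to TiDB with the mysql client to verify the result. In the session below, the second show databases output lists the new jsh_erp database: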

tom@Toms-MBP ~ % mysql -h 192.168.31.50 -P 4000 -u root -pP@ssw0rd

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 525

Server version: 5.7.25-TiDB-v7.1.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| INFORMATION_SCHEMA |

| METRICS_SCHEMA |

| PERFORMANCE_SCHEMA |

| mysql |

| test |

+--------------------+

5 rows in set (0.00 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| INFORMATION_SCHEMA |

| METRICS_SCHEMA |

| PERFORMANCE_SCHEMA |

| jsh_erp |

| mysql |

| test |

+--------------------+

6 rows in set (0.00 sec)

mysql> use jsh_erp

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+----------------------------+

| Tables_in_jsh_erp |

+----------------------------+

| jsh_account |

| jsh_account_head |

| jsh_account_item |

| jsh_depot |

| jsh_depot_head |

| jsh_depot_item |

| jsh_function |

| jsh_in_out_item |

| jsh_log |

| jsh_material |

| jsh_material_attribute |

| jsh_material_category |

| jsh_material_current_stock |

| jsh_material_extend |

| jsh_material_initial_stock |

| jsh_material_property |

| jsh_msg |

| jsh_orga_user_rel |

| jsh_organization |

| jsh_person |

| jsh_platform_config |

| jsh_role |

| jsh_sequence |

| jsh_serial_number |

| jsh_supplier |

| jsh_system_config |

| jsh_tenant |

| jsh_unit |

| jsh_user |

| jsh_user_business |

+----------------------------+

30 rows in set (0.01 sec)

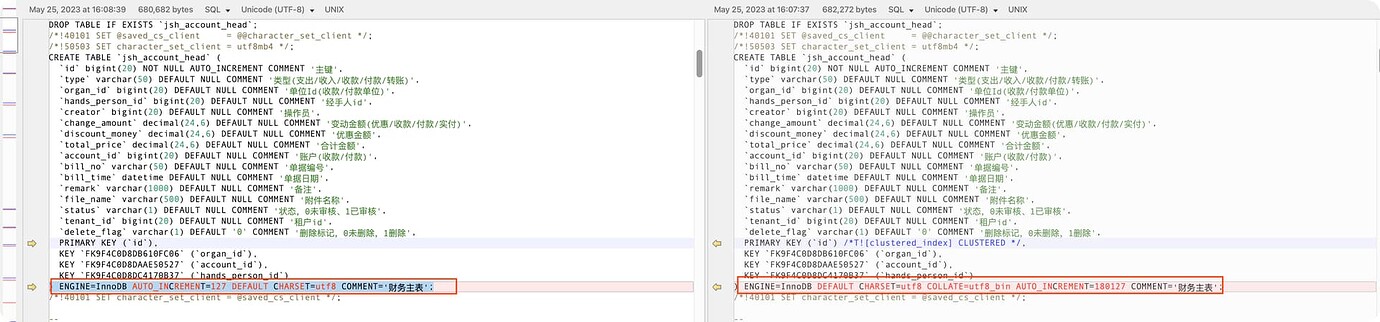
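Seeing all 30 tables confirms the schema was created, but not that every row arrived. One simple additional check, sketched below, is to count the rows of each table on both the source database and TiDB and compare the results. If nothing was written in between, the TiDB counts should also add up to the total-rows=5165 value from the Dumpling summary. The helper `build_row_count_query` is hypothetical. It only builds the SQL text, which can then be pasted into the mysql client on each side, and it assumes the table names are trusted.

```python
def build_row_count_query(database, tables):
    """Build one UNION ALL query returning (table_name, row_count) per table."""
    parts = [
        f"SELECT '{table}' AS table_name, COUNT(*) AS row_count "
        f"FROM `{database}`.`{table}`"
        for table in tables
    ]
    return "\nUNION ALL\n".join(parts) + ";"


# Two of the tables from the show tables output above;
# extend the list to all 30 tables for a full check.
query = build_row_count_query("jsh_erp", ["jsh_user", "jsh_role"])
print(query)
```

Row counts only catch missing or duplicated rows. They do not detect changed column values, which is why a content-level comparison is still useful.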

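The database was imported successfully. Next, I verify that the original jsh_erp database and the jsh_erp database in TiDB are consistent. Because the database is small, this test uses the Beyond Compare tool for the comparison (both the original database and the new database are exported before comparing). The comparison result is shown below: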

![Linux学习[16]bash学习深入2---别名设置alias---history指令---环境配置相关](https://img-blog.csdnimg.cn/6de5eb4bf67f49cca374c395999c67f2.png)
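The same export-and-compare idea can be scripted instead of done in a GUI tool. The following is a minimal sketch, not the method used in this test: it hashes every .sql file in two export directories and reports the files that exist on only one side or whose contents differ. The function names and directory paths are hypothetical. It also assumes both exports were produced by the same tool with the same options, since differences in row order or formatting would otherwise show up as mismatches.

```python
import hashlib
from pathlib import Path


def file_digests(directory):
    """Map each .sql file name in `directory` to the SHA-256 of its contents."""
    return {
        path.name: hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(Path(directory).glob("*.sql"))
    }


def diff_digests(source, target):
    """Compare two name->digest maps.

    Returns (only_in_source, only_in_target, differing) as sorted name lists.
    """
    only_in_source = sorted(source.keys() - target.keys())
    only_in_target = sorted(target.keys() - source.keys())
    differing = sorted(
        name for name in source.keys() & target.keys()
        if source[name] != target[name]
    )
    return only_in_source, only_in_target, differing


def compare_dumps(source_dir, target_dir):
    """Hash both export directories and return their differences."""
    return diff_digests(file_digests(source_dir), file_digests(target_dir))
```

For example, `compare_dumps("/dumpling_erp_db", "/dumpling_erp_db_tidb")` returns three empty lists when the two exports are byte-for-byte identical; the second path is only a placeholder for wherever the TiDB-side export is written.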