Table of contents

- How to compile on Linux (using cmake)

- YOLO v4 test

- Network structure

- route and shortcut

- Neck

- Head

- Loss

- References

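This is the third article in the YOLO series. YOLO v4 combines a large number of detection tricks, so to understand it more quickly you may want to read the previous two articles first.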

[YOLO series] YOLO v3 (network structure diagram + code)

[YOLO series] YOLO v4-v5 prerequisites

How to compile on Linux (using cmake)

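Clone the darknet source and build it with cmake. cmake must be version 3.18 or newer. Mine was originally 3.14, which is too old, so I downloaded a newer cmake release and built it from source to upgrade. My basic configuration is listed below (finally got my hands on a GPU, so happy):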

| Component | Version |
|---|---|
| cuda | 11.6 |
| cudnn | 8.4.0 |
| opencv | 4.2.0 |
| cmake | 3.25.3 |

With the configuration above, the following commands built without errors.

git clone https://github.com/AlexeyAB/darknet

cd darknet

mkdir build_release

cd build_release

cmake ..

cmake --build . --target install --parallel 8

After a successful build, the darknet executable and the libdarknet.so shared library appear in the darknet directory.

YOLO v4 test

Download the pretrained model and the cfg file:

wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

wget https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg

YOLO v4 test command:

./darknet detector test ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights data/dog.jpg -i 0 -thresh 0.25

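With the YOLO v4 pretrained weights and cfg file downloaded, run detection with the darknet executable built above. The test output and the result image are shown below:

Compared with YOLO v3, YOLO v4 predicts one extra object, a pottedplant, with a fairly low confidence of 33%. The prediction is also wrong: the object in the box is a trash can, not a potted plant. The leaves on top of the trash can probably confused the model.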
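The -thresh 0.25 flag in the test command is what lets the 33% pottedplant box through. Below is a minimal sketch of that filtering step, assuming detections are (label, confidence) pairs. The helper name filter_detections is hypothetical, and every score except the 0.33 quoted above is a placeholder for illustration, not darknet's actual output or API.

```python
def filter_detections(detections, thresh):
    """Keep only detections whose confidence is at least `thresh`."""
    return [(label, conf) for label, conf in detections if conf >= thresh]


# Illustrative values only: 0.33 comes from the text, the rest are placeholders.
detections = [
    ("dog", 0.98),
    ("bicycle", 0.92),
    ("truck", 0.92),
    ("pottedplant", 0.33),
]

kept_default = filter_detections(detections, thresh=0.25)  # same threshold as the command
kept_strict = filter_detections(detections, thresh=0.5)    # a stricter, assumed threshold

print([label for label, _ in kept_default])
print([label for label, _ in kept_strict])
```

With the stricter threshold the low-confidence false positive disappears, at the cost of possibly dropping genuine but uncertain detections.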

Network structure

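Download the YOLO v4 cfg file and the pretrained .weights file from the AlexeyAB/darknet/tree/yolov4 project. darknet prints the YOLO v4 layer information when it runs a test, but I still wanted to inspect the network structure visually with Netron. In the end I used demo_darknet2onnx.py from Tianxiaomo/pytorch-YOLOv4 to convert the .weights file into an ONNX file, which Netron can then display clearly. The command is: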

python demo_darknet2onnx.py cfg\yolov4.cfg data\coco.names yolov4.weights data\dog.jpg 1

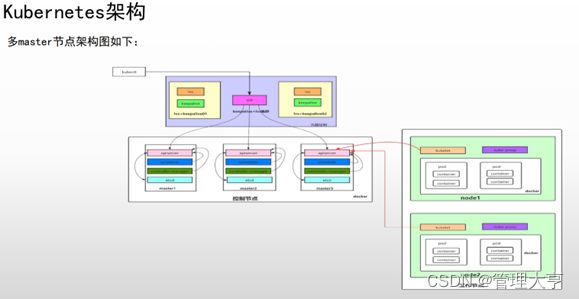

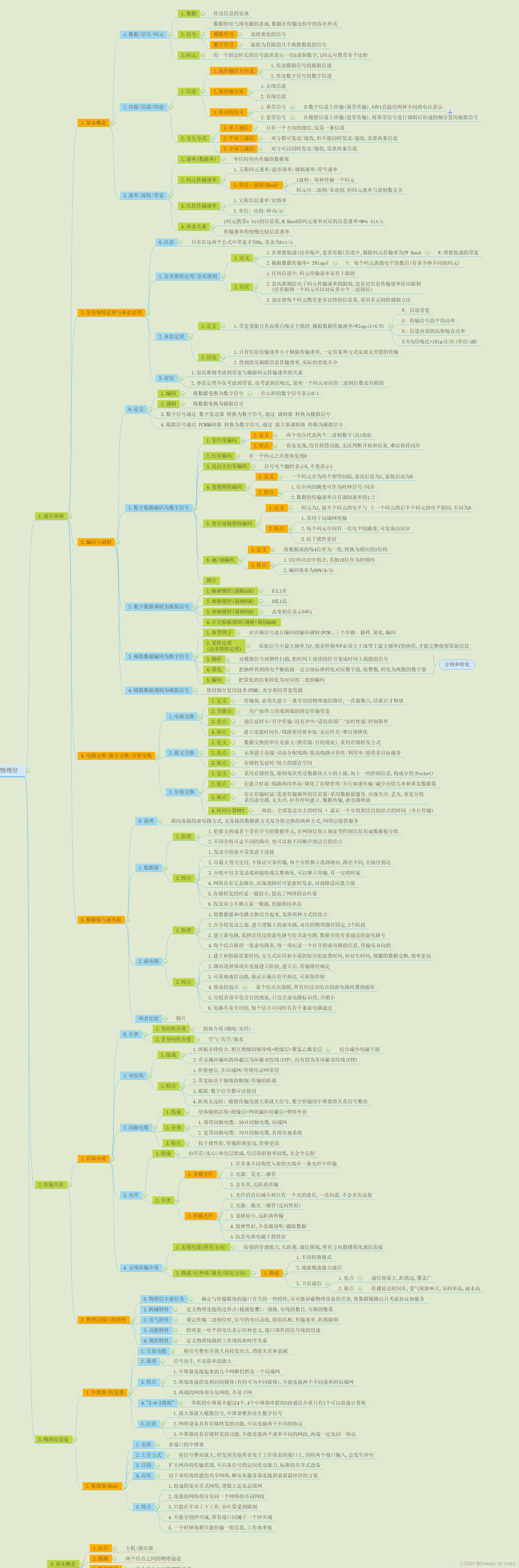

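YOLO v4 cleanly divides the network into three parts: backbone, Neck, and Head. The backbone is CSPDarknet53, the Neck consists of SPP and PAN, and the Head is the same as in YOLO v3. In the figure, B marks the end of the backbone and N marks the end of the Neck. Because YOLO v4 uses multi-scale prediction just like YOLO v3, the Neck also has three outputs.

The number under each component is its layer index in the YOLO v4 network. With these indices in hand, the Neck and Head code below is easier to follow.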

route and shortcut

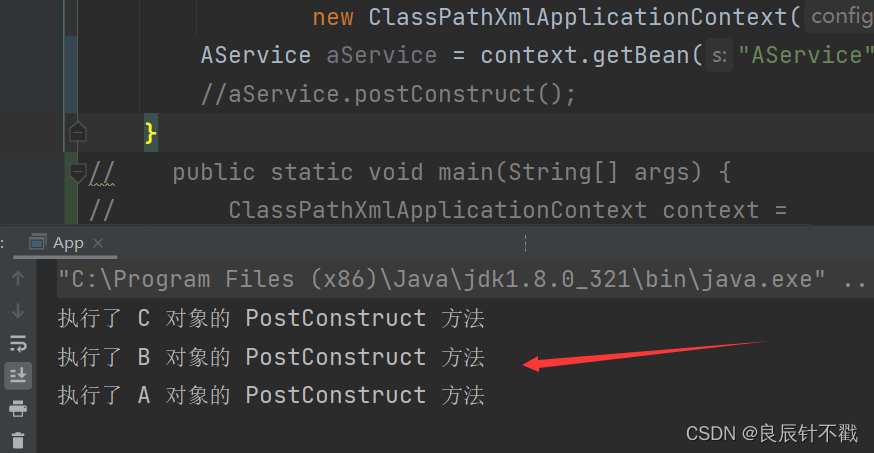

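The YOLO v4 cfg contains route and shortcut layers. A shortcut layer is simply a residual addition, so I won't go into it here. The figure below lists the first few layers of YOLO v4, including route and shortcut layers.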

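The next figure is a graphical view of that layer listing. When a route layer takes a single input, as in layer 3 (route 1), it forwards the output of conv layer 1, so that output feeds both conv layer 2 and conv layer 4. In other words, conv layer 1 is followed by two branches. When a route layer takes two inputs, as in layer 9 (route 8 2), the outputs of conv layer 8 and conv layer 2 are concatenated along the channel dimension, merging the two branches back together.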
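In the cfg file, route inputs are usually written as offsets relative to the current layer, while darknet's printed layer listing shows the resolved absolute indices (route 1, route 8 2). The sketch below illustrates that resolution, assuming the cfg offsets for layers 3 and 9 are -2 and -1, -7. The helpers resolve_layers and route_channels are hypothetical, and the channel counts are assumed for illustration.

```python
def resolve_layers(current_index, refs):
    """Turn cfg-style layer references into absolute layer indices.

    Negative values are offsets relative to the current layer;
    non-negative values are already absolute.
    """
    return [current_index + r if r < 0 else r for r in refs]


def route_channels(current_index, refs, out_channels):
    """Channels after a route: inputs are concatenated on the channel axis."""
    return sum(out_channels[i] for i in resolve_layers(current_index, refs))


# Output channels of the layers discussed above (assumed for illustration).
out_channels = {1: 64, 2: 64, 8: 64}

print(resolve_layers(3, [-2]))      # [1]    -> single input, branch from layer 1
print(resolve_layers(9, [-1, -7]))  # [8, 2] -> two inputs, merge of both branches
print(route_channels(9, [-1, -7], out_channels))  # 128
```

A single-input route only redirects the data flow, so its channel count is unchanged, while a multi-input route sums the channel counts of its inputs.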

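As the figure below shows, a route layer with four inputs forms the SPP block of YOLO v4, which is the same as the SPP block in YOLO v3. The SPP module in the YOLO series only borrows the idea of spatial pyramid pooling: it fuses global and local features, which enriches the expressiveness of the final feature map and thereby improves mAP.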
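The four-input concatenation only works because every pooling branch keeps the spatial size of its input. The sketch below checks this with the standard pooling output-size formula, using the kernel sizes 5, 9 and 13 with stride 1 and padding k // 2 that appear in the Neck code later in this article. The 19x19 feature map (a 608x608 input downsampled by 32) is an assumption for illustration, and pool_output_size is a hypothetical helper.

```python
def pool_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a max-pool layer (floor mode, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


feature_size = 19  # assumed: 608x608 input at stride 32
channels = 512     # channels entering the SPP block (output of conv3 in the Neck)

branch_sizes = [
    pool_output_size(feature_size, k, stride=1, padding=k // 2)
    for k in (5, 9, 13)
]
spp_channels = channels * 4  # three pooled branches plus the unpooled input

print(branch_sizes)  # [19, 19, 19]
print(spp_channels)  # 2048
```

The 2048 channels after concatenation match the input width of the first convolution after the SPP block in the Neck code.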

Neck

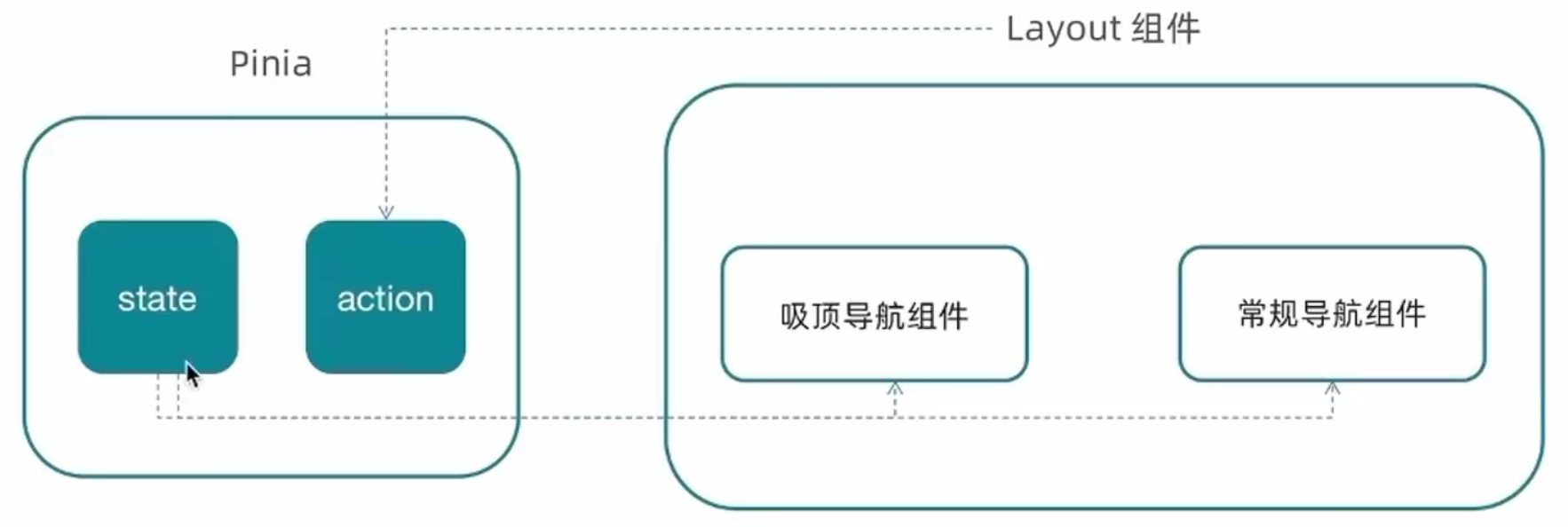

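The YOLO v4 Neck is made up of two components, SPP and PAN. SPP was described above, so it is not repeated here. PANet adds a bottom-up path that passes low-level features up to the high-level features. For details, see [YOLO series] YOLO v4-v5 prerequisites.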

    import torch
    import torch.nn as nn

    # Conv_Bn_Activation and Upsample are helper modules from Tianxiaomo/pytorch-YOLOv4.
    # "R" comments mark route layers; trailing numbers are YOLO v4 layer indices.


    class Neck(nn.Module):
        def __init__(self, inference=False):
            super().__init__()
            self.inference = inference

            self.conv1 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.conv2 = Conv_Bn_Activation(512, 1024, 3, 1, 'leaky')
            # SPP
            self.conv3 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.maxpool1 = nn.MaxPool2d(kernel_size=5, stride=1, padding=5 // 2)
            self.maxpool2 = nn.MaxPool2d(kernel_size=9, stride=1, padding=9 // 2)
            self.maxpool3 = nn.MaxPool2d(kernel_size=13, stride=1, padding=13 // 2)
            # R -1 -3 -5 -6
            # SPP end
            self.conv4 = Conv_Bn_Activation(2048, 512, 1, 1, 'leaky')
            self.conv5 = Conv_Bn_Activation(512, 1024, 3, 1, 'leaky')
            self.conv6 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.conv7 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            # UP
            self.upsample1 = Upsample()
            # R 85
            self.conv8 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            # R -1 -3
            self.conv9 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv10 = Conv_Bn_Activation(256, 512, 3, 1, 'leaky')
            self.conv11 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv12 = Conv_Bn_Activation(256, 512, 3, 1, 'leaky')
            self.conv13 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv14 = Conv_Bn_Activation(256, 128, 1, 1, 'leaky')
            # UP
            self.upsample2 = Upsample()
            # R 54
            self.conv15 = Conv_Bn_Activation(256, 128, 1, 1, 'leaky')
            # R -1 -3
            self.conv16 = Conv_Bn_Activation(256, 128, 1, 1, 'leaky')
            self.conv17 = Conv_Bn_Activation(128, 256, 3, 1, 'leaky')
            self.conv18 = Conv_Bn_Activation(256, 128, 1, 1, 'leaky')
            self.conv19 = Conv_Bn_Activation(128, 256, 3, 1, 'leaky')
            self.conv20 = Conv_Bn_Activation(256, 128, 1, 1, 'leaky')

        def forward(self, input, downsample4, downsample3, inference=False):
            x1 = self.conv1(input)  # 105
            x2 = self.conv2(x1)  # 106
            # SPP 107-113
            x3 = self.conv3(x2)  # 107
            m1 = self.maxpool1(x3)
            m2 = self.maxpool2(x3)
            m3 = self.maxpool3(x3)
            spp = torch.cat([m3, m2, m1, x3], dim=1)  # 113
            # SPP end
            x4 = self.conv4(spp)  # 114
            x5 = self.conv5(x4)  # 115
            x6 = self.conv6(x5)  # 116
            x7 = self.conv7(x6)  # 117
            # UP
            up = self.upsample1(x7, downsample4.size(), self.inference)  # 118 upsample
            # R 85
            x8 = self.conv8(downsample4)  # 120
            # R 120 118
            x8 = torch.cat([x8, up], dim=1)  # 121

            x9 = self.conv9(x8)  # 122
            x10 = self.conv10(x9)  # 123
            x11 = self.conv11(x10)  # 124
            x12 = self.conv12(x11)  # 125
            x13 = self.conv13(x12)  # 126
            x14 = self.conv14(x13)  # 127
            # UP
            up = self.upsample2(x14, downsample3.size(), self.inference)  # 128
            # R 54
            x15 = self.conv15(downsample3)  # 130
            # R 130 128
            x15 = torch.cat([x15, up], dim=1)  # 131

            x16 = self.conv16(x15)  # 132
            x17 = self.conv17(x16)  # 133
            x18 = self.conv18(x17)  # 134
            x19 = self.conv19(x18)  # 135
            x20 = self.conv20(x19)  # 136
            return x20, x13, x6  # 136, 126, 116
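To see why conv9 and conv16 in the Neck code above both take twice as many input channels as the preceding 1x1 convolutions produce, it helps to trace shapes through the two upsample-and-concatenate steps. The sketch below does this with plain tuples of (channels, height, width), assuming a 608x608 input so that the three backbone scales are 19, 38 and 76. The helpers upsample, conv1x1 and concat are hypothetical stand-ins for the real modules.

```python
def upsample(shape, factor=2):
    """Nearest-neighbour style upsampling: channels unchanged, size doubled."""
    c, h, w = shape
    return (c, h * factor, w * factor)


def conv1x1(shape, out_channels):
    """A 1x1 stride-1 convolution only changes the channel count."""
    _, h, w = shape
    return (out_channels, h, w)


def concat(a, b):
    """Channel-wise concatenation; spatial sizes must already agree."""
    assert a[1:] == b[1:], "spatial sizes must match before concatenation"
    return (a[0] + b[0], a[1], a[2])


# Assumed shapes for a 608x608 input.
x7 = (256, 19, 19)           # layer 117
downsample4 = (512, 38, 38)  # backbone layer 85
downsample3 = (256, 76, 76)  # backbone layer 54

merged_38 = concat(conv1x1(downsample4, 256), upsample(x7))   # layers 120, 118 -> 121
x14 = conv1x1(merged_38, 128)                                  # shape after conv9..conv14
merged_76 = concat(conv1x1(downsample3, 128), upsample(x14))  # layers 130, 128 -> 131

print(merged_38)  # (512, 38, 38)
print(merged_76)  # (256, 76, 76)
```

The 512 and 256 channels after each concatenation are exactly the input widths declared for conv9 and conv16.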

Head

The YOLO v4 Head has the same structure as the part of YOLO v3 that follows the backbone.

    import torch
    import torch.nn as nn

    # Conv_Bn_Activation, YoloLayer and get_region_boxes are helpers from
    # Tianxiaomo/pytorch-YOLOv4. "R" comments mark route layers; trailing
    # numbers are YOLO v4 layer indices.


    class Yolov4Head(nn.Module):
        def __init__(self, output_ch, n_classes, inference=False):
            super().__init__()
            self.inference = inference

            self.conv1 = Conv_Bn_Activation(128, 256, 3, 1, 'leaky')
            self.conv2 = Conv_Bn_Activation(256, output_ch, 1, 1, 'linear', bn=False, bias=True)  # conv

            self.yolo1 = YoloLayer(
                anchor_mask=[0, 1, 2], num_classes=n_classes,
                anchors=[12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401],
                num_anchors=9, stride=8)

            # R -4
            self.conv3 = Conv_Bn_Activation(128, 256, 3, 2, 'leaky')

            # R -1 -16
            self.conv4 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv5 = Conv_Bn_Activation(256, 512, 3, 1, 'leaky')
            self.conv6 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv7 = Conv_Bn_Activation(256, 512, 3, 1, 'leaky')
            self.conv8 = Conv_Bn_Activation(512, 256, 1, 1, 'leaky')
            self.conv9 = Conv_Bn_Activation(256, 512, 3, 1, 'leaky')
            self.conv10 = Conv_Bn_Activation(512, output_ch, 1, 1, 'linear', bn=False, bias=True)  # conv

            self.yolo2 = YoloLayer(
                anchor_mask=[3, 4, 5], num_classes=n_classes,
                anchors=[12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401],
                num_anchors=9, stride=16)

            # R -4
            self.conv11 = Conv_Bn_Activation(256, 512, 3, 2, 'leaky')

            # R -1 -37
            self.conv12 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.conv13 = Conv_Bn_Activation(512, 1024, 3, 1, 'leaky')
            self.conv14 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.conv15 = Conv_Bn_Activation(512, 1024, 3, 1, 'leaky')
            self.conv16 = Conv_Bn_Activation(1024, 512, 1, 1, 'leaky')
            self.conv17 = Conv_Bn_Activation(512, 1024, 3, 1, 'leaky')
            self.conv18 = Conv_Bn_Activation(1024, output_ch, 1, 1, 'linear', bn=False, bias=True)  # conv

            self.yolo3 = YoloLayer(
                anchor_mask=[6, 7, 8], num_classes=n_classes,
                anchors=[12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401],
                num_anchors=9, stride=32)

        def forward(self, input1, input2, input3):
            # input1, input2, input3 = layers 136, 126, 116
            x1 = self.conv1(input1)  # 137
            x2 = self.conv2(x1)  # 138 76*76*255

            x3 = self.conv3(input1)  # 141 (stride-2 downsample)
            # R 141 126
            x3 = torch.cat([x3, input2], dim=1)  # 142
            x4 = self.conv4(x3)  # 143
            x5 = self.conv5(x4)  # 144
            x6 = self.conv6(x5)  # 145
            x7 = self.conv7(x6)  # 146
            x8 = self.conv8(x7)  # 147
            x9 = self.conv9(x8)  # 148
            x10 = self.conv10(x9)  # 149 38*38*255

            # R 147
            x11 = self.conv11(x8)  # 152 (stride-2 downsample)
            # R 152 116
            x11 = torch.cat([x11, input3], dim=1)  # 153

            x12 = self.conv12(x11)  # 154
            x13 = self.conv13(x12)  # 155
            x14 = self.conv14(x13)  # 156
            x15 = self.conv15(x14)  # 157
            x16 = self.conv16(x15)  # 158
            x17 = self.conv17(x16)  # 159
            x18 = self.conv18(x17)  # 160 19*19*255

            if self.inference:
                # Decode raw feature maps into boxes at each scale
                y1 = self.yolo1(x2)
                y2 = self.yolo2(x10)
                y3 = self.yolo3(x18)
                return get_region_boxes([y1, y2, y3])
            else:
                # Training: return the raw feature maps for the loss
                return [x2, x10, x18]
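The output sizes noted in the forward comments follow directly from the stride of each scale and the number of values predicted per anchor. The sketch below reproduces them, assuming a 608x608 input, the 80 COCO classes, and 3 anchors per scale (matching the three-entry anchor_mask lists above). head_output_shape is a hypothetical helper.

```python
def head_output_shape(input_size, stride, num_classes, anchors_per_scale=3):
    """Return (grid_h, grid_w, channels) of one detection output.

    Each anchor predicts 4 box values, 1 objectness score and
    `num_classes` class scores.
    """
    grid = input_size // stride
    channels = anchors_per_scale * (5 + num_classes)
    return (grid, grid, channels)


shapes = {s: head_output_shape(608, s, num_classes=80) for s in (8, 16, 32)}
for stride, shape in shapes.items():
    print(stride, shape)
# 8 (76, 76, 255)
# 16 (38, 38, 255)
# 32 (19, 19, 255)
```

The 255 channels correspond to the output_ch argument that the three final 'linear' convolutions are built with.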

Loss

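The loss function shown here has the same form as the YOLO v3 loss: BCE for the center coordinates x and y, and MSE for the width and height w and h. Both the bbox confidence and the class predictions use binary cross-entropy. Note that the YOLO v4 paper and the official yolov4.cfg use a CIoU loss for box regression, so the snippet below follows the YOLO v3-style formulation rather than the paper's.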

    import torch.nn.functional as F

    # Excerpt from the per-scale loop of the loss; the loss_* accumulators
    # are assumed to be initialized to 0 beforehand.
    loss_xy += F.binary_cross_entropy(input=output[..., :2], target=target[..., :2], weight=tgt_scale * tgt_scale, reduction='sum')
    loss_wh += F.mse_loss(input=output[..., 2:4], target=target[..., 2:4], reduction='sum') / 2
    loss_obj += F.binary_cross_entropy(input=output[..., 4], target=target[..., 4], reduction='sum')
    loss_cls += F.binary_cross_entropy(input=output[..., 5:], target=target[..., 5:], reduction='sum')
    loss_l2 += F.mse_loss(input=output, target=target, reduction='sum')  # L2 term over the whole output; not included in the total below

    # Total
    loss = loss_xy + loss_wh + loss_obj + loss_cls
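To make the four terms concrete, here is a dependency-free sketch that evaluates them for a single toy prediction laid out as [x, y, w, h, obj, cls0, cls1]. The numbers are invented for illustration, bce_sum and mse_sum are hypothetical helpers that mimic reduction='sum', and the per-element weight used for loss_xy above is left at 1 here.

```python
import math


def bce_sum(pred, target, weight=None):
    """Summed binary cross-entropy; predictions must lie strictly in (0, 1)."""
    if weight is None:
        weight = [1.0] * len(pred)
    return sum(
        -w * (t * math.log(p) + (1 - t) * math.log(1 - p))
        for p, t, w in zip(pred, target, weight)
    )


def mse_sum(pred, target):
    """Summed squared error."""
    return sum((p - t) ** 2 for p, t in zip(pred, target))


# Toy values, not real network output: [x, y, w, h, obj, cls0, cls1]
output = [0.6, 0.4, 0.2, -0.1, 0.9, 0.8, 0.1]
target = [0.5, 0.5, 0.3, 0.0, 1.0, 1.0, 0.0]

loss_xy = bce_sum(output[:2], target[:2])
loss_wh = mse_sum(output[2:4], target[2:4]) / 2
loss_obj = bce_sum(output[4:5], target[4:5])
loss_cls = bce_sum(output[5:], target[5:])
loss = loss_xy + loss_wh + loss_obj + loss_cls

print(round(loss_wh, 4))   # 0.01
print(round(loss_obj, 4))  # 0.1054
print(round(loss, 4))
```

Because x, y, objectness and class scores pass through a sigmoid and lie in (0, 1), BCE is well defined for them, while w and h are unbounded regression targets and use a squared error instead.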

References

- YOLOv4: Optimal Speed and Accuracy of Object Detection

- AlexeyAB/darknet

- Tianxiaomo/pytorch-YOLOv4