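I. Using Deployments

Deployment states: Available (the Deployment is up and available), Progressing (a rollout is in progress), Complete (the rollout has finished), Failed (the rollout has failed).

Common causes of a failed rollout: insufficient quota, readinessProbe failures, image pull errors, LimitRange violations, and application runtime errors.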
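Several of these causes (insufficient quota, image pull errors, failing readiness probes) appear as a rollout that stops making progress. Here is a sketch that assumes the nginx-deployment used in the steps below. The `progressDeadlineSeconds` field sets how long the Deployment controller waits for progress before it reports the rollout as failed. At that point the `Progressing` condition becomes `False` with reason `ProgressDeadlineExceeded`. The controller only reports the failure and does not roll back on its own.

```yaml
# Sketch: only the fields relevant to failure detection are shown
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  # Mark the rollout as failed if it makes no progress for 600 s
  # (600 is also the default value)
  progressDeadlineSeconds: 600
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
```

With this in place, `kubectl rollout status deployment nginx-deployment` exits with a non-zero code once the deadline is exceeded, so it can act as a gate in scripts.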

1. Create an nginx Deployment

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod --show-labels
NAME                                READY   STATUS    RESTARTS   AGE     LABELS
nginx-deployment-66b9f7ff85-2mqpj   1/1     Running   0          1m13s   app=nginx,pod-template-hash=66b9f7ff85
nginx-deployment-66b9f7ff85-d9rqv   1/1     Running   0          1m13s   app=nginx,pod-template-hash=66b9f7ff85
nginx-deployment-66b9f7ff85-db8fk   1/1     Running   0          1m13s   app=nginx,pod-template-hash=66b9f7ff85

2、更新版本

(1)通过set修改

[root@master01 ~]# kubectl get deployment -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-deployment 3/3 3 3 15m nginx nginx:latest app=nginx

[root@master01 ~]# kubectl set image deployment/nginx-deployment nginx=nginx:1.18

deployment.apps/nginx-deployment image updated

[root@master01 ~]# kubectl get deployment -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-deployment 3/3 1 3 15m nginx nginx:1.18 app=nginx

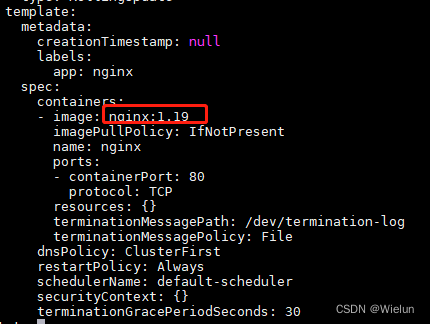

(2)通过edit修改

[root@master01 ~]# kubectl edit deployment nginx-deployment

[root@master01 ~]# kubectl get deployment -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-deployment 3/3 1 3 17m nginx nginx:1.19 app=nginx

[root@master01 ~]# kubectl rollout status deployment nginx-deployment #查看更新状态

deployment "nginx-deployment" successfully rolled out

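3. Deployment strategies

(1) Recreate

With this strategy, all existing Pods are terminated before any new Pods are created. The service is therefore down for as long as the application takes to shut down and start up again.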

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  strategy:
    rollingUpdate:   # explicit null: clears the rollingUpdate settings left over from the previous RollingUpdate strategy, which type: Recreate does not allow
    type: Recreate
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod -w   # watch the process from another terminal

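(2) Rolling update (RollingUpdate)

A rolling update deploys the new version gradually. It replaces old instances with new ones in batches, whose size is controlled by maxSurge and maxUnavailable, until every instance has been replaced.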

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml
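maxSurge and maxUnavailable accept either a percentage, as above, or an absolute number. Below is a sketch of a stricter setting. The values are an assumption and do not come from the original setup. With `maxUnavailable: 0` and `maxSurge: 1`, the controller adds one new Pod, waits for it to become available, and only then removes one old Pod. The number of available Pods therefore never drops below `replicas`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most 3 + 1 = 4 Pods exist during the rollout
      maxUnavailable: 0   # never fewer than 3 available Pods
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        ports:
        - containerPort: 80
```

The two fields cannot both be 0, because the rollout could then never make progress.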

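4. Rollback

[root@master01 ~]# kubectl set image deployment/nginx-deployment nginx=nginx:1.19 --record=true   # record the command as the change cause to make rollbacks easier (--record is deprecated in recent kubectl releases; section 5 sets the annotation directly instead)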

[root@master01 ~]# kubectl rollout history deployment nginx-deployment   # view the revision history
deployment.apps/nginx-deployment
REVISION  CHANGE-CAUSE
2         <none>
4         <none>
7         kubectl set image deployment/nginx-deployment nginx=nginx:1.19 --record=true

[root@master01 ~]# kubectl rollout history deployment nginx-deployment --revision=7   # view the details of one revision
deployment.apps/nginx-deployment with revision #7
Pod Template:
  Labels:       app=nginx
                pod-template-hash=6f777cb8b7
  Annotations:  kubernetes.io/change-cause: kubectl set image deployment/nginx-deployment nginx=nginx:1.19 --record=true
  Containers:
   nginx:
    Image:        nginx:1.19
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

[root@master01 ~]# kubectl rollout undo deployment nginx-deployment   # roll back to the previous revision

[root@master01 ~]# kubectl rollout undo deployment nginx-deployment --to-revision=7   # roll back to a specific revision

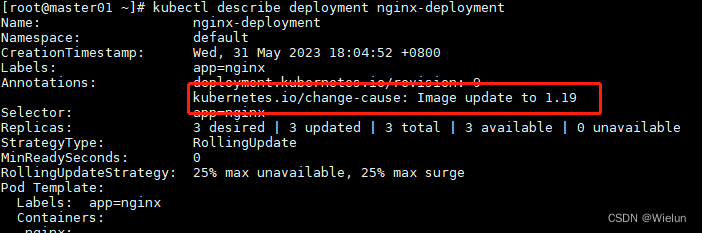

5. Add a change-cause annotation

(1) Using a command

[root@master01 ~]# kubectl annotate deployments.apps nginx-deployment kubernetes.io/change-cause="image update to 1.19"   # set the annotation

(2) Using the manifest file

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to 1.19"   # shown in the CHANGE-CAUSE column of rollout history
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20   # keep up to 20 old ReplicaSets for rollback (default: 10)
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl describe deployment nginx-deployment

6. Patch operations

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to latest"
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl patch deployments.apps nginx-deployment --type=json -p='[{"op":"replace","path":"/spec/template/spec/containers/0/image","value":"nginx:1.19"}]'   # JSON patch: replace the image of the first container

[root@master01 ~]# kubectl get deployment -owide
NAME               READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES       SELECTOR
nginx-deployment   10/10   10           10          3h    nginx        nginx:1.19   app=nginx

[root@master01 ~]# kubectl patch deployments.apps nginx-deployment -p '{"spec":{"replicas":3}}'   # strategic merge patch (the default type): set replicas to 3

[root@master01 ~]# kubectl get deployment -owide
NAME               READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES       SELECTOR
nginx-deployment   3/3     3            3           3h4m   nginx        nginx:1.19   app=nginx
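Instead of inline JSON strings, the same two changes can be kept in a YAML file and applied as a strategic merge patch, which is the default patch type. The sketch below assumes a file named `patch.yaml`, which is a hypothetical name. For the `containers` list, a strategic merge patch matches entries by `name`. Only the image of the `nginx` container changes, and its other fields are kept.

```yaml
# patch.yaml (hypothetical file name)
spec:
  replicas: 3
  template:
    spec:
      containers:
      - name: nginx          # merge key: selects the existing container
        image: nginx:1.19
```

Apply it with `kubectl patch deployment nginx-deployment --patch-file patch.yaml`. The `--patch-file` flag is available in recent kubectl versions.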

7. Update from a different file

[root@master01 ~]# cat nginx-deploy-v2.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to 1.21"
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.21
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl replace -f nginx-deploy-v2.yaml   # replace only works on a Deployment that already exists

[root@master01 ~]# kubectl delete -f nginx-deploy.yaml   # clean up before the next step

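8. Scaling the replica count

- Assume replicas is 10 and maxSurge and maxUnavailable are both 25%:

- maxUnavailable: 10 × 25% = 2.5, rounded down to 2, so at least 10 - 2 = 8 Pods stay available during a rolling update

- maxSurge: 10 × 25% = 2.5, rounded up to 3, so at most 10 + 3 = 13 Pods exist during a rolling update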

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-66b9f7ff85-5cs67   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-6jkq8   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-6pv4x   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-8s8mm   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-hhv97   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-htkwj   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-j8h22   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-k7fwn   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-n7fdn   1/1     Running   0          5s
nginx-deployment-66b9f7ff85-zzsqb   1/1     Running   0          5s

[root@master01 ~]# kubectl scale deployment nginx-deployment --replicas 3
deployment.apps/nginx-deployment scaled

[root@master01 ~]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-66b9f7ff85-hhv97   1/1     Running   0          92s
nginx-deployment-66b9f7ff85-j8h22   1/1     Running   0          92s
nginx-deployment-66b9f7ff85-k7fwn   1/1     Running   0          92s

[root@master01 ~]# kubectl scale deployment nginx-deployment --replicas 10

[root@master01 ~]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-66b9f7ff85-6jh8k   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-9dzfj   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-ght2r   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-hhv97   1/1     Running   0          115s
nginx-deployment-66b9f7ff85-j8h22   1/1     Running   0          115s
nginx-deployment-66b9f7ff85-k7fwn   1/1     Running   0          115s
nginx-deployment-66b9f7ff85-kp969   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-w7f8t   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-zhkjn   1/1     Running   0          3s
nginx-deployment-66b9f7ff85-zjmlg   1/1     Running   0          3s

二、探针使用

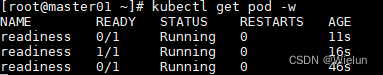

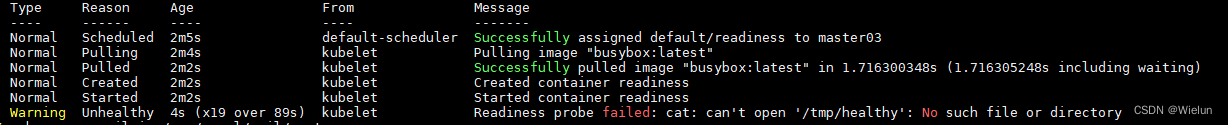

1、readinessProbe

(1)Exec探针

[root@master01 ~]# cat read-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness
  labels:
    test: readiness
spec:
  containers:
  - name: readiness
    image: busybox:latest
    command:
    - "/bin/sh"
    - "-c"
    - "touch /tmp/healthy;sleep 30; rm -rf /tmp/healthy;sleep 100000000000"
    readinessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 10
      periodSeconds: 5

[root@master01 ~]# kubectl apply -f read-pod.yaml

[root@master01 ~]# kubectl get pod -w

[root@master01 ~]# kubectl describe pod readiness
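Below is a sketch of the same probe with the fields that were left at their defaults written out. The defaults are timeoutSeconds: 1, successThreshold: 1 and failureThreshold: 3. The Pod name `readiness-defaults` is hypothetical, chosen so it does not collide with the Pod above. The container deletes /tmp/healthy about 30 s after it starts. After three consecutive failed checks, 5 s apart, the Pod is marked NotReady (READY 0/1). It is taken out of Service endpoints but is not restarted.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-defaults   # hypothetical name
  labels:
    test: readiness
spec:
  containers:
  - name: readiness
    image: busybox:latest
    command: ["/bin/sh", "-c", "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 100000000000"]
    readinessProbe:
      exec:
        command: ["cat", "/tmp/healthy"]
      initialDelaySeconds: 10   # first check 10 s after the container starts
      periodSeconds: 5          # then check every 5 s
      timeoutSeconds: 1         # default
      successThreshold: 1       # default: one success marks the Pod Ready
      failureThreshold: 3       # default: three consecutive failures mark it NotReady
```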

(2) httpGet and tcpSocket probes

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to latest"
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  minReadySeconds: 10            # a new Pod must stay Ready for 10 s before it counts as available
  progressDeadlineSeconds: 600   # report the rollout as failed after 600 s without progress
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        readinessProbe:
          #httpGet:
          #  path: /
          #  port: 80
          #periodSeconds: 1
          tcpSocket:
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 20

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

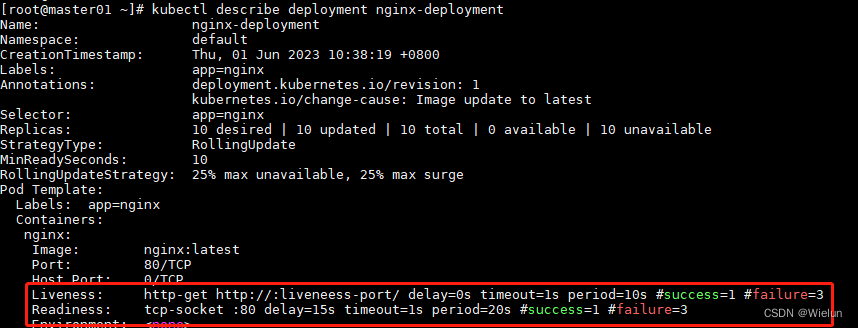

[root@master01 ~]# kubectl describe deployment nginx-deployment

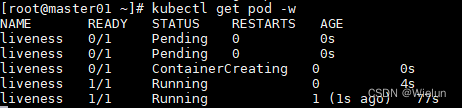
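The manifest above keeps an httpGet variant commented out. Here is a sketch of the readiness probe switched to it. Only the container section is shown. The path `/`, port 80 and the 1 s period come from the commented lines. `scheme: HTTP` is simply the default written out. A tcpSocket probe succeeds as soon as the port accepts a connection. An httpGet probe also requires an HTTP status code from 200 to 399.

```yaml
# Fragment of spec.template.spec; replaces the tcpSocket readinessProbe above
containers:
- name: nginx
  image: nginx:latest
  imagePullPolicy: IfNotPresent
  ports:
  - containerPort: 80
  readinessProbe:
    httpGet:
      path: /            # nginx serves its default page here
      port: 80
      scheme: HTTP       # default
    initialDelaySeconds: 15
    periodSeconds: 1     # value from the commented-out lines
```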

2. livenessProbe

(1) Exec

[root@master01 ~]# cat live-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness
  labels:
    test: liveness
spec:
  containers:
  - name: liveness
    image: busybox:latest
    command:
    - "/bin/sh"
    - "-c"
    - "touch /tmp/healthy;sleep 30; rm -rf /tmp/healthy;sleep 100000000000"
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 10
      periodSeconds: 5

[root@master01 ~]# kubectl apply -f live-pod.yaml

[root@master01 ~]# kubectl get pod -w   # unlike readinessProbe, a failing livenessProbe makes the kubelet restart the container, so RESTARTS keeps increasing

(2) httpGet probe

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to latest"
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - name: liveness-port
          containerPort: 80
        readinessProbe:
          #httpGet:
          #  path: /
          #  port: 80
          #periodSeconds: 1
          tcpSocket:
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 20
        livenessProbe:
          httpGet:
            path: /
            port: liveness-port   # refers to the named container port above

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl describe deployment nginx-deployment

3. startupProbe

(1) httpGet

[root@master01 ~]# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "Image update to latest"
  labels:
    app: nginx
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  revisionHistoryLimit: 20
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - name: liveness-port
          containerPort: 80
        readinessProbe:
          #httpGet:
          #  path: /
          #  port: 80
          #periodSeconds: 1
          tcpSocket:
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 20
        livenessProbe:
          httpGet:
            path: /
            port: liveness-port
        startupProbe:
          httpGet:
            path: /
            port: liveness-port
          failureThreshold: 30
          periodSeconds: 20

[root@master01 ~]# kubectl apply -f nginx-deploy.yaml

[root@master01 ~]# kubectl describe deployment nginx-deployment
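With failureThreshold: 30 and periodSeconds: 20, the container gets up to 30 × 20 = 600 s to start. The liveness and readiness probes do not run until the startup probe has succeeded. If the startup probe still fails after 30 attempts, the kubelet kills the container and it is restarted. The sketch below shows a tighter budget for an application that normally starts within a minute. The values are assumptions for illustration.

```yaml
# Fragment of the nginx container spec (illustrative values)
startupProbe:
  httpGet:
    path: /
    port: liveness-port
  failureThreshold: 12   # 12 attempts ...
  periodSeconds: 5       # ... 5 s apart: at most 12 x 5 = 60 s to start
```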

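(2) Rolling update

app.yaml creates /tmp/healthy 10 seconds after the container starts, so its Pods become ready. app_v2.yaml never creates the file, so the new Pods never pass the readiness probe. As a result, the rollout stalls instead of replacing all the old Pods.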

[root@master01 ~]# cat app.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app
spec:
  minReadySeconds: 10
  selector:
    matchLabels:
      run: app
  replicas: 10
  template:
    metadata:
      labels:
        run: app
    spec:
      containers:
      - name: app
        image: busybox:latest
        args:
        - /bin/sh
        - -c
        - sleep 10; touch /tmp/healthy;sleep 100000000000
        readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10
          periodSeconds: 5

[root@master01 ~]# cat app_v2.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app
spec:
  minReadySeconds: 10
  selector:
    matchLabels:
      run: app
  replicas: 10
  template:
    metadata:
      labels:
        run: app
    spec:
      containers:
      - name: app
        image: busybox:latest
        args:
        - /bin/sh
        - -c
        - sleep 100000000000   # /tmp/healthy is never created, so the readiness probe always fails
        readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10
          periodSeconds: 5

[root@master01 ~]# kubectl apply -f app.yaml

[root@master01 ~]# kubectl apply -f app_v2.yaml

[root@master01 ~]# kubectl get pod
NAME                   READY   STATUS        RESTARTS   AGE
app-6fb66c48fc-58dpt   1/1     Running       0          2m18s
app-6fb66c48fc-5sx5w   1/1     Running       0          2m18s
app-6fb66c48fc-75dtw   1/1     Running       0          2m18s
app-6fb66c48fc-79nhq   1/1     Running       0          2m18s
app-6fb66c48fc-8v9hs   1/1     Terminating   0          2m18s
app-6fb66c48fc-cgxj6   1/1     Running       0          2m18s
app-6fb66c48fc-cztkt   1/1     Running       0          2m18s
app-6fb66c48fc-f4npw   1/1     Terminating   0          2m18s
app-6fb66c48fc-g5vsv   1/1     Running       0          2m18s
app-6fb66c48fc-wjq5b   1/1     Running       0          2m18s
app-d7855499b-8lv5l    0/1     Running       0          23s
app-d7855499b-chgg9    0/1     Running       0          23s
app-d7855499b-ph7jw    0/1     Running       0          22s
app-d7855499b-q8xd7    0/1     Running       0          22s
app-d7855499b-qmqc6    0/1     Running       0          23s

[root@master01 ~]# kubectl rollout status deployment app

Waiting for deployment "app" rollout to finish: 5 out of 10 new replicas have been updated...
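The Pod counts in this output follow from the RollingUpdate defaults applied to replicas: 10. app.yaml sets no strategy, so maxSurge and maxUnavailable are both 25%. None of the new Pods ever becomes ready, so only the old Pods count as available:

$$
\begin{aligned}
\text{maxSurge} &= \lceil 10 \times 25\% \rceil = 3 &&\Rightarrow\ \text{at most } 10 + 3 = 13 \text{ Pods in total} \\
\text{maxUnavailable} &= \lfloor 10 \times 25\% \rfloor = 2 &&\Rightarrow\ \text{at least } 10 - 2 = 8 \text{ available (old) Pods} \\
\text{new Pods} &= 13 - 8 = 5
\end{aligned}
$$

Once 8 old Pods and 5 new Pods exist, both limits are reached. The controller can neither remove another old Pod nor create another new one, which is why rollout status stops at 5 out of 10. The two Terminating Pods are the old Pods being scaled down from 10 to 8.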

[root@master01 ~]# kubectl delete -f app_v2.yaml

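III. DaemonSet

1. Deploy cAdvisor with RollingUpdate

If a DaemonSet Pod is not scheduled onto every node because some nodes carry taints (for example, control-plane nodes with a NoSchedule taint), add matching tolerations to the Pod template.

To update, simply change the image with kubectl set image.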

[root@master01 ~]# cat cadvisor.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  labels:
    k8s-app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      containers:
      - name: cadvisor
        image: google/cadvisor:latest
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: containerd
          mountPath: /var/lib/containerd
          readOnly: true
        - name: disk
          mountPath: /dev/disk
          readOnly: true
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: sys
        hostPath:
          path: /sys
      - name: containerd
        hostPath:
          path: /var/lib/containerd
      - name: disk
        hostPath:
          path: /dev/disk

[root@master01 ~]# kubectl apply -f cadvisor.yaml

[root@master01 ~]# kubectl get pod -owide
NAME             READY   STATUS    RESTARTS   AGE   IP            NODE       NOMINATED NODE   READINESS GATES
cadvisor-5mfxm   1/1     Running   0          44s   10.10.10.23   master03   <none>           <none>
cadvisor-d6mj9   1/1     Running   0          44s   10.10.10.21   master01   <none>           <none>
cadvisor-hl4nx   1/1     Running   0          44s   10.10.10.24   node01     <none>           <none>
cadvisor-w6lm4   1/1     Running   0          44s   10.10.10.22   master02   <none>           <none>
cadvisor-wcf4d   1/1     Running   0          44s   10.10.10.25   node02     <none>           <none>

[root@master01 ~]# kubectl rollout history daemonset cadvisor   # view the update history
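In this cluster the Pods were already scheduled on master01 to master03, so the control-plane nodes are evidently not tainted. On a cluster where they are tainted, the tolerations mentioned at the start of this subsection go under `spec.template.spec` of the DaemonSet. The sketch below assumes the standard kubeadm taint `node-role.kubernetes.io/control-plane:NoSchedule`. Older kubeadm versions use the key `node-role.kubernetes.io/master`.

```yaml
# Fragment of spec.template.spec in cadvisor.yaml
tolerations:
- key: node-role.kubernetes.io/control-plane
  operator: Exists
  effect: NoSchedule
- key: node-role.kubernetes.io/master   # taint key used by older kubeadm versions
  operator: Exists
  effect: NoSchedule
```

The reverse case works differently. To run the DaemonSet on only some nodes, restrict it with a nodeSelector or node affinity; tolerations are not used for that.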

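2. Deploy cAdvisor with OnDelete

Updates are manual. After the Pod template changes, a Pod is only recreated with the new version when you delete it yourself.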

[root@master01 ~]# cat cadvisor.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  labels:
    k8s-app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  updateStrategy:
    type: OnDelete
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      containers:
      - name: cadvisor
        image: google/cadvisor:latest
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: containerd
          mountPath: /var/lib/containerd
          readOnly: true
        - name: disk
          mountPath: /dev/disk
          readOnly: true
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: sys
        hostPath:
          path: /sys
      - name: containerd
        hostPath:
          path: /var/lib/containerd
      - name: disk
        hostPath:
          path: /dev/disk

[root@master01 ~]# kubectl apply -f cadvisor.yaml

[root@master01 ~]# kubectl delete -f cadvisor.yaml

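IV. Job and CronJob

1. A first Job

restartPolicy: Always, Never, OnFailure (a Job's Pod template only accepts Never or OnFailure)

Never: if the container fails, the Job controller creates a new Pod for every retry, which can exhaust cluster resources. Three fields limit this:

- backoffLimit: the maximum number of retries before the Job is marked failed (default 6).
- activeDeadlineSeconds: once the Job has run for this many seconds, its Pods are terminated and the Job is marked failed.
- ttlSecondsAfterFinished: how many seconds after the Job finishes, successfully or not, it is deleted automatically.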

[root@master01 ~]# cat myjob.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: myjob
spec:
  backoffLimit: 3
  activeDeadlineSeconds: 10
  ttlSecondsAfterFinished: 30
  template:
    spec:
      containers:
      - name: myjob
        image: busybox
        command: ["echo","hello world!"]
      restartPolicy: Never


[root@master01 ~]# kubectl apply -f myjob.yaml

[root@master01 ~]# kubectl get pod
NAME          READY   STATUS      RESTARTS   AGE
myjob-cqmxf   0/1     Completed   0          19s

[root@master01 ~]# kubectl get jobs myjob
NAME    COMPLETIONS   DURATION   AGE
myjob   1/1           5s         2m1s

[root@master01 ~]# kubectl logs myjob-cqmxf
hello world!

[root@master01 ~]# kubectl delete -f myjob.yaml
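For comparison, with `restartPolicy: OnFailure` the kubelet restarts the failed container inside the same Pod. The Job controller does not create a new Pod, so failures show up in the RESTARTS column rather than as extra Pods. Below is a sketch in which the Job name and the always-failing command are hypothetical, chosen only to trigger retries.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: myjob-onfailure            # hypothetical name
spec:
  backoffLimit: 3                  # stop after 3 retries; the Job is then marked failed
  ttlSecondsAfterFinished: 30
  template:
    spec:
      containers:
      - name: myjob
        image: busybox
        command: ["sh", "-c", "exit 1"]   # always fails, to demonstrate the retries
      restartPolicy: OnFailure
```

`kubectl get pod` should show a single Pod whose RESTARTS count grows until the backoff limit is reached.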

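(2) Run 2 Pods in parallel

- completions: the total number of Pods that must finish successfully.
- parallelism: how many Pods run at the same time.
- completionMode: Indexed: gives each Pod a completion index from 0 to completions - 1.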

[root@master01 ~]# cat myjob.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: myjob
spec:
  parallelism: 2
  completions: 10
  completionMode: Indexed
  backoffLimit: 3
  activeDeadlineSeconds: 120
  ttlSecondsAfterFinished: 1200
  template:
    spec:
      containers:
      - name: myjob
        image: busybox
        command: ["echo","hello world!"]
      restartPolicy: Never

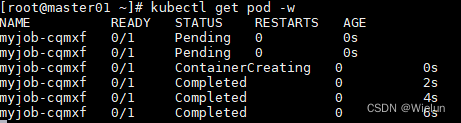

[root@master01 ~]# kubectl apply -f myjob.yaml
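With `completionMode: Indexed`, Kubernetes exposes each Pod's index in the `JOB_COMPLETION_INDEX` environment variable. The same value is also in the `batch.kubernetes.io/job-completion-index` annotation. Each of the 10 Pods can therefore pick a different slice of work. Below is a sketch that only prints the index; the Job name is hypothetical.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: myjob-indexed        # hypothetical name
spec:
  parallelism: 2
  completions: 10
  completionMode: Indexed
  template:
    spec:
      containers:
      - name: myjob
        image: busybox
        # JOB_COMPLETION_INDEX is set by Kubernetes for Indexed Jobs (0..9 here);
        # the shell expands it inside sh -c
        command: ["sh", "-c", "echo processing item $JOB_COMPLETION_INDEX"]
      restartPolicy: Never
```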

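2. A first CronJob

Set the time zone with the timeZone field, for example timeZone: Etc/UTC. Without it, the schedule is interpreted in the local time zone of kube-controller-manager.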

[root@master01 ~]# cat cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            command: ["echo","hello world!"]
          restartPolicy: OnFailure

[root@master01 ~]# kubectl apply -f cronjob.yaml
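Below is a sketch of a CronJob with the timeZone field set. The field is stable since Kubernetes 1.27. The name, the daily 08:00 schedule and the Asia/Shanghai zone are assumptions for illustration. Any IANA time zone name works, such as Etc/UTC.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-tz               # hypothetical name
spec:
  schedule: "0 8 * * *"        # minute hour day-of-month month day-of-week: every day at 08:00
  timeZone: "Asia/Shanghai"    # IANA time zone name; "Etc/UTC" for UTC
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            command: ["echo", "hello world!"]
          restartPolicy: OnFailure
```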

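3. CronJob parameters

successfulJobsHistoryLimit: 3 # keep the 3 most recent successful Jobs

failedJobsHistoryLimit: 3 # keep the 3 most recent failed Jobs

startingDeadlineSeconds: 200 # if a run cannot start within 200 seconds of its scheduled time, it is skipped and counted as missed

concurrencyPolicy: Allow # Allow lets runs overlap; Forbid skips the new run while the previous one is still running; Replace cancels the running Job and starts the new one in its place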

[root@master01 ~]# cat cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello
spec:
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 3
  startingDeadlineSeconds: 200
  concurrencyPolicy: Allow
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            command: ["echo","hello world!"]
          restartPolicy: OnFailure

[root@master01 ~]# kubectl apply -f cronjob.yaml
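To see concurrencyPolicy take effect, a run has to last longer than the schedule interval. Below is a sketch in which the name and the 90-second sleep are assumptions for illustration. With `Forbid`, a scheduled run is skipped while the previous Job is still active. Setting `suspend: true` pauses all future runs without deleting the CronJob.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-forbid               # hypothetical name
spec:
  schedule: "*/1 * * * *"
  concurrencyPolicy: Forbid        # skip a run while the previous Job is still active
  startingDeadlineSeconds: 200     # a run that cannot start within 200 s counts as missed
  suspend: false                   # set to true to pause future runs
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 3
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            # runs for 90 s, longer than the 1-minute interval
            command: ["sh", "-c", "echo start; sleep 90; echo done"]
          restartPolicy: OnFailure
```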