I. Background

-

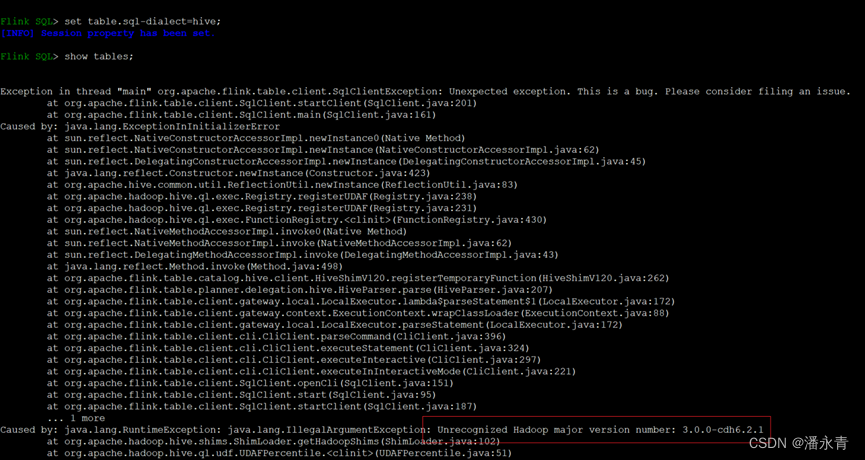

异常描述

CDH-6.3.2环境下使用Flink-1.14.4的FlinkSQL的hive方言时出现如下异常

java.lang.RuntimeException: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.0.0-cdh6.2.1

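- Problem explanation

The open-source community releases of Hive 2.x do not support Hadoop 3.x in this situation. However, Hive 2.1.1-cdh6.3.2 as shipped in CDH differs from the community release and does support Hadoop 3.x.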
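The exception message points to a check on the major part of the Hadoop version string. The shell sketch below imitates that kind of gate to show why a 3.x version is rejected when only major version 2 is recognized. It is a simplified illustration, not Hive's actual code, and the function name `check_hadoop_major` is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified imitation of a major-version gate (NOT Hive's actual code).
# Assumption: only Hadoop major version 2 is accepted, as described above
# for community Hive 2.x.
check_hadoop_major() {
  local version="$1"
  local major="${version%%.*}"   # text before the first dot, e.g. "3"
  case "$major" in
    2) echo "ok: Hadoop $version accepted" ;;
    *) echo "Unrecognized Hadoop major version number: $version" >&2
       return 1 ;;
  esac
}

check_hadoop_major "2.7.5"
check_hadoop_major "3.0.0-cdh6.3.2" || echo "rejected, as in the exception above"
```

Rebuilding the connector against the CDH build of `hive-exec`, as done in the next section, swaps in a Hive build that accepts the CDH Hadoop version.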

II. Building Flink 1.14.4 against 3.0.0-cdh6.3.2

1. Environment and software preparation

- CentOS 7.9 (not required, but building on Linux is recommended to avoid pitfalls)

- maven-3.9.2

- flink-1.14.4

2. Building Flink 1.14.4

- maven-3.9.2

vim maven-3.9.2/conf/settings.xml

<settings xmlns="http://maven.apache.org/SETTINGS/1.2.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.2.0 https://maven.apache.org/xsd/settings-1.2.0.xsd">

<localRepository>/root/maven-3.9.2/repo</localRepository>

......

......

......

<mirrors>

<mirror>

<id>maven-default-http-blocker</id>

<mirrorOf>external:http:*</mirrorOf>

<name>Pseudo repository to mirror external repositories initially using HTTP.</name>

<url>http://0.0.0.0/</url>

<blocked>true</blocked>

</mirror>

<mirror>

<id>conjars-https</id>

<url>https://conjars.wensel.net/repo/</url>

<mirrorOf>conjars</mirrorOf>

</mirror>

<mirror>

<id>conjars</id>

<name>conjars</name>

<url>https://conjars.wensel.net/repo/</url>

<mirrorOf>conjarse</mirrorOf>

</mirror>

</mirrors>

......

......

......

- flink-1.14.4: download and extract

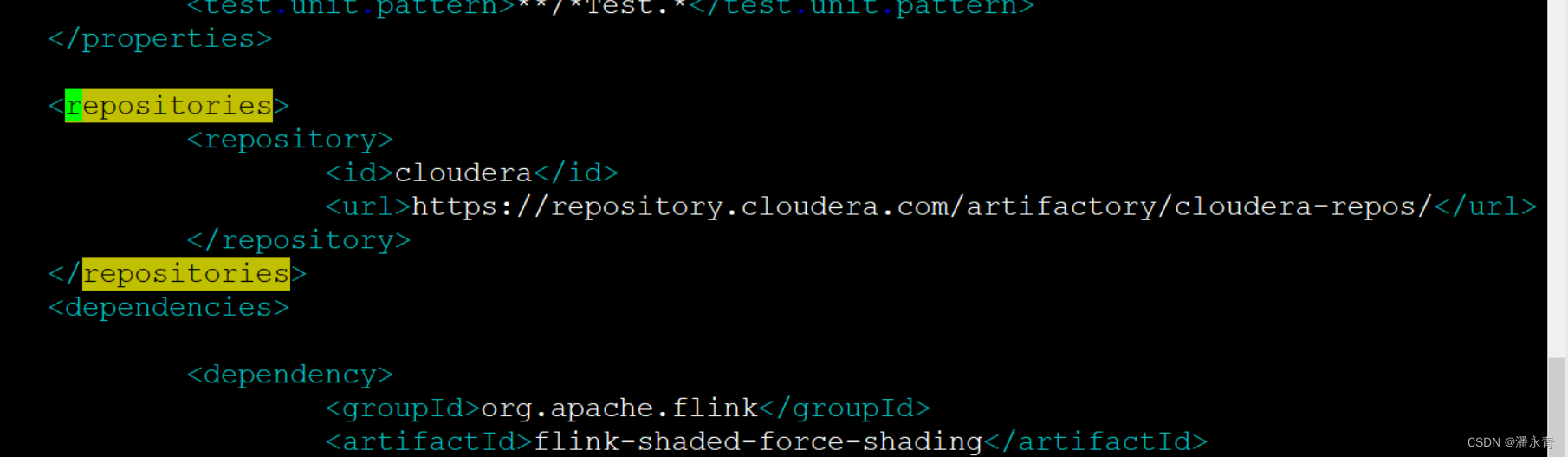

vim /root/flink-1.14.4/pom.xml

Configure the CDH repository address

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

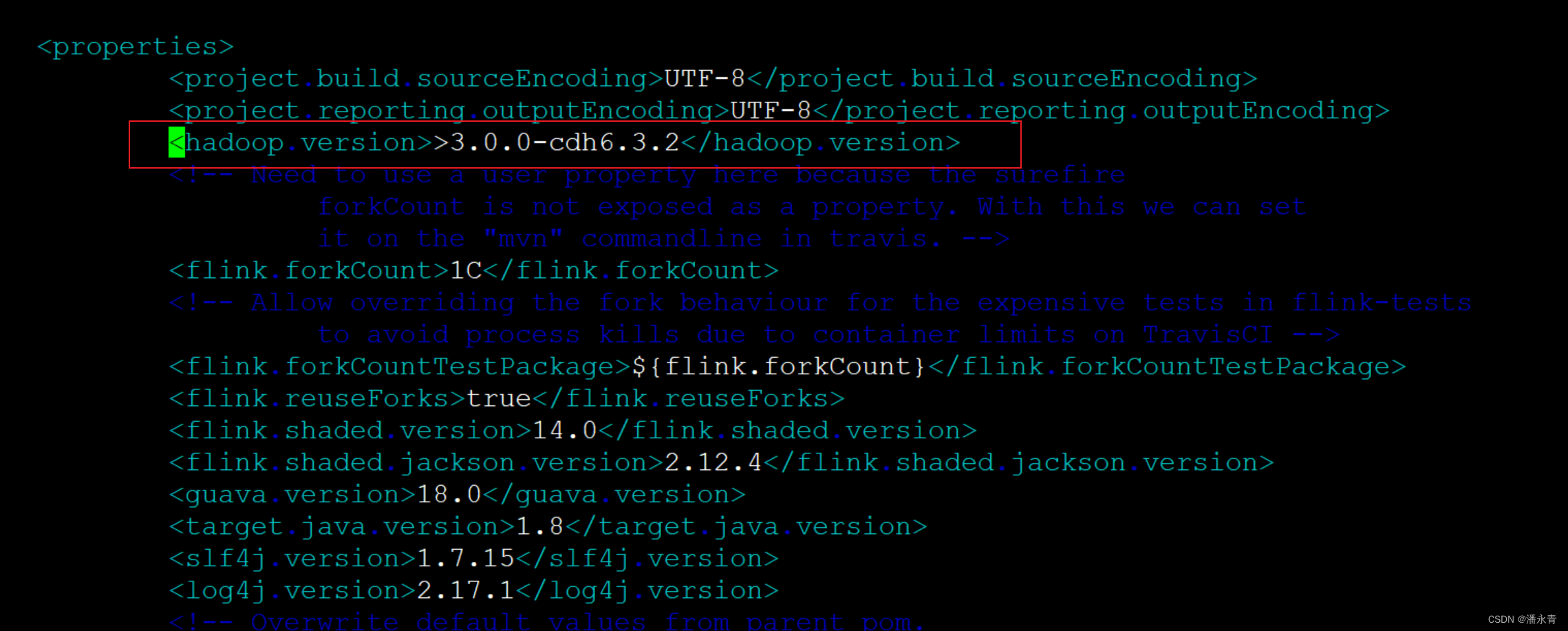

Change the Hadoop version to the one that matches CDH

<hadoop.version>3.0.0-cdh6.3.2</hadoop.version>

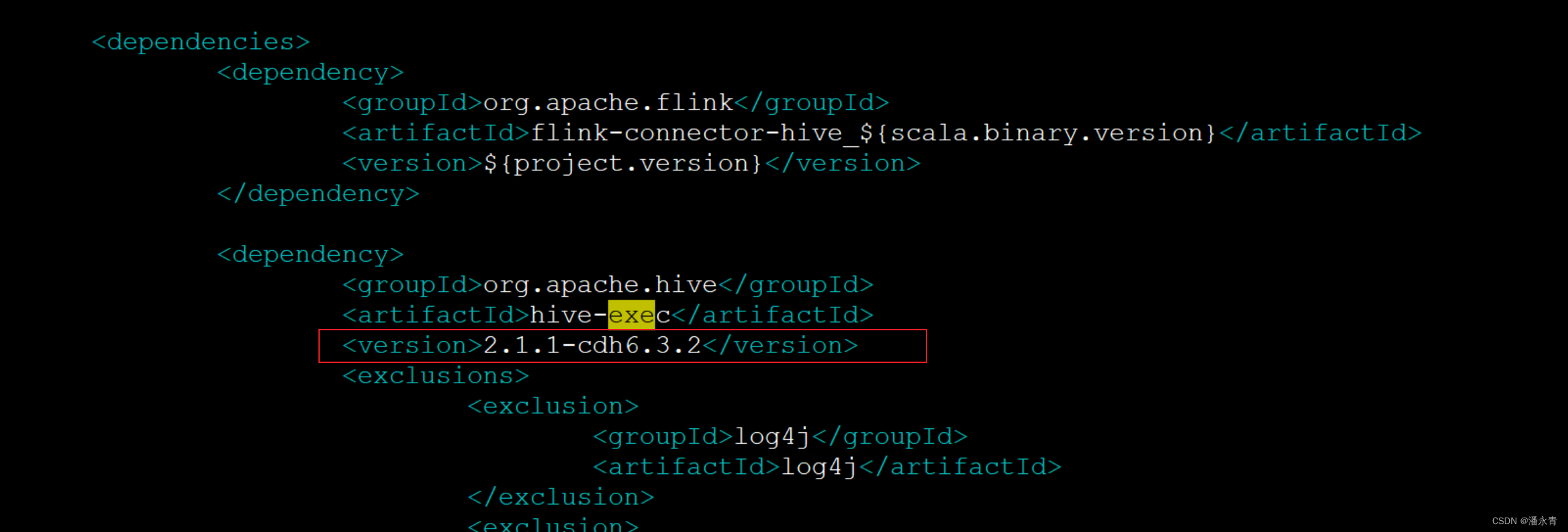

vim /root/flink-1.14.4/flink-connectors/flink-sql-connector-hive-2.2.0/pom.xml

In flink-sql-connector-hive-2.2.0, change the version of hive-exec to the Hive version that matches CDH: 2.1.1-cdh6.3.2
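Because the full build takes a long time, it can help to confirm that both POM edits are in place before starting. A minimal sketch, assuming the source tree is at `/root/flink-1.14.4` as in the commands above; the helper `show_in_pom` and the `FLINK_SRC` variable are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# List every line in a POM that contains a given string, with line numbers.
# Returns non-zero when the file is missing or nothing matches.
show_in_pom() {
  local pom="$1" needle="$2"
  [ -f "$pom" ] || { echo "not found: $pom" >&2; return 2; }
  grep -n -F -- "$needle" "$pom"
}

# Assumed source location; override FLINK_SRC if yours differs.
FLINK_SRC="${FLINK_SRC:-/root/flink-1.14.4}"
show_in_pom "$FLINK_SRC/pom.xml" "cdh6.3.2" \
  || echo "root pom: no CDH version found"
show_in_pom "$FLINK_SRC/flink-connectors/flink-sql-connector-hive-2.2.0/pom.xml" "cdh6.3.2" \
  || echo "hive connector pom: no CDH version found"
```

If either check reports nothing found, the corresponding edit was probably not saved.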

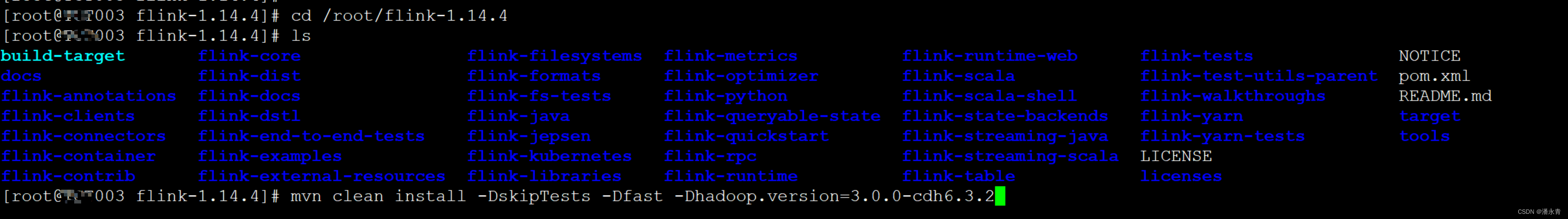

Start the build

mvn clean install -DskipTests -Dfast -Dhadoop.version=3.0.0-cdh6.3.2
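Once a full build has populated the local repository, rebuilding only the Hive connector may be enough when just its `pom.xml` changes again. The sketch below assumes that situation and uses Maven's standard `-pl` (select a module) and `-am` (also build the modules it depends on) options. The variable names are illustrative, and the command is only printed, not run.

```shell
#!/usr/bin/env bash
# Assemble a module-scoped variant of the build command shown above.
HADOOP_VERSION="3.0.0-cdh6.3.2"
MODULE="flink-connectors/flink-sql-connector-hive-2.2.0"
CMD="mvn clean install -DskipTests -Dfast -Dhadoop.version=$HADOOP_VERSION -pl $MODULE -am"

# Dry run: print the command so it can be reviewed first.
echo "$CMD"

# To run it, execute the printed command from the Flink source root,
# e.g. /root/flink-1.14.4 in this walkthrough.
```

This does not replace the full build, which is still needed to produce the flink-dist package used in the test section.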

Build output

[INFO] --- install:2.5.2:install (default-install) @ java-ci-tools ---

[INFO] Installing /root/flink-1.14.4/tools/ci/java-ci-tools/target/java-ci-tools-1.14.4.jar to /root/.m2/repository/org/apache/flink/java-ci-tools/1.14.4/java-ci-tools-1.14.4.jar

[INFO] Installing /root/flink-1.14.4/tools/ci/java-ci-tools/target/dependency-reduced-pom.xml to /root/.m2/repository/org/apache/flink/java-ci-tools/1.14.4/java-ci-tools-1.14.4.pom

[INFO] Installing /root/flink-1.14.4/tools/ci/java-ci-tools/target/java-ci-tools-1.14.4-tests.jar to /root/.m2/repository/org/apache/flink/java-ci-tools/1.14.4/java-ci-tools-1.14.4-tests.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for Flink : 1.14.4:

[INFO]

[INFO] Flink : ............................................ SUCCESS [ 59.471 s]

[INFO] Flink : Annotations ................................ SUCCESS [ 16.499 s]

[INFO] Flink : Test utils : ............................... SUCCESS [ 0.048 s]

[INFO] Flink : Test utils : Junit ......................... SUCCESS [ 0.756 s]

[INFO] Flink : Metrics : .................................. SUCCESS [ 0.051 s]

[INFO] Flink : Metrics : Core ............................. SUCCESS [ 32.274 s]

[INFO] Flink : Core ....................................... SUCCESS [01:24 min]

[INFO] Flink : Java ....................................... SUCCESS [ 19.666 s]

[INFO] Flink : Scala ...................................... SUCCESS [07:07 min]

[INFO] Flink : FileSystems : .............................. SUCCESS [ 0.035 s]

[INFO] Flink : FileSystems : Hadoop FS .................... SUCCESS [03:25 min]

[INFO] Flink : FileSystems : Mapr FS ...................... SUCCESS [ 0.701 s]

[INFO] Flink : FileSystems : Hadoop FS shaded ............. SUCCESS [ 56.192 s]

[INFO] Flink : FileSystems : S3 FS Base ................... SUCCESS [ 22.125 s]

[INFO] Flink : FileSystems : S3 FS Hadoop ................. SUCCESS [ 42.749 s]

[INFO] Flink : FileSystems : S3 FS Presto ................. SUCCESS [03:29 min]

[INFO] Flink : FileSystems : OSS FS ....................... SUCCESS [ 18.792 s]

[INFO] Flink : FileSystems : Azure FS Hadoop .............. SUCCESS [03:40 min]

[INFO] Flink : RPC : ...................................... SUCCESS [ 0.032 s]

[INFO] Flink : RPC : Core ................................. SUCCESS [ 0.213 s]

[INFO] Flink : RPC : Akka ................................. SUCCESS [02:45 min]

[INFO] Flink : RPC : Akka-Loader .......................... SUCCESS [ 2.896 s]

[INFO] Flink : Queryable state : .......................... SUCCESS [ 0.028 s]

[INFO] Flink : Queryable state : Client Java .............. SUCCESS [ 0.287 s]

[INFO] Flink : Runtime .................................... SUCCESS [02:21 min]

[INFO] Flink : Optimizer .................................. SUCCESS [ 1.279 s]

[INFO] Flink : Connectors : ............................... SUCCESS [ 0.047 s]

[INFO] Flink : Connectors : File Sink Common .............. SUCCESS [ 0.198 s]

[INFO] Flink : Streaming Java ............................. SUCCESS [ 7.293 s]

[INFO] Flink : Clients .................................... SUCCESS [ 17.004 s]

[INFO] Flink : DSTL ....................................... SUCCESS [ 0.029 s]

[INFO] Flink : DSTL : DFS ................................. SUCCESS [ 0.352 s]

[INFO] Flink : State backends : ........................... SUCCESS [ 0.028 s]

[INFO] Flink : State backends : RocksDB ................... SUCCESS [04:48 min]

[INFO] Flink : State backends : Changelog ................. SUCCESS [ 0.409 s]

[INFO] Flink : Test utils : Utils ......................... SUCCESS [ 36.604 s]

[INFO] Flink : Runtime web ................................ SUCCESS [03:45 min]

[INFO] Flink : Test utils : Connectors .................... SUCCESS [ 0.129 s]

[INFO] Flink : Connectors : Base .......................... SUCCESS [ 0.422 s]

[INFO] Flink : Connectors : Files ......................... SUCCESS [ 0.668 s]

[INFO] Flink : Examples : ................................. SUCCESS [ 0.060 s]

[INFO] Flink : Examples : Batch ........................... SUCCESS [ 17.426 s]

[INFO] Flink : Connectors : Hadoop compatibility .......... SUCCESS [ 4.132 s]

[INFO] Flink : Tests ...................................... SUCCESS [ 41.403 s]

[INFO] Flink : Streaming Scala ............................ SUCCESS [ 22.269 s]

[INFO] Flink : Connectors : HCatalog ...................... SUCCESS [01:46 min]

[INFO] Flink : Table : .................................... SUCCESS [ 0.062 s]

[INFO] Flink : Table : Common ............................. SUCCESS [01:41 min]

[INFO] Flink : Table : API Java ........................... SUCCESS [ 1.249 s]

[INFO] Flink : Table : API Java bridge .................... SUCCESS [ 0.533 s]

[INFO] Flink : Formats : .................................. SUCCESS [ 0.029 s]

[INFO] Flink : Format : Common ............................ SUCCESS [ 0.070 s]

[INFO] Flink : Table : API Scala .......................... SUCCESS [ 7.713 s]

[INFO] Flink : Table : API Scala bridge ................... SUCCESS [ 7.706 s]

[INFO] Flink : Table : SQL Parser ......................... SUCCESS [01:50 min]

[INFO] Flink : Table : SQL Parser Hive .................... SUCCESS [ 12.423 s]

[INFO] Flink : Table : Code Splitter ...................... SUCCESS [01:24 min]

[INFO] Flink : Libraries : ................................ SUCCESS [ 0.034 s]

[INFO] Flink : Libraries : CEP ............................ SUCCESS [ 1.634 s]

[INFO] Flink : Table : Runtime ............................ SUCCESS [ 3.295 s]

[INFO] Flink : Table : Planner ............................ SUCCESS [02:05 min]

[INFO] Flink : Formats : Json ............................. SUCCESS [ 0.635 s]

[INFO] Flink : Connectors : Elasticsearch base ............ SUCCESS [01:47 min]

[INFO] Flink : Connectors : Elasticsearch 5 ............... SUCCESS [ 34.571 s]

[INFO] Flink : Connectors : Elasticsearch 6 ............... SUCCESS [01:05 min]

[INFO] Flink : Connectors : Elasticsearch 7 ............... SUCCESS [01:32 min]

[INFO] Flink : Connectors : HBase base .................... SUCCESS [01:05 min]

[INFO] Flink : Connectors : HBase 1.4 ..................... SUCCESS [02:10 min]

[INFO] Flink : Connectors : HBase 2.2 ..................... SUCCESS [01:40 min]

[INFO] Flink : Formats : Hadoop bulk ...................... SUCCESS [ 0.972 s]

[INFO] Flink : Formats : Orc .............................. SUCCESS [ 9.371 s]

[INFO] Flink : Formats : Orc nohive ....................... SUCCESS [ 9.061 s]

[INFO] Flink : Formats : Avro ............................. SUCCESS [ 22.277 s]

[INFO] Flink : Formats : Parquet .......................... SUCCESS [ 46.070 s]

[INFO] Flink : Formats : Csv .............................. SUCCESS [ 0.426 s]

[INFO] Flink : Connectors : Hive .......................... SUCCESS [04:35 min]

[INFO] Flink : Connectors : JDBC .......................... SUCCESS [05:52 min]

[INFO] Flink : Connectors : RabbitMQ ...................... SUCCESS [ 6.576 s]

[INFO] Flink : Connectors : Twitter ....................... SUCCESS [ 25.245 s]

[INFO] Flink : Connectors : Nifi .......................... SUCCESS [ 28.031 s]

[INFO] Flink : Connectors : Cassandra ..................... SUCCESS [ 26.062 s]

[INFO] Flink : Metrics : JMX .............................. SUCCESS [ 0.188 s]

[INFO] Flink : Formats : Avro confluent registry .......... SUCCESS [ 55.983 s]

[INFO] Flink : Test utils : Testing Framework ............. SUCCESS [ 5.095 s]

[INFO] Flink : Connectors : Kafka ......................... SUCCESS [01:16 min]

[INFO] Flink : Connectors : Google PubSub ................. SUCCESS [ 46.304 s]

[INFO] Flink : Connectors : Kinesis ....................... SUCCESS [04:09 min]

[INFO] Flink : Connectors : Pulsar ........................ SUCCESS [08:09 min]

[INFO] Flink : Connectors : SQL : Elasticsearch 6 ......... SUCCESS [ 6.595 s]

[INFO] Flink : Connectors : SQL : Elasticsearch 7 ......... SUCCESS [ 8.016 s]

[INFO] Flink : Connectors : SQL : HBase 1.4 ............... SUCCESS [ 7.123 s]

[INFO] Flink : Connectors : SQL : HBase 2.2 ............... SUCCESS [ 14.437 s]

[INFO] Flink : Connectors : SQL : Hive 1.2.2 .............. SUCCESS [04:18 min]

[INFO] Flink : Connectors : SQL : Hive 2.2.0 .............. SUCCESS [01:54 min]

[INFO] Flink : Connectors : SQL : Hive 2.3.6 .............. SUCCESS [05:09 min]

[INFO] Flink : Connectors : SQL : Hive 3.1.2 .............. SUCCESS [08:55 min]

[INFO] Flink : Connectors : SQL : Kafka ................... SUCCESS [ 1.015 s]

[INFO] Flink : Connectors : SQL : Kinesis ................. SUCCESS [ 9.111 s]

[INFO] Flink : Formats : Sequence file .................... SUCCESS [ 0.436 s]

[INFO] Flink : Formats : Compress ......................... SUCCESS [ 0.445 s]

[INFO] Flink : Formats : Avro AWS Glue Schema Registry .... SUCCESS [02:30 min]

[INFO] Flink : Formats : SQL Orc .......................... SUCCESS [ 0.267 s]

[INFO] Flink : Formats : SQL Parquet ...................... SUCCESS [ 0.630 s]

[INFO] Flink : Formats : SQL Avro ......................... SUCCESS [ 1.222 s]

[INFO] Flink : Formats : SQL Avro Confluent Registry ...... SUCCESS [ 5.252 s]

[INFO] Flink : Examples : Streaming ....................... SUCCESS [ 15.564 s]

[INFO] Flink : Examples : Table ........................... SUCCESS [ 6.001 s]

[INFO] Flink : Examples : Build Helper : .................. SUCCESS [ 0.062 s]

[INFO] Flink : Examples : Build Helper : Streaming Twitter SUCCESS [ 0.495 s]

[INFO] Flink : Examples : Build Helper : Streaming State machine SUCCESS [ 0.482 s]

[INFO] Flink : Examples : Build Helper : Streaming Google PubSub SUCCESS [ 14.322 s]

[INFO] Flink : Container .................................. SUCCESS [ 0.174 s]

[INFO] Flink : Queryable state : Runtime .................. SUCCESS [ 0.441 s]

[INFO] Flink : Kubernetes ................................. SUCCESS [01:24 min]

[INFO] Flink : Yarn ....................................... SUCCESS [ 1.518 s]

[INFO] Flink : Libraries : Gelly .......................... SUCCESS [ 1.530 s]

[INFO] Flink : Libraries : Gelly scala .................... SUCCESS [ 10.840 s]

[INFO] Flink : Libraries : Gelly Examples ................. SUCCESS [ 13.025 s]

[INFO] Flink : External resources : ....................... SUCCESS [ 0.023 s]

[INFO] Flink : External resources : GPU ................... SUCCESS [ 0.144 s]

[INFO] Flink : Metrics : Dropwizard ....................... SUCCESS [ 0.162 s]

[INFO] Flink : Metrics : Graphite ......................... SUCCESS [ 2.120 s]

[INFO] Flink : Metrics : InfluxDB ......................... SUCCESS [ 39.168 s]

[INFO] Flink : Metrics : Prometheus ....................... SUCCESS [ 12.082 s]

[INFO] Flink : Metrics : StatsD ........................... SUCCESS [ 0.118 s]

[INFO] Flink : Metrics : Datadog .......................... SUCCESS [ 0.252 s]

[INFO] Flink : Metrics : Slf4j ............................ SUCCESS [ 0.106 s]

[INFO] Flink : Libraries : CEP Scala ...................... SUCCESS [ 7.770 s]

[INFO] Flink : Table : Uber ............................... SUCCESS [ 7.552 s]

[INFO] Flink : Python ..................................... SUCCESS [10:14 min]

[INFO] Flink : Table : SQL Client ......................... SUCCESS [ 10.765 s]

[INFO] Flink : Libraries : State processor API ............ SUCCESS [ 0.733 s]

[INFO] Flink : Scala shell ................................ SUCCESS [ 11.145 s]

[INFO] Flink : Dist ....................................... SUCCESS [03:24 min]

[INFO] Flink : Yarn Tests ................................. SUCCESS [ 3.867 s]

[INFO] Flink : E2E Tests : ................................ SUCCESS [02:42 min]

[INFO] Flink : E2E Tests : CLI ............................ SUCCESS [ 0.131 s]

[INFO] Flink : E2E Tests : Parent Child classloading program SUCCESS [ 0.119 s]

[INFO] Flink : E2E Tests : Parent Child classloading lib-package SUCCESS [ 0.103 s]

[INFO] Flink : E2E Tests : Dataset allround ............... SUCCESS [ 0.119 s]

[INFO] Flink : E2E Tests : Dataset Fine-grained recovery .. SUCCESS [ 0.147 s]

[INFO] Flink : E2E Tests : Datastream allround ............ SUCCESS [ 0.710 s]

[INFO] Flink : E2E Tests : Batch SQL ...................... SUCCESS [ 0.112 s]

[INFO] Flink : E2E Tests : Stream SQL ..................... SUCCESS [ 0.122 s]

[INFO] Flink : E2E Tests : Distributed cache via blob ..... SUCCESS [ 0.131 s]

[INFO] Flink : E2E Tests : High parallelism iterations .... SUCCESS [ 7.028 s]

[INFO] Flink : E2E Tests : Stream stateful job upgrade .... SUCCESS [ 0.577 s]

[INFO] Flink : E2E Tests : Queryable state ................ SUCCESS [ 1.534 s]

[INFO] Flink : E2E Tests : Local recovery and allocation .. SUCCESS [ 0.152 s]

[INFO] Flink : E2E Tests : Elasticsearch 5 ................ SUCCESS [ 5.104 s]

[INFO] Flink : E2E Tests : Elasticsearch 6 ................ SUCCESS [ 2.661 s]

[INFO] Flink : Quickstart : ............................... SUCCESS [ 0.543 s]

[INFO] Flink : Quickstart : Java .......................... SUCCESS [01:24 min]

[INFO] Flink : Quickstart : Scala ......................... SUCCESS [ 0.071 s]

[INFO] Flink : E2E Tests : Quickstart ..................... SUCCESS [ 0.315 s]

[INFO] Flink : E2E Tests : Confluent schema registry ...... SUCCESS [ 2.002 s]

[INFO] Flink : E2E Tests : Stream state TTL ............... SUCCESS [ 5.917 s]

[INFO] Flink : E2E Tests : SQL client ..................... SUCCESS [01:18 min]

[INFO] Flink : E2E Tests : File sink ...................... SUCCESS [ 0.898 s]

[INFO] Flink : E2E Tests : State evolution ................ SUCCESS [ 0.501 s]

[INFO] Flink : E2E Tests : RocksDB state memory control ... SUCCESS [ 0.534 s]

[INFO] Flink : E2E Tests : Common ......................... SUCCESS [ 0.578 s]

[INFO] Flink : E2E Tests : Metrics availability ........... SUCCESS [ 0.165 s]

[INFO] Flink : E2E Tests : Metrics reporter prometheus .... SUCCESS [ 0.197 s]

[INFO] Flink : E2E Tests : Heavy deployment ............... SUCCESS [ 8.971 s]

[INFO] Flink : E2E Tests : Connectors : Google PubSub ..... SUCCESS [ 48.814 s]

[INFO] Flink : E2E Tests : Streaming Kafka base ........... SUCCESS [ 0.183 s]

[INFO] Flink : E2E Tests : Streaming Kafka ................ SUCCESS [ 8.357 s]

[INFO] Flink : E2E Tests : Plugins : ...................... SUCCESS [ 0.049 s]

[INFO] Flink : E2E Tests : Plugins : Dummy fs ............. SUCCESS [ 0.093 s]

[INFO] Flink : E2E Tests : Plugins : Another dummy fs ..... SUCCESS [ 0.147 s]

[INFO] Flink : E2E Tests : TPCH ........................... SUCCESS [ 5.189 s]

[INFO] Flink : E2E Tests : Streaming Kinesis .............. SUCCESS [ 17.962 s]

[INFO] Flink : E2E Tests : Elasticsearch 7 ................ SUCCESS [ 3.020 s]

[INFO] Flink : E2E Tests : Common Kafka ................... SUCCESS [ 1.408 s]

[INFO] Flink : E2E Tests : TPCDS .......................... SUCCESS [ 0.366 s]

[INFO] Flink : E2E Tests : Netty shuffle memory control ... SUCCESS [ 0.132 s]

[INFO] Flink : E2E Tests : Python ......................... SUCCESS [ 6.509 s]

[INFO] Flink : E2E Tests : HBase .......................... SUCCESS [ 1.420 s]

[INFO] Flink : E2E Tests : AWS Glue Schema Registry ....... SUCCESS [ 19.292 s]

[INFO] Flink : E2E Tests : Pulsar ......................... SUCCESS [ 1.578 s]

[INFO] Flink : State backends : Heap spillable ............ SUCCESS [ 0.270 s]

[INFO] Flink : Contrib : .................................. SUCCESS [ 0.022 s]

[INFO] Flink : Contrib : Connectors : Wikiedits ........... SUCCESS [ 2.737 s]

[INFO] Flink : FileSystems : Tests ........................ SUCCESS [ 0.718 s]

[INFO] Flink : Docs ....................................... SUCCESS [ 8.164 s]

[INFO] Flink : Walkthrough : .............................. SUCCESS [ 0.026 s]

[INFO] Flink : Walkthrough : Common ....................... SUCCESS [ 0.232 s]

[INFO] Flink : Walkthrough : Datastream Java .............. SUCCESS [ 0.061 s]

[INFO] Flink : Walkthrough : Datastream Scala ............. SUCCESS [ 0.059 s]

[INFO] Flink : Tools : CI : Java .......................... SUCCESS [ 49.851 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 02:18 h

[INFO] Finished at: 2023-05-25T13:05:59+08:00

[INFO] ------------------------------------------------------------------------

[WARNING]

[WARNING] Plugin validation issues were detected in 38 plugin(s)

[WARNING]

[WARNING] * org.apache.maven.plugins:maven-compiler-plugin:3.8.0

[WARNING] * com.github.eirslett:frontend-maven-plugin:1.9.1

[WARNING] * org.apache.maven.plugins:maven-checkstyle-plugin:2.17

[WARNING] * org.apache.maven.plugins:maven-clean-plugin:3.1.0

[WARNING] * org.apache.maven.plugins:maven-assembly-plugin:3.0.0

[WARNING] * com.github.os72:protoc-jar-maven-plugin:3.11.4

[WARNING] * com.github.siom79.japicmp:japicmp-maven-plugin:0.11.0

[WARNING] * org.codehaus.gmavenplus:gmavenplus-plugin:1.8.1

[WARNING] * org.apache.maven.plugins:maven-jar-plugin:2.4

[WARNING] * org.apache.maven.plugins:maven-antrun-plugin:1.8

[WARNING] * org.apache.maven.plugins:maven-clean-plugin:2.5

[WARNING] * org.apache.maven.plugins:maven-install-plugin:2.5.2

[WARNING] * org.apache.maven.plugins:maven-jar-plugin:2.5

[WARNING] * net.alchim31.maven:scala-maven-plugin:3.2.2

[WARNING] * org.apache.avro:avro-maven-plugin:1.10.0

[WARNING] * org.antlr:antlr3-maven-plugin:3.5.2

[WARNING] * com.googlecode.fmpp-maven-plugin:fmpp-maven-plugin:1.0

[WARNING] * org.antlr:antlr4-maven-plugin:4.7

[WARNING] * org.apache.maven.plugins:maven-antrun-plugin:1.7

[WARNING] * com.diffplug.spotless:spotless-maven-plugin:2.4.2

[WARNING] * org.apache.maven.plugins:maven-assembly-plugin:2.4

[WARNING] * org.apache.maven.plugins:maven-remote-resources-plugin:1.5

[WARNING] * org.apache.maven.plugins:maven-archetype-plugin:2.2

[WARNING] * org.apache.maven.plugins:maven-shade-plugin:3.1.1

[WARNING] * org.scalastyle:scalastyle-maven-plugin:1.0.0

[WARNING] * pl.project13.maven:git-commit-id-plugin:4.0.2

[WARNING] * org.apache.maven.plugins:maven-surefire-plugin:2.22.2

[WARNING] * org.apache.maven.plugins:maven-surefire-plugin:2.22.1

[WARNING] * org.apache.maven.plugins:maven-enforcer-plugin:3.0.0-M1

[WARNING] * org.apache.maven.plugins:maven-dependency-plugin:3.1.1

[WARNING] * org.apache.maven.plugins:maven-source-plugin:3.0.1

[WARNING] * org.apache.maven.plugins:maven-resources-plugin:3.1.0

[WARNING] * org.commonjava.maven.plugins:directory-maven-plugin:0.1

[WARNING] * org.apache.maven.plugins:maven-surefire-plugin:2.19.1

[WARNING] * org.codehaus.mojo:exec-maven-plugin:1.5.0

[WARNING] * org.xolstice.maven.plugins:protobuf-maven-plugin:0.5.1

[WARNING] * org.xolstice.maven.plugins:protobuf-maven-plugin:0.6.1

[WARNING] * org.apache.rat:apache-rat-plugin:0.12

[WARNING]

[WARNING] For more or less details, use 'maven.plugin.validation' property with one of the values (case insensitive): [BRIEF, DEFAULT, VERBOSE]

[WARNING]

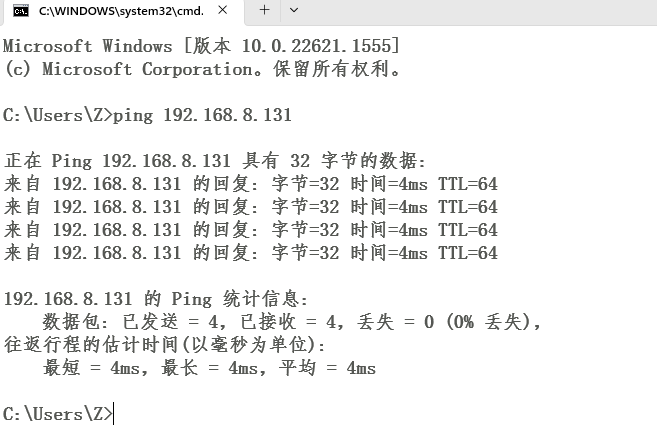

[root@TCT003 flink-1.14.4]#

III. Testing

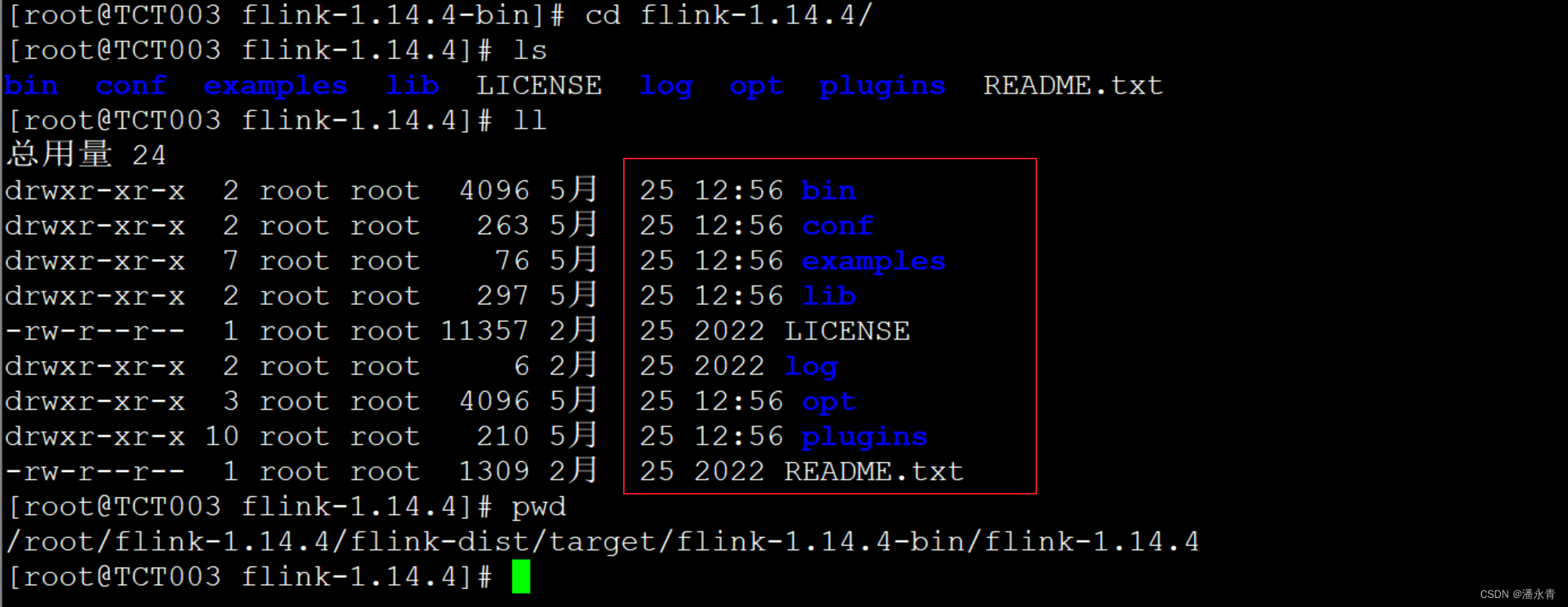

1. Get the compiled Flink 1.14.4 distribution

/root/flink-1.14.4/flink-dist/target/flink-1.14.4-bin

2. Configure the jars in Flink's lib directory

Where the jars come from:

flink-sql-connector-hive-2.2.0_2.11-1.14.4.jar

/root/flink-1.14.4/flink-connectors/flink-sql-connector-hive-2.2.0/target/flink-sql-connector-hive-2.2.0_2.11-1.14.4.jar

flink-connector-hive_2.11-1.14.4.jar

/root/flink-1.14.4/flink-connectors/flink-connector-hive/target/flink-connector-hive_2.11-1.14.4.jar

libfb303-0.9.3.jar

Available from the CDH lib directory
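Copying the three jars can be scripted so that a missing file is reported instead of silently skipped. A minimal sketch: the helper `stage_jars` is a hypothetical name, and the target and CDH paths in the commented usage are assumptions taken from paths that appear in the SQL Client log later in this section, so adjust them to your installation.

```shell
#!/usr/bin/env bash
# Copy each listed jar into an existing lib directory and report missing ones.
# Returns non-zero if the directory is absent or any jar could not be found.
stage_jars() {
  local dest="$1"; shift
  local missing=0 jar
  [ -d "$dest" ] || { echo "no such directory: $dest" >&2; return 1; }
  for jar in "$@"; do
    if [ -f "$jar" ]; then
      cp -- "$jar" "$dest/" && echo "copied: $(basename "$jar")"
    else
      echo "missing: $jar" >&2
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}

# Example usage (paths are assumptions, uncomment after checking them):
# SRC=/root/flink-1.14.4/flink-connectors
# CDH_JARS=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars
# stage_jars /opt/flink-1.14.4/lib \
#   "$SRC/flink-sql-connector-hive-2.2.0/target/flink-sql-connector-hive-2.2.0_2.11-1.14.4.jar" \
#   "$SRC/flink-connector-hive/target/flink-connector-hive_2.11-1.14.4.jar" \
#   "$CDH_JARS/libfb303-0.9.3.jar"
```

On a standalone cluster the jars would presumably need to be present on every node, and the cluster restarted, before the SQL Client picks them up.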

3. Verification with the SQL Client

Note: I have already set up a Flink standalone cluster, so I will not go over that again here.

[root@TCT001 bin]# cat /opt/flink-1.14.4/conf/sql-conf.sql

CREATE CATALOG my_hive WITH (

'type' = 'hive',

'hive-version' = '2.1.1',

'default-database' = 'default',

'hive-conf-dir' = '/etc/hive/conf.cloudera.hive/',

'hadoop-conf-dir'='/etc/hadoop/conf.cloudera.hdfs/'

);

-- set the HiveCatalog as the current catalog of the session

USE CATALOG my_hive;

[root@TCT001 bin]#

[root@TCT001 bin]#

[root@TCT001 bin]#

[root@TCT001 bin]# ./sql-client.sh embedded -i ../conf/sql-conf.sql

Setting HBASE_CONF_DIR=/etc/hbase/conf because no HBASE_CONF_DIR was set.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/flink-1.14.4/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Successfully initialized from sql script: file:/opt/flink-1.14.4/bin/../conf/sql-conf.sql

Command history file path: /root/.flink-sql-history

▒▓██▓██▒

▓████▒▒█▓▒▓███▓▒

▓███▓░░ ▒▒▒▓██▒ ▒

░██▒ ▒▒▓▓█▓▓▒░ ▒████

██▒ ░▒▓███▒ ▒█▒█▒

░▓█ ███ ▓░▒██

▓█ ▒▒▒▒▒▓██▓░▒░▓▓█

█░ █ ▒▒░ ███▓▓█ ▒█▒▒▒

████░ ▒▓█▓ ██▒▒▒ ▓███▒

░▒█▓▓██ ▓█▒ ▓█▒▓██▓ ░█░

▓░▒▓████▒ ██ ▒█ █▓░▒█▒░▒█▒

███▓░██▓ ▓█ █ █▓ ▒▓█▓▓█▒

░██▓ ░█░ █ █▒ ▒█████▓▒ ██▓░▒

███░ ░ █░ ▓ ░█ █████▒░░ ░█░▓ ▓░

██▓█ ▒▒▓▒ ▓███████▓░ ▒█▒ ▒▓ ▓██▓

▒██▓ ▓█ █▓█ ░▒█████▓▓▒░ ██▒▒ █ ▒ ▓█▒

▓█▓ ▓█ ██▓ ░▓▓▓▓▓▓▓▒ ▒██▓ ░█▒

▓█ █ ▓███▓▒░ ░▓▓▓███▓ ░▒░ ▓█

██▓ ██▒ ░▒▓▓███▓▓▓▓▓██████▓▒ ▓███ █

▓███▒ ███ ░▓▓▒░░ ░▓████▓░ ░▒▓▒ █▓

█▓▒▒▓▓██ ░▒▒░░░▒▒▒▒▓██▓░ █▓

██ ▓░▒█ ▓▓▓▓▒░░ ▒█▓ ▒▓▓██▓ ▓▒ ▒▒▓

▓█▓ ▓▒█ █▓░ ░▒▓▓██▒ ░▓█▒ ▒▒▒░▒▒▓█████▒

██░ ▓█▒█▒ ▒▓▓▒ ▓█ █░ ░░░░ ░█▒

▓█ ▒█▓ ░ █░ ▒█ █▓

█▓ ██ █░ ▓▓ ▒█▓▓▓▒█░

█▓ ░▓██░ ▓▒ ▓█▓▒░░░▒▓█░ ▒█

██ ▓█▓░ ▒ ░▒█▒██▒ ▓▓

▓█▒ ▒█▓▒░ ▒▒ █▒█▓▒▒░░▒██

░██▒ ▒▓▓▒ ▓██▓▒█▒ ░▓▓▓▓▒█▓

░▓██▒ ▓░ ▒█▓█ ░░▒▒▒

▒▓▓▓▓▓▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒▒░░▓▓ ▓░▒█░

______ _ _ _ _____ ____ _ _____ _ _ _ BETA

| ____| (_) | | / ____|/ __ \| | / ____| (_) | |

| |__ | |_ _ __ | | __ | (___ | | | | | | | | |_ ___ _ __ | |_

| __| | | | '_ \| |/ / \___ \| | | | | | | | | |/ _ \ '_ \| __|

| | | | | | | | < ____) | |__| | |____ | |____| | | __/ | | | |_

|_| |_|_|_| |_|_|\_\ |_____/ \___\_\______| \_____|_|_|\___|_| |_|\__|

Welcome! Enter 'HELP;' to list all available commands. 'QUIT;' to exit.

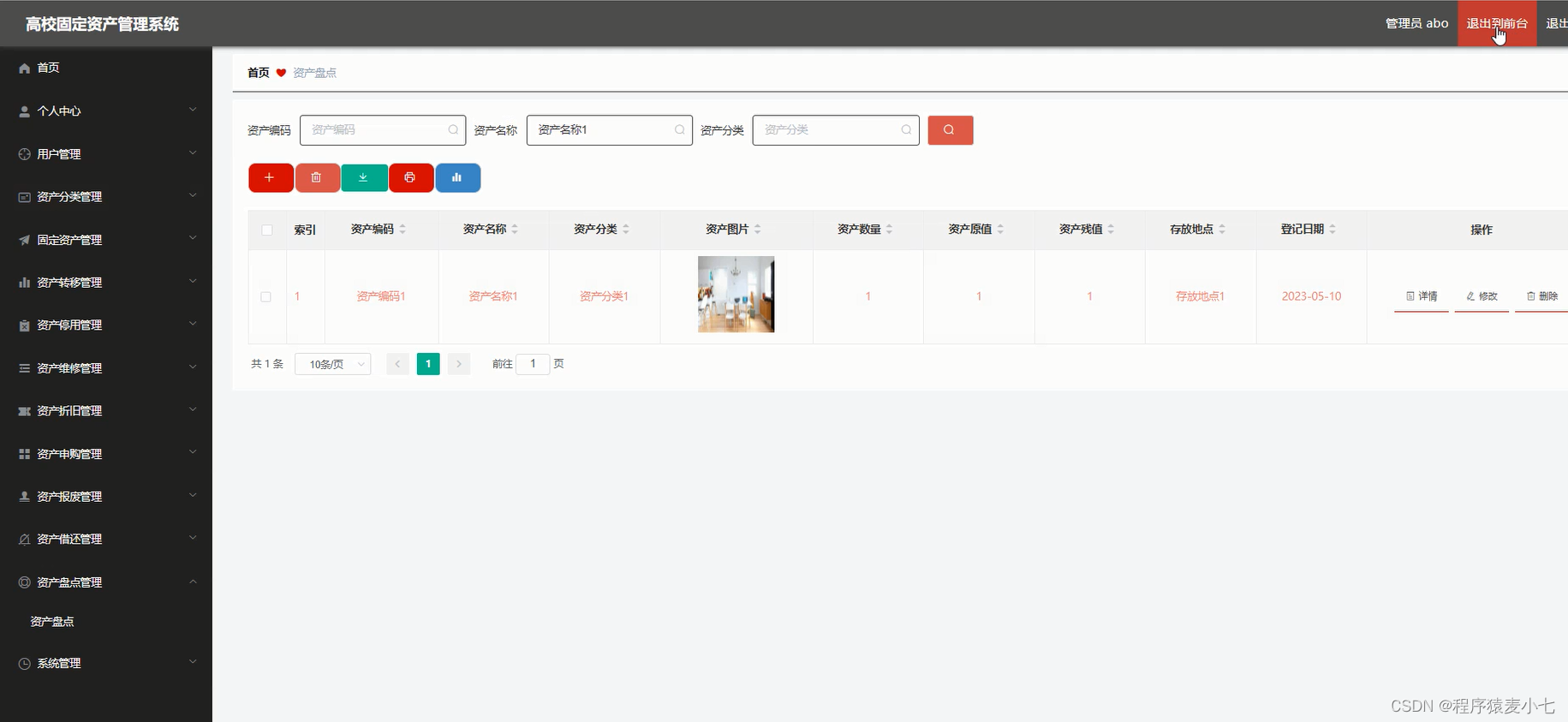

Flink SQL> show tables;

+------------+

| table name |

+------------+

| hive_k |

| k |

+------------+

2 rows in set

Flink SQL> set table.sql-dialect=hive;

[INFO] Session property has been set.

Flink SQL> select * from hive_k;

2023-05-25 18:07:14,422 INFO org.apache.hadoop.mapred.FileInputFormat [] - Total input files to process : 0

SQL Query Result (Table)

Table program finished. Page: Last of 1 Updated: 18:07:19.966

tinyint0 smallint1 int2 bigint3 float4 double5 decimal6 boolean7 char8 varchar9 stri

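IV. Related downloads

If your versions are the same as mine, you can download the package and use it directly.

flink-1.14.4.tgz download

Link: https://pan.baidu.com/s/1L3idoPOs_LzgamOt9e5rZg?pwd=gqj0

Extraction code: gqj0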