多源传感器GNSS INS 视觉 LiDAR 组合导航与SLAM开源项目总结

本文基于 吴桐wutong 微信公众号文章完善而来。

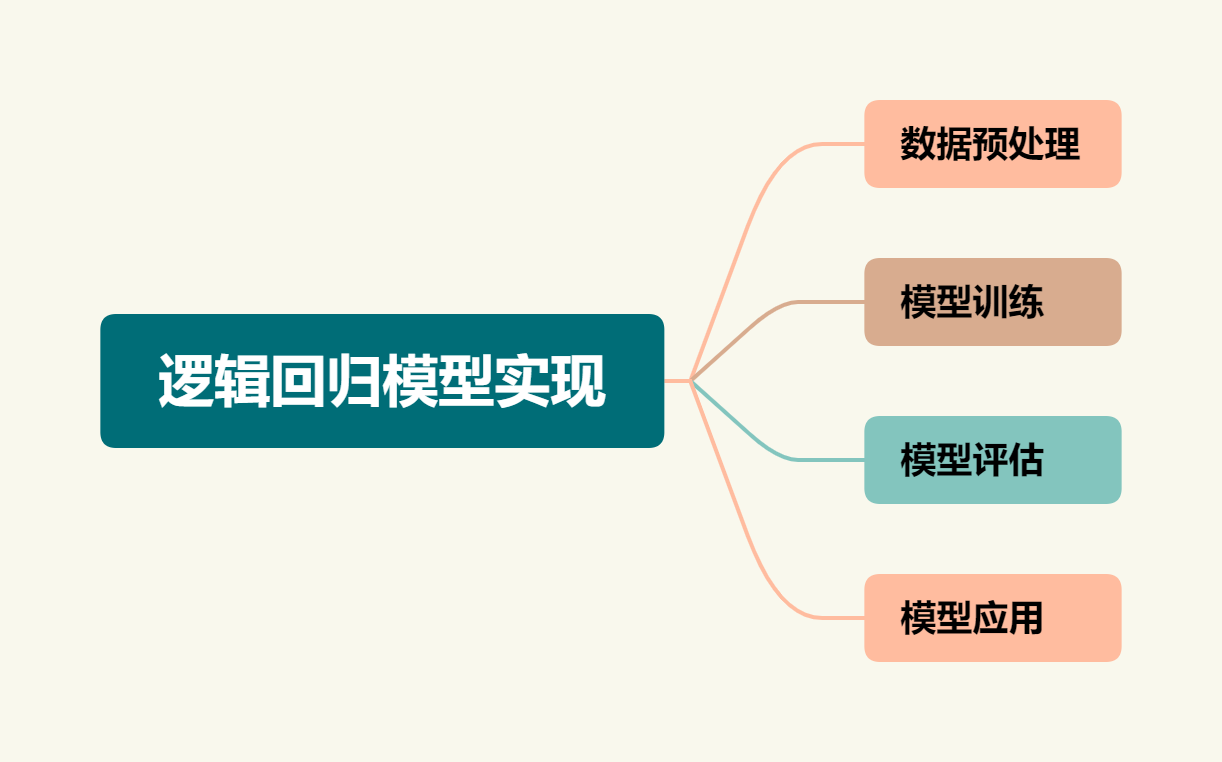

开源代码总览

| 名称 | 传感器类型 | 组合类型 | 滤波方法 | 备注 |

|---|---|---|---|---|

| RTKLIB | G | - | KF | GAMP、rtklibexplorer https://www.rtklib.com/ |

| GPSTK | G | - | KF | https://github.com/SGL-UT/GPSTk |

| BNC | G | - | KF | ppp_wizard |

| KF_GINS | G、I | 松组合 | KF | OB_GINS https://github.com/i2Nav-WHU/KF-GINS/blob/main/README_CN.md |

| PSINS | G、I | 紧组合 | KF | http://www.psins.org.cn |

| OB_GINS | G、I | 松组合 | 图优化 | https://github.com/i2Nav-WHU/OB_GINS |

| igNav | G、I | 紧组合 | 图优化 | rtklib https://github.com/Erensu/ignav |

| LOAM/LeGo-LOAM | 2D 雷达 | 仅雷达点云 | https://github.com/laboshinl/loam_velodyne https://github.com/RobustFieldAutonomyLab/LeGO-LOAM | |

| LIO-SAM | G、I、3D雷达 | 松组合G、紧组合LI | 图优化 | https://github.com/smilefacehh/LIO-SAM-DetailedNote |

| LVI-SAM | G、V、I、3D雷达 | 松组合G、松组合VI以及LI子系统 | 因子图优化 | https://github.com/TixiaoShan/LVI-SAM |

| SVO | MV | 仅图像,直接法 | https://github.com/uzh-rpg/rpg_svo https://rpg.ifi.uzh.ch/svo_pro.html | |

| LSD-SLAM | MV、SV | 直接法 | KF | https://github.com/tum-vision/lsd_slam https://github.com/apesIITM/lsd_slam_stereo |

| ORB-SLAM | MV、 | 图像特征 | https://github.com/raulmur/ORB_SLAM | |

| ORB-SLAM2 | MV、SV、RGB-D | 图像描述子 | Bundle Adjustment | https://github.com/electech6/ORB_SLAM2_detailed_comments https://github.com/raulmur/ORB_SLAM2 |

| ORB-SLAM3 | MV、SV、RGB-D、I | 图像描述子 ,紧耦合VI | 图优化 | https://github.com/electech6/ORB_SLAM3_detailed_comments https://github.com/UZ-SLAMLab/ORB_SLAM3 |

| msckf_vio | SV、I | 紧组合VI | MSCKF | https://github.com/KumarRobotics/msckf_vio |

| VINS-Mono | MV、I | 紧组合VI | 图优化 | https://github.com/HKUST-Aerial-Robotics/VINS-Mono |

| VINS-Fusion | G、MV、SV、I | 松组合G,紧组合VI,图像用KLT | 图优化 | https://github.com/HKUST-Aerial-Robotics/VINS-Fusion |

| GVINS | G、MV、SV、I | 紧组合G,紧组合VI | 图优化 | https://github.com/HKUST-Aerial-Robotics/GVINS |

| IC_GVINS | G、V、I | 紧组合G、紧组合VI、松组合GI | 图优化 | https://github.com/i2Nav-WHU/IC-GVINS |

| InGVIO | G、V、I | 紧组合 | Invariant EKF | https://github.com/ChangwuLiu/InGVIO |

| OpenVINS | MV、SV、I | 紧组合VI | MSCKF | https://github.com/rpng/open_vins https://docs.openvins.com/ |

(MonoV: 单目相机;StereoV: 双目相机;2D LiDAR; 3D LiDAR)

表中前半部分组合类型是以GNSS为准,即使用GNSS原始数据,则为紧组合。滤波方法中的图优化,其实就是最小二乘,但不仅仅是最小二乘。此外,视觉惯性里程计VIO的紧耦合是指:

- 松耦合(Loosely Coupled): 松耦合是指IMU和相机分别进行自身的运动估计,然后对其位姿估计结果进行融合,两个模块更新频率不一致 , 模块之间存在一定的信息交换,在松耦合方式中以惯性数据为核心 , 视觉测量数据修正惯性测量数据的累积误差。

- 紧耦合(Tightly Coupled): 紧耦合是指把IMU的状态与相机的状态合并在一起,共同构建运动方程和观测方程,然后进行状态估计,IMU的尺度度量信息可以用于辅助视觉中的尺度的估计。

由于作者不是很熟悉CV与SLAM,因此一些VSLAM的开源项目的分类不是那么确切。希望同行批评指正。

以下是各个项目的详细介绍。

RTKLIB

An Open Source Program Package for GNSS Positioning

RTKLIB is an open source program package for standard and precise positioning with GNSS (global navigation satellite system). RTKLIB consists of a portable program library and several APs (application programs) utilizing the library.

GPSTK

GPSTK已更名为GNSSTK,并在github分为两个项目,分别为GNSSTK (libraries)和GNSSTK-APPS (applications)。The primary goals of the GPSTk project are to:

- provide applications for use by the GNSS and satellite navigation community.

- provide a core library to facilitate the development of GNSS applications.

GPSTk核心库提供了许多在GNSS教科书和经典论文中发现的模型和算法,例如求解用户位置或估计大气折射。还支持RINEX等常见数据格式。

GPSTk库中有几种类型的函数:

-

全球定位系统(GPS)的时间。时间表示之间的转换,如MJD、GPS周和秒,以及许多其他。

-

星历表计算。广播和精确星历表的位置和时钟插补。

-

大气延迟模型。包括电离层和对流层模型。

-

位置的解决方案。包括接收机自主完整性监控算法的实现。

-

数学。包括矩阵和矢量实现,以及插值和数值积分。

-

GNSS数据结构。包含根据年代、卫星、来源和观测类型绘制的观测数据结构。还提供了适当的处理类,包括完整的“精确点定位”(PPP)处理链。

-

应用程序框架。包括处理命令行选项、提供交互式帮助和使用文件系统。

BKG Ntrip Client (BNC)

BNC是专门为实时定位而生的软件。大量的同学使用其作为实时精密单点定位的基础软件进行二次开发。

The BKG Ntrip Client (BNC) is an Open Source multi-stream client program designed for a variety of real-time GNSS applications.It was primarily designed for receiving data streams from any Ntrip supporting Broadcaster. The program handles the HTTP communication and transfers received GNSS data to a serial or IP port feeding networking software or a DGPS/RTK application. It can compute a real-time Precise Point Positioning (PPP) solution from RTCM streams or RINEX files. During the last years BNC has been enriched with RINEX quality and editing functions. You can run BNC with GUI as well as in batch processing mode.

PSINS

PSINS(Precise Strapdown Inertial Navigation System,高精度捷联惯导算法)网站主要介绍高精度捷联惯导系统及其组合导航系统的算法原理和软件实现,由西北工业大学自动化学院惯性技术教研室严老师开发,Matlab & C++ 核心代码全部开源,本站同时还提供丰富的惯导原始数据和相关学习资料,宗旨是“致力于使专业实用的捷联惯导算法问题不再成为问题”。网站作者将尽最大努力以完善代码和数据资料的正确性、完整性和可靠性,但当网友将代码移植应用于正式产品时,作者不承诺它们总是有效的。

PSINS工具箱主要应用于捷联惯导系统的数据处理和算法验证开发,它包括

- 惯性传感器数据分析、

- 惯组标定、

- 初始对准、

- 惯导AVP(姿态-速度-位置)更新解算、

- 组合导航Kalman滤波

等功能。

KF_GINS OB_GINS IC-GINS

三个项目均是武汉大学多源智能导航实验室(i2Nav)开源的多传感器融合的项目代码。不过遗憾的是,均是以松组合的形式融合GNSS数据。其中KF_GINS、OB_GINS分别为基于EKF和图优化的数据处理方法,其输入和输出相同,两套开源代码可以同时阅读,相互印证。IC-GINS则因为视觉的加入,同样需要基于ROS系统,但其代码与OB_GINS也有很多相通之处。

An EKF-Based GNSS/INS Integrated Navigation System

基于扩展卡尔曼滤波的GNSS/INS组合导航软件KF-GINS1。KF-GINS实现经典的GNSS位置和IMU数据的组合导航解算,算法实现参考牛小骥教授和陈起金博士的《惯性导航原理与GNSS/INS组合导航》课程讲义,作为课程的配套资源。软件采用C++语言编写,采用CMake管理项目。 KF-GINS的主要特点有:

- 扩展卡尔曼滤波架构(误差状态向量)的GNSS/INS松组合算法,包括IMU误差补偿、惯性导航解算、Kalman滤波、误差反馈等环节

- 采用21维系统误差状态,包括位置误差、速度误差、姿态误差、IMU零偏误差和IMU比例因子误差

- 姿态误差采用Phi角模型,速度、位置误差定义在导航坐标系下

- 惯性导航解算采用基于线性变化假设的双子样机械编排算法,补偿了姿态圆锥效应、速度的旋转效应和划桨效应

Optimization Based GINS

Optimization-Based GNSS/INS Integrated Navigation System

We open-source OB_GINS, an optimization-based GNSS/INS integrated navigation system. The main features of OB_GINS are as follows:

- A sliding-window optimizer for GNSS/INS integration;

- Abstract IMU-preintegration implementation, including:

- The normal IMU preintegration without the Earth’s rotation consideration;

- The normal IMU/ODO preintegration;

- The refined IMU preintegration with the Earth’s rotation consideration;

- The refined IMU/ODO preintegration;

- Implementation of the marginalization;

- Tools for attitude parameterization and coordinate frames;

- Tools for file IO;

ignav是基于rtklib二次开发的GNSS/INS紧组合算法。对于熟悉rtklib的同学,如果想扩展自己的技术路线,学习GNSS/INS组合导航相关算法,ignav是一个不错的选择。ignav基本将现有的一些组合算法均已实现,包括但不限于与RTK的紧组合/里程计辅助/非完整性约束等。而且ignav的编码风格与rtklib一脉相承,注释以及文档一应俱全,对初学者十分友好。

github简介:

IGNAV基于RTKLIB开发的INS/GNSS组合导航算法库,采用C语言编写;IGNAV主要功能包括:

- 松耦合算法;

- SPP、PPP、DGPS、RTK紧耦合算法;

- 里程计辅助;

- 磁力计辅助;

- NHC、ZUPT、ZARU等运动约束;

- Doppler辅助INS/GNSS;

- 双天线航向辅助;

- 静对准、动对准初始化;

- INS正向和反向机械编排;

- INS/GNSS正向和反向组合滤波;

- 初步支持视觉信息辅助定位定姿;

- RTS/前后向滤波平滑;

- 车载轨迹动态显示等。

LOAM

Laser Odometry and Mapping (Loam) is a realtime method for state estimation and mapping using a 3D lidar.

LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

This repository contains code for a lightweight and ground optimized lidar odometry and mapping (LeGO-LOAM) system for ROS compatible UGVs. The system takes in point cloud from a Velodyne VLP-16 Lidar (palced horizontally) and optional IMU data as inputs. It outputs 6D pose estimation in real-time.

Lidar-inertial Odometry

An updated lidar-initial odometry package, LIO-SAM, has been open-sourced and available for testing.

LIO-SAM

LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping

A real-time lidar-inertial odometry package. We strongly recommend the users read this document thoroughly and test the package with the provided dataset first.

这个包基于LeGo-LOAM拓展而来,添加了IMU预积分和GPS因子:前段使用紧耦合的IMU融合方式,替代原有的帧间里程计,使得前段更佳轻量;后段沿用LeGo-LOAM,在此基础上融入了GPS观测。同时前后端相互耦合,提高系统精度。

Shan, T., Englot, B., Meyers, D., Wang, W., Ratti, C., & Daniela, R. (2020). LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 5135–5142. IEEE. Retrieved from https://arxiv.org/abs/2007.00258

LVI-SAM

LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping.

A lidar-visual-inertial odometry and mapping system, which combines the advantages of LIO-SAM and Vins-Mono at a system level.

知乎专栏——LVI-SAM:LIO-SAM 与 Vins-Mono 紧耦合系统

Shan, T., Englot, B., Ratti, C., & Rus, D. (2021). LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping. 2021 IEEE International Conference on Robotics and Automation (ICRA), 5692–5698. https://arxiv.org/abs/2104.10831

摘要:我们提出了一种通过平滑和建图实现紧密耦合激光-视觉-惯性里程计的框架LVI-SAM,该框架实现了高精度和鲁棒性的实时状态估计和地图构建。LVI-SAM建立在一个因子图上,由两个子系统组成:视觉惯性系统(VIS)和激光惯性系统(LIS)。这两个子系统以紧密耦合的方式设计,其中VIS利用LIS估计来促进初始化。利用激光雷达测量提取视觉特征的深度信息,提高了VIS的精度。反过来,LIS利用VIS估计进行初始猜测,以支持扫描匹配。循环闭包首先由VIS识别,然后由LIS进一步细化。当两个子系统中的一个发生故障时,LVI-SAM也可以正常工作,这增加了它在无纹理和无特征环境中的鲁棒性。LVI-SAM在不同规模和环境下从多个平台收集的数据集上进行了广泛的评估。我们的实现在这个URL中开源。

SVO

Semi-direct Visual Odometry

SVO: Fast Semi-Direct Monocular Visual Odometry

C. Forster, M. Pizzoli, D. Scaramuzza, SVO: Fast Semi-Direct Monocular Visual Odometry, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

我们提出了一种半直接单目视觉里程计算法,它比目前最先进的方法精确、可靠和更快。半直接方法消除了昂贵的特征提取和鲁棒匹配技术的运动估计的需要。我们的算法直接操作像素强度,这导致亚像素精度在高帧率。采用显式模拟离群值测量的概率映射方法来估计3D点,从而获得更少的离群值和更可靠的点。精确和高帧率的运动估计在小的、重复的和高频纹理的场景中带来了更高的鲁棒性。该算法应用于gps拒绝环境下的微型飞行器状态估计,在机载嵌入式计算机上以每秒55帧的速度运行,在消费级笔记本电脑上以每秒300帧以上的速度运行。我们将我们的方法称为SVO(半直接视觉测程法),并将我们的实现作为开源软件发布。

What is SVO? SVO uses a semi-drect paradigm to estimate the 6-DOF motion of a camera system from both pixel intensities (direct) and features (without the necessity for time-consuming feature extraction and matching procedures), while achieving better accuracy by directly using the pixel intensities.

What does SVO Pro include? SVO Pro offers the following functionalities:

- -视觉测程:SVO的最新版本,支持单眼或立体声设置下的透视和鱼眼/折射相机。它还包括主动曝光控制。

- 视觉-惯性里程计:SVO前端+视觉-惯性滑动窗口优化后端(从OKVIS修改)

- -视觉惯性SLAM: SVO前端+视觉惯性滑动窗口优化后端+全局捆绑调整地图(使用iSAM2)。得益于iSAM2,全局地图可以实时更新,并用于帧率本地化。

- -视觉-惯性SLAM与循环关闭:循环关闭,通过DBoW2,集成在全局bundle调整。姿态图优化也包括作为全局bundle调整的轻量级替代。

Robotics and Perception Group, University of Zurich http://rpg.ifi.uzh.ch/

LSD-SLAM

Large-Scale Direct Monocular SLAM

LSD-SLAM是一种新颖的、直接的单目SLAM技术:它不使用关键点,而是直接对图像强度进行跟踪和映射。相机使用直接图像对齐进行跟踪,而几何形状以半密集深度图的形式进行估计,滤波经过多次像素立体比较得到。然后,我们构建关键帧的Sim(3)姿态图,这允许构建经过比例尺漂移校正的大比例尺地图,包括循环闭包。LSD-SLAM实时运行在CPU上,甚至在现代智能手机上。

LSD-SLAM-STEREO with Stereo Cameras

We propose a novel Large-Scale Direct SLAM algorithm for stereo cameras (Stereo LSD-SLAM) that runs in real-time at high frame rate on standard CPUs. In contrast to sparse interest-point based methods, our approach aligns images directly based on the photoconsistency of all highcontrast pixels, including corners, edges and high texture areas. It concurrently estimates the depth at these pixels from two types of stereo cues: Static stereo through the fixed-baseline stereo camera setup as well as temporal multi-view stereo exploiting the camera motion. By incorporating both disparity sources, our algorithm can even estimate depth of pixels that are under-constrained when only using fixed-baseline stereo. Using a fixed baseline, on the other hand, avoids scale-drift that typically occurs in pure monocular SLAM. We furthermore propose a robust approach to enforce illumination invariance, capable of handling aggressive brightness changes between frames – greatly improving the performance in realistic settings. In experiments, we demonstrate state-of-the-art results on stereo SLAM benchmarks such as Kitti or challenging datasets from the EuRoC Challenge 3 for micro aerial vehicles.

ORB-SLAM、ORB-SLAM2

ORB-SLAM: a Versatile and Accurate Monocular SLAM System

ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras

ORB-SLAM is a versatile and accurate Monocular SLAM solution able to compute in real-time the camera trajectory and a sparse 3D reconstruction of the scene in a wide variety of environments, ranging from small hand-held sequences to a car driven around several city blocks. It is able to close large loops and perform global relocalisation in real-time and from wide baselines.

See our project webpage: http://webdiis.unizar.es/~raulmur/orbslam/

ORB-SLAM2 is a real-time SLAM library for Monocular, Stereo and RGB-D cameras that computes the camera trajectory and a sparse 3D reconstruction (in the stereo and RGB-D case with true scale). It is able to detect loops and relocalize the camera in real time. We provide examples to run the SLAM system in the KITTI dataset as stereo or monocular, in the TUM dataset as RGB-D or monocular, and in the EuRoC dataset as stereo or monocular. We also provide a ROS node to process live monocular, stereo or RGB-D streams. The library can be compiled without ROS. ORB-SLAM2 provides a GUI to change between a SLAM Mode and Localization Mode, see section 9 of this document.

[Stereo and RGB-D] Raúl Mur-Artal and Juan D. Tardós. ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras. IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255-1262, 2017. PDF.

Raúl Mur-Artal, J. M. M. Montiel and Juan D. Tardós. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147-1163, 2015. (2015 IEEE Transactions on Robotics Best Paper Award). PDF.

ORB-SLAM3

ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM

ORB-SLAM3 is the first real-time SLAM library able to perform Visual, Visual-Inertial and Multi-Map SLAM with monocular, stereo and RGB-D cameras, using pin-hole and fisheye lens models. In all sensor configurations, ORB-SLAM3 is as robust as the best systems available in the literature, and significantly more accurate.

This software is based on ORB-SLAM2

[ORB-SLAM3] Carlos Campos, Richard Elvira, Juan J. Gómez Rodríguez, José M. M. Montiel and Juan D. Tardós, ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial and Multi-Map SLAM, IEEE Transactions on Robotics 37(6):1874-1890, Dec. 2021. PDF.

摘要: ORB-SLAM3是一个支持视觉、视觉加惯导、混合地图的SLAM系统,可以在单目,双目和RGB-D相机上利用针孔或者鱼眼模型运行。

他是第一个基于特征的紧耦合的VIO系统,仅依赖于最大后验估计(包括IMU在初始化时)。这样一个系统的效果就是:不管是在大场景还是小场景,室内还是室外都能鲁棒实时的运行,在精度上相比于上一版提升了2到5倍。本文的第二个创新点是根据改进recall的新的重定位模块来构建的混合地图,因为这个模块他可以让ORB-SLAM3在特征不是很好的场景中长期运行:当里程计失败的时候,系统会重新构建地图并将这个地图和原来构建的地图对齐。和那些仅利用最新的几帧数据的里程计相比,ORB-SLAM3是第一个能够在所有算法阶段重用所有先前信息的系统。这样的机制就可以在BA的时候用有共视关系的关键帧,即使两帧在时间相差很远,或者来自原来的建图过程。

这个系统在EuRoC数据集上达到了平均3.6cm的精度,在TUM-VI这种利用手持设备快速移动的数据集(AR/VR场景)上达到了9mm的精度。作者已经开源了代码.

[IMU-Initialization] Carlos Campos, J. M. M. Montiel and Juan D. Tardós, Inertial-Only Optimization for Visual-Inertial Initialization, ICRA 2020. PDF

MSCKF_VIO

Stereo Visual Inertial Odometry.

The MSCKF_VIO package is a stereo version of MSCKF. The software takes in synchronized stereo images and IMU messages and generates real-time 6DOF pose estimation of the IMU frame.

Sun, K., Mohta, K., Pfrommer, B., Watterson, M., Liu, S., Mulgaonkar, Y., … Kumar, V. (2018, January 10). Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. arXiv. https://doi.org/10.48550/arXiv.1712.00036

摘要:近年来,用于状态估计的视觉辅助惯性里程计已经显著成熟。然而,在微型飞行器自主飞行应用中,由于尺寸和重量的限制,很难使用高质量传感器和功率处理器,因此,在提高计算效率和鲁棒性方面,我们仍面临挑战。在本文中,我们提出了一种使用多状态约束卡尔曼滤波器(MSCKF)[1]的基于滤波器的立体视觉惯性里程计。先前关于立体视觉惯性里程计的工作已经产生了计算昂贵的解决方案。我们证明,我们的立体多状态约束卡尔曼滤波器(S-MSCKF)在计算成本方面与最先进的单眼解决方案相当,同时提供了显著更强的鲁棒性。我们评估了S-MSCKF算法,并在EuRoC数据集和我们自己的实验数据集上将其与最先进的方法(包括OKVIS、ROVIO和VINS-MONO)进行了比较,这些数据集演示了室内和室外环境中最高速度为17.5m/S的快速自主飞行。我们的S-MSCKF实施可在https://github.com/KumarRobotics/msckf_vio.

OpenVINS

OpenVINS: A Research Platform for Visual-Inertial Estimation

Welcome to the OpenVINS project! The OpenVINS project houses some core computer vision code along with a state-of-the art filter-based visual-inertial estimator. The core filter is an Extended Kalman filter which fuses inertial information with sparse visual feature tracks. These visual feature tracks are fused leveraging the Multi-State Constraint Kalman Filter (MSCKF) sliding window formulation which allows for 3D features to update the state estimate without directly estimating the feature states in the filter. Inspired by graph-based optimization systems, the included filter has modularity allowing for convenient covariance management with a proper type-based state system. Please take a look at the feature list below for full details on what the system supports.

- Github project page - https://github.com/rpng/open_vins

- Documentation - https://docs.openvins.com/

- Getting started guide - https://docs.openvins.com/getting-started.html

- Publication reference - https://pgeneva.com/downloads/papers/Geneva2020ICRA.pdf

Features

- Sliding window visual-inertial MSCKF

- Modular covariance type system

- Extendable visual-inertial simulator

- On manifold SE(3) b-spline

- Arbitrary number of cameras

- Arbitrary sensor rate

- Automatic feature generation

- Five different feature representations

- Global XYZ

- Global inverse depth

- Anchored XYZ

- Anchored inverse depth

- Anchored MSCKF inverse depth

- Anchored single inverse depth

- Calibration of sensor intrinsics and extrinsics

- Camera to IMU transform

- Camera to IMU time offset

- Camera intrinsics

- Environmental SLAM feature

- OpenCV ARUCO tag SLAM features

- Sparse feature SLAM features

- Visual tracking support

- Monocular camera

- Stereo camera (synchronized)

- Binocular cameras (synchronized)

- KLT or descriptor based

- Masked tracking

- Static and dynamic state initialization

- Zero velocity detection and updates

- Out of the box evaluation on EurocMav, TUM-VI, UZH-FPV, KAIST Urban and VIO datasets

- Extensive evaluation suite (ATE, RPE, NEES, RMSE, etc…)

- Comprehensive documentation and derivations

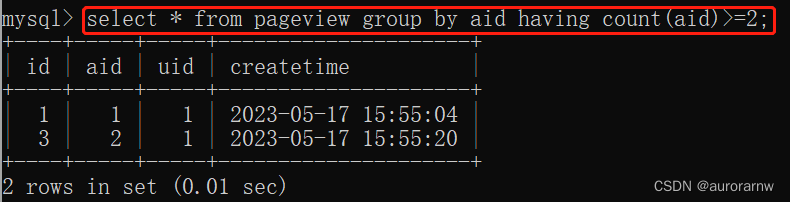

代码结构图

VINS-Mono

A Robust and Versatile Monocular Visual-Inertial State Estimator

VINS-Mono是一个用于单目视觉惯性系统的实时SLAM框架。它使用基于优化的滑动窗口公式提供高精度的视觉惯性里程计。它具有高效的IMU预集成,带有偏差校正,自动估计器初始化,在线外部校准,故障检测和恢复,环路检测,全局位姿图优化,映射合并,位姿图重用,在线时间校准,滚动快门支持。VINS-Mono主要用于自动无人机的状态估计和反馈控制,但它也能够为AR应用提供准确的定位。此代码运行在Linux上,并与ROS完全集成。iOS移动实现,请访问VINS-Mobile。

VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator, Tong Qin, Peiliang Li, Zhenfei Yang, Shaojie Shen, IEEE Transactions on Roboticspdf

VINS-Fusion

An optimization-based multi-sensor state estimator

VINS-Fusion是一种基于优化的多传感器状态估计器,可为自主应用(无人机、汽车和AR/VR)实现精确的自我定位。VINS-Fusion是VINS-Mono的扩展,支持多种视觉惯性传感器类型(单声道相机+ IMU,立体相机+ IMU,甚至仅立体相机)。我们还展示了一个融合VINS和GPS的玩具示例。特点:

- 支持多传感器(立体声摄像头/单声道摄像头+IMU /立体声摄像头+IMU)

- 在线空间标定(相机与IMU转换)

- 在线时间校准(相机与IMU之间的时间偏移)

- 可视化闭环

Qin, T., Cao, S., Pan, J., & Shen, S. (2019, January 11). A General Optimization-based Framework for Global Pose Estimation with Multiple Sensors. arXiv. https://doi.org/10.48550/arXiv.1901.03642

Qin, T., Pan, J., Cao, S., & Shen, S. (2019, January 11). A General Optimization-based Framework for Local Odometry Estimation with Multiple Sensors. arXiv. https://doi.org/10.48550/arXiv.1901.03638

GVINS

香港科技大学空中机器人组发布的最新的使用GNSS原始观测量的多传感器(GNSS/INS/camera)融合开源项目。其使用伪距和多普勒观测值,与INS以及视觉同时进行图优化,可以在全场景提供连续的平滑的6-Dof位姿。

GVINS is a non-linear optimization based system that tightly fuses GNSS raw measurements with visual and inertial information for real-time and drift-free state estimation. By incorporating GNSS pseudorange and Doppler shift measurements, GVINS is capable to provide smooth and consistent 6-DoF global localization in complex environment. The system framework and VIO part are adapted from VINS-Mono.

GVINS is a non-linear optimization based system that tightly fuses GNSS raw measurements with visual and inertial information for real-time and drift-free state estimation. By incorporating GNSS pseudorange and Doppler shift measurements, GVINS is capable to provide smooth and consistent 6-DoF global localization in complex environment. The system framework and VIO part are adapted from VINS-Mono.

Our system contains the following features:

- global 6-DoF estimation in ECEF frame;

- multi-constellation support (GPS, GLONASS, Galileo, BeiDou);

- online local-ENU frame alignment;

- global pose recovery in GNSS-unfriendly or even GNSS-denied area.

IC-GVINS

A Robust, Real-time, INS-Centric GNSS-Visual-Inertial Navigation System

Visual navigation systems are susceptible to complex environments, while inertial navigation systems (INS) are not affected by external factors. Hence, we present IC-GVINS, a robust, real-time, INS-centric global navigation satellite system (GNSS)-visual-inertial navigation system to fully utilize the INS advantages. The Earth rotation has been compensated in the INS to improve the accuracy of high-grade inertial measurement units (IMUs). To promote the system robustness in high-dynamic conditions, the precise INS information is employed to assist the feature tracking and landmark triangulation. With a GNSS-aided initialization, the IMU, visual, and GNSS measurements are tightly fused in a unified world frame within the factor graph optimization framework.

Authors: Hailiang Tang, Xiaoji Niu, and Tisheng Zhang from the Integrated and Intelligent Navigation (i2Nav) Group, Wuhan University.

Related Paper:

Xiaoji Niu, Hailiang Tang, Tisheng Zhang, Jing Fan, and Jingnan Liu, “IC-GVINS: A Robust, Real-time, INS-Centric GNSS-Visual-Inertial Navigation System,” IEEE Robotics and Automation Letters, 2022.

InGVIO

InGVIO是清华大学近期开源的一套多传感器数据融合项目,是基于invariant-EKF,紧融合GNSS伪距和多普勒,以及惯性传感器和单/双目视觉数据。从其论文来看,与当前基于图优化和基于EKF的算法相比, invariant-EKF在准确性和计算负载方面提供了极具竞争力的结果。与代码同时开源的,还有一组固定翼机载数据,下载地址请参考项目github。因为涉及到了视觉,所以ROS系统必不可少。好消息是InGVIO与GVINS使用相同的GNSS数据结构体gnss_comm,只需熟悉一次。

InGVIO is an invariant filter approach for fusion of monocular/stereo camera, IMU and raw GNSS measurements including pseudo ranges and Doppler shifts. InGVIO is intrinsically consistent under conditional infinitesimal invariance of the GNSS-Visual-Inertial system. InGVIO has the following key features:fast due to decoupled IMU propagation, key-frame marginalization strategy and no SLAM-features;accurate due to intrinsic consistency maintenance;better convergence properties than ‘naive’ EKF-based filters.

InGVIO是一种用于融合单目/立体摄像机、IMU和原始GNSS测量(包括伪距离和多普勒频移)的不变滤波方法。InGVIO在gnss -视觉-惯性系统条件无穷小不变性下具有本质一致性。InGVIO具有以下关键特性:由于解耦IMU传播,关键帧边缘化策略和无slam特征,速度快;由于内在一致性维护,精度高;比“naive”基于ekf的滤波器具有更好的收敛性能。

An invariant filter for visual-inertial-raw GNSS fusion.

Paper: InGVIO: A Consistent Invariant Filter For Fast and High-Accuracy GNSS-Visual-Inertial Odometry. Author:** Changwu Liu, Chen Jiang and Haowen Wang. https://arxiv.org/abs/2210.15145

参考:

- GNSS算法相关开源代码(含多传感器融合相关项目)——GNSS和自动驾驶,2022-12-11 20:48 发表于上海*

- 王金科, 左星星, 赵祥瑞, 等. 2022. 多源融合SLAM的现状与挑战[M]//中国图象图形学报: 卷 27. 368-389.

![[MRCTF2020]Ez_bypass1](https://img-blog.csdnimg.cn/img_convert/81cf413e9af64e04ade15a8a4323e464.png)