Case description:

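After a failover in a KingbaseES V8R3 cluster, two mistakes can cause trouble. The original primary may be started by hand without recovery.conf configured, or a standby may be promoted to primary manually. When such a node is later brought back into the cluster, its timeline has forked from the primary's, so adding it back directly fails. In this situation the sys_rewind tool can restore the node as a new standby and rejoin it to the cluster.

Applicable version: KingbaseES V8R3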

Case 1: after failover, the original primary's database service is started without recovery.conf configured

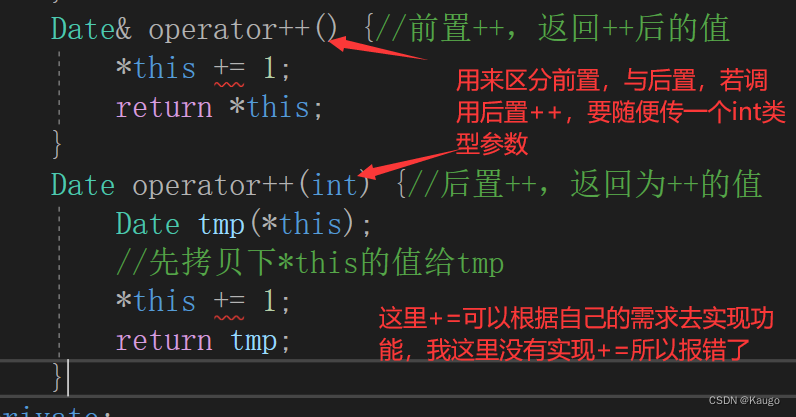

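After the cluster failed over, the original primary's database service was started without first creating a recovery.conf file in its data directory. recovery.conf was created afterwards, and the original primary was restarted so it could join streaming replication as a standby. This failed because its timeline no longer matched the new primary's. sys_rewind was then used to bring the original primary back into the cluster. The failure is shown in the figure below: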

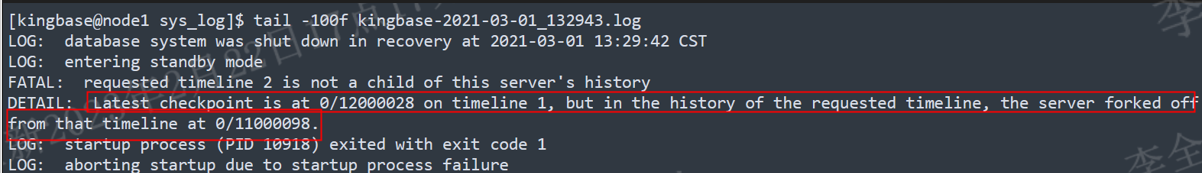

1. Check the sys_log on the original primary

As shown below, the database service fails to start as a standby because its timeline does not match the new primary's.

[kingbase@node1 sys_log]$ tail -100f kingbase-2021-03-01_132943.log

LOG: database system was shut down in recovery at 2021-03-01 13:29:42 CST

LOG: entering standby mode

FATAL: requested timeline 2 is not a child of this server's history

DETAIL: Latest checkpoint is at 0/12000028 on timeline 1, but in the history of the requested timeline, the server forked off from that timeline at 0/11000098.

LOG: startup process (PID 10918) exited with exit code 1

LOG: aborting startup due to startup process failure

LOG: database system is shut down
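The FATAL message above compares two positions. The first is the latest checkpoint, at 0/12000028 on timeline 1. The second is the point where timeline 2 forked off timeline 1, at 0/11000098. The Python sketch below models that comparison.

It rests on a few assumptions. KingbaseES V8R3 is assumed to behave like PostgreSQL here. LSNs are written as `high/low` in hex. History-file lines take the form `parentTLI  switchpoint  reason`. The function names are hypothetical, and this is not KingbaseES source code.

```python
# Simplified model of the startup-time timeline check.
# Assumption: KingbaseES V8R3 behaves like PostgreSQL; all names are hypothetical.


def parse_lsn(text: str) -> int:
    """Convert an LSN such as '0/12000028' into a 64-bit integer."""
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def fork_points(history_lines: list[str]) -> dict[int, int]:
    """Map each ancestor timeline to the LSN at which it was forked off."""
    ends = {}
    for line in history_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parent_tli, switchpoint = line.split()[:2]
        ends[int(parent_tli)] = parse_lsn(switchpoint)
    return ends


def checkpoint_on_history(ckpt_tli: int, ckpt_lsn: str,
                          requested_tli: int, history_lines: list[str]) -> bool:
    """Return True if the checkpoint lies on the history of requested_tli."""
    if ckpt_tli == requested_tli:
        return True
    ends = fork_points(history_lines)
    if ckpt_tli not in ends:
        return False  # the checkpoint's timeline is not an ancestor at all
    # The checkpoint must come before the point where its timeline was forked off.
    return parse_lsn(ckpt_lsn) < ends[ckpt_tli]


# LSNs taken from the log above; the history content of timeline 2 is assumed.
history_2 = ["1\t0/11000098\tno recovery target specified"]
print(checkpoint_on_history(1, "0/12000028", 2, history_2))  # False -> FATAL
```

A checkpoint at 0/10000028, which is before the fork point, would pass the same check. That is the "last common checkpoint" sys_rewind reports in its output.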

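---At startup, the database reads all X.history files in the sys_wal directory. It uses the one with the highest timeline ID to decide whether the checkpoint recorded in the control file is valid. An X.history file records the LSN at which each earlier timeline ended. Call the checkpoint LSN A, and the end LSN recorded in the history file for the checkpoint's timeline B. If A > B, the checkpoint is considered invalid and this error is reported.

2. Run sys_rewind on the original primary to rejoin the cluster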

[kingbase@node1 bin]$ ./sys_rewind -D /home/kingbase/cluster/kha/db/data --source-server='host=192.168.7.243 port=54321 user=system dbname=PROD' -P -n

connected to server

datadir_source = /home/kingbase/cluster/kha/db/data

rewinding from last common checkpoint at 0/10000028 on timeline 1

find last common checkpoint start time from 2021-03-01 13:36:24.675116 CST to 2021-03-01 13:36:24.702727 CST, in "0.027611" seconds.

reading source file list

reading target file list

reading WAL in target

need to copy 298 MB (total source directory size is 363 MB)

Rewind datadir file from source

Get archive xlog list from source

Rewind archive log from source

59462/305222 kB (19%) copied

creating backup label and updating control file

syncing target data directory

rewind start wal location 0/10000028 (file 000000010000000000000010), end wal location 0/11070290 (file 000000020000000000000011). time from 2021-03-01 13:36:25.675116 CST to 2021-03-01 13:36:25.379386 CST, in "0.704270" seconds.

Done!

3. Start the database service on the new standby

[kingbase@node1 bin]$ ./sys_ctl start -D ../data

server starting

......

[kingbase@node1 bin]$ ps -ef|grep kingbase

.......

kingbase 11983 13899 0 13:31 pts/1 00:00:00 tail -100f kingbase-2021-03-01_132943.log

kingbase 13611 1 0 13:36 pts/0 00:00:00 /home/kingbase/cluster/kha/db/bin/kingbase -D ../data

kingbase 13614 13611 0 13:36 ? 00:00:00 kingbase: logger process

kingbase 13615 13611 0 13:36 ? 00:00:00 kingbase: startup process recovering 000000020000000000000011

kingbase 13619 13611 0 13:36 ? 00:00:00 kingbase: checkpointer process

kingbase 13620 13611 0 13:36 ? 00:00:00 kingbase: writer process

kingbase 13621 13611 0 13:36 ? 00:00:00 kingbase: wal receiver process streaming 0/11070EB8

kingbase 13622 13611 0 13:36 ? 00:00:00 kingbase: stats collector process

4. Check the cluster node status

prod=# select * from sys_stat_replication ;

pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_location | write_location | flush_location | replay_location | sync_priority | sync_state

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+--

27104 | 10 | system | node248 | 192.168.7.248 | | 22355 | 2021-03-01 13:19:11.376063+08 | | streaming | 0/11070FD0 | 0/11070FD0 | 0/11070FD0 | 0/11070FD0 | 0 | async

(1 row)
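The LSNs in this output are related to the WAL file names in the ps listing, such as `startup process recovering 000000020000000000000011`. A WAL segment file name is the timeline ID followed by the segment number, with each part written as 8 hex digits.

The Python sketch below assumes the default 16 MB segment size and PostgreSQL's naming scheme. `wal_file_name` is a hypothetical helper, not a KingbaseES tool.

```python
WAL_SEGMENT_SIZE = 16 * 1024 * 1024  # assumption: default 16 MB WAL segments


def parse_lsn(text: str) -> int:
    """Convert an LSN such as '0/11070FD0' into a 64-bit integer."""
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def wal_file_name(tli: int, lsn: str, seg_size: int = WAL_SEGMENT_SIZE) -> str:
    """Return the name of the WAL segment that contains `lsn` on timeline `tli`."""
    segno = parse_lsn(lsn) // seg_size
    segs_per_xlogid = 0x100000000 // seg_size  # segments per 4 GB block of LSNs
    return "%08X%08X%08X" % (tli, segno // segs_per_xlogid, segno % segs_per_xlogid)


# The replay location reported above, on the new primary's timeline 2:
print(wal_file_name(2, "0/11070FD0"))  # 000000020000000000000011
```

The same function reproduces the file names printed by sys_rewind. For example, timeline 1 with 0/10000028 gives 000000010000000000000010.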

prod=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.7.243 | 54321 | up | 0.500000 | primary | 1 | true | 0

1 | 192.168.7.248 | 54321 | up | 0.500000 | standby | 0 | false | 0

(2 rows)
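If this check is repeated after every rejoin, it may be worth scripting. The Python sketch below parses the text that `show pool_nodes` prints, as it appears above. It reports the cluster as healthy only if every node is `up` and exactly one node has the `primary` role.

How the text is captured (for example through a psql session) is left out. The health criteria are an assumption for illustration, not a KingbaseES rule.

```python
def parse_pool_nodes(text: str) -> list[dict[str, str]]:
    """Turn the psql-style table printed by `show pool_nodes` into a list of dicts."""
    rows = [line.strip() for line in text.strip().splitlines() if line.strip()]
    header = [col.strip() for col in rows[0].split("|")]
    nodes = []
    for line in rows[1:]:
        if line.startswith("-") or line.startswith("("):
            continue  # skip the separator line and the "(N rows)" footer
        values = [val.strip() for val in line.split("|")]
        nodes.append(dict(zip(header, values)))
    return nodes


def cluster_healthy(nodes: list[dict[str, str]]) -> bool:
    """Assumed criteria: every node is up and exactly one node is primary."""
    all_up = all(node["status"] == "up" for node in nodes)
    primaries = sum(1 for node in nodes if node["role"] == "primary")
    return all_up and primaries == 1


sample = """
node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay
---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------
0 | 192.168.7.243 | 54321 | up | 0.500000 | primary | 1 | true | 0
1 | 192.168.7.248 | 54321 | up | 0.500000 | standby | 0 | false | 0
(2 rows)
"""
print(cluster_healthy(parse_pool_nodes(sample)))  # True
```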

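---As the query results show, the node has been restored as a new standby and has rejoined the cluster in a normal state.

Case 2: a standby promoted to primary rejoins the cluster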

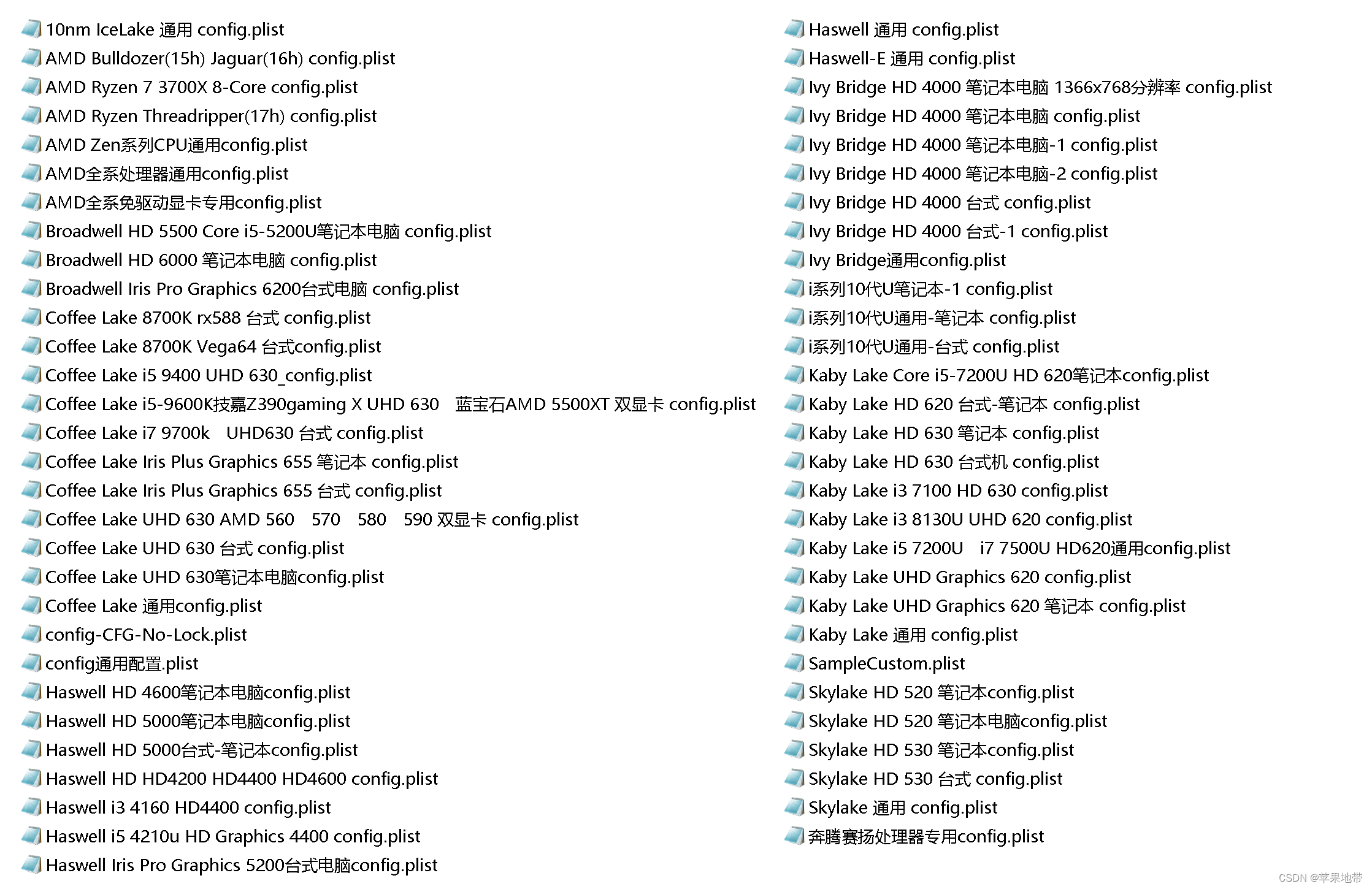

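A standby node was promoted and then used for testing. After testing, recovery.conf was configured in its data directory and the database service was started, which produced a timeline mismatch. The failure is shown below: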

1. Promote the standby

[kingbase@node102 bin]$ ./sys_ctl promote -D ../data

server promoting

2. Configure recovery.conf and start the database

1) Configure recovery.conf

[kingbase@node102 bin]$ cat ../data/recovery.conf

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.101 user=SYSTEM password=MTIzNDU2Cg== application_name=node2'

recovery_target_timeline='latest'

primary_slot_name ='slot_node2'

2) Start the database service

Tips: the standby database service starts normally, but it cannot join the cluster.

kingbase 19814 1 0 15:14 pts/0 00:00:00 /home/kingbase/cluster/HAR3/db/bin/kingbase -D ../data

kingbase 19815 19814 0 15:14 ? 00:00:00 kingbase: logger process

kingbase 19816 19814 0 15:14 ? 00:00:00 kingbase: startup process recovering 0000000600000000000000D3

kingbase 19820 19814 0 15:14 ? 00:00:00 kingbase: checkpointer process

kingbase 19821 19814 0 15:14 ? 00:00:00 kingbase: writer process

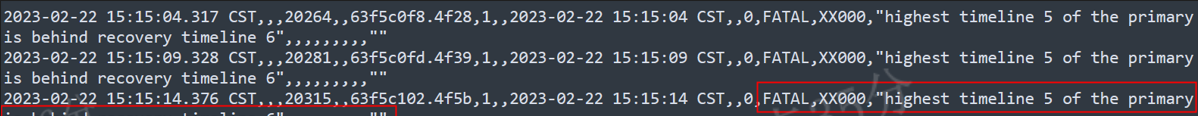

kingbase 19822 19814 0 15:14 ? 00:00:00 kingbase: stats collector process3、查看sys_log日志

2023-02-22 15:15:04.317 CST,,,20264,,63f5c0f8.4f28,1,,2023-02-22 15:15:04 CST,,0,FATAL,XX000,"highest timeline 5 of the primary is behind recovery timeline 6",,,,,,,,,""

2023-02-22 15:15:09.328 CST,,,20281,,63f5c0fd.4f39,1,,2023-02-22 15:15:09 CST,,0,FATAL,XX000,"highest timeline 5 of the primary is behind recovery timeline 6",,,,,,,,,""

2023-02-22 15:15:14.376 CST,,,20315,,63f5c102.4f5b,1,,2023-02-22 15:15:14 CST,,0,FATAL,XX000,"highest timeline 5 of the primary is behind recovery timeline 6",,,,,,,,,""
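In PostgreSQL, which this behaviour appears to follow, the message comes from the standby's WAL receiver. The primary reports its highest timeline, and streaming is refused if that timeline is older than the one the standby is recovering on.

The log and the ps listing suggest what happened here. Promoting node102 moved it from timeline 5 to timeline 6, while the primary at 192.168.1.101 stayed on timeline 5.

The Python sketch below models both steps. It assumes PostgreSQL's rule that a promoted server picks the newest known timeline plus one. The function names and the timeline history list are hypothetical.

```python
from typing import Optional


def promoted_timeline(current_tli: int, known_tlis: list[int]) -> int:
    """Timeline chosen at promotion: one above the newest known timeline (assumed rule)."""
    return max([current_tli, *known_tlis]) + 1


def walreceiver_check(primary_highest_tli: int, recovery_tli: int) -> Optional[str]:
    """Return the error text if streaming must be refused, otherwise None."""
    if primary_highest_tli < recovery_tli:
        return (f"highest timeline {primary_highest_tli} of the primary "
                f"is behind recovery timeline {recovery_tli}")
    return None


# node102 was on timeline 5 before promote; its earlier history (1..5) is assumed.
standby_tli = promoted_timeline(5, [1, 2, 3, 4, 5])  # -> 6
print(walreceiver_check(5, standby_tli))
# highest timeline 5 of the primary is behind recovery timeline 6
```

In this model, once sys_rewind puts node102 back on the primary's timeline 5, `walreceiver_check(5, 5)` returns `None` and streaming can proceed.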

---The log shows that the timelines of the standby and the primary do not match.

4. Check the streaming replication status

prod=# select * from sys_stat_replication ;

pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_location | write_location | flush_location | replay_location | sync_priority | sync_state

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+--

5. Run sys_rewind on the standby to recover it

1) Run sys_rewind

[kingbase@node102 bin]$ ./sys_rewind -D /home/kingbase/cluster/HAR3/db/data --source-server='host=192.168.1.101 port=54321 user=SYSTEM password=123456 dbname=PROD' -P -n

connected to server

datadir_source = /home/kingbase/cluster/HAR3/db/data

rewinding from last common checkpoint at 0/D1000108 on timeline 5

find last common checkpoint start time from 2023-02-22 15:18:54.216014 CST to 2023-02-22 15:18:54.233126 CST, in "0.017112" seconds.

reading source file list

reading target file list

reading WAL in target

need to copy 91 MB (total source directory size is 580 MB)

Rewind datadir file from source

Get archive xlog list from source

Rewind archive log from source

44827/93979 kB (47%) copied

creating backup label and updating control file

syncing target data directory

rewind start wal location 0/D10000D0 (file 0000000500000000000000D1), end wal location 0/D103EAA0 (file 0000000500000000000000D1). time from 2023-02-22 15:18:54.216014 CST to 2023-02-22 15:18:54.543176 CST, in "0.327162" seconds.

Done!

2) Start the standby database service

[kingbase@node102 bin]$ ./sys_ctl start -D ../data

server starting

3) Check the cluster node status

TEST=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.1.101 | 54321 | up | 0.500000 | primary | 0 | true | 0

1 | 192.168.1.102 | 54321 | up | 0.500000 | standby | 0 | false | 0

(2 rows)

4) Check the streaming replication status

TEST=# select * from sys_stat_replication;

PID | USESYSID | USENAME | APPLICATION_NAME | CLIENT_ADDR | CLIENT_HOSTNAME | CLIENT_PORT | BACKEND_START | BACKEND_XMIN | STATE | SENT_LOCATION | WRITE_LOCATION | FLUSH_LOCATION | REPLAY_LOCATION | SYNC_PRIORITY | SYNC_STATE

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+-----------+---------------+----------------+----------------+-----------------+---------------+------------

13034 | 10 | SYSTEM | node2 | 192.168.1.102 | | 27047 | 2023-02-22 15:19:07.660862+08 | | streaming | 0/D103F700 | 0/D103F700 | 0/D103F700 | 0/D103F700 | 2 | sync

(1 row)
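The four location columns track the standby's progress: WAL sent by the primary, then written, flushed and replayed on the standby. Here all four are 0/D103F700, so there is no lag.

The Python sketch below computes each stage's lag in bytes from such a row. It assumes the row has already been fetched into a dict keyed by the lowercase column names. The helper name is hypothetical.

```python
def parse_lsn(text: str) -> int:
    """Convert an LSN such as '0/D103F700' into a 64-bit integer."""
    high, low = text.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replication_lag_bytes(row: dict[str, str]) -> dict[str, int]:
    """Bytes by which the write, flush and replay positions trail sent_location."""
    sent = parse_lsn(row["sent_location"])
    return {stage: sent - parse_lsn(row[f"{stage}_location"])
            for stage in ("write", "flush", "replay")}


# Values copied from the sys_stat_replication row above.
row = {
    "state": "streaming",
    "sent_location": "0/D103F700",
    "write_location": "0/D103F700",
    "flush_location": "0/D103F700",
    "replay_location": "0/D103F700",
}
print(row["state"], replication_lag_bytes(row))
# streaming {'write': 0, 'flush': 0, 'replay': 0}
```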

---As the query results show, the standby has been recovered and has rejoined the cluster.