注意:进行本文的实验前,为了加快训练速度,进行了参数调整

num-episodes:由60000改成了10000

lr:由0.01改成了0.1

batch-size:由1024改成了32

1.报错

1.1 AttributeError: 'Scenario' object has no attribute 'benchmark_data'

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments$ python train.py --scenario simple --benchmark2023-05-19 15:05:52.380659: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

Traceback (most recent call last):

File "train.py", line 199, in <module>

train(arglist)

File "train.py", line 87, in train

env = make_env(arglist.scenario, arglist, arglist.benchmark)

File "train.py", line 64, in make_env

env = MultiAgentEnv(world, scenario.reset_world, scenario.reward, scenario.observation, scenario.benchmark_data)

AttributeError: 'Scenario' object has no attribute 'benchmark_data'

根据错误信息,出现了AttributeError: 'Scenario' object has no attribute 'benchmark_data'的异常。这意味着在你的代码中,Scenario对象没有名为benchmark_data的属性。具体来说,train.py文件中的第64行调用了Scenario对象的benchmark_data属性,但该属性未被定义,因此导致了AttributeError异常。

解决方式:是simple这个环境里压根就没有

benchmark_data属性,最后发现simple_world_comm环境里有benchmark_data属性。其他环境读者可自行查看。

1.2 FileNotFoundError: [Errno 2] No such file or directory: './benchmark_files/.pkl'

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments$ python train.py --scenario simple_world_comm --benchmark2023-05-18 21:25:27.749253: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA Using good policy maddpg and adv policy maddpg Loading previous state... Starting iterations... Finished benchmarking, now saving... Traceback (most recent call last): File "train.py", line 199, in <module> train(arglist) File "train.py", line 151, in train with open(file_name, 'wb') as fp: FileNotFoundError: [Errno 2] No such file or directory: './benchmark_files/.pkl'

这个错误是由于程序无法找到指定的目录而引起的。具体来说,它无法找到名为"./benchmark_files/.pkl"的文件。

解决方式:在当前目录下新建benchmark_files目录

此处的当前目录是指:~/maddpg-master/maddpg-master/experiments

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments$ mkdir benchmark_files1.3 NameError: name 'reward' is not defined

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments$ python train.py --scenario simple_speaker_listener --exp-name simple_speaker_listener --display --benchmark

2023-05-19 15:27:29.237950: I tensorflow/core/platform/cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

Using good policy maddpg and adv policy maddpg

Loading previous state...

Starting iterations...

Traceback (most recent call last):

File "train.py", line 199, in <module>

train(arglist)

File "train.py", line 120, in train

new_obs_n, rew_n, done_n, info_n = env.step(action_n)

File "/home/xiaowang/multiagent-particle-envs-master/multiagent-particle-envs-master/multiagent/environment.py", line 97, in step

info_n['n'].append(self._get_info(agent))

File "/home/xiaowang/multiagent-particle-envs-master/multiagent-particle-envs-master/multiagent/environment.py", line 122, in _get_info

return self.info_callback(agent, self.world)

File "/home/xiaowang/multiagent-particle-envs-master/multiagent-particle-envs-master/multiagent/scenarios/simple_speaker_listener.py", line 61, in benchmark_data

return self.reward(agent, reward)

NameError: name 'reward' is not defined

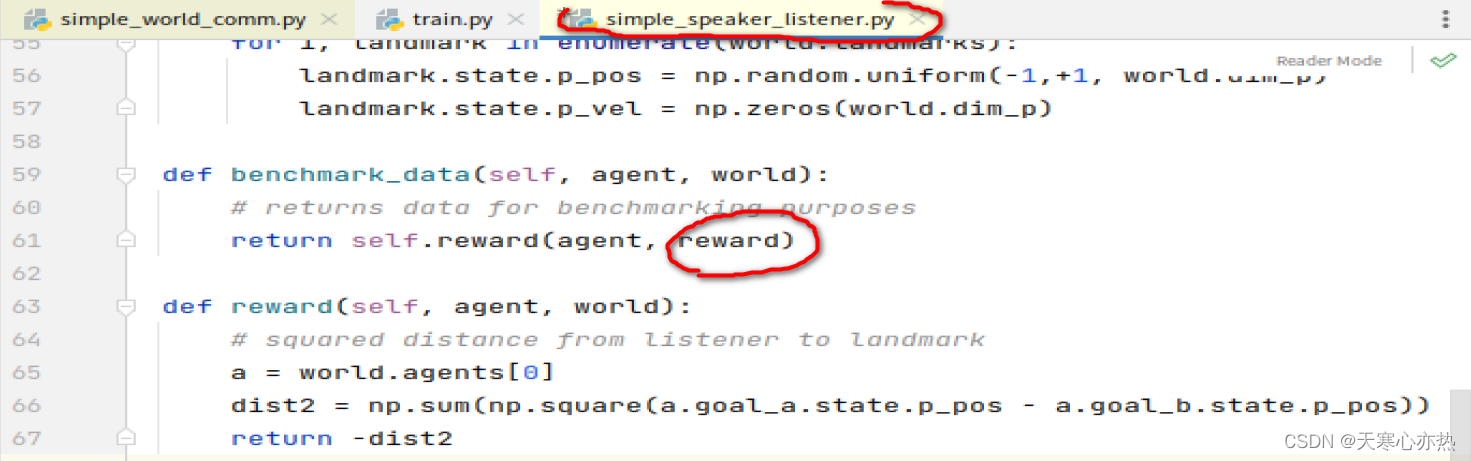

根据错误信息,看起来是在simple_speaker_listener.py文件的benchmark_data函数中出现了一个NameError。错误指出reward未定义。

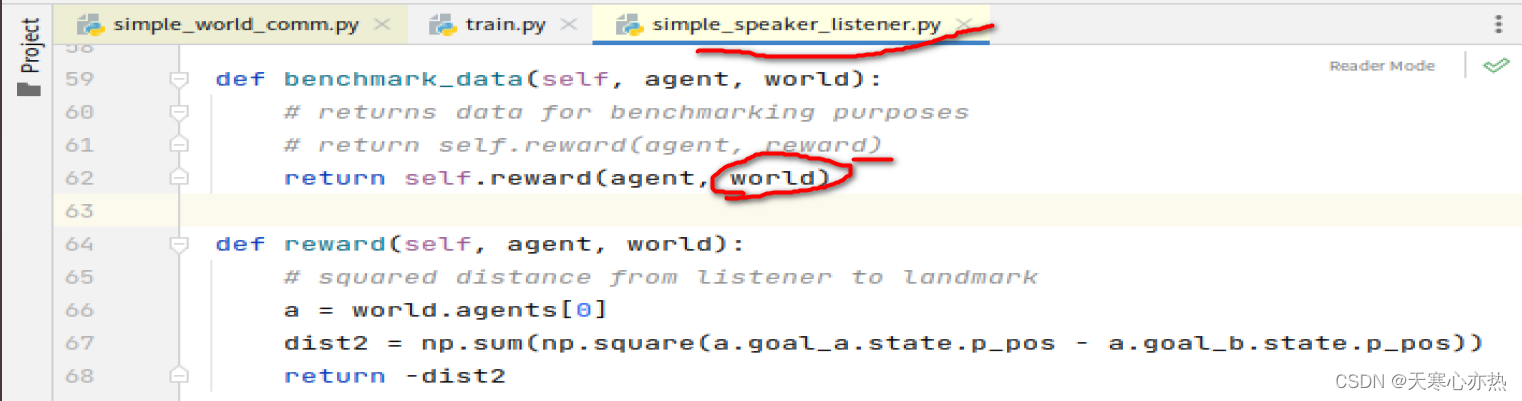

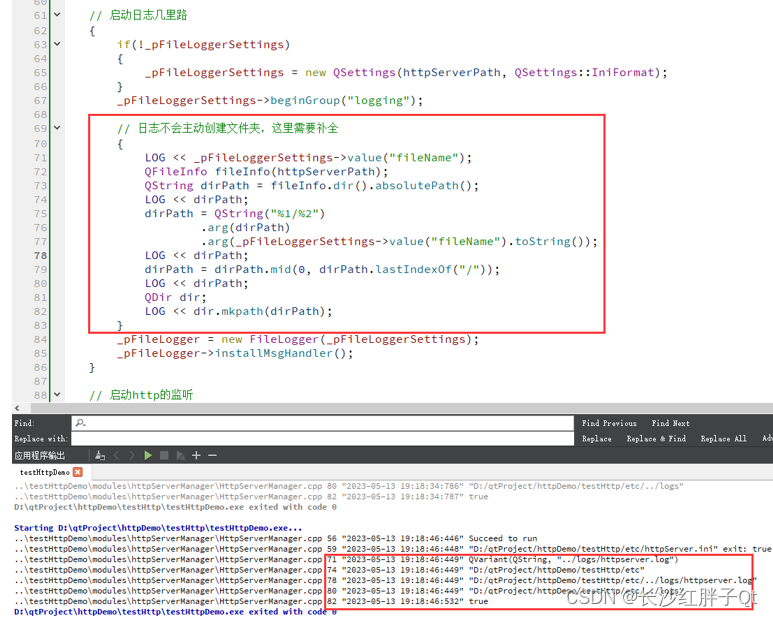

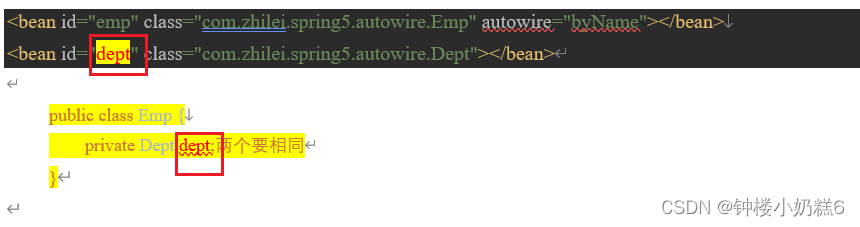

源码:

在benchmark_data函数中,reward变量未被定义。根据代码逻辑,你可能想要使用world参数作为代理的奖励计算。

修改后的代码:

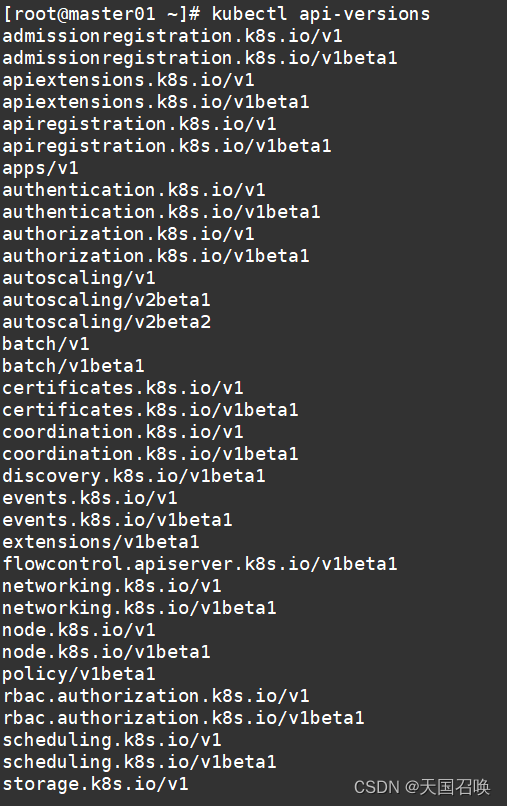

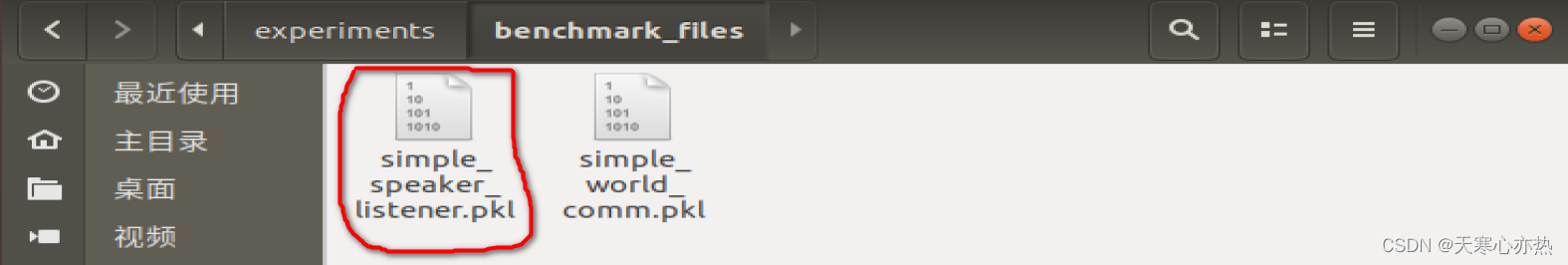

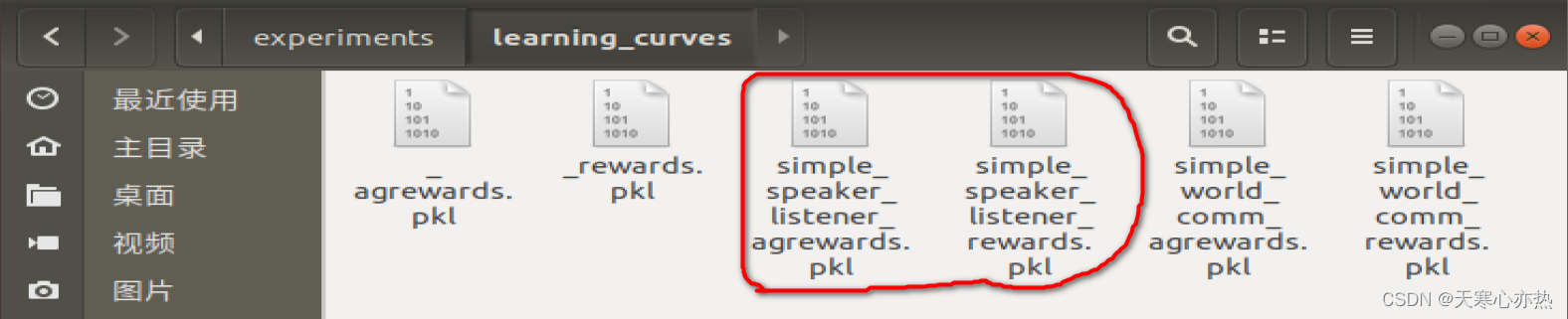

最后生成的文件:

注意:.pkl前面的simple_speaker_listener对应于 --exp-name simple_speaker_listener

2.问题

2.1 为何执行程序生成的tmp/policy在系统重启后,会被删除?

临时目录(如/tmp目录)通常用于存储临时文件和临时数据,这些文件在系统重新启动后会被清除。这是系统的正常行为,旨在确保系统的临时目录保持干净和整洁,避免占用过多的磁盘空间。

如果你需要在系统重启后保留生成的文件,你可以考虑将其存储到持久性目录中,例如用户的主目录或特定的数据存储位置。你可以根据你的需求选择一个适当的目录,并相应地修改程序中指定的路径。

2.2 .pkl文件的读取方法?

参考博客:https://blog.csdn.net/Ving_x/article/details/114488844?spm=1001.2014.3001.5506

2.2.1 benchmark_files

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments/benchmark_files$ python

Python 3.6.13 |Anaconda, Inc.| (default, Jun 4 2021, 14:25:59)

[GCC 7.5.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

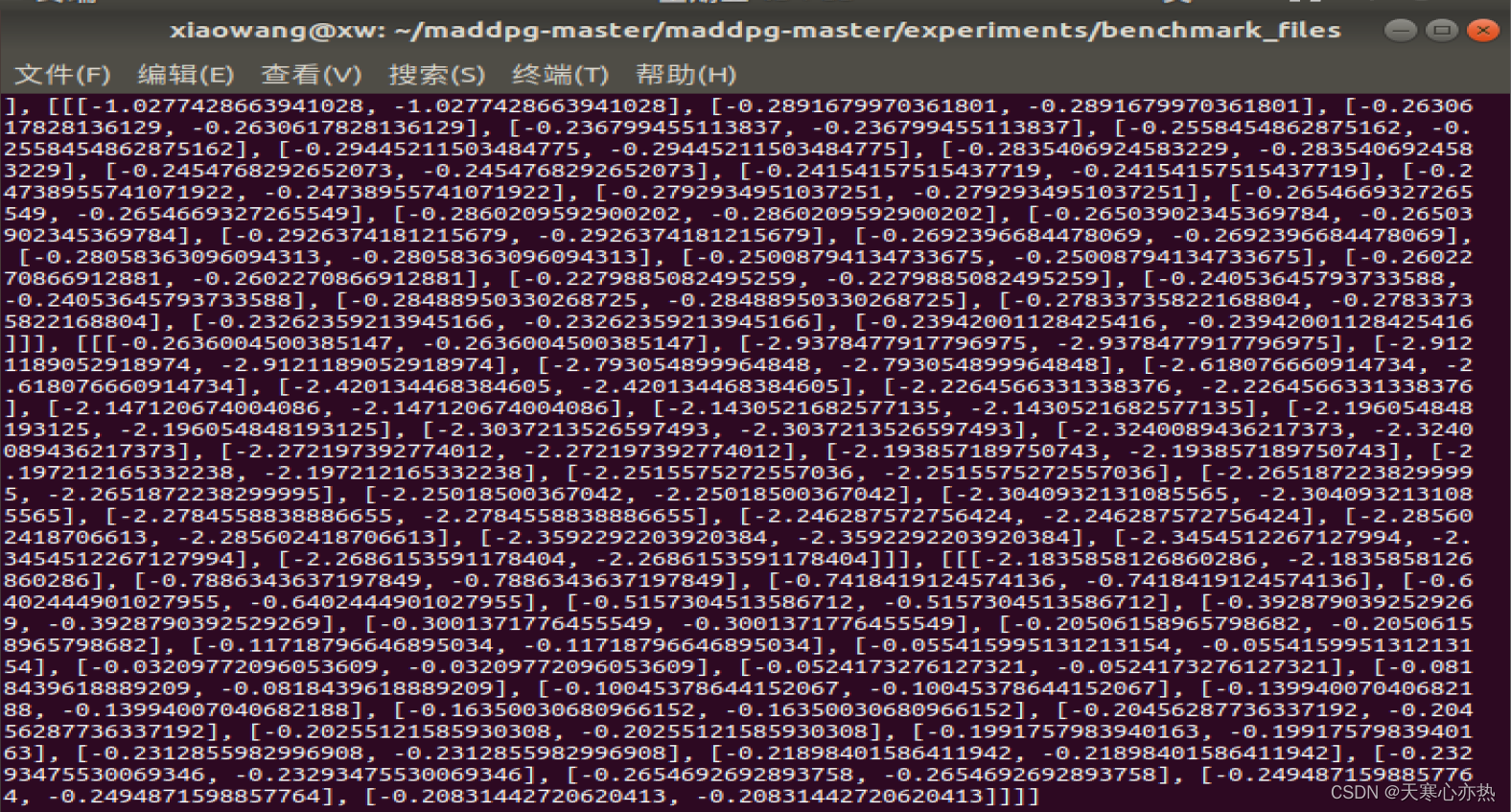

>>> import pickle

>>> f = open('simple_speaker_listener.pkl','rb')

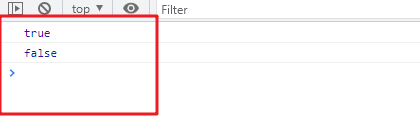

>>> pickle.load(f)

读取出来的结果具体什么意思,还不是很明白

2.2.2 learning_curves

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments$ cd learning_curves

(py3.6) xiaowang@xw:~/maddpg-master/maddpg-master/experiments/learning_curves$ python

Python 3.6.13 |Anaconda, Inc.| (default, Jun 4 2021, 14:25:59)

[GCC 7.5.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import pickle

>>> h = open('simple_speaker_listener_agrewards.pkl','rb')

>>> pickle.load(h)

[-163.46119879797098, -163.46119879797098, -114.84105265020297, -114.84105265020297, -64.38157706651045, -64.38157706651045, -70.75851825275176, -70.75851825275176, -49.63350864577481, -49.63350864577481, -43.8131349330825, -43.8131349330825, -49.2736882977545, -49.2736882977545, -46.977216679765895, -46.977216679765895, -45.81473064003137, -45.81473064003137, -53.31954291339132, -53.31954291339132]

>>> >>> i = open('simple_speaker_listener_rewards.pkl','rb')

>>> pickle.load(i)

[-326.92239759594196, -229.68210530040594, -128.7631541330209, -141.5170365055035, -99.2670172915496, -87.62626986616502, -98.54737659550898, -93.95443335953178, -91.62946128006274, -106.63908582678263]

>>>