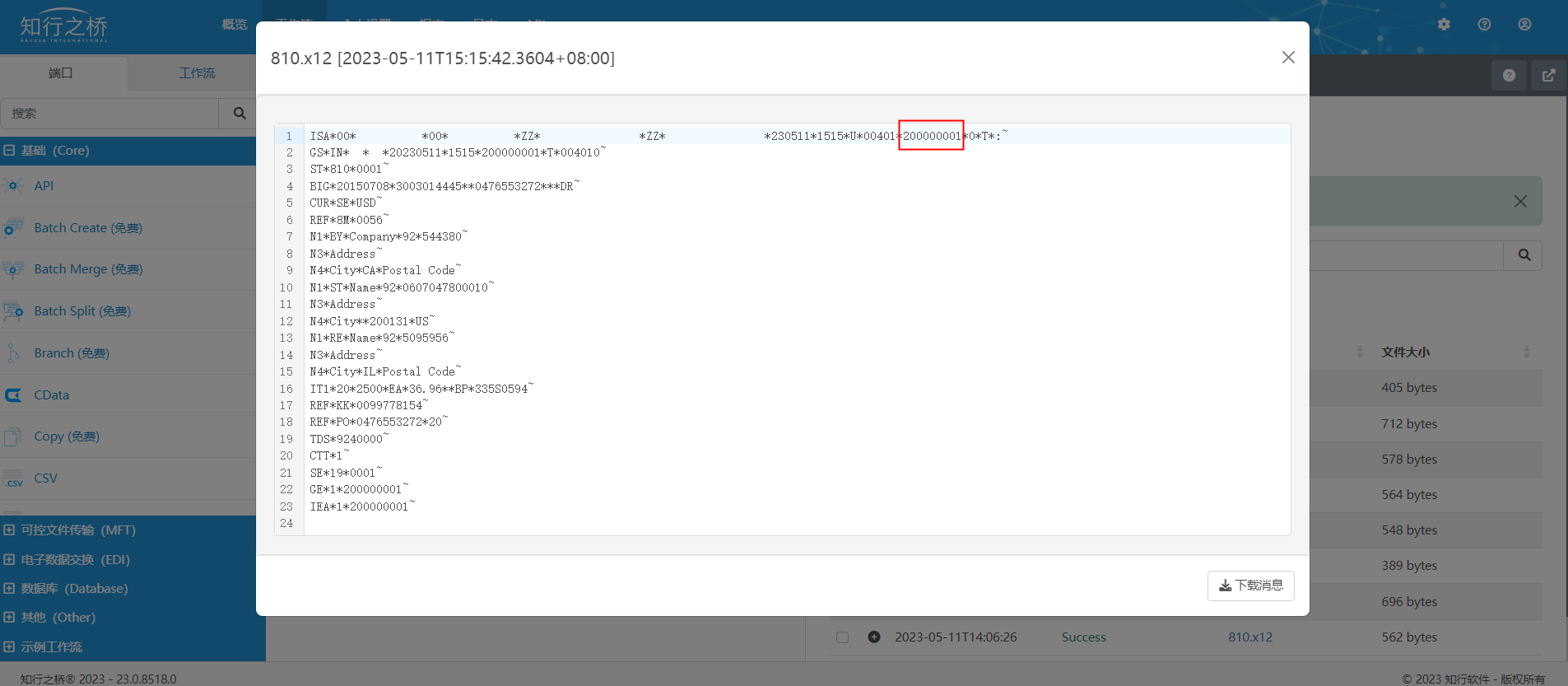

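Scheduled email reminders:

The scheduled email feature lives in views.py of the homework app.

It uses the apscheduler module (I pronounce it "A-P-scheduler").

Setup (good to know, but you don't need to memorize it):

After installing django-apscheduler, add it to settings.py: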

    INSTALLED_APPS = (
        # ...
        "django_apscheduler",
    )

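Then run the migration commands (remember them? The two database migration commands are python manage.py makemigrations and python manage.py migrate).

This creates two tables in the database (open them and take a look at what they contain):

- django_apscheduler_djangojob stores the registered jobs and each job's next run time

- django_apscheduler_djangojobexecution stores the time, result and status of every job run

The part we actually use (important):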

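    # Packages for the scheduled job
    import datetime  # used by send_email() below

    from apscheduler.schedulers.background import BackgroundScheduler
    from django_apscheduler.jobstores import DjangoJobStore, register_job

    # Start the scheduled job that sends the reminder emails automatically.

    # 1. Create the scheduler
    scheduler = BackgroundScheduler()
    # 2. Store the jobs through DjangoJobStore()
    scheduler.add_jobstore(DjangoJobStore(), "default")

    try:
        # 3. Define the job.
        # "cron" runs at a fixed time every day (hour=0 means midnight); the
        # commented-out "interval" line is the alternative that runs once a minute.
        @register_job(scheduler, "cron", id='test2', hour=0)
        # @register_job(scheduler, "interval", id='test1', minutes=1)
        def my_job():
            # Send the reminder emails
            send_email()

        # 4. Register events (no longer needed from version 0.4.0 on)
        # register_events(scheduler)
        # 5. Start the scheduler
        scheduler.start()
    except Exception as e:
        print(e)
        # Stop the scheduler if something goes wrong
        # scheduler.shutdown()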

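    # Look up the students who should be reminded and email each of them
    def send_email():
        today = datetime.date.today()
        print('今天的日期是:', today)  # prints "today's date is: ..."
        # Substitutions for the placeholders in the SQL template:
        # homework whose deadline is 00:00:00 tomorrow
        var = {
            'ENDDATE': "AND d.end_date = '" + str(today + datetime.timedelta(days=1)) + " 00:00:00'",
        }
        sql_raw = '''
            SELECT
                a.id,
                a.name,
                b.email,
                c.name AS lesson_name,
                d.name AS homework_name,
                d.end_date
            FROM
                student a
                LEFT JOIN user b ON a.id = b.id
                LEFT JOIN lesson c ON a.class_id = c.classes
                LEFT JOIN homework d ON c.id = d.lesson_id
            WHERE
                1=1
                [ENDDATE]
        '''
        # sql_fmt() and do_sql() are project helpers that are not shown in these notes;
        # sql_fmt() fills in the [ENDDATE] placeholder and do_sql() runs the query
        sql = sql_fmt(sql_raw, var)
        print(sql)
        res = do_sql(sql)
        print(res)
        for r in res:
            if r is not None:
                send_email1(r)
                print('给', r['name'], '发送邮件提醒成功!')  # "reminder sent to <name>"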
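The query above splices the date condition into the SQL text. For comparison, here is a minimal sketch of the same "due tomorrow" lookup with a bound parameter, using Python's built-in sqlite3 and an in-memory table. The single-table layout and the name find_due_homework are simplified assumptions for illustration, not the project's real schema or helpers.

```python
import datetime
import sqlite3


def find_due_homework(conn, today):
    """Return (name, end_date) rows for homework due at 00:00:00 tomorrow."""
    tomorrow = today + datetime.timedelta(days=1)
    deadline = f"{tomorrow} 00:00:00"  # same string format the original builds
    cur = conn.execute(
        "SELECT name, end_date FROM homework WHERE end_date = ?",
        (deadline,),  # bound parameter instead of string concatenation
    )
    return cur.fetchall()


# Hypothetical sample data: one homework due tomorrow, one due later.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE homework (name TEXT, end_date TEXT)")
conn.executemany(
    "INSERT INTO homework VALUES (?, ?)",
    [("hw1", "2024-05-13 00:00:00"), ("hw2", "2024-05-20 00:00:00")],
)
rows = find_due_homework(conn, datetime.date(2024, 5, 12))
print(rows)
```

Only hw1 matches, because its deadline is exactly midnight of the day after the given date.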

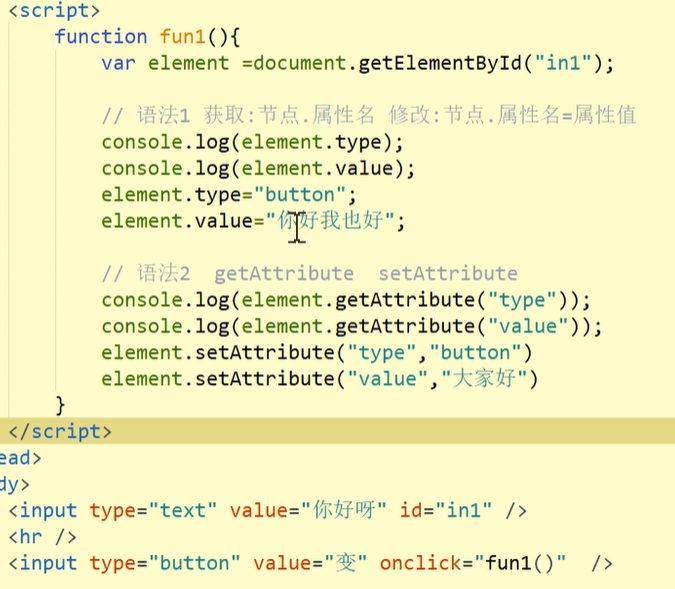

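It calls a function we wrote in send_email.py, in the API package under common:

Sending mail is provided by Django itself (see django.core.mail), so all we have to do is prepare the data and fill it in using the expected format.

Sending requires QQ Mail's SMTP service. Django sends the message as you (through your QQ mailbox), so it needs that account's SMTP password (for QQ Mail this is the authorization code issued when you enable SMTP, not the login password).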

    from django.core.mail import get_connection, send_mail


    def send_email1(info_dict):
        print('发送邮件提醒')  # "sending reminder email"
        password = 'XXXXXXXXXX'
        conn = get_connection(host='smtp.qq.com', username='XXXXXXXXX@qq.com', password=password)
        student_name = info_dict['name']
        email = info_dict['email']
        lesson_name = info_dict['lesson_name']
        homework_name = info_dict['homework_name']
        end_date = str(info_dict['end_date'])
        # print([email])
        # Message text: "<name>, hello! The homework [<homework>] of your course [<lesson>]
        # is due at <end_date>. Please submit it soon. From: online homework management system"
        msg = student_name + ',您好!\n 您参与的课程[' + lesson_name + ']的作业[' + homework_name + ']即将在 ' + end_date + ' 截止\n请尽快提交作业\n From: 在线作业管理系统'
        # Subject: "homework deadline reminder"
        send_mail(subject='作业截止提醒', message=msg, from_email='XXXXXXXXX@qq.com', recipient_list=[email], connection=conn)
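As a small illustration of the "prepare the data, then fill it in" step, the sketch below builds the reminder text from one query row with an f-string and reads the SMTP password from an environment variable instead of hard-coding it. The function name build_reminder, the English wording and the variable name HOMEWORK_SMTP_PASSWORD are assumptions made up for this example.

```python
import os


def build_reminder(info):
    """Build the reminder body from one query row (a dict)."""
    return (
        f"Hello {info['name']},\n"
        f"The homework [{info['homework_name']}] of your course "
        f"[{info['lesson_name']}] is due at {info['end_date']}.\n"
        "Please submit it soon.\n"
        "From: online homework management system"
    )


# Hypothetical environment variable; falls back to an empty string when unset.
smtp_password = os.environ.get("HOMEWORK_SMTP_PASSWORD", "")

row = {
    "name": "Li Lei",
    "lesson_name": "Algebra",
    "homework_name": "Exercise 3",
    "end_date": "2024-05-13 00:00:00",
}
print(build_reminder(row))
```

The returned string could then be passed as the message argument of send_mail in place of the concatenated msg.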

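Additional notes:

The key piece is the @register_job() decorator.

- A registered job is scheduled according to its id and its trigger configuration

- Trigger types: 'date' runs once, for example on May 12; 'interval' runs repeatedly at a fixed interval, for example once a minute; 'cron' runs at fixed clock times, for example at 8:30 every day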
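To make the difference between 'interval' and 'cron' concrete, here is a plain-Python sketch that computes when each kind of job would fire next. It only mimics the semantics with the standard library; APScheduler does the real calculation, and the function names are made up for this illustration.

```python
import datetime


def next_interval_run(last_run, minutes):
    """'interval': fire a fixed amount of time after the previous run."""
    return last_run + datetime.timedelta(minutes=minutes)


def next_daily_run(now, hour, minute=0):
    """'cron' with a fixed hour and minute: fire at that clock time every day."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        # today's slot has already passed, so the next run is tomorrow
        candidate += datetime.timedelta(days=1)
    return candidate


now = datetime.datetime(2024, 5, 12, 8, 45)
print(next_interval_run(now, 1))   # 2024-05-12 08:46:00
print(next_daily_run(now, 8, 30))  # 2024-05-13 08:30:00
print(next_daily_run(now, 0))      # 2024-05-13 00:00:00
```

The last call corresponds to the hour=0 setting used for the reminder job: it fires once a day at midnight.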

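Code similarity (plagiarism) check:

The code similarity check also lives in views.py of the homework app.

Rough flow:

- The front end has two upload buttons and two text boxes. Picking a file opens the local file browser and puts the file name into the box

- When the text in a box changes and both boxes contain a file, the upload is triggered and both files are sent to the code_comparison folder inside our media folder

- Once the upload is done, a second ajax request sends the two file names to the back end. With the file names, the back end can open the files and run the similarity check (described below)

- When the check is finished, the result is returned and displayed on the page (the result area is hidden at first and shown once a result arrives; look for hide() and show() in the HTML).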

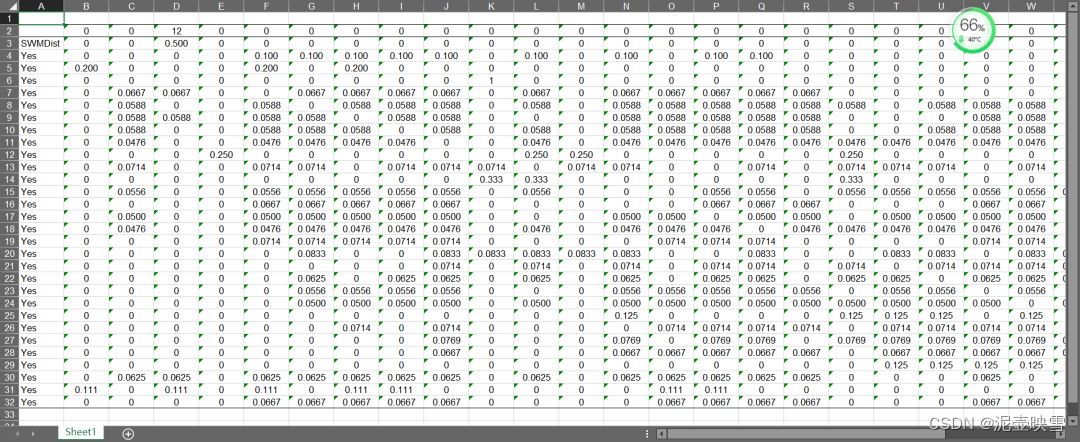

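How the similarity check works:

- A file name gives us a path, and a path lets us read the file. This is the Code() part below

- It uses PythonLexer (I pronounce it "lexer") to classify every token: is it a keyword, a variable, punctuation, a line break, and so on

- The tokens are grouped into blocks (a new block starts at each line that is not indented), so the whole file becomes a list of blocks whose tokens carry row and column positions. The similarity of each block is computed with the methods below

- A threshold decides whether a block counts as duplicated. For example, with a threshold of 60, a block with a similarity of 80 counts as duplicated

- The overall duplication rate is essentially the number of blocks above the threshold divided by the total number of blocks (example: a dorm has 4 people and 3 of them score above 60, so the pass rate is 3 / 4; same idea). In the code, getSimScore() refines this by weighting each block by its token count and its similarity score instead of counting every block equally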
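The "classify tokens, then group them into blocks" idea can be tried out without Pygments. The sketch below swaps in Python's standard tokenize module for PythonLexer and uses a much smaller set of category letters, so it is an illustration of the idea rather than the project's tokenizer; the function name to_blocks is made up.

```python
import io
import keyword
import tokenize


def to_blocks(source):
    """Turn source code into category strings, one per top-level statement."""
    blocks, current = [], []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME:
            letter = "K" if keyword.iskeyword(tok.string) else "V"
        elif tok.type == tokenize.NUMBER:
            letter = "N"
        elif tok.type == tokenize.STRING:
            letter = "S"
        elif tok.type == tokenize.OP:
            letter = "O"
        else:
            continue  # skip comments, line breaks, indents and the end marker
        # A token in column 0 starts a new top-level statement, hence a new block.
        if tok.start[1] == 0 and current:
            blocks.append("".join(current))
            current = []
        current.append(letter)
    if current:
        blocks.append("".join(current))
    return blocks


sample = "x = 1\ndef f(a):\n    return a + x\n"
print(to_blocks(sample))  # ['VON', 'KVOVOOKVOV']
```

The assignment becomes one block and the function definition together with its indented body becomes another, which mirrors how the Code class starts a new block only at unindented lines.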

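The code for the similarity check:

Fairly simple, right? The file-cleanup code is not used, so ignore it for now, and the heatmap was never implemented, so ignore that too. That leaves these two views: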

    # Render the code similarity check page
    def show_code_compare(request):
        return render(request, 'code_compare.html')


    # Run the code similarity check
    def compare_code(request):
        print('进行代码查重')  # "running the similarity check"
        data = json.loads(request.body)
        print(data)
        f1_name = data['f1_name']
        f2_name = data['f2_name']
        Similarity_threshold = data['Similarity_threshold']
        kgrams = data['kgrams']
        window_size = data['window_size']
        print(kgrams, window_size, Similarity_threshold)
        # Run the check
        winnowing_rate = code_compare(f1_name, f2_name, kgrams, window_size, Similarity_threshold)
        # message: "check succeeded"
        return JsonResponse({'code': 0, 'winnowing_rate': winnowing_rate, 'message': '查重成功'})
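The view above trusts whatever the browser sends. A minimal sketch of parsing and sanity-checking the same JSON body is shown here. The key names match the view, but the function name parse_compare_request and the accepted ranges are assumptions for illustration, not limits taken from the project.

```python
import json
import os


def parse_compare_request(body):
    """Parse the JSON body and reject obviously bad values."""
    data = json.loads(body)
    # Keep only the last path component so "../x.py" cannot leave the upload folder.
    f1 = os.path.basename(data["f1_name"])
    f2 = os.path.basename(data["f2_name"])
    kgrams = int(data["kgrams"])
    window_size = int(data["window_size"])
    threshold = float(data["Similarity_threshold"])
    if not (f1 and f2):
        raise ValueError("both file names are required")
    # Assumed ranges: positive sizes and a threshold given in percent.
    if kgrams < 1 or window_size < 1 or not 0 <= threshold <= 100:
        raise ValueError("parameter out of range")
    return f1, f2, kgrams, window_size, threshold


body = json.dumps({
    "f1_name": "a.py",
    "f2_name": "../secret.py",
    "kgrams": 5,
    "window_size": 4,
    "Similarity_threshold": 60,
})
print(parse_compare_request(body))
```

In a view, a ValueError raised here could be turned into an error JsonResponse before code_compare() is ever called.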

code_compare() is a function we wrote in common.API.code_hander:

Start reading from code_compare(). I removed the parts that are not used, such as the heatmap, so it should be a bit easier to follow.

    import os
    from pygments.lexers import PythonLexer
    from pygments import token
    from re import search
    import io
    from hashlib import sha1
    from difflib import SequenceMatcher
    from operator import itemgetter
    import plotly.graph_objects as go
    from plotly.subplots import make_subplots
    from HMSol.settings import MEDIA_ROOT

    categories = {
        'Call': ['A', 'rgb(80, 138, 44)'],
        'Builtin': ['B', 'rgb(212, 212, 102)'],
        'Comparison': ['C', 'rgb(176, 176, 176)'],
        'FunctionDef': ['D', 'rgb(4, 163, 199)'],
        'Function': ['F', 'rgb(199, 199, 72)'],
        'Indent': ['I', 'rgb(237, 237, 237)'],
        'Keyword': ['K', 'rgb(161, 53, 219)'],
        'Linefeed': ['L', 'rgb(255, 255, 255)'],
        'Namespace': ['M', 'rgb(232, 232, 209)'],
        'Number': ['N', 'rgb(192, 237, 145)'],
        'Operator': ['O', 'rgb(212, 212, 212)'],
        'Punctuation': ['P', 'rgb(214, 216, 216)'],
        'Pseudo': ['Q', 'rgb(14, 3, 163)'],
        'String': ['S', 'rgb(194, 126, 0)'],
        'Variable': ['V', 'rgb(184, 184, 176)'],
        'WordOp': ['W', 'rgb(8, 170, 207)'],
        'NamespaceKw': ['X', 'rgb(161, 53, 219)']
    }

    # The hash function to use: the last 4 hex digits of SHA-1, as an integer
    def hash_fun(text):
        hs = sha1(text.encode("utf-8"))
        hs = hs.hexdigest()[-4:]
        hs = int(hs, 16)
        return hs


    # Split a string into k-grams (all substrings of length n)
    def kgrams(text, n):
        text = list(text)
        return zip(*[text[i:] for i in range(n)])


    # Hash every k-gram and remember its position
    def do_hashing(kgrams):
        hashlist = []
        for i, kg in enumerate(list(kgrams)):
            ngram_text = "".join(kg)
            hashvalue = hash_fun(ngram_text)
            hashlist.append((hashvalue, i))
        return hashlist


    def computeCode(f1, f2):
        # If either file is missing (None), return 0, 0
        if None in [f1, f2]:
            return 0, 0
        c1 = Code(f1, f1.name)
        c2 = Code(f2, f2.name)
        # c1.calculate_similarity(c2)  # Calculate similarity
        return c1, c2

    # Map a token (type, text) to its category
    def get_category(t):
        category = ''  # a single uppercase letter stands for the category
        if t[0] == token.Keyword.Namespace:
            category = categories['NamespaceKw'][0]
        elif t[0] == token.Name.Namespace:
            category = categories['Namespace'][0]
        elif t[0] == token.Name.Function:
            category = categories['Function'][0]
        elif t[0] == token.Name:
            category = categories['Variable'][0]
        elif t[0] == token.Name.Builtin.Pseudo:
            category = categories['Pseudo'][0]
        elif t[0] == token.Name.Builtin:
            category = categories['Builtin'][0]
        elif t[0] in token.Literal.Number:
            category = categories['Number'][0]
        elif t[0] in token.Literal.String and t[1] not in ['\'', '\"'] and t[0] not in token.Literal.String.Doc:
            category = categories['String'][0]
        elif t[0] == token.Keyword and t[1] == 'def':
            category = categories['FunctionDef'][0]
        elif t[0] == token.Keyword:
            category = categories['Keyword'][0]
        elif t[0] == token.Text and (search(r'\s{2,}\S', t[1]) is not None):
            category = categories['Indent'][0]
        elif t[0] == token.Operator.Word:
            category = categories['WordOp'][0]
        elif t[0] == token.Operator and (t[1] == '==' or t[1] == '!='):
            category = categories['Comparison'][0]
        elif t[0] == token.Punctuation:
            category = categories['Punctuation'][0]
        elif t[0] == token.Operator:
            category = categories['Operator'][0]
        # elif t[0] == token.Text and t[1] == '\n':
        #     category = categories['Linefeed'][0]
        elif t[0] == token.Whitespace and t[1] == '\n':  # line break
            category = categories['Linefeed'][0]
        else:
            category = None  # comments and other unassigned tokens are ignored
        return category

    # Build a colour map for the categories, used to colour the plot
    def get_cmap():
        clist = []
        prev_c = [0, "rgb(255, 255, 255)"]
        for key in categories:
            clist.append(prev_c)
            clist.append([ord(categories[key][0]), prev_c[1]])
            prev_c = [ord(categories[key][0]), categories[key][1]]
        # Normalize keys of colour representation
        # Needed for plotly
        max_ = max(clist, key=itemgetter(0))[0]
        min_ = min(clist, key=itemgetter(0))[0]
        converted = []
        for element in clist:
            converted.append([(element[0] - min_) / (max_ - min_), element[1]])
        # Return cmap for categories
        return converted

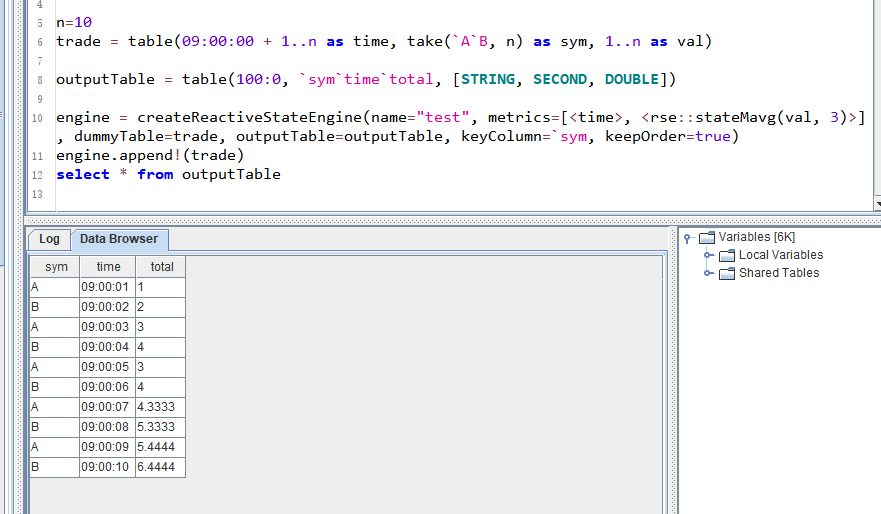

    # Print the similarity computed with the winnowing algorithm
    def show_winnowing(c1, c2):
        # Parameters (name, min, max, default):
        # k_size   'k-gram size',         2, 15, 5
        # win_size 'sliding window size', 2, 15, 4
        k_size = 5
        win_size = 4
        # Output text: "Winnowing similarity: NN%"
        print('Winnowing-相似度: **{:.0f}%**'.format(c1.winnowing_similarity(c2, k_size, win_size) * 100))
        # See the paper for more details:
        # https://theory.stanford.edu/~aiken/publications/papers/sigmod03.pdf


    # Return how many elements of lst1 also occur in lst2
    def intersection(lst1, lst2):
        temp = set(lst2)
        lst3 = [value for value in lst1 if value in temp]
        return len(lst3)


    # Slide a window of size n over the hash list
    def sl_window(hashes, n):
        return zip(*[hashes[i:] for i in range(n)])


    # Pick the minimum hash of every window
    def get_min(windows):
        result = []
        prev_min = ()
        for w in windows:
            # Find the smallest hash; on ties take the rightmost occurrence
            min_h = min(w, key=lambda x: (x[0], -x[1]))
            # Only record the hash if it differs from the previous minimum
            if min_h != prev_min:
                result.append(min_h)
            prev_min = min_h
        return result

    # The winnowing algorithm: returns the set of (hash, position) fingerprints
    def winnowing(text, size_k, window_size):
        hashes = (do_hashing(kgrams(text, size_k)))
        return set(get_min(sl_window(hashes, window_size)))


    # Similarity from the overlap of winnowing fingerprints.
    # Note: shared / (len(a) + len(b)) with both directions counted is a
    # Dice-style overlap rather than a strict Jaccard index.
    def winnowing_similarity(text_a, text_b, size_k=5, window_size=4):
        # Get fingerprints using winnowing
        w1 = winnowing(text_a, size_k, window_size)
        w2 = winnowing(text_b, size_k, window_size)
        # print('w1:', w1)
        # Use lists instead of sets to also consider the number of occurrences of copied content
        hash_list_a = [x[0] for x in w1]
        hash_list_b = [x[0] for x in w2]
        # Shared fingerprints, counted from both sides
        intersect = intersection(hash_list_a, hash_list_b) + intersection(hash_list_b, hash_list_a)
        # Total number of fingerprints
        union = len(hash_list_a) + len(hash_list_b)
        # print('intersect:', intersect)
        # print('union:', union)
        # Raises ZeroDivisionError if both texts are too short to yield any fingerprint
        return intersect / union
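To see the winnowing helpers above at work on small inputs, here is a compact, self-contained sketch that follows the same steps: hash every k-gram, keep the minimum of each window, then compare the fingerprints. The names fingerprints and overlap are made up, and returning 0.0 for inputs too short to fingerprint is an assumption of this sketch (the original would divide by zero in that case).

```python
from hashlib import sha1


def fingerprints(text, k=5, window=4):
    """Winnow `text`: hash every k-gram, keep the minimum hash of each window."""
    grams = ["".join(g) for g in zip(*[text[i:] for i in range(k)])]
    hashes = [(int(sha1(g.encode("utf-8")).hexdigest()[-4:], 16), i)
              for i, g in enumerate(grams)]
    picked = set()
    for w in zip(*[hashes[i:] for i in range(window)]):
        # smallest hash wins; ties go to the rightmost position
        picked.add(min(w, key=lambda h: (h[0], -h[1])))
    return picked


def overlap(text_a, text_b, k=5, window=4):
    """Share of fingerprints that the two texts have in common (0.0 to 1.0)."""
    a = [h for h, _ in fingerprints(text_a, k, window)]
    b = [h for h, _ in fingerprints(text_b, k, window)]
    if not a and not b:
        return 0.0  # assumption: too short to fingerprint counts as no overlap
    set_a, set_b = set(a), set(b)
    shared = sum(h in set_b for h in a) + sum(h in set_a for h in b)
    return shared / (len(a) + len(b))


code_a = "KVOVOOKVOVKVONKVS"
code_b = "KVOVOOKVOVKVONKVN"
print(overlap(code_a, code_a))  # 1.0
print(overlap(code_a, code_b))
print(overlap("ab", "ab"))      # 0.0
```

Identical inputs always score 1.0. The last call shows why very short files need special handling: a two-character string has no 5-gram at all, so there is nothing to compare.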

    # A block holds the tokens of one piece of code
    class Block(object):
        def __init__(self, tokens, similarity=0, compared=False):
            self._similarity = similarity
            # self._compared = compared
            self._tokens = tokens

        @property
        def similarity(self):
            return self._similarity

        @similarity.setter
        def similarity(self, s):
            self._similarity = s

        @property
        def tokens(self):
            return self._tokens

        # Special methods
        def __len__(self):
            return len(self.tokens)

        def __str__(self):
            # The block as a string of category letters
            return ''.join(str(t[0]) for t in self.tokens)

        # Instance methods:
        # Compare two blocks by their category letters
        def compare(self, other):
            if isinstance(other, Block):
                # Compute the similarity with difflib
                return SequenceMatcher(None, str(self), str(other)).ratio()

        # Compare two blocks by their original text
        def compare_str(self, other):
            if isinstance(other, Block):
                return SequenceMatcher(None, self.clnstr(), other.clnstr()).ratio()

        # The original token text, lowercased and joined
        def clnstr(self):
            return ''.join(str(t[3].lower()) for t in self.tokens)

        def max_row(self):
            return max(self.tokens, key=itemgetter(1))[1]

        def max_col(self):
            return max(self.tokens, key=itemgetter(2))[2]

    # Represent source code as blocks of category tokens, to make similarity comparison easier
    class Code:
        # Initialization
        def __init__(self, text, name="", similarity_threshold=0.9):
            self._blocks = []
            self._max_row = 0
            self._max_col = 0
            self._name = name
            self._similarity_threshold = similarity_threshold
            self._lvs_blocksize = 8
            self.__tokenizeFromText(text)

        # Tokenize the code
        def __tokenizeFromText(self, text):
            lexer = PythonLexer()  # lexical analysis; the input is always treated as Python
            tokens = lexer.get_tokens(text)
            tokens = list(tokens)  # convert to a list
            result = []
            prev_c = ''  # category of the previous token
            row = 0
            col = 0
            # Reduce the tokens to categories
            for tok in tokens:
                c = get_category(tok)
                if c is not None:
                    # Line break: update the coordinates but do not add it to result
                    if c == 'L':
                        row = row + 1
                        col = 0
                    # New block: the previous token was a line break and this one is not an indent
                    elif prev_c == 'L' and c != 'I' and result:
                        self.blocks.append(Block(result))
                        result = []
                    # Not a line break
                    if c != 'L':
                        # Tell function calls apart from variables
                        if prev_c == 'V' and tok[1] == '(':
                            result[-1] = 'A', result[-1][1], result[-1][2], result[-1][3]
                        result.append((c, row, col, tok[1]))
                        col += 1
                        if col > self._max_col:
                            self._max_col = col
                    prev_c = c
            self._max_row = row  # the number of rows follows the number of code lines
            # Append the last block if it is not empty
            if result:
                self.blocks.append(Block(result))

        @property
        def blocks(self):
            return self._blocks

        @blocks.setter
        def blocks(self, b):
            self._blocks = b

        # Reset the similarity of every block
        def resetSimilarity(self):
            for block in self.blocks:
                block.similarity = 0

        # First pass: mark blocks whose (lowercased) text matches exactly
        def __pre_process(self, other):
            other_blocks = other.blocks
            for block_a in self.blocks:
                for block_b in other_blocks:
                    if block_a.similarity == 1:
                        break
                    if block_a.clnstr() == block_b.clnstr():
                        block_a.similarity = 1.0
                        block_b.similarity = 1.0

        # Compute the similarity of the remaining blocks
        def __process_similarity(self, other):
            for block_a in self.blocks:
                if block_a.similarity == 0:
                    best_score = 0  # best match score so far
                    for block_b in other.blocks:
                        if len(block_a) > self._lvs_blocksize:
                            score = block_a.compare(block_b)
                        else:
                            score = block_a.compare_str(block_b)
                        # Keep the highest score
                        if score >= best_score:
                            best_score = score
                            # Also record the best score on the block of the other file
                            if block_b.similarity < best_score:
                                block_b.similarity = best_score
                    block_a.similarity = best_score

        # Compute the similarity score of every block
        # (the scores come from difflib.SequenceMatcher, not a Levenshtein distance)
        def calculate_similarity(self, other):
            # Reset the similarity of all blocks
            self.resetSimilarity()
            other.resetSimilarity()
            self.__pre_process(other)
            self.__process_similarity(other)
            other.__process_similarity(self)

        # Overall score: blocks at or above the threshold, weighted by their
        # token count and similarity, divided by the total token count.
        # Raises ZeroDivisionError if the file produced no blocks.
        def getSimScore(self):
            total_len = 0
            len_plagiat = 0
            for block in self.blocks:
                total_len += len(block)
                if block.similarity >= self._similarity_threshold:
                    len_plagiat += len(block) * block.similarity
            return len_plagiat / total_len

        # Similarity of the whole file using the winnowing method.
        # Note: str(self) relies on a Code.__str__ method that is not part of this
        # trimmed listing; without it, str() falls back to the default object repr.
        def winnowing_similarity(self, other, size_k=5, window_size=4):
            score = winnowing_similarity(str(self), str(other), size_k, window_size)
            return score

    # Build the result messages; returns them as a list
    def printResult(c1, c2, threshold):
        threshold /= 100  # divide by 100: a setting of 60 is used as 0.6
        # Cut-offs for the "medium" and "high" verdicts below
        plagrism_threshold_high = 90
        plagrism_threshold_medium = 60
        # Both files use the threshold passed in
        c1._similarity_threshold = threshold
        c2._similarity_threshold = threshold
        ans = []
        # Message texts: "'<file>' has a similarity of NN%", followed by
        # "high, can be considered plagiarism", or
        # "medium" / "low, can be considered not plagiarism"
        if (c1.getSimScore() * 100) >= plagrism_threshold_high:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度高, 可以认为是抄袭'.format(c1._name, c1.getSimScore() * 100))
        elif (c1.getSimScore() * 100) >= plagrism_threshold_medium:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度中等, 可以认为不是抄袭'.format(c1._name, c1.getSimScore() * 100))
        else:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度低, 可以认为不是抄袭'.format(c1._name, c1.getSimScore() * 100))
        if (c2.getSimScore() * 100) >= plagrism_threshold_high:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度高, 可以认为是抄袭'.format(c2._name, c2.getSimScore() * 100))
        elif (c2.getSimScore() * 100) >= plagrism_threshold_medium:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度中等, 可以认为不是抄袭'.format(c2._name, c2.getSimScore() * 100))
        else:
            ans.append('\'{}\' 的相似度为 {:.0f}% 相似度低, 可以认为不是抄袭'.format(c2._name, c2.getSimScore() * 100))
        return ans

    # Similarity check: takes the two file names plus the three slider parameters
    def code_compare(file_1_name, file_2_name, k_size, win_size, Similarity_threshold):
        # Paths of the uploaded files
        file_1_url = MEDIA_ROOT + '/code_comparison/' + file_1_name
        file_2_url = MEDIA_ROOT + '/code_comparison/' + file_2_name
        # Read both files in binary mode and decode them, skipping undecodable bytes
        # (the error handler must be named 'ignore'; the with-blocks also close the files)
        with open(file_1_url, 'rb') as file_1:
            file1 = file_1.read().decode(errors='ignore')
        with open(file_2_url, 'rb') as file_2:
            file2 = file_2.read().decode(errors='ignore')
        c1 = Code(file1, file_1_name)  # Code is the class defined above, go have a look
        c2 = Code(file2, file_2_name)
        # Compute the similarity
        c1.calculate_similarity(c2)
        # Collect the results in the ans list and return it
        # (c1 and c2 are always Code objects here, so this check always passes)
        if 0 not in [c1, c2]:
            ans = []
            # Text: "Winnowing similarity: NN%"
            w = 'Winnowing-相似度: {:.0f}%'.format(c1.winnowing_similarity(c2, k_size, win_size) * 100)
            ans.append(w)
            res = printResult(c1, c2, Similarity_threshold)
            for r in res:
                ans.append(r)
            return ans
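The file-reading step in code_compare() can be tried in isolation. This sketch writes a small file containing one byte that is not valid UTF-8 and reads it back with errors="ignore", which silently drops that byte. The helper name read_source and the temporary folder are assumptions for the demonstration; the project reads from MEDIA_ROOT instead.

```python
import os
import tempfile


def read_source(folder, file_name):
    """Read an uploaded file as text, dropping bytes that are not valid UTF-8."""
    path = os.path.join(folder, file_name)
    with open(path, "rb") as fh:  # the with-block closes the file for us
        return fh.read().decode("utf-8", errors="ignore")


with tempfile.TemporaryDirectory() as folder:
    with open(os.path.join(folder, "a.py"), "wb") as fh:
        fh.write(b"print('hi')\xff\n")  # \xff is not valid UTF-8
    text = read_source(folder, "a.py")

print(repr(text))  # "print('hi')\n"
```

The design choice here is to prefer a slightly lossy read over a crash: a stray byte in a student's file should not stop the comparison.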

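For some functions it is enough to read the comment and know what they do. Take this one, for example:

just remember that it computes the similarity of the code blocks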

    # Compute the similarity of the code blocks
    def __process_similarity(self, other):
        for block_a in self.blocks:
            if block_a.similarity == 0:
                best_score = 0  # best match score so far
                for block_b in other.blocks:
                    if len(block_a) > self._lvs_blocksize:
                        score = block_a.compare(block_b)
                    else:
                        score = block_a.compare_str(block_b)
                    # Keep the highest score
                    if score >= best_score:
                        best_score = score
                        # Also record the best score on the block of the other file
                        if block_b.similarity < best_score:
                            block_b.similarity = best_score
                block_a.similarity = best_score
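What this function boils down to is "for every block, find its best match in the other file", after which getSimScore() turns the per-block scores into one number. The sketch below reproduces both steps on plain strings of category letters. It is a simplification: the real code compares short blocks by their original text and long ones by category letters, and the names best_scores and sim_score are made up.

```python
from difflib import SequenceMatcher


def best_scores(blocks_a, blocks_b):
    """For each block in A, the highest SequenceMatcher ratio against any block in B."""
    return [
        max((SequenceMatcher(None, a, b).ratio() for b in blocks_b), default=0.0)
        for a in blocks_a
    ]


def sim_score(blocks, scores, threshold):
    """Blocks at or above the threshold, weighted by length and score."""
    total = sum(len(b) for b in blocks)
    if total == 0:
        return 0.0  # assumption: an empty file has no similarity
    hit = sum(len(b) * s for b, s in zip(blocks, scores) if s >= threshold)
    return hit / total


file_a = ["VON", "KVOVOOKVOV"]
file_b = ["VON", "KVOVOOKVOS"]
scores = best_scores(file_a, file_b)
print(scores)                          # [1.0, 0.9]
print(sim_score(file_a, scores, 0.6))  # (3 * 1.0 + 10 * 0.9) / 13
```

The first block matches exactly, the second differs in one of ten tokens, and both are above the 0.6 threshold, so the overall score is 12 / 13, roughly 92%. With a threshold of 0.95 only the first block would count and the score would drop to 3 / 13.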