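Table of Contents

- 1. Binary deployment of a three-node (combined control-plane/worker) highly available k8s cluster

- 1.1 Environment planning

- 1.1.1 Lab architecture diagram

- 1.1.2 System versions

- 1.1.3 Basic environment information

- 1.1.4 k8s network ranges

- 1.2 Base installation and tuning

- 1.2.1 System information check

- 1.2.2 Static IP address configuration

- 1.2.3 Configure hostnames

- 1.2.4 Configure the /etc/hosts file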

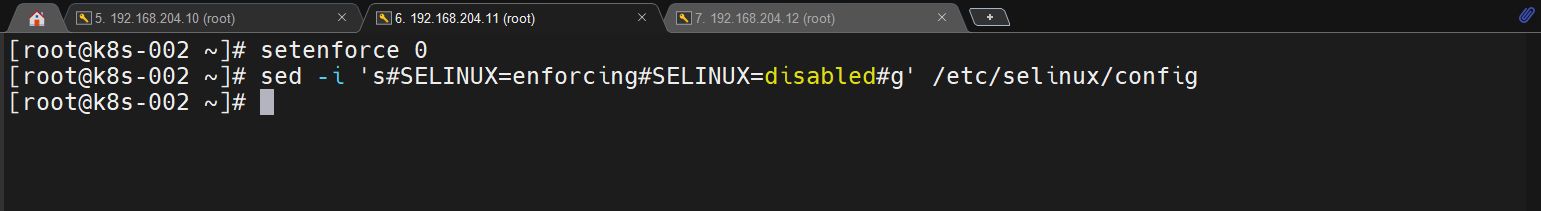

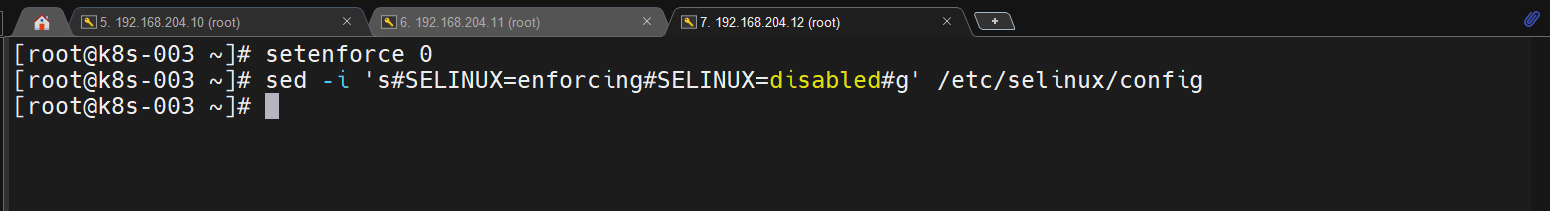

- 1.2.5 Disable SELinux

- 1.2.6 Configure SSH trust between hosts

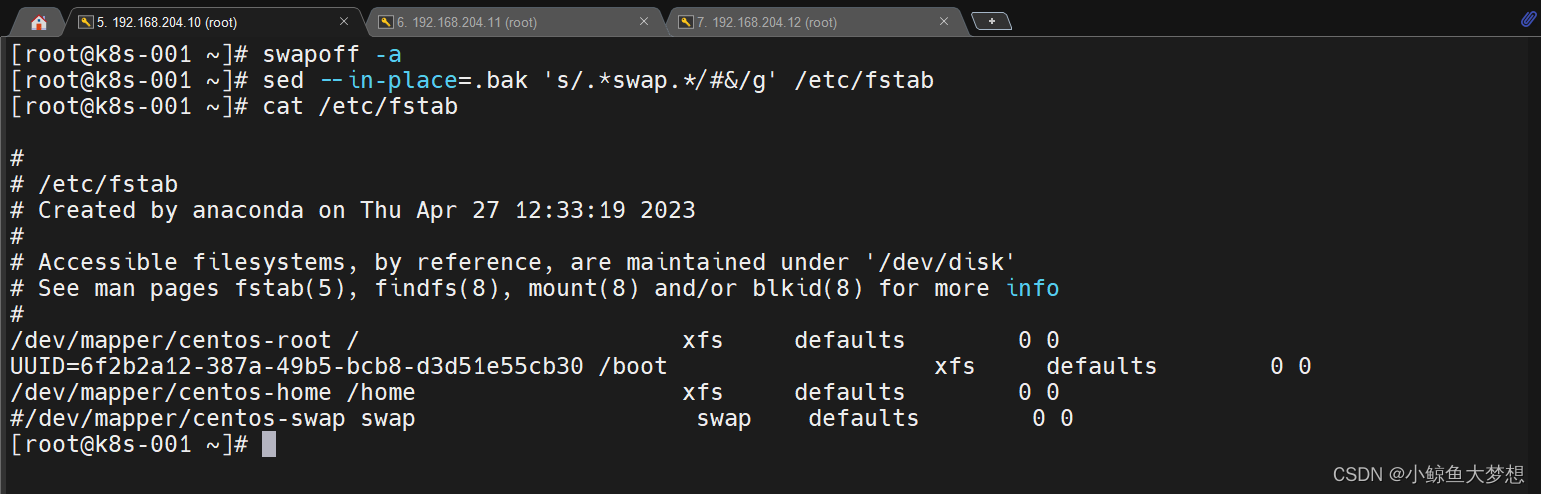

- 1.2.7 Disable swap

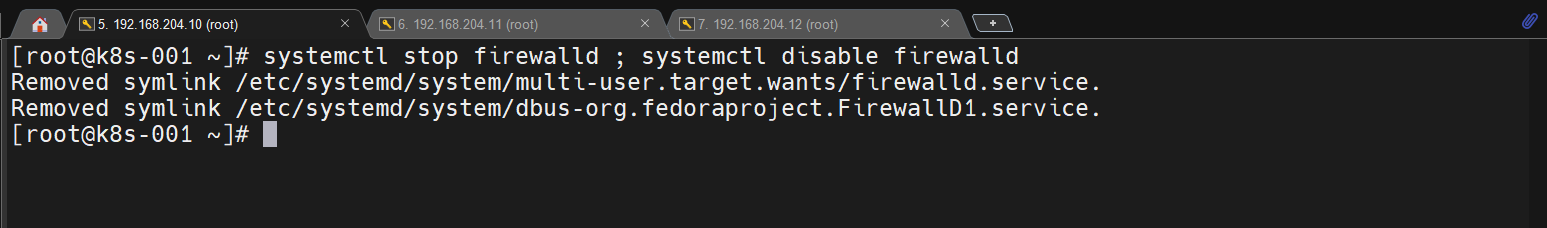

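- 1.2.8 Disable firewalld

- 1.2.9 Disable NetworkManager

- 1.2.10 Set resource limits

- 1.2.11 Configure time synchronization

- 1.2.12 Configure domestic (China) mirrors

- 1.2.13 Upgrade the kernel

- 1.2.14 Install basic tools

- 1.2.15 Configure kernel modules and parameters

- 1.2.16 Install the container runtime

- 1.3 Cluster creation and tuning

- 1.3.1 Install the high-availability components keepalived and nginx

- 1.3.2 Install the cfssl tools

- 1.3.3 Configure the CA

- 1.3.4 Generate the etcd certificate

- 1.3.5 Generate the apiserver certificate

- 1.3.6 Generate the kubectl certificate

- 1.3.7 Generate the controller-manager certificate

- 1.3.8 Generate the scheduler certificate

- 1.3.9 Generate the kube-proxy certificate

- 1.3.10 Install the highly available etcd cluster

- 1.3.11 Install the k8s binaries

- 1.3.12 Install kube-apiserver

- 1.3.13 Install kubectl

- 1.3.14 Install kube-controller-manager

- 1.3.15 Install kube-scheduler

- 1.3.16 Install kubelet

- 1.3.17 Install kube-proxy

- 1.3.18 Install the calico network plugin

- 1.3.19 Install coredns

- 1.4 Add-on installation and tuning

- 1.4.1 kubectl command completion

- 1.4.2 Install the Dashboard UI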

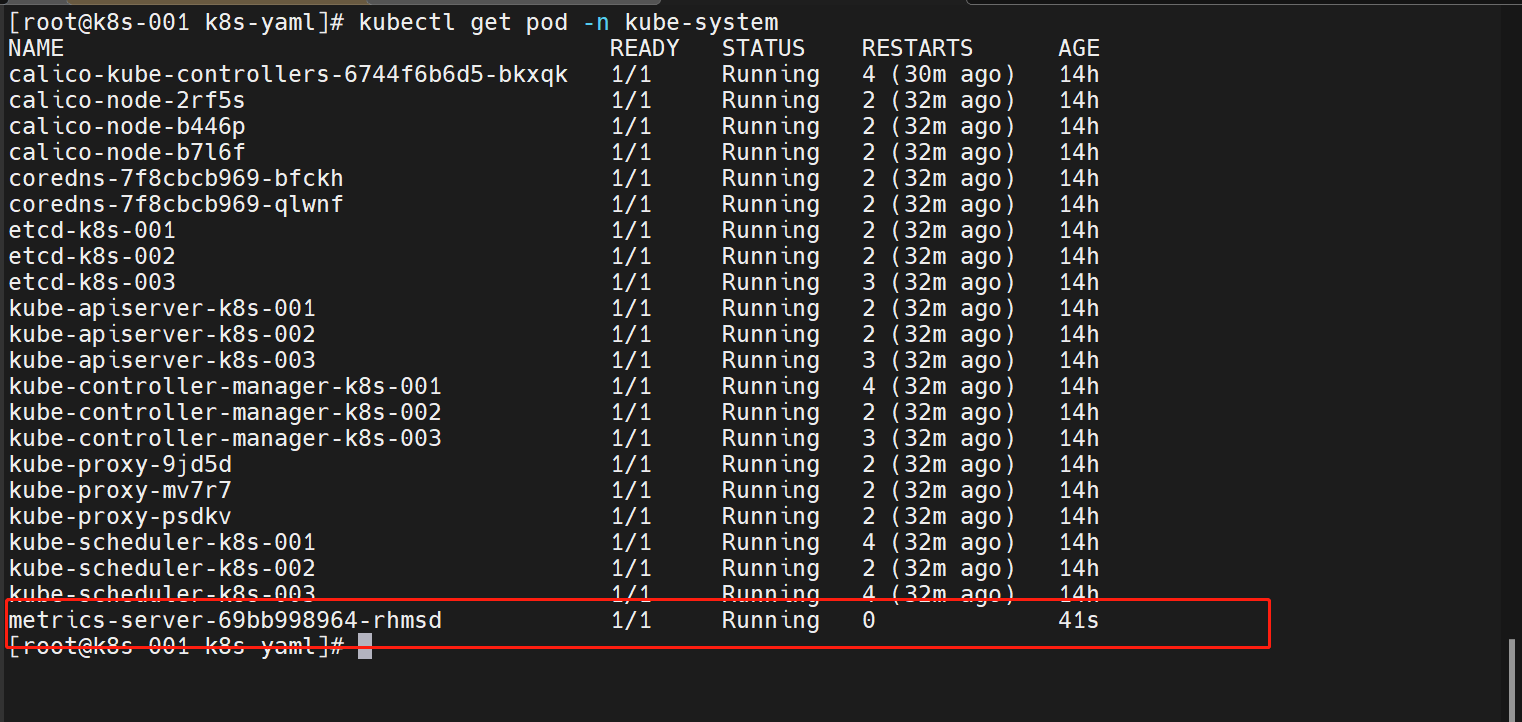

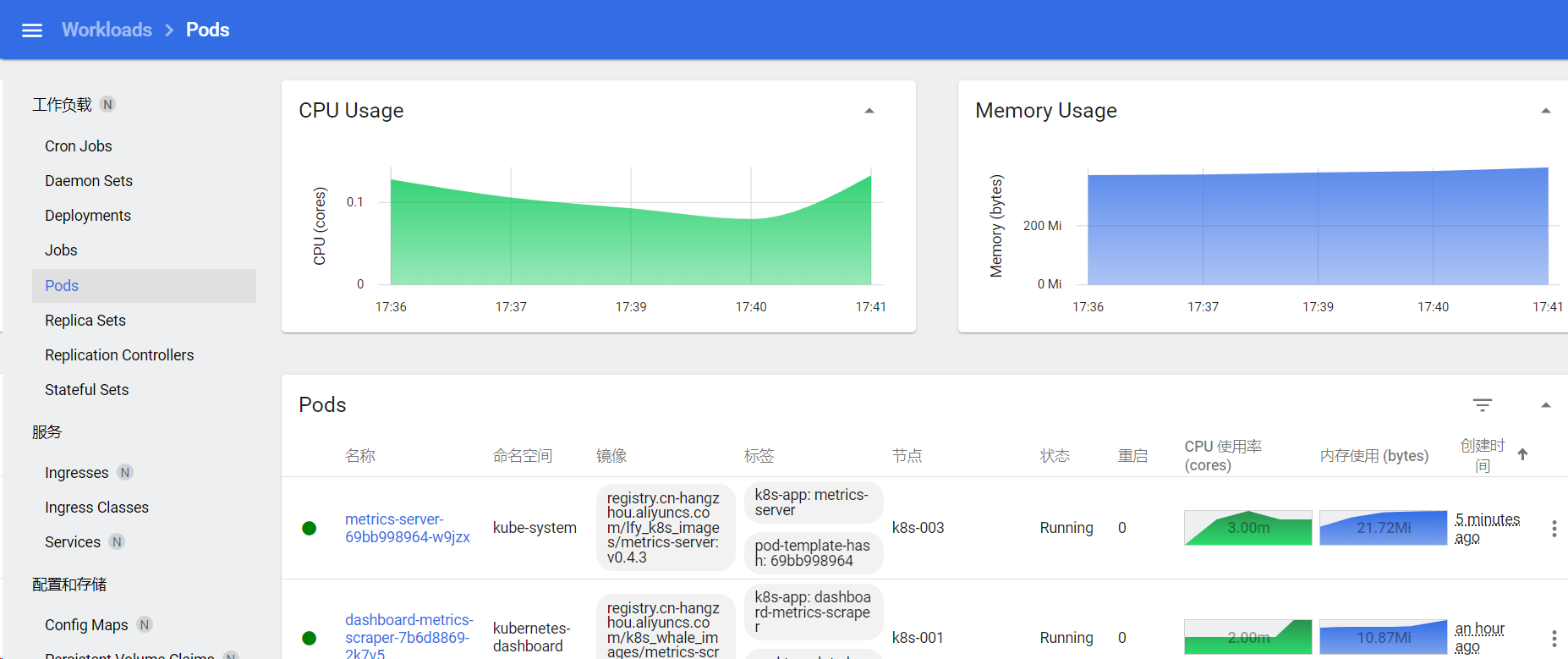

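- 1.4.3 Install metrics

- 1.5 Cluster verification

- 1.5.1 Node verification

- 1.5.2 Pod verification

- 1.5.3 k8s network range verification

- 1.5.4 Resource creation verification

- 1.5.5 Pod DNS resolution verification

- 1.5.6 Node access verification

- 1.5.7 Pod-to-pod communication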

1. Binary deployment of a three-node (combined control-plane/worker) highly available k8s cluster

1.1 Environment planning

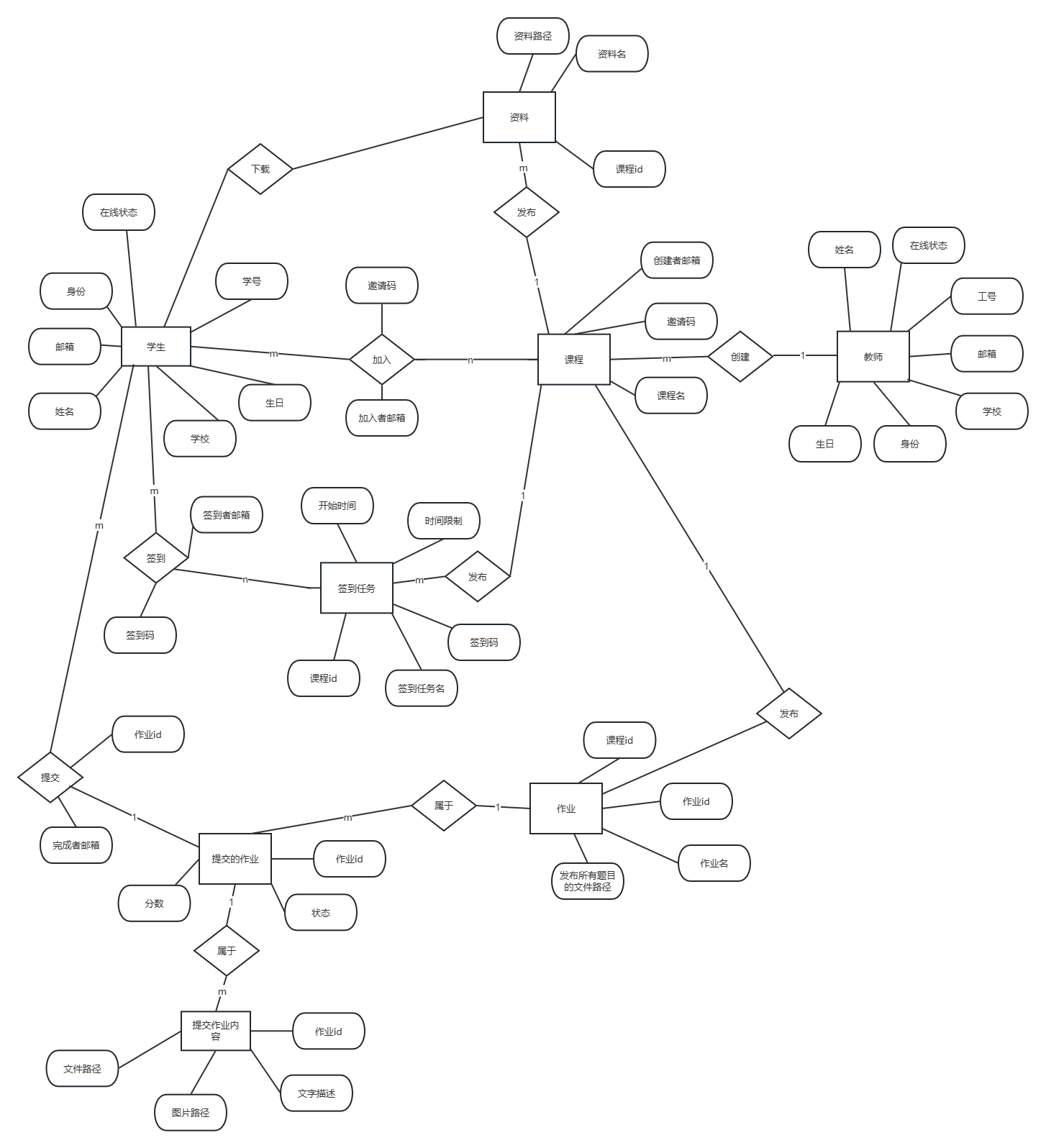

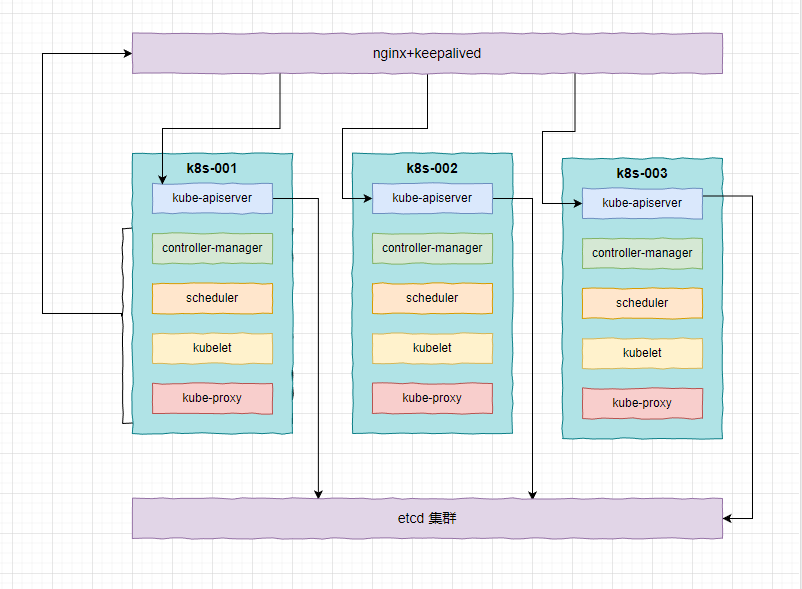

1.1.1 Lab architecture diagram

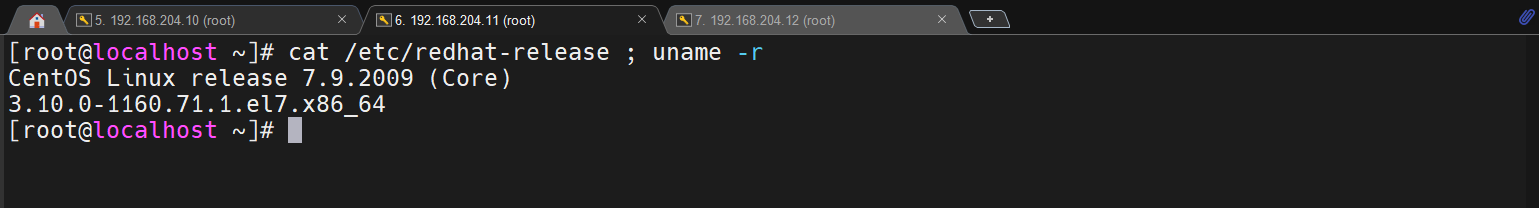

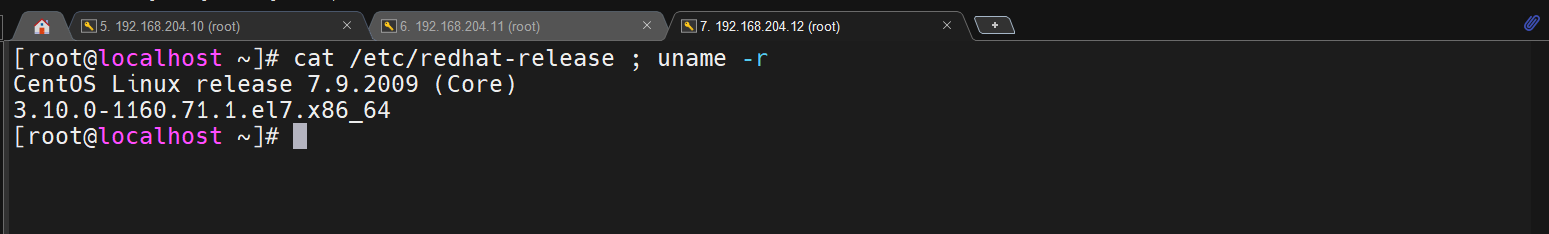

1.1.2 System versions

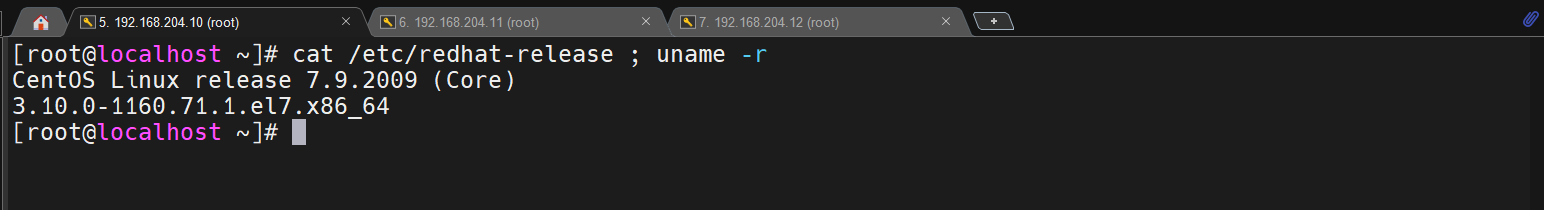

OS version: CentOS Linux release 7.9.2009 (Core)

Initial kernel version: 3.10.0-1160.71.1.el7.x86_64

Hardware: 2 CPU cores, 2 GB RAM, 150 GB disk

File system: xfs

Network: outbound Internet access

k8s version: 1.25.9

1.1.3 Basic environment information

| K8s cluster role | IP address | Hostname | Components |
|---|---|---|---|
| Control-plane node 1 (worker node 1) | 192.168.204.10 | k8s-001 | apiserver, controller-manager, scheduler, etcd, kube-proxy, container runtime, keepalived, nginx, calico, coredns, kubelet |
| Control-plane node 2 (worker node 2) | 192.168.204.11 | k8s-002 | apiserver, controller-manager, scheduler, etcd, kube-proxy, container runtime, keepalived, nginx, calico, coredns, kubelet |
| Control-plane node 3 (worker node 3) | 192.168.204.12 | k8s-003 | apiserver, controller-manager, scheduler, etcd, kube-proxy, container runtime, calico, coredns, kubelet |
| VIP | 192.168.204.13 | k8s-vip | |

1.1.4 k8s network ranges

- Service network: 10.165.0.0/16

- Pod network: 10.166.0.0/16 (the `--cluster-cidr` value used in 1.3.14)

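1.2 Base installation and tuning

Unless stated otherwise, run every step on all three nodes.

1.2.1 System information check

Check the OS release and the kernel version.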

cat /etc/redhat-release ; uname -r

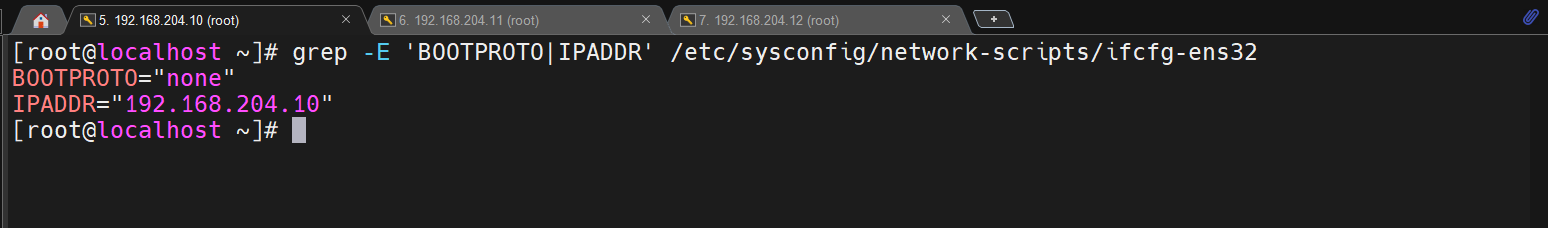

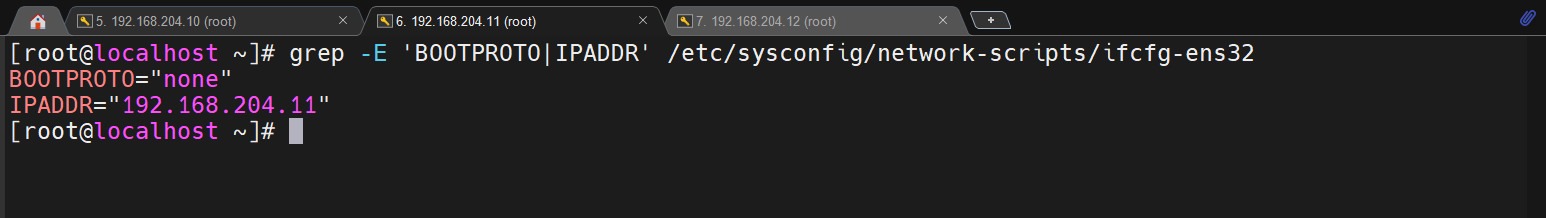

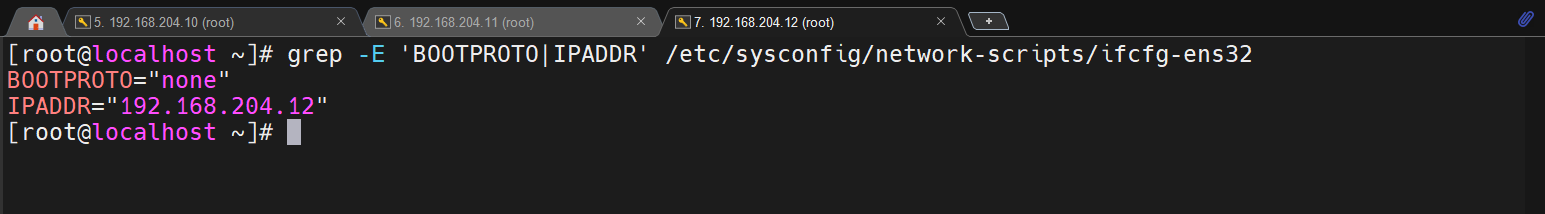

1.2.2 Static IP address configuration

Each server must have a static IP address that never changes.

grep -E 'BOOTPROTO|IPADDR' /etc/sysconfig/network-scripts/ifcfg-ens32

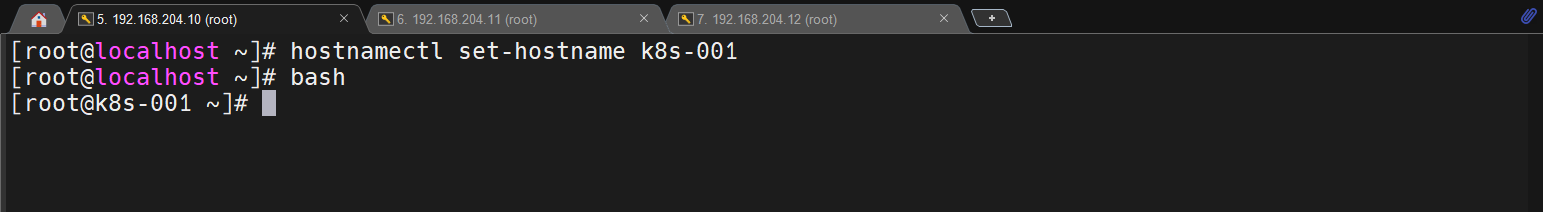

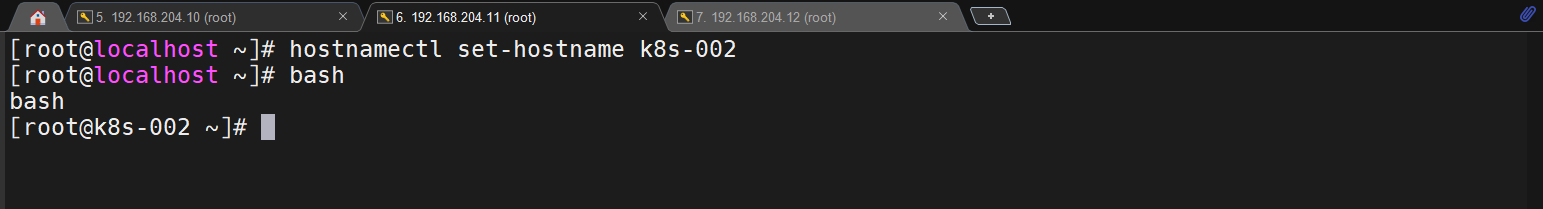

1.2.3 Configure hostnames

Set the hostname on each host according to the plan; run each command on its own node.

hostnamectl set-hostname k8s-001

hostnamectl set-hostname k8s-002

hostnamectl set-hostname k8s-003

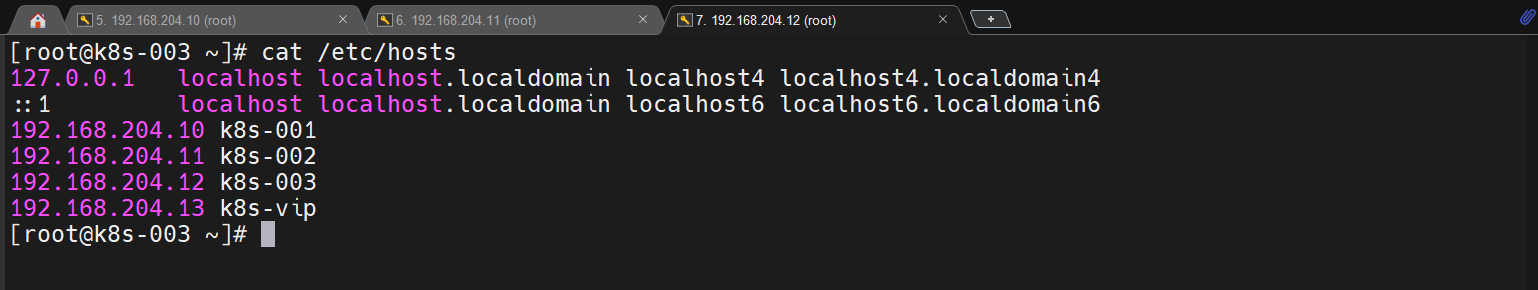

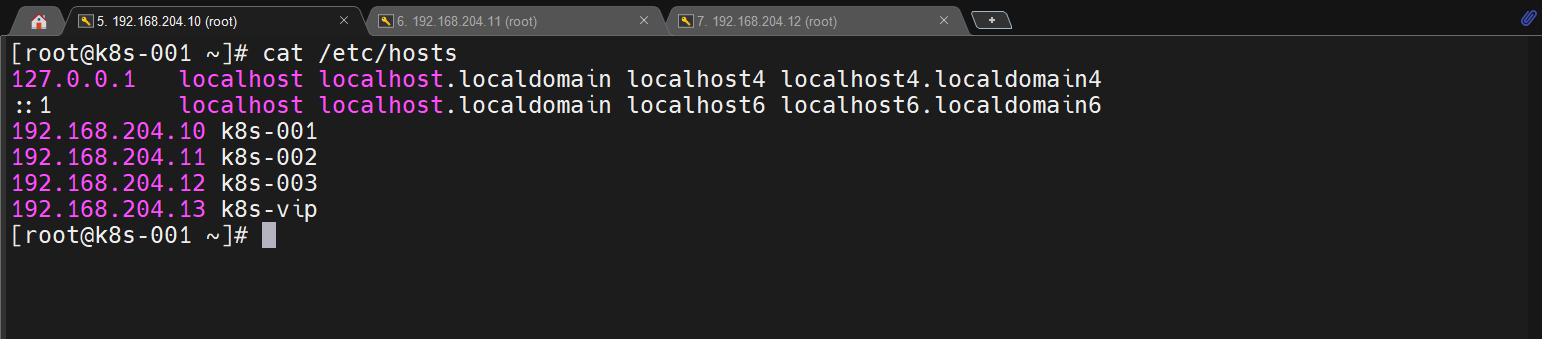

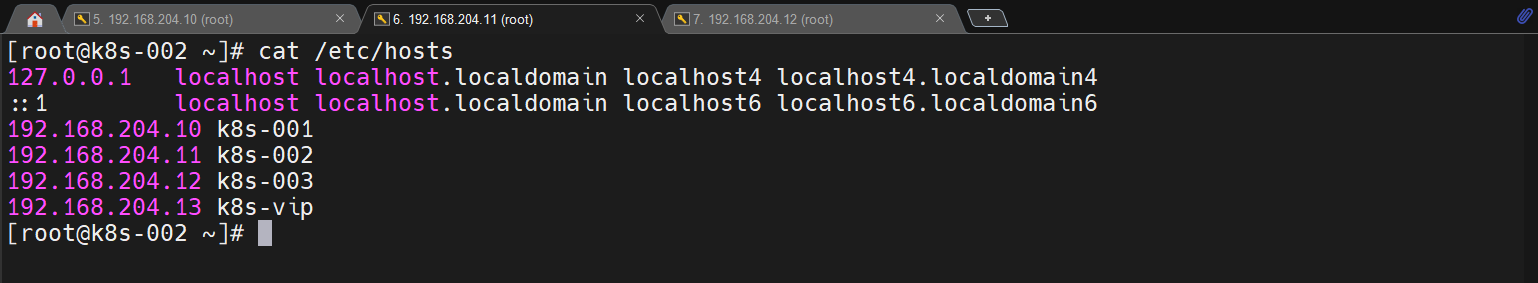

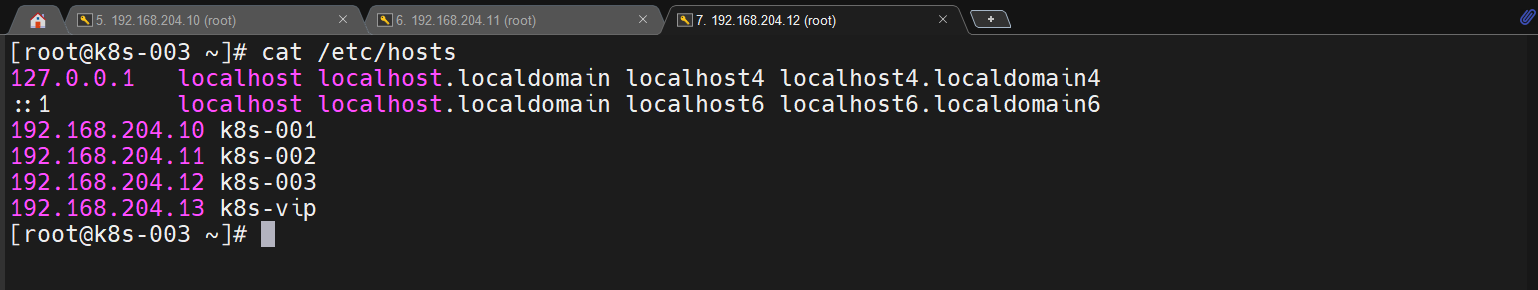

1.2.4 Configure the /etc/hosts file

cat >> /etc/hosts <<EOF

192.168.204.10 k8s-001

192.168.204.11 k8s-002

192.168.204.12 k8s-003

192.168.204.13 k8s-vip

EOF
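Because `cat >> /etc/hosts` appends unconditionally, running the step twice duplicates the entries. The following is a minimal sketch of an idempotent variant; the function name `add_host_entry` and its file-path argument are illustrative assumptions, not part of the original procedure.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME   (hypothetical helper)
# Appends "IP NAME" to FILE only if that exact line is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    touch "$file"
    grep -qxF "$ip $name" "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every node):
#   add_host_entry /etc/hosts 192.168.204.10 k8s-001
#   add_host_entry /etc/hosts 192.168.204.11 k8s-002
#   add_host_entry /etc/hosts 192.168.204.12 k8s-003
#   add_host_entry /etc/hosts 192.168.204.13 k8s-vip
```

Passing the file as an argument also makes it easy to try the helper on a scratch file before touching the real `/etc/hosts`.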

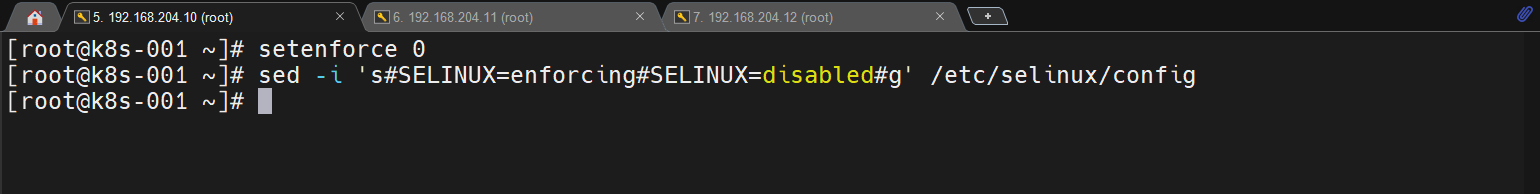

1.2.5 Disable SELinux

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

1.2.6 Configure SSH trust between hosts

### Only k8s-001 needs passwordless SSH access to the three nodes

ssh-keygen -t rsa -f ~/.ssh/id_rsa -N ''

ssh-copy-id k8s-001

ssh-copy-id k8s-002

ssh-copy-id k8s-003

1.2.7 Disable swap

This step is mandatory: by default kubelet refuses to start while swap is enabled.

swapoff -a

sed --in-place=.bak 's/.*swap.*/#&/g' /etc/fstab
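The `sed` expression above comments out every line that mentions swap, including lines that are already comments. As a hedged sketch, the variant below skips existing comments so it can be re-run safely; the function name `comment_out_swap` is hypothetical, and taking the file as an argument lets you try it on a copy of `/etc/fstab` first.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE   (hypothetical helper)
# Prefixes every not-yet-commented line that mentions "swap" with '#'.
# A backup of the original file is kept as FILE.bak.
comment_out_swap() {
    sed -i.bak '/^[[:space:]]*#/!s/.*swap.*/#&/' "$1"
}

# Example:
#   swapoff -a
#   comment_out_swap /etc/fstab
```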

1.2.8 Disable firewalld

systemctl stop firewalld ; systemctl disable firewalld

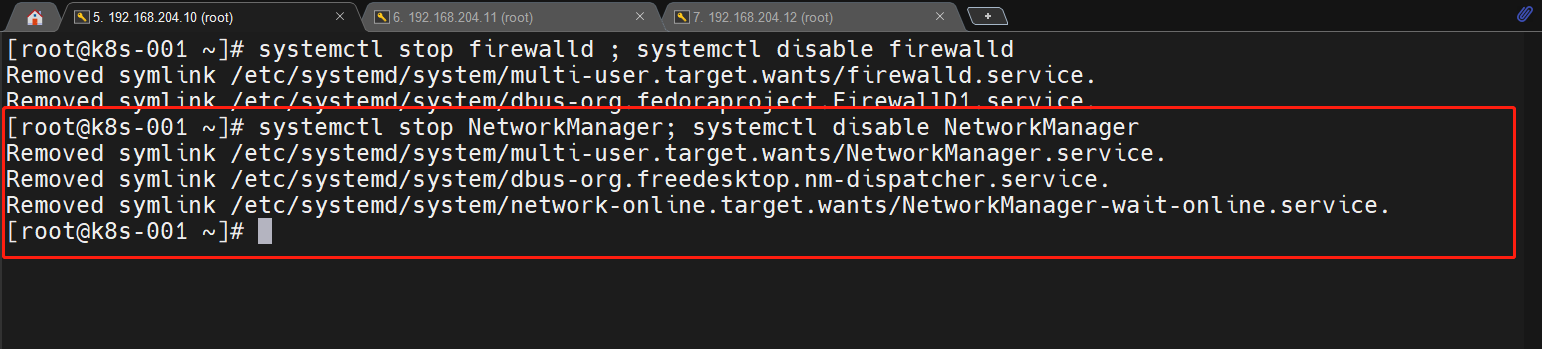

1.2.9 Disable NetworkManager

systemctl stop NetworkManager; systemctl disable NetworkManager

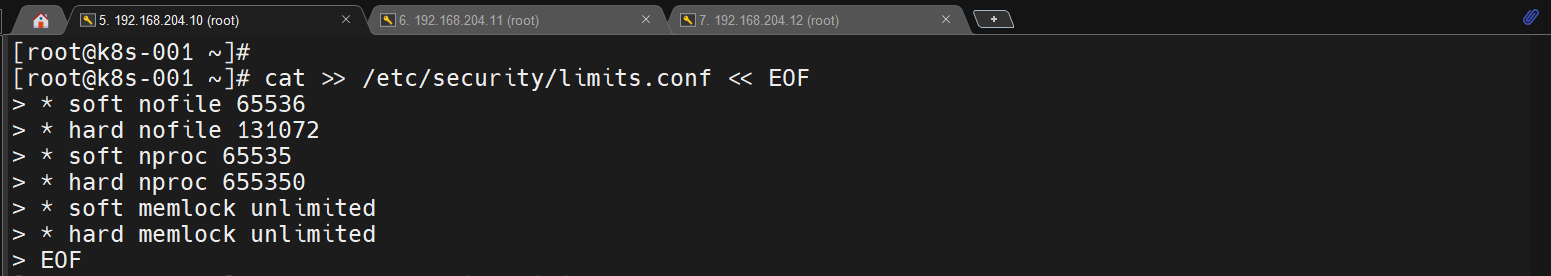

1.2.10 Set resource limits

On a fresh install, /etc/security/limits.conf contains no active settings.

cat >> /etc/security/limits.conf << EOF

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

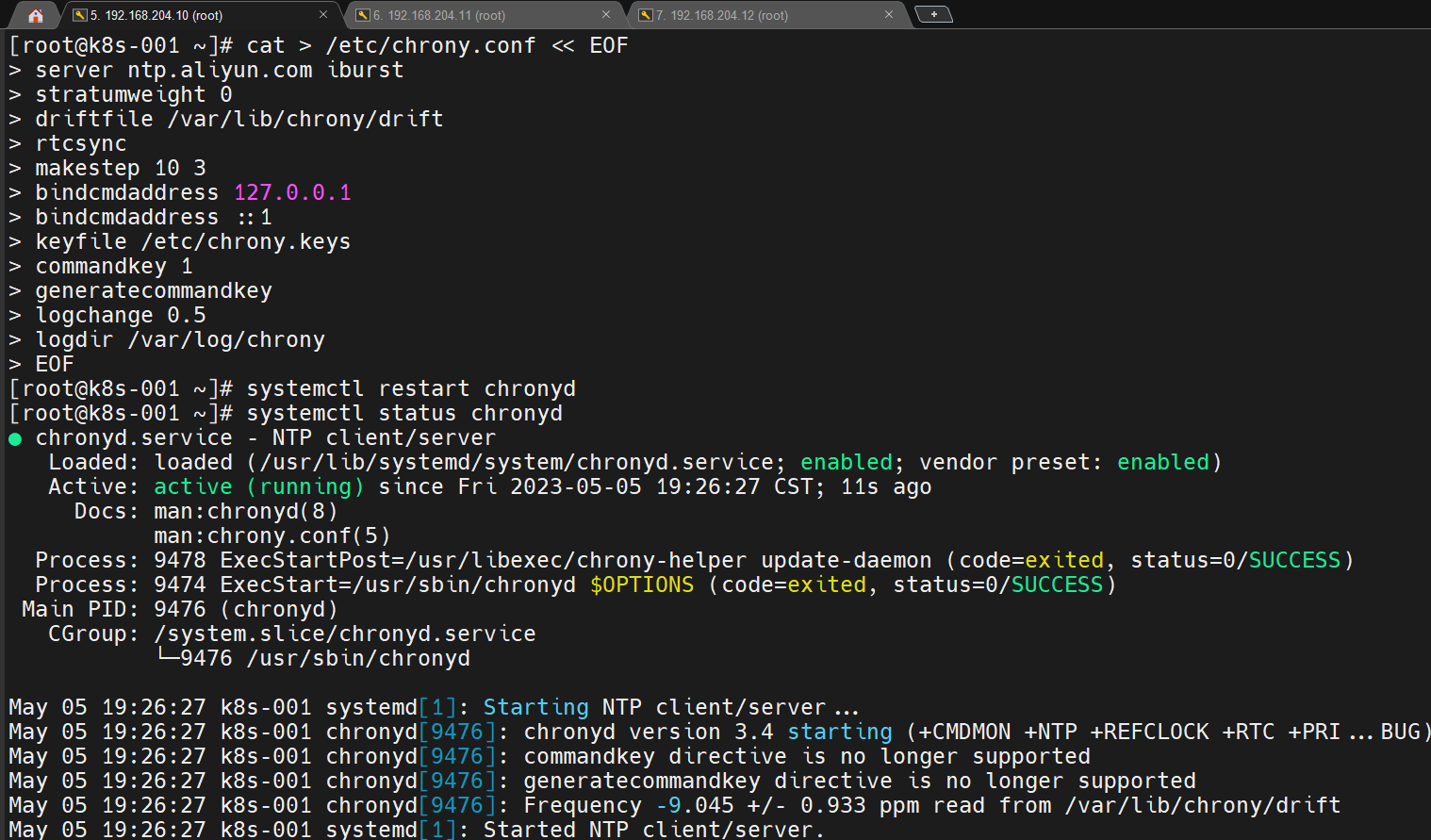

1.2.11 Configure time synchronization

### Configure chrony.conf

cat > /etc/chrony.conf << EOF

server ntp.aliyun.com iburst

stratumweight 0

driftfile /var/lib/chrony/drift

rtcsync

makestep 10 3

bindcmdaddress 127.0.0.1

bindcmdaddress ::1

keyfile /etc/chrony.keys

commandkey 1

generatecommandkey

logchange 0.5

logdir /var/log/chrony

EOF

### Restart the service
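The restart step lists no command. On CentOS 7 the chrony daemon is normally managed as the `chronyd` systemd unit, so the commands in the comment below are an assumption rather than something the original states. The sketch also adds a small check that parses `chronyc tracking` output; the helper name `chrony_is_synced` is hypothetical.

```shell
#!/usr/bin/env bash
# Assumed restart commands (not given in the original text):
#   systemctl enable chronyd --now
#   systemctl restart chronyd

# chrony_is_synced   (hypothetical helper)
# Reads `chronyc tracking` output on stdin and succeeds when the
# "Leap status" field reports "Normal", i.e. the clock is synchronised.
chrony_is_synced() {
    grep -Eq '^Leap status[[:space:]]*:[[:space:]]*Normal[[:space:]]*$'
}

# Example:
#   chronyc tracking | chrony_is_synced && echo "time is synchronised"
```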

1.2.12 Configure domestic (China) mirrors

## yum and EPEL repos for CentOS 7

mkdir /etc/yum.repos.d.bak && mv /etc/yum.repos.d/*.repo /etc/yum.repos.d.bak

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

curl -o /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo

yum clean all && yum makecache

## Configure the Docker repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

## Configure the k8s repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

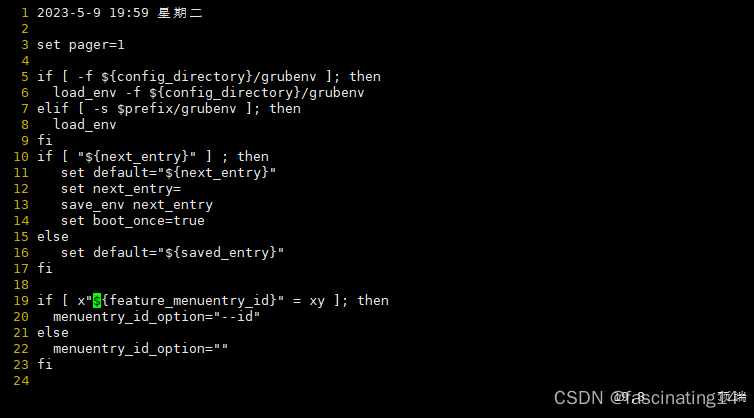

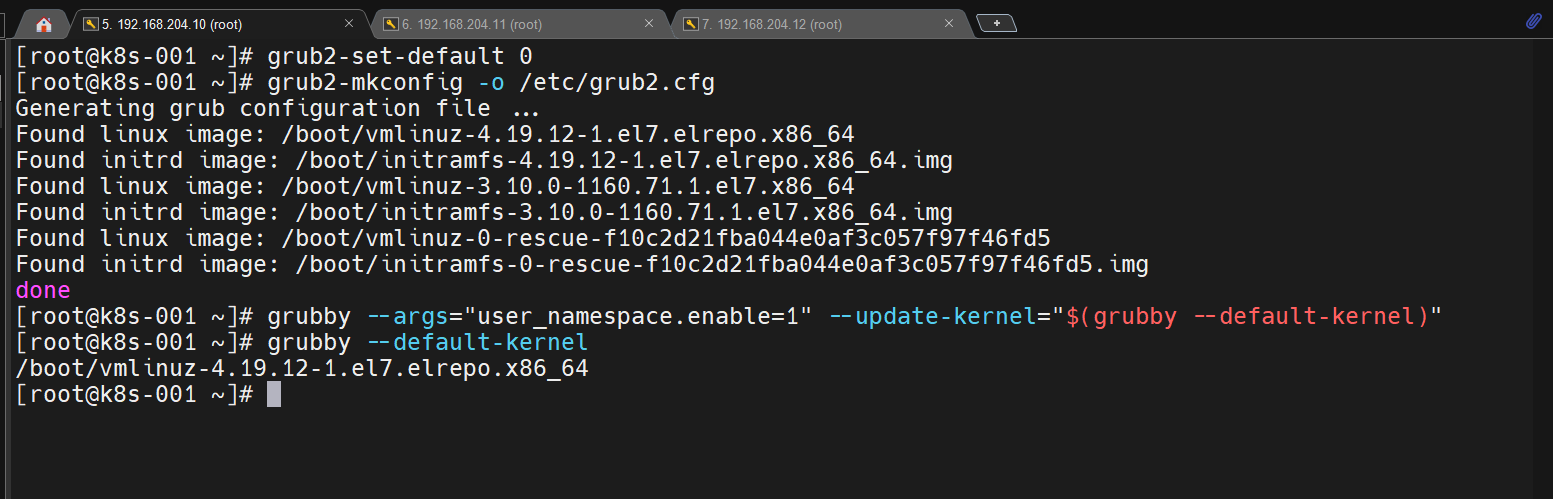

1.2.13 Upgrade the kernel

### Update the installed packages first

yum update --exclude=kernel* -y

### Download the kernel (4.19 or later is recommended; the default kernel also works)

curl -o kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

curl -o kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

### Install the kernel (the current directory must contain only these two rpm packages)

yum localinstall -y *.rpm

### Change the default boot kernel

grub2-set-default 0

grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

### Check that the default kernel is the new one

grubby --default-kernel

### Reboot the server

reboot

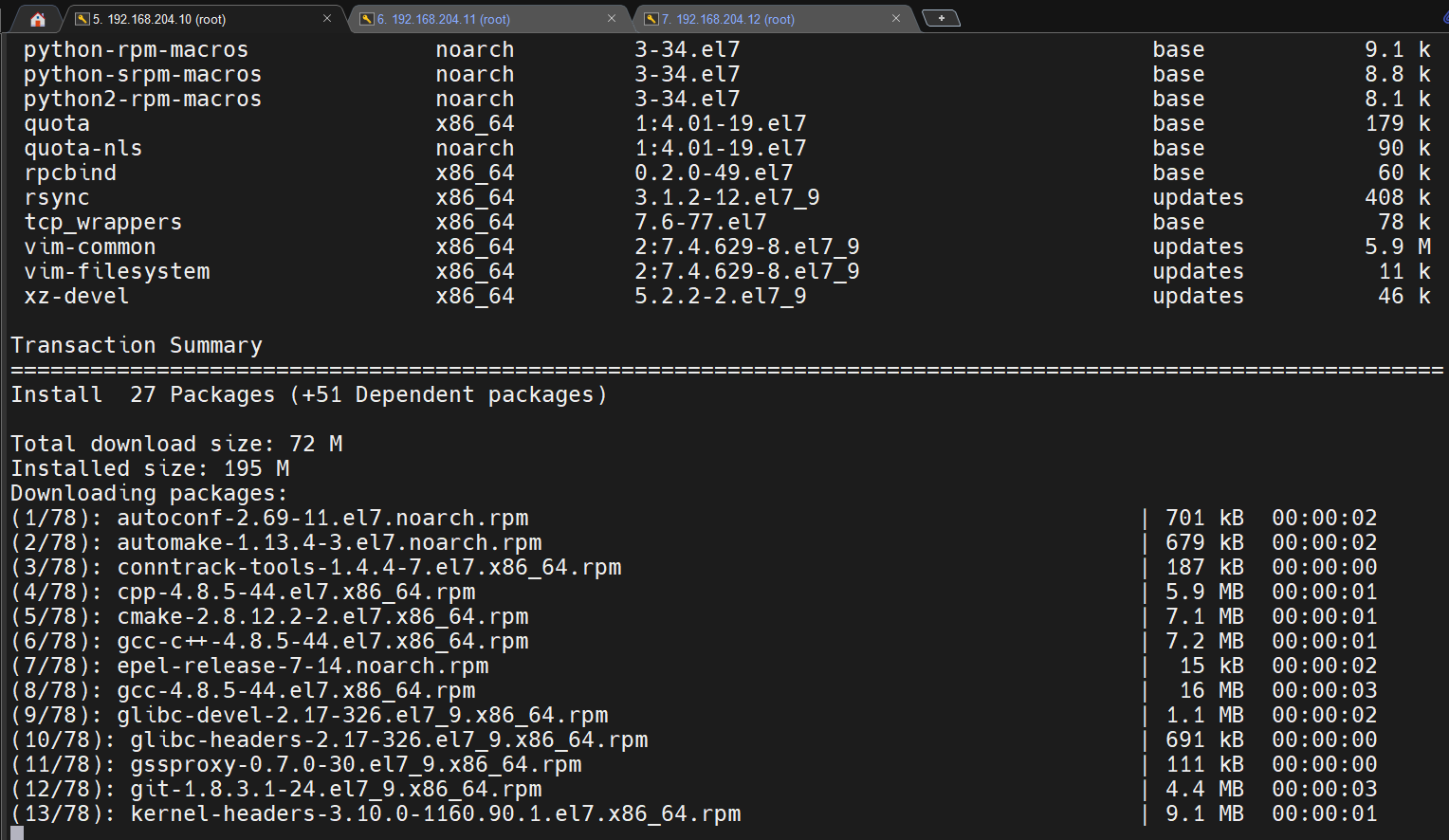

1.2.14 Install basic tools

Install a set of commonly needed tools.

yum install -y device-mapper-persistent-data net-tools nfs-utils jq psmisc git lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack telnet ipset sysstat libseccomp

1.2.15 Configure kernel modules and parameters

### Modules to load at boot

cat > /etc/modules-load.d/k8s.conf << EOF

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

overlay

br_netfilter

EOF

## Load the modules now and at every boot

systemctl enable systemd-modules-load.service --now

## Kernel parameter tuning

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

net.ipv4.conf.all.route_localnet = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

## Apply the settings

sysctl --system

### Reboot, then check that the modules loaded correctly

reboot

lsmod | grep --color=auto -e ip_vs -e nf_conntrack
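The `grep` above only shows what is loaded; it does not say what is missing. A minimal sketch of the complementary check follows. The helper name `missing_modules` is hypothetical, and note that modules compiled directly into the kernel never appear in `lsmod`, so a name printed here is a hint to investigate rather than proof of a problem.

```shell
#!/usr/bin/env bash
# missing_modules MODULE...   (hypothetical helper)
# Reads `lsmod` output on stdin and prints each requested module that is
# not listed in the first column.
missing_modules() {
    local loaded m
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in "$@"; do
        grep -qxF "$m" <<< "$loaded" || echo "$m"
    done
}

# Example:
#   lsmod | missing_modules ip_vs ip_vs_rr nf_conntrack br_netfilter overlay
```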

1.2.16 Install the container runtime

Install both Docker Engine and containerd.

Install containerd

### Install

yum install -y containerd.io-1.6.6

### Generate the configuration file

containerd config default > /etc/containerd/config.toml

### Modify the configuration file

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

sed -i 's#sandbox_image = "k8s.gcr.io/pause:3.6"#sandbox_image="registry.aliyuncs.com/google_containers/pause:3.7"#g' /etc/containerd/config.toml

### Configure a registry mirror

sed -i 's#config_path = ""#config_path = "/etc/containerd/certs.d"#g' /etc/containerd/config.toml

mkdir -p /etc/containerd/certs.d/docker.io

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://registry-1.docker.io"

[host."https://xpd691zc.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve", "push"]

EOF

## Start and enable the service

systemctl daemon-reload ; systemctl enable containerd --now
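The `sandbox_image` substitution above only matches when the generated default is exactly `k8s.gcr.io/pause:3.6`; with a different containerd build the `sed` silently changes nothing. As a sketch under that assumption, the helper below rewrites the `sandbox_image` line whatever its current value is. The name `patch_containerd_config` is hypothetical; the target image defaults to the one used in this article.

```shell
#!/usr/bin/env bash
# patch_containerd_config FILE [PAUSE_IMAGE]   (hypothetical helper)
# Enables the systemd cgroup driver and rewrites sandbox_image regardless of
# which default pause image the generated config contains.
# A backup of the original file is kept as FILE.bak.
patch_containerd_config() {
    local file="$1"
    local image="${2:-registry.aliyuncs.com/google_containers/pause:3.7}"
    sed -i.bak \
        -e 's#SystemdCgroup = false#SystemdCgroup = true#' \
        -e "s#^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*#\1\"${image}\"#" \
        "$file"
}

# Example:
#   patch_containerd_config /etc/containerd/config.toml
#   grep -E 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml
#   systemctl restart containerd
```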

**Install crictl**

### Download the binary package

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.25.0/crictl-v1.25.0-linux-amd64.tar.gz

### Extract

tar -xf crictl-v1.25.0-linux-amd64.tar.gz

### Move the binary into place

mv crictl /usr/local/bin/

### Configure

cat > /etc/crictl.yaml << EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

### Restart to apply

systemctl restart containerd

Install Docker

yum install docker-ce -y

# Configure a registry mirror

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://xpd691zc.mirror.aliyuncs.com"]

}

EOF

# Start the service

systemctl enable docker --now

1.3 Cluster creation and tuning

1.3.1 Install the high-availability components keepalived and nginx

Install the components

yum install nginx keepalived nginx-mod-stream -y

cat /dev/null > /etc/nginx/nginx.conf

cat /dev/null > /etc/keepalived/keepalived.conf

Configure the related files

### /etc/nginx/nginx.conf, identical on all three nodes

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream kube-apiserver {

server 192.168.204.10:6443 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.204.11:6443 weight=5 max_fails=3 fail_timeout=30s;

server 192.168.204.12:6443 weight=5 max_fails=3 fail_timeout=30s;

}

server {

listen 16443;

proxy_pass kube-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}

### /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens32 ## adjust to your NIC name

virtual_router_id 51

priority 100 # 100 on the primary node; 90 and 80 on the other two nodes

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.204.13/24

}

track_script {

check_nginx

}

}

### Contents of /etc/keepalived/check_nginx.sh

#!/bin/bash

#

counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" )

if [ $counter -eq 0 ]; then

service nginx start

sleep 2

counter=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$" )

if [ $counter -eq 0 ]; then

service keepalived stop

fi

fi

## Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

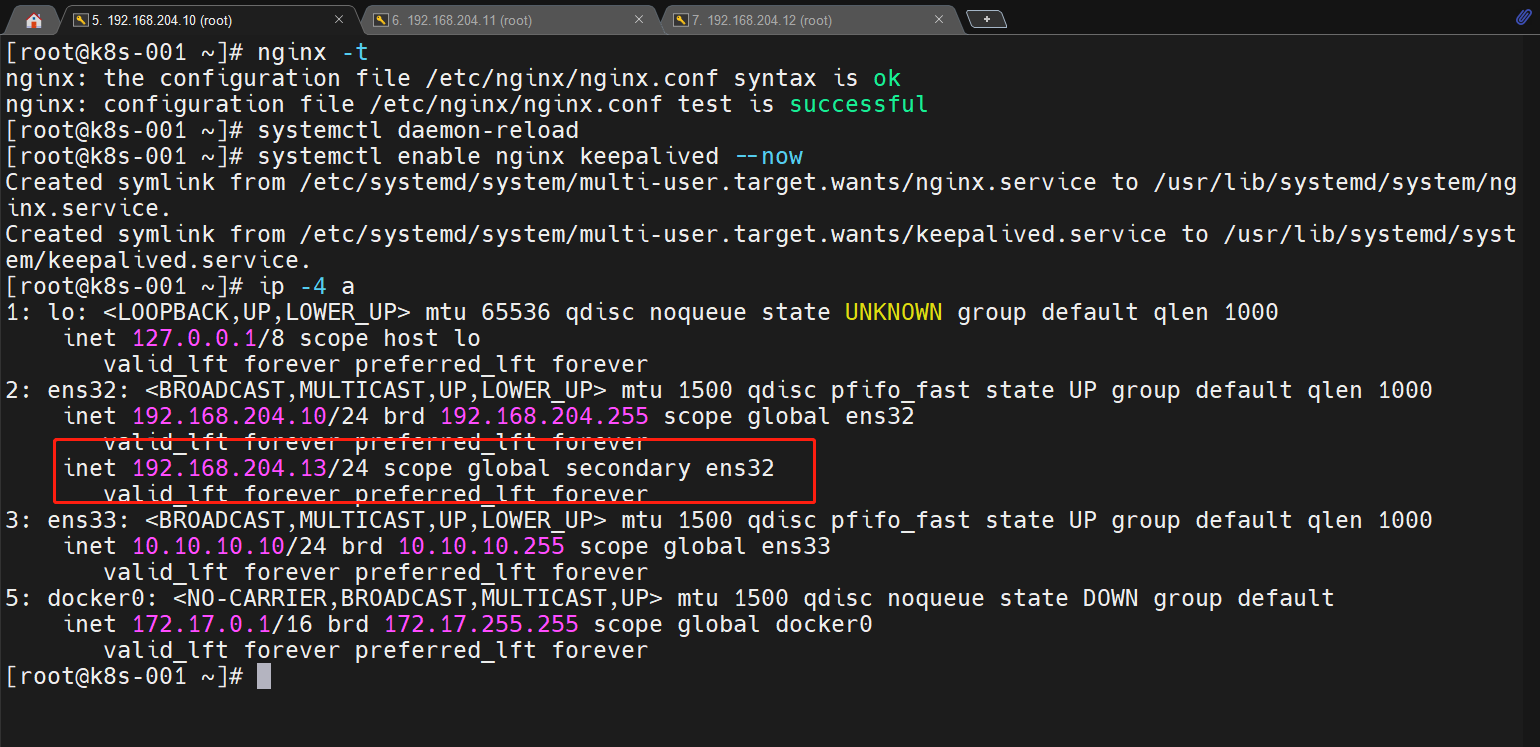

Start and verify

nginx -t

systemctl daemon-reload

systemctl enable nginx keepalived --now

ip -4 a

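## Stop nginx on the primary node and check whether the VIP fails over and later recovers

systemctl stop nginx

## Result: the VIP fails over as expected and returns to the first node after recovery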

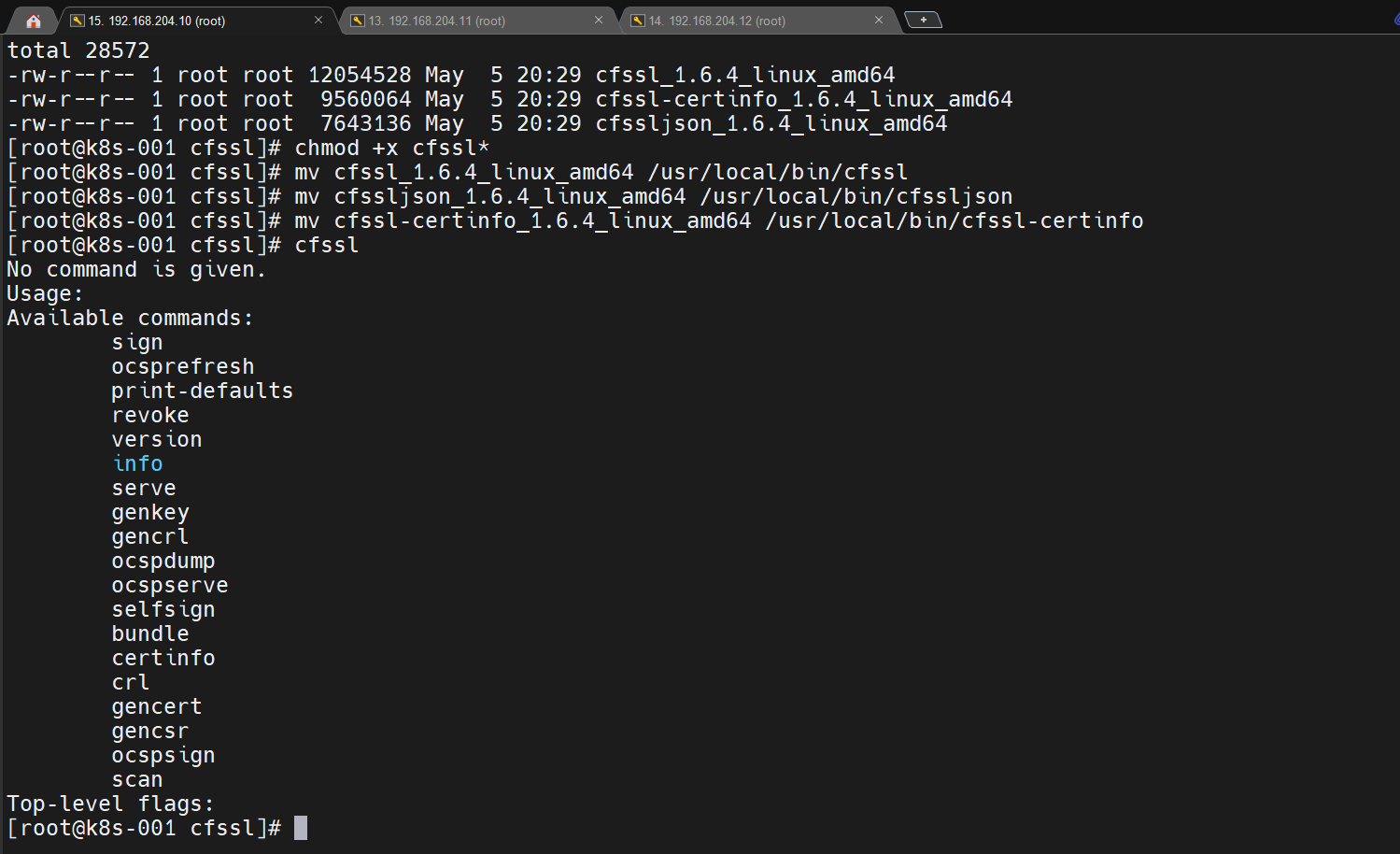
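To see which node currently holds the VIP during the failover test, the `ip -4 a` output can be checked on each node. The sketch below is one way to do that; the helper name `holds_vip` is hypothetical.

```shell
#!/usr/bin/env bash
# holds_vip VIP   (hypothetical helper)
# Reads `ip -4 a` output on stdin and succeeds if VIP is bound on this host.
# The trailing "/" keeps 192.168.204.13 from matching 192.168.204.130.
holds_vip() {
    grep -qF "inet $1/"
}

# Example (run on each node):
#   ip -4 a | holds_vip 192.168.204.13 && echo "VIP is on $(hostname)"
```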

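1.3.2 Install the cfssl tools

## Install on k8s-001 only

# Download page: https://github.com/cloudflare/cfssl/releases

# Download three binaries: cfssl-certinfo_1.6.4_linux_amd64, cfssljson_1.6.4_linux_amd64, cfssl_1.6.4_linux_amd64

# After downloading version 1.6.4 (the latest at the time of writing) into /root/cfssl, run: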

cd /root/cfssl

chmod +x cfssl*

mv cfssl_1.6.4_linux_amd64 /usr/local/bin/cfssl

mv cfssljson_1.6.4_linux_amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_1.6.4_linux_amd64 /usr/local/bin/cfssl-certinfo
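The download itself is not shown above. As a sketch, the helper below prints the three asset URLs for a given version; the URL layout is an assumption based on how the GitHub releases page names its assets, and the function name `cfssl_urls` is hypothetical. Verify the links against the releases page before relying on them.

```shell
#!/usr/bin/env bash
# cfssl_urls VERSION   (hypothetical helper)
# Prints the assumed release-asset URLs for the three cfssl tools
# (linux/amd64 builds), one per line.
cfssl_urls() {
    local version="$1" tool
    for tool in cfssl cfssljson cfssl-certinfo; do
        echo "https://github.com/cloudflare/cfssl/releases/download/v${version}/${tool}_${version}_linux_amd64"
    done
}

# Example:
#   mkdir -p /root/cfssl && cd /root/cfssl
#   cfssl_urls 1.6.4 | xargs -n1 curl -fLO
```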

1.3.3 Configure the CA

All certificate files are generated on k8s-001.

First create a directory to hold the certificates.

mkdir -p /root/cfssl/pki

cd /root/cfssl/pki/

Configure the CA certificate

- Create the CA certificate signing request file

vim /root/cfssl/pki/ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "87600h"

}

}

- Generate the certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

- Create the CA signing configuration file

vim /root/cfssl/pki/ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

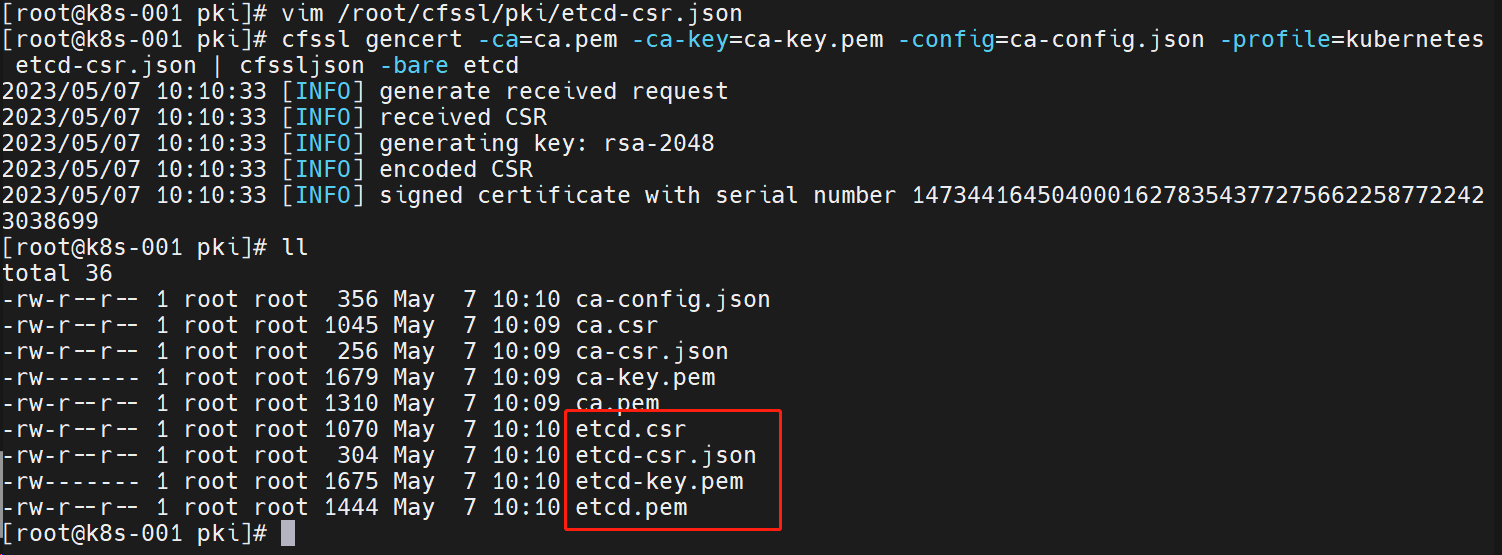

1.3.4 Generate the etcd certificate

- Create the certificate signing request file

vim /root/cfssl/pki/etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.204.10",

"192.168.204.11",

"192.168.204.12",

"192.168.204.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "k8s",

"OU": "system"

}]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

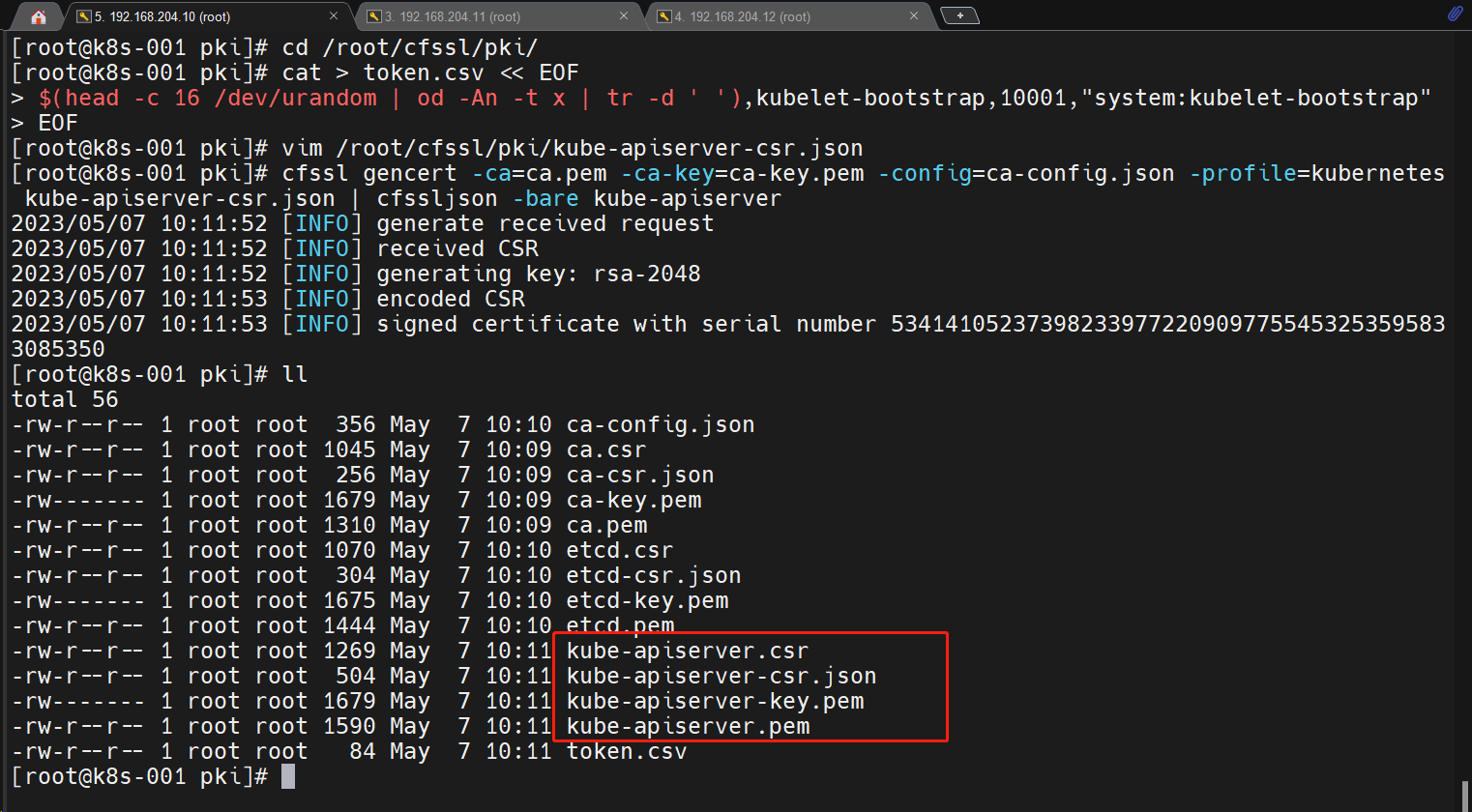

1.3.5 Generate the apiserver certificate

- Create the token.csv file

cd /root/cfssl/pki/

cat > token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
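The heredoc above packs token generation and the CSV row into one line. The sketch below splits the same pipeline into two small functions so the token format can be checked before the file is written; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# make_bootstrap_token   (hypothetical helper)
# 16 random bytes rendered as 32 lowercase hex digits, using the same
# head | od | tr pipeline as the token.csv step.
make_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \t\n'
}

# token_csv_line TOKEN   (hypothetical helper)
# Prints the static-token row in the form: token,user,uid,"group"
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Example:
#   token_csv_line "$(make_bootstrap_token)" > /root/cfssl/pki/token.csv
```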

- Create the certificate signing request file

vim /root/cfssl/pki/kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.204.10",

"192.168.204.11",

"192.168.204.12",

"192.168.204.13",

"10.165.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "k8s",

"OU": "system"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

1.3.6 Generate the kubectl certificate

- Create the certificate signing request file

vim /root/cfssl/pki/admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "system:masters",

"OU": "system"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

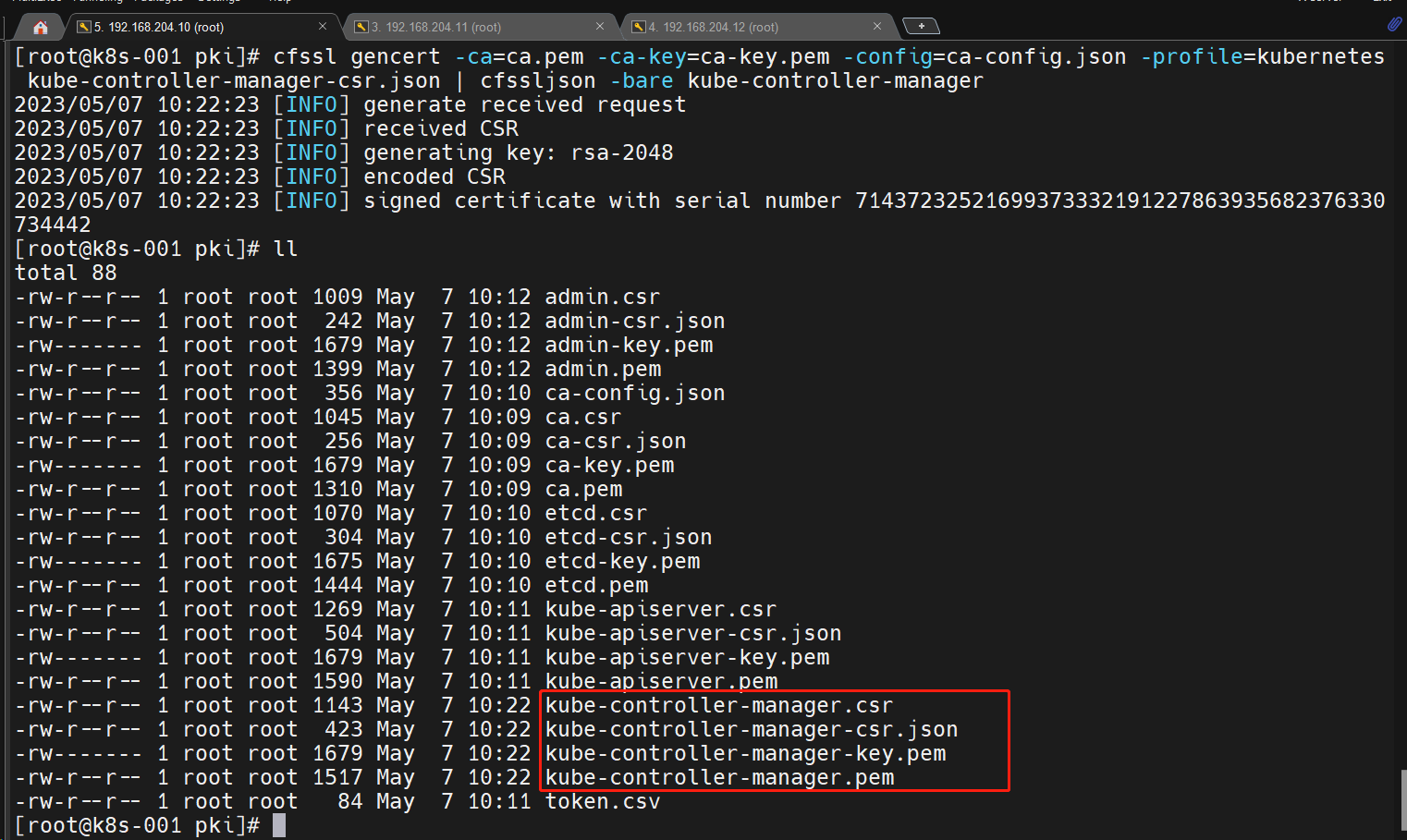

1.3.7 Generate the controller-manager certificate

- Create the certificate signing request file

vim /root/cfssl/pki/kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.204.10",

"192.168.204.11",

"192.168.204.12",

"192.168.204.13"

],

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

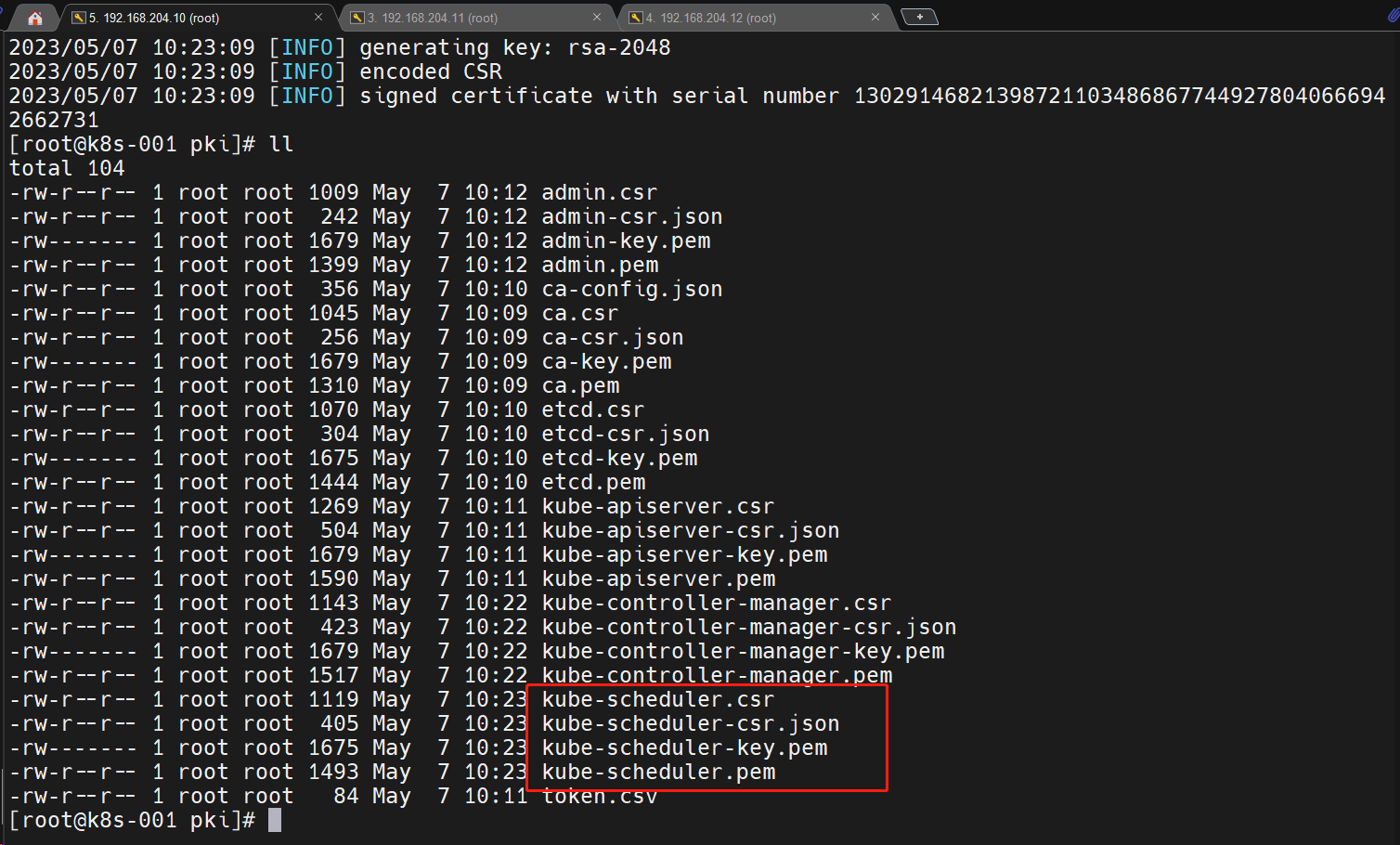

1.3.8 Generate the scheduler certificate

- Create the certificate signing request file

vim /root/cfssl/pki/kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.204.10",

"192.168.204.11",

"192.168.204.12",

"192.168.204.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

1.3.9 Generate the kube-proxy certificate

- Create the certificate signing request file

vim /root/cfssl/pki/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "k8s",

"OU": "system"

}

]

}

- Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
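Sections 1.3.4 to 1.3.9 all sign their request file with the same `cfssl gencert` pipeline; only the component name changes. The sketch below captures that pattern as a function that prints the command for a given name, so the whole set can be regenerated in one loop. The helper name `gen_cert_cmd` is hypothetical.

```shell
#!/usr/bin/env bash
# gen_cert_cmd NAME   (hypothetical helper)
# Prints the cfssl pipeline that signs NAME-csr.json with the CA and writes
# NAME.pem / NAME-key.pem, i.e. the command pattern used in 1.3.4 to 1.3.9.
gen_cert_cmd() {
    local name="$1"
    echo "cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes ${name}-csr.json | cfssljson -bare ${name}"
}

# Example (run inside /root/cfssl/pki once all *-csr.json files exist):
#   for n in etcd kube-apiserver admin kube-controller-manager kube-scheduler kube-proxy; do
#       eval "$(gen_cert_cmd "$n")"
#   done
```

Printing the command instead of running it directly makes it easy to review what will be executed before any key material is overwritten.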

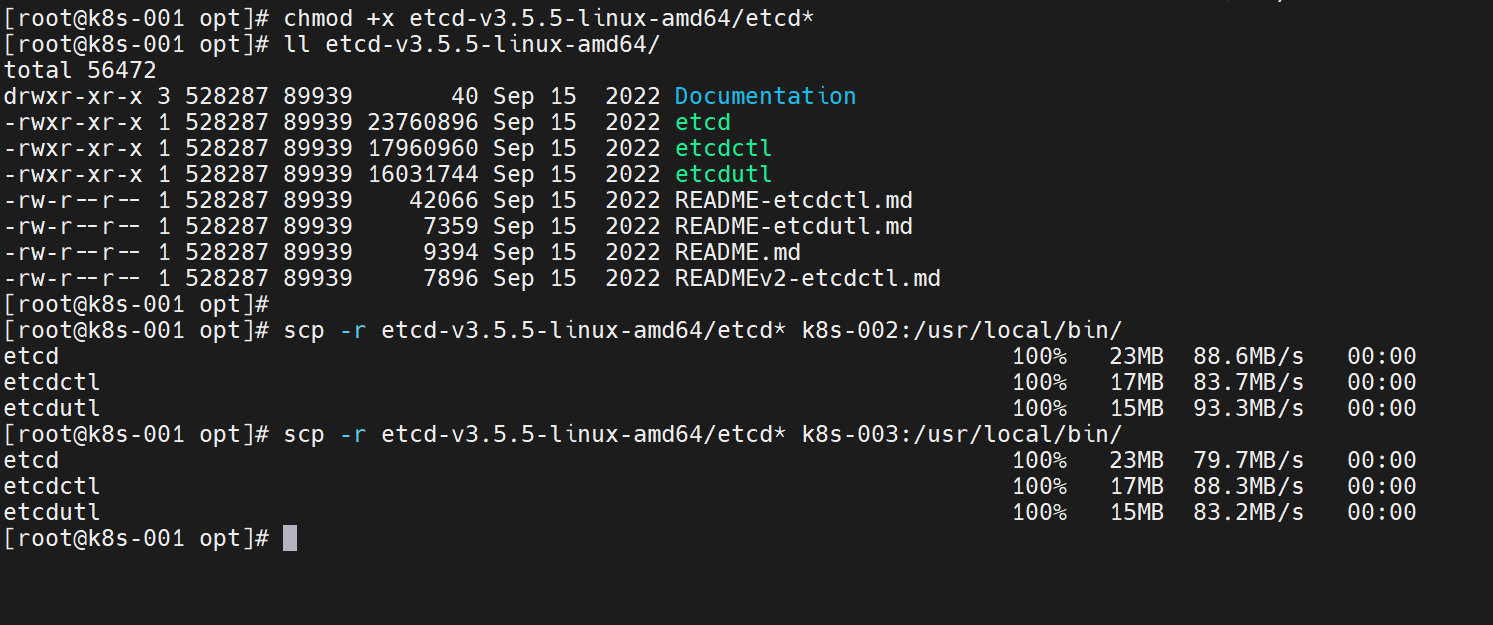

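1.3.10 Install the highly available etcd cluster

Run the following on k8s-001.

Prepare the etcd binary package

# etcd binary download page (etcd v3.5.5 is the version used here with k8s 1.25.9):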

https://github.com/etcd-io/etcd/releases

# Resulting package: etcd-v3.5.5-linux-amd64.tar.gz

tar -xf etcd-v3.5.5-linux-amd64.tar.gz

cp -ar etcd-v3.5.5-linux-amd64/etcd* /usr/local/bin/

chmod +x /usr/local/bin/etcd*

## Send the binaries to the other nodes

chmod +x etcd-v3.5.5-linux-amd64/etcd*

scp -r etcd-v3.5.5-linux-amd64/etcd* k8s-002:/usr/local/bin/

scp -r etcd-v3.5.5-linux-amd64/etcd* k8s-003:/usr/local/bin/

Create the etcd configuration files

## On k8s-001: create the configuration file

mkdir -p /etc/etcd/

## Contents of /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.204.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.204.10:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.204.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.204.10:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.204.10:2380,etcd2=https://192.168.204.11:2380,etcd3=https://192.168.204.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

## On k8s-002: create the configuration file

mkdir -p /etc/etcd/

## Contents of /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.204.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.204.11:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.204.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.204.11:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.204.10:2380,etcd2=https://192.168.204.11:2380,etcd3=https://192.168.204.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

## On k8s-003: create the configuration file

mkdir -p /etc/etcd/

## Contents of /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd3"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.204.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.204.12:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.204.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.204.12:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.204.10:2380,etcd2=https://192.168.204.11:2380,etcd3=https://192.168.204.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

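ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the other members

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; `new` creates a new cluster, `existing` joins an existing one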
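The three configuration files differ only in the member name and the node IP. A minimal sketch that renders the file from those two values is shown below; the function name `render_etcd_conf` is hypothetical, and the member list is hard-coded to the three nodes of this article.

```shell
#!/usr/bin/env bash
# render_etcd_conf NAME IP   (hypothetical helper)
# Prints /etc/etcd/etcd.conf for one member; only NAME and IP differ per node.
render_etcd_conf() {
    local name="$1" ip="$2"
    local cluster="etcd1=https://192.168.204.10:2380,etcd2=https://192.168.204.11:2380,etcd3=https://192.168.204.12:2380"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example (on k8s-002):
#   render_etcd_conf etcd2 192.168.204.11 > /etc/etcd/etcd.conf
```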

Create the etcd systemd unit file

### Run on all three nodes

## vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

--trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-cert-file=/etc/etcd/ssl/etcd.pem \

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-client-cert-auth \

--client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

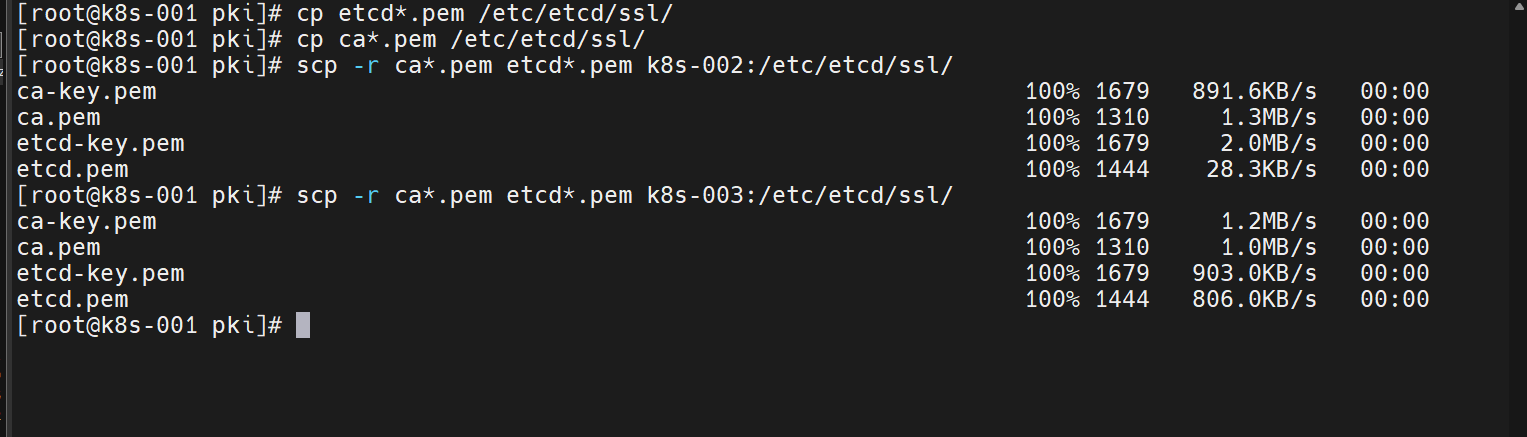

Copy the certificate files

## The certificates are in /root/cfssl/pki on k8s-001

## Run on every node

mkdir -p /etc/etcd/ssl/

## Run on k8s-001

cd /root/cfssl/pki

cp ca*.pem /etc/etcd/ssl/

cp etcd*.pem /etc/etcd/ssl/

scp -r ca*.pem etcd*.pem k8s-002:/etc/etcd/ssl/

scp -r ca*.pem etcd*.pem k8s-003:/etc/etcd/ssl/

Start the etcd cluster

### Run on every node

mkdir -p /var/lib/etcd/default.etcd

### Run on every node

systemctl daemon-reload

systemctl enable etcd --now

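If you start etcd on the first node alone, the command appears to hang. This is expected: the member is waiting for a second member so the cluster can reach quorum, and the command returns once etcd is started on the second node.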

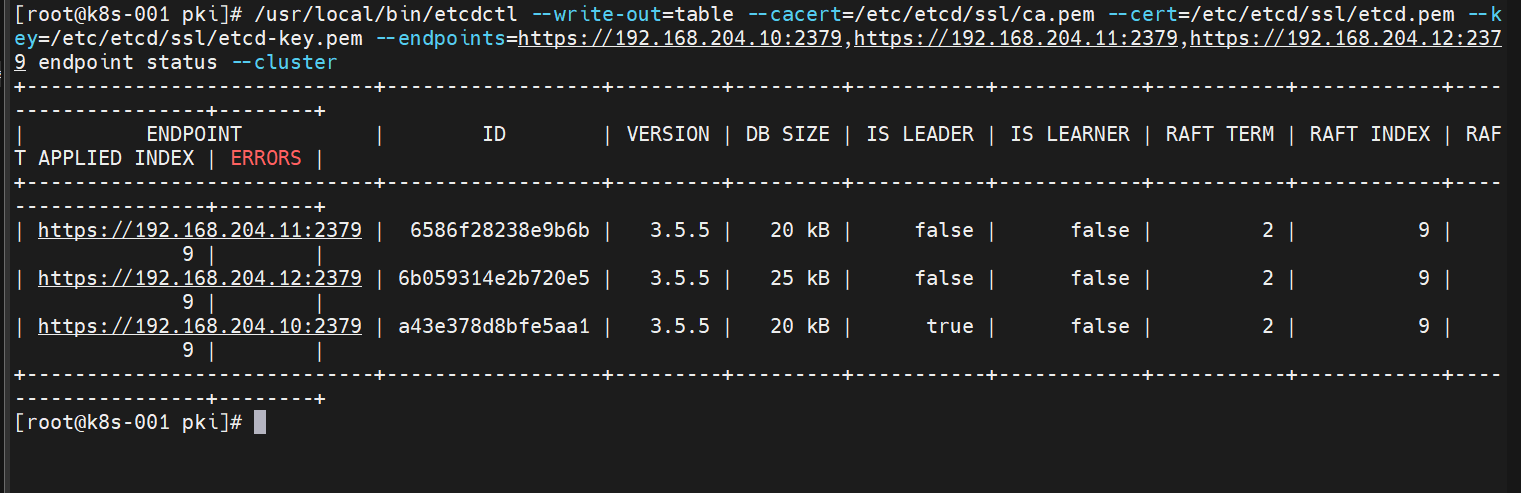

Check the cluster status

### Run on k8s-001

/usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.204.10:2379,https://192.168.204.11:2379,https://192.168.204.12:2379 endpoint status --cluster
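Besides `endpoint status`, etcdctl also provides `endpoint health`, which reports whether each member answers. The sketch below builds the `--endpoints` value from a list of IPs so the long URL list does not have to be typed by hand; the helper name `etcd_endpoints` is hypothetical.

```shell
#!/usr/bin/env bash
# etcd_endpoints IP...   (hypothetical helper)
# Joins node IPs into the comma-separated value expected by --endpoints.
etcd_endpoints() {
    local ip list=""
    for ip in "$@"; do
        list+="${list:+,}https://${ip}:2379"
    done
    echo "$list"
}

# Example:
#   etcdctl --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem \
#       --key=/etc/etcd/ssl/etcd-key.pem \
#       --endpoints="$(etcd_endpoints 192.168.204.10 192.168.204.11 192.168.204.12)" \
#       endpoint health --write-out=table
```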

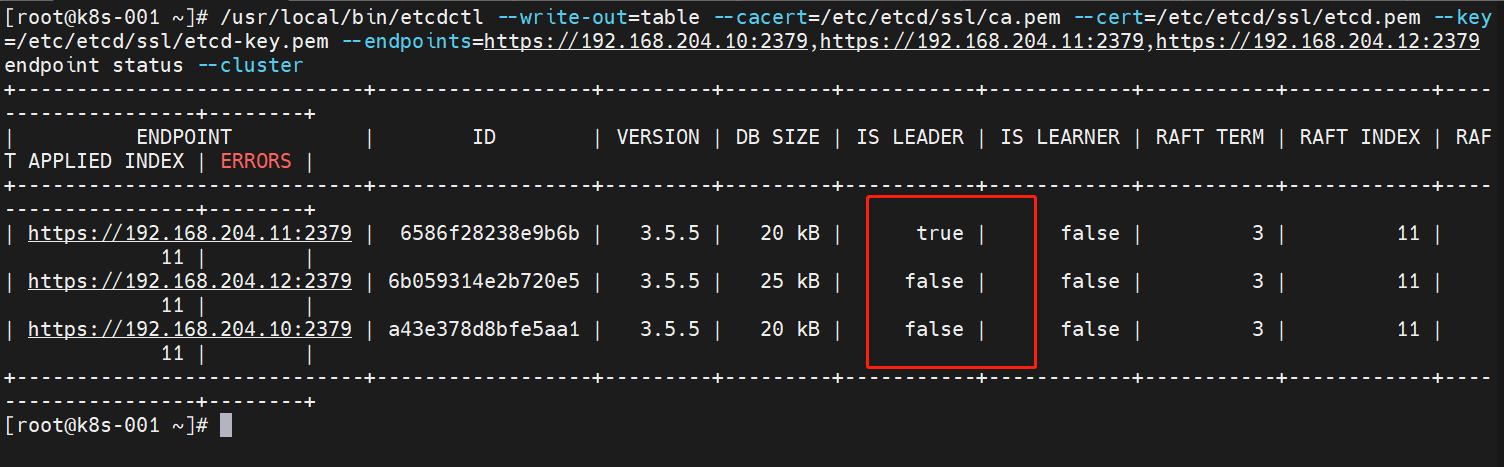

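I also tested the cluster state after shutting down the first virtual machine.

After a reboot the member rejoins the cluster, but leadership does not move back to it.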

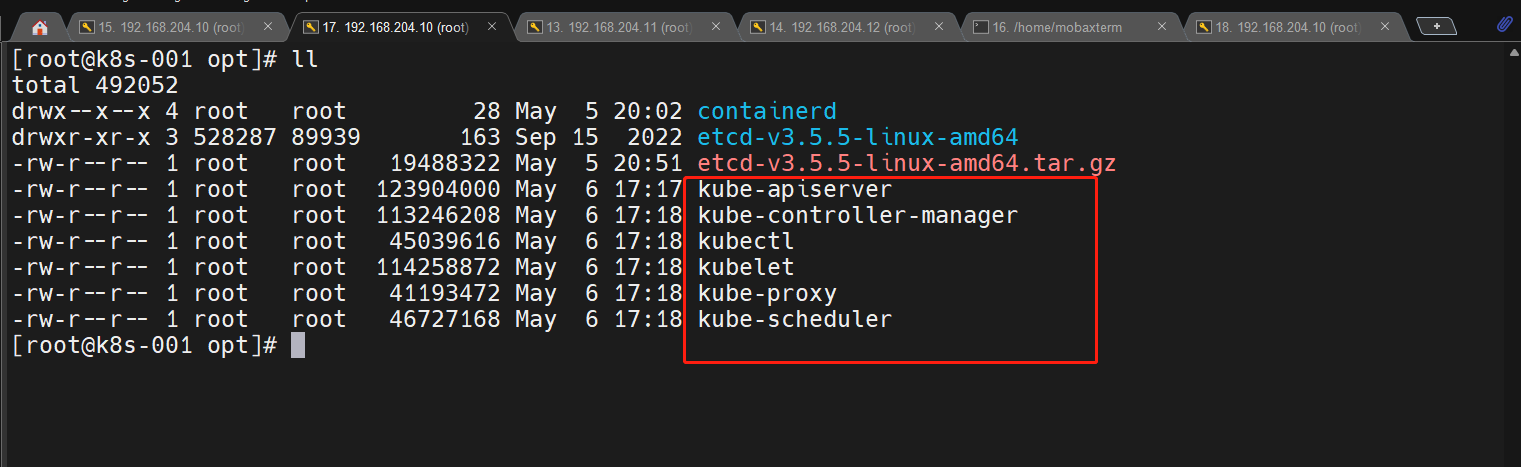

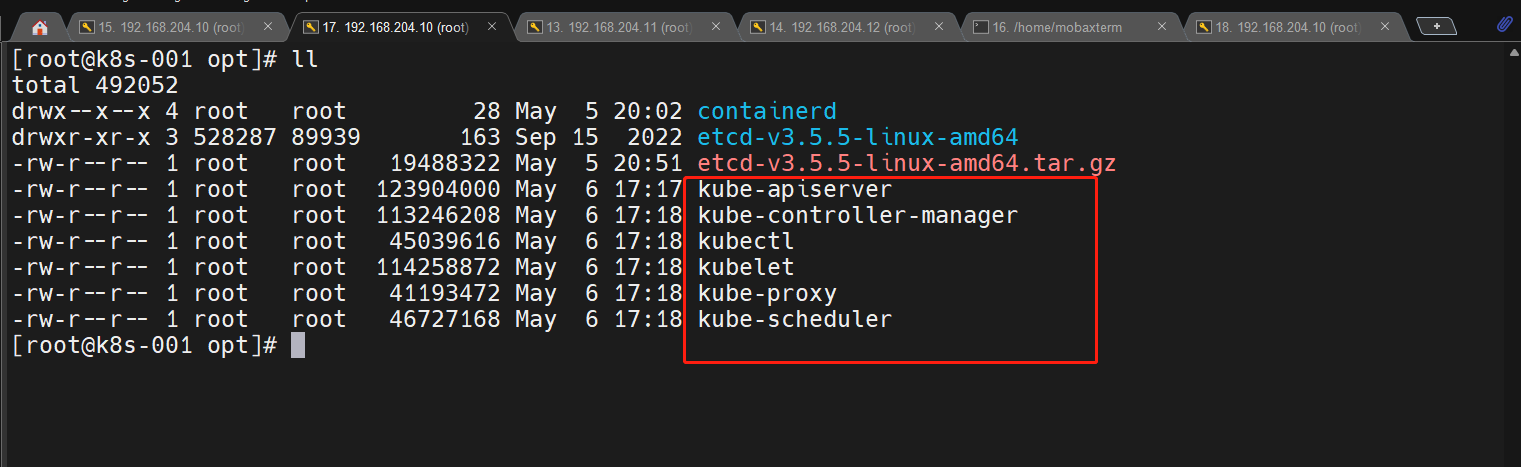

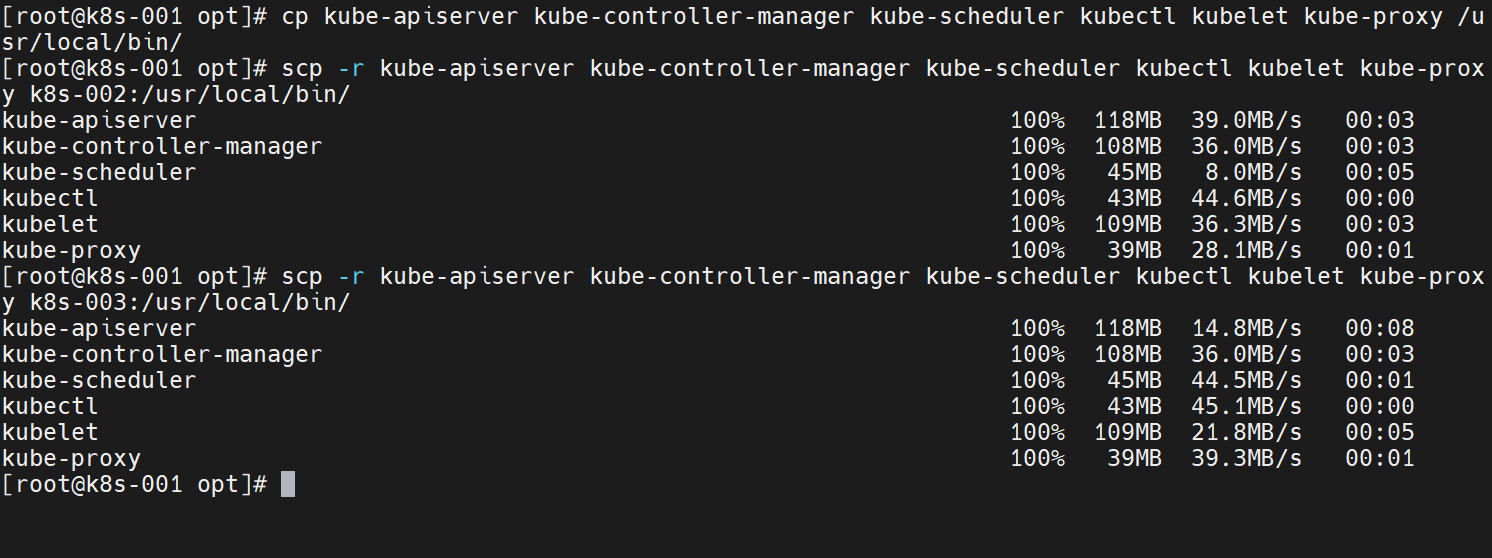

1.3.11 Install the k8s binaries

# Download on the first node only: https://www.downloadkubernetes.com/

# Binaries to download: kube-apiserver, kube-controller-manager, kube-proxy, kube-scheduler, kubectl, kubelet

# I placed them in /opt on k8s-001

ll

### On k8s-001: install the binaries locally and copy them to the other nodes

chmod +x kube*

cp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy /usr/local/bin/

scp -r kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy k8s-002:/usr/local/bin/

scp -r kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy k8s-003:/usr/local/bin/

1.3.12 Install kube-apiserver

Create the data directories

### Run on every node

mkdir -p /etc/kubernetes/ssl

mkdir /var/log/kubernetes

Create the service configuration file

### /etc/kubernetes/kube-apiserver.conf on k8s-001

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.204.10 \

--secure-port=6443 \

--advertise-address=192.168.204.10 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.165.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.204.10:2379,https://192.168.204.11:2379,https://192.168.204.12:2379 \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"

### /etc/kubernetes/kube-apiserver.conf on k8s-002

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.204.11 \

--secure-port=6443 \

--advertise-address=192.168.204.11 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.165.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.204.10:2379,https://192.168.204.11:2379,https://192.168.204.12:2379 \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"

### /etc/kubernetes/kube-apiserver.conf on k8s-003

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.204.12 \

--secure-port=6443 \

--advertise-address=192.168.204.12 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.165.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.204.10:2379,https://192.168.204.11:2379,https://192.168.204.12:2379 \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"
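The three kube-apiserver.conf files are identical except for `--bind-address` and `--advertise-address`. As a sketch, the filter below rewrites just those two flags so the file written for k8s-001 can be reused for the other nodes; the helper name `retarget_apiserver_conf` is hypothetical.

```shell
#!/usr/bin/env bash
# retarget_apiserver_conf NEW_IP   (hypothetical helper)
# Reads a kube-apiserver.conf on stdin and rewrites only --bind-address and
# --advertise-address; every other line, including --etcd-servers, is
# passed through unchanged.
retarget_apiserver_conf() {
    awk -v ip="$1" '
        /^[[:space:]]*--(bind|advertise)-address=/ { sub(/=[^ ]*/, "=" ip) }
        { print }
    '
}

# Example (on k8s-001, after its own file is in place):
#   retarget_apiserver_conf 192.168.204.11 < /etc/kubernetes/kube-apiserver.conf \
#       | ssh k8s-002 'cat > /etc/kubernetes/kube-apiserver.conf'
```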

Create the systemd unit file

### On every node, create /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

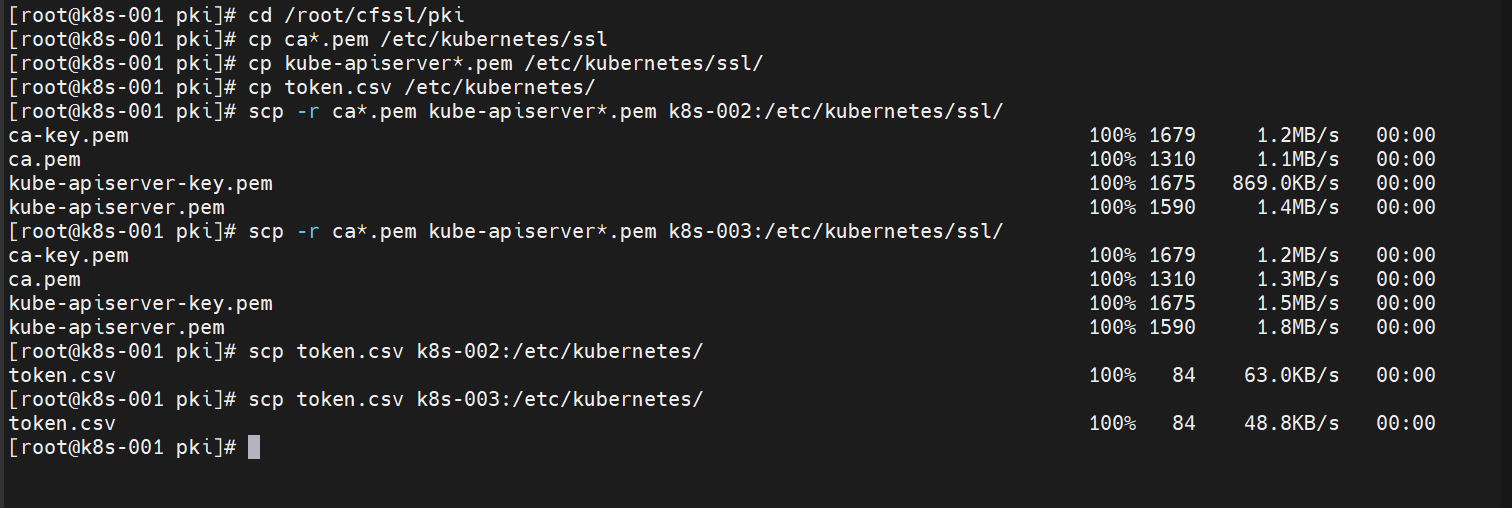

Copy the certificates

### Run on k8s-001

cd /root/cfssl/pki

cp ca*.pem /etc/kubernetes/ssl

cp kube-apiserver*.pem /etc/kubernetes/ssl/

cp token.csv /etc/kubernetes/

scp -r ca*.pem kube-apiserver*.pem k8s-002:/etc/kubernetes/ssl/

scp -r ca*.pem kube-apiserver*.pem k8s-003:/etc/kubernetes/ssl/

scp token.csv k8s-002:/etc/kubernetes/

scp token.csv k8s-003:/etc/kubernetes/

Start the service

### Run on every node

systemctl daemon-reload

systemctl enable kube-apiserver --now

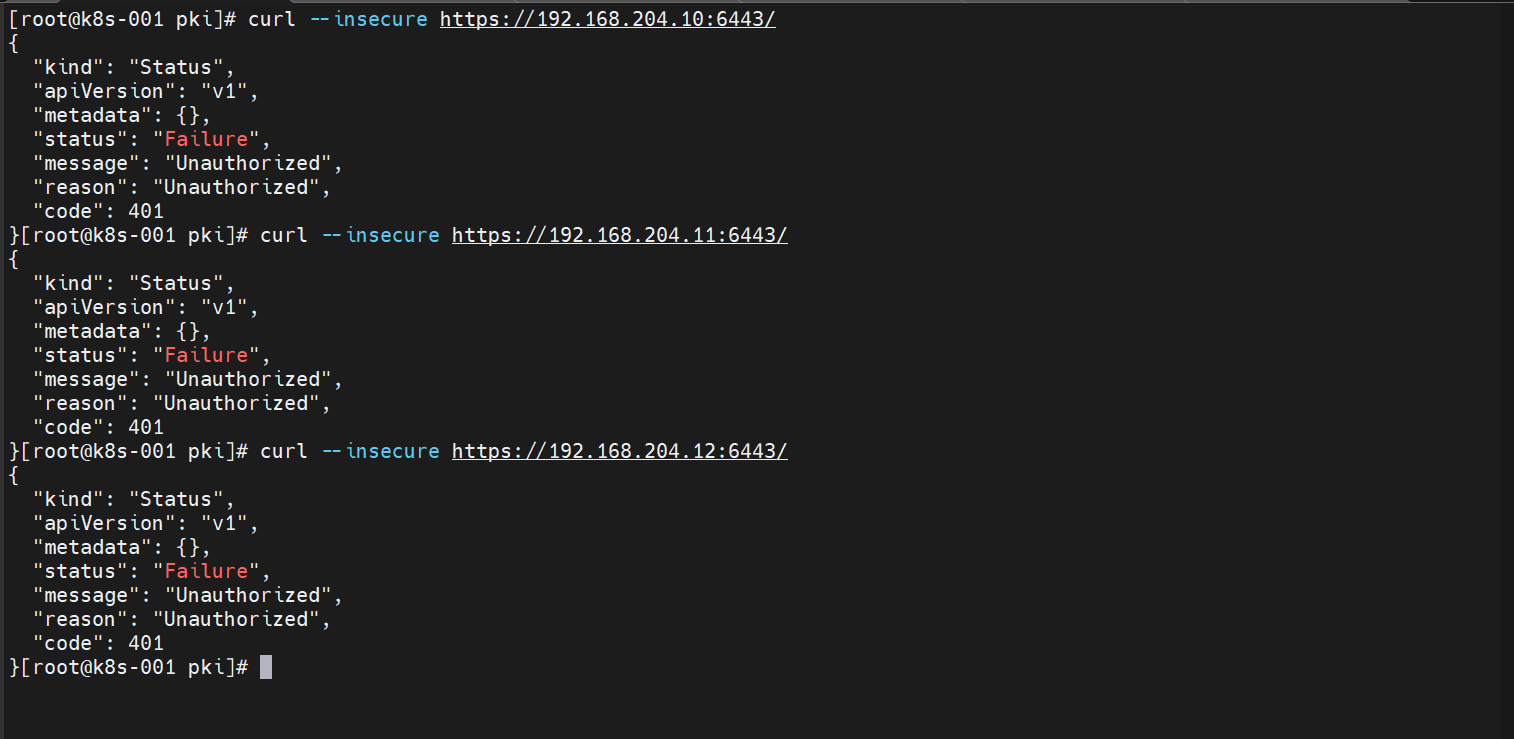

Test and verify

### Run on every node

systemctl status kube-apiserver

### Run on k8s-001

curl --insecure https://192.168.204.10:6443/

curl --insecure https://192.168.204.11:6443/

curl --insecure https://192.168.204.12:6443/

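The responses shown below are expected: the requests carry no credentials and anonymous access is disabled (`--anonymous-auth=false`), so the API server rejects them.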

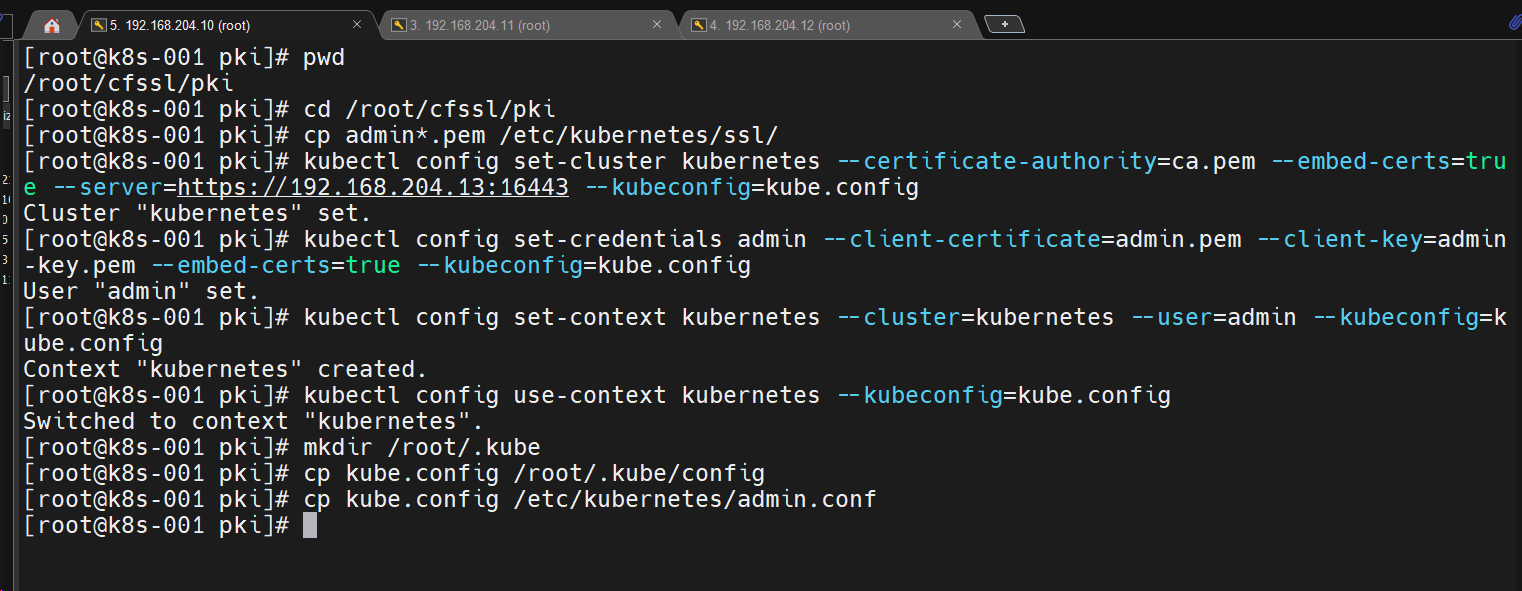

1.3.13 Install kubectl

I only need to manage the cluster from one host, so kubectl is configured on k8s-001 only.

- Copy the certificates

cd /root/cfssl/pki

cp admin*.pem /etc/kubernetes/ssl/

- Configure the kubeconfig context

### Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig=kube.config

### Set the client credentials

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

### Set the context parameters

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

### Set the current context

kubectl config use-context kubernetes --kubeconfig=kube.config

### Install the kubeconfig for root

mkdir /root/.kube

cp kube.config /root/.kube/config

cp kube.config /etc/kubernetes/admin.conf

- Grant the `kubernetes` certificate user access to the kubelet API

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

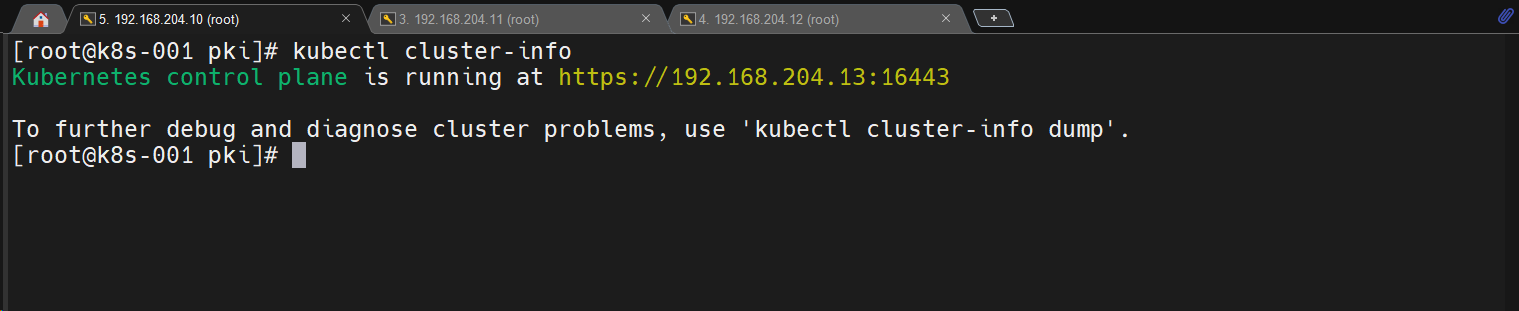

Verify

- Check the cluster status

kubectl cluster-info

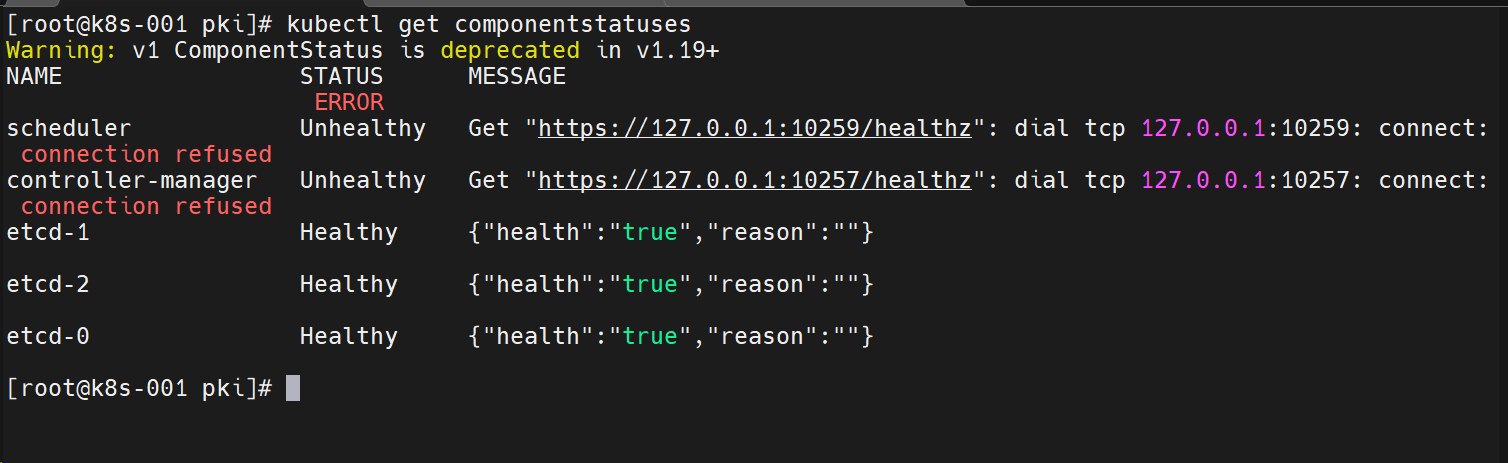

- Check the component status

kubectl get componentstatuses

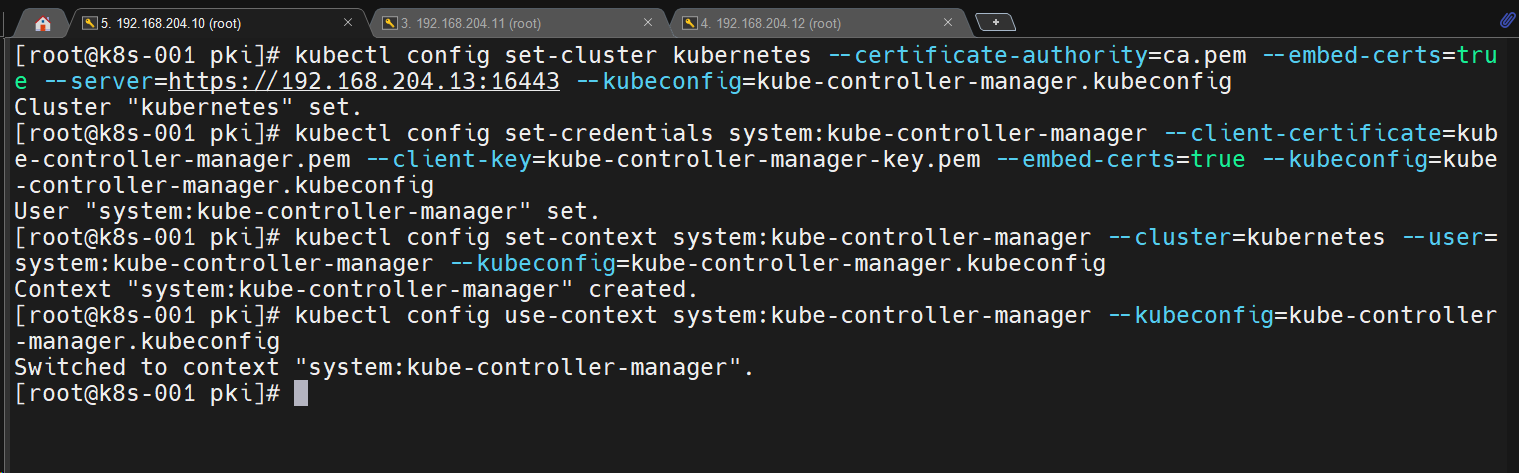

1.3.14 Install kube-controller-manager

- Configure the kubeconfig

### Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig=kube-controller-manager.kubeconfig

### Set the client credentials

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

### Set the context parameters

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

### Set the current context

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
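The same four `kubectl config` steps are repeated for every component kubeconfig in this article. A hedged sketch of a wrapper follows; the function name `make_kubeconfig` and the `KUBECTL` override variable are assumptions made for illustration. The helper uses the user name as the context name, which matches the controller-manager and scheduler sections but not the admin kubeconfig, whose context is named `kubernetes`.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the
# commands without running them. This variable is an illustrative assumption.
KUBECTL="${KUBECTL:-kubectl}"

# make_kubeconfig USER CERT KEY OUTFILE   (hypothetical helper)
# Runs the four `kubectl config` steps against the VIP endpoint.
# Expects ca.pem, CERT and KEY in the current directory.
make_kubeconfig() {
    local user="$1" cert="$2" key="$3" out="$4"
    $KUBECTL config set-cluster kubernetes --certificate-authority=ca.pem \
        --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig="$out" &&
    $KUBECTL config set-credentials "$user" --client-certificate="$cert" \
        --client-key="$key" --embed-certs=true --kubeconfig="$out" &&
    $KUBECTL config set-context "$user" --cluster=kubernetes --user="$user" --kubeconfig="$out" &&
    $KUBECTL config use-context "$user" --kubeconfig="$out"
}

# Example (run inside /root/cfssl/pki):
#   make_kubeconfig system:kube-controller-manager \
#       kube-controller-manager.pem kube-controller-manager-key.pem \
#       kube-controller-manager.kubeconfig
```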

- Create the configuration file

### /etc/kubernetes/kube-controller-manager.conf, identical on all three nodes

KUBE_CONTROLLER_MANAGER_OPTS=" \

--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.165.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.166.0.0/16 \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--v=2"

- Create the systemd unit file

### /usr/lib/systemd/system/kube-controller-manager.service, identical on all three nodes

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

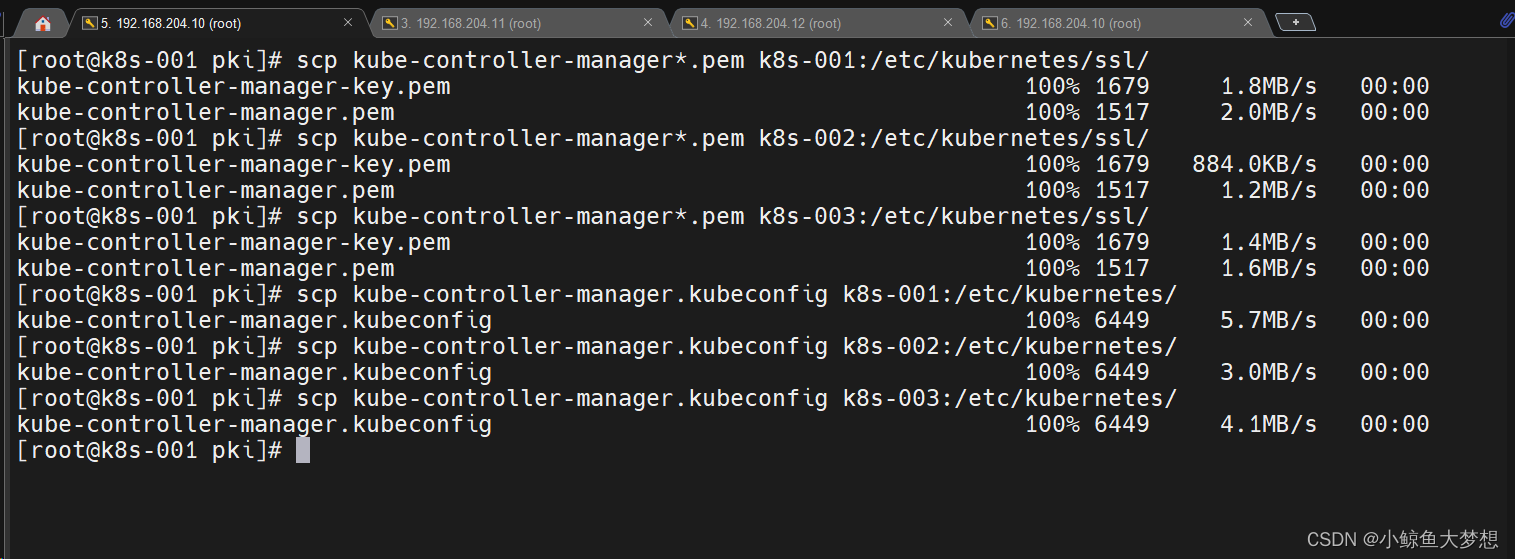

- Copy the certificates

### Run on k8s-001

cd /root/cfssl/pki

scp kube-controller-manager*.pem k8s-001:/etc/kubernetes/ssl/

scp kube-controller-manager*.pem k8s-002:/etc/kubernetes/ssl/

scp kube-controller-manager*.pem k8s-003:/etc/kubernetes/ssl/

scp kube-controller-manager.kubeconfig k8s-001:/etc/kubernetes/

scp kube-controller-manager.kubeconfig k8s-002:/etc/kubernetes/

scp kube-controller-manager.kubeconfig k8s-003:/etc/kubernetes/

- Start the service

### Run on all three nodes

systemctl daemon-reload

systemctl enable kube-controller-manager --now

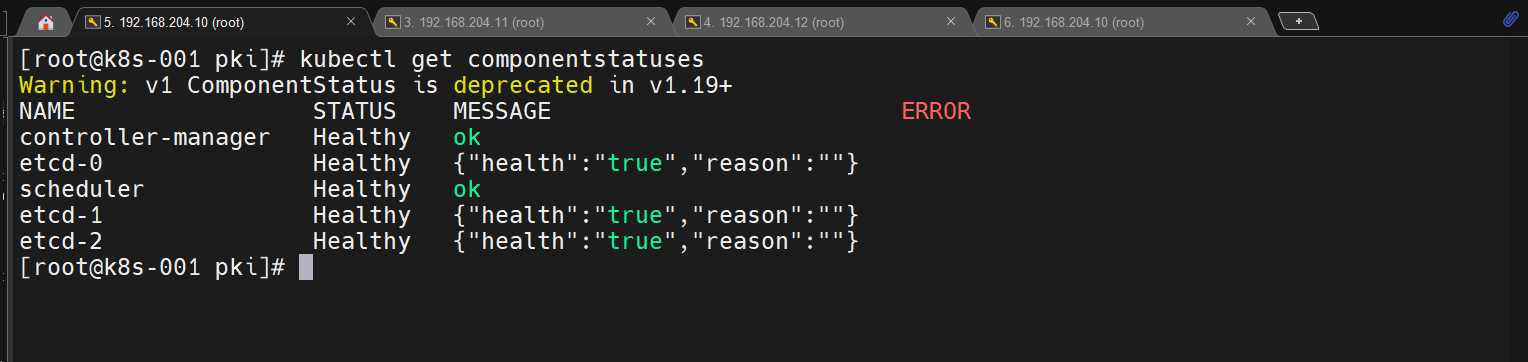

- Check the result

kubectl get componentstatuses

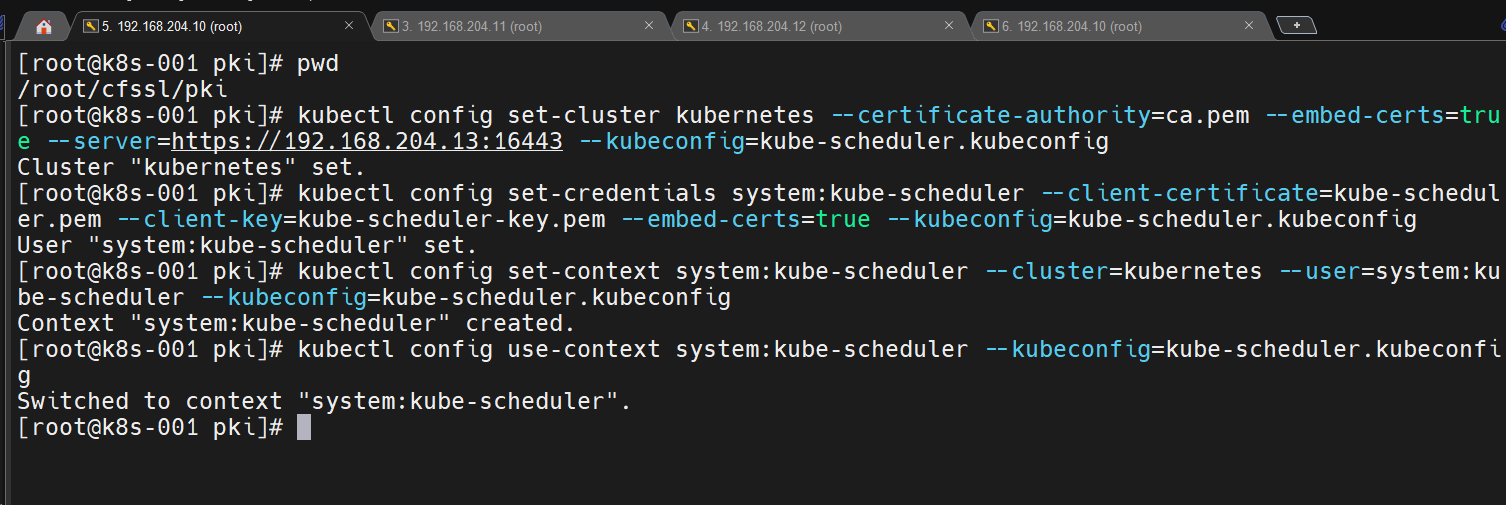

1.3.15 Install kube-scheduler

- Configure the kubeconfig

### Run on k8s-001

cd /root/cfssl/pki

### Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig=kube-scheduler.kubeconfig

### Set the client credentials

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

### Set the context parameters

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

### Set the current context

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

- Create the configuration file

### /etc/kubernetes/kube-scheduler.conf is required on every node; the file is identical everywhere

KUBE_SCHEDULER_OPTS="--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

- Create the systemd unit file

### /usr/lib/systemd/system/kube-scheduler.service is required on every node; the file is identical everywhere

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

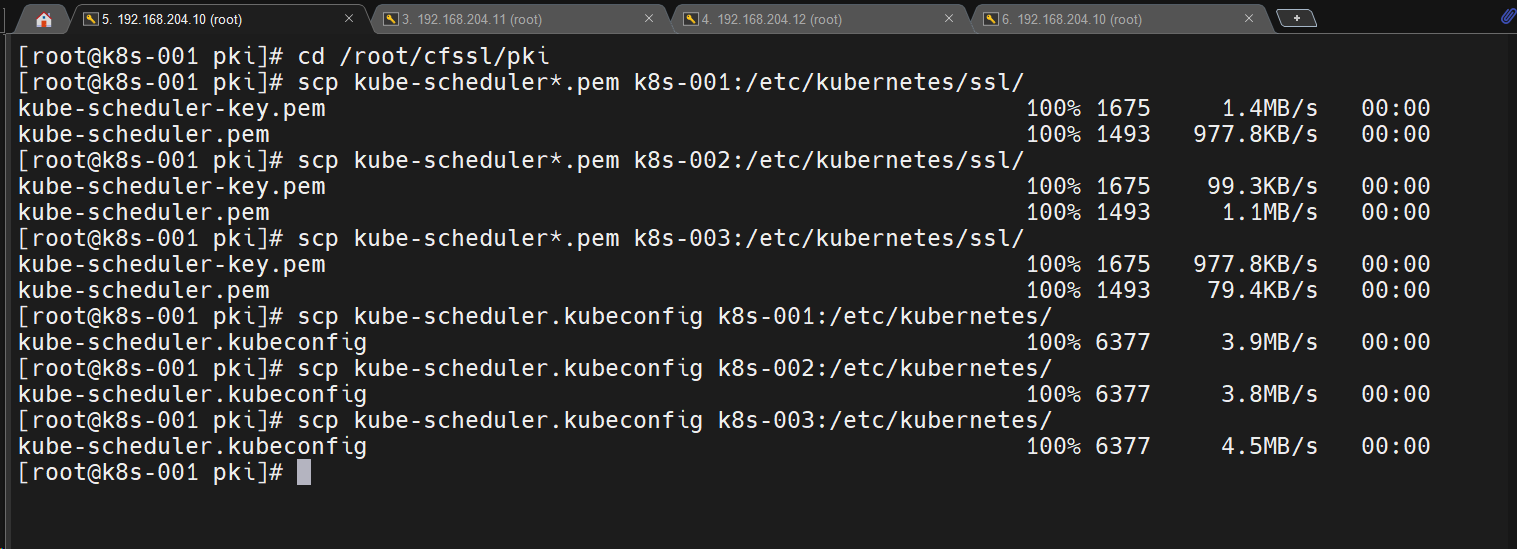

- Copy the files

### Run on k8s-001

cd /root/cfssl/pki

scp kube-scheduler*.pem k8s-001:/etc/kubernetes/ssl/

scp kube-scheduler*.pem k8s-002:/etc/kubernetes/ssl/

scp kube-scheduler*.pem k8s-003:/etc/kubernetes/ssl/

scp kube-scheduler.kubeconfig k8s-001:/etc/kubernetes/

scp kube-scheduler.kubeconfig k8s-002:/etc/kubernetes/

scp kube-scheduler.kubeconfig k8s-003:/etc/kubernetes/

- Start the service

### Run on every node

systemctl daemon-reload

systemctl enable kube-scheduler --now

- Verify (same check as for kube-controller-manager)

kubectl get componentstatuses
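`kubectl get componentstatuses` still answers on 1.25, but the API has been deprecated since v1.19. As a complementary check, each component can be probed directly on its node. The sketch below assumes the default secure ports (10257 for kube-controller-manager, 10259 for kube-scheduler) and that both listen on 127.0.0.1, as the scheduler's `--bind-address=127.0.0.1` does; the function names are illustrative.

```shell
#!/bin/sh
# component_healthz_url NAME -> prints the local health URL of a control-plane component.
# The ports are the upstream defaults; adjust them if --secure-port was changed.
component_healthz_url() {
    case "$1" in
        kube-controller-manager) echo "https://127.0.0.1:10257/healthz" ;;
        kube-scheduler)          echo "https://127.0.0.1:10259/healthz" ;;
        *) echo "unknown component: $1" >&2; return 1 ;;
    esac
}

# check_component NAME -> succeeds when the systemd unit is active and /healthz answers "ok".
check_component() {
    url=$(component_healthz_url "$1") || return 1
    systemctl is-active --quiet "$1" || return 1
    [ "$(curl -sk --max-time 3 "$url")" = "ok" ]
}
```

Running `check_component kube-scheduler && echo healthy` on each of the three nodes covers the standby instances as well, which the cluster-wide view does not show individually.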

1.3.16 Install kubelet

- Create kubelet-bootstrap.kubeconfig

cd /root/cfssl/pki

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
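The awk call above prints the first field of every line in token.csv, so a file with more than one entry would put several tokens into `BOOTSTRAP_TOKEN`. The static token file format is `token,user,uid,"group"`. A slightly more defensive sketch, with `read_bootstrap_token` as an illustrative helper name, reads only the first line and fails when nothing is found:

```shell
#!/bin/sh
# read_bootstrap_token FILE -> prints the token (first CSV field) of the first line.
# Returns non-zero when the file is missing or empty.
read_bootstrap_token() {
    token=$(awk -F ',' 'NR == 1 { print $1 }' "$1" 2>/dev/null)
    if [ -z "$token" ]; then
        echo "no token found in $1" >&2
        return 1
    fi
    printf '%s\n' "$token"
}
```

It would be used as `BOOTSTRAP_TOKEN=$(read_bootstrap_token /etc/kubernetes/token.csv) || exit 1` before the `kubectl config set-credentials` step.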

- Create the configuration file

### /etc/kubernetes/kubelet.json on k8s-001

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.204.10",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.165.0.2"]

}

### /etc/kubernetes/kubelet.json on k8s-002

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.204.11",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.165.0.2"]

}

### /etc/kubernetes/kubelet.json on k8s-003

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.204.12",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.165.0.2"]

}
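The three kubelet.json files differ only in the `address` field. Rather than editing three copies by hand, the file can be rendered from one template. The sketch below reproduces the configuration shown above; `render_kubelet_json` is a name made up for this example.

```shell
#!/bin/sh
# render_kubelet_json NODE_IP -> prints the KubeletConfiguration from this section
# with "address" set to NODE_IP. All other values are copied from the files above.
render_kubelet_json() {
    cat <<EOF
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": { "clientCAFile": "/etc/kubernetes/ssl/ca.pem" },
    "webhook": { "enabled": true, "cacheTTL": "2m0s" },
    "anonymous": { "enabled": false }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": { "cacheAuthorizedTTL": "5m0s", "cacheUnauthorizedTTL": "30s" }
  },
  "address": "$1",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "featureGates": { "RotateKubeletServerCertificate": true },
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.165.0.2"]
}
EOF
}
```

On k8s-002, for instance, `render_kubelet_json 192.168.204.11 > /etc/kubernetes/kubelet.json` produces the second file.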

- Create the systemd unit file

### Create this file on every node: /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet.json \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7 \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

- Copy the files

### Run on k8s-001

cd /root/cfssl/pki

scp kubelet-bootstrap.kubeconfig k8s-001:/etc/kubernetes/

scp kubelet-bootstrap.kubeconfig k8s-002:/etc/kubernetes/

scp kubelet-bootstrap.kubeconfig k8s-003:/etc/kubernetes/

scp ca.pem k8s-001:/etc/kubernetes/ssl

scp ca.pem k8s-002:/etc/kubernetes/ssl

scp ca.pem k8s-003:/etc/kubernetes/ssl

- Start the service

### Run on every node

mkdir -p /var/lib/kubelet

systemctl daemon-reload

systemctl enable kubelet --now

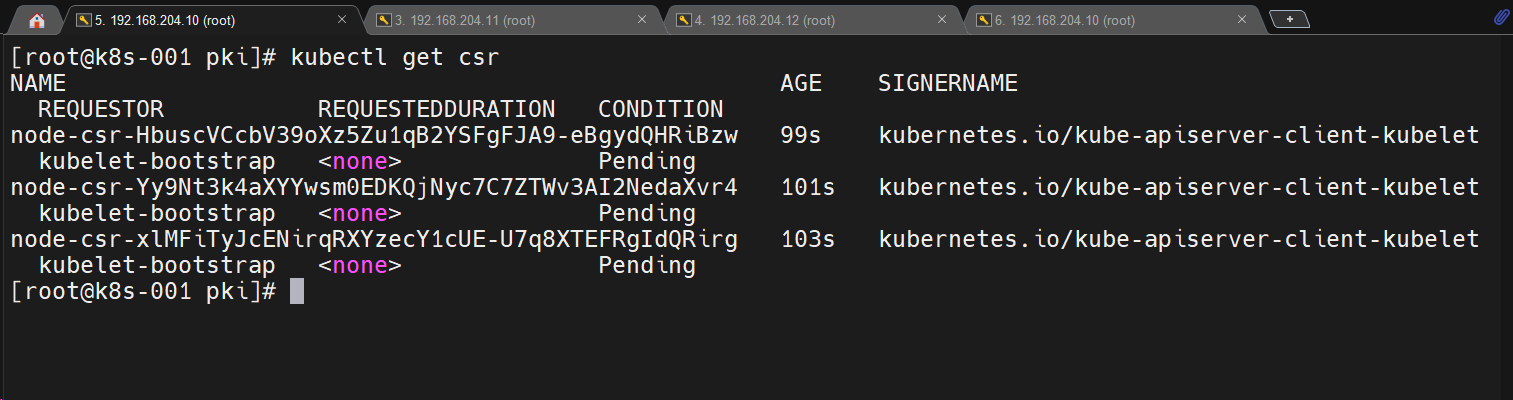

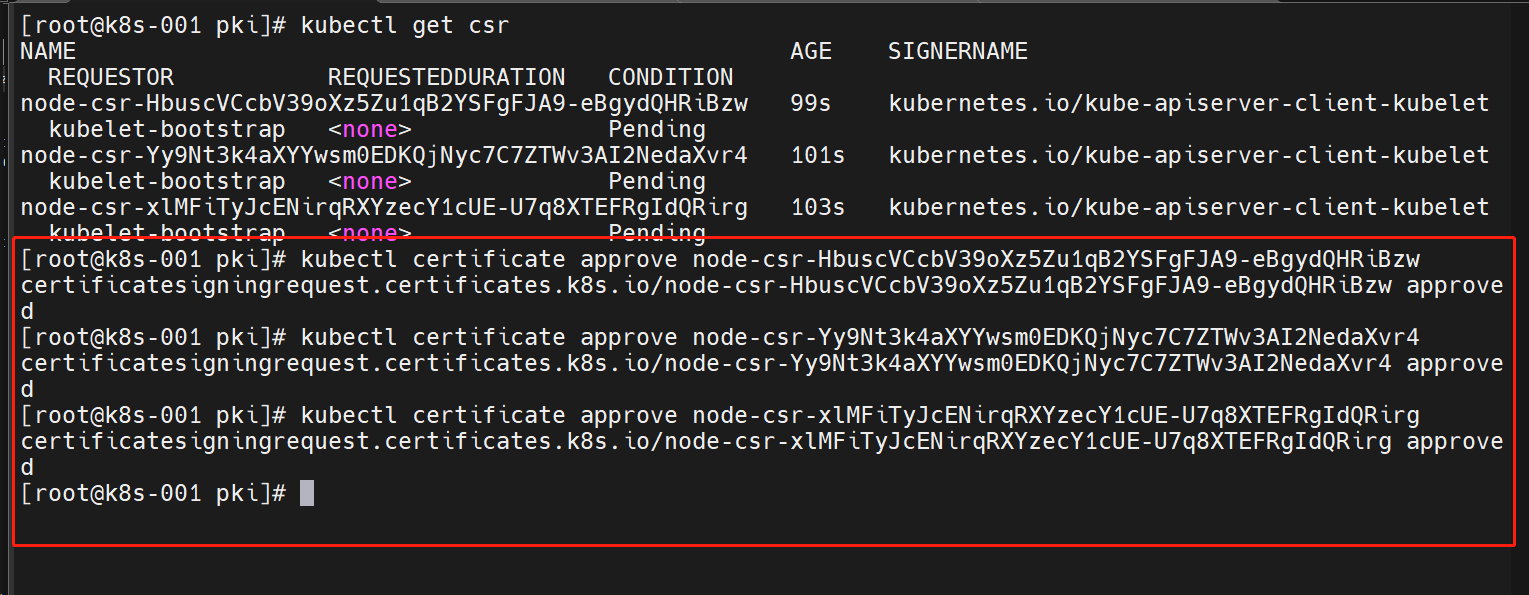

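- Approve the certificate requests

### Run on k8s-001

### List the CSRs

kubectl get csr

### Approve a request (replace xxx with a CSR name from the listing)

kubectl certificate approve xxx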
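With three kubelets bootstrapping at once there are three requests to approve. The sketch below picks out the pending ones from the `kubectl get csr` listing and approves them in one call. It assumes the CONDITION column is the last one, as in the default table output; the function names are illustrative. On a shared cluster the list should be reviewed before approving.

```shell
#!/bin/sh
# pending_csr_names -> reads `kubectl get csr --no-headers` output on stdin and
# prints the names of requests whose CONDITION (last column) is exactly "Pending".
pending_csr_names() {
    awk '$NF == "Pending" { print $1 }'
}

# approve_pending_csrs -> approves every pending CSR found in the cluster.
approve_pending_csrs() {
    names=$(kubectl get csr --no-headers | pending_csr_names)
    if [ -z "$names" ]; then
        echo "no pending CSR"
        return 0
    fi
    # $names is intentionally unquoted: one argument per CSR name.
    kubectl certificate approve $names
}
```

After `approve_pending_csrs`, `kubectl get csr` should show the requests as Approved,Issued and the nodes appear in `kubectl get nodes`.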

1.3.17 Install kube-proxy

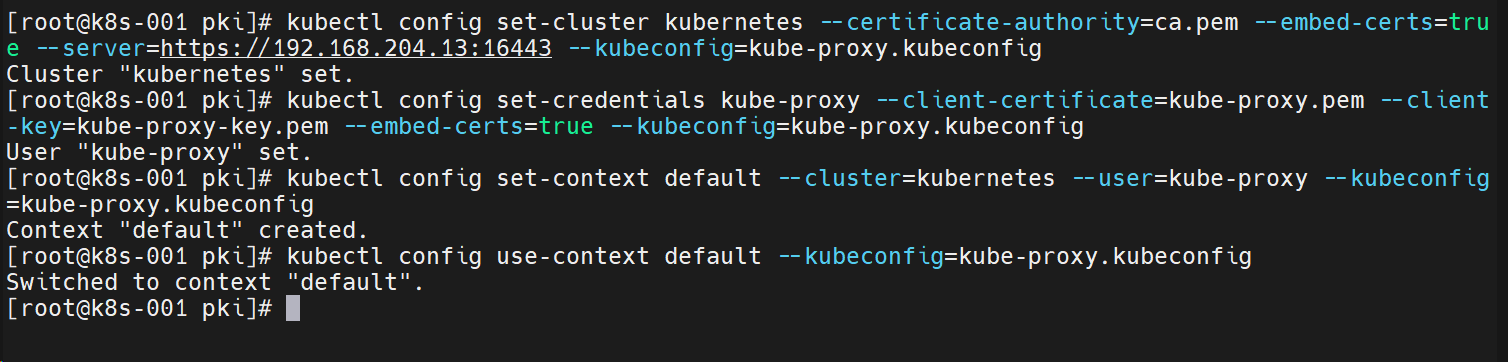

- Configure the kubeconfig

cd /root/cfssl/pki

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.204.13:16443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

- Create the configuration file

### /etc/kubernetes/kube-proxy.yaml on k8s-001

### Note: clusterCIDR is meant to hold the Pod network range, so check this value against the plan in 1.1.4

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.204.10
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 192.168.204.0/24
healthzBindAddress: 192.168.204.10:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.204.10:10249
mode: "ipvs"

### /etc/kubernetes/kube-proxy.yaml on k8s-002

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.204.11
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 192.168.204.0/24
healthzBindAddress: 192.168.204.11:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.204.11:10249
mode: "ipvs"

### /etc/kubernetes/kube-proxy.yaml on k8s-003

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 192.168.204.12
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 192.168.204.0/24
healthzBindAddress: 192.168.204.12:10256
kind: KubeProxyConfiguration
metricsBindAddress: 192.168.204.12:10249
mode: "ipvs"
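`mode: "ipvs"` only takes effect when the IPVS kernel modules are loaded (section 1.2.15); otherwise kube-proxy cannot run in that mode. A quick pre-check could look like the sketch below. The module list is the set kube-proxy is commonly documented to need, with `nf_conntrack` assumed because the kernel was upgraded in 1.2.13 (older 3.10 kernels use `nf_conntrack_ipv4` instead). Modules compiled into the kernel do not appear in `lsmod`, so a reported miss is a hint, not proof.

```shell
#!/bin/sh
# missing_ipvs_modules -> reads `lsmod` output on stdin (header line included)
# and prints each module from the required list that is not loaded.
missing_ipvs_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}
```

Run it as `lsmod | missing_ipvs_modules` on each node; empty output means every module was found. Once kube-proxy is running, `curl http://192.168.204.10:10249/proxyMode` on k8s-001 reports the mode actually in use.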

- Create the systemd unit file

### Identical on all three nodes: /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

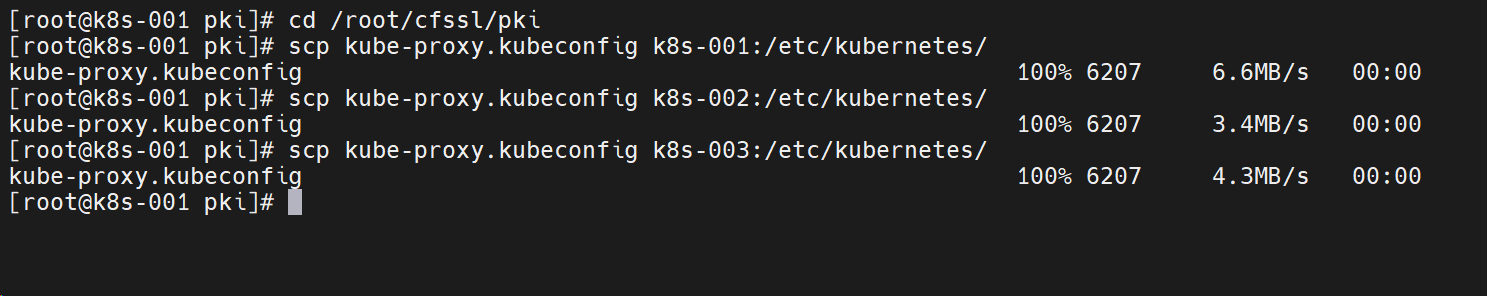

- Copy the files

### Run on k8s-001

cd /root/cfssl/pki

scp kube-proxy.kubeconfig k8s-001:/etc/kubernetes/

scp kube-proxy.kubeconfig k8s-002:/etc/kubernetes/

scp kube-proxy.kubeconfig k8s-003:/etc/kubernetes/

- Start the service

### Run on every node

mkdir -p /var/lib/kube-proxy

systemctl daemon-reload

systemctl enable kube-proxy --now

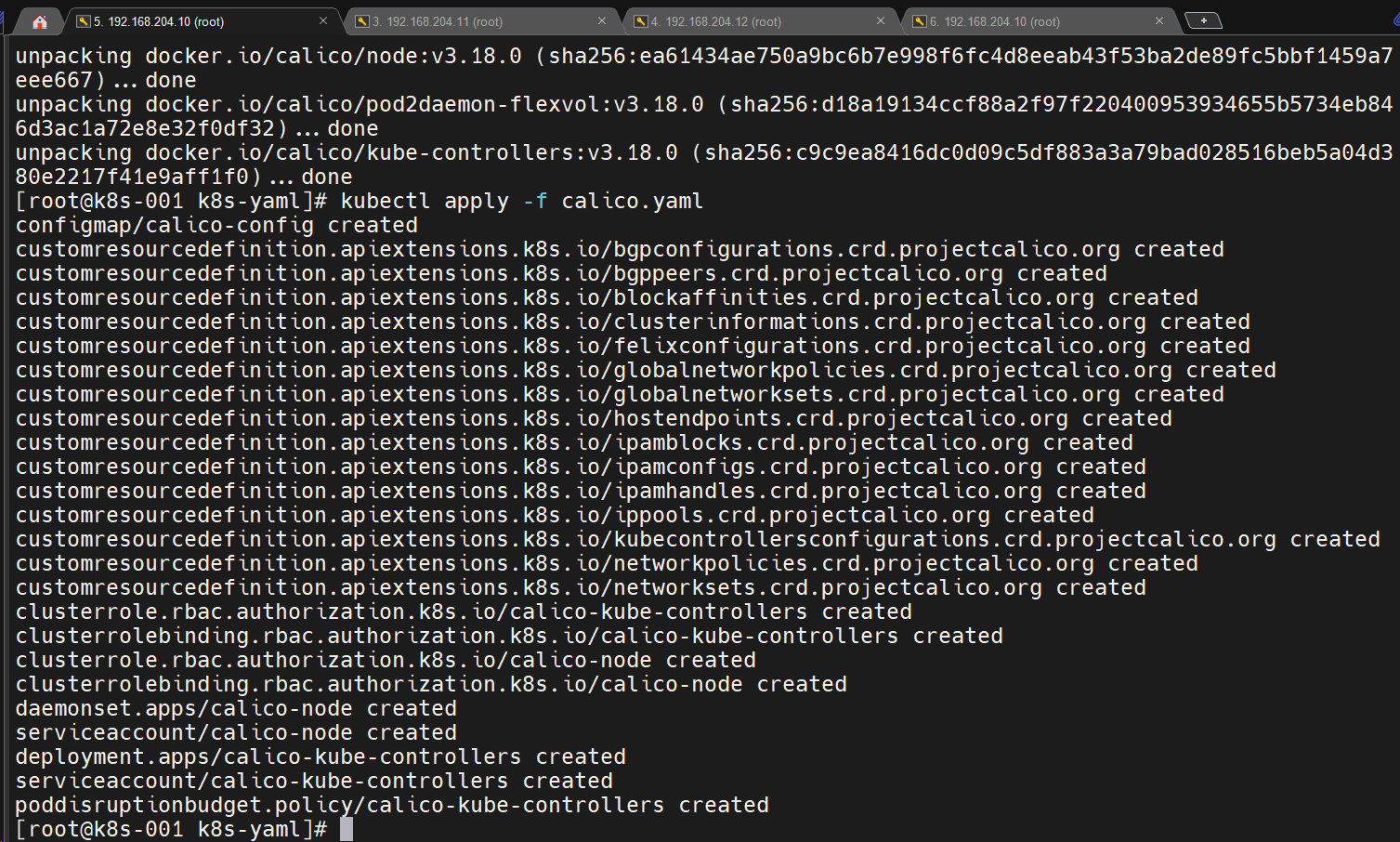

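1.3.18 Install the calico network plugin

### Run on k8s-001 only

mkdir -p /opt/k8s-yaml

cd /opt/k8s-yaml

### The required files were prepared in advance: the image archive calico.tar.gz and calico.yaml

### Copy the image archive to the other nodes

scp calico.tar.gz k8s-002:/root

scp calico.tar.gz k8s-003:/root

### Import the images on every node, from the directory that holds the archive

ctr -n=k8s.io images import calico.tar.gz

### Apply the manifest from k8s-001

kubectl apply -f calico.yaml

Check the cluster state again

kubectl get nodes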

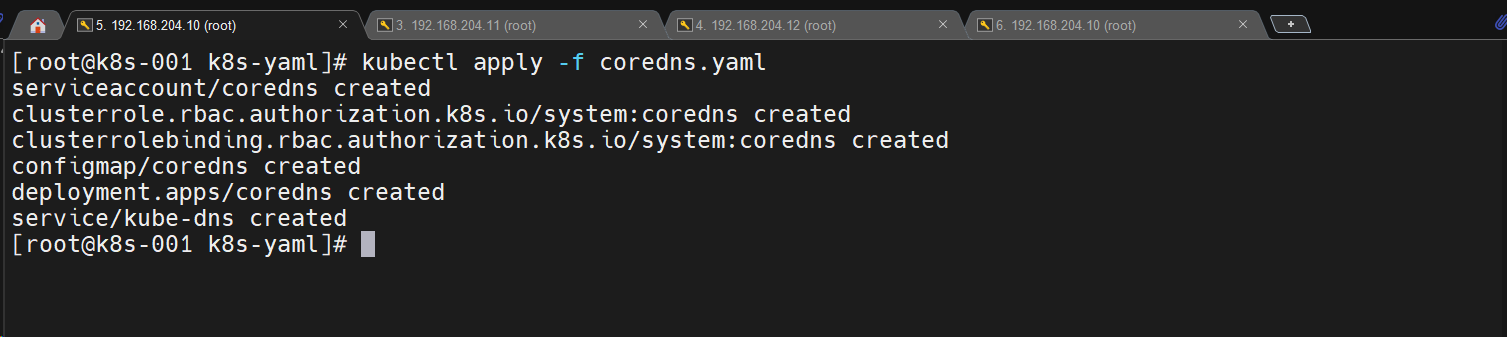
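The nodes stay NotReady until calico is up on each of them, so it can take a little while before the listing looks right. A small filter like the one below makes the remaining problem nodes obvious. It assumes the default column layout of `kubectl get nodes` (NAME, STATUS, ...); the function name is illustrative.

```shell
#!/bin/sh
# not_ready_nodes -> reads `kubectl get nodes --no-headers` output on stdin and
# prints the names of nodes whose STATUS does not start with "Ready".
# "Ready,SchedulingDisabled" counts as ready; "NotReady" does not.
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}
```

`kubectl get nodes --no-headers | not_ready_nodes` prints nothing once all three nodes are ready. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until that point instead of polling by hand.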

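1.3.19 Install coredns

### Run on k8s-001 only

cd /opt/k8s-yaml

### coredns.yaml was prepared in advance

### Remember to set the Service address in it to clusterIP: 10.165.0.2, the same value as clusterDNS in kubelet.json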

kubectl apply -f coredns.yaml
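Pods get their DNS server from the kubelet's `clusterDNS` setting, so the Service address in coredns.yaml and the value in kubelet.json must agree. The sketch below compares the two. It assumes the Service is named `kube-dns` in `kube-system` (the name queried later in 1.5.5); the function names are made up for this example.

```shell
#!/bin/sh
# cluster_dns_from_kubelet_json FILE -> prints the first clusterDNS address in FILE.
cluster_dns_from_kubelet_json() {
    sed -n 's/.*"clusterDNS"[^"]*"\([0-9.]*\)".*/\1/p' "$1"
}

# check_dns_ip -> succeeds when the kube-dns Service IP equals the kubelet setting.
check_dns_ip() {
    want=$(cluster_dns_from_kubelet_json /etc/kubernetes/kubelet.json)
    have=$(kubectl -n kube-system get svc kube-dns -o jsonpath='{.spec.clusterIP}')
    if [ "$want" != "$have" ]; then
        echo "mismatch: kubelet=$want service=$have" >&2
        return 1
    fi
    echo "clusterDNS OK: $want"
}
```

A mismatch shows up later as name resolution failures inside pods, which is much harder to trace back than this one comparison.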

1.4 Add-on installation and tuning

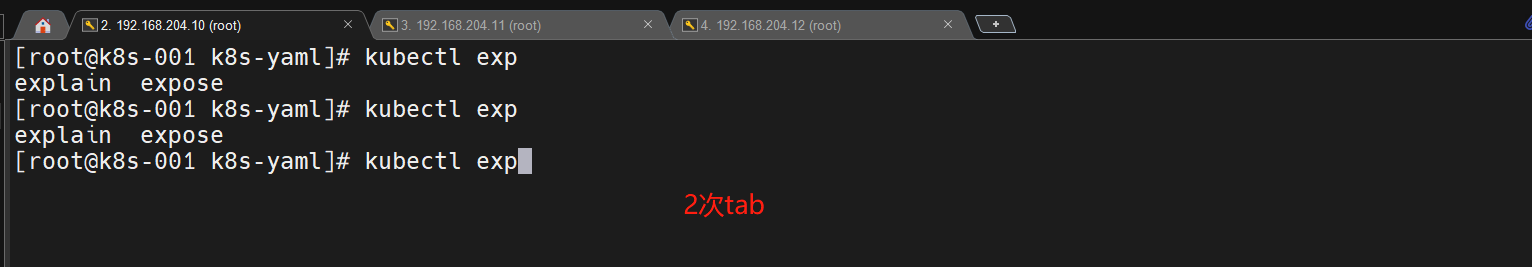

1.4.1 kubectl command completion

### Run on any node

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

bash

type _init_completion

kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null

source ~/.bashrc

bash

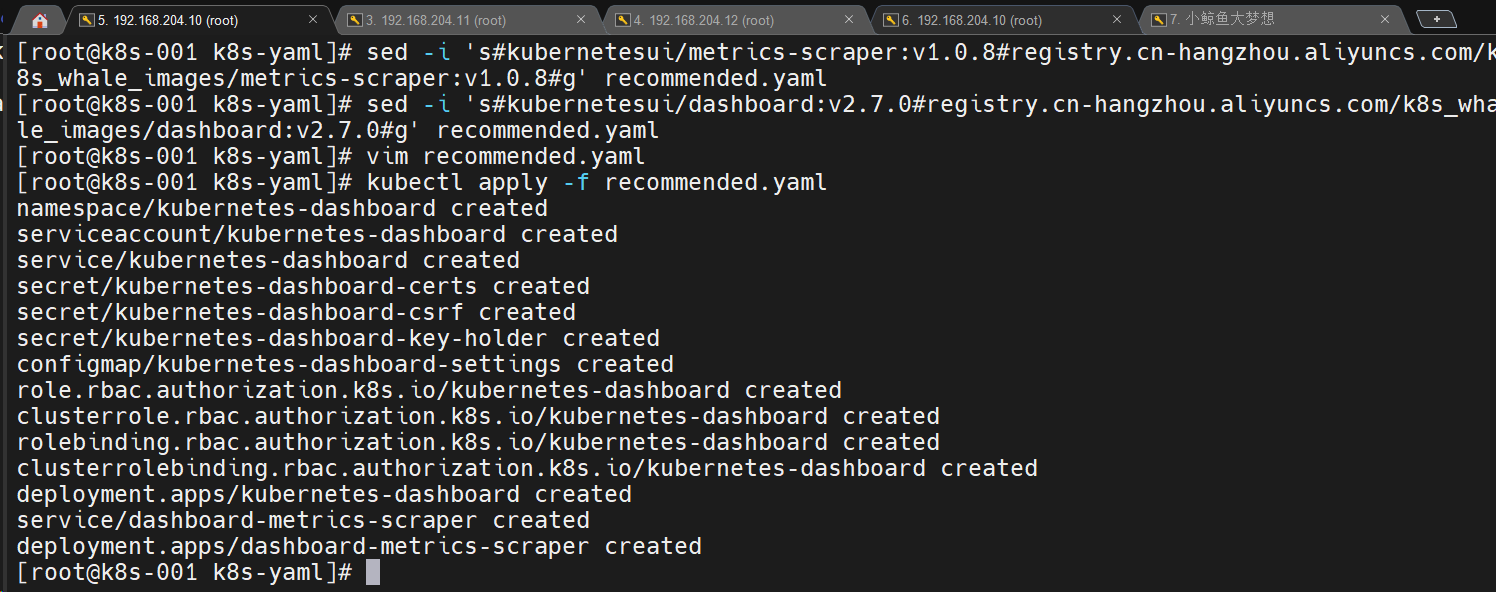

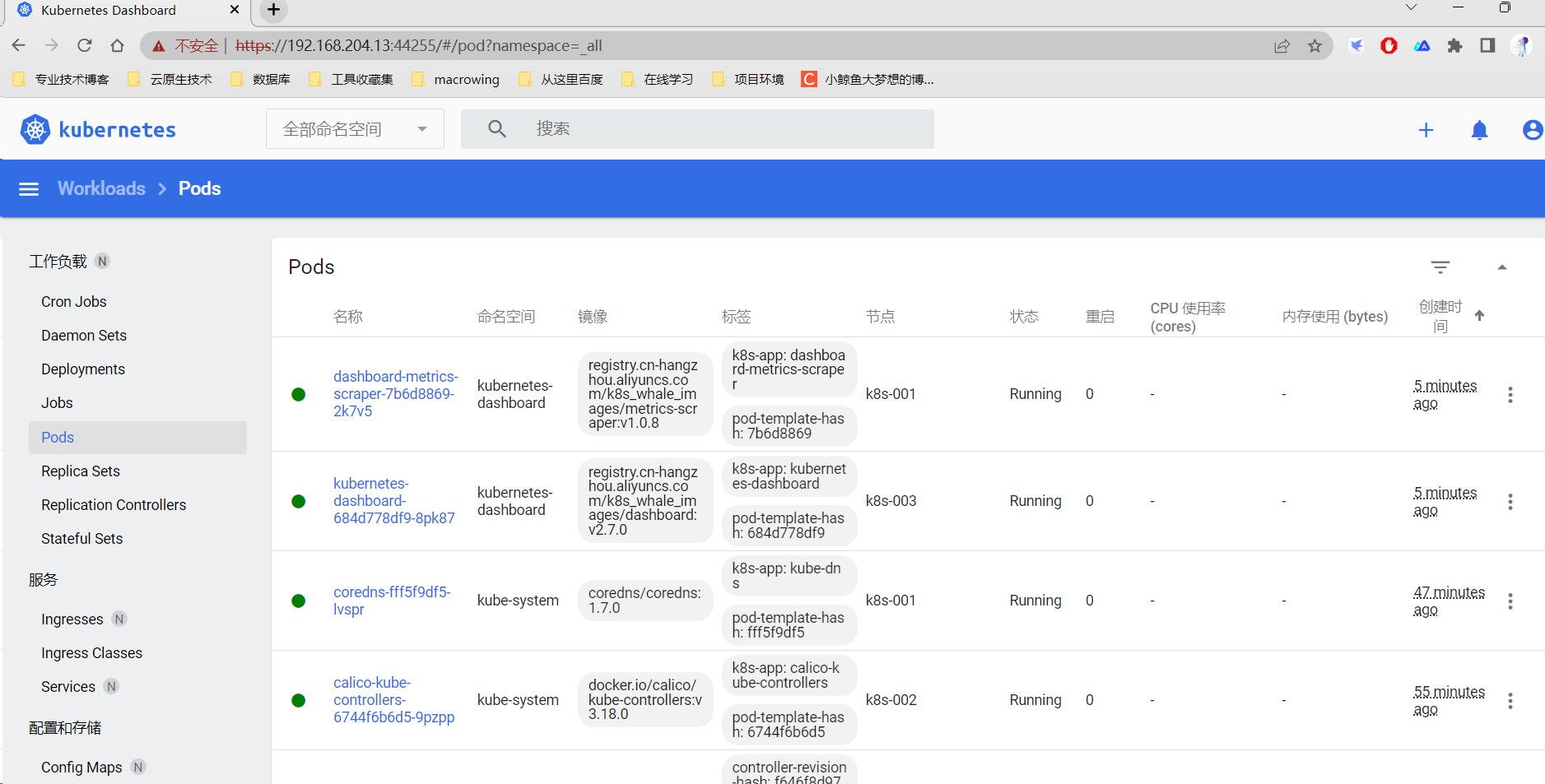

1.4.2 Install the Dashboard UI

Prepare the YAML file. It can also be downloaded directly: https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

- The images for this service are hard to pull, so I pushed copies to an Alibaba Cloud registry (a public repository) and pull them from there.

- Two images are needed:

  - kubernetesui/metrics-scraper:v1.0.8

  - kubernetesui/dashboard:v2.7.0

- I replaced them with the Alibaba Cloud copies:

  - registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/dashboard:v2.7.0

  - registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/metrics-scraper:v1.0.8

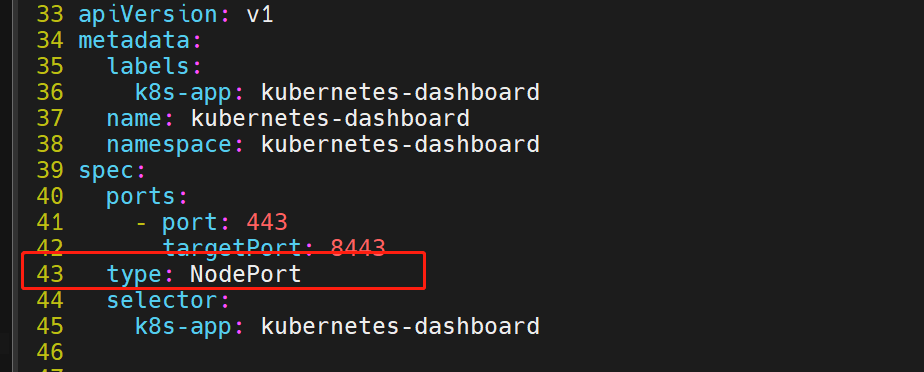

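# The way the Service is exposed also has to change so that the Dashboard can be reached from outside the cluster

# Set the Service type in the manifest to NodePort

# At line 342 of the file

type: NodePort

# Then swap the images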

sed -i 's#kubernetesui/metrics-scraper:v1.0.8#registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/metrics-scraper:v1.0.8#g' recommended.yaml

sed -i 's#kubernetesui/dashboard:v2.7.0#registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/dashboard:v2.7.0#g' recommended.yaml

Apply it

kubectl apply -f recommended.yaml

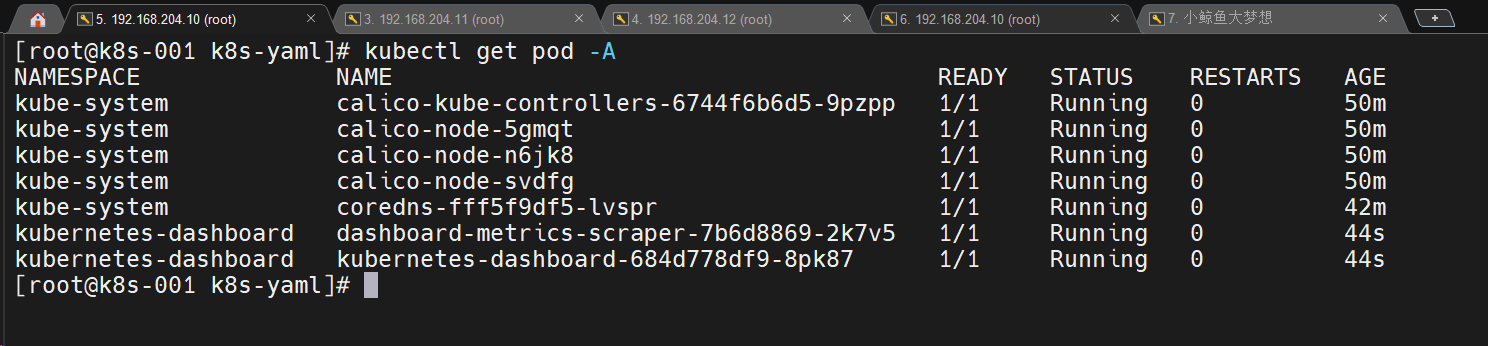

Check the status

kubectl get pod -A

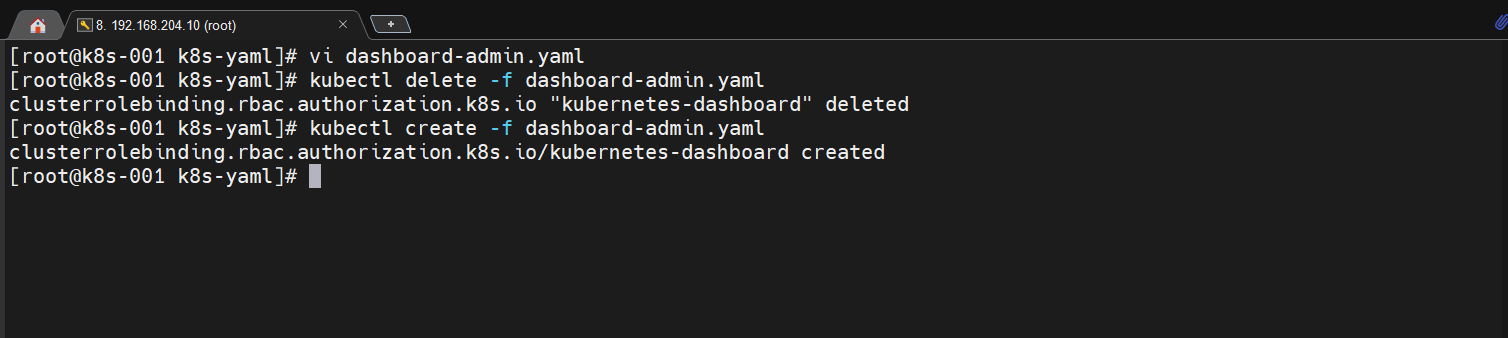

Configure access

### dashboard-admin.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

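Apply it. recommended.yaml already created a ClusterRoleBinding with this name, and roleRef cannot be changed in place, so delete the binding first and then recreate it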

kubectl delete -f dashboard-admin.yaml

kubectl create -f dashboard-admin.yaml

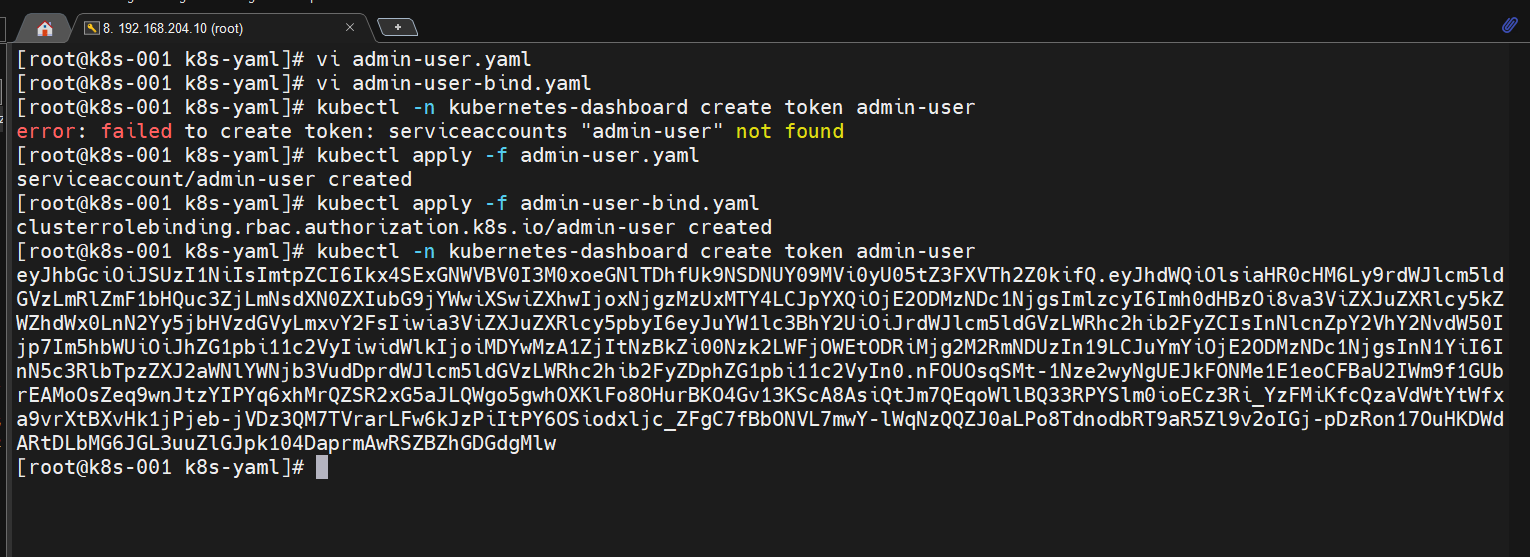

Generate a login token

This version behaves differently from earlier ones: since Kubernetes 1.24 a ServiceAccount no longer gets a long-lived token Secret automatically, so the login token has to be requested as follows

- Create the service account

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

- Bind the cluster role

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

- Generate the token

kubectl -n kubernetes-dashboard create token admin-user

Verify the login

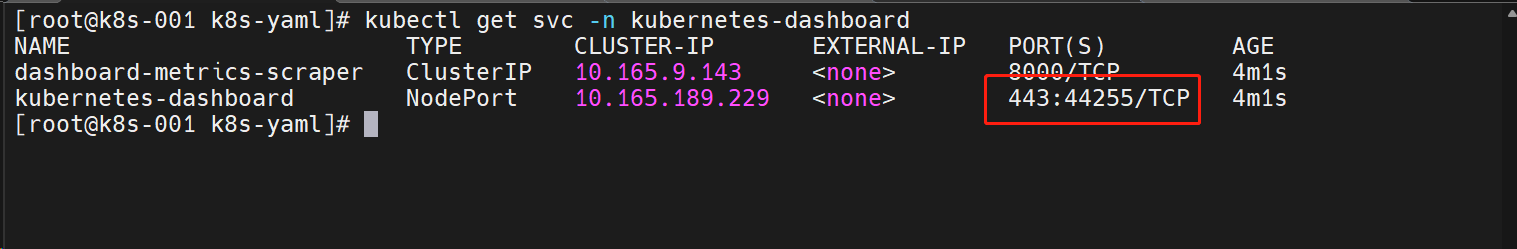

## Look up the access port

kubectl get svc -n kubernetes-dashboard

## 44255

Open it in a browser: https://192.168.204.13:44255/ (192.168.204.13 is the VIP; the IP of any node works as well)

Enter the token generated above to log in
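The NodePort is assigned when the Service is created, so 44255 is specific to this environment. Instead of reading it off the table, it can be queried with a jsonpath expression and turned into the URL directly. The sketch below assumes the Service keeps the name `kubernetes-dashboard` from recommended.yaml and has a single port; `dashboard_url` is an illustrative helper name.

```shell
#!/bin/sh
# dashboard_url HOST -> prints the HTTPS URL of the Dashboard NodePort on HOST.
# HOST can be the VIP or any node IP, since a NodePort is opened on every node.
dashboard_url() {
    port=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}')
    echo "https://$1:${port}/"
}
```

For example, `dashboard_url 192.168.204.10` prints the address to open for the first node. The token from `kubectl create token` is short-lived by default; `--duration=24h` can be added to that command to request a longer one, subject to the limits the apiserver enforces.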

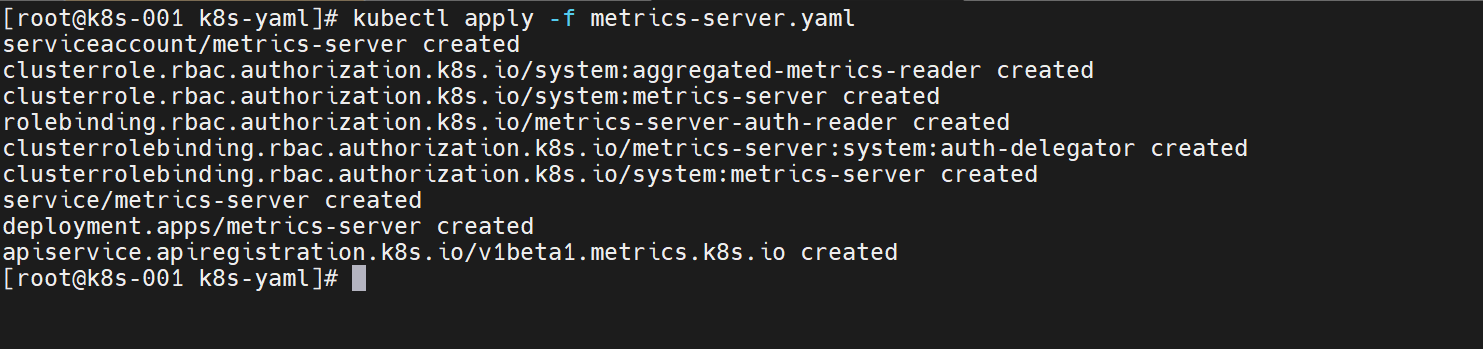

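1.4.3 Install metrics-server

metrics-server project page: https://github.com/kubernetes-sigs/metrics-server#readme

Metrics Server is a scalable, efficient source of container resource metrics for the autoscaling pipelines built into k8s.

Metrics Server collects resource metrics from the kubelets and exposes them through the Metrics API in the Kubernetes apiserver, for use by the Horizontal Pod Autoscaler and the Vertical Pod Autoscaler. kubectl top also reads the Metrics API, which makes it easier to debug autoscaling pipelines.

Prepare the YAML file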

##### metrics-server.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Deploy it

### Run on k8s-001 only

kubectl apply -f metrics-server.yaml

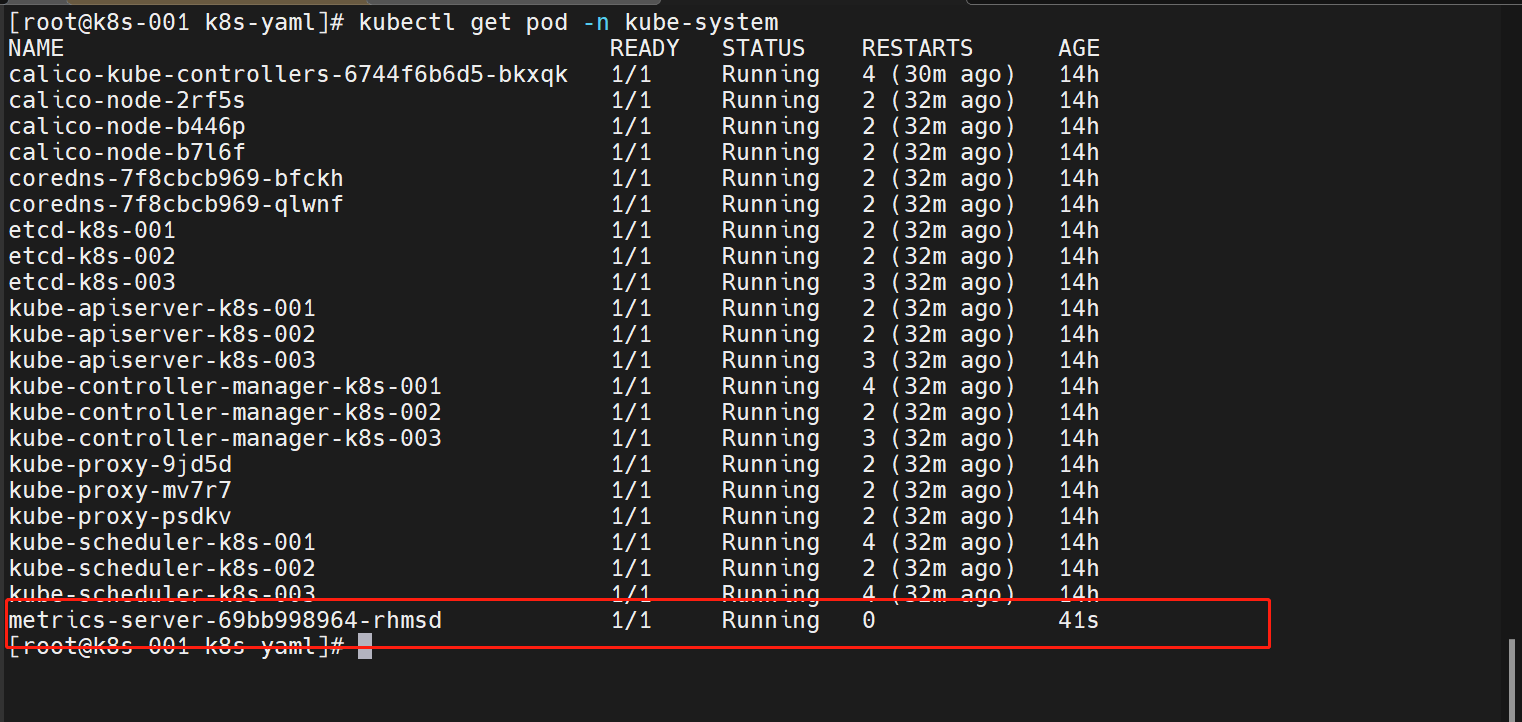

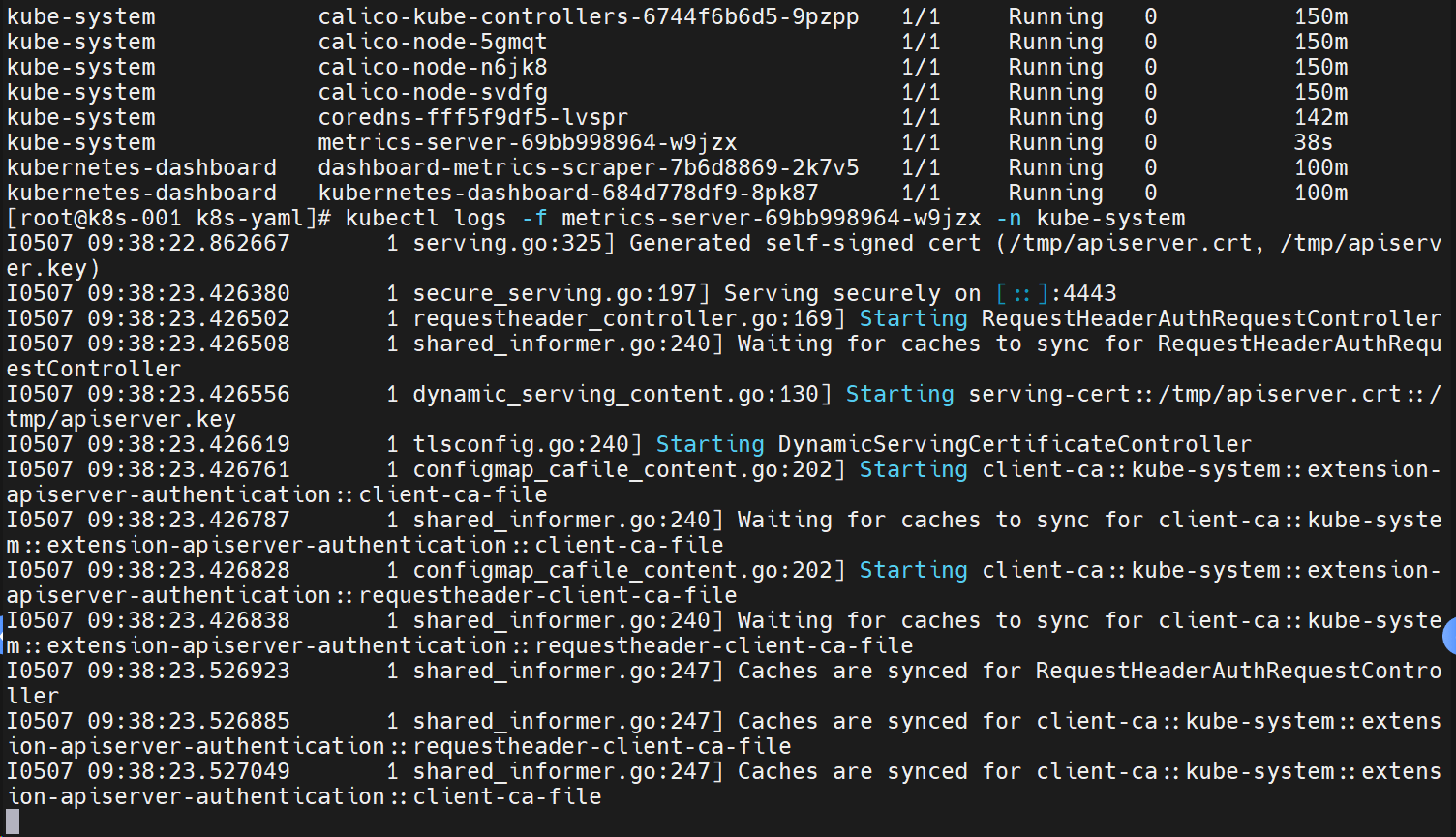

查看一下状态

kubectl get pod -n kube-system

还需要额外配置才能生效

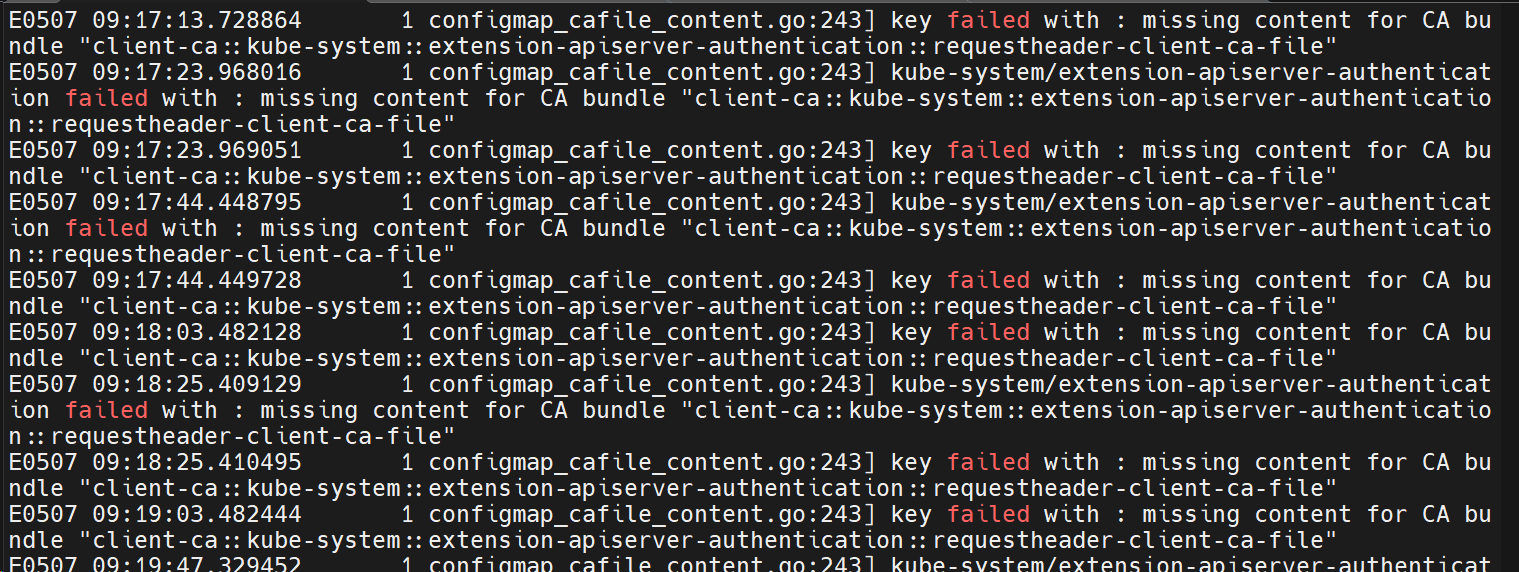

不然服务一直输出以下错误:

### k8s-001执行

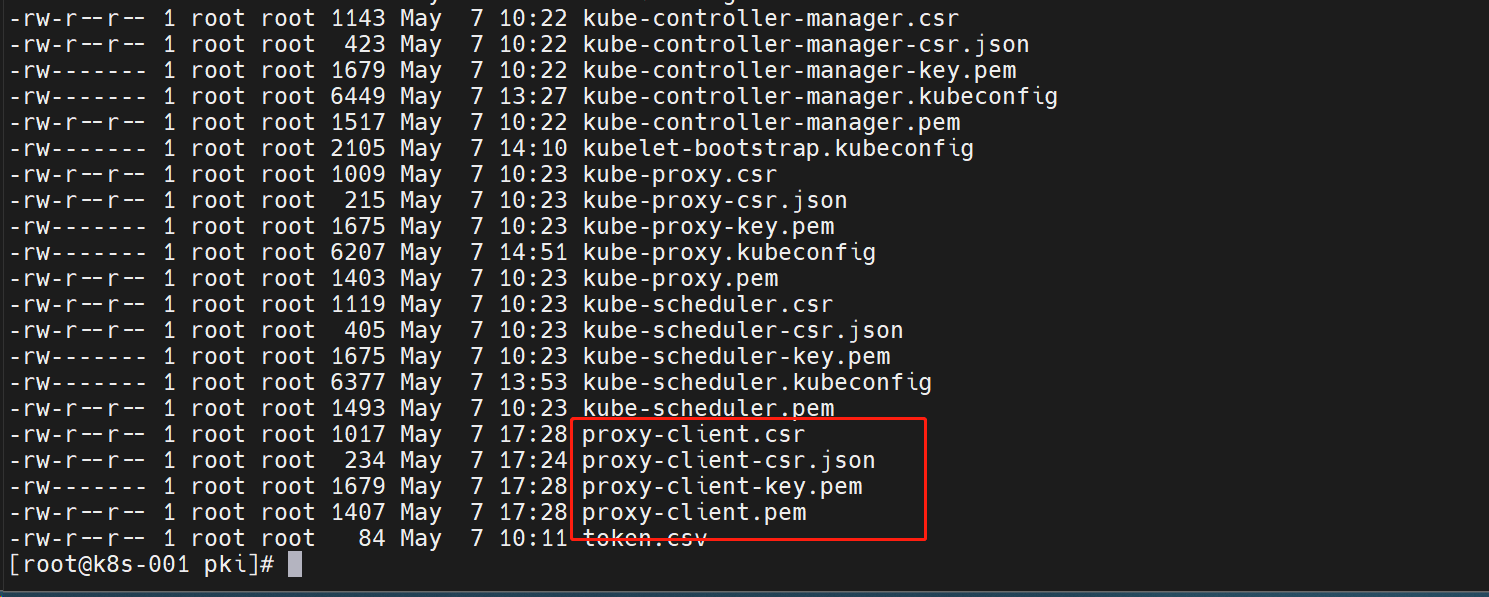

cd /root/cfssl/pki

### 生成proxy-client-csr.json内容

{

"CN": "aggregator",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Sichuan",

"L": "Chengdu",

"O": "system:masters",

"OU": "System"

}

]

}

### Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client

### Copy the certificates

scp proxy-client*.pem k8s-001:/etc/kubernetes/ssl/

scp proxy-client*.pem k8s-002:/etc/kubernetes/ssl/

scp proxy-client*.pem k8s-003:/etc/kubernetes/ssl/

Edit the kube-apiserver configuration file

### Add the following flags

--runtime-config=api/all=true \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--proxy-client-cert-file=/etc/kubernetes/ssl/proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/ssl/proxy-client-key.pem \
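The apiserver only proxies requests to metrics-server correctly when all of the aggregation flags are present on every node, and a single missing line is easy to overlook across three files. The sketch below lists the flags from the block above that a configuration file does not contain. `--runtime-config` is left out because it is unrelated to the request-header setup. The file path in the usage note is an assumption modelled on kube-scheduler.conf.

```shell
#!/bin/sh
# missing_aggregator_flags FILE -> prints every aggregation-layer flag that does
# not occur in FILE. Empty output means the file has all of them.
missing_aggregator_flags() {
    for flag in --requestheader-allowed-names --requestheader-group-headers \
                --requestheader-username-headers --requestheader-extra-headers-prefix \
                --requestheader-client-ca-file --proxy-client-cert-file \
                --proxy-client-key-file; do
        # -e keeps grep from treating the leading dashes as its own options.
        grep -q -e "${flag}=" "$1" || echo "$flag"
    done
}
```

Assuming the file is /etc/kubernetes/kube-apiserver.conf, `missing_aggregator_flags /etc/kubernetes/kube-apiserver.conf` can be run on each node before restarting the service.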

Restart to apply the change

### Run on each node in turn

systemctl daemon-reload && systemctl restart kube-apiserver

Redeploy metrics-server and check whether it works now
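Whether it "works" can be checked from the APIService object that metrics-server.yaml registers: its `Available` condition turns `True` once the apiserver can reach metrics-server through the aggregation layer. A minimal sketch, with `metrics_api_available` as an illustrative name:

```shell
#!/bin/sh
# metrics_api_available -> succeeds when the v1beta1.metrics.k8s.io APIService
# reports the condition Available=True.
metrics_api_available() {
    status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
        -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
    [ "$status" = "True" ]
}
```

Once `metrics_api_available && kubectl top nodes` prints CPU and memory figures for the three nodes, the Metrics API is serving data. It may take a minute after the restart before the first samples are collected.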

1.5 Cluster verification

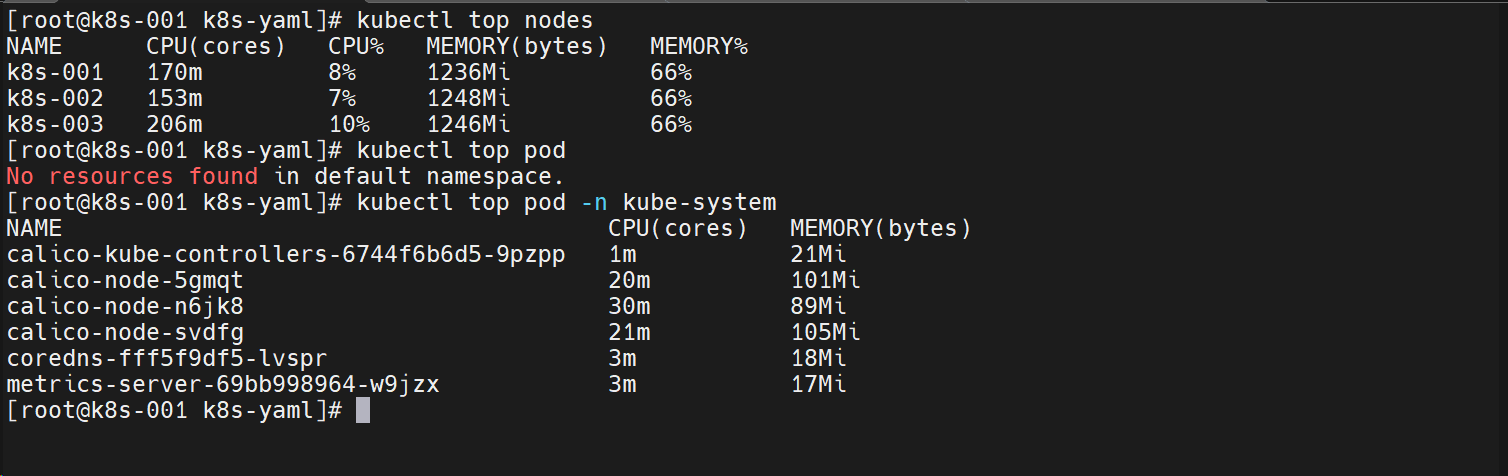

1.5.1 Node verification

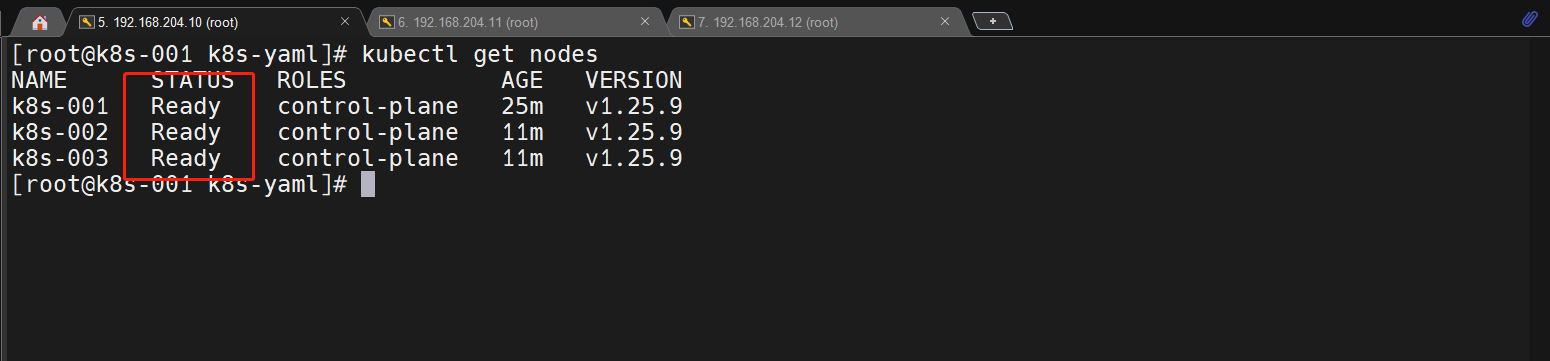

kubectl get nodes

- The nodes are in the Ready state

- The version matches what was installed

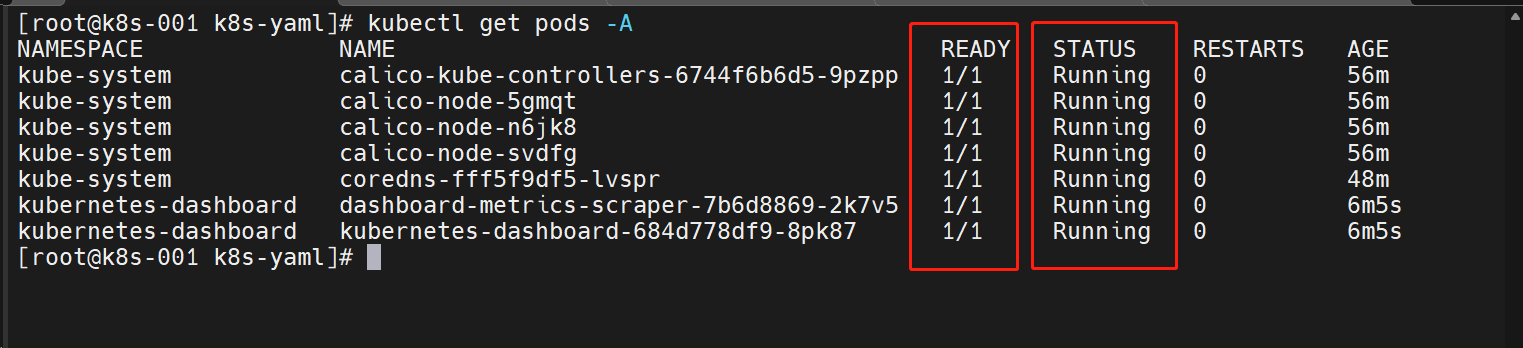

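1.5.2 Pod verification

kubectl get pods -A

- STATUS has to be Running

- READY should show 1/1 (the number before the slash equals the number after it)

- Do not worry much about the restart count; rebooting the virtual machines increases it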
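These two rules are easy to apply mechanically once the list grows. The sketch below filters the `kubectl get pods -A --no-headers` listing down to pods that break either rule. It assumes the default column order (NAMESPACE, NAME, READY, STATUS, ...) and treats Completed pods as fine, which is a choice made for this example.

```shell
#!/bin/sh
# unhealthy_pods -> reads `kubectl get pods -A --no-headers` output on stdin and
# prints pods that are not Running/Completed, or Running with READY x/y where x != y.
unhealthy_pods() {
    awk '{
        split($3, r, "/")
        if ($4 != "Running" && $4 != "Completed")
            print $1 "/" $2 " status=" $4
        else if ($4 == "Running" && r[1] != r[2])
            print $1 "/" $2 " ready=" $3
    }'
}
```

`kubectl get pods -A --no-headers | unhealthy_pods` prints nothing on a healthy cluster.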

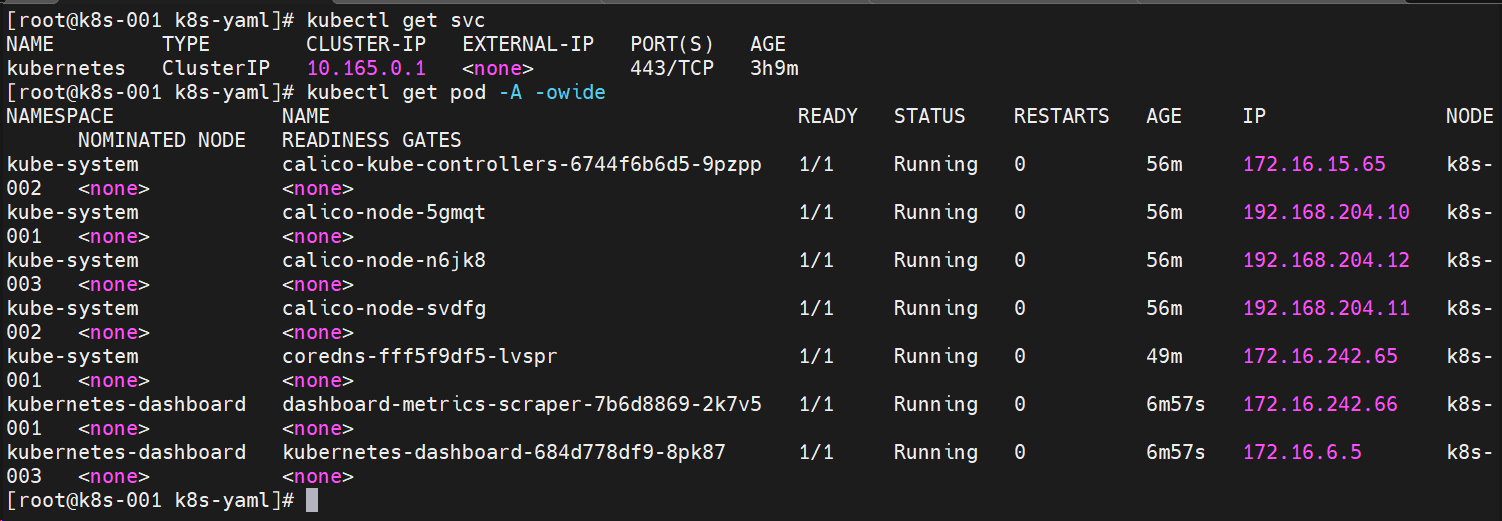

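1.5.3 k8s network range verification

kubectl get svc

kubectl get pod -A -owide

- Check that the address ranges match the plan and that nothing conflicts.

- Pods that show a host IP address do so because they use the host network mode.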
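Checking addresses against a range by eye gets tedious, so a small membership test can help. The sketch below is plain POSIX shell arithmetic. The ranges in the usage note (10.165.0.0/16 for Services, 10.166.0.0/16 for Pods) are assumptions inferred from the addresses that appear in this guide; the authoritative values are the ones planned in 1.1.4.

```shell
#!/bin/sh
# ip_to_int A.B.C.D -> prints the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP CIDR -> exit status 0 when IP lies inside CIDR (e.g. 10.165.0.0/16).
ip_in_cidr() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}
```

For instance, `ip_in_cidr 10.165.0.2 10.165.0.0/16 && echo "service IP in range"` confirms the DNS Service address, and the same test against the node network 192.168.204.0/24 should fail for every Service and non-hostNetwork Pod IP.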

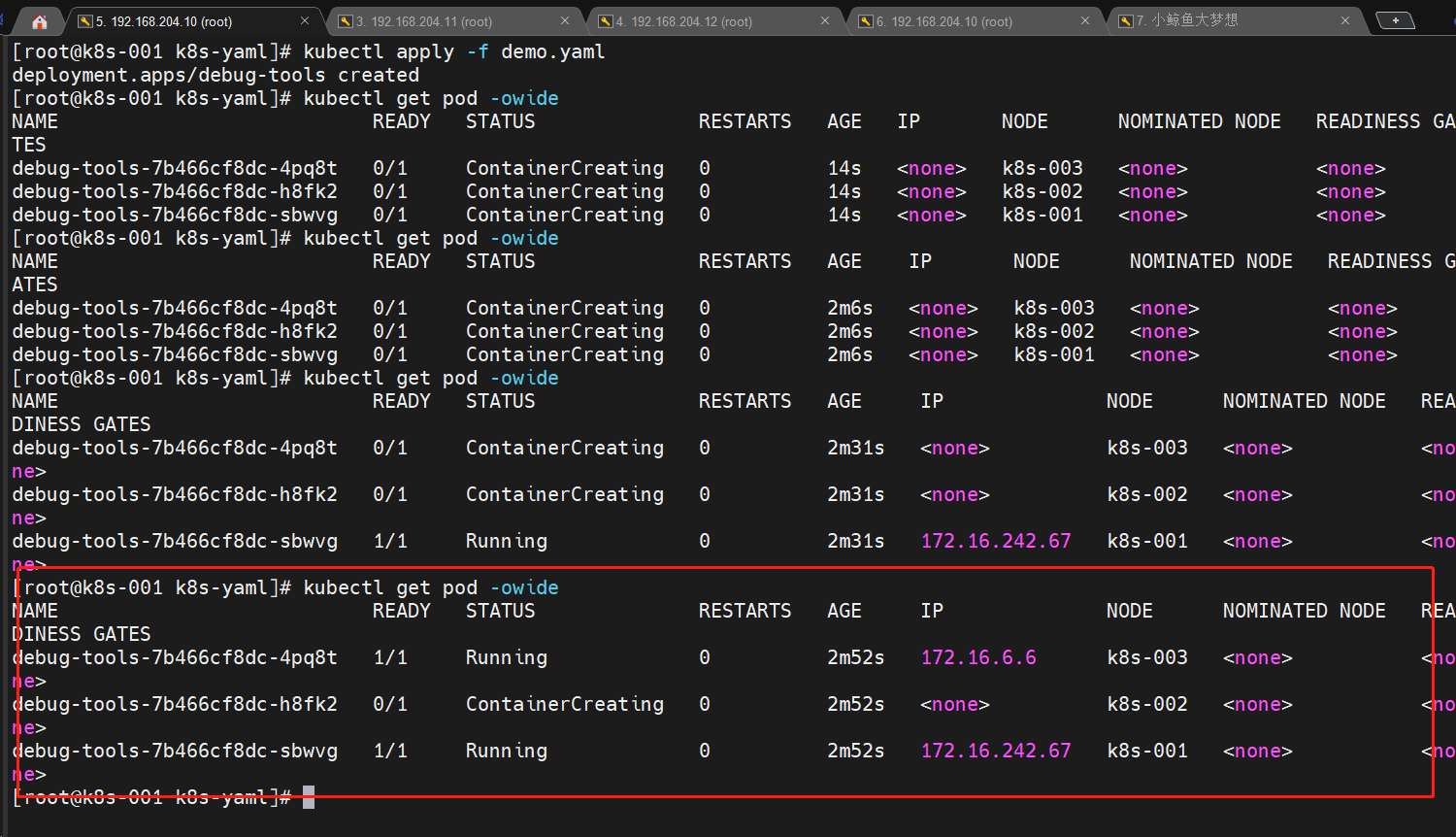

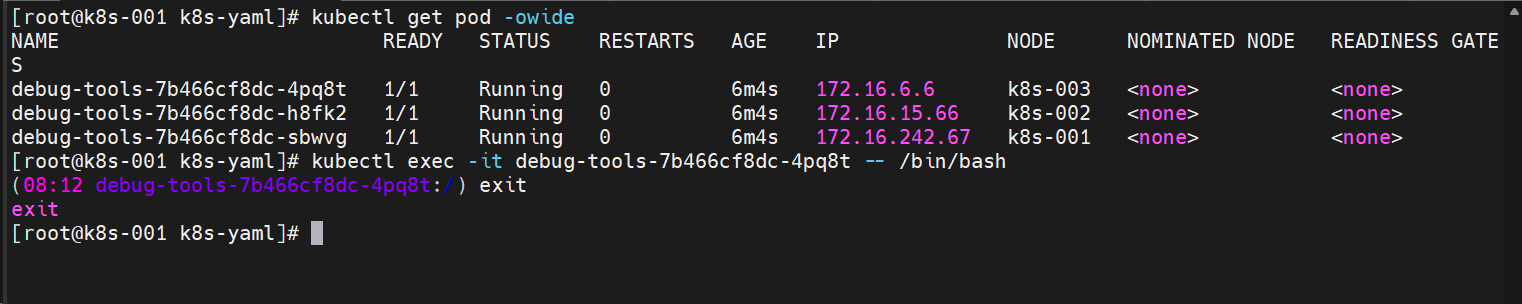

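1.5.4 Resource creation verification

### A debug-tools image hosted on a registry in China is used for this check and the following ones; prepare this YAML file (demo.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: debug-tools
  labels:
    app: debug-tools
spec:
  replicas: 3
  selector:
    matchLabels:
      app: debug-tools
  template:
    metadata:
      labels:
        app: debug-tools
    spec:
      containers:
      - name: debug-tools
        image: registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/debug-tools:latest
        command: ["/bin/sh","-c","sleep 3600"]

Apply it

kubectl apply -f demo.yaml

Check that it deployed correctly

- Replica count

- Pod status

- Inside the container

Check that deleting the resources works

kubectl delete -f demo.yaml

- Resources are limited here, so this is a bit slow; it is enough that resources can be created and deleted normally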

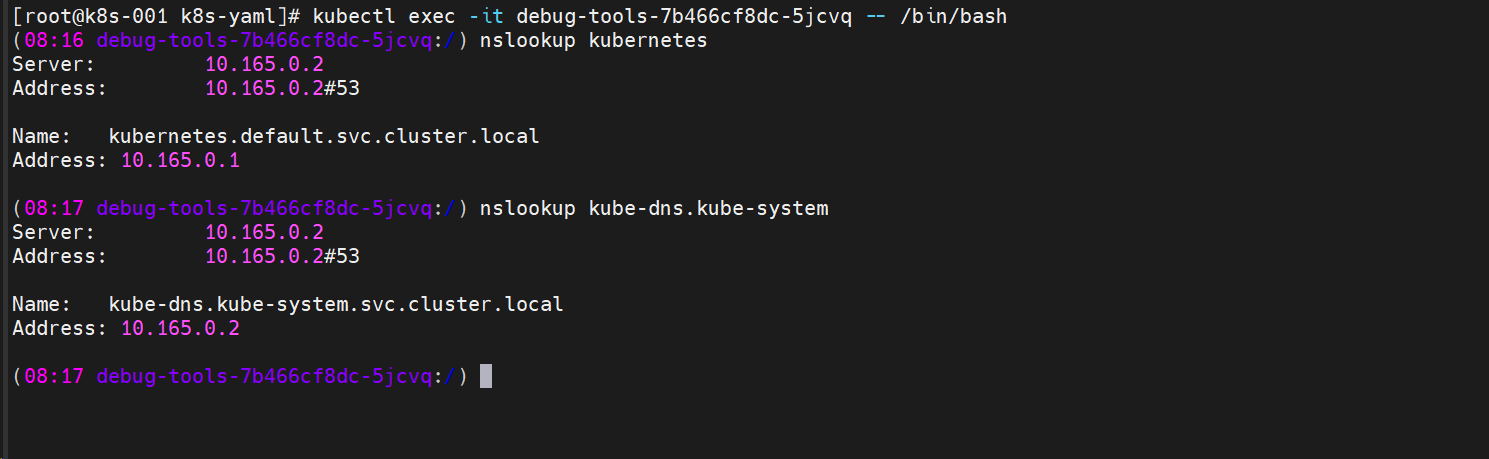

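1.5.5 Pod DNS resolution verification

Verify that Services resolve correctly

# Run the checks from the pod created above

# Same namespace (run inside the container)

nslookup kubernetes

# Across namespaces

nslookup kube-dns.kube-system

- It is enough that an IP address is returned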
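The short names work because the pod's /etc/resolv.conf carries a search list (`<namespace>.svc.cluster.local`, `svc.cluster.local`, `cluster.local`), so `kubernetes` is expanded inside the pod's own namespace and `kube-dns.kube-system` under `svc.cluster.local`. Using the fully qualified name removes that dependency. The helper below just assembles the name; it assumes the cluster domain `cluster.local` from kubelet.json, and `service_fqdn` is an illustrative name.

```shell
#!/bin/sh
# service_fqdn NAME [NAMESPACE] [CLUSTER_DOMAIN] -> prints the full Service DNS name.
# NAMESPACE defaults to "default", CLUSTER_DOMAIN to "cluster.local".
service_fqdn() {
    echo "$1.${2:-default}.svc.${3:-cluster.local}"
}
```

From k8s-001 the lookup can then be run without opening a shell in the pod first, for example `kubectl exec deploy/debug-tools -- nslookup "$(service_fqdn kube-dns kube-system)"`.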

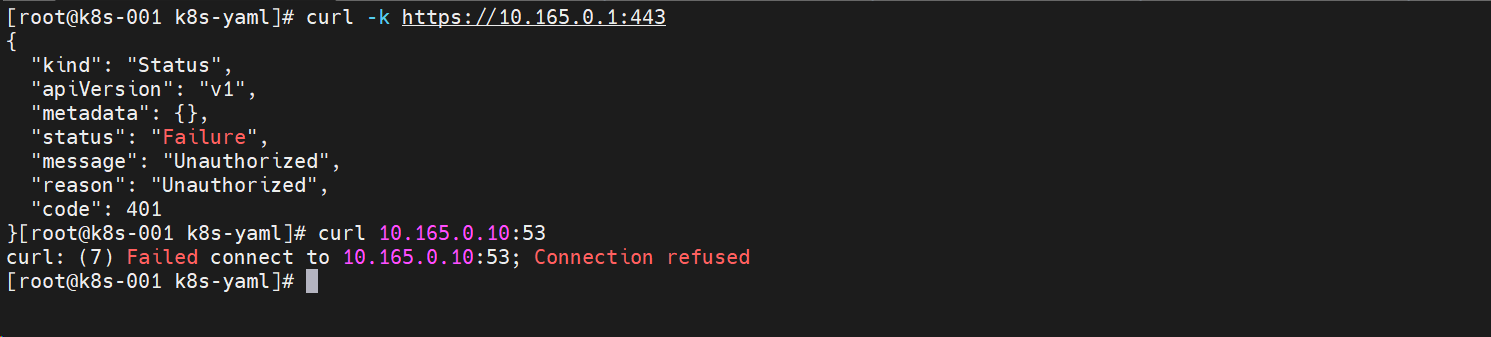

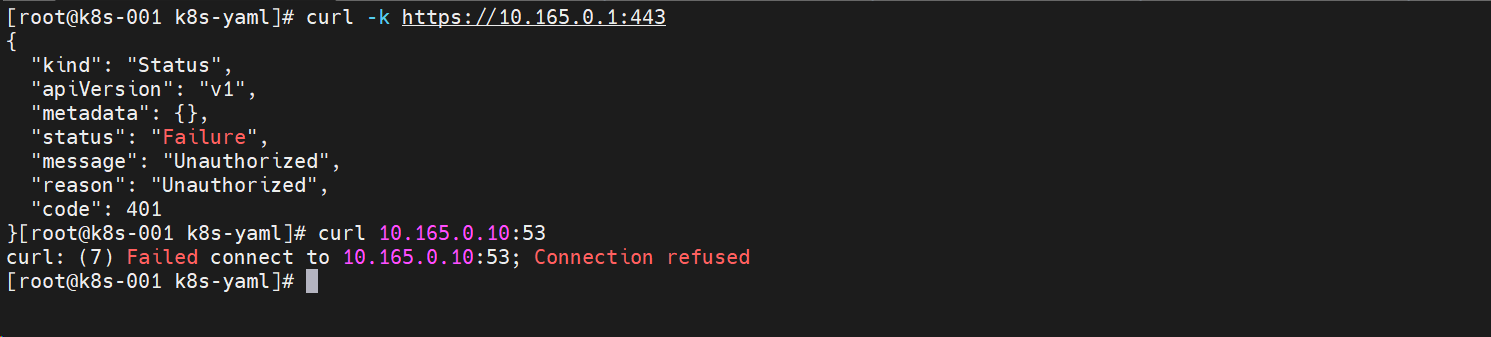

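1.5.6 Node access verification

# Every host must be able to reach port 443 of the kubernetes Service and port 53 of kube-dns

# Run on every node

curl -k https://10.165.0.1:443

curl 10.165.0.2:53

- Getting a response from both means communication works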

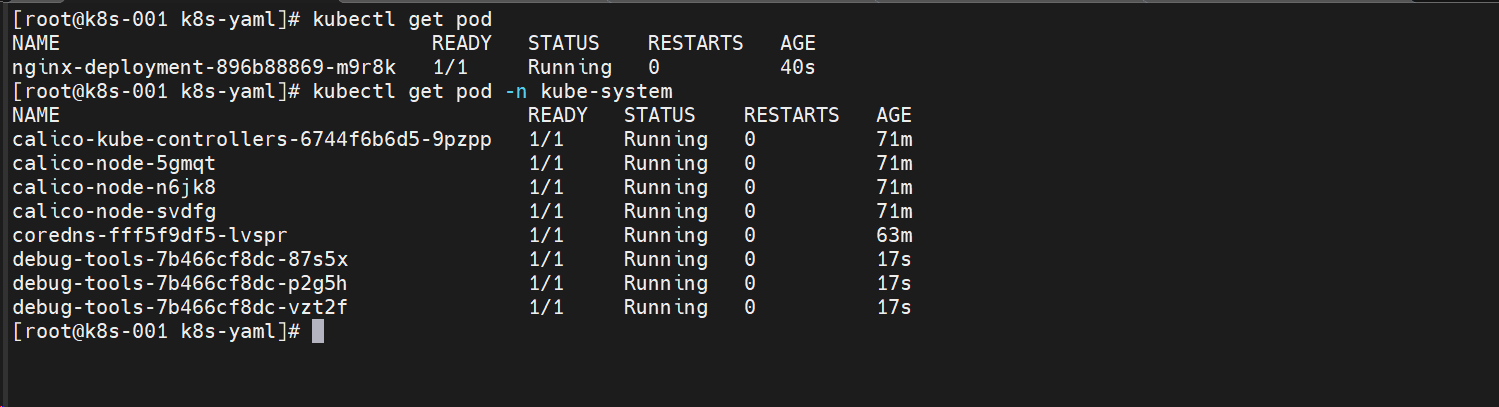

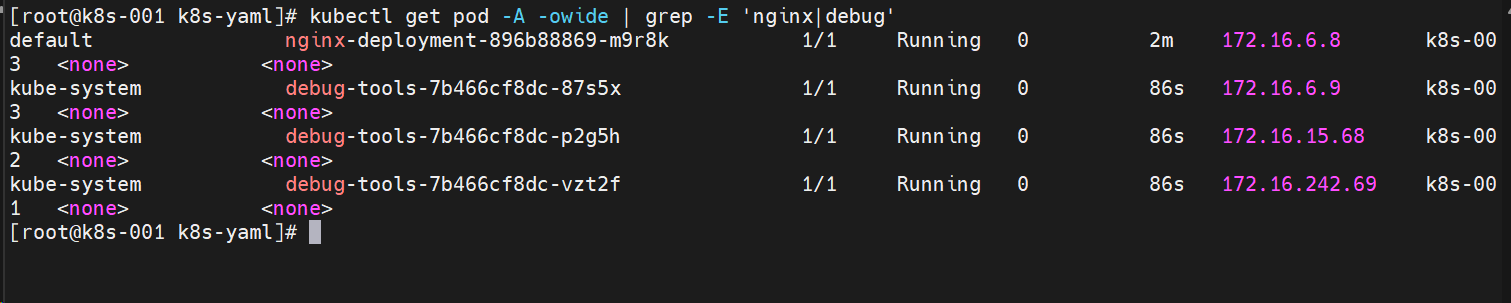

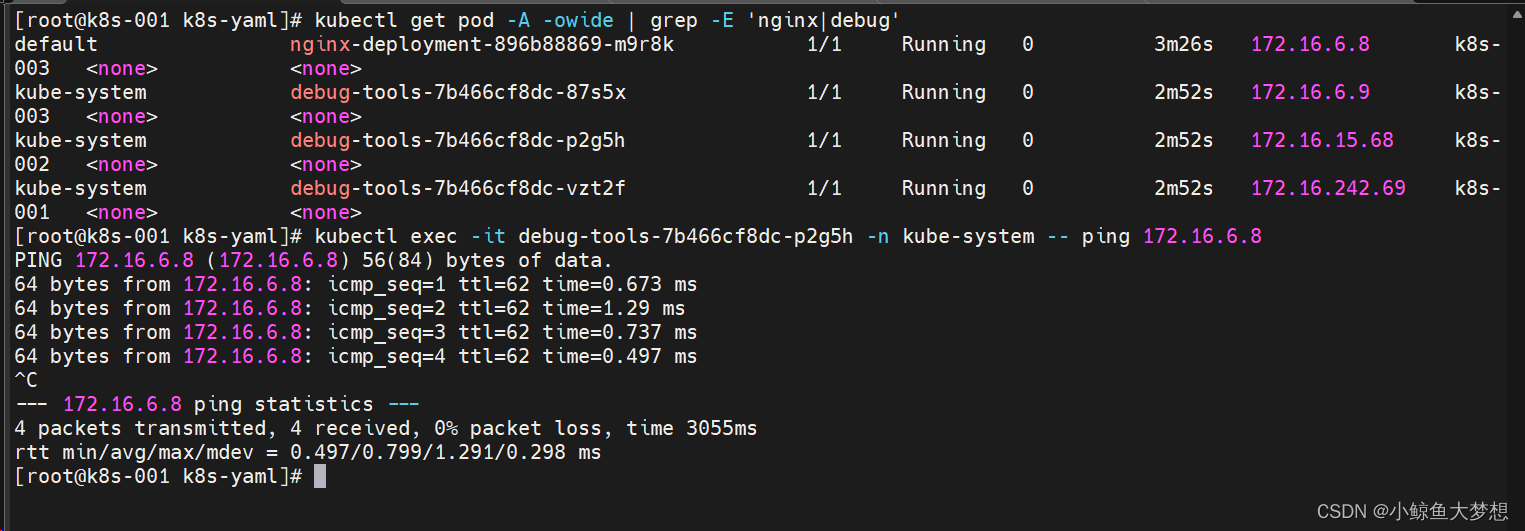

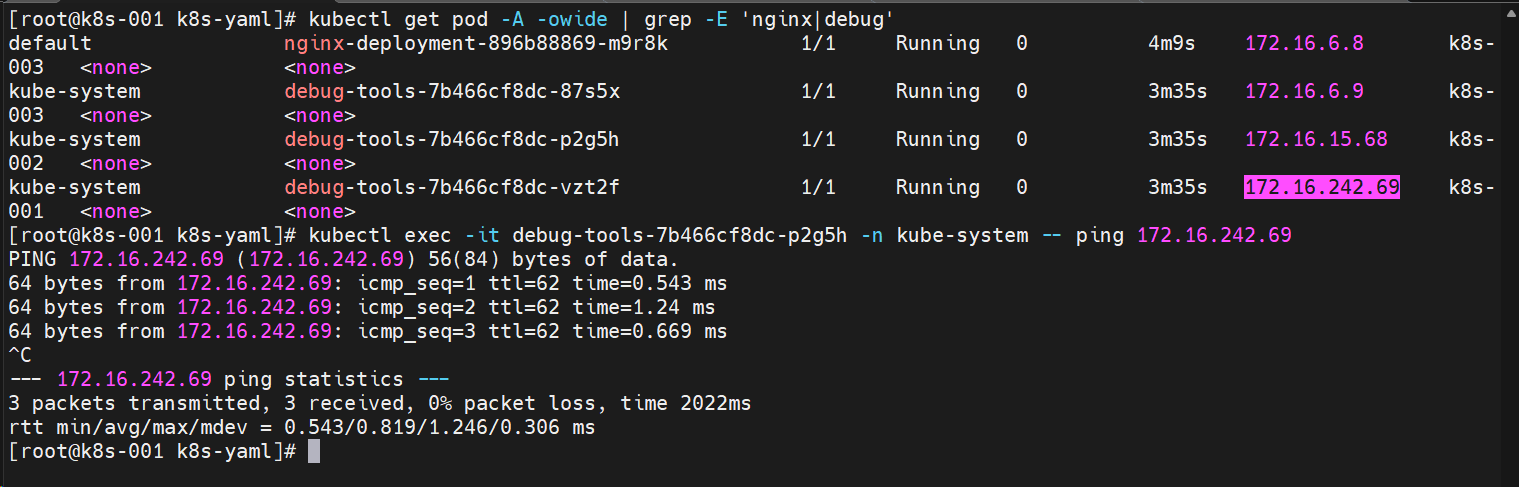

1.5.7 Communication between Pods

Check both pods on the same machine and pods on different machines

Prepare two YAML files

The first one

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2

The second one

apiVersion: apps/v1
kind: Deployment
metadata:
  name: debug-tools
  namespace: kube-system
  labels:
    app: debug-tools
spec:
  replicas: 1
  selector:
    matchLabels:
      app: debug-tools
  template:
    metadata:
      labels:
        app: debug-tools
    spec:
      containers:
      - name: debug-tools
        image: registry.cn-hangzhou.aliyuncs.com/k8s_whale_images/debug-tools:latest
        command: ["/bin/sh","-c","sleep 3600"]

Start them

- Two pods are now running, in different namespaces

Verify communication between the Pods

Look up the address of each pod

Then actually ping to verify:

# Different namespaces

kubectl exec -it debug-tools-7b466cf8dc-xmhl7 -n kube-system -- ping 10.166.6.10

Same namespace

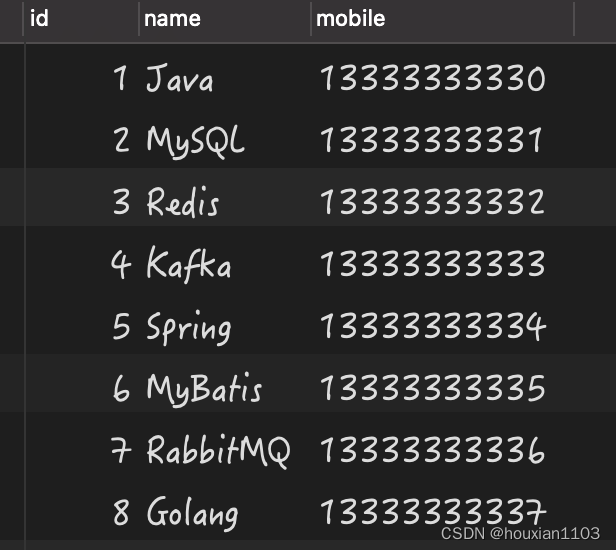
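The pod name and IP in the command above are specific to this run and change with every redeploy. A sketch that looks both up instead is shown below. It assumes the two Deployments from this section (label `app=nginx` in `default`, `debug-tools` in `kube-system`); the function names are illustrative.

```shell
#!/bin/sh
# pod_ip NAMESPACE LABEL_SELECTOR -> prints the IP of the first pod matching the selector.
pod_ip() {
    kubectl -n "$1" get pod -l "$2" -o jsonpath='{.items[0].status.podIP}'
}

# ping_from_debug TARGET_IP -> pings TARGET_IP three times from the debug-tools
# Deployment in kube-system.
ping_from_debug() {
    kubectl -n kube-system exec deploy/debug-tools -- ping -c 3 "$1"
}
```

`ping_from_debug "$(pod_ip default app=nginx)"` then repeats the cross-namespace check. `kubectl get pod -A -owide` shows which node each pod landed on, which tells whether the ping crossed machines.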

At this point the whole procedure can be considered complete and working