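Preface:

If you have never worked with instance segmentation before, it helps to first understand what an instance segmentation model outputs.

The two key outputs of instance segmentation are the mask coefficients and the mask prototypes.

This post is based on the following project (code this good definitely deserves a star!): GitHub - UNeedCryDear/yolov5-seg-opencv-onnxruntime-cpp: yolov5 segmentation with onnxruntime and opencv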

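Contents

Preface:

I. Code summary

1. How does instance segmentation output differ from object detection output?

2. How do we get the mask of an object?

II. Source code

yolov5_seg_utils.h

yolov5_seg_utils.cpp

yolo_seg.h

yolo_seg.cpp

main.cpp

III. Results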

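I. Code summary

1. How does instance segmentation output differ from object detection output?

Let me write a little about the instance segmentation part as well, so we can compare notes, hehe.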

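For a single input image, yolov5n-seg.onnx produces two outputs:

output0: float32[1, 25200, 117]. 25200 is the number of candidate boxes (anchors) in the output, and the 117 values per box are 4 box coordinates (center x, center y, width, height), 1 objectness confidence, 80 class probabilities, and 32 mask coefficients.

output1: float32[1, 32, 160, 160]. This is the set of mask prototypes for the image. You can think of it as 32 masks produced by the network, each highlighting foreground regions of the network input image, downscaled to 160*160.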
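To make the 117-value row layout concrete, here is a minimal sketch that splits one row of output0 into its fields. `RowFields` and `DecodeRow` are hypothetical names used only for illustration (they are not part of the referenced project), and the sketch assumes the 4 + 1 + 80 + 32 ordering described above.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical container for one decoded row of output0 (not a type from the project).
struct RowFields {
    float cx, cy, w, h;            // box center and size, in network-input coordinates
    float objectness;              // box confidence
    int classId;                   // index of the highest class score
    float classScore;              // value of the highest class score
    std::vector<float> maskCoeffs; // mask coefficients (32 for yolov5n-seg)
};

// Split one row of length 4 + 1 + numClasses + numCoeffs into its fields.
RowFields DecodeRow(const float* row, int numClasses, int numCoeffs) {
    RowFields f;
    f.cx = row[0];
    f.cy = row[1];
    f.w = row[2];
    f.h = row[3];
    f.objectness = row[4];
    const float* cls = row + 5;                                  // class scores start here
    const float* best = std::max_element(cls, cls + numClasses); // best class
    f.classId = static_cast<int>(best - cls);
    f.classScore = *best;
    // The mask coefficients follow directly after the class scores.
    f.maskCoeffs.assign(cls + numClasses, cls + numClasses + numCoeffs);
    return f;
}
```

With 80 classes and 32 coefficients, calling `DecodeRow(row, 80, 32)` on each of the 25200 rows gives the same fields that the Detect() function in the source below reads through pointer arithmetic.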

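2. How do we get the mask of an object?

This part of the code takes some effort to read.

The first thing to point out is that this mask is relative to the detection box, not to the original image.

Getting the mask for a detection box:

- Map the detection box onto the mask prototypes, then crop that region out of the prototypes.

- Matrix-multiply the mask coefficients with the cropped prototypes and apply a sigmoid to the result; thresholding it (0/1 binarization) gives the mask that highlights the object. In the code, the threshold is applied last, after the resize in the next step.

- Map the object mask back onto the original image.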
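The second step can be sketched without OpenCV as a plain loop: a weighted sum of the prototypes per pixel, then a sigmoid, then a threshold. `ComposeMask` is a hypothetical helper for illustration only; it assumes the prototypes are stored channel by channel (the [32, h*w] layout), and it writes 255 for foreground because that is what an OpenCV comparison produces.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: combine mask coefficients with cropped prototypes.
// protos is channel-major: protos[c * numPixels + p], i.e. a [C, h*w] matrix.
std::vector<std::uint8_t> ComposeMask(const std::vector<float>& coeffs,
                                      const std::vector<float>& protos,
                                      std::size_t numPixels,
                                      float threshold) {
    std::vector<std::uint8_t> mask(numPixels, 0);
    for (std::size_t p = 0; p < numPixels; ++p) {
        float acc = 0.0f;
        // [1, C] x [C, h*w]: one dot product per pixel.
        for (std::size_t c = 0; c < coeffs.size(); ++c) {
            acc += coeffs[c] * protos[c * numPixels + p];
        }
        float prob = 1.0f / (1.0f + std::exp(-acc)); // sigmoid
        mask[p] = prob > threshold ? 255 : 0;        // binarize
    }
    return mask;
}
```

For example, with coefficients {1, -1} and two 3-pixel prototypes {2, 0, 1} and {0, 2, 1}, the per-pixel sums are {2, -2, 0}, so only the first pixel passes a 0.5 threshold.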

The corresponding source code (GetMask2):

void GetMask2(const Mat& maskProposals, const Mat& mask_protos, OutputSeg& output, const MaskParams& maskParams) {

int seg_channels = maskParams.segChannels;

int net_width = maskParams.netWidth;

int seg_width = maskParams.segWidth;

int net_height = maskParams.netHeight;

int seg_height = maskParams.segHeight;

float mask_threshold = maskParams.maskThreshold;

Vec4f params = maskParams.params;

Size src_img_shape = maskParams.srcImgShape;

Rect temp_rect = output.box;

// Map the detection box, already in original-image coordinates, onto the mask prototype grid [160, 160]

int rang_x = floor((temp_rect.x * params[0] + params[2]) / net_width * seg_width);

int rang_y = floor((temp_rect.y * params[1] + params[3]) / net_height * seg_height);

int rang_w = ceil(((temp_rect.x + temp_rect.width) * params[0] + params[2]) / net_width * seg_width) - rang_x;

int rang_h = ceil(((temp_rect.y + temp_rect.height) * params[1] + params[3]) / net_height * seg_height) - rang_y;

// Keep the ROI at least 1 px in size and inside the prototype grid

rang_w = MAX(rang_w, 1);

rang_h = MAX(rang_h, 1);

if (rang_x + rang_w > seg_width){

if (seg_width - rang_x > 0)

rang_w =seg_width -rang_x;

else

rang_x -= 1;

}

if (rang_y + rang_h > seg_height) {

if (seg_height - rang_y > 0)

rang_h = seg_height - rang_y;

else

rang_y -= 1;

}

vector<Range> roi_ranges;

roi_ranges.push_back(Range(0,1));

roi_ranges.push_back(Range::all());

roi_ranges.push_back(Range(rang_y, rang_h+rang_y));

roi_ranges.push_back(Range(rang_x, rang_w+rang_x));

// Crop the mask prototypes

Mat temp_mask_protos = mask_protos(roi_ranges).clone(); // keep only the prototype region under the detection box (the ROI)

Mat protos = temp_mask_protos.reshape(0, { seg_channels, rang_w*rang_h}); // flatten to [32, h*w]

// Matrix-multiply the mask coefficients with the mask prototypes

Mat matmul_res = (maskProposals * protos).t(); // coefficients [1, 32] x prototypes [32, h*w] -> [1, h*w], then transposed

Mat masks_feature = matmul_res.reshape(1,{rang_h, rang_w}); // [h, w], single channel

Mat dest, mask;

// sigmoid

cv::exp(-masks_feature, dest);

dest = 1.0 / (1.0 + dest);

// Map the ROI from prototype coordinates back to original-image coordinates

int left = floor((net_width / seg_width * rang_x - params[2]) / params[0]);

int top = floor((net_height / seg_height * rang_y - params[3]) / params[1]);

int width = ceil(net_width / seg_width * rang_w / params[0]);

int height = ceil(net_height / seg_height * rang_h / params[1]);

// Resize the ROI mask to original-image scale. Note: the 4th argument of cv::resize is fx, so INTER_NEAREST (= 0) is read as fx = 0 here and the default INTER_LINEAR interpolation is used.

resize(dest, mask, Size(width, height), INTER_NEAREST);

// Crop to the detection box and threshold

mask = mask(temp_rect - Point(left, top)) > mask_threshold;

output.boxMask = mask;

}
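The two coordinate mappings in GetMask2 are easier to follow when isolated. The sketch below reproduces just that arithmetic with plain structs; `BoxI`, `Letterbox`, `ImageToProto` and `ProtoToImage` are hypothetical names for illustration, the clamping to the prototype grid is left out, and the letterbox parameters are assumed to be in the same [ratio_x, ratio_y, dw, dh] order as `params`.

```cpp
#include <cmath>

struct BoxI { int x, y, w, h; };             // hypothetical stand-in for cv::Rect
struct Letterbox { double rx, ry, dw, dh; }; // same order as params: [ratio_x, ratio_y, dw, dh]

// Original-image box -> covering ROI on the prototype grid (floor the start, ceil the end).
BoxI ImageToProto(const BoxI& b, const Letterbox& lb, int netW, int netH, int segW, int segH) {
    int x = static_cast<int>(std::floor((b.x * lb.rx + lb.dw) / netW * segW));
    int y = static_cast<int>(std::floor((b.y * lb.ry + lb.dh) / netH * segH));
    int w = static_cast<int>(std::ceil(((b.x + b.w) * lb.rx + lb.dw) / netW * segW)) - x;
    int h = static_cast<int>(std::ceil(((b.y + b.h) * lb.ry + lb.dh) / netH * segH)) - y;
    return {x, y, w, h};
}

// Prototype-grid ROI -> the region it covers in the original image.
BoxI ProtoToImage(const BoxI& r, const Letterbox& lb, int netW, int netH, int segW, int segH) {
    int sx = netW / segW; // 4 for 640 / 160
    int sy = netH / segH;
    int left = static_cast<int>(std::floor((sx * r.x - lb.dw) / lb.rx));
    int top = static_cast<int>(std::floor((sy * r.y - lb.dh) / lb.ry));
    int width = static_cast<int>(std::ceil(sx * r.w / lb.rx));
    int height = static_cast<int>(std::ceil(sy * r.h / lb.ry));
    return {left, top, width, height};
}
```

As a worked case, take a 1280x720 frame letterboxed to 640x640, which gives ratio 0.5, dw = 0 and dh = 140. The box (200, 100, 400, 300) maps to the prototype ROI (25, 47, 50, 38), and that ROI maps back to (200, 96, 400, 304) in the original image. The mapped-back region is slightly larger than the box because of the floor/ceil rounding, which is why the source crops with `temp_rect - Point(left, top)`, here an offset of (0, 4), before thresholding.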

II. Source code

yolov5_seg_utils.h

#pragma once

#include<iostream>

#include <numeric>

#include<opencv2/opencv.hpp>

#define YOLO_P6 false // whether to use a P6 model

#define ORT_OLD_VISON 12 // ONNX Runtime versions before 1.12.0 use the old API

struct OutputSeg {

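int id; // class id of the result

float confidence; // confidence of the result

cv::Rect box; // bounding box

cv::Mat boxMask; // mask inside the bounding box only, which saves memory and speeds things up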

};

struct MaskParams {

int segChannels = 32;

int segWidth = 160;

int segHeight = 160;

int netWidth = 640;

int netHeight = 640;

float maskThreshold = 0.5;

cv::Size srcImgShape;

cv::Vec4d params;

};

bool CheckParams(int netHeight, int netWidth, const int* netStride, int strideSize);

void DrawPred(cv::Mat& img, std::vector<OutputSeg> result, std::vector<std::string> classNames, std::vector<cv::Scalar> color);

void LetterBox(const cv::Mat& image, cv::Mat& outImage,

cv::Vec4d& params, //[ratio_x,ratio_y,dw,dh]

const cv::Size& newShape = cv::Size(640, 640),

bool autoShape = false,

bool scaleFill = false,

bool scaleUp = true,

int stride = 32,

const cv::Scalar& color = cv::Scalar(114, 114, 114));

void GetMask(const cv::Mat& maskProposals, const cv::Mat& maskProtos, std::vector<OutputSeg>& output, const MaskParams& maskParams);

void GetMask2(const cv::Mat& maskProposals, const cv::Mat& maskProtos, OutputSeg& output, const MaskParams& maskParams);

yolov5_seg_utils.cpp

#pragma once

#include "yolov5_seg_utils.h"

using namespace cv;

using namespace std;

bool CheckParams(int netHeight, int netWidth, const int* netStride, int strideSize) {

if (netHeight % netStride[strideSize - 1] != 0 || netWidth % netStride[strideSize - 1] != 0)

{

cout << "Error:_netHeight and _netWidth must be multiple of max stride " << netStride[strideSize - 1] << "!" << endl;

return false;

}

return true;

}

void LetterBox(const cv::Mat& image, cv::Mat& outImage, cv::Vec4d& params, const cv::Size& newShape,

bool autoShape, bool scaleFill, bool scaleUp, int stride, const cv::Scalar& color)

{

if (false) {

int maxLen = MAX(image.rows, image.cols);

outImage = Mat::zeros(Size(maxLen, maxLen), CV_8UC3);

image.copyTo(outImage(Rect(0, 0, image.cols, image.rows)));

params[0] = 1;

params[1] = 1;

params[3] = 0;

params[2] = 0;

}

cv::Size shape = image.size();

float r = std::min((float)newShape.height / (float)shape.height,

(float)newShape.width / (float)shape.width);

if (!scaleUp)

r = std::min(r, 1.0f);

float ratio[2]{ r, r };

int new_un_pad[2] = { (int)std::round((float)shape.width * r),(int)std::round((float)shape.height * r) };

auto dw = (float)(newShape.width - new_un_pad[0]);

auto dh = (float)(newShape.height - new_un_pad[1]);

if (autoShape)

{

dw = (float)((int)dw % stride);

dh = (float)((int)dh % stride);

}

else if (scaleFill)

{

dw = 0.0f;

dh = 0.0f;

new_un_pad[0] = newShape.width;

new_un_pad[1] = newShape.height;

ratio[0] = (float)newShape.width / (float)shape.width;

ratio[1] = (float)newShape.height / (float)shape.height;

}

dw /= 2.0f;

dh /= 2.0f;

if (shape.width != new_un_pad[0] || shape.height != new_un_pad[1]) // resize if either dimension differs

{

cv::resize(image, outImage, cv::Size(new_un_pad[0], new_un_pad[1]));

}

else {

outImage = image.clone();

}

int top = int(std::round(dh - 0.1f));

int bottom = int(std::round(dh + 0.1f));

int left = int(std::round(dw - 0.1f));

int right = int(std::round(dw + 0.1f));

params[0] = ratio[0];

params[1] = ratio[1];

params[2] = left;

params[3] = top;

cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);

}

void GetMask(const cv::Mat& maskProposals, const cv::Mat& maskProtos, std::vector<OutputSeg>& output, const MaskParams& maskParams) {

//cout << maskProtos.size << endl;

int seg_channels = maskParams.segChannels;

int net_width = maskParams.netWidth;

int seg_width = maskParams.segWidth;

int net_height = maskParams.netHeight;

int seg_height = maskParams.segHeight;

float mask_threshold = maskParams.maskThreshold;

Vec4f params = maskParams.params;

Size src_img_shape = maskParams.srcImgShape;

Mat protos = maskProtos.reshape(0, { seg_channels,seg_width * seg_height });

Mat matmul_res = (maskProposals * protos).t();

Mat masks = matmul_res.reshape(output.size(), { seg_width,seg_height });

vector<Mat> maskChannels;

split(masks, maskChannels);

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

//sigmoid

cv::exp(-maskChannels[i], dest);

dest = 1.0 / (1.0 + dest);

Rect roi(int(params[2] / net_width * seg_width), int(params[3] / net_height * seg_height), int(seg_width - params[2] / 2), int(seg_height - params[3] / 2));

dest = dest(roi);

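// Note: the 4th argument of cv::resize is fx, so INTER_NEAREST (= 0) is read as fx = 0 here and the default INTER_LINEAR interpolation is used.

resize(dest, mask, src_img_shape, INTER_NEAREST);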

//crop

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > mask_threshold;

output[i].boxMask = mask;

}

}

void GetMask2(const Mat& maskProposals, const Mat& mask_protos, OutputSeg& output, const MaskParams& maskParams) {

int seg_channels = maskParams.segChannels;

int net_width = maskParams.netWidth;

int seg_width = maskParams.segWidth;

int net_height = maskParams.netHeight;

int seg_height = maskParams.segHeight;

float mask_threshold = maskParams.maskThreshold;

Vec4f params = maskParams.params;

Size src_img_shape = maskParams.srcImgShape;

Rect temp_rect = output.box;

// Map the detection box, already in original-image coordinates, onto the mask prototype grid [160, 160]

int rang_x = floor((temp_rect.x * params[0] + params[2]) / net_width * seg_width);

int rang_y = floor((temp_rect.y * params[1] + params[3]) / net_height * seg_height);

int rang_w = ceil(((temp_rect.x + temp_rect.width) * params[0] + params[2]) / net_width * seg_width) - rang_x;

int rang_h = ceil(((temp_rect.y + temp_rect.height) * params[1] + params[3]) / net_height * seg_height) - rang_y;

// Keep the ROI at least 1 px in size and inside the prototype grid

rang_w = MAX(rang_w, 1);

rang_h = MAX(rang_h, 1);

if (rang_x + rang_w > seg_width){

if (seg_width - rang_x > 0)

rang_w =seg_width -rang_x;

else

rang_x -= 1;

}

if (rang_y + rang_h > seg_height) {

if (seg_height - rang_y > 0)

rang_h = seg_height - rang_y;

else

rang_y -= 1;

}

vector<Range> roi_ranges;

roi_ranges.push_back(Range(0,1));

roi_ranges.push_back(Range::all());

roi_ranges.push_back(Range(rang_y, rang_h+rang_y));

roi_ranges.push_back(Range(rang_x, rang_w+rang_x));

// Crop the mask prototypes

Mat temp_mask_protos = mask_protos(roi_ranges).clone(); // keep only the prototype region under the detection box (the ROI)

Mat protos = temp_mask_protos.reshape(0, { seg_channels, rang_w*rang_h}); // flatten to [32, h*w]

// Matrix-multiply the mask coefficients with the mask prototypes

Mat matmul_res = (maskProposals * protos).t(); // coefficients [1, 32] x prototypes [32, h*w] -> [1, h*w], then transposed

Mat masks_feature = matmul_res.reshape(1,{rang_h, rang_w}); // [h, w], single channel

Mat dest, mask;

// sigmoid

cv::exp(-masks_feature, dest);

dest = 1.0 / (1.0 + dest);

// Map the ROI from prototype coordinates back to original-image coordinates

int left = floor((net_width / seg_width * rang_x - params[2]) / params[0]);

int top = floor((net_height / seg_height * rang_y - params[3]) / params[1]);

int width = ceil(net_width / seg_width * rang_w / params[0]);

int height = ceil(net_height / seg_height * rang_h / params[1]);

// Resize the ROI mask to original-image scale. Note: the 4th argument of cv::resize is fx, so INTER_NEAREST (= 0) is read as fx = 0 here and the default INTER_LINEAR interpolation is used.

resize(dest, mask, Size(width, height), INTER_NEAREST);

// Crop to the detection box and threshold

mask = mask(temp_rect - Point(left, top)) > mask_threshold;

output.boxMask = mask;

}

void DrawPred(Mat& img, vector<OutputSeg> result, std::vector<std::string> classNames, vector<Scalar> color) {

Mat mask = img.clone();

for (int i=0; i< result.size(); i++){

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num =i;

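// Draw the bounding box

rectangle(img, result[i].box, color[result[i].id], 2, 8);

// Paint the object mask; boxMask is 0 at non-object pixels, so only object pixels are colored

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

string label = classNames[result[i].id] + ":" + to_string(result[i].confidence);

// Print the label at the top-left corner of the box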

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.5, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.5, 0, img);

}

yolo_seg.h

#pragma once

#include<iostream>

#include<opencv2/opencv.hpp>

#include "yolov5_seg_utils.h"

class YoloSeg {

public:

YoloSeg() {

}

~YoloSeg() {}

/** \brief Read onnx-model

* \param[out] read onnx file into cv::dnn::Net

* \param[in] modelPath:onnx-model path

* \param[in] isCuda:if true and opencv built with CUDA(cmake),use OpenCV-GPU,else run it on cpu.

*/

bool ReadModel(cv::dnn::Net& net, std::string& netPath, bool isCuda);

/** \brief detect.

* \param[in] srcImg:a 3-channels image.

* \param[out] output:detection results of input image.

*/

bool Detect(cv::Mat& srcImg, cv::dnn::Net& net, std::vector<OutputSeg>& output);

#if(defined YOLO_P6 && YOLO_P6==true)

const int _netWidth = 1280; // ONNX input image width

const int _netHeight = 1280; // ONNX input image height

const int _segWidth = 320; //_segWidth=_netWidth/mask_ratio

const int _segHeight = 320;

const int _segChannels = 32;

#else

const int _netWidth = 640; // ONNX input image width

const int _netHeight = 640; // ONNX input image height

const int _segWidth = 160; //_segWidth=_netWidth/mask_ratio

const int _segHeight = 160;

const int _segChannels = 32;

#endif // YOLO_P6

float _classThreshold = 0.25;

float _nmsThreshold = 0.45;

float _maskThreshold = 0.5;

public:

std::vector<std::string> _className = { "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush" }; // class names; change these when using your own model

};

yolo_seg.cpp

#include"yolo_seg.h"

using namespace std;

using namespace cv;

using namespace cv::dnn;

bool YoloSeg::ReadModel(Net& net, string& netPath, bool isCuda = false) {

try {

net = readNet(netPath);

#if CV_VERSION_MAJOR==4 &&CV_VERSION_MINOR==7&&CV_VERSION_REVISION==0

net.enableWinograd(false); //bug of opencv4.7.x in AVX only platform ,https://github.com/opencv/opencv/pull/23112 and https://github.com/opencv/opencv/issues/23080

//net.enableWinograd(true); //If your CPU supports AVX2, you can set it true to speed up

#endif

}

catch (const std::exception&) {

return false;

}

if (isCuda) {

//cuda

net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA); //or DNN_TARGET_CUDA_FP16

}

else {

//cpu

cout << "Inference device: CPU" << endl;

net.setPreferableBackend(cv::dnn::DNN_BACKEND_DEFAULT);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

return true;

}

bool YoloSeg::Detect(Mat& srcImg, Net& net, vector<OutputSeg>& output) {

Mat blob;

output.clear();

int col = srcImg.cols;

int row = srcImg.rows;

Mat netInputImg;

Vec4d params;

LetterBox(srcImg, netInputImg, params, cv::Size(_netWidth, _netHeight));

blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(0, 0, 0), true, false);

//**************************************************************************************************************************************************/

// If all other settings are correct but the results differ a lot from Python/ONNX, try one of the two statements below instead.

//

//$ blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(104, 117, 123), true, false);

//$ blobFromImage(netInputImg, blob, 1 / 255.0, cv::Size(_netWidth, _netHeight), cv::Scalar(114, 114,114), true, false);

//****************************************************************************************************************************************************/

net.setInput(blob);

std::vector<cv::Mat> net_output_img;

//*********************************************************************************************************************************

//net.forward(net_output_img, net.getUnconnectedOutLayersNames());

// The output order differs between OpenCV 4.5.x and 4.6.x, so requesting the outputs by fixed name (below) is recommended.

// If you use net.forward(net_output_img, net.getUnconnectedOutLayersNames()) instead, make sure "output0" comes before "output1" in the returned names; otherwise the results will be wrong.

//*********************************************************************************************************************************

vector<string> output_layer_names{ "output0","output1" };

net.forward(net_output_img, output_layer_names); // fetch both outputs

std::vector<int> class_ids; // class id of each candidate

std::vector<float> confidences; // confidence of each candidate

std::vector<cv::Rect> boxes; // bounding box of each candidate

std::vector<vector<float>> picked_proposals; //output0[:,:, 5 + _className.size():net_width]===> for mask

int net_width = _className.size() + 5 + _segChannels;// 80 + 5 + 32 = 117

int out0_width= net_output_img[0].size[2];

// assert(net_width == out0_width, "Error Wrong number of _className or _segChannels"); // the model's class count or _segChannels is set incorrectly

int net_height = net_output_img[0].size[1];// 25200

float* pdata = (float*)net_output_img[0].data;

for (int r = 0; r < net_height; r++) { //lines

float box_score = pdata[4];

if (box_score >= _classThreshold) {

cv::Mat scores(1, _className.size(), CV_32FC1, pdata + 5); // wraps only the 80 class scores; the 32 mask coefficients that follow are read separately below

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre;

if (max_class_socre >= _classThreshold) {

vector<float> temp_proto(pdata + 5 + _className.size(), pdata + net_width); // mask coefficients

picked_proposals.push_back(temp_proto);

//rect [x,y,w,h]

float x = (pdata[0] - params[2]) / params[0]; //x

float y = (pdata[1] - params[3]) / params[1]; //y

float w = pdata[2] / params[0]; //w

float h = pdata[3] / params[1]; //h

int left = MAX(int(x - 0.5 * w + 0.5), 0);

int top = MAX(int(y - 0.5 * h + 0.5), 0);

class_ids.push_back(classIdPoint.x);

confidences.push_back(max_class_socre * box_score);

boxes.push_back(Rect(left, top, int(w + 0.5), int(h + 0.5)));

}

}

pdata += net_width; // next row

}

//NMS

vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, _classThreshold, _nmsThreshold, nms_result);

std::vector<vector<float>> temp_mask_proposals;

Rect holeImgRect(0, 0, srcImg.cols, srcImg.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = class_ids[idx];

result.confidence = confidences[idx];

result.box = boxes[idx] & holeImgRect;

temp_mask_proposals.push_back(picked_proposals[idx]);

output.push_back(result);

}

MaskParams mask_params;

mask_params.params = params;

mask_params.srcImgShape = srcImg.size();

for (int i = 0; i < temp_mask_proposals.size(); ++i) {

GetMask2(Mat(temp_mask_proposals[i]).t(), net_output_img[1], output[i], mask_params); // note: net_output_img[1] holds the mask prototypes

}

//******************** ****************

// Old approach: if GetMask2() above reports errors, it is recommended to use GetMask() instead.

// Mat mask_proposals;

//for (int i = 0; i < temp_mask_proposals.size(); ++i)

// mask_proposals.push_back(Mat(temp_mask_proposals[i]).t());

//GetMask(mask_proposals, net_output_img[1], output, mask_params);

//*****************************************************/

if (output.size())

return true;

else

return false;

}

main.cpp

#include <iostream>

#include<opencv2/opencv.hpp>

#include "yolo_seg.h"

#include<sys/time.h>

using namespace std;

using namespace cv;

using namespace dnn;

int main() {

//yolov5(); //https://github.com/UNeedCryDear/yolov5-opencv-dnn-cpp

string model_path = "/home/jason/PycharmProjects/pytorch_learn/yolo/yolov5-7.0/yolov5n-seg.onnx";

YoloSeg test;

Net net;

if (test.ReadModel(net, model_path, true)) {

cout << "read net ok!" << endl;

}

else {

return -1;

}

// generate random colors

vector<Scalar> color;

srand(time(0));

for (int i = 0; i < 80; i++) {

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

VideoCapture capture(0);

struct timeval t1, t2;

double timeuse;

Mat img;

while (1) {

capture >> img;

if (img.empty()) break; // stop when the camera returns no frame

vector<OutputSeg> result;

gettimeofday(&t1, NULL);

bool find = test.Detect(img, net, result);

gettimeofday(&t2, NULL);

if (find) {

DrawPred(img, result, test._className, color);

}

else {

cout << "Detect Failed!"<<endl;

}

timeuse = (t2.tv_sec - t1.tv_sec) + (double)(t2.tv_usec - t1.tv_usec)/1000000; //s

string label = "duration:" + to_string(timeuse*1000); //ms

putText(img, label, Point(30,30), FONT_HERSHEY_SIMPLEX,0.5, Scalar(0,0,255), 2, 8);

imshow("result", img);

if(waitKey(1)=='q') break;

}

return 0;

}

III. Results

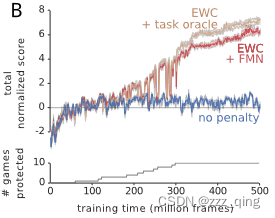

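Image input:

Camera input:

Deploying YOLOv5n-seg in C++ with OpenCV DNN takes more than 200 ms per frame on the CPU, which surprised me. Admittedly the output is larger than for YOLOv5 detection, so the amount of computation goes up as well. But YOLOv5n-seg inference in Python takes only about 100 ms. Maybe Python's matrix operations really are that fast? Can anyone explain the reason?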
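When comparing numbers like these, it may help to time the C++ side the same way on every platform and to exclude the first few calls, which can include one-time setup cost. The helper below is a hypothetical sketch using std::chrono instead of the POSIX-only gettimeofday used in main.cpp; `MeanMillis` is not part of the project.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper: mean wall-clock time of fn() in milliseconds,
// measured over `runs` calls after `warmup` untimed calls.
double MeanMillis(const std::function<void()>& fn, int warmup, int runs) {
    for (int i = 0; i < warmup; ++i) {
        fn(); // untimed warm-up calls
    }
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < runs; ++i) {
        fn();
    }
    auto stop = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::milli> total = stop - start;
    return total.count() / runs;
}
```

In main.cpp this could be called as `MeanMillis([&]() { test.Detect(img, net, result); }, 3, 20)` on a fixed image, which gives a steadier figure than timing a single frame.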
