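Contents

Prepare three virtual machines for the environment:

Environment requirements:

1. Disable swap

2. Disable SELinux

3. Turn off the firewall

4. Install Docker

5. Pass bridged IPv4 traffic to iptables

6. Configure the Kubernetes yum repository

7. Install kubelet, kubeadm, and kubectl on all three servers

8. Start the master node:

9. Start the worker nodes

10. Configure the CNI pod network plugin

Check the component download status:

11. Uninstall kubelet, kubeadm, and kubectl

Problem summary:

Problem 1: image issue

Problem 2: health check fails:

Problem 3: environment variable not found

Problem 4: using kubectl on a worker node

Problem 5: leftovers after uninstalling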

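For setting up the virtual machines themselves, see the author's earlier article: centos虚拟机服务器手把手搭建 (a step-by-step guide to setting up a CentOS virtual machine server), on 无名之辈之码谷娃's CSDN blog.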

Prepare three virtual machines for the environment:

| IP | Hostname | Hardware (lscpu output) |
| --- | --- | --- |
| 192.168.192.150 | master | Architecture: x86_64 CPU op-mode(s): 32-bit, 64-bit Byte Order: Little Endian CPU(s): 8 On-line CPU(s) list: 0-7 Thread(s) per core: 1 Core(s) per socket: 4 Socket(s): 2 NUMA node(s): 1 Vendor ID: GenuineIntel CPU family: 6 Model: 165 Model name: Intel(R) Core(TM) i7-10750H CPU @ 2.60GHz Stepping: 2 CPU MHz: 2591.589 BogoMIPS: 5183.99 Hypervisor vendor: VMware Virtualization type: full L1d cache: 32K L1i cache: 32K L2 cache: 256K L3 cache: 12288K |
| 192.168.192.151 | slave1 | Same as master |
| 192.168.192.152 | slave2 | Same as master |

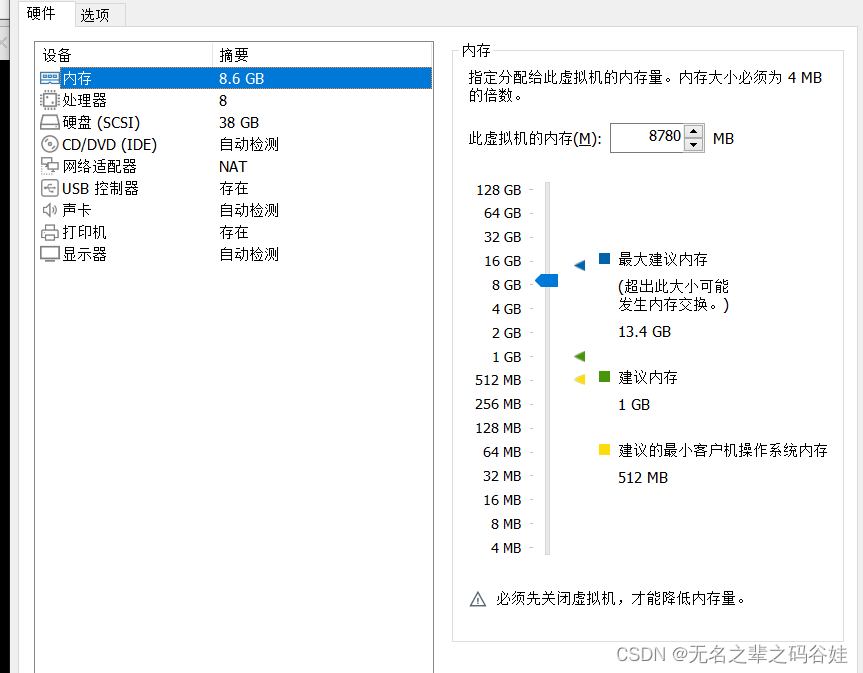

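All three of my machines have 8 GB of memory.

Minimum configuration: 2 GB of RAM or more, 2 CPUs or more, and a 30 GB disk. More is better.

Environment requirements:

1. All machines in the cluster can reach each other over the network and can reach the internet, which is needed to pull images.

2. Swap is disabled.

3. SELinux is disabled.

4. The firewall is turned off.

Preliminary work:

1. Disable swap

-- Temporarily disable

swapoff -a

-- Permanently disable

sed -i 's/.*swap.*/#&/' /etc/fstab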
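The `sed` command above comments out every line of /etc/fstab that contains the string `swap`, including lines that are already commented. As a minimal sketch, assuming you would rather preview the change first, the hypothetical helper `comment_swap_lines` below (not part of the original steps) only touches active entries that have `swap` as a whitespace-delimited field, and prints the result instead of editing the file.

```shell
# Hypothetical helper: print an fstab-style file with every active swap
# entry commented out. The file itself is left untouched.
comment_swap_lines() {
  fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix '#' to lines that have
  # "swap" as a whitespace-delimited field.
  sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage: preview the change as a diff before touching the real file.
#   comment_swap_lines /etc/fstab | diff /etc/fstab -
```

Once the diff looks right, the in-place `sed -i` from the step above (or this expression with `-i`) can be applied.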

2. Disable SELinux

-- Permanently disable (takes effect after a reboot)

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
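Two details of this step are easy to miss: the `sed` expression only rewrites `SELINUX=enforcing`, and the config file is only read at boot. The sketch below is one way to cover both, assuming GNU sed. The helper names `disable_selinux_in` and `selinux_mode_in` are made up for illustration; `setenforce 0` is the standard command for switching the running system to permissive mode until the next reboot.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file,
# whether it currently says enforcing or permissive.
disable_selinux_in() {
  conf="${1:-/etc/selinux/config}"
  sed -i -E 's/^SELINUX=(enforcing|permissive)[[:space:]]*$/SELINUX=disabled/' "$conf"
}

# Hypothetical helper: print the mode recorded in the file
# (this is the boot-time setting, not the live kernel state).
selinux_mode_in() {
  sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

# Usage on a real host (as root):
#   setenforce 0                              # permissive right away
#   disable_selinux_in /etc/selinux/config    # disabled after reboot
#   selinux_mode_in /etc/selinux/config
```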

3. Turn off the firewall

-- Disable at boot and stop now

systemctl disable firewalld.service

systemctl stop firewalld.service

Note: run all three steps above on all three servers.

4. Install Docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

## Install a specific Docker version

yum -y install docker-ce-18.06.1.ce-3.el7

## Enable Docker at boot and start it

systemctl enable docker && systemctl start docker

## Configure a registry mirror

cat > /etc/docker/daemon.json << EOF
{
"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF

## Restart Docker so it reads the new daemon.json

systemctl restart docker

5. Pass bridged IPv4 traffic to iptables

## Let iptables see bridged IPv4 and IPv6 traffic

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

## Apply the settings

sysctl --system
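The two `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded. On a host where it is not loaded, `sysctl --system` typically complains that the keys cannot be found. The sketch below shows one way to check the result. `bridge_keys_enabled` is a hypothetical helper, and its directory argument exists only so the check can be tried against sample files.

```shell
# Hypothetical helper: succeed only if both bridge netfilter switches read 1.
# On a real host the directory defaults to /proc/sys/net/bridge.
bridge_keys_enabled() {
  dir="${1:-/proc/sys/net/bridge}"
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    [ -r "$dir/$key" ] || { echo "missing: $key (is br_netfilter loaded?)"; return 1; }
    [ "$(cat "$dir/$key")" = "1" ] || { echo "disabled: $key"; return 1; }
  done
  echo "ok"
}

# Usage on a real host (as root):
#   modprobe br_netfilter
#   echo br_netfilter > /etc/modules-load.d/k8s.conf   # load on every boot
#   sysctl --system
#   bridge_keys_enabled
```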

6. Configure the Kubernetes yum repository

Create the repository file and paste in the content below:

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

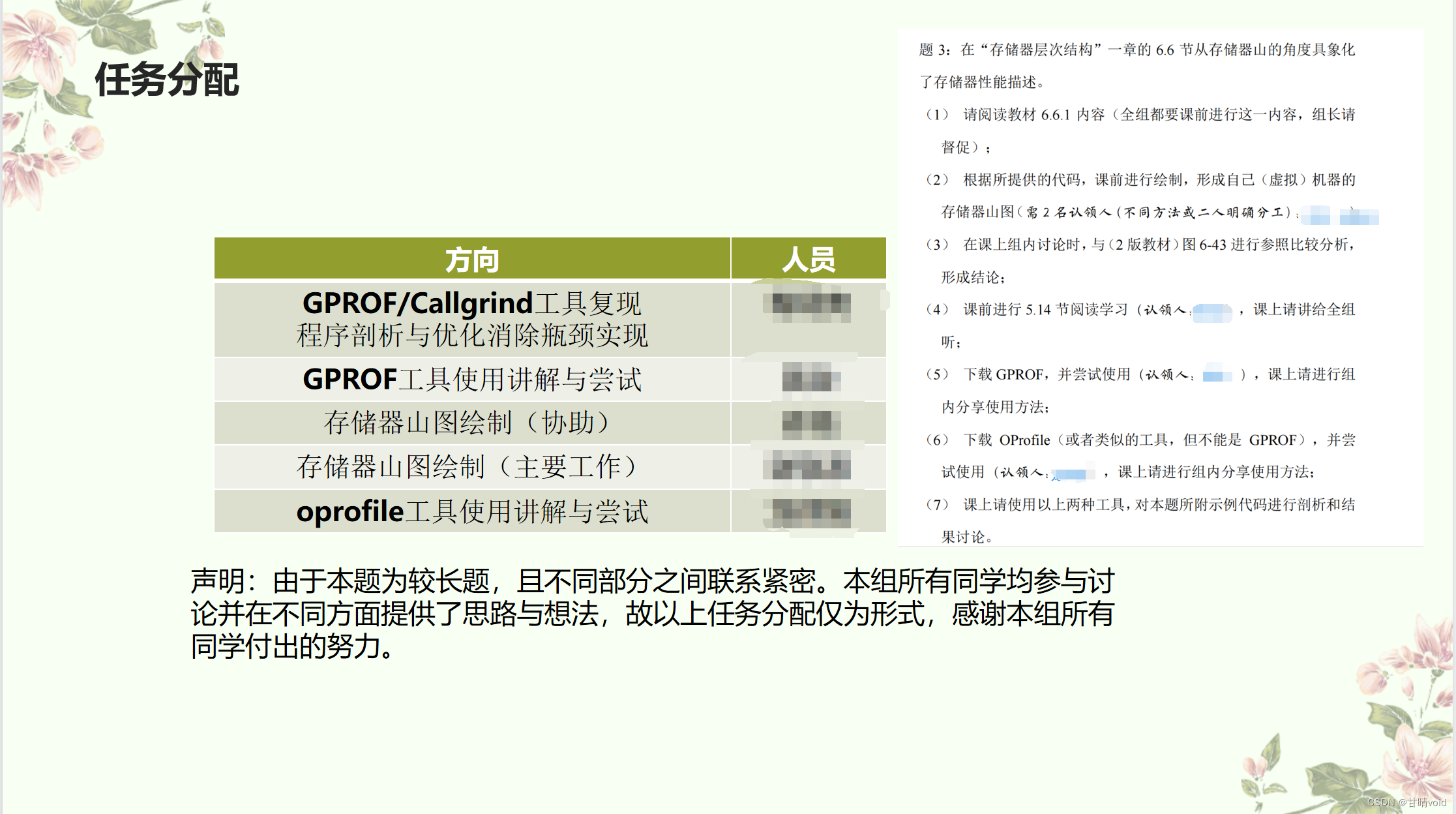

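7. Install kubelet, kubeadm, and kubectl on all three servers

## Install pinned versions

yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

## Enable kubelet at boot

systemctl enable kubelet

## Start kubelet

systemctl start kubelet

Until kubeadm init or kubeadm join has run, kubelet keeps restarting because it has no configuration yet. That is expected.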

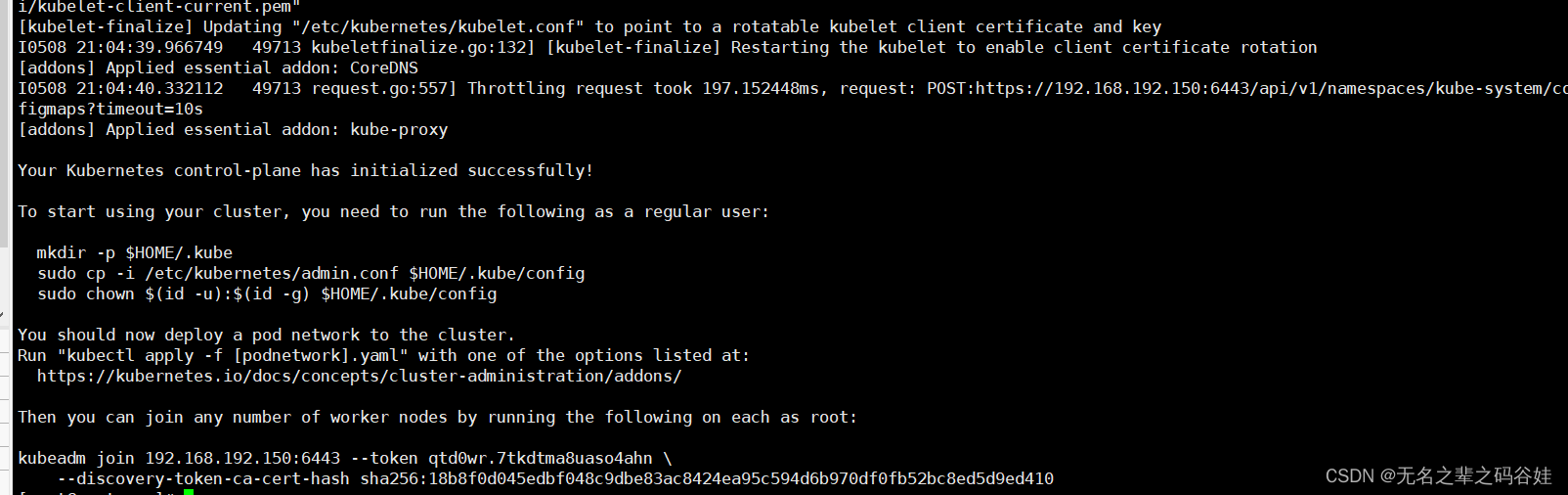

8. Start the master node:

kubeadm init --apiserver-advertise-address=192.168.192.150 --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version=v1.18.0 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --v=5

On success, the output ends like this:

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.192.150:6443 --token qtd0wr.7tkdtma8uaso4ahn \
--discovery-token-ca-cert-hash sha256:18b8f0d045edbf048c9dbe83ac8424ea95c594d6b970df0fb52bc8ed5d9ed410

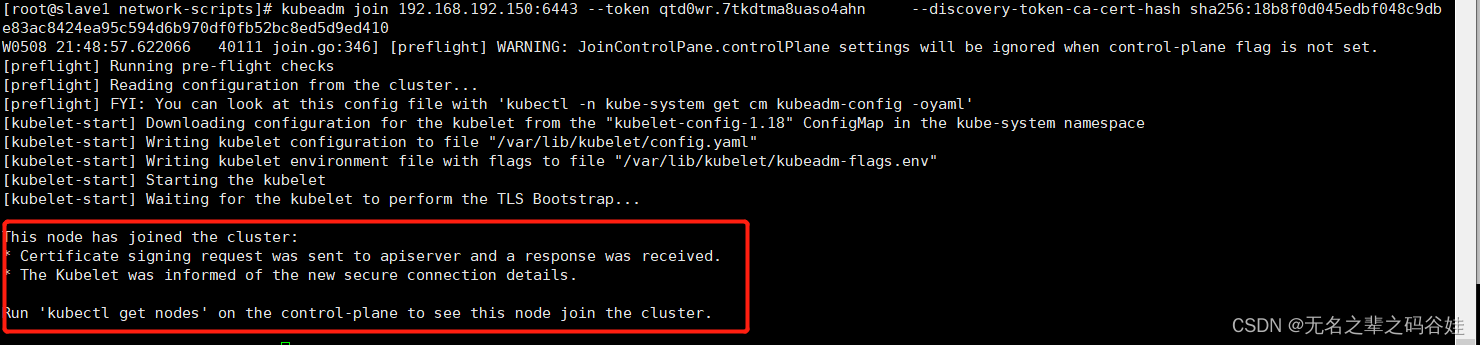

9. Start the worker nodes

Run the join command printed on the master on each worker node:

kubeadm join 192.168.192.150:6443 --token qtd0wr.7tkdtma8uaso4ahn \
--discovery-token-ca-cert-hash sha256:18b8f0d045edbf048c9dbe83ac8424ea95c594d6b970df0fb52bc8ed5d9ed410

slave1:

slave2:

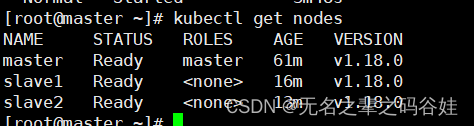
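The token and hash in the join command belong to one particular `kubeadm init` run, and bootstrap tokens expire after 24 hours by default, so a copied command can quietly go stale. As a small sketch, the hypothetical helper `looks_like_join_args` checks only the shape of the two values (kubeadm tokens follow the pattern `[a-z0-9]{6}.[a-z0-9]{16}`) before you paste them on a worker. It says nothing about whether the token is still valid.

```shell
# Hypothetical helper: sanity-check a bootstrap token and CA cert hash
# before running kubeadm join. It checks the format only, not validity.
looks_like_join_args() {
  token="$1"
  hash="$2"
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || { echo "bad token format"; return 1; }
  printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$' || { echo "bad hash format"; return 1; }
  echo "ok"
}

# Usage with the values printed by kubeadm init in this post:
looks_like_join_args qtd0wr.7tkdtma8uaso4ahn \
  sha256:18b8f0d045edbf048c9dbe83ac8424ea95c594d6b970df0fb52bc8ed5d9ed410

# If the token has expired, print a fresh join command on the master:
#   kubeadm token create --print-join-command
```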

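Check the node status:

kubectl get nodes

At this point the nodes are NotReady; the pod network plugin still has to be configured.

10. Configure the CNI pod network plugin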

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

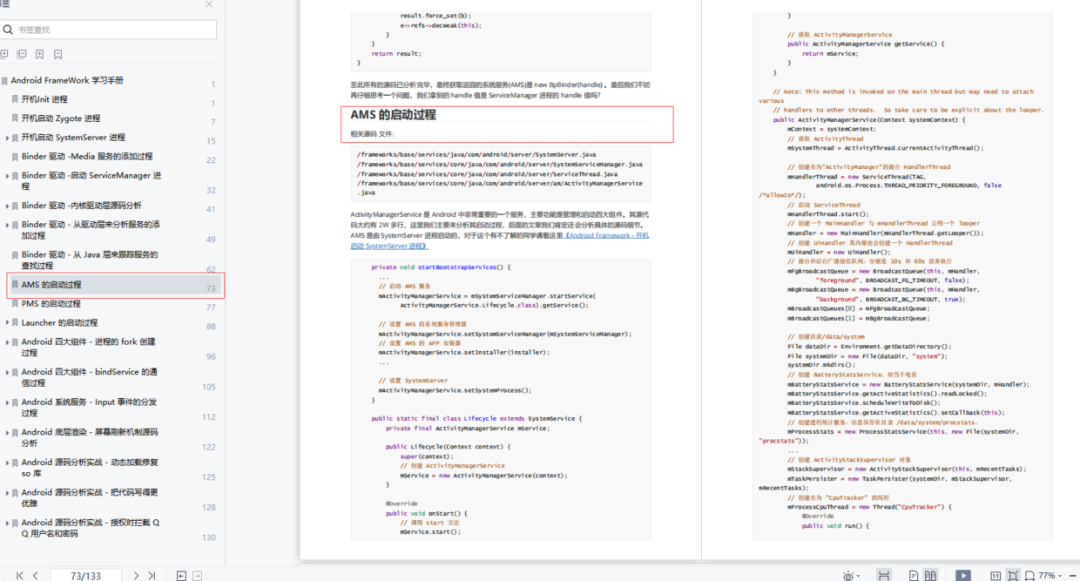

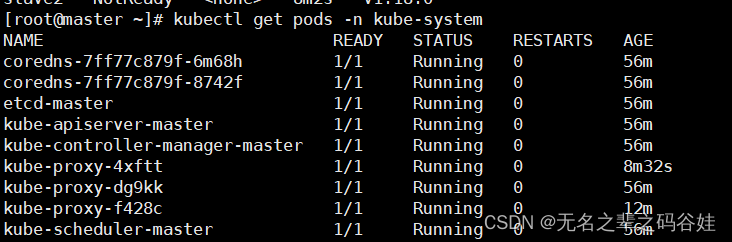

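Check the component download status:

kubectl get pods -n kube-system

If these two pods fail to download their images and never reach Running, pull the images manually.

coredns-7ff77c879f-6m68h 1/1 Running 0 57m
coredns-7ff77c879f-8742f 1/1 Running 0 57m

To see why a pod is stuck, describe it:

kubectl describe pod -n kube-system coredns-7ff77c879f-6m68h

Successful state: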

kubectl get nodes
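Nodes can take a short while to turn Ready after the network plugin is applied. Instead of re-running the command by hand, a small filter can list the stragglers. This is a sketch: `not_ready_nodes` is a hypothetical helper that reads the plain-text output of `kubectl get nodes --no-headers`, where the second column is STATUS.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready"
# (so "Ready,SchedulingDisabled" is also reported).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
# Or let kubectl block until every node is Ready (up to 5 minutes):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```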

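The cluster isn't really finished at this point. Let's create a container and see whether it runs.

Verify that nginx can be reached:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get pod,svc

Only now is the cluster set up successfully!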
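`kubectl get pod,svc` shows the service's PORT(S) column in the form `80:<nodePort>/TCP`, and nginx is then reachable on that node port at any node's IP. The sketch below pulls the port out and requests the page. `node_port_of` is a hypothetical helper, and the port 31234 in its comment is a made-up example value.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as
# "80:31234/TCP" (31234 is a made-up example).
node_port_of() {
  ports="$1"
  ports="${ports#*:}"   # drop the service port and the colon
  echo "${ports%%/*}"   # drop the protocol suffix
}

# Usage on the master (PORT(S) is the fifth column of `kubectl get svc`):
#   port=$(node_port_of "$(kubectl get svc nginx --no-headers | awk '{print $5}')")
#   curl -I "http://192.168.192.150:${port}"
```

The same value is also available without text parsing via `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.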

11. Uninstall kubelet, kubeadm, and kubectl

## If the setup goes wrong, uninstall everything and run the steps again. Run kubeadm reset first, while the kubeadm binary is still installed.

kubeadm reset -f

yum remove -y kubelet kubeadm kubectl

modprobe -r ipip

lsmod

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd

rm -rf /var/etcd
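Before reinstalling, it can help to confirm that nothing was left behind, since leftover files are what trigger the preflight errors in Problem 5. A minimal sketch: `leftover_k8s_paths` is a hypothetical helper that lists which of the system-wide cleanup targets still exist. The optional prefix argument is only there so it can be tried on a scratch directory, and the list also includes /var/lib/cni from the Problem 2 commands.

```shell
# Hypothetical helper: print the cleanup targets that still exist.
# With no argument it inspects the real filesystem root.
leftover_k8s_paths() {
  root="${1:-}"
  for p in /etc/kubernetes /etc/cni /opt/cni /var/lib/etcd /var/lib/cni \
           /etc/systemd/system/kubelet.service.d; do
    [ -e "$root$p" ] && echo "$p"
  done
  return 0
}

# Usage on a real host: empty output means the cleanup is complete.
#   leftover_k8s_paths
```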

Problem summary:

Problem 1: image issue

[root@master ~]# kubeadm init --apiserver-advertise-address=192.168.192.150 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.18.0--service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --v=5

I0508 20:31:14.877517 38501 initconfiguration.go:103] detected and using CRI socket: /var/run/dockershim.sock

invalid version "v1.18.0--service-cidr=10.96.0.0/12"

k8s.io/kubernetes/cmd/kubeadm/app/util.splitVersion

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/version.go:167

k8s.io/kubernetes/cmd/kubeadm/app/util.kubernetesReleaseVersion

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/version.go:78

k8s.io/kubernetes/cmd/kubeadm/app/util.KubernetesReleaseVersion

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/version.go:66

k8s.io/kubernetes/cmd/kubeadm/app/util/config.NormalizeKubernetesVersion

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/config/common.go:93

k8s.io/kubernetes/cmd/kubeadm/app/util/config.SetClusterDynamicDefaults

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/config/initconfiguration.go:149

k8s.io/kubernetes/cmd/kubeadm/app/util/config.SetInitDynamicDefaults

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/config/initconfiguration.go:56

k8s.io/kubernetes/cmd/kubeadm/app/util/config.DefaultedInitConfiguration

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/config/initconfiguration.go:188

k8s.io/kubernetes/cmd/kubeadm/app/util/config.LoadOrDefaultInitConfiguration

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/util/config/initconfiguration.go:222

k8s.io/kubernetes/cmd/kubeadm/app/cmd.newInitData

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/init.go:330

k8s.io/kubernetes/cmd/kubeadm/app/cmd.NewCmdInit.func3

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/init.go:191

k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).InitData

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow/runner.go:183

k8s.io/kubernetes/cmd/kubeadm/app/cmd.NewCmdInit.func1

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/init.go:139

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:826

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:914

k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:864

k8s.io/kubernetes/cmd/kubeadm/app.Run

/workspace/anago-v1.18.0-rc.1.21+8be33caaf953ac/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/kubeadm.go:50

main.main

_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/kubeadm.go:25

runtime.main

/usr/local/go/src/runtime/proc.go:203

runtime.goexit

/usr/local/go/src/runtime/asm_amd64.s:1357

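The log points at the actual cause: there is no space between v1.18.0 and --service-cidr in the command, so kubeadm reads "v1.18.0--service-cidr=10.96.0.0/12" as the version. Add the missing space and run the command again.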
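A quick format check on the version string catches this kind of slip before kubeadm does. This is a sketch for pinned versions like the one used in this post: `is_k8s_version` is a hypothetical helper and deliberately rejects anything that is not of the form `vMAJOR.MINOR.PATCH`.

```shell
# Hypothetical helper: accept only versions shaped like v1.18.0.
is_k8s_version() {
  printf '%s\n' "$1" | grep -Eq '^v[0-9]+\.[0-9]+\.[0-9]+$'
}

# Usage: the merged argument from the failing command is rejected.
is_k8s_version "v1.18.0--service-cidr=10.96.0.0/12" || echo "check the spacing between flags"
```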

Problem 2: health check fails:

The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get http://localhost:10248/healthz: dial tcp [::1]:10248: connect: connection refused.

### Problems 2 and 5 can usually be fixed with the commands below; the cause is generally swap still being on or network state left over from an earlier attempt

sudo swapoff -a

sudo kubeadm reset

sudo rm -rf /var/lib/cni/

sudo systemctl daemon-reload

sudo iptables -F && sudo iptables -t nat -F && sudo iptables -t mangle -F && sudo iptables -X

Problem 3: environment variable not found

[root@master opt]# kubectl get nodes

error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable

这一步需要在安装完kubectl 后启动后 执行这个命令

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

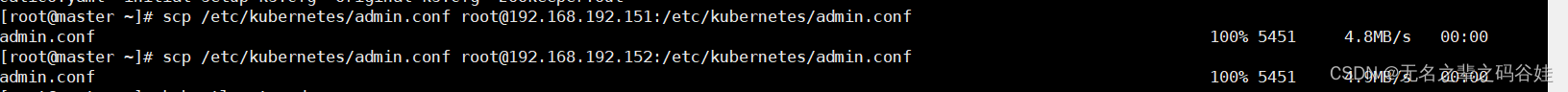

sudo chown $(id -u):$(id -g) $HOME/.kube/config问题四,从节点使用kubectl工具

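The worker node doesn't have the admin.conf file, so the copy fails there:

[root@slave1 network-scripts]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
cp: cannot stat ‘/etc/kubernetes/admin.conf’: No such file or directory

Copy the file over from the master node, then run the Problem 3 commands on the worker:

scp /etc/kubernetes/admin.conf root@192.168.192.151:/etc/kubernetes/admin.conf

scp /etc/kubernetes/admin.conf root@192.168.192.152:/etc/kubernetes/admin.conf

Problem 5: leftovers after uninstalling

Error on the worker node: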

error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

To see the stack trace of this error execute with --v=5 or higher

[root@slave1 network-scripts]# kubeadm join 192.168.192.150:6443 --token qtd0wr.7tkdtma8uaso4ahn \

> --discovery-token-ca-cert-hash sha256:18b8f0d045edbf048c9dbe83ac8424ea95c594d6b970df0fb52bc8ed5d9ed410

W0508 21:39:20.279566 38344 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

rm -f /etc/kubernetes/pki/ca.crt

rm -f /etc/kubernetes/kubelet.conf

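Free port 10250 by stopping the process that is listening on it (usually a leftover kubelet).

The usual causes are swap and node networking; the Problem 2 commands reset that state.

No one is born with talent; it all comes from hard work. (I'm Xiao Yang. Thank you for following and supporting me.)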