参考:SIFT图像匹配原理及python实现(源码实现及基于opencv实现)

#include <iostream>

#include <opencv2/opencv.hpp>

#define _USE_MATH_DEFINES

#include <math.h>

#include <numeric>

float mod_float(float x, float y) //小数取模

{

return x - floor(x / y) * y;

}

bool sort_method(const cv::KeyPoint kp1, const cv::KeyPoint kp2)

{

if (kp1.pt.x != kp2.pt.x)

return kp1.pt.x < kp2.pt.x;

if (kp1.pt.y != kp2.pt.y)

return kp1.pt.y < kp2.pt.y;

if (kp1.size != kp2.size)

return kp1.size < kp2.size;

if (kp1.angle != kp2.angle)

return kp1.angle < kp2.angle;

if (kp1.response != kp2.response)

return kp1.response < kp2.response;

if (kp1.octave != kp2.octave)

return kp1.octave < kp2.octave;

return kp1.class_id < kp2.class_id;

}

cv::Mat vec2mat(std::vector<std::vector<float>> vec) //二维vector转Mat

{

cv::Mat m(vec.size(), vec[0].size(), CV_32F);

for (int i = 0; i < vec.size(); ++i)

m.row(i) = cv::Mat(vec[i]).t();

return m;

}

class SIFT

{

public:

SIFT(int num_octave, int num_scale, float sigma)

{

m_num_octave = num_octave;

m_num_scale = num_scale;

m_sigma = sigma;

m_contrast_t = 0.04;

m_eigenvalue_r = 10;

m_scale_factor = 1.5;

m_radius_factor = 3;

m_num_bins = 36;

m_peak_ratio = 0.8;

}

cv::Mat pre_treat_img(cv::Mat img_src, float sigma, float sigma_camera = 0.5)

{

float sigma_mid = sqrt(pow(sigma, 2) - pow(2*sigma_camera, 2));

cv::Mat img = img_src.clone();

cv::resize(img, img, cv::Size(img.cols * 2, img.rows * 2));

cv::GaussianBlur(img, img, cv::Size(0, 0), sigma_mid, sigma_mid);

return img;

}

int get_numOfOctave(cv::Mat img)

{

return std::round(log(std::min(img.cols, img.rows)) / log(2)) - 1;

}

std::vector<cv::Mat> construct_octave(cv::Mat img_src, float s, float sigma)

{

std::vector<cv::Mat> octave;

octave.push_back(img_src);

float k = pow(2, 1 / s);

for (size_t i = 1; i < s + 3; i++)

{

cv::Mat img = octave.back().clone(), cur_img;

float cur_sigma = sigma * pow(k, i);

float pre_sigma = sigma * pow(k, i - 1);

float mid_sigma = sqrt(pow(cur_sigma, 2) - pow(pre_sigma, 2));

cv::GaussianBlur(img, cur_img, cv::Size(0, 0), mid_sigma, mid_sigma);

octave.push_back(cur_img);

}

return octave;

}

std::vector<std::vector<cv::Mat>> construct_gaussian_pyramid(cv::Mat img_src)

{

std::vector<std::vector<cv::Mat>> pyr;

cv::Mat img_base = img_src.clone();

for (size_t i = 0; i < m_num_octave; i++)

{

std::vector<cv::Mat> octave = construct_octave(img_base, m_num_scale, m_sigma);

pyr.push_back(octave);

img_base = octave[octave.size() - 3];

cv::resize(img_base, img_base, cv::Size(img_base.cols / 2, img_base.rows / 2));

}

return pyr;

}

std::vector<std::vector<cv::Mat>> construct_DOG(std::vector<std::vector<cv::Mat>> pyr)

{

std::vector<std::vector<cv::Mat>> dog_pyr;

for (size_t i = 0; i < pyr.size(); i++)

{

std::vector<cv::Mat> octave = pyr[i];

std::vector<cv::Mat> dog;

for (size_t j = 0; j < octave.size() - 1; j++)

{

cv::Mat diff = octave[j + 1] - octave[j];

dog.push_back(diff);

}

dog_pyr.push_back(dog);

}

return dog_pyr;

}

bool is_extreme(cv::Mat bot, cv::Mat mid, cv::Mat top, float thr)

{

float c = mid.at<float>(1, 1);

cv::Mat temp;

cv::vconcat(bot, mid, temp);

cv::vconcat(temp, top, temp);

//std::cout << bot << std::endl;

//std::cout << temp << std::endl;

if (c > thr)

{

int len = 0;

for (size_t i = 0; i < temp.rows; i++)

{

for (size_t j = 0; j < temp.cols; j++)

{

if (temp.at<float>(i, j) > c)

len++;

}

}

return len == 0;

}

else if (c < -thr)

{

int len = 0;

for (size_t i = 0; i < temp.rows; i++)

{

for (size_t j = 0; j < temp.cols; j++)

{

if (temp.at<float>(i, j) < c)

len++;

}

}

return len == 0;

}

return false;

}

cv::Mat get_gradient(cv::Mat arr)

{

//std::cout << arr << std::endl;

//std::cout << arr.at<cv::Vec3f>(1, 2)[1] << " " << arr.at<cv::Vec3f>(1, 0)[1] << std::endl;

float dx = (arr.at<cv::Vec3f>(1, 2)[1] - arr.at<cv::Vec3f>(1, 0)[1]) / 2;

float dy = (arr.at<cv::Vec3f>(2, 1)[1] - arr.at<cv::Vec3f>(0, 1)[1]) / 2;

float ds = (arr.at<cv::Vec3f>(1, 1)[2] - arr.at<cv::Vec3f>(1, 1)[0]) / 2;

cv::Mat ret = (cv::Mat_<float>(3, 1) << dx, dy, ds);

//std::cout << ret << std::endl;

return ret;

}

cv::Mat get_hessian(cv::Mat arr)

{

float dxx = arr.at<cv::Vec3f>(1, 2)[1] - 2 * arr.at<cv::Vec3f>(1, 1)[1] + arr.at<cv::Vec3f>(1, 0)[1];

float dyy = arr.at<cv::Vec3f>(2, 1)[1] - 2 * arr.at<cv::Vec3f>(1, 1)[1] + arr.at<cv::Vec3f>(0, 1)[1];

float dss = arr.at<cv::Vec3f>(1, 1)[2] - 2 * arr.at<cv::Vec3f>(1, 1)[1] + arr.at<cv::Vec3f>(1, 1)[0];

float dxy = 0.25 * (arr.at<cv::Vec3f>(0, 0)[1] + arr.at<cv::Vec3f>(2, 2)[1] - arr.at<cv::Vec3f>(0, 2)[1] - arr.at<cv::Vec3f>(2, 0)[1]);

float dxs = 0.25 * (arr.at<cv::Vec3f>(1, 0)[0] + arr.at<cv::Vec3f>(1, 2)[2] - arr.at<cv::Vec3f>(1, 2)[0] - arr.at<cv::Vec3f>(1, 0)[2]);

float dys = 0.25 * (arr.at<cv::Vec3f>(0, 1)[0] + arr.at<cv::Vec3f>(2, 1)[2] - arr.at<cv::Vec3f>(2, 1)[0] - arr.at<cv::Vec3f>(0, 1)[2]);

cv::Mat ret = (cv::Mat_<float>(3, 3) << dxx, dxy, dxs, dxy, dyy, dys, dxs, dys, dss);

//std::cout << ret << std::endl;

return ret;

}

std::vector<cv::Mat> fit_extreme(cv::Mat bot, cv::Mat mid, cv::Mat top)

{

//std::cout << bot << std::endl;

//std::cout << mid << std::endl;

//std::cout << top << std::endl;

std::vector<cv::Mat> arr_split = { bot, mid, top };

cv::Mat arr, g, h, rt;

cv::merge(arr_split, arr);

g = get_gradient(arr/255);

h = get_hessian(arr/255);

rt = -h.inv() * g;

//std::cout << rt << std::endl;

return { g,h,rt };

}

std::pair<cv::KeyPoint, int> try_fit_extreme(std::vector<cv::Mat> octave, int s, int i, int j, int board_width, int octave_index)

{

bool flag = false;

cv::Mat bot_img = octave[s - 1];

cv::Mat mid_img = octave[s];

cv::Mat top_img = octave[s + 1];

cv::Mat g, h, offset;

for (size_t n = 0; n < 5; n++)

{

//std::cout << bot_img(cv::Rect(j - 1, i - 1, 3, 3)) << std::endl;

std::vector<cv::Mat> vec = fit_extreme(bot_img(cv::Rect(j - 1, i - 1, 3, 3)),

mid_img(cv::Rect(j - 1, i - 1, 3, 3)),

top_img(cv::Rect(j - 1, i - 1, 3, 3)));

g = vec[0];

h = vec[1];

offset = vec[2];

//std::cout << offset << std::endl;

float max_value = 0;

for (size_t k = 0; k < offset.rows; k++)

{

if (max_value < fabs(offset.at<float>(k, 0)))

max_value = fabs(offset.at<float>(k, 0));

}

//std::cout << max_value << std::endl;

if (max_value < 0.5)

{

flag = true;

break;

}

s = round(s + offset.at<float>(2, 0));

i = round(i + offset.at<float>(1, 0));

j = round(j + offset.at<float>(0, 0));

//std::cout << s << " " << i << " " << j << std::endl;

if (i<board_width || i>bot_img.rows - board_width ||

j<board_width || j>bot_img.cols - board_width ||

s<1 || s>octave.size() - 2)

{

break;

}

}

if (!flag)

return {};

float ex_value = mid_img.at<float>(i, j) / 255 + 0.5 * g.dot(offset);

if (fabs(ex_value) * m_num_scale < m_contrast_t)

return {};

cv::Mat hxy = h(cv::Rect(0, 0, 2, 2));

float trace_h = cv::trace(hxy)[0];

float det_h = cv::determinant(hxy);

if(det_h > 0 && (pow(trace_h,2) / det_h) < (pow(m_eigenvalue_r + 1,2) / m_eigenvalue_r))

{

cv::KeyPoint kp;

kp.response = fabs(ex_value);

//std::cout << offset << std::endl;

i = i + offset.at<float>(1, 0);

j = j + offset.at<float>(0, 0);

kp.pt = cv::Point2f(j *1.0 / bot_img.cols, i*1.0 / bot_img.rows);

kp.size = m_sigma * pow(2, (s + offset.at<float>(2, 0)) / m_num_scale) * pow(2, octave_index);

kp.octave = octave_index + s * pow(2, 8) + int(round((offset.at<float>(2, 0) + 0.5) * 255)) * pow(2, 16);

return { kp, s };

}

return {};

}

std::vector<cv::KeyPoint> compute_orientation(cv::KeyPoint kp, int octave_index, cv::Mat img)

{

std::vector<cv::KeyPoint> keypoints_with_orientations;

float cur_scale = kp.size / pow(2, octave_index);

float radius = round(m_radius_factor * m_scale_factor * cur_scale);

float weight_sigma = -0.5 / pow(m_scale_factor * cur_scale, 2);

std::vector<float> raw_histogram(m_num_bins);

int cx = round(kp.pt.x * img.cols);

int cy = round(kp.pt.y * img.rows);

for (int y = cy - radius; y < cy + radius + 1; y++)

{

for (int x = cx - radius; x < cx + radius + 1; x++)

{

if(y > 0 && y < img.rows - 1 && x > 0 && x < img.cols - 1)

{

float dx = img.at<float>(y, x + 1) - img.at<float>(y, x - 1);

float dy = img.at<float>(y - 1, x) - img.at<float>(y + 1, x);

float mag = sqrt(dx * dx + dy * dy);

float angle = atan2(dy, dx) * 180 / M_PI;

if (angle < 0)

angle += 360;

int angle_index = round(angle / (360 / m_num_bins));

angle_index %= m_num_bins;

float weight = exp(weight_sigma * (pow(x - cx, 2) + pow(y - cy, 2)));

raw_histogram[angle_index] = raw_histogram[angle_index] + mag * weight;

}

}

}

std::vector<float> h = raw_histogram;

std::vector<float> ha2{ h[0], h[1] };

ha2.insert(ha2.begin(), h.begin() + 2, h.end());

std::vector<float> hm2{ h[h.size() - 2], h[h.size() - 1] };

hm2.insert(hm2.end(), h.begin(), h.end() - 2);

std::vector<float> ha1{ h[0] };

ha1.insert(ha1.begin(), h.begin() + 1, h.end());

std::vector<float> hm1{ h[h.size() - 1] };

hm1.insert(hm1.end(), h.begin(), h.end() - 1);

std::vector<float> smooth_histogram(h.size());

for (size_t i = 0; i < h.size(); i++)

{

smooth_histogram[i] = (ha2[i] + hm2[i] + 4 * (ha1[i] + hm1[i]) + 6 * h[i]) / 16;

}

std::vector<float> s = smooth_histogram;

float max_v = *std::max_element(s.begin(), s.end());

std::vector<float> s1{ s[s.size() - 1] };

s1.insert(s1.end(), s.begin(), s.end() - 1);

std::vector<float> s2{ s[0] };

s2.insert(s2.begin(), s.begin() + 1, s.end());

std::vector<int> index;

for (size_t i = 0; i < s.size(); i++)

{

if (s[i] >= s1[i] && s[i] >= s2[i])

index.push_back(i);

}

for (int i : index)

{

float peak_v = s[i];

if (peak_v >= m_peak_ratio * max_v)

{

float left_v = s[(i - 1) % m_num_bins < 0 ? (m_num_bins - 1) : (i - 1) % m_num_bins];

float right_v = s[(i + 1) % m_num_bins];

float index_fit = mod_float(i + 0.5 * (left_v - right_v) / (left_v + right_v - 2 * peak_v), m_num_bins);

float angle = 360 - index_fit *1.0 / m_num_bins * 360;

cv::KeyPoint new_kp;

new_kp.pt = kp.pt;

new_kp.size = kp.size;

new_kp.angle = angle;

new_kp.response = kp.response;

new_kp.octave = kp.octave;

keypoints_with_orientations.push_back(new_kp);

}

}

return keypoints_with_orientations;

}

std::vector<cv::KeyPoint> get_keypoints(std::vector<std::vector<cv::Mat>> gau_pyr, std::vector<std::vector<cv::Mat>> dog_pyr)

{

std::vector<cv::KeyPoint> keypoints;

float threshold = std::floor(0.5 * m_contrast_t / m_num_scale * 255);

for (size_t octave_index = 0; octave_index < dog_pyr.size(); octave_index++)

{

std::vector<cv::Mat> octave = dog_pyr[octave_index];

for (size_t s = 1; s < octave.size() - 1; s++)

{

std::cout << octave_index << " " << s << "************************************" << std::endl;

cv::Mat bot_img = octave[s - 1];

cv::Mat mid_img = octave[s];

cv::Mat top_img = octave[s + 1];

int board_width = 5;

int x_st = board_width, y_st = board_width;

int x_ed = bot_img.rows - board_width, y_ed = bot_img.cols - board_width;

for (int i = x_st; i < x_ed; i++)

{

for (int j = y_st; j < y_ed; j++)

{

//std::cout << i << " " << j << std::endl;

//cv::Mat tmp = bot_img(cv::Rect(j - 1, i - 1, 3, 3));

//std::cout << tmp << std::endl;

bool flag = is_extreme(bot_img(cv::Rect(j - 1, i - 1, 3, 3)),

mid_img(cv::Rect(j - 1, i - 1, 3, 3)),

top_img(cv::Rect(j - 1, i - 1, 3, 3)),

threshold);

//std::cout << flag << std::endl;

if (flag)

{

std::pair<cv::KeyPoint, int> reu = try_fit_extreme(octave, s, i, j, board_width, octave_index);

if (reu.first.size != 0)

{

cv::KeyPoint kp = reu.first;

int stemp = reu.second;

std::vector<cv::KeyPoint> kp_orientation = compute_orientation(kp, octave_index, gau_pyr[octave_index][stemp]);

for (auto k : kp_orientation)

keypoints.push_back(k);

//std::cout << octave_index << " " << s << " " << i << " " << j << " " << keypoints.size() << std::endl;

}

}

}

}

}

}

return keypoints;

}

std::vector<cv::KeyPoint> remove_duplicate_points(std::vector<cv::KeyPoint> keypoints)

{

std::sort(keypoints.begin(), keypoints.end(), sort_method);

std::vector<cv::KeyPoint> unique_keypoints = { keypoints[0] };

for (size_t i = 1; i < keypoints.size(); i++)

{

cv::KeyPoint last_unique_keypoint = unique_keypoints.back();

if (last_unique_keypoint.pt.x != keypoints[i].pt.x ||

last_unique_keypoint.pt.y != keypoints[i].pt.y ||

last_unique_keypoint.size != keypoints[i].size ||

last_unique_keypoint.angle != keypoints[i].angle)

{

unique_keypoints.push_back(keypoints[i]);

}

}

return unique_keypoints;

}

cv::Mat get_descriptor(std::vector<cv::KeyPoint> kps, std::vector<std::vector<cv::Mat>> gau_pyr,

int win_N = 4, int num_bins = 8, int scale_multiplier = 3, float des_max_value = 0.2)

{

std::vector<std::vector<float>> descriptors_vec;

for (auto kp : kps)

{

int octave = kp.octave & 255;

int layer = (kp.octave >> 8) & 255;

cv::Mat image = gau_pyr[octave][layer];

float bins_per_degree = float(num_bins / 360.0);

float angle = 360.0 - kp.angle;

float cos_angle = cos(angle * M_PI / 180);

float sin_angle = sin(angle * M_PI / 180);

float weight_multiplier = -0.5 / pow(0.5 * win_N, 2);

std::vector<float> row_bin_list;

std::vector<float> col_bin_list;

std::vector<float> magnitude_list;

std::vector<float> orientation_bin_list;

std::vector<std::vector<std::vector<float>>> histogram_tensor(win_N + 2, std::vector<std::vector<float>>(win_N + 2, std::vector<float>(num_bins, 0.0)));

float hist_width = scale_multiplier * kp.size / pow(2, octave);

int radius = int(round(hist_width * sqrt(2) * (win_N + 1) * 0.5));

radius = int(std::min(double(radius), sqrt(pow(image.rows, 2) + pow(image.cols, 2))));

for (int row = -radius; row < radius + 1; row++)

{

for (int col = -radius; col < radius + 1; col++)

{

float row_rot = col * sin_angle + row * cos_angle;

float col_rot = col * cos_angle - row * sin_angle;

float row_bin = (row_rot / hist_width) + 0.5 * win_N - 0.5;

float col_bin = (col_rot / hist_width) + 0.5 * win_N - 0.5;

if(row_bin > -1 && row_bin < win_N && col_bin > -1 && col_bin < win_N)

{

int window_col = int(round(kp.pt.x * image.cols + col));

int window_row = int(round(kp.pt.y * image.rows + row));

if(window_row > 0 && window_row < image.rows - 1 && window_col > 0 && window_col < image.cols - 1)

{

float dx = image.at<float>(window_row, window_col + 1) - image.at<float>(window_row, window_col - 1);

float dy = image.at<float>(window_row - 1, window_col) - image.at<float>(window_row + 1, window_col);

float gradient_magnitude = sqrt(dx * dx + dy * dy);

float gradient_orientation = mod_float(atan2(dy, dx) * 180 / M_PI, 360);

float weight = exp(weight_multiplier * (pow(row_rot / hist_width, 2) + pow(col_rot / hist_width, 2)));

row_bin_list.push_back(row_bin);

col_bin_list.push_back(col_bin);

magnitude_list.push_back(weight * gradient_magnitude);

orientation_bin_list.push_back((gradient_orientation - angle) * bins_per_degree);

}

}

}

}

for (size_t i = 0; i < row_bin_list.size(); i++)

{

int ri = floor(row_bin_list[i]), ci = floor(col_bin_list[i]), oi = floor(orientation_bin_list[i]);

float rf = row_bin_list[i] - ri, cf = col_bin_list[i] - ci, of = orientation_bin_list[i] - oi;

float c0 = magnitude_list[i] * (1 - rf);

float c1 = magnitude_list[i] * rf;

float c00 = c0 * (1 - cf);

float c01 = c0 * cf;

float c10 = c1 * (1 - cf);

float c11 = c1 * cf;

float c000 = c00 * (1 - of), c001 = c00 * of;

float c010 = c01 * (1 - of), c011 = c01 * of;

float c100 = c10 * (1 - of), c101 = c10 * of;

float c110 = c11 * (1 - of), c111 = c11 * of;

//std::cout << ri << " " << ci << " " << oi << std::endl;

if (oi < 0)

oi += num_bins;

histogram_tensor[ri + 1][ci + 1][oi] += c000;

histogram_tensor[ri + 1][ci + 1][(oi + 1) % num_bins] += c001;

histogram_tensor[ri + 1][ci + 2][oi] += c010;

histogram_tensor[ri + 1][ci + 2][(oi + 1) % num_bins] += c011;

histogram_tensor[ri + 2][ci + 1][oi] += c100;

histogram_tensor[ri + 2][ci + 1][(oi + 1) % num_bins] += c101;

histogram_tensor[ri + 2][ci + 2][oi] += c110;

histogram_tensor[ri + 2][ci + 2][(oi + 1) % num_bins] += c111;

}

std::vector<float> des_vec;//4*4*8

for (size_t i = 1; i < histogram_tensor.size() - 1; i++)

for (size_t j = 1; j < histogram_tensor[0].size() - 1; j++)

for (size_t k = 0; k < histogram_tensor[0][0].size(); k++)

des_vec.push_back(histogram_tensor[i][j][k]);

float norm = 0.0;

for (size_t i = 0; i < des_vec.size(); i++)

norm += pow(des_vec[i], 2);

norm = sqrt(norm);

for (size_t i = 0; i < des_vec.size(); i++)

des_vec[i] /= norm;

for (size_t i = 0; i < des_vec.size(); i++)

if (des_vec[i] > des_max_value)

des_vec[i] = des_max_value;

for (size_t i = 0; i < des_vec.size(); i++)

des_vec[i] = round(512 * des_vec[i]);

for (size_t i = 0; i < des_vec.size(); i++)

if (des_vec[i] < 0)

des_vec[i] = 0;

for (size_t i = 0; i < des_vec.size(); i++)

if (des_vec[i] > 255)

des_vec[i] = 255;

descriptors_vec.push_back(des_vec);

}

cv::Mat descriptors = vec2mat(descriptors_vec);

return descriptors;

}

std::pair<std::vector<cv::KeyPoint>, cv::Mat> do_sift(cv::Mat img_src)

{

cv::Mat img = img_src.clone();

img.convertTo(img, CV_32F);

img = pre_treat_img(img, m_sigma);

m_num_octave = get_numOfOctave(img);

std::vector<std::vector<cv::Mat>> gaussian_pyr = construct_gaussian_pyramid(img);

std::vector<std::vector<cv::Mat>> dog_pyr = construct_DOG(gaussian_pyr);

//std::cout << dog_pyr[0][0].at<float>(0, 0) << std::endl;

std::vector<cv::KeyPoint> keypoints = get_keypoints(gaussian_pyr, dog_pyr);

keypoints = remove_duplicate_points(keypoints);

cv::Mat descriptors = get_descriptor(keypoints, gaussian_pyr);

return { keypoints , descriptors };

}

cv::Mat do_match(cv::Mat img_src1, std::vector<cv::KeyPoint> kp1, cv::Mat des1,

cv::Mat img_src2, std::vector<cv::KeyPoint> kp2, cv::Mat des2,

int pt_flag = 0, int MIN_MATCH_COUNT = 10)

{

cv::FlannBasedMatcher flann(new cv::flann::KDTreeIndexParams(5), new cv::flann::SearchParams(50));

std::vector<std::vector<cv::DMatch>> matches;

flann.knnMatch(des1, des2, matches, 2);

std::vector<cv::DMatch> good_match;

for (auto m : matches)

{

if (m[0].distance < 0.7 * m[1].distance)

good_match.push_back(m[0]);

}

cv::Mat img1 = img_src1.clone();

cv::Mat img2 = img_src2.clone();

int h1 = img1.rows, w1 = img1.cols;

int h2 = img2.rows, w2 = img2.cols;

int new_w = w1 + w2;

int new_h = std::max(h1, h2);

cv::Mat new_img = cv::Mat::zeros(cv::Size(new_w, new_h), CV_8UC1);

int h_offset1 = int(0.5 * (new_h - h1));

int h_offset2 = int(0.5 * (new_h - h2));

cv::Mat roi1 = new_img(cv::Rect(0, h_offset1, w1, h1));

img1.copyTo(roi1);

cv::Mat roi2 = new_img(cv::Rect(w1, h_offset2, w2, h2));

img2.copyTo(roi2);

std::vector<cv::Point> src_pts;

std::vector<cv::Point> dst_pts;

if (good_match.size() > MIN_MATCH_COUNT)

{

for (auto m : good_match)

{

if (pt_flag == 0)

{

src_pts.push_back(cv::Point(kp1[m.queryIdx].pt.x * img1.cols, kp1[m.queryIdx].pt.y * img1.rows));

dst_pts.push_back(cv::Point(kp2[m.trainIdx].pt.x * img2.cols, kp2[m.trainIdx].pt.y * img2.rows));

}

else

{

src_pts.push_back(cv::Point(kp1[m.queryIdx].pt.x, kp1[m.queryIdx].pt.y));

dst_pts.push_back(cv::Point(kp2[m.trainIdx].pt.x, kp2[m.trainIdx].pt.y));

}

}

}

cv::cvtColor(new_img, new_img, cv::COLOR_GRAY2BGR);

for (size_t i = 0; i < src_pts.size(); i++)

{

cv::Point pt1(src_pts[i].x, src_pts[i].y + h_offset1);

cv::Point pt2(dst_pts[i].x + w1, dst_pts[i].y + h_offset2);

cv::line(new_img, pt1, pt2, (0, 0, 255));

}

return new_img;

}

private:

int m_num_octave;

int m_num_scale;

float m_sigma;

float m_contrast_t;

float m_eigenvalue_r;

float m_scale_factor;

float m_radius_factor;

int m_num_bins;

float m_peak_ratio;

};

int main()

{

//std::cout << -1 % 36 << std::endl;

cv::Mat img1 = cv::imread("box.png", 0);

cv::Mat img2 = cv::imread("box_in_scene.png", 0);

SIFT sift(3, 3, 1.6);

std::pair<std::vector<cv::KeyPoint>, cv::Mat> p1, p2;

p1 = sift.do_sift(img1);

p2 = sift.do_sift(img2);

std::vector<cv::KeyPoint> kp1, kp2;

kp1 = p1.first;

kp2 = p2.first;

cv::Mat des1, des2;

des1 = p1.second;

des2 = p2.second;

cv::Mat res;

res = sift.do_match(img1, kp1, des1, img2, kp2, des2, 0, 3);

cv::imwrite("res.png", res);

return 0;

}

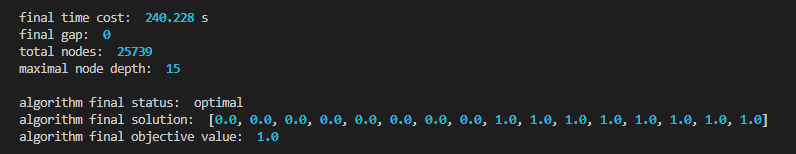

结果:

另一种实现,参考:opencv实战:SIFT的实现

这种实现比上面的实现方式更简略,主要差异在于把构建尺度空间以及生成极值点直接用Harris角点代替。

#include <iostream>

#include <numeric>

#include <opencv2/opencv.hpp>

#define _USE_MATH_DEFINES

#include <math.h>

class Sift

{

public:

Sift(cv::Mat img)

{

m_img = img.clone();

m_r = m_img.rows, m_c = m_img.cols;

m_gradient = cv::Mat::zeros(m_r, m_c, CV_32F);

m_angle = cv::Mat::zeros(m_r, m_c, CV_32F);

};

std::tuple<cv::Mat, cv::Mat, int> get_result()

{

cv::goodFeaturesToTrack(m_img, m_corners, 233, 0.01, 10);

//std::cout << corners << std::endl;

cv::GaussianBlur(m_img, m_img, cv::Size(5, 5), 1, 1);

m_img.convertTo(m_img, CV_32F);

grad(m_img);

m_bins = (m_r + m_c) / 80;

m_length = m_corners.rows;

m_feature = cv::Mat::zeros(m_length, 128, CV_32F);

vote();

for (size_t i = 0; i < m_length; i++)

{

cv::Point2f p(m_corners.at<float>(i, 1), m_corners.at<float>(i, 0));

std::vector<int> val = get_feature(p, m_direct[i]);

float m = 0;

for (float k : val)

m += k * k;

m = sqrt(m);

std::vector<float> l;

for (float k : val)

l.push_back(k / m);

cv::Mat temp_row = cv::Mat(l).reshape(1, 1);

//std::cout << temp_row << std::endl;

temp_row.copyTo(m_feature.row(i));

}

//std::cout << m_feature << std::endl;

return { m_feature, m_corners, m_length };

}

void grad(cv::Mat img)

{

int x = m_r, y = m_c;

cv::Mat kernel_x = (cv::Mat_<float>(3, 3) << -1, -1, -1, 0, 0, 0, 1, 1, 1) / 6;

cv::Mat kernel_y = (cv::Mat_<float>(3, 3) << -1, 0, 1, -1, 0, 1, -1, 0, 1) / 6;

cv::Mat gx, gy;

cv::filter2D(img, gx, -1, kernel_x);

cv::filter2D(img, gy, -1, kernel_y);

//std::cout << gx.at<float>(1, 0) << std::endl;

//std::cout << gy.at<float>(1, 0) << std::endl;

for (size_t i = 0; i < x; i++)

{

for (size_t j = 0; j < y; j++)

{

m_gradient.at<float>(i, j) = sqrt(pow(gx.at<float>(i, j), 2) + pow(gy.at<float>(i, j), 2));

m_angle.at<float>(i, j) = atan2(gy.at<float>(i, j), gx.at<float>(i, j));

}

}

//std::cout << gradient.at<float>(0, 1) << std::endl;

//std::cout << angle.at<float>(0, 1) << std::endl;

}

void vote()

{

for (size_t n = 0; n < m_length; n++)

{

int y = m_corners.at<float>(n, 0), x = m_corners.at<float>(n, 1);

std::vector<int> voting(37);

for (size_t i = std::max(x - m_bins, 0); i < std::min(x + m_bins + 1, m_r); i++)

{

for (size_t j = std::max(y - m_bins, 0); j < std::min(y + m_bins + 1, m_c); j++)

{

int k = int((m_angle.at<float>(i, j) + M_PI) / (M_PI / 18) + 1);

if (k >= 37)

k = 36;

voting[k] += m_gradient.at<float>(i, j);

}

}

int p = 1;

for (size_t i = 2; i < 37; i++)

{

if (voting[i] > voting[p])

p = i;

}

m_direct.push_back(float(p / 18.0 - 1 - 1.0 / 36) * M_PI);

}

}

float get_theta(float x, float y)

{

if ((x < 0 || x >= m_r) || (y < 0 || y >= m_c))

return 0;

float dif = m_angle.at<float>(x, y) - m_theta;

return dif > 0 ? dif : dif + 2 * M_PI;

}

float DB_linear(float x, float y)

{

int xx = int(x), yy = int(y);

float dx1 = x - xx, dx2 = xx + 1 - x;

float dy1 = y - yy, dy2 = yy + 1 - y;

float val = get_theta(xx, yy) * dx2 * dy2 + get_theta(xx + 1, yy) * dx1 * dy2 + get_theta(xx, yy + 1) * dx2 * dy1 + get_theta(xx + 1, yy + 1) * dx1 * dy1;

return val;

}

std::vector<int> cnt(int x1, int x2, int y1, int y2, int xsign, int ysign)

{

std::vector<int> voting(9);

for (size_t x = x1; x < x2; x++)

{

for (size_t y = y1; y < y2; y++)

{

cv::Mat p = m_H * x * xsign + m_V * y * ysign;

int bin = int((DB_linear(p.at<float>(0, 0) + m_pos.x, p.at<float>(0, 1) + m_pos.y)) / (M_PI / 4) + 1);

if (bin > 8)

bin = 8;

voting[bin] += 1;

}

}

std::vector<int> tmp(8);

std::copy(voting.begin()+1, voting.end(), tmp.begin());

return tmp;

}

std::vector<int> get_feature(cv::Point2f pos, float theta)

{

m_pos = pos;

m_theta = theta;

m_H = (cv::Mat_<float>(1, 2) << cos(m_theta), sin(m_theta));

m_V = (cv::Mat_<float>(1, 2) << -sin(m_theta), cos(m_theta));

m_bins = (m_r + m_c) / 150;

std::vector<int> val;

std::vector<int> tmp;

for (int xsign = -1; xsign <= 1; xsign += 2)

{

for (int ysign = -1; ysign <= 1; ysign += 2)

{

tmp = cnt(0, m_bins, 0, m_bins, xsign, ysign);

val.insert(val.end(), tmp.begin(), tmp.end());

tmp = cnt(m_bins, m_bins * 2, 0, m_bins, xsign, ysign);

val.insert(val.end(), tmp.begin(), tmp.end());

tmp = cnt(m_bins, m_bins * 2, m_bins, m_bins * 2, xsign, ysign);

val.insert(val.end(), tmp.begin(), tmp.end());

tmp = cnt(0, m_bins, m_bins, m_bins * 2, xsign, ysign);

val.insert(val.end(), tmp.begin(), tmp.end());

}

}

return val;

}

private:

int m_r;

int m_c;

int m_bins;

int m_length;

float m_theta;

cv::Mat m_img;

cv::Mat m_corners;

cv::Mat m_gradient;

cv::Mat m_angle;

cv::Mat m_H;

cv::Mat m_V;

cv::Mat m_feature;

cv::Point2f m_pos;

std::vector<float> m_direct;

};

cv::Mat merge(cv::Mat img1, cv::Mat img2)

{

int h1 = img1.rows, w1 = img1.cols;

int h2 = img2.rows, w2 = img2.cols;

cv::Mat img;

if (h1 < h2)

{

img = cv::Mat::zeros( h2, w1 + w2, CV_8UC3);

cv::Mat roi = img(cv::Rect(0, 0, img1.cols, img1.rows));

img1.copyTo(roi);

roi = img(cv::Rect(img1.cols, 0, img2.cols, img2.rows));

img2.copyTo(roi);

}

else if (h1 > h2)

{

img = cv::Mat::zeros(h1, w1 + w2, CV_8UC3);

cv::Mat roi = img(cv::Rect(0, 0, img1.cols, img1.rows));

img1.copyTo(roi);

roi = img(cv::Rect(img1.cols, 0, img2.cols, img2.rows));

img2.copyTo(roi);

}

return img;

}

int main(int argc, char** argv)

{

cv::Mat src = cv::imread("source.jpg", 1), src_gray;

cv::Mat tgt = cv::imread("target.jpg", 1), tgt_gray;

cv::cvtColor(src, src_gray, cv::COLOR_BGR2GRAY);

cv::cvtColor(tgt, tgt_gray, cv::COLOR_BGR2GRAY);

Sift sift_src(src_gray);

Sift sift_tgt(tgt_gray);

auto [fs ,cs ,ls] = sift_src.get_result();

auto [ft, ct, lt] = sift_tgt.get_result();

cv::Mat img = merge(tgt, src);

for (size_t i = 0; i < lt; i++)

{

std::vector<float> tmp;

for (size_t j = 0; j < ls; j++)

{

//std::cout << ft.row(i) << std::endl;

//std::cout << fs.row(j) << std::endl;

float sc = (ft.row(i)).dot(fs.row(j));

tmp.push_back(sc);

}

int b = std::max_element(tmp.begin(), tmp.end()) - tmp.begin();

float s = *std::max_element(tmp.begin(), tmp.end());

if (s < 0.8)

continue;

cv::Scalar color(rand() % 255, rand() % 255, rand() % 255);

cv::Point p_start(ct.at<float>(i, 0), ct.at<float>(i, 1));

cv::Point p_end(cs.at<float>(b, 0) + tgt.cols, cs.at<float>(b, 1));

cv::line(img, p_start, p_end, color, 1);

}

cv::imwrite("mysift.jpg", img);

return EXIT_SUCCESS;

}

结果: