基本思想:部署yolov8-seg到mnn、openvino、oak平台上,仅仅做记录

实验模型:链接: https://pan.baidu.com/s/1ilX1YMuhONkisKuGuiqvWw?pwd=75ti 提取码: 75ti

一、是用官方模型转onnx首先,然后进行sim一下,是用模型日期2023-04-22,下载模型然后生成onnx和openvino模型

ubuntu@ubuntu:~$ git clone https://github.com/ultralytics/ultralytics.git

是用它生成onnx和openvino /home/ubuntu/ultralytics/tests/test_python.py其中onnx我是用得optset=11,默认版本不确定能不能用,请自行尝试,大概率可以用,代码中是用了根据当前系统获取最新的onnx_optset版本

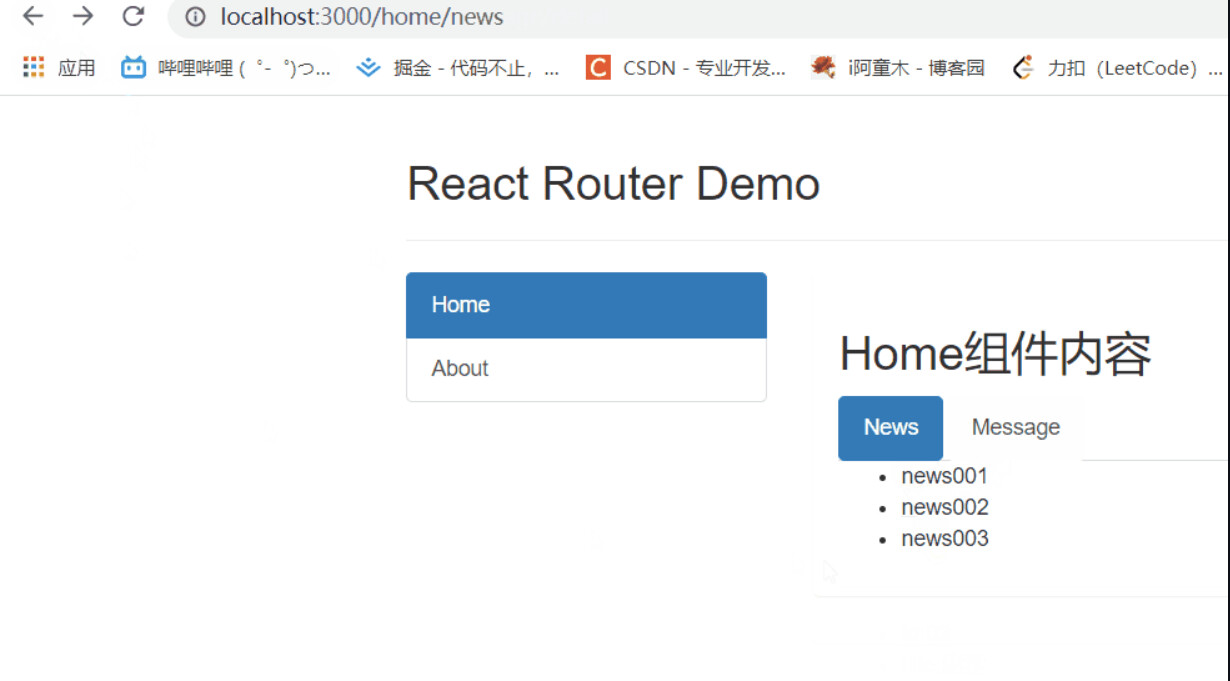

测试图片

转mnn模型

ubuntu@ubuntu:~/MNN/build$ ./MNNConvert -f ONNX --modelFile /home/ubuntu/ultralytics/yolov8n-seg_sim.onnx --MNNModel /home/ubuntu/ultralytics/yolov8n-seg_sim.mnn --bizCode MNN

The device support dot:0, support fp16:0, support i8mm: 0

Start to Convert Other Model Format To MNN Model...

[08:34:54] /home/ubuntu/MNN/tools/converter/source/onnx/onnxConverter.cpp:40: ONNX Model ir version: 6

Start to Optimize the MNN Net...

inputTensors : [ images, ]

outputTensors: [ output0, output1, ]

Converted Success!

cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(SparseInstClion)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fopenmp ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fopenmp")

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR})

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/MNN)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

#链接Opencv库

add_library(libmnn SHARED IMPORTED)

set_target_properties(libmnn PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libMNN.so)

add_executable(SparseInstClion main.cpp )

target_link_libraries(SparseInstClion ${OpenCV_LIBS} libmnn )main.cpp

#include<MNN/Interpreter.hpp>

#include<MNN/ImageProcess.hpp>

#include "opencv2/opencv.hpp"

#include <float.h>

#include <stdio.h>

#include <vector>

#define MAX_STRIDE 32 // if yolov8-p6 model modify to 64

using namespace std;

using namespace cv;

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

cv::Mat preprocess_img(cv::Mat &img, int input_w, int input_h, std::vector<int> &padsize) {

int w, h, x, y;

float r_w = input_w / (img.cols * 1.0);

float r_h = input_h / (img.rows * 1.0);

if (r_h > r_w) {//����ڸ�

w = input_w;

h = r_w * img.rows;

x = 0;

y = (input_h - h) / 2;

} else {

w = r_h * img.cols;

h = input_h;

x = (input_w - w) / 2;

y = 0;

}

cv::Mat re(h, w, CV_8UC3);

cv::resize(img, re, re.size(), 0, 0, cv::INTER_LINEAR);

cv::Mat out(input_h, input_w, CV_8UC3, cv::Scalar(128, 128, 128));

re.copyTo(out(cv::Rect(x, y, re.cols, re.rows)));

padsize.push_back(h);

padsize.push_back(w);

padsize.push_back(y);

padsize.push_back(x);// int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

return out;

}

void DrawPred(Mat &img, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < 80; i++) {//CLASSES

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

int main() {

const char *imagepath = "../image/bus.jpg";

cv::Mat bgr = cv::imread(imagepath);

if (bgr.empty()) {

fprintf(stderr, "cv::imread %s failed\n", imagepath);

return -1;

}

cv::Mat src = bgr;

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

Mat pr_img0, pr_img;

const int INPUT_H = 640;

const int INPUT_W = 640;

std::vector<int> padsize;

pr_img = preprocess_img(src, INPUT_H, INPUT_W, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float) src.rows / newh;

float ratio_w = (float) src.cols / neww;

const int _segWidth = 160;

const int _segHeight = 160;

const int _segChannels = 32;

const int CLASSES = 80;

const int Num_box = 8400;

const float CONF_THRESHOLD = 0.1;

const float NMS_THRESHOLD = 0.5;

const float MASK_THRESHOLD = 0.5;

const int OUTPUT_SIZE = Num_box * (CLASSES + 4 + _segChannels);//output0

const int OUTPUT_SIZE1 = _segChannels * _segWidth * _segHeight;//output1

float prob[OUTPUT_SIZE];

float prob1[OUTPUT_SIZE1];

// MNN inference

auto mnnNet = std::shared_ptr<MNN::Interpreter>(

MNN::Interpreter::createFromFile("/home/ubuntu/ultralytics/yolov8n-seg_sim.mnn"));

auto t1 = std::chrono::steady_clock::now();

MNN::ScheduleConfig netConfig;

netConfig.type = MNN_FORWARD_CPU;

netConfig.numThread = 4;

auto session = mnnNet->createSession(netConfig);

auto input = mnnNet->getSessionInput(session, nullptr);

mnnNet->resizeTensor(input, {1, 3, (int) INPUT_H, (int) INPUT_W});

mnnNet->resizeSession(session);

MNN::CV::ImageProcess::Config config;

const float mean_vals[3] = {0, 0, 0};

const float norm_255[3] = {1.f / 255.f, 1.f / 255.f, 1.f / 255.f};

std::shared_ptr<MNN::CV::ImageProcess> pretreat(

MNN::CV::ImageProcess::create(MNN::CV::BGR, MNN::CV::RGB, mean_vals, 3,

norm_255, 3));

pretreat->convert(pr_img.data, (int) INPUT_H, (int) INPUT_W, pr_img.step[0], input);

mnnNet->runSession(session);

auto SparseInst_scores = mnnNet->getSessionOutput(session, "output0");

MNN::Tensor scoresHost(SparseInst_scores, SparseInst_scores->getDimensionType());

SparseInst_scores->copyToHostTensor(&scoresHost);

//

// std::vector<float> vec_host_scores;

for (int i = 0; i < scoresHost.elementSize(); i++) {

prob[i] = scoresHost.host<float>()[i];

}

auto t2 = std::chrono::steady_clock::now();

//毫秒级

double dr_ms = std::chrono::duration<double, std::milli>(t2 - t1).count();

std::cout << dr_ms << " ms" << std::endl;

std::vector<float> vec_scores;

std::vector<float> vec_new_scores;

std::vector<int> vec_labels;

int scoresHost_shape_c = scoresHost.channel();

int scoresHost_shape_d = scoresHost.dimensions();

int scoresHost_shape_w = scoresHost.width();

int scoresHost_shape_h = scoresHost.height();

printf("shape_d=%d shape_c=%d shape_h=%d shape_w=%d scoresHost.elementSize()=%d\n", scoresHost_shape_d,

scoresHost_shape_c, scoresHost_shape_h, scoresHost_shape_w, scoresHost.elementSize());

auto SparseInst_masks = mnnNet->getSessionOutput(session, "output1");

MNN::Tensor masksHost(SparseInst_masks, SparseInst_masks->getDimensionType());

SparseInst_masks->copyToHostTensor(&masksHost);

for (int i = 0; i < masksHost.elementSize(); i++) {

prob1[i] = masksHost.host<float>()[i];

}

// std::vector<float> vec_host_masks;

// for (int i = 0; i < masksHost.elementSize(); i++) {

// vec_host_masks.emplace_back(masksHost.host<float>()[i]);

// }

int masksHost_shape_c = masksHost.channel();

int masksHost_shape_d = masksHost.dimensions();

int masksHost_shape_w = masksHost.width();

int masksHost_shape_h = masksHost.height();

printf("shape_d=%d shape_c=%d shape_h=%d shape_w=%d masksHost.elementSize()=%d\n", masksHost_shape_d,

masksHost_shape_c, masksHost_shape_h, masksHost_shape_w, masksHost.elementSize());

//pretty_print(result1, prob1);

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = CLASSES + 4 + _segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

auto start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, CLASSES)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float) max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + CLASSES, 1, _segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int) w;

int height = (int) h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

//printf("ORI %d %d %d %d\n", boxes[idx].x, boxes[idx].y, boxes[idx].width, boxes[idx].height);

//printf("W %d %d %d %d\n", holeImgRect.x, holeImgRect.y, holeImgRect.width, holeImgRect.height);

result.box = boxes[idx] & holeImgRect;

//printf("%d %d %d %d\n", result.box.x, result.box.y, result.box.width, result.box.height);

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

if(!maskProposals.empty()) {

Mat protos = Mat(_segChannels, _segWidth * _segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), {_segWidth, _segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / INPUT_W * _segWidth), int((float) padh / INPUT_H * _segHeight), int(

_segWidth - padw / 2), int(_segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

auto end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms"

<< std::endl;

DrawPred(src, output);

}

cv::imshow("output", src);

cv::imwrite("a.jpg",src);

char c = cv::waitKey(0);

return 0;

}

测试结果

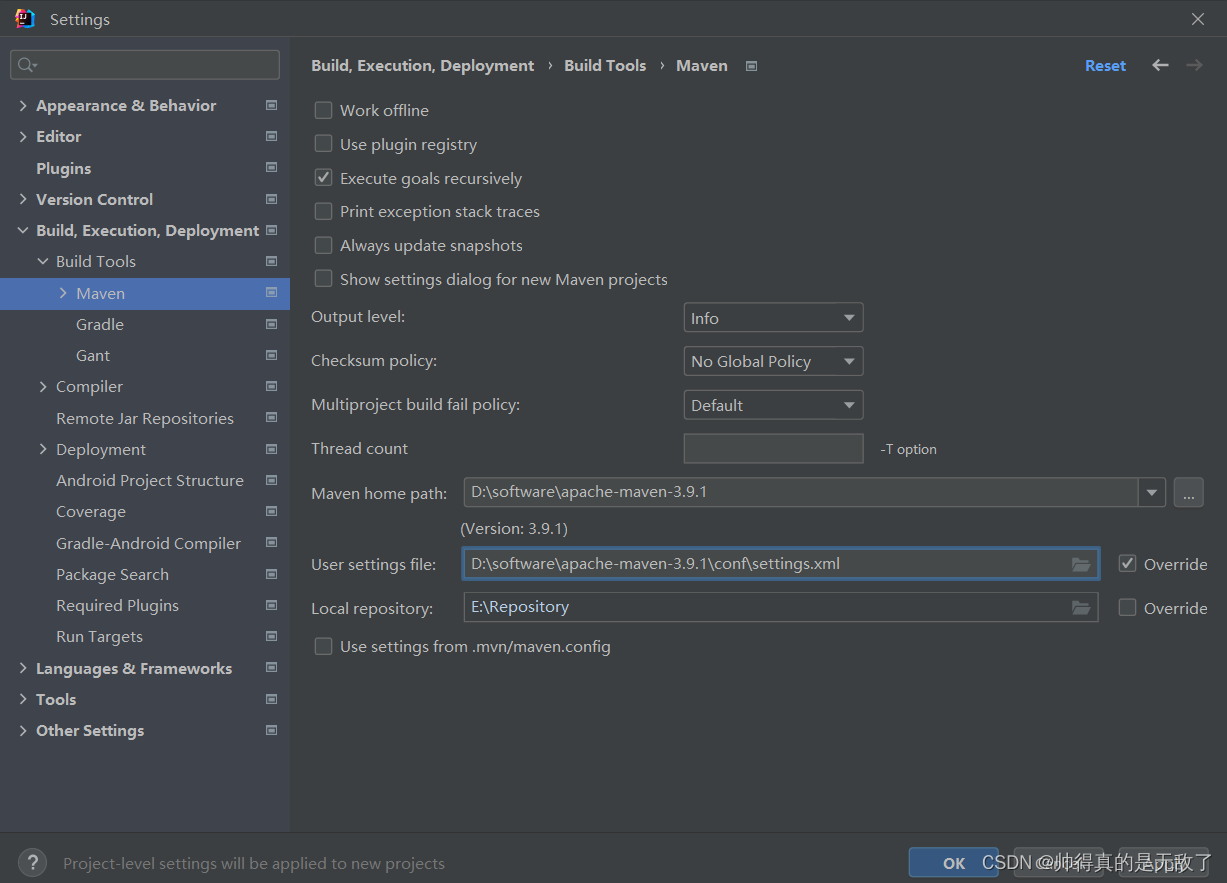

二、转openvino测试

比较奇怪,是用官方转的openvino可能是版本太高,无法是用,我是用自己得转得onnx在本地转得openvino模型,测试官方得xml存在这个错误

/home/ubuntu/openvino/cmake-build-debug/untitled23

宽高:810 1080

terminate called after throwing an instance of 'InferenceEngine::GeneralError'

what(): Unknown model format! Cannot find reader for model format: xml and read the model: /home/ubuntu/ultralytics/yolov8n-seg_openvino_model/yolov8n-seg.xml. Please check that reader library exists in your PATH.

Process finished with exit code 134 (interrupted by signal 6: SIGABRT)然后参考我之前得博客配置环境

https://sxj731533730.blog.csdn.net/article/details/124772210

然后转openvino可用

ubuntu@ubuntu:/opt/intel/openvino_2021/deployment_tools/model_optimizer$ python3 mo.py --input_shape [1,3,640,640] --input_model /home/ubuntu/ultralytics/yolov8n-seg.onnx --data_type FP16 --output_dir=/home/ubuntu/ultralytics/

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/ubuntu/ultralytics/yolov8n-seg.onnx

- Path for generated IR: /home/ubuntu/ultralytics/

- IR output name: yolov8n-seg

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,3,640,640]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

ONNX specific parameters:

- Inference Engine found in: /opt/intel/openvino_2021/python/python3.8/openvino

Inference Engine version: 2021.4.1-3926-14e67d86634-releases/2021/4

Model Optimizer version: 2021.4.1-3926-14e67d86634-releases/2021/4

[ WARNING ]

Detected not satisfied dependencies:

numpy: installed: 1.23.4, required: < 1.20

Please install required versions of components or use install_prerequisites script

/opt/intel/openvino_2021.4.689/deployment_tools/model_optimizer/install_prerequisites/install_prerequisites_onnx.sh

Note that install_prerequisites scripts may install additional components.

/opt/intel/openvino_2021/deployment_tools/model_optimizer/extensions/front/onnx/parameter_ext.py:20: DeprecationWarning: `mapping.TENSOR_TYPE_TO_NP_TYPE` is now deprecated and will be removed in a future release.To silence this warning, please use `helper.tensor_dtype_to_np_dtype` instead.

'data_type': TENSOR_TYPE_TO_NP_TYPE[t_type.elem_type]

/opt/intel/openvino_2021/deployment_tools/model_optimizer/extensions/analysis/boolean_input.py:13: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

nodes = graph.get_op_nodes(op='Parameter', data_type=np.bool)

/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/front/common/partial_infer/concat.py:36: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

mask = np.zeros_like(shape, dtype=np.bool)

[ WARNING ] Const node '/model.10/Resize/Add_input_port_1/value323515685' returns shape values of 'float64' type but it must be integer or float32. During Elementwise type inference will attempt to cast to float32

[ WARNING ] Const node '/model.13/Resize/Add_input_port_1/value326915688' returns shape values of 'float64' type but it must be integer or float32. During Elementwise type inference will attempt to cast to float32

[ WARNING ] Changing Const node '/model.10/Resize/Add_input_port_1/value323516047' data type from float16 to <class 'numpy.float32'> for Elementwise operation

[ WARNING ] Changing Const node '/model.13/Resize/Add_input_port_1/value326915795' data type from float16 to <class 'numpy.float32'> for Elementwise operation

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /home/ubuntu/ultralytics/yolov8n-seg.xml

[ SUCCESS ] BIN file: /home/ubuntu/ultralytics/yolov8n-seg.bin

[ SUCCESS ] Total execution time: 7.38 seconds.

[ SUCCESS ] Memory consumed: 127 MB.

It's been a while, check for a new version of Intel(R) Distribution of OpenVINO(TM) toolkit here https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit/download.html?cid=other&source=prod&campid=ww_2021_bu_IOTG_OpenVINO-2021-4-LTS&content=upg_all&medium=organic or on the GitHub*

cmakelists.txt

cmake_minimum_required(VERSION 3.4.1)

set(CMAKE_CXX_STANDARD 14)

project(untitled23)

find_package(OpenCV REQUIRED)

find_package(ngraph REQUIRED)

find_package(InferenceEngine REQUIRED)

include_directories(

${OpenCV_INCLUDE_DIRS}

${CMAKE_CURRENT_SOURCE_DIR}

${CMAKE_CURRENT_BINARY_DIR}

)

add_executable(untitled23 main.cpp)

target_link_libraries(

untitled23

${InferenceEngine_LIBRARIES}

${NGRAPH_LIBRARIES}

${OpenCV_LIBS}

)main.cpp

#include "opencv2/opencv.hpp"

#include <float.h>

#include <stdio.h>

#include <vector>

#include <opencv2/opencv.hpp>

#include <inference_engine.hpp>

#include <iostream>

#include <chrono>

#include <opencv2/dnn/dnn.hpp>

#include <cmath>

using namespace std;

using namespace cv;

using namespace InferenceEngine;

using namespace std;

using namespace cv;

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

cv::Mat preprocess_img(cv::Mat &img, int input_w, int input_h, std::vector<int> &padsize) {

int w, h, x, y;

float r_w = input_w / (img.cols * 1.0);

float r_h = input_h / (img.rows * 1.0);

if (r_h > r_w) {//����ڸ�

w = input_w;

h = r_w * img.rows;

x = 0;

y = (input_h - h) / 2;

} else {

w = r_h * img.cols;

h = input_h;

x = (input_w - w) / 2;

y = 0;

}

cv::Mat re(h, w, CV_8UC3);

cv::resize(img, re, re.size(), 0, 0, cv::INTER_LINEAR);

cv::Mat out(input_h, input_w, CV_8UC3, cv::Scalar(128, 128, 128));

re.copyTo(out(cv::Rect(x, y, re.cols, re.rows)));

padsize.push_back(h);

padsize.push_back(w);

padsize.push_back(y);

padsize.push_back(x);// int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

return out;

}

void DrawPred(Mat &img, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < 80; i++) {//CLASSES

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

int main() {

const char *imagepath = "/home/ubuntu/ultralytics/ultralytics/assets/bus.jpg";

cv::Mat bgr = cv::imread(imagepath);

if (bgr.empty()) {

fprintf(stderr, "cv::imread %s failed\n", imagepath);

return -1;

}

cv::Mat src = bgr;

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

Mat pr_img0, pr_img;

const int INPUT_H = 640;

const int INPUT_W = 640;

std::vector<int> padsize;

pr_img = preprocess_img(src, INPUT_H, INPUT_W, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float) src.rows / newh;

float ratio_w = (float) src.cols / neww;

const int _segWidth = 160;

const int _segHeight = 160;

const int _segChannels = 32;

const int CLASSES = 80;

const int Num_box = 8400;

const float CONF_THRESHOLD = 0.1;

const float NMS_THRESHOLD = 0.5;

const float MASK_THRESHOLD = 0.5;

const int OUTPUT_SIZE = Num_box * (CLASSES + 4 + _segChannels);//output0

const int OUTPUT_SIZE1 = _segChannels * _segWidth * _segHeight;//output1

float prob[OUTPUT_SIZE];

float prob1[OUTPUT_SIZE1];

//获取可执行网络

string _xml_path = "/home/ubuntu/ultralytics/yolov8n-seg.xml";

ExecutableNetwork _network;

OutputsDataMap _outputinfo;

//参数区

double _cof_threshold = 0.3;

double _nms_area_threshold = 0.5;

Core ie;

auto cnnNetwork = ie.ReadNetwork(_xml_path);

//输入设置

InputsDataMap inputInfo(cnnNetwork.getInputsInfo());

InputInfo::Ptr& input = inputInfo.begin()->second;

string _input_name = inputInfo.begin()->first;

input->setPrecision(Precision::FP32);

input->getInputData()->setLayout(Layout::NCHW);

ICNNNetwork::InputShapes inputShapes = cnnNetwork.getInputShapes();

SizeVector& inSizeVector = inputShapes.begin()->second;

cnnNetwork.reshape(inputShapes);

//输出设置

_outputinfo = OutputsDataMap(cnnNetwork.getOutputsInfo());

for (auto &output : _outputinfo) {

output.second->setPrecision(Precision::FP32);

}

//获取可执行网络

//_network = ie.LoadNetwork(cnnNetwork, "GPU");

_network = ie.LoadNetwork(cnnNetwork, "CPU");

//_network = ie.LoadNetwork(cnnNetwork, "GPU");

_network = ie.LoadNetwork(cnnNetwork, "CPU");

size_t img_size = INPUT_W*INPUT_W;

// MNN inference

InferRequest::Ptr infer_request = _network.CreateInferRequestPtr();

Blob::Ptr frameBlob = infer_request->GetBlob(_input_name);

InferenceEngine::LockedMemory<void> blobMapped = InferenceEngine::as<InferenceEngine::MemoryBlob>(frameBlob)->wmap();

float* blob_data = blobMapped.as<float*>();

//nchw

for(size_t row =0;row<640;row++){

for(size_t col=0;col<640;col++){

for(size_t ch =0;ch<3;ch++){

blob_data[img_size*ch + row*640 + col] = float(pr_img.at<Vec3b>(row,col)[ch])/255.0f;

}

}

}

//执行预测

infer_request->Infer();

for (auto &output : _outputinfo) {

auto output_name = output.first;

Blob::Ptr blob = infer_request->GetBlob(output_name);

LockedMemory<const void> blobMapped = as<MemoryBlob>(blob)->rmap();

const float *output_blob = blobMapped.as<float *>();

if(strcmp(output_name.c_str(),"output0")==0){

for(int i=0;i<OUTPUT_SIZE;i++)

prob[i]=output_blob[i];

}

if(strcmp(output_name.c_str(),"output1")==0){

for(int i=0;i<OUTPUT_SIZE1;i++)

prob1[i]=output_blob[i];

}

std::cout<<output_name<<std::endl;

}

//pretty_print(result1, prob1);

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = CLASSES + 4 + _segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

auto start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, CLASSES)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float) max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + CLASSES, 1, _segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int) w;

int height = (int) h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

//printf("ORI %d %d %d %d\n", boxes[idx].x, boxes[idx].y, boxes[idx].width, boxes[idx].height);

//printf("W %d %d %d %d\n", holeImgRect.x, holeImgRect.y, holeImgRect.width, holeImgRect.height);

result.box = boxes[idx] & holeImgRect;

//printf("%d %d %d %d\n", result.box.x, result.box.y, result.box.width, result.box.height);

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

if(!maskProposals.empty()) {

Mat protos = Mat(_segChannels, _segWidth * _segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), {_segWidth, _segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / INPUT_W * _segWidth), int((float) padh / INPUT_H * _segHeight), int(

_segWidth - padw / 2), int(_segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

auto end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms"

<< std::endl;

DrawPred(src, output);

}

cv::imshow("output", src);

cv::imwrite("a.jpg",src);

char c = cv::waitKey(0);

return 0;

}

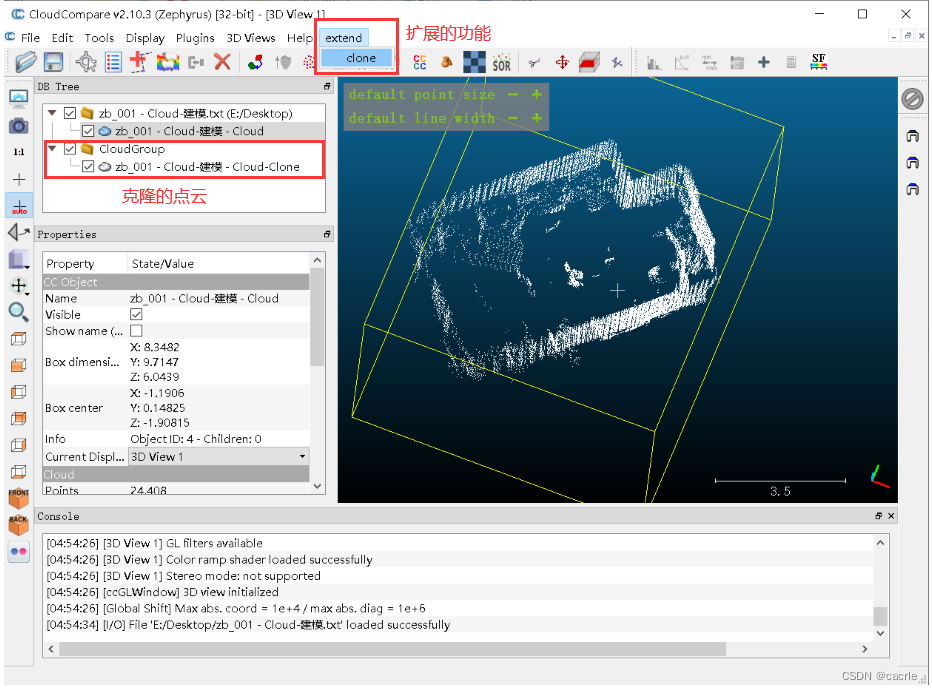

clion测试结果

三、oak测试结果

ubuntu@ubuntu:~$ python3 /opt/intel/openvino_2021/deployment_tools/model_optimizer/mo.py --input_model /home/ubuntu/ultralytics/yolov8n-seg.onnx --output_dir /home/ubuntu/ultralytics/F16_OAK --input_shape [1,3,640,640] --data_type FP16 --scale_values [255.0,255.0,255.0] --mean_values [0,0,0]

ubuntu@ubuntu:/opt/intel/openvino_2021/deployment_tools/tools/compile_tool$ ./compile_tool -m /home/ubuntu/ultralytics/F16_OAK/yolov8n-seg.xml -ip U8 -d MYRIAD -VPU_NUMBER_OF_SHAVES 4 -VPU_NUMBER_OF_CMX_SLICES 4

ubuntu@ubuntu:/opt/intel/openvino_2021/deployment_tools/tools/compile_tool$ cp yolov8n-seg.blob /home/ubuntu/ultralytics/F16_OAK/cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(untitled15)

set(CMAKE_CXX_STANDARD 11)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/utility)

#链接Opencv库

find_package(depthai CONFIG REQUIRED)

add_executable(untitled15 main.cpp include/utility/utility.cpp)

target_link_libraries(untitled15 ${OpenCV_LIBS} depthai::opencv )main.cpp

#include <stdio.h>

#include <string>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include "utility.hpp"

#include <vector>

#include "depthai/depthai.hpp"

using namespace std;

using namespace std::chrono;

using namespace cv;

using namespace std;

using namespace cv;

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

cv::Mat preprocess_img(cv::Mat &img, int input_w, int input_h, std::vector<int> &padsize) {

int w, h, x, y;

float r_w = input_w / (img.cols * 1.0);

float r_h = input_h / (img.rows * 1.0);

if (r_h > r_w) {//����ڸ�

w = input_w;

h = r_w * img.rows;

x = 0;

y = (input_h - h) / 2;

} else {

w = r_h * img.cols;

h = input_h;

x = (input_w - w) / 2;

y = 0;

}

cv::Mat re(h, w, CV_8UC3);

cv::resize(img, re, re.size(), 0, 0, cv::INTER_LINEAR);

cv::Mat out(input_h, input_w, CV_8UC3, cv::Scalar(128, 128, 128));

re.copyTo(out(cv::Rect(x, y, re.cols, re.rows)));

padsize.push_back(h);

padsize.push_back(w);

padsize.push_back(y);

padsize.push_back(x);// int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

return out;

}

void DrawPred(Mat &img, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < 80; i++) {//CLASSES

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

int main(int argc, char **argv) {

Mat pr_img0, pr_img;

const int INPUT_H = 640;

const int INPUT_W = 640;

const int _segWidth = 160;

const int _segHeight = 160;

const int _segChannels = 32;

const int CLASSES = 80;

const int Num_box = 8400;

const float CONF_THRESHOLD = 0.1;

const float NMS_THRESHOLD = 0.5;

const float MASK_THRESHOLD = 0.5;

const int OUTPUT_SIZE = Num_box * (CLASSES + 4 + _segChannels);//output0

const int OUTPUT_SIZE1 = _segChannels * _segWidth * _segHeight;//output1

float prob[OUTPUT_SIZE];

float prob1[OUTPUT_SIZE1];

int target_width=640;

int target_height=640;

dai::Pipeline pipeline;

//定义

auto cam = pipeline.create<dai::node::ColorCamera>();

cam->setBoardSocket(dai::CameraBoardSocket::RGB);

cam->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

cam->setFps(60);

cam->setPreviewSize(target_height, target_width); // NN input

cam->setInterleaved(false);

auto net = pipeline.create<dai::node::NeuralNetwork>();

dai::OpenVINO::Blob blob("/home/ubuntu/ultralytics/F16_OAK/yolov8n-seg.blob");

net->setBlob(blob);

net->input.setBlocking(false);

//基本熟练明白oak的函数使用了

cam->preview.link(net->input);

//定义输出

auto xlinkParserOut = pipeline.create<dai::node::XLinkOut>();

xlinkParserOut->setStreamName("parseOut");

auto xlinkoutOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutOut->setStreamName("out");

auto xlinkoutpassthroughOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutpassthroughOut->setStreamName("passthrough");

net->out.link(xlinkParserOut->input);

net->passthrough.link(xlinkoutpassthroughOut->input);

//结构推送相机

dai::Device device(pipeline);

//取帧显示

auto outqueue = device.getOutputQueue("passthrough", 8, false);//maxsize 代表缓冲数据

auto detqueue = device.getOutputQueue("parseOut", 8, false);//maxsize 代表缓冲数据

int counter = 0;

float fps = 0;

auto startTime = steady_clock::now();

while (true) {

counter++;

auto currentTime = steady_clock::now();

auto elapsed = duration_cast<duration<float>>(currentTime - startTime);

if (elapsed > seconds(1)) {

fps = counter / elapsed.count();

counter = 0;

startTime = currentTime;

}

auto ImgFrame = outqueue->get<dai::ImgFrame>();

auto src = ImgFrame->getCvFrame();

std::vector<int> padsize;

pr_img = preprocess_img(src, INPUT_H, INPUT_W, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float) src.rows / newh;

float ratio_w = (float) src.cols / neww;

auto inNN = detqueue->get<dai::NNData>();

if( inNN) {

std::cout << "Output layer names: ";

for(const auto& output_name : inNN->getAllLayerNames()) {

std::cout << output_name << ", ";

auto output_blob=inNN->getLayerFp16(output_name);

if(strcmp(output_name.c_str(),"output0")==0){

for(int i=0;i<OUTPUT_SIZE;i++)

prob[i]=(float)output_blob[i];

}

if(strcmp(output_name.c_str(),"output1")==0){

for(int i=0;i<OUTPUT_SIZE1;i++)

prob1[i]=(float)output_blob[i];

}

}

// printOutputLayersOnce = false;

}

//pretty_print(result1, prob1);

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = CLASSES + 4 + _segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

auto start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, CLASSES)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float) max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + CLASSES, 1, _segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int) w;

int height = (int) h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

//printf("ORI %d %d %d %d\n", boxes[idx].x, boxes[idx].y, boxes[idx].width, boxes[idx].height);

//printf("W %d %d %d %d\n", holeImgRect.x, holeImgRect.y, holeImgRect.width, holeImgRect.height);

result.box = boxes[idx] & holeImgRect;

//printf("%d %d %d %d\n", result.box.x, result.box.y, result.box.width, result.box.height);

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

if(!maskProposals.empty()) {

Mat protos = Mat(_segChannels, _segWidth * _segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), {_segWidth, _segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / INPUT_W * _segWidth), int((float) padh / INPUT_H * _segHeight), int(

_segWidth - padw / 2), int(_segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

auto end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms"

<< std::endl;

DrawPred(src, output);

}

std::stringstream fpsStr;

fpsStr << "NN fps: " << std::fixed << std::setprecision(2) << fps;

printf("fps %f\n",fps);

cv::putText(src, fpsStr.str(), cv::Point(4, 22), cv::FONT_HERSHEY_TRIPLEX, 1,

cv::Scalar(0, 255, 0));

cv::imshow("output", src);

cv::imwrite("a.jpg",src);

char c = cv::waitKey(1);

}

return 0;

}测试结果

四、转rknn模型,(存在问题,待修复,是用百度云盘最新官方develop得分支)

from rknn.api import RKNN

ONNX_MODEL = '/home/ubuntu/ultralytics/yolov8n-seg.onnx'

RKNN_MODEL = '/home/ubuntu/ultralytics/yolov8n-seg.rknn'

if __name__ == '__main__':

# Create RKNN object

rknn = RKNN(verbose=True)

# pre-process config

print('--> config model')

rknn.config(mean_values=[[127.5, 127.5, 127.5]], std_values=[[127.5, 127.5, 127.5]],

target_platform='rk3588',

quantized_dtype='asymmetric_quantized-8', optimization_level=3)

print('done')

print('--> Loading model')

ret = rknn.load_onnx(model=ONNX_MODEL)

if ret != 0:

print('Load model failed!')

exit(ret)

print('done')

# Build model

print('--> Building model')

ret = rknn.build(do_quantization=True, dataset='datasets.txt') # ,pre_compile=True

if ret != 0:

print('Build pp_liteseg_stdc1_camvid_960x720_10k_model failed!')

exit(ret)

print('done')

# Export rknn model

print('--> Export RKNN model')

ret = rknn.export_rknn(RKNN_MODEL)

if ret != 0:

print('Export failed!')

exit(ret)

print('done')

rknn.release()转模型

/home/ubuntu/anaconda3/envs/rknnpy363_2/bin/python /home/ubuntu/ultralytics/rk3588.py

--> config model

done

--> Loading model

W __init__: rknn-toolkit2 version: 1.4.3b12+c2650923

W config: The quant_img_RGB2BGR of input 0 is set to True, which means that the RGB2BGR conversion will be done first

when the quantized image is loaded (only valid for jpg/jpeg/png/bmp, npy will ignore this flag).

Special note here, if quant_img_RGB2BGR is True and the quantized image is jpg/jpeg/png/bmp,

the mean_values / std_values in the config corresponds the order of BGR.

Loading : 100%|████████████████████████████████████████████████| 164/164 [00:00<00:00, 51843.97it/s]

I base_optimize ...

done

--> Building model

I base_optimize done.cmakelists.txt

cmake_minimum_required(VERSION 3.13)

project(3588_demo)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -lstdc++ ")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -lstdc++")

include_directories(${CMAKE_SOURCE_DIR})

include_directories(${CMAKE_SOURCE_DIR}/include)

message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

find_package(OpenCV REQUIRED)

#链接Opencv库

add_library(librknn_api SHARED IMPORTED)

set_target_properties(librknn_api PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/librknn_api.so)

add_executable(3588_demo main.cpp)

target_link_libraries(3588_demo ${OpenCV_LIBS} librknn_api)main.cpp

#include <stdio.h>

#include <stdint.h>

#include <stdlib.h>

#include <queue>

#include "rknn_api.h"

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <chrono>

#include <iostream>

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

struct OutputSeg {

int id; //结果类别id

float confidence; //结果置信度

cv::Rect box; //矩形框

cv::Mat boxMask; //矩形框内mask,节省内存空间和加快速度

};

cv::Mat preprocess_img(cv::Mat &img, int input_w, int input_h, std::vector<int> &padsize) {

int w, h, x, y;

float r_w = input_w / (img.cols * 1.0);

float r_h = input_h / (img.rows * 1.0);

if (r_h > r_w) {//����ڸ�

w = input_w;

h = r_w * img.rows;

x = 0;

y = (input_h - h) / 2;

} else {

w = r_h * img.cols;

h = input_h;

x = (input_w - w) / 2;

y = 0;

}

cv::Mat re(h, w, CV_8UC3);

cv::resize(img, re, re.size(), 0, 0, cv::INTER_LINEAR);

cv::Mat out(input_h, input_w, CV_8UC3, cv::Scalar(128, 128, 128));

re.copyTo(out(cv::Rect(x, y, re.cols, re.rows)));

padsize.push_back(h);

padsize.push_back(w);

padsize.push_back(y);

padsize.push_back(x);// int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

return out;

}

void DrawPred(Mat &img, std::vector<OutputSeg> result) {

//生成随机颜色

std::vector<Scalar> color;

srand(time(0));

for (int i = 0; i < 80; i++) {//CLASSES

int b = rand() % 256;

int g = rand() % 256;

int r = rand() % 256;

color.push_back(Scalar(b, g, r));

}

Mat mask = img.clone();

for (int i = 0; i < result.size(); i++) {

int left, top;

left = result[i].box.x;

top = result[i].box.y;

int color_num = i;

rectangle(img, result[i].box, color[result[i].id], 2, 8);

mask(result[i].box).setTo(color[result[i].id], result[i].boxMask);

char label[100];

sprintf(label, "%d:%.2f", result[i].id, result[i].confidence);

//std::string label = std::to_string(result[i].id) + ":" + std::to_string(result[i].confidence);

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 1, color[result[i].id], 2);

}

addWeighted(img, 0.5, mask, 0.8, 1, img); //将mask加在原图上面

}

void printRKNNTensor(rknn_tensor_attr *attr) {

printf("index=%d name=%s n_dims=%d dims=[%d %d %d %d] n_elems=%d size=%d "

"fmt=%d type=%d qnt_type=%d fl=%d zp=%d scale=%f\n",

attr->index, attr->name, attr->n_dims, attr->dims[3], attr->dims[2],

attr->dims[1], attr->dims[0], attr->n_elems, attr->size, 0, attr->type,

attr->qnt_type, attr->fl, attr->zp, attr->scale);

}

int main(int argc, char **argv) {

const char *model_path = "../model/yolov8n-seg.rknn";

const char *post_process_type = "fp";//fp

const char *imagepath = "../bus.jpg";

cv::Mat src = cv::imread(imagepath);

int img_width = src.cols;

int img_height = src.rows;

std::cout << "宽高:" << img_width << " " << img_height << std::endl;

// Subtract mean from image

Mat pr_img0, pr_img;

const int INPUT_H = 640;

const int INPUT_W = 640;

std::vector<int> padsize;

pr_img = preprocess_img(src, INPUT_H, INPUT_W, padsize); // Resize

int newh = padsize[0], neww = padsize[1], padh = padsize[2], padw = padsize[3];

float ratio_h = (float) src.rows / newh;

float ratio_w = (float) src.cols / neww;

const int _segWidth = 160;

const int _segHeight = 160;

const int _segChannels = 32;

const int CLASSES = 80;

const int Num_box = 8400;

const float CONF_THRESHOLD = 0.1;

const float NMS_THRESHOLD = 0.5;

const float MASK_THRESHOLD = 0.5;

const int OUTPUT_SIZE = Num_box * (CLASSES + 4 + _segChannels);//output0

const int OUTPUT_SIZE1 = _segChannels * _segWidth * _segHeight;//output1

float prob[OUTPUT_SIZE];

float prob1[OUTPUT_SIZE1];

// Load model

FILE *fp = fopen(model_path, "rb");

if (fp == NULL) {

printf("fopen %s fail!\n", model_path);

return -1;

}

fseek(fp, 0, SEEK_END);

int model_len = ftell(fp);

void *model = malloc(model_len);

fseek(fp, 0, SEEK_SET);

if (model_len != fread(model, 1, model_len, fp)) {

printf("fread %s fail!\n", model_path);

free(model);

return -1;

}

rknn_context ctx = 0;

int ret = rknn_init(&ctx, model, model_len, 0, 0);

if (ret < 0) {

printf("rknn_init fail! ret=%d\n", ret);

return -1;

}

/* Query sdk version */

rknn_sdk_version version;

ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version,

sizeof(rknn_sdk_version));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printf("sdk version: %s driver version: %s\n", version.api_version,

version.drv_version);

/* Get input,output attr */

rknn_input_output_num io_num;

ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printf("model input num: %d, output num: %d\n", io_num.n_input,

io_num.n_output);

rknn_tensor_attr input_attrs[io_num.n_input];

memset(input_attrs, 0, sizeof(input_attrs));

for (int i = 0; i < io_num.n_input; i++) {

input_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]),

sizeof(rknn_tensor_attr));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printRKNNTensor(&(input_attrs[i]));

}

rknn_tensor_attr output_attrs[io_num.n_output];

memset(output_attrs, 0, sizeof(output_attrs));

for (int i = 0; i < io_num.n_output; i++) {

output_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]),

sizeof(rknn_tensor_attr));

printRKNNTensor(&(output_attrs[i]));

}

int input_channel = 3;

int input_width = 0;

int input_height = 0;

if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) {

printf("model is NCHW input fmt\n");

input_width = input_attrs[0].dims[0];

input_height = input_attrs[0].dims[1];

printf("input_width=%d input_height=%d\n", input_width, input_height);

} else {

printf("model is NHWC input fmt\n");

input_width = input_attrs[0].dims[1];

input_height = input_attrs[0].dims[2];

printf("input_width=%d input_height=%d\n", input_width, input_height);

}

printf("model input height=%d, width=%d, channel=%d\n", input_height, input_width,

input_channel);

/* Init input tensor */

rknn_input inputs[1];

memset(inputs, 0, sizeof(inputs));

inputs[0].index = 0;

inputs[0].buf = pr_img.data;

inputs[0].type = RKNN_TENSOR_UINT8;

inputs[0].size = input_width * input_height * input_channel;

inputs[0].fmt = RKNN_TENSOR_NHWC;

inputs[0].pass_through = 0;

/* Init output tensor */

rknn_output outputs[io_num.n_output];

memset(outputs, 0, sizeof(outputs));

for (int i = 0; i < io_num.n_output; i++) {

if (strcmp(post_process_type, "fp") == 0) {

outputs[i].want_float = 1;

} else if (strcmp(post_process_type, "u8") == 0) {

outputs[i].want_float = 0;

}

}

printf("img.cols: %d, img.rows: %d\n", pr_img.cols, pr_img.rows);

auto t1 = std::chrono::steady_clock::now();

rknn_inputs_set(ctx, io_num.n_input, inputs);

ret = rknn_run(ctx, NULL);

if (ret < 0) {

printf("ctx error ret=%d\n", ret);

return -1;

}

ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);

if (ret < 0) {

printf("outputs error ret=%d\n", ret);

return -1;

}

memcpy(prob, (float *)outputs[0].buf,OUTPUT_SIZE);

memcpy(prob1, (float *)outputs[1].buf,OUTPUT_SIZE1);

//毫秒级

auto t2 = std::chrono::steady_clock::now();

double dr_ms = std::chrono::duration<double, std::milli>(t2 - t1).count();

printf("%lf ms\n", dr_ms);

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<cv::Rect> boxes;//每个id矩形框

std::vector<cv::Mat> picked_proposals; //后续计算mask

// 处理box

int net_length = CLASSES + 4 + _segChannels;

cv::Mat out1 = cv::Mat(net_length, Num_box, CV_32F, prob);

auto start = std::chrono::system_clock::now();

for (int i = 0; i < Num_box; i++) {

//输出是1*net_length*Num_box;所以每个box的属性是每隔Num_box取一个值,共net_length个值

cv::Mat scores = out1(Rect(i, 4, 1, CLASSES)).clone();

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float) max_class_socre;

if (max_class_socre >= CONF_THRESHOLD) {

cv::Mat temp_proto = out1(Rect(i, 4 + CLASSES, 1, _segChannels)).clone();

picked_proposals.push_back(temp_proto.t());

float x = (out1.at<float>(0, i) - padw) * ratio_w; //cx

float y = (out1.at<float>(1, i) - padh) * ratio_h; //cy

float w = out1.at<float>(2, i) * ratio_w; //w

float h = out1.at<float>(3, i) * ratio_h; //h

int left = MAX((x - 0.5 * w), 0);

int top = MAX((y - 0.5 * h), 0);

int width = (int) w;

int height = (int) h;

if (width <= 0 || height <= 0) { continue; }

classIds.push_back(classIdPoint.y);

confidences.push_back(max_class_socre);

boxes.push_back(Rect(left, top, width, height));

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

std::vector<int> nms_result;

cv::dnn::NMSBoxes(boxes, confidences, CONF_THRESHOLD, NMS_THRESHOLD, nms_result);

std::vector<cv::Mat> temp_mask_proposals;

std::vector<OutputSeg> output;

Rect holeImgRect(0, 0, src.cols, src.rows);

for (int i = 0; i < nms_result.size(); ++i) {

int idx = nms_result[i];

OutputSeg result;

result.id = classIds[idx];

result.confidence = confidences[idx];

//printf("ORI %d %d %d %d\n", boxes[idx].x, boxes[idx].y, boxes[idx].width, boxes[idx].height);

//printf("W %d %d %d %d\n", holeImgRect.x, holeImgRect.y, holeImgRect.width, holeImgRect.height);

result.box = boxes[idx] & holeImgRect;

//printf("%d %d %d %d\n", result.box.x, result.box.y, result.box.width, result.box.height);

output.push_back(result);

temp_mask_proposals.push_back(picked_proposals[idx]);

}

// 处理mask

Mat maskProposals;

for (int i = 0; i < temp_mask_proposals.size(); ++i)

maskProposals.push_back(temp_mask_proposals[i]);

if (!maskProposals.empty()) {

Mat protos = Mat(_segChannels, _segWidth * _segHeight, CV_32F, prob1);

Mat matmulRes = (maskProposals * protos).t();//n*32 32*25600 A*B是以数学运算中矩阵相乘的方式实现的,要求A的列数等于B的行数时

Mat masks = matmulRes.reshape(output.size(), {_segWidth, _segHeight});//n*160*160

std::vector<Mat> maskChannels;

cv::split(masks, maskChannels);

Rect roi(int((float)padw / INPUT_W * _segWidth), int((float) padh / INPUT_H * _segHeight), int(

_segWidth - padw / 2), int(_segHeight - padh / 2));

for (int i = 0; i < output.size(); ++i) {

Mat dest, mask;

cv::exp(-maskChannels[i], dest);//sigmoid

dest = 1.0 / (1.0 + dest);//160*160

dest = dest(roi);

resize(dest, mask, cv::Size(src.cols, src.rows), INTER_NEAREST);

//crop----截取box中的mask作为该box对应的mask

Rect temp_rect = output[i].box;

mask = mask(temp_rect) > MASK_THRESHOLD;

output[i].boxMask = mask;

}

auto end = std::chrono::system_clock::now();

std::cout << "后处理时间:" << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms"

<< std::endl;

DrawPred(src, output);

}

//cv::imshow("output", src);

cv::imwrite("a.jpg", src);

// char c = cv::waitKey(0);

ret = rknn_outputs_release(ctx, io_num.n_output, outputs);

if (ret < 0) {

printf("rknn_query fail! ret=%d\n", ret);

goto Error;

}

Error:

if (ctx > 0)

rknn_destroy(ctx);

if (model)

free(model);

if (fp)

fclose(fp);

return 0;

}测试结果,结果有问题,待修复

宽高:810 1080

sdk version: 1.4.3b10 (9c25c9f90@2023-04-14T03:43:27) driver version: 0.8.2

model input num: 1, output num: 2

index=0 name=images n_dims=4 dims=[3 640 640 1] n_elems=1228800 size=1228800 fmt=0 type=2 qnt_type=2 fl=0 zp=0 scale=0.003922

index=0 name=output0 n_dims=4 dims=[1 8400 116 1] n_elems=974400 size=974400 fmt=0 type=2 qnt_type=2 fl=0 zp=-127 scale=2.509845

index=1 name=output1 n_dims=4 dims=[160 160 32 1] n_elems=819200 size=819200 fmt=0 type=2 qnt_type=2 fl=0 zp=-113 scale=0.018982

model is NHWC input fmt

input_width=640 input_height=640

model input height=640, width=640, channel=3

img.cols: 640, img.rows: 640

53.870083 ms

Process finished with exit code 0参考:

https://github.com/fish-kong/Yolov8-instance-seg-tensorrt