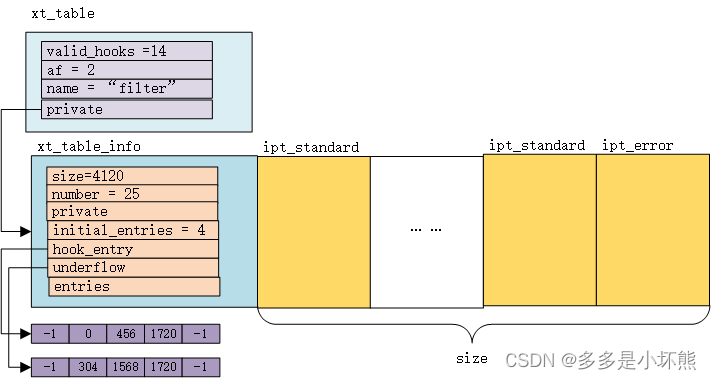

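This post continues the analysis of the filter table. Unlike the earlier posts, this time the approach is to print the data held in the kernel and compare it against the structure diagram. These are the data structures involved: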

struct xt_table {

struct list_head list;

/* What hooks you will enter on */

unsigned int valid_hooks;

/* Man behind the curtain... */

struct xt_table_info *private;

/* Set this to THIS_MODULE if you are a module, otherwise NULL */

struct module *me;

u_int8_t af; /* address/protocol family */

int priority; /* hook order */

/* called when table is needed in the given netns */

int (*table_init)(struct net *net);

/* A unique name... */

const char name[XT_TABLE_MAXNAMELEN];

};

struct xt_table_info {

/* Size per table */

unsigned int size;

/* Number of entries: FIXME. --RR */

unsigned int number;

/* Initial number of entries. Needed for module usage count */

unsigned int initial_entries;

/* Entry points and underflows */

unsigned int hook_entry[NF_INET_NUMHOOKS]; // hook_entry[NF_INET_LOCAL_IN]

unsigned int underflow[NF_INET_NUMHOOKS];

/*

* Number of user chains. Since tables cannot have loops, at most

* @stacksize jumps (number of user chains) can possibly be made.

*/

unsigned int stacksize;

void ***jumpstack;

unsigned char entries[0] __aligned(8);

};
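The trailing `entries[0]` member means the rule data lives in the same allocation, directly behind the xt_table_info header. Here is a minimal userspace sketch of that layout, assuming a cut-down stand-in struct. `mock_table_info` and `mock_alloc` are hypothetical names used only for illustration, not kernel APIs:

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical, cut-down stand-in for struct xt_table_info. */
struct mock_table_info {
    unsigned int size;        /* bytes of rule data behind the header */
    unsigned int number;      /* number of entries in that data */
    unsigned char entries[];  /* flexible array member: rule data starts here */
};

/* Allocate header and rule data as one zeroed chunk; returns NULL on failure. */
static struct mock_table_info *mock_alloc(unsigned int size)
{
    struct mock_table_info *info = calloc(1, sizeof(*info) + size);

    if (info)
        info->size = size;
    return info;
}
```

With this layout, `info->entries` is simply the address just past the fixed fields, so no second pointer is needed to reach the rules.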

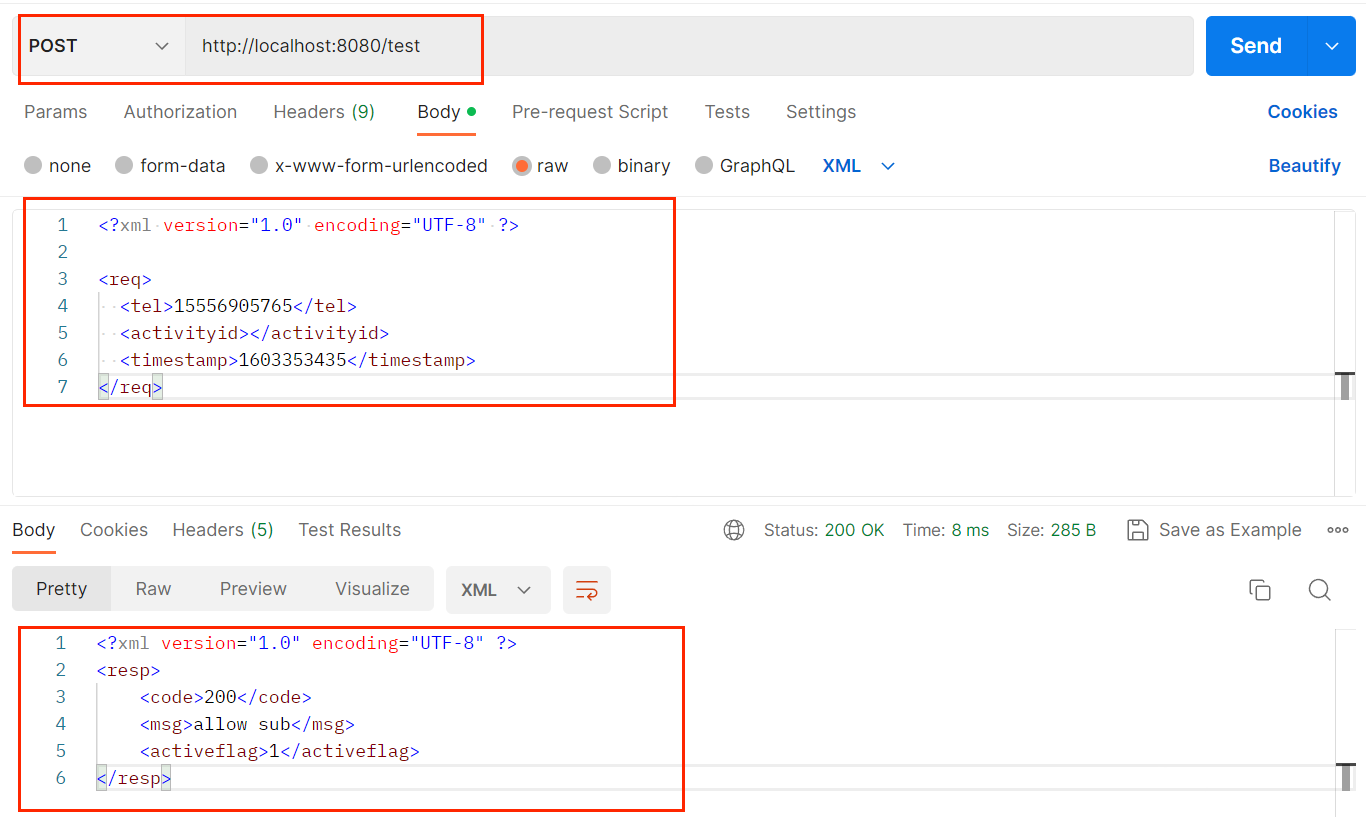

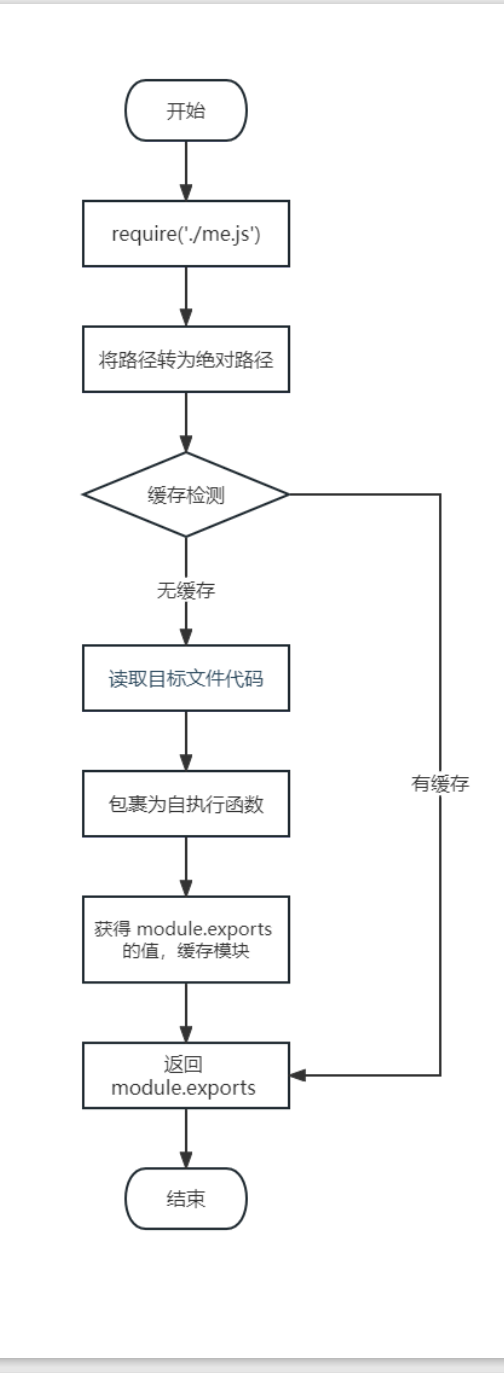

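As before, the kernel data is printed using the method from 《netfilter filter表》 (the earlier post on the netfilter filter table). This time the hello_open code looks like this: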

static int hello_open(struct inode* inode, struct file*filep)

{

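struct task_struct *tsk = current;

struct net *net;

struct xt_table *xt_filter;

struct xt_table_info *filter_info;

const void* table_base;

int i = 0;

int local_in_hook_entry;

struct ipt_entry* ipt_entry;

struct nsproxy *nsprx = tsk->nsproxy; // namespaces of the current task

// print after the declarations to avoid a declaration-after-statement warning

printk("hello_open\n");

net = nsprx->net_ns;

xt_filter = net->ipv4.iptable_filter;

// the table may not have been set up in this netns yet

if (NULL == xt_filter)

{

printk("xt_filter is null\n");

return 0;

}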

// Print the xt_table fields

printk("xt_table: af - %d\n", xt_filter->af);

printk("xt_table: name - %s\n", xt_filter->name);

printk("xt_table: valid_hooks - %d\n", xt_filter->valid_hooks);

printk("xt_table: priority - %d\n", xt_filter->priority);

filter_info = xt_filter->private;

if (NULL == filter_info)

{

printk("filter_info is null\n");

return 0;

}

printk("filter_info: size - %d\n", filter_info->size);

printk("filter_info: number - %d\n", filter_info->number); // 4?

printk("filter_info: initial_entries - %d\n", filter_info->initial_entries);

printk("filter_info: stacksize - %d\n", filter_info->stacksize);

table_base = filter_info->entries;

//local_in_hook_entry = filter_info->hook_entry[NF_INET_LOCAL_IN];

// Print the hook_entry and underflow arrays

for (i = 0; i < NF_INET_NUMHOOKS; ++i)

{

printk("filter_info: hook_entry[%d] - %d\n", i, filter_info->hook_entry[i]);

}

for (i = 0; i < NF_INET_NUMHOOKS; ++i)

{

printk("filter_info: underflow[%d] - %d\n", i, filter_info->underflow[i]);

}

return 0;

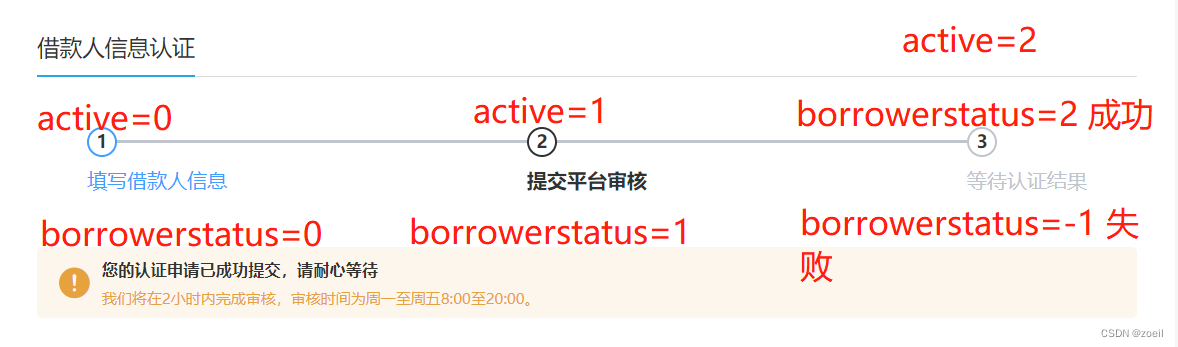

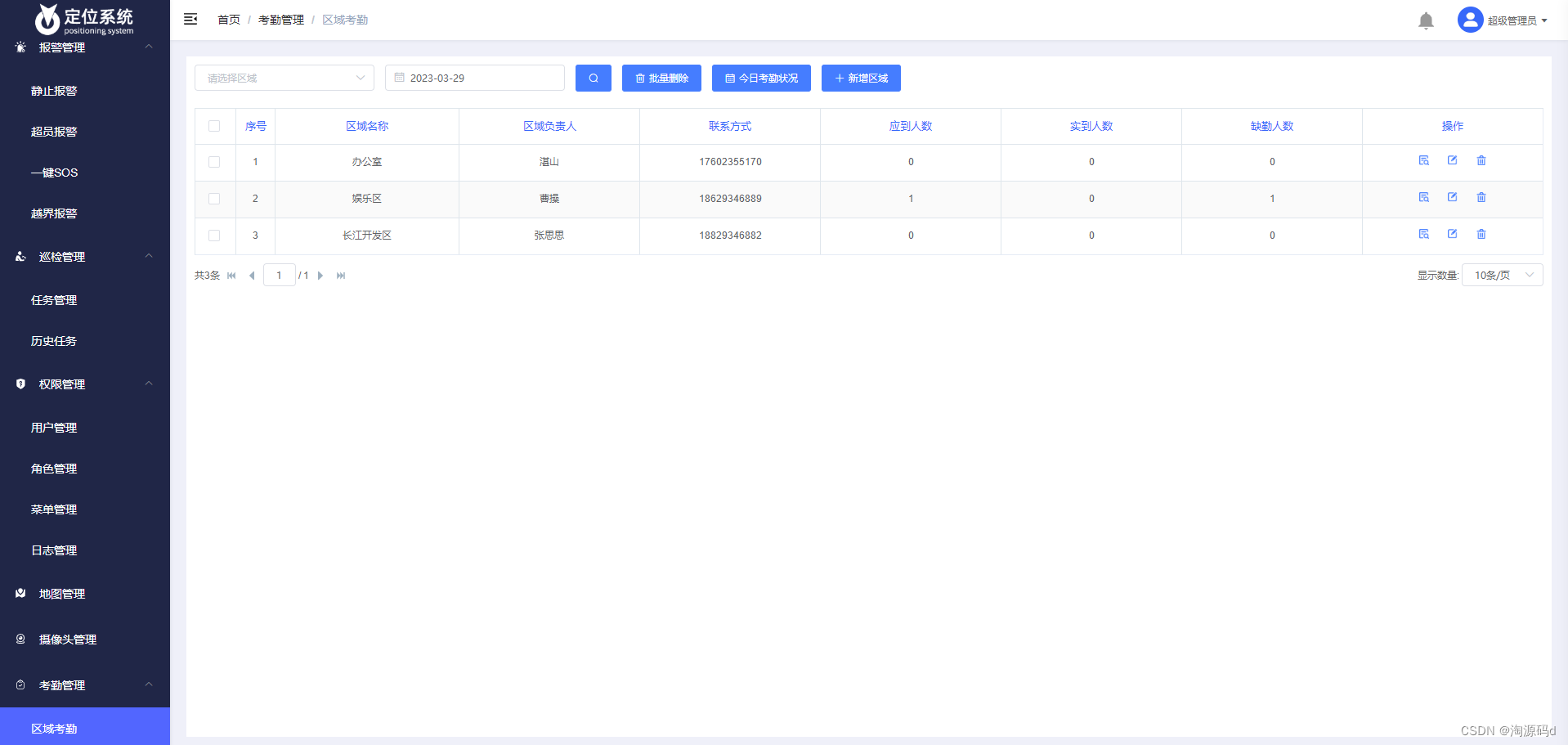

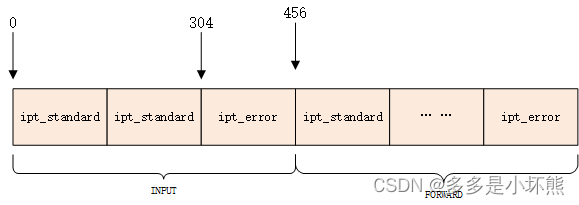

}根据打印的内核日志,整理了下面的图:

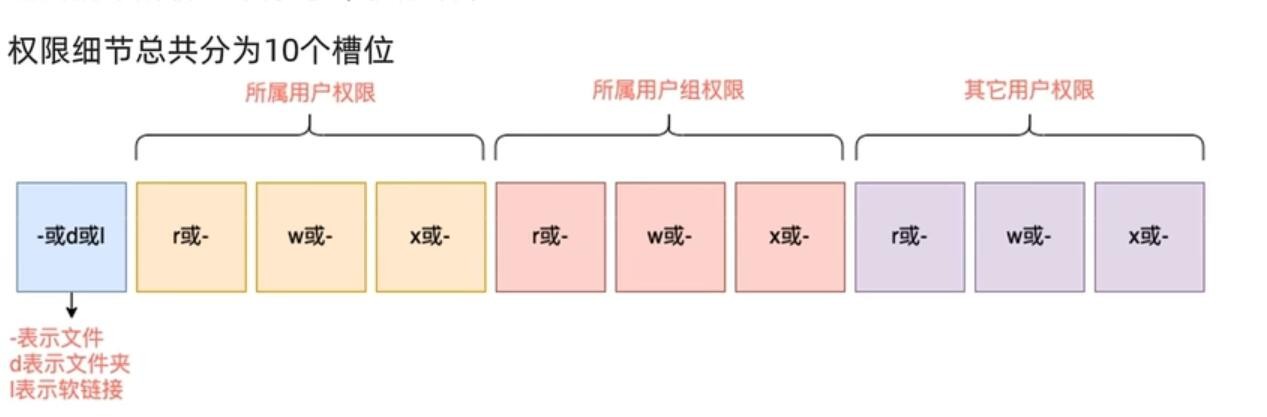

The two enum types involved:

enum {

NFPROTO_UNSPEC = 0,

NFPROTO_INET = 1,

NFPROTO_IPV4 = 2,

NFPROTO_ARP = 3,

NFPROTO_NETDEV = 5,

NFPROTO_BRIDGE = 7,

NFPROTO_IPV6 = 10,

NFPROTO_DECNET = 12,

NFPROTO_NUMPROTO,

};

enum nf_inet_hooks {

NF_INET_PRE_ROUTING,

NF_INET_LOCAL_IN,

NF_INET_FORWARD,

NF_INET_LOCAL_OUT,

NF_INET_POST_ROUTING,

NF_INET_NUMHOOKS

};

Now let's go through the log. Lines that start with "#" or are highlighted in blue are annotations.

[ 3885.915747] hello_open

# 2 corresponds to NFPROTO_IPV4; see the definition of NFPROTO_IPV4 above

[ 3885.915749] xt_table: af - 2

[ 3885.915749] xt_table: name - filter

# 14 is actually:

#define FILTER_VALID_HOOKS ((1 << NF_INET_LOCAL_IN) | \

(1 << NF_INET_FORWARD) | \

(1 << NF_INET_LOCAL_OUT))

[ 3885.915750] xt_table: valid_hooks - 14

[ 3885.915751] xt_table: priority - 0

# The xt_table_info fields follow

# Immediately after xt_table_info comes a 4120-byte memory region that holds the ipt_standard and ipt_error objects

[ 3885.915751] filter_info: size - 4120

# 25 ipt_standard/ipt_error objects in total

[ 3885.915752] filter_info: number - 25

[ 3885.915752] filter_info: initial_entries - 4

[ 3885.915753] filter_info: stacksize - 5

[ 3885.915753] filter_info: hook_entry[0] - -1

[ 3885.915754] filter_info: hook_entry[1] - 0

[ 3885.915754] filter_info: hook_entry[2] - 456

[ 3885.915755] filter_info: hook_entry[3] - 1720

[ 3885.915755] filter_info: hook_entry[4] - -1

[ 3885.915756] filter_info: underflow[0] - -1

[ 3885.915756] filter_info: underflow[1] - 304

[ 3885.915757] filter_info: underflow[2] - 1568

[ 3885.915757] filter_info: underflow[3] - 1720

[ 3885.915758] filter_info: underflow[4] - -1

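The hook_entry and underflow fields of xt_table_info deserve a separate explanation. The kernel network stack has five hook points. hook_entry[0] and underflow[0] give the start and end positions, within the filter table's rule data, of the rules for the first hook point, and so on for the others.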

filter_info: hook_entry[0] - -1

underflow[0] - -1

This means the filter table does not process packets at the first hook point. Which hook point is the first? It is NF_INET_PRE_ROUTING; see the definition of enum nf_inet_hooks.

hook_entry[1] - 0

underflow[1] - 304

Likewise, these give the start and end positions of the rules for the second hook point (NF_INET_LOCAL_IN) in the filter table.

Refer back to the diagram above to reinforce this.
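The hooks that print -1 are exactly the ones missing from the valid_hooks value of 14 seen in the log: only bits 1, 2 and 3 are set. A small userspace sketch that decodes the mask follows. `hook_is_valid` and `print_valid_hooks` are hypothetical helpers written for this post, not kernel functions:

```c
#include <stdio.h>

/* Same order as enum nf_inet_hooks shown earlier. */
enum nf_inet_hooks {
    NF_INET_PRE_ROUTING,
    NF_INET_LOCAL_IN,
    NF_INET_FORWARD,
    NF_INET_LOCAL_OUT,
    NF_INET_POST_ROUTING,
    NF_INET_NUMHOOKS
};

/* Hypothetical helper: 1 if bit `hook` is set in the valid_hooks mask, else 0. */
static int hook_is_valid(unsigned int valid_hooks, int hook)
{
    return (int)((valid_hooks >> hook) & 1u);
}

/* Print one line per hook saying whether the table is attached to it. */
static void print_valid_hooks(unsigned int valid_hooks)
{
    static const char *const names[NF_INET_NUMHOOKS] = {
        "PRE_ROUTING", "LOCAL_IN", "FORWARD", "LOCAL_OUT", "POST_ROUTING"
    };
    int i;

    for (i = 0; i < NF_INET_NUMHOOKS; ++i)
        printf("%-12s %s\n", names[i],
               hook_is_valid(valid_hooks, i) ? "used" : "unused (-1)");
}
```

Calling `print_valid_hooks(14)` marks LOCAL_IN, FORWARD and LOCAL_OUT as used, which matches the three non-negative hook_entry slots in the log.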

Now look at the INPUT chain of the filter table on this machine:

Chain INPUT (policy ACCEPT)

target prot opt source destination

DROP tcp -- 1.2.3.5 0.0.0.0/0

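ACCEPT all -- 1.2.3.4 0.0.0.0/0

The INPUT chain has two rules. Each rule corresponds to one ipt_standard object, and each ipt_standard object is 152 bytes. The INPUT chain's rules therefore start at offset 0 and end at offset 304.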

underflow[1] - 304

hook_entry[2] - 456

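Between 304 and 456 there are 152 bytes of memory. Note that 152 is the size of an ipt_standard, and in the kernel underflow[1] is the offset of the chain's built-in policy entry, so this slot most likely holds the INPUT policy rule (the "policy ACCEPT" above) rather than an ipt_error object; ipt_error entries normally mark the heads of user-defined chains and the end of the table. Compare with the diagram above.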
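The offsets above follow from simple arithmetic over back-to-back 152-byte entries. A minimal sketch, assuming every entry in the run is a plain ipt_standard of the size reported in this post (the size depends on kernel build and architecture, and rules with match extensions are larger); `entry_offset` is a hypothetical helper:

```c
/* Size of one ipt_standard entry as observed in this post, in bytes.
 * Assumption: it depends on kernel version and architecture. */
#define STD_ENTRY_SIZE 152u

/* Offset of the idx-th entry in a run of fixed-size entries that
 * starts at byte offset `start` within the rule data. */
static unsigned int entry_offset(unsigned int start, unsigned int idx)
{
    return start + idx * STD_ENTRY_SIZE;
}
```

For the INPUT chain, indices 0 and 1 give the two rules at 0 and 152, index 2 gives underflow[1] at 304, and index 3 gives hook_entry[2] at 456.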

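hook_entry and underflow store byte offsets of rule entries relative to the entries field of xt_table_info.

(void*)xt_table_info.entries + xt_table_info.hook_entry[1] gives the location of the first rule (ipt_standard) of the INPUT chain.

(void*)xt_table_info.entries + xt_table_info.hook_entry[2] gives the location of the first rule (ipt_standard) of the FORWARD chain.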
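The same pointer arithmetic can be written as a small helper. This is a userspace sketch, not the kernel's own code: `entry_at` and `hook_in_use` are hypothetical names, and the cast to `unsigned char *` is used because arithmetic on `void *` is a GCC extension rather than standard C:

```c
#include <stddef.h>

/* Resolve a hook_entry/underflow style byte offset against the start of
 * the rule data; `base` plays the role of xt_table_info.entries. */
static const void *entry_at(const void *base, unsigned int offset)
{
    return (const unsigned char *)base + offset;
}

/* 0xFFFFFFFF, printed as -1 with %d, marks a hook the table does not use,
 * so it must not be treated as an offset. */
static int hook_in_use(unsigned int offset)
{
    return offset != 0xFFFFFFFFu;
}
```

Checking `hook_in_use()` before calling `entry_at()` avoids computing an address from the -1 placeholder in slots 0 and 4.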

The next post will analyze ipt_standard.