ChatGPT Teaches You to Build a Multi-Task Model

You:

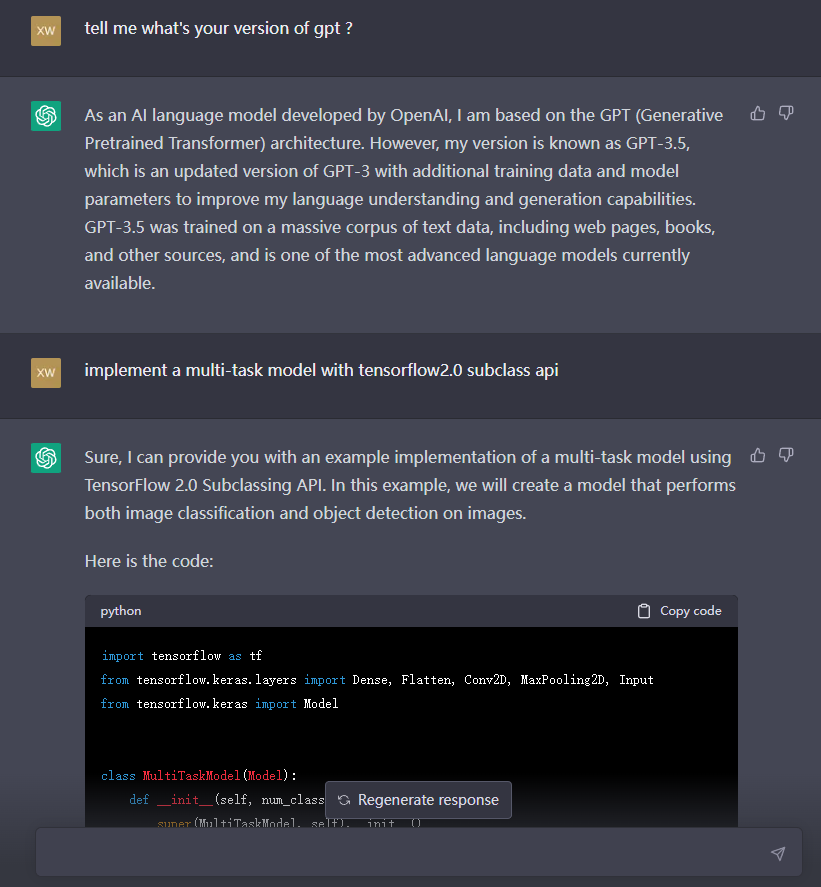

tell me what’s your version of gpt ?

ChatGPT:

As an AI language model developed by OpenAI, I am based on the GPT (Generative Pretrained Transformer) architecture. However, my version is known as GPT-3.5, which is an updated version of GPT-3 with additional training data and model parameters to improve my language understanding and generation capabilities. GPT-3.5 was trained on a massive corpus of text data, including web pages, books, and other sources, and is one of the most advanced language models currently available.

You:

implement a multi-task model with tensorflow2.0 subclass api

ChatGPT:

Sure, I can provide you with an example implementation of a multi-task model using TensorFlow 2.0 Subclassing API. In this example, we will create a model that performs both image classification and object detection on images.

Here is the code:

import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPooling2D, Input
from tensorflow.keras import Model

class MultiTaskModel(Model):
    def __init__(self, num_classes, num_boxes):
        super(MultiTaskModel, self).__init__()
        self.num_classes = num_classes
        self.num_boxes = num_boxes

        # Shared layers
        self.conv1 = Conv2D(32, 3, activation='relu')
        self.maxpool1 = MaxPooling2D()
        self.conv2 = Conv2D(64, 3, activation='relu')
        self.maxpool2 = MaxPooling2D()
        self.conv3 = Conv2D(128, 3, activation='relu')
        self.maxpool3 = MaxPooling2D()

        # Classification head
        self.flat1 = Flatten()
        self.dense1 = Dense(64, activation='relu')
        self.dense2 = Dense(self.num_classes, activation='softmax')

        # Detection head
        self.flat2 = Flatten()
        self.dense3 = Dense(256, activation='relu')
        self.dense4 = Dense(self.num_boxes * 4, activation='linear')

    def call(self, inputs):
        # Shared layers
        x = self.conv1(inputs)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)

        # Classification head
        y1 = self.flat1(x)
        y1 = self.dense1(y1)
        y1 = self.dense2(y1)

        # Detection head
        y2 = self.flat2(x)
        y2 = self.dense3(y2)
        y2 = self.dense4(y2)

        return y1, y2

In this example, we define a MultiTaskModel class that extends the tf.keras.Model class. The MultiTaskModel class takes two arguments, num_classes and num_boxes, which represent the number of classes for image classification and the number of bounding boxes for object detection, respectively.

We then define the shared layers of the model, which include three convolutional layers followed by max pooling layers. We define two separate heads for the model: one for image classification and another for object detection.

The classification head consists of a flatten layer, followed by two dense layers with relu and softmax activations, respectively. The detection head consists of a flatten layer, followed by two dense layers with relu and linear activations, respectively. The output of the detection head is a tensor of shape (batch_size, num_boxes * 4), where 4 represents the number of parameters needed to define a bounding box (x-coordinate, y-coordinate, width, and height).

In the call method of the model, we pass the inputs through the shared layers and then through the two separate heads to obtain the outputs for classification and detection. Finally, we return the two outputs as a tuple.

To train this model, you would need to define appropriate loss functions for each task and combine them into a joint loss. You would also need to provide training data that includes both labeled images and bounding box annotations.
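As a rough illustration of the joint loss mentioned above, the sketch below combines a cross-entropy term and a mean-squared-error term with fixed weights for a single example. It uses plain Python rather than TensorFlow, and the function names and the weights `class_weight` and `box_weight` are illustrative assumptions, not part of the model code.

```python
import math

def cross_entropy(probs, one_hot, eps=1e-7):
    """Cross-entropy between predicted class probabilities and a one-hot target."""
    return -sum(t * math.log(max(p, eps)) for p, t in zip(probs, one_hot))

def mean_squared_error(pred, target):
    """Mean squared error over the flattened box parameters."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def joint_loss(class_probs, class_target, box_pred, box_target,
               class_weight=1.0, box_weight=1.0):
    """Weighted sum of the classification loss and the box regression loss."""
    return (class_weight * cross_entropy(class_probs, class_target)
            + box_weight * mean_squared_error(box_pred, box_target))

# One example with two classes and a single (x, y, width, height) box
loss = joint_loss([0.5, 0.5], [1, 0],
                  [0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0],
                  box_weight=0.5)
print(loss)
```

In Keras, a comparable fixed weighting can be requested through the `loss_weights` argument of `compile`, so a hand-written joint loss like this is usually only needed for custom training loops.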

You:

how can I train the model you built above? and how to generate sample for this model?

ChatGPT:

To train the multi-task model that I provided earlier, you can follow these steps:

- Prepare the training data: You need a dataset of labeled images with bounding box annotations. You can use popular datasets such as COCO, Pascal VOC, or OpenImages for this purpose.

- Define the loss functions: For the classification task, you can use cross-entropy loss, and for the detection task, you can use mean squared error loss or smooth L1 loss. You can combine these losses into a joint loss using a fixed weighting factor or a more elaborate loss-weighting scheme.

- Compile the model: You can compile the model using the compile method of the MultiTaskModel class. You need to specify the optimizer, the loss functions, and any metrics you want to track during training.

- Train the model: You can train the model using the fit method of the MultiTaskModel class. You need to pass in the training data, the number of epochs, and any other relevant parameters such as batch size and validation data.

Here is some sample code that demonstrates these steps:

# Load the data
train_data = ...
val_data = ...

# Define the loss functions
class_loss_fn = tf.keras.losses.CategoricalCrossentropy()
detect_loss_fn = tf.keras.losses.MeanSquaredError()

# Define the optimizer
optimizer = tf.keras.optimizers.Adam()

# Compile the model
model = MultiTaskModel(num_classes=10, num_boxes=5)
model.compile(optimizer=optimizer, loss=[class_loss_fn, detect_loss_fn], metrics=['accuracy'])

# Train the model
model.fit(train_data, epochs=10, validation_data=val_data)

# Generate a sample output
input_image = ...
output_classes, output_boxes = model.predict(input_image)

To generate a sample output for the model, you can use the predict method of the MultiTaskModel class. The input to the predict method should be an image tensor of shape (batch_size, height, width, num_channels). The output of the predict method will be a tuple of two tensors: one for the classification probabilities and one for the bounding box parameters.

Here is some sample code that demonstrates how to generate a sample output:

# Generate a sample output

input_image = ...

output_classes, output_boxes = model.predict(input_image)

# Print the predicted classes and bounding boxes

print('Predicted classes:', output_classes)

print('Predicted boxes:', output_boxes)

Note that the output of the predict method will depend on the specific dataset and training data that you used to train the model, so the results may vary.
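The two outputs described above are usually post-processed before use. The following is a minimal sketch, assuming one image's class probabilities and its flat vector of `num_boxes * 4` box parameters have already been converted to Python lists; the helper name `decode_prediction` is hypothetical.

```python
def decode_prediction(class_probs, flat_boxes):
    """Turn one image's raw outputs into a class index and a list of boxes.

    class_probs: list of num_classes probabilities (softmax output).
    flat_boxes:  list of num_boxes * 4 values laid out as
                 (x, y, width, height) per box.
    """
    # Index of the most probable class
    best_class = max(range(len(class_probs)), key=class_probs.__getitem__)
    # Regroup the flat vector into one 4-tuple per box
    boxes = [tuple(flat_boxes[i:i + 4]) for i in range(0, len(flat_boxes), 4)]
    return best_class, boxes

best_class, boxes = decode_prediction(
    [0.1, 0.7, 0.2],
    [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0],
)
print(best_class, boxes)
```

With real predictions, each row of `output_classes` and `output_boxes` could be passed through such a helper after calling `.tolist()` on it.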

You:

ok, can you regenerate the model for a recommendation scenario with a clear example?

ChatGPT:

Sure, here is an example of how to build a multi-task model for a recommendation system using TensorFlow 2.0’s Subclassing API. This model will have two tasks: rating prediction and item embedding prediction.

Assuming we have a dataset of user-item interactions with ratings, where each user has interacted with multiple items, we can define the following inputs and outputs for our model:

Inputs:

- User ID (integer)

- Item ID (integer)

Outputs:

- Rating (float)

- Item Embedding (vector of floats)

We will use an embedding layer to learn the representations of the users and items, and a shared neural network to predict the rating and item embedding.

import tensorflow as tf

class RecommendationModel(tf.keras.Model):
    def __init__(self, num_users, num_items, embedding_size, hidden_units):
        super(RecommendationModel, self).__init__()
        self.user_embedding = tf.keras.layers.Embedding(num_users, embedding_size)
        self.item_embedding = tf.keras.layers.Embedding(num_items, embedding_size)
        self.shared_nn = tf.keras.Sequential([
            tf.keras.layers.Dense(hidden_units, activation='relu'),
            tf.keras.layers.Dense(hidden_units, activation='relu')
        ])
        self.rating_head = tf.keras.layers.Dense(1)
        self.embedding_head = tf.keras.layers.Dense(embedding_size)

    def call(self, inputs):
        user_ids, item_ids = inputs
        user_embeddings = self.user_embedding(user_ids)
        item_embeddings = self.item_embedding(item_ids)
        concatenated = tf.concat([user_embeddings, item_embeddings], axis=-1)
        shared = self.shared_nn(concatenated)
        rating = self.rating_head(shared)
        embedding = self.embedding_head(shared)
        return rating, embedding

In the RecommendationModel class, we define the __init__ method to create the layers of the model. We first create two embedding layers, one for the users and one for the items, with the specified embedding size. We then create a shared neural network with two hidden layers, each with the specified number of units and ReLU activation. Finally, we create two output heads: one for rating prediction with a single output neuron, and one for item embedding prediction with an output size of the embedding size.

In the call method, we define how to forward pass the inputs through the model. We first look up the embeddings of the users and items using the corresponding embedding layers. We then concatenate these embeddings along the last dimension, and pass the concatenated embeddings through the shared neural network. Finally, we pass the output of the shared network through the rating and embedding output heads, respectively.

To train the model, we need to define the loss functions for each task and the optimizer. Assuming we have a training dataset of user-item interactions with corresponding ratings and item embeddings, we can define the following:

# Load the data
train_data = ...
val_data = ...

# Define the loss functions
mse_loss_fn = tf.keras.losses.MeanSquaredError()
cosine_loss_fn = tf.keras.losses.CosineSimilarity()

# Define the optimizer
optimizer = tf.keras.optimizers.Adam()

# Instantiate the model
model = RecommendationModel(num_users=1000, num_items=10000, embedding_size=32, hidden_units=64)

# Compile the model
model.compile(optimizer=optimizer, loss=[mse_loss_fn, cosine_loss_fn], metrics=['mae', 'cosine_similarity'])

# Train the model
model.fit(train_data, epochs=10, validation_data=val_data)

In this example, we use mean squared error loss for the rating prediction task and cosine similarity loss for the item embedding prediction task. We compile the model with the specified optimizer,

You:

convert the above two tasks into ctr prediction and cvr prediction tasks? build the training and prediction pipeline with python again.

ChatGPT:

Sure, here’s an example of how to modify the recommendation system multi-task model to predict click-through rate (CTR) and conversion rate (CVR) instead.

Assume we have a dataset of user-item interactions with the following features: user ID, item ID, timestamp, a binary label indicating whether the user clicked on the item, and a second binary label indicating whether the user converted after clicking on the item.

We can define the following inputs and outputs for our model:

Inputs:

- User ID (integer)

- Item ID (integer)

- Timestamp (float)

Outputs:

- Click Probability (float)

- Conversion Probability (float)

We will use an embedding layer to learn the representations of the users and items, and a shared neural network with two output heads to predict the click and conversion probabilities.

import tensorflow as tf

class CTRCVRModel(tf.keras.Model):
    def __init__(self, num_users, num_items, embedding_size, hidden_units):
        super(CTRCVRModel, self).__init__()
        self.user_embedding = tf.keras.layers.Embedding(num_users, embedding_size)
        self.item_embedding = tf.keras.layers.Embedding(num_items, embedding_size)
        self.timestamp_embedding = tf.keras.layers.Dense(embedding_size, activation='relu')
        self.shared_nn = tf.keras.Sequential([
            tf.keras.layers.Dense(hidden_units, activation='relu'),
            tf.keras.layers.Dense(hidden_units, activation='relu')
        ])
        self.click_head = tf.keras.layers.Dense(1, activation='sigmoid')
        self.conversion_head = tf.keras.layers.Dense(1, activation='sigmoid')

    def call(self, inputs):
        # user_ids, item_ids and timestamps are each expected to have shape (batch,)
        user_ids, item_ids, timestamps = inputs
        user_embeddings = self.user_embedding(user_ids)
        item_embeddings = self.item_embedding(item_ids)
        # Dense needs a 2-D float input, so reshape the timestamps to (batch, 1)
        timestamps = tf.reshape(tf.cast(timestamps, tf.float32), (-1, 1))
        timestamp_embeddings = self.timestamp_embedding(timestamps)
        concatenated = tf.concat([user_embeddings, item_embeddings, timestamp_embeddings], axis=-1)
        shared = self.shared_nn(concatenated)
        click_probability = self.click_head(shared)
        conversion_probability = self.conversion_head(shared)
        return click_probability, conversion_probability

In the CTRCVRModel class, we define the __init__ method to create the layers of the model. We first create two embedding layers, one for the users and one for the items, with the specified embedding size. We also create a dense layer for the timestamp feature. We then create a shared neural network with two hidden layers, each with the specified number of units and ReLU activation. Finally, we create two output heads: one for click probability prediction and one for conversion probability prediction, each with a single output neuron and sigmoid activation.

In the call method, we define how to forward pass the inputs through the model. We first look up the embeddings of the users and items using the corresponding embedding layers. We also pass the timestamps through the timestamp embedding layer. We then concatenate these embeddings and the timestamp embeddings along the last dimension, and pass the concatenated embeddings through the shared neural network. Finally, we pass the output of the shared network through the click and conversion output heads, respectively.
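One practical caveat, added here as an assumption rather than something stated in the answer: raw timestamps (for example Unix seconds) are very large numbers, and feeding them unscaled into a dense layer may make training unstable. A common remedy is to standardize them using statistics from the training set. The helper names below are hypothetical, and the sketch uses plain Python lists.

```python
def fit_standardizer(values):
    """Compute the mean and standard deviation of the training timestamps."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = variance ** 0.5 or 1.0  # fall back to 1.0 if all values are identical
    return mean, std

def standardize(values, mean, std):
    """Rescale timestamps to roughly zero mean and unit variance."""
    return [(v - mean) / std for v in values]

train_timestamps_raw = [10.0, 20.0, 30.0]
mean, std = fit_standardizer(train_timestamps_raw)
scaled = standardize(train_timestamps_raw, mean, std)
print(scaled)
```

The same `mean` and `std` from the training data should be reused for the validation and test timestamps so that all splits share one scale.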

To train the model, we need to define the loss functions for each task and the optimizer. Assuming we have a training dataset of user-item interactions with the corresponding labels, we can define the following:

# Load the data
train_data = ...
val_data = ...

# Define the loss functions
bce_loss_fn = tf.keras.losses.BinaryCrossentropy()

# Define the optimizer
optimizer = tf.keras.optimizers.Adam()

# Instantiate the model
model = C

You:

continue

ChatGPT:

# Compile the model with two loss functions
model.compile(optimizer=optimizer, loss=[bce_loss_fn, bce_loss_fn])

# Example hyperparameters (assumed values; they are not defined earlier)
batch_size = 256
num_epochs = 10

# Define the training and validation datasets as (inputs, labels) pairs,
# which is the structure that model.fit expects
train_dataset = tf.data.Dataset.from_tensor_slices((
    (train_user_ids, train_item_ids, train_timestamps),
    (train_click_labels, train_conversion_labels)))
train_dataset = train_dataset.shuffle(buffer_size=len(train_user_ids)).batch(batch_size)

val_dataset = tf.data.Dataset.from_tensor_slices((
    (val_user_ids, val_item_ids, val_timestamps),
    (val_click_labels, val_conversion_labels)))
val_dataset = val_dataset.batch(batch_size)

# Train the model
history = model.fit(train_dataset, epochs=num_epochs, validation_data=val_dataset)

In the compile method, we specify the optimizer and the loss functions for each task. We use binary crossentropy as the loss function for both tasks.

For the training and validation datasets, we group the input features into one tuple and the labels into another, and create tf.data.Dataset objects from these (inputs, labels) pairs. We shuffle the training dataset and batch both datasets. We then use the fit method to train the model, specifying the training dataset, the number of epochs, and the validation dataset.

To generate predictions for new user-item interactions, we can use the following code:

# Load the test data
test_data = ...

# Extract the input features
test_user_ids = test_data['user_id'].values
test_item_ids = test_data['item_id'].values
test_timestamps = test_data['timestamp'].values

# Generate predictions
click_probabilities, conversion_probabilities = model.predict((test_user_ids, test_item_ids, test_timestamps))

# Save the predictions (flatten the (N, 1) outputs into 1-D columns)
test_data['click_probability'] = click_probabilities.ravel()
test_data['conversion_probability'] = conversion_probabilities.ravel()
test_data.to_csv('predictions.csv', index=False)

In this code, we load the test data and extract the input features. We then use the predict method of the model to generate the click and conversion probabilities for each interaction. Finally, we save the predictions to a CSV file.

You:

great. but something may go wrong. how can i solve the user or item cold-start problem caused by unseen ids that are not in the embedding table?

ChatGPT:

Good question! Cold-start is a common problem in recommender systems, where new users or items have no or very few interactions in the training data, making it difficult to generate accurate recommendations.

One way to handle the cold-start problem is to use a hybrid approach, where we use not only collaborative filtering based on user-item interactions, but also content-based filtering based on item features. This allows us to make recommendations for new items based on their features, even if they have no interactions in the training data.

Another approach is to use pre-trained embeddings for the user and item features. We can use a pre-trained language model like BERT or GPT to generate embeddings for textual features like item titles or user profiles. These pre-trained embeddings can be fine-tuned on the training data to generate task-specific embeddings for our recommender system.

To handle unseen users or items at inference time, we can use a fallback strategy: recommend popular items to new users, and recommend a new item to users who have interacted with similar existing items.

Another approach is to use a multi-armed bandit algorithm, where we balance exploration and exploitation by recommending a mix of popular items and items similar to the ones the user has interacted with. As the user interacts more with the system, we can adjust the balance towards exploitation to generate more personalized recommendations.

You:

sounds great. but talk is cheap, show me the code.

ChatGPT:

Sure! Here’s an example of how to implement a hybrid recommender system with user and item embeddings for handling the cold-start problem:

# Import necessary libraries
import tensorflow as tf
from tensorflow.keras.layers import Input, Embedding, Concatenate, Dense, Dropout, Flatten
from tensorflow.keras.models import Model

# Define the input and embedding sizes
# (user_id_encoder and item_id_encoder are assumed to be already-fitted label
# encoders, e.g. scikit-learn LabelEncoder objects; they are not defined here)
num_users = len(user_id_encoder.classes_)
num_items = len(item_id_encoder.classes_)
embedding_size = 32

# Define the user and item input layers
user_input = Input(shape=(1,), name='user_input')
item_input = Input(shape=(1,), name='item_input')

# Define the user and item embedding layers
user_embedding = Embedding(input_dim=num_users, output_dim=embedding_size, name='user_embedding')
item_embedding = Embedding(input_dim=num_items, output_dim=embedding_size, name='item_embedding')

# Generate the user and item embeddings, flattened from
# (batch, 1, embedding_size) to (batch, embedding_size)
user_embedding_output = Flatten()(user_embedding(user_input))
item_embedding_output = Flatten()(item_embedding(item_input))

# Define the MLP layers
concatenated_embeddings = Concatenate(name='concatenated_embeddings')([user_embedding_output, item_embedding_output])
dropout_layer_1 = Dropout(0.2, name='dropout_layer_1')(concatenated_embeddings)
dense_layer_1 = Dense(64, activation='relu', name='dense_layer_1')(dropout_layer_1)
dropout_layer_2 = Dropout(0.2, name='dropout_layer_2')(dense_layer_1)
dense_layer_2 = Dense(32, activation='relu', name='dense_layer_2')(dropout_layer_2)

# Define the output layer
output_layer = Dense(1, activation='sigmoid', name='output_layer')(dense_layer_2)

# Define the model
model = Model(inputs=[user_input, item_input], outputs=output_layer)

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy')

# Train the model
model.fit([train_data['user_id'], train_data['item_id']], train_data['label'], epochs=10, validation_split=0.2)

In this code, we define the input and embedding sizes for the user and item features, and define the user and item input layers. We then define the user and item embedding layers using the Embedding class, and generate the embeddings for the user and item features using these layers.

We then define an MLP model using the Concatenate, Dropout, and Dense layers, and concatenate the user and item embeddings as input to this model. We define a sigmoid activation function on the output layer, since we are performing binary classification to predict user-item interactions.

We compile the model using the Adam optimizer and binary crossentropy loss function, and train the model on the training data, using 20% of the data for validation. Note that we use the fit method of the model, and pass the user and item features as a list in its first argument (x).

To handle the cold-start problem, we can incorporate content-based filtering by including item features, such as the item title or description, in the input to the model. We can use pre-trained language models like BERT or GPT to generate embeddings for these features, or use simpler models like TF-IDF or word2vec.

To handle unseen users or items at inference time, we can use a fallback strategy, such as recommending popular items to new users, or recommending a new item to users who have interacted with similar existing items. We can also use a multi-armed bandit algorithm to balance exploration and exploitation and generate personalized recommendations for cold-start users and items.
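To keep an unseen ID from causing an out-of-range lookup in the embedding table, one option is to reserve an index for unknown IDs when encoding. The sketch below is a minimal illustration in plain Python; the helper names and the choice of index 0 for unseen IDs are assumptions, not part of the code above.

```python
def build_id_index(train_ids):
    """Map each ID seen in training to 1..N; index 0 is reserved for unseen IDs."""
    index = {}
    for raw_id in train_ids:
        if raw_id not in index:
            index[raw_id] = len(index) + 1
    return index

def encode_ids(raw_ids, index, oov_index=0):
    """Encode raw IDs, sending anything not seen in training to oov_index."""
    return [index.get(raw_id, oov_index) for raw_id in raw_ids]

item_index = build_id_index(["a", "b", "a", "c"])
encoded = encode_ids(["b", "zzz"], item_index)

# The embedding table then needs one extra row for the reserved index
embedding_rows = len(item_index) + 1
print(encoded, embedding_rows)
```

With this encoding, `embedding_rows` would be used as `input_dim` of the Embedding layer. The reserved row only learns something useful if some training examples are mapped to it, for instance by sending very rare IDs to index 0 during training.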

You:

can you show the main coding logic for the last two strategies you mentioned above?

ChatGPT:

Sure, here are some code snippets to illustrate the two strategies:

Strategy 1: Recommending popular items to new users

# Get the top N most popular items
popular_items = train_data['item_id'].value_counts().head(N).index.tolist()

# Get the user's interactions
user_interactions = train_data[train_data['user_id'] == user_id]['item_id'].tolist()

# Remove the items the user has already interacted with
popular_items = [item for item in popular_items if item not in user_interactions]

# Recommend the remaining popular items to the user
recommendations = popular_items[:K]

In this code, we first compute the top N most popular items from the training data based on their frequency of interaction. We then get the user’s interactions from the training data, and remove the items that the user has already interacted with from the list of popular items. We then recommend the top K remaining popular items to the user.
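For readers without a pandas DataFrame at hand, the same popularity fallback can be sketched with the standard library only. The function name `recommend_popular` and the representation of interactions as a list of (user_id, item_id) pairs are assumptions made for this illustration.

```python
from collections import Counter

def recommend_popular(interactions, user_id, k):
    """Return up to k of the most interacted-with items the user has not seen.

    interactions: list of (user_id, item_id) pairs from the training data.
    """
    # Count how often each item appears across all users
    counts = Counter(item for _, item in interactions)
    # Items this user already interacted with (empty for a brand-new user)
    seen = {item for user, item in interactions if user == user_id}
    ranked = [item for item, _ in counts.most_common() if item not in seen]
    return ranked[:k]

interactions = [
    ("u1", "a"), ("u2", "a"), ("u2", "b"),
    ("u3", "a"), ("u3", "c"), ("u1", "b"),
]
print(recommend_popular(interactions, "new_user", 2))
print(recommend_popular(interactions, "u1", 2))
```

A brand-new user simply receives the globally most popular items, while an existing user has already-seen items filtered out first.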

Strategy 2: Recommending similar items to new items

import numpy as np

# Define the similarity function
def cosine_similarity(vector1, vector2):
    dot_product = np.dot(vector1, vector2)
    norm1 = np.linalg.norm(vector1)
    norm2 = np.linalg.norm(vector2)
    return dot_product / (norm1 * norm2)

# Read the trained item embedding table, shape (num_items, embedding_size),
# from the layer named 'item_embedding' in the model defined earlier
item_vectors = model.get_layer('item_embedding').get_weights()[0]

# Get the embedding of the new item
# (new_item_id must be an encoded index that has a row in the table)
new_item_embedding = item_vectors[new_item_id]

# Compute the cosine similarity between the new item and all other items
similarities = []
for item_id in range(len(item_vectors)):
    if item_id == new_item_id:
        continue
    similarity = cosine_similarity(new_item_embedding, item_vectors[item_id])
    similarities.append((item_id, similarity))

# Sort the similarities in descending order
similarities = sorted(similarities, key=lambda x: x[1], reverse=True)

# Get the top K most similar items
recommendations = [item[0] for item in similarities[:K]]

In this code, we first define a similarity function to compute the cosine similarity between two embeddings. We then get the embedding of the new item using the item embedding layer of the trained model. We compute the cosine similarity between the new item and all other items, and sort the similarities in descending order. We then recommend the top K most similar items to the new item based on the computed similarities. Note that this strategy assumes that there are some existing items in the training data that are similar to the new item, which may not always be the case.
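The multi-armed bandit idea mentioned earlier in the conversation is not shown in code, so here is a minimal epsilon-greedy sketch of it. It is one simple bandit variant chosen for illustration, not necessarily the one intended in the answer; the class name, the per-item click-rate estimate, and the default `epsilon` value are all assumptions.

```python
import random

class EpsilonGreedyRecommender:
    """Pick a random item with probability epsilon, else the best item so far."""

    def __init__(self, item_ids, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {item_id: 0 for item_id in item_ids}
        self.clicks = {item_id: 0 for item_id in item_ids}

    def _click_rate(self, item_id):
        shows = self.shows[item_id]
        return self.clicks[item_id] / shows if shows else 0.0

    def recommend(self):
        # Explore: try a random item so that new items still get exposure
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))
        # Exploit: choose the item with the highest observed click rate
        return max(self.shows, key=self._click_rate)

    def update(self, item_id, clicked):
        # Record the feedback for the item that was shown
        self.shows[item_id] += 1
        self.clicks[item_id] += int(clicked)

bandit = EpsilonGreedyRecommender(["a", "b"], epsilon=0.0)
bandit.update("a", False)
bandit.update("b", True)
print(bandit.recommend())
```

Lowering `epsilon` over time shifts the balance from exploration toward exploitation, which matches the adjustment described in the earlier answer.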