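I recently wanted to train an instance segmentation model on remote sensing imagery. Looking through existing blog posts, I found few that cover how to split the iSAID dataset into patches and convert its annotations, so I decided to set aside some time and write a detailed tutorial.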

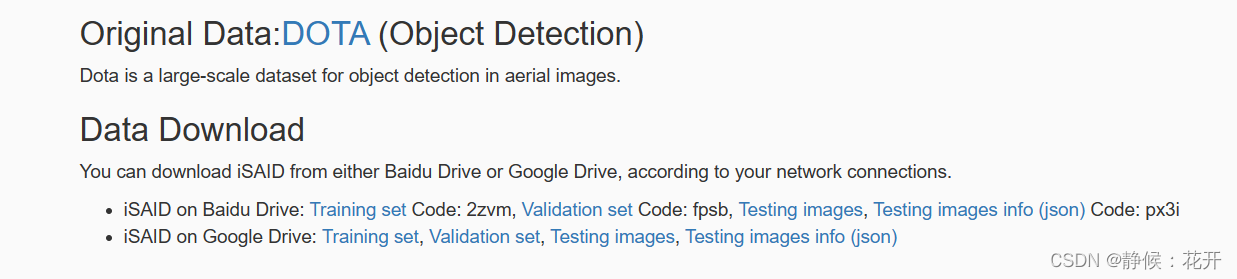

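Downloading the iSAID dataset

iSAID dataset link

Download the dataset from the link above.

The train and val folders on Baidu Netdisk contain both the instance and the semantic segmentation labels.

The link above provides only the labels. The original images come from DOTA: DOTA image link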

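Once both downloads are complete, you should have:

train: 1411 original images, 1411 instance labels, and 1411 semantic labels.

Put all of the training files together in a new folder, iSAID/train/

val: 458 original images, 458 instance labels, and 458 semantic labels.

Put all of the validation files together in a new folder, iSAID/val/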
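Before moving on, it can help to confirm that each folder really holds the expected number of files. The sketch below is only an illustration: it assumes the usual iSAID naming, where instance masks end in `_instance_id_RGB.png` and semantic masks end in `_instance_color_RGB.png`, and the helper name `count_by_kind` is made up for this post.

```python
from collections import Counter

# Assumed iSAID file-name suffixes; adjust them if your download differs.
INSTANCE_SUFFIX = "_instance_id_RGB.png"
SEMANTIC_SUFFIX = "_instance_color_RGB.png"


def count_by_kind(filenames):
    """Count raw images, instance masks and semantic masks in a list of file names."""
    counts = Counter(images=0, instance=0, semantic=0)
    for name in filenames:
        if not name.endswith(".png"):
            continue  # ignore anything that is not a PNG
        if name.endswith(INSTANCE_SUFFIX):
            counts["instance"] += 1
        elif name.endswith(SEMANTIC_SUFFIX):
            counts["semantic"] += 1
        else:
            counts["images"] += 1
    return dict(counts)


# Typical use (not executed here):
#   import os
#   print(count_by_kind(os.listdir("iSAID/train")))
```

For the training folder, all three counts should be 1411; for the validation folder, 458.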

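Splitting the images and labels into patches

Download the patch-splitting code: patch splitting and label conversion

If the images are not split, training runs out of GPU memory, because each image contains a large number of instances and has a very high resolution.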
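To make the idea of patch splitting concrete, here is a minimal sliding-window sketch. It is not the code from the downloaded split script. The patch size of 800 and stride of 600 are assumptions chosen for illustration, and `patch_origins` and `patch_boxes` are hypothetical helper names.

```python
def patch_origins(length, patch=800, stride=600):
    """Return window start offsets that cover the range [0, length)."""
    if length <= patch:
        return [0]  # the image is smaller than one patch
    starts = list(range(0, length - patch, stride))
    starts.append(length - patch)  # last window sits flush with the border
    return starts


def patch_boxes(width, height, patch=800, stride=600):
    """Return (x0, y0, x1, y1) crop boxes covering a width x height image."""
    boxes = []
    for y0 in patch_origins(height, patch, stride):
        for x0 in patch_origins(width, patch, stride):
            boxes.append((x0, y0, min(x0 + patch, width), min(y0 + patch, height)))
    return boxes


# A 2000 x 1400 image yields 3 columns x 2 rows of overlapping patches.
print(len(patch_boxes(2000, 1400)))
```

Overlapping windows reduce the chance that an object is cut in half in every patch that contains it, at the cost of producing more patches.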

Run split.py as described in the readme. Before running it, change the default of the '--set' argument to default="train,val"
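For readers unfamiliar with argparse, the change looks roughly like the sketch below. This is not the actual content of split.py; only the `--set` flag and its new default come from this post, and the rest (the description, the help text, the `build_parser` name) is assumed for illustration.

```python
import argparse


def build_parser():
    """Build a parser with a '--set' option that defaults to both splits."""
    parser = argparse.ArgumentParser(description="Split iSAID images into patches")
    parser.add_argument(
        "--set",
        type=str,
        default="train,val",  # process both splits when no flag is given
        help="comma-separated list of dataset splits to process",
    )
    return parser


# With no command-line arguments, the default is used.
args = build_parser().parse_args([])
splits = args.set.split(",")
print(splits)
```

With this default in place, running the script without any flags processes the training and validation sets in a single pass.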

The script now splits the images into patches (this takes a long time).

When it finishes, the iSAID_patches folder contains:

train/: 84087 images

val/: 19024 images

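Step 2: generate the labels

Run preprocess.py.

Note: the lycon library is required. If its installation fails, run the following on the Ubuntu command line and then retry the installation:

sudo apt-get install cmake build-essential libjpeg-dev libpng-dev

When the script finishes, it will have produced one large JSON file in COCO format.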
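A COCO-format file is a single JSON object with `images`, `annotations` and `categories` lists. As a quick sanity check that needs nothing beyond the standard library, the sketch below counts annotations per class in an already-loaded dictionary. The function name `summarize_coco` and the tiny sample data are made up for illustration.

```python
from collections import Counter


def summarize_coco(data):
    """Summarize a COCO-style dict: image count, annotation count, per-class counts."""
    id_to_name = {cat["id"]: cat["name"] for cat in data["categories"]}
    per_class = Counter(id_to_name[ann["category_id"]] for ann in data["annotations"])
    return {
        "images": len(data["images"]),
        "annotations": len(data["annotations"]),
        "per_class": dict(per_class),
    }


# Tiny hand-made example; a real file would be loaded with
#   import json
#   with open("instances_train2017.json") as f:
#       data = json.load(f)
sample = {
    "images": [{"id": 1}, {"id": 2}],
    "categories": [{"id": 1, "name": "ship"}, {"id": 2, "name": "plane"}],
    "annotations": [
        {"image_id": 1, "category_id": 1},
        {"image_id": 1, "category_id": 1},
        {"image_id": 2, "category_id": 2},
    ],
}
print(summarize_coco(sample))
```

Loading the full file this way keeps everything in memory, so expect it to take a while on a JSON file of this size.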

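Converting to YOLO format and training

First, use the official COCO API to list the object categories and count the images and annotations: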

# -*- coding: utf-8 -*-
# -----------------------------------------------------
# Time : 2023/2/27 11:28
# Auth : Written by zuofengyuan
# File : 统计coco信息.py
# Copyright (c) Shenyang Pedlin Technology Co., Ltd.
# -----------------------------------------------------
"""
Description: print the categories and the image/annotation counts of a COCO-format JSON file.
"""
from pycocotools.coco import COCO

# File paths
dataDir = r'l/'
dataType = 'train2017'  # or 'val2017'
annFile = '{}/instances_{}.json'.format(dataDir, dataType)

# Initialize the COCO API for instance annotations
coco_train = COCO(annFile)

# Display all category names
cats = coco_train.loadCats(coco_train.getCatIds())
cat_nms = [cat['name'] for cat in cats]
print('COCO categories:\n{}'.format('\n'.join(cat_nms)) + '\n')

# Count the images and annotations of selected categories.
# "person" and "motorcycle" are COCO category names that do not occur in
# iSAID, so nothing is printed here; replace them with iSAID names such as
# "ship" to get per-category counts.
for cat_name in cat_nms:
    catId = coco_train.getCatIds(catNms=cat_name)
    if cat_name in ("person", "motorcycle"):
        print(catId)
        imgId = coco_train.getImgIds(catIds=catId)
        annId = coco_train.getAnnIds(imgIds=imgId, catIds=catId, iscrowd=False)
        print("{:<15} {:<6d} {:<10d}\n".format(cat_name, len(imgId), len(annId)))

# Count all categories, images and annotations
print("NUM_categories: " + str(len(coco_train.dataset['categories'])))
print("NUM_images: " + str(len(coco_train.dataset['images'])))
print("NUM_annotations: " + str(len(coco_train.dataset['annotations'])))

loading annotations into memory...

Done (t=19.50s)

creating index...

index created!

COCO categories:

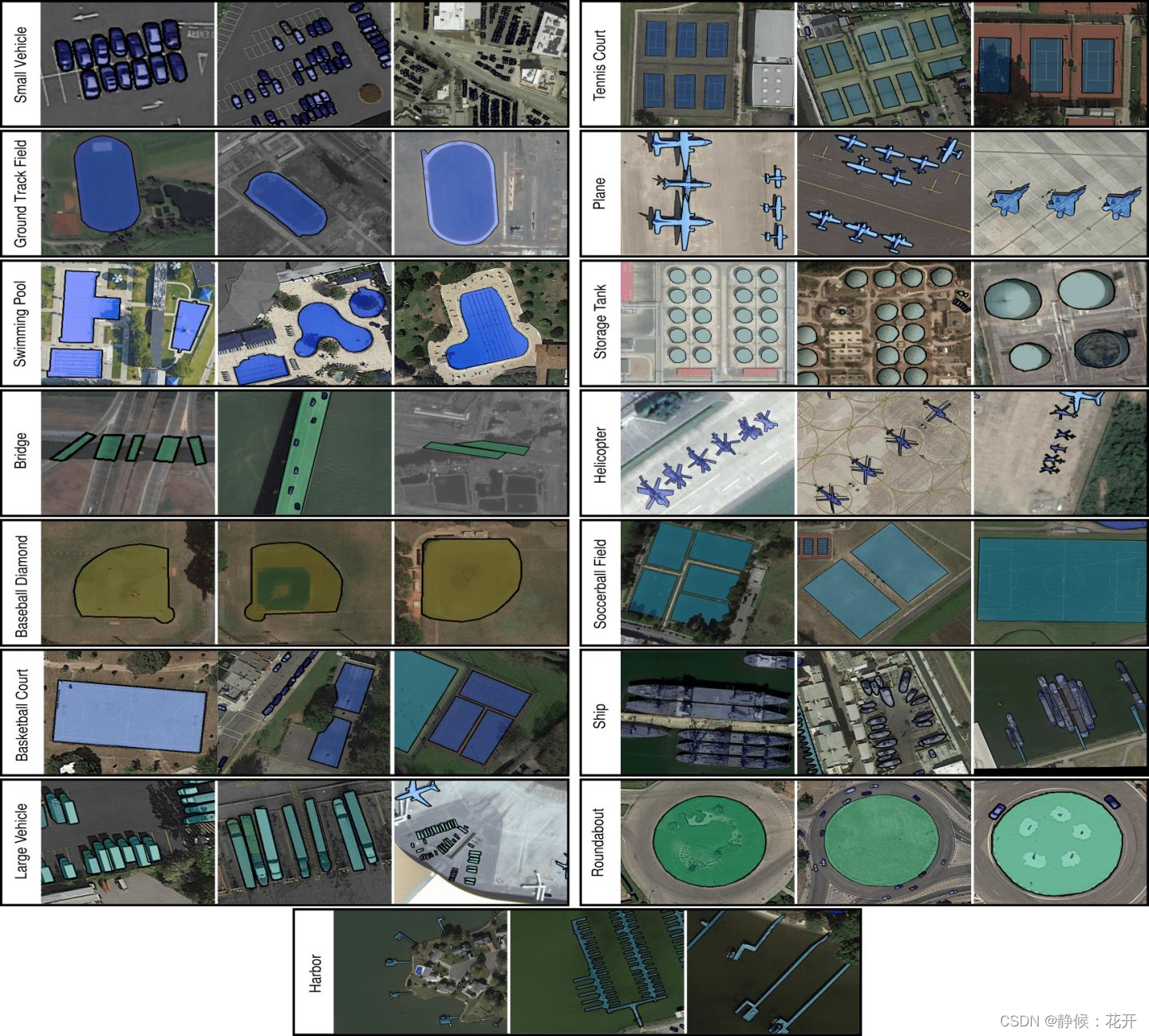

Small_Vehicle

Large_Vehicle

plane

storage_tank

ship

Swimming_pool

Harbor

tennis_court

Ground_Track_Field

Soccer_ball_field

baseball_diamond

Bridge

basketball_court

Roundabout

Helicopter

NUM_categories: 15

NUM_images: 28029

NUM_annotations: 704684

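Next, use the official conversion code, JSON-yolomask, to convert the large COCO-format JSON file into many YOLO-format label files that store the polygon points of each instance.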
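To show what the conversion produces, the sketch below turns one COCO polygon into one line of a YOLO segmentation label: a class index followed by x, y pairs normalized by the image size. It is a simplified illustration, not the converter's actual code. It assumes category ids are contiguous and start at 1, and the function name `coco_polygon_to_yolo` is hypothetical.

```python
def coco_polygon_to_yolo(category_id, polygon, img_w, img_h):
    """Convert a flat COCO polygon [x1, y1, x2, y2, ...] to a YOLO segment line."""
    if len(polygon) < 6 or len(polygon) % 2 != 0:
        raise ValueError("a polygon needs at least 3 (x, y) points")
    cls = category_id - 1  # assumption: COCO ids are 1-based and contiguous
    values = []
    for x, y in zip(polygon[0::2], polygon[1::2]):
        values.append(x / img_w)  # normalize x by the image width
        values.append(y / img_h)  # normalize y by the image height
    return " ".join([str(cls)] + ["{:.6f}".format(v) for v in values])


# A triangle inside an 800 x 800 patch, belonging to category id 1.
line = coco_polygon_to_yolo(1, [0, 0, 400, 0, 400, 800], 800, 800)
print(line)
```

Each image gets one text file with one such line per instance, which is why a single JSON file turns into so many small label files.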

Then update the dataset YAML file:

train: ../JSON2YOLO-master/new_dir/images/train2017  # train images (relative to 'path')
val: ../JSON2YOLO-master/new_dir/images/train2017  # val images (relative to 'path')
test:  # test images (optional)

# Classes
names:
  0: Small_Vehicle
  1: Large_Vehicle
  2: plane
  3: storage_tank
  4: ship
  5: Swimming_pool
  6: Harbor
  7: tennis_court
  8: Ground_Track_Field
  9: Soccer_ball_field
  10: baseball_diamond
  11: Bridge
  12: basketball_court
  13: Roundabout
  14: Helicopter
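Because the class list is typed by hand, it is easy to repeat or drop a name. The sketch below compares a `names` mapping against the category names reported by the COCO API and lists any problems. The helper `check_class_names` and the shortened sample data are illustrative only.

```python
def check_class_names(names, coco_categories):
    """Return a list of problems found when comparing YAML names with COCO categories."""
    problems = []
    values = list(names.values())
    duplicates = sorted({n for n in values if values.count(n) > 1})
    if duplicates:
        problems.append("duplicate names: {}".format(duplicates))
    missing = sorted(set(coco_categories) - set(values))
    if missing:
        problems.append("missing categories: {}".format(missing))
    if len(values) != len(coco_categories):
        problems.append(
            "expected {} classes, found {}".format(len(coco_categories), len(values))
        )
    return problems


# Shortened example: one name repeated and one category left out.
categories = ["plane", "storage_tank", "ship"]
names = {0: "plane", 1: "ship", 2: "ship"}
for problem in check_class_names(names, categories):
    print(problem)
```

An empty result means every category appears exactly once. The order of the indices still has to be checked against the category ids used by the converter.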

After updating the configuration, start training:

python segment/train.py

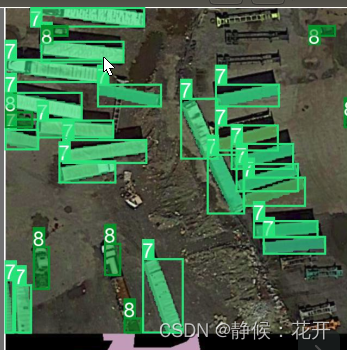

Inspecting the training images
