Preface

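- Sharing a K8s cluster traffic viewer: a very lightweight tool that makes monitoring easy
- This post covers:
  - A brief introduction to Kubeshark
  - Downloading and running Kubeshark on Windows and Linux, with a monitoring demo
  - An overview of Kubeshark's features
- If I have misunderstood anything, corrections are welcome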

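For each of us there is only one true duty: to find ourselves, then to hold fast to that in our hearts for a lifetime, wholeheartedly and without ever stopping. Every other path is incomplete: a way of escaping, a cowardly retreat into the ideals of the masses, drifting with the current, fear of one's own inner self. (Hermann Hesse, Demian)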

Introduction

Kubeshark grew out of Mizu, a K8s API traffic viewer open-sourced by UP9 in 2021, and aims to become a tool for monitoring K8s traffic end to end.

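Kubeshark is also described as an API traffic viewer for Kubernetes. It provides deep visibility into, and monitoring of, all API traffic and payloads going into, coming out of, and moving between the pods of a Kubernetes cluster. Think of it as TCPDump and Wireshark reinvented for Kubernetes.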

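According to its authors, Kubeshark is a distributed packet capture with a minimal footprint, built specifically to run on large production clusters.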

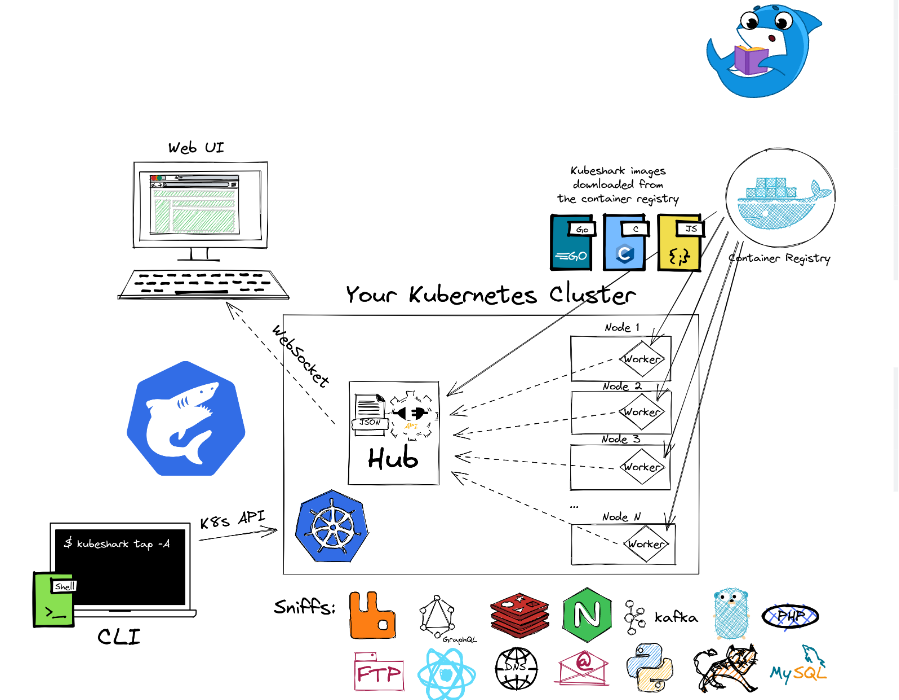

Kubeshark architecture

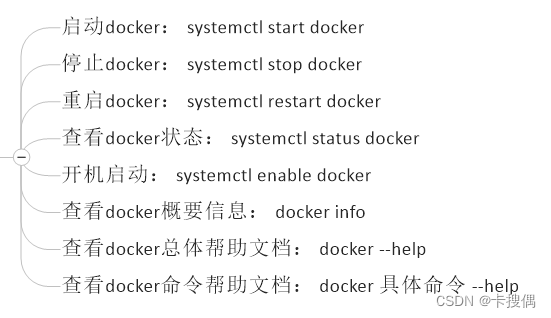

Kubeshark consists of four distinct pieces of software that work together: the CLI, the Hub, the Worker, and PCAP-based distributed storage.

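CLI (command-line interface): the binary distribution of the Kubeshark client, written in Go. It communicates with the cluster through the K8s API to deploy the Hub and Worker pods, and is used to start and stop Kubeshark.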

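Hub: coordinates the Worker deployment, receives the sniffed and dissected traffic from each Worker, and collects it in one central location. It also backs the web UI used to view the collected traffic in a browser; in the setup used in this post, that UI is served by a separate `kubeshark-front` service on local port 8899, while the Hub is exposed on port 8898, as the startup logs below show.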

Worker: deployed to the cluster as a DaemonSet, so that every node in the cluster is covered by Kubeshark.

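PCAP-based distributed storage: each Worker stores the TCP streams it captures on its node's root file system. Kubeshark's configuration includes a storage limit, 200MB by default, which can be changed with a CLI option (`--storagelimit`, used later in this post). The limit applies per node: once it is reached, the oldest TCP streams are removed.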
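Because the limit is applied per node, the total disk space Kubeshark may use grows with the number of nodes running a Worker. The helper below is a hypothetical sketch (not part of Kubeshark) that does this arithmetic. It assumes the limit is written in MB, as in the `--storagelimit 5000MB` examples in this post, and that exactly one Worker runs per node; taints or node selectors could make the real number smaller.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of Kubeshark: worst-case capture storage
# across the cluster, assuming one Worker per node, each keeping up to the
# per-node --storagelimit. Only plain "<number>MB" limits are handled.
worst_case_storage_mb() {
  local limit="$1" nodes="$2"
  local mb="${limit%MB}"              # strip the unit: "5000MB" -> "5000"
  nodes="${nodes//[[:space:]]/}"      # tolerate padded output from `wc -l`
  if [[ ! "$mb" =~ ^[0-9]+$ || ! "$nodes" =~ ^[0-9]+$ ]]; then
    echo "usage: worst_case_storage_mb <N>MB <node-count>" >&2
    return 1
  fi
  # 10# forces base 10, so values such as "08" are not read as octal.
  echo $(( 10#$mb * 10#$nodes ))
}
```

For the current cluster, something like `worst_case_storage_mb 5000MB "$(kubectl get nodes --no-headers | wc -l)"` gives the figure. For example, with `--storagelimit 5000MB` and the 8 Worker pods that `kubeshark check` reports later in this post, it prints 40000, i.e. roughly 40 GB of captures across the cluster.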

Download, installation & feature demo

Installing on Windows

Download the binary as follows:

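```
PS C:\Users\山河已无恙> curl.exe -Lo kubeshark.exe https://github.com/kubeshark/kubeshark/releases/download/38.5/kubeshark.exe
```

`curl.exe` is named explicitly because in Windows PowerShell `curl` is an alias for `Invoke-WebRequest`, which does not accept these options; `-L` makes curl follow GitHub's redirect from the release URL to the actual file.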

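Before running it, copy the cluster's kubeconfig file to the Windows machine (the log below shows it being read from `.kube\config` under the user's home directory). Kubeshark can only be run from the command line; `.\kubeshark.exe tap -A` monitors traffic in all namespaces: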

```
PS C:\Users\山河已无恙> .\kubeshark.exe tap -A
2023-03-03T12:08:20-05:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T12:08:20-05:00 INF tapRunner.go:45 > Using Docker: registry=docker.io/kubeshark/ tag=latest
2023-03-03T12:08:20-05:00 INF tapRunner.go:53 > Kubeshark will store the traffic up to a limit (per node). Oldest TCP streams will be removed once the limit is reached. limit=200MB
2023-03-03T12:08:20-05:00 INF common.go:69 > Using kubeconfig: path="C:\\Users\\山河已无恙\\.kube\\config"
2023-03-03T12:08:20-05:00 INF tapRunner.go:74 > Targeting pods in: namespaces=[""]
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-5v7hl
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-7dnmk
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-7l4zf
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-dj6dm
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-sjpq8
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: alertmanager-release-name-kube-promethe-alertmanager-0
2023-03-03T12:08:20-05:00 INF tapRunner.go:129 > Targeted pod: details-v1-5ffd6b64f7-wfbl2
...
```

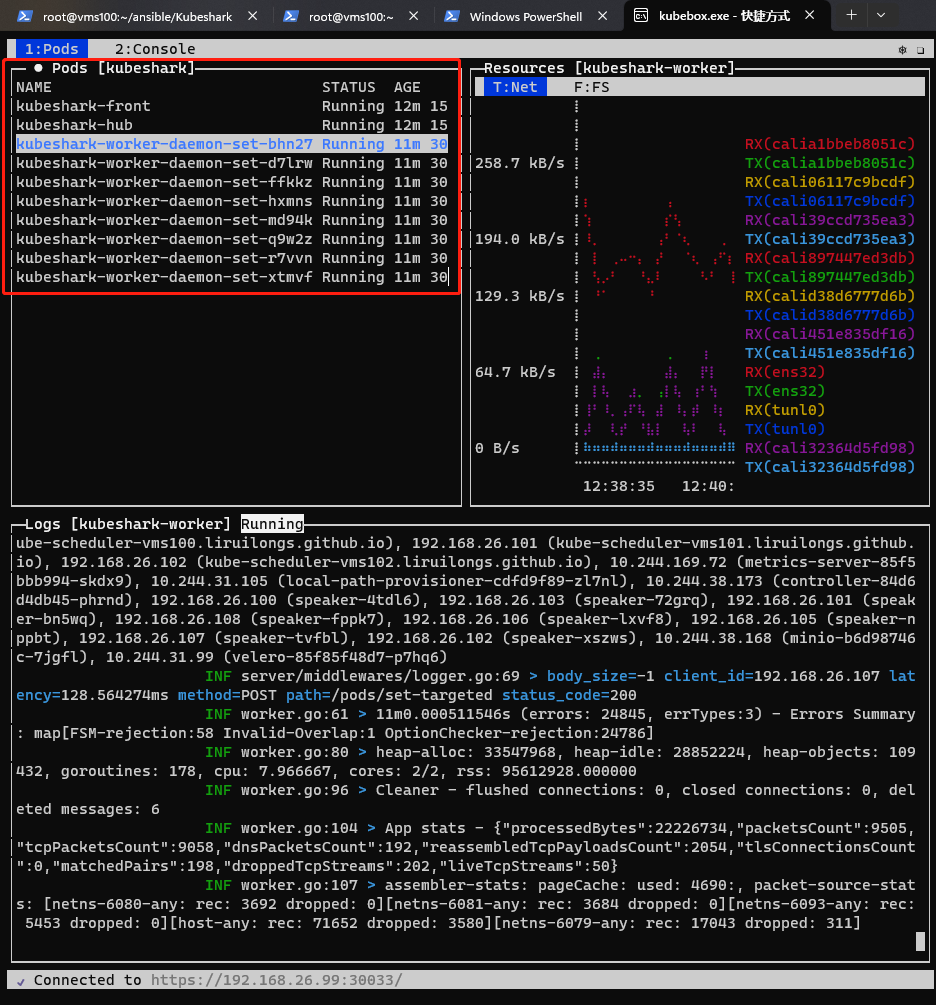

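Make sure all pods have started successfully.

If the pods are created normally, the monitoring page opens automatically.

If startup fails (the pods are not created, or the monitoring page does not open and ports 8899/8898 are reported as unreachable), try cleaning up the environment with `kubeshark.exe clean` and then running it again. You can also try setting the storage size explicitly with `--storagelimit 5000MB`.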

```
PS C:\Users\山河已无恙> .\kubeshark.exe clean
2023-03-03T12:59:58-05:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T12:59:58-05:00 INF common.go:69 > Using kubeconfig: path="C:\\Users\\山河已无恙\\.kube\\config"
2023-03-03T12:59:58-05:00 WRN cleanResources.go:16 > Removing Kubeshark resources...
```


Then start it again:

```
PS C:\Users\山河已无恙> .\kubeshark.exe tap -A --storagelimit 5000MB
2023-03-03T12:30:36-05:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T12:30:36-05:00 INF tapRunner.go:45 > Using Docker: registry=docker.io/kubeshark/ tag=latest
2023-03-03T12:30:36-05:00 INF tapRunner.go:53 > Kubeshark will store the traffic up to a limit (per node). Oldest TCP streams will be removed once the limit is reached. limit=5000MB
2023-03-03T12:30:36-05:00 INF common.go:69 > Using kubeconfig: path="C:\\Users\\山河已无恙\\.kube\\config"
2023-03-03T12:30:37-05:00 INF tapRunner.go:74 > Targeting pods in: namespaces=[""]
2023-03-03T12:30:37-05:00 INF tapRunner.go:129 > Targeted pod: cadvisor-5v7hl
...
2023-03-03T12:31:21-05:00 INF proxy.go:29 > Starting proxy... namespace=kubeshark service=kubeshark-hub src-port=8898
2023-03-03T12:31:21-05:00 INF workers.go:32 > Creating the worker DaemonSet...
2023-03-03T12:31:21-05:00 INF workers.go:51 > Successfully created the worker DaemonSet.
2023-03-03T12:31:23-05:00 INF tapRunner.go:402 > Hub is available at: url=http://localhost:8898
2023-03-03T12:31:23-05:00 INF proxy.go:29 > Starting proxy... namespace=kubeshark service=kubeshark-front src-port=8899
2023-03-03T12:31:23-05:00 INF tapRunner.go:418 > Kubeshark is available at: url=http://localhost:8899
```

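After several tests, I found that startup failures are intermittent but happen fairly often. Possible causes are image pulls timing out, or the port-forward proxy not being created, so that the local ports cannot be reached.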

Features

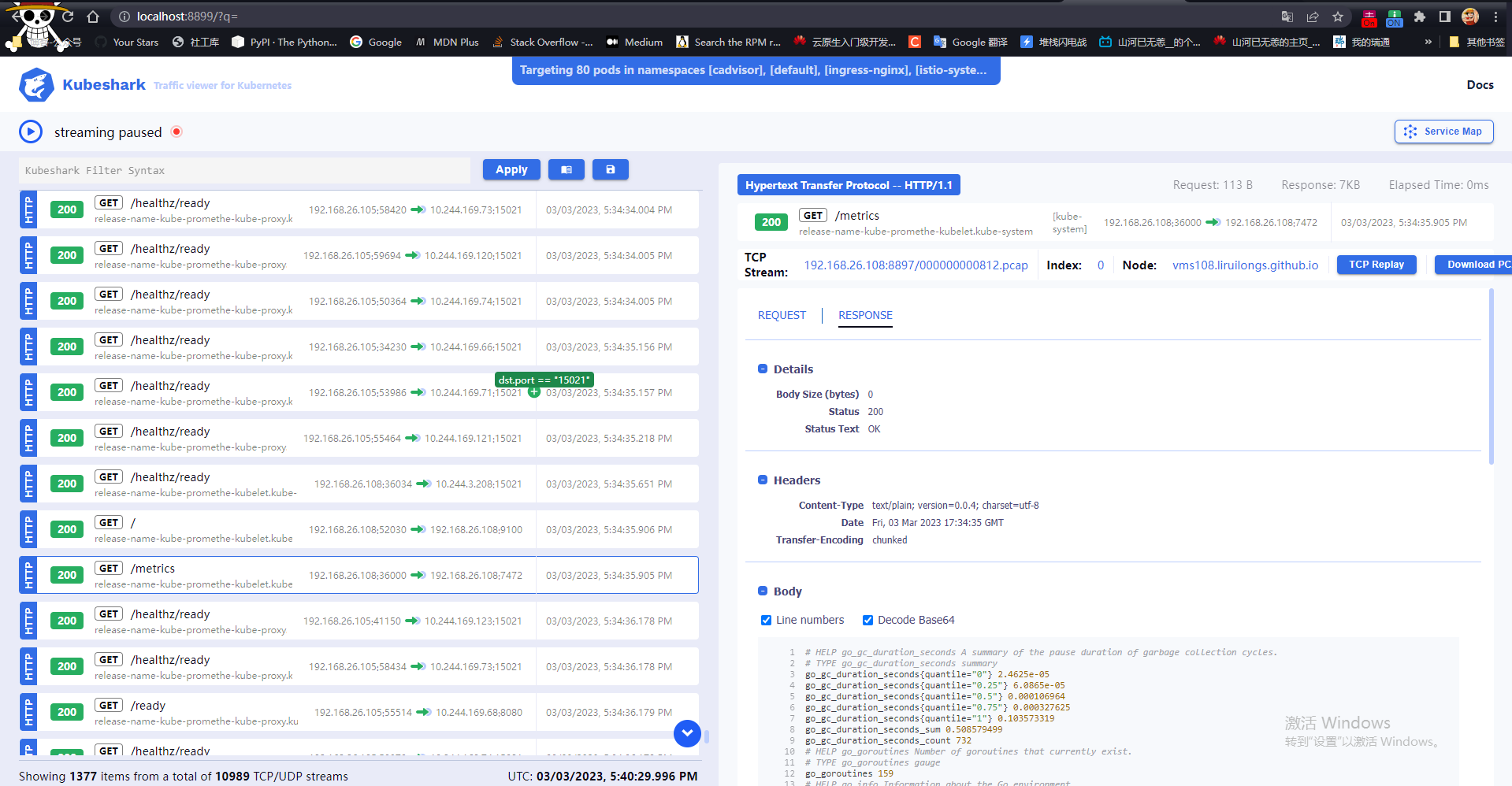

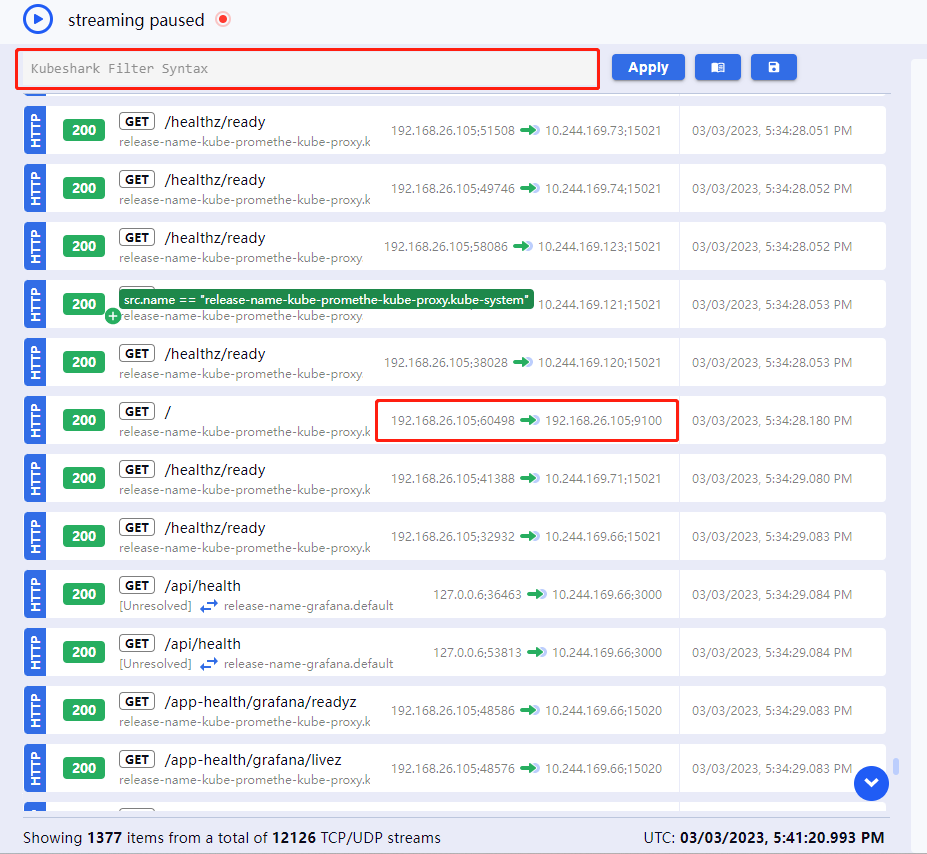

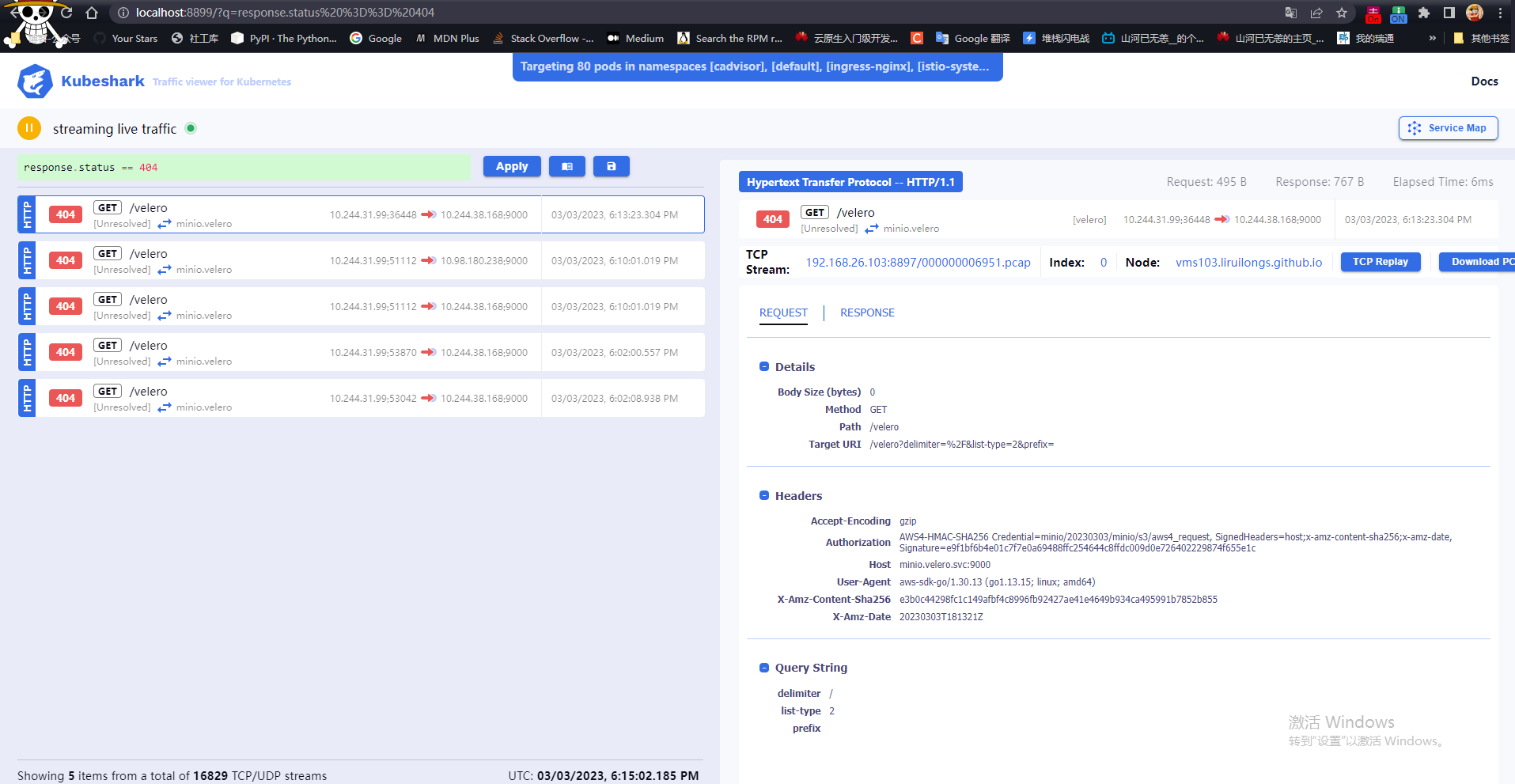

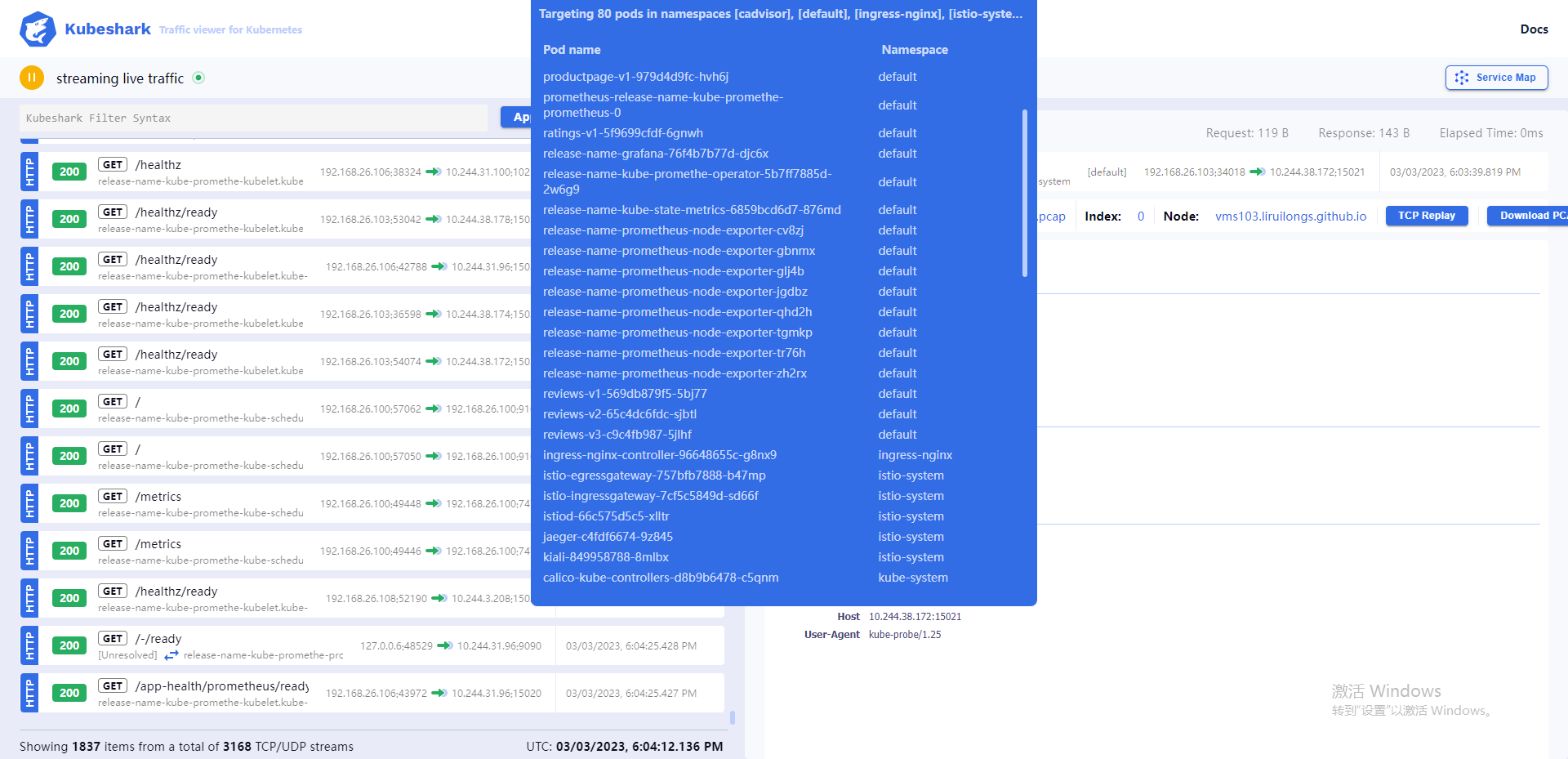

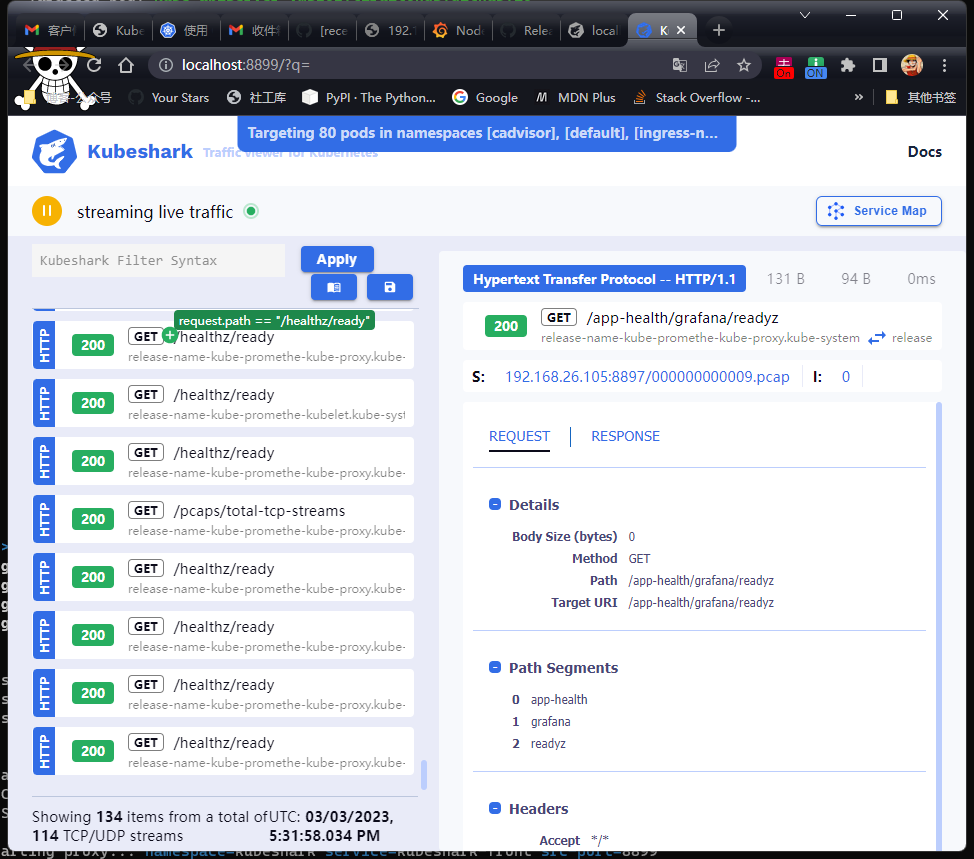

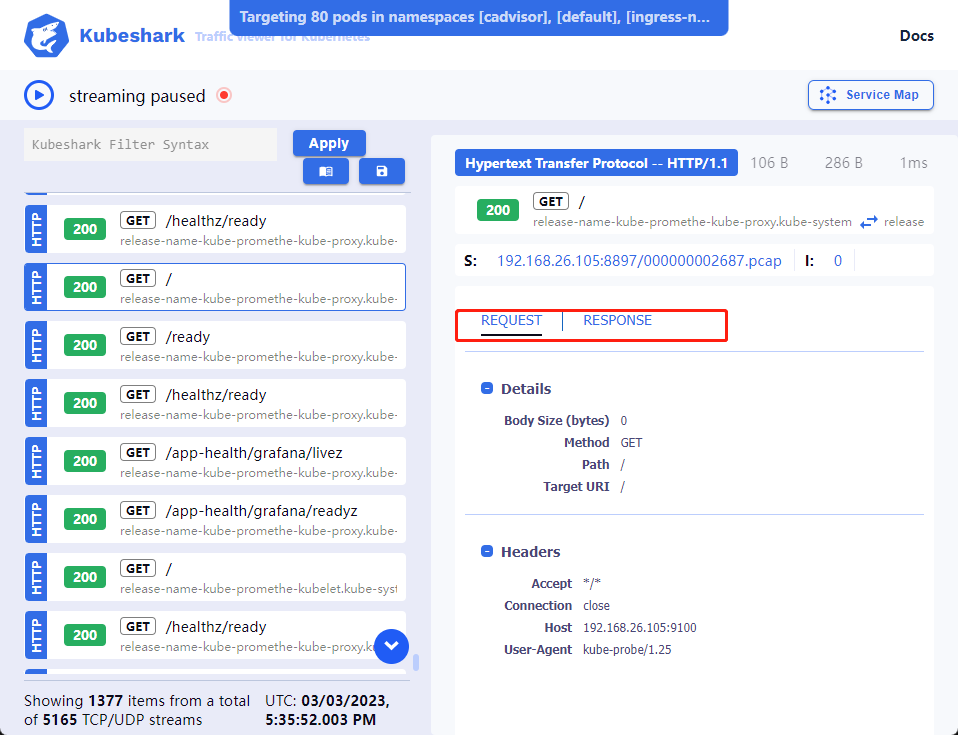

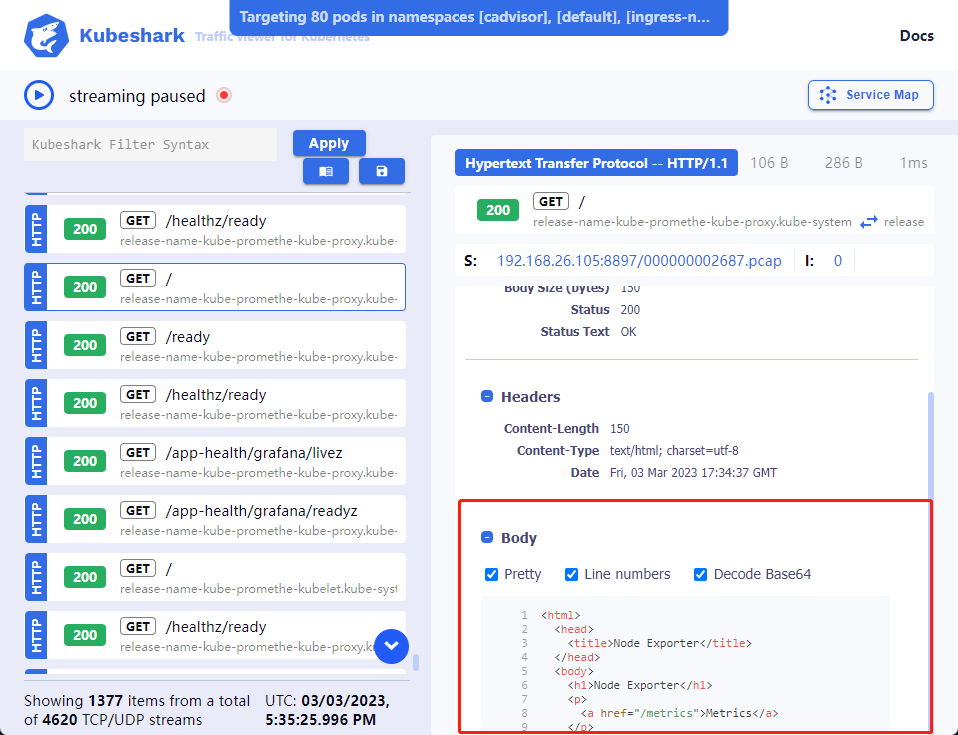

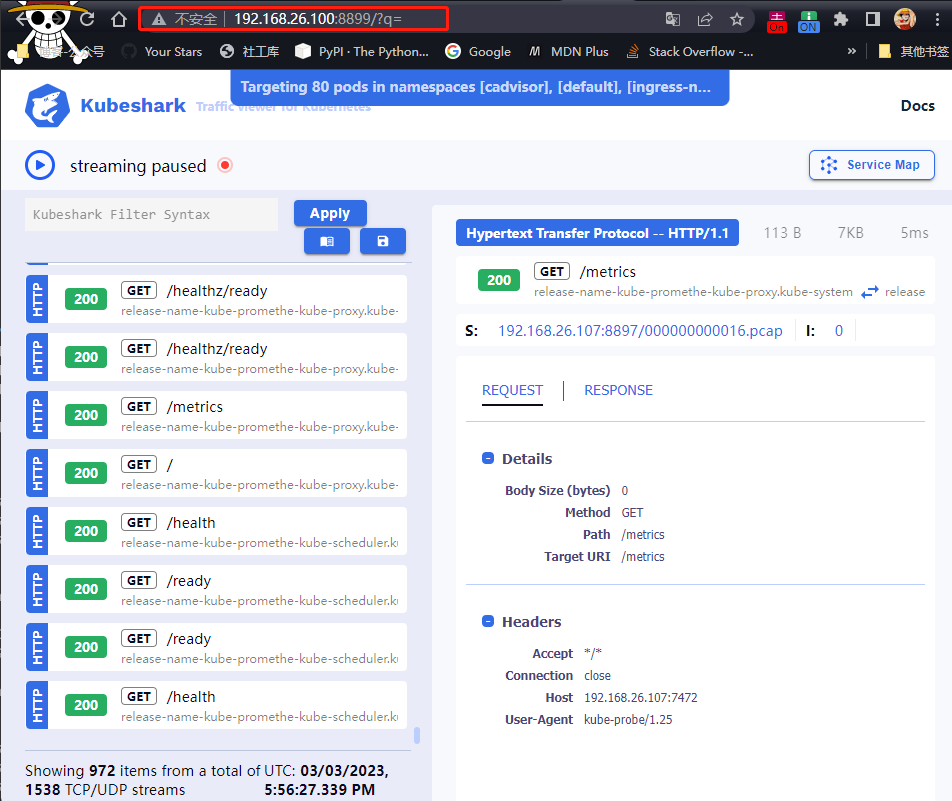

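The monitoring page shows, for each captured entry, the protocol, the request path, the response status, the request method, the source and destination IPs, and the pod that initiated it. Entries can be narrowed down with filters.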
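Kubeshark applies these filters inside its web UI, using its own filter expression language with separate documentation. Purely to illustrate what a filter such as "HTTP entries with status 404" selects, the hypothetical sketch below models each captured entry as one tab-separated line carrying the fields listed above and filters it with awk. The record format and the function name are assumptions for illustration, not something Kubeshark exports.

```bash
#!/usr/bin/env bash
# Hypothetical illustration only; Kubeshark does this filtering in its UI.
# Assumed record format, one captured entry per line, tab-separated:
#   protocol  method  path  status  src-ip  dst-ip  pod
filter_http_status() {
  local want="$1"
  # Print only HTTP entries whose status field (4th column) equals $want.
  awk -F'\t' -v want="$want" '$1 == "HTTP" && $4 == want'
}
```

Piping a few such lines through `filter_http_status 404` keeps only the HTTP entries that returned 404, which is the same selection the 404 filter below makes in the UI.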

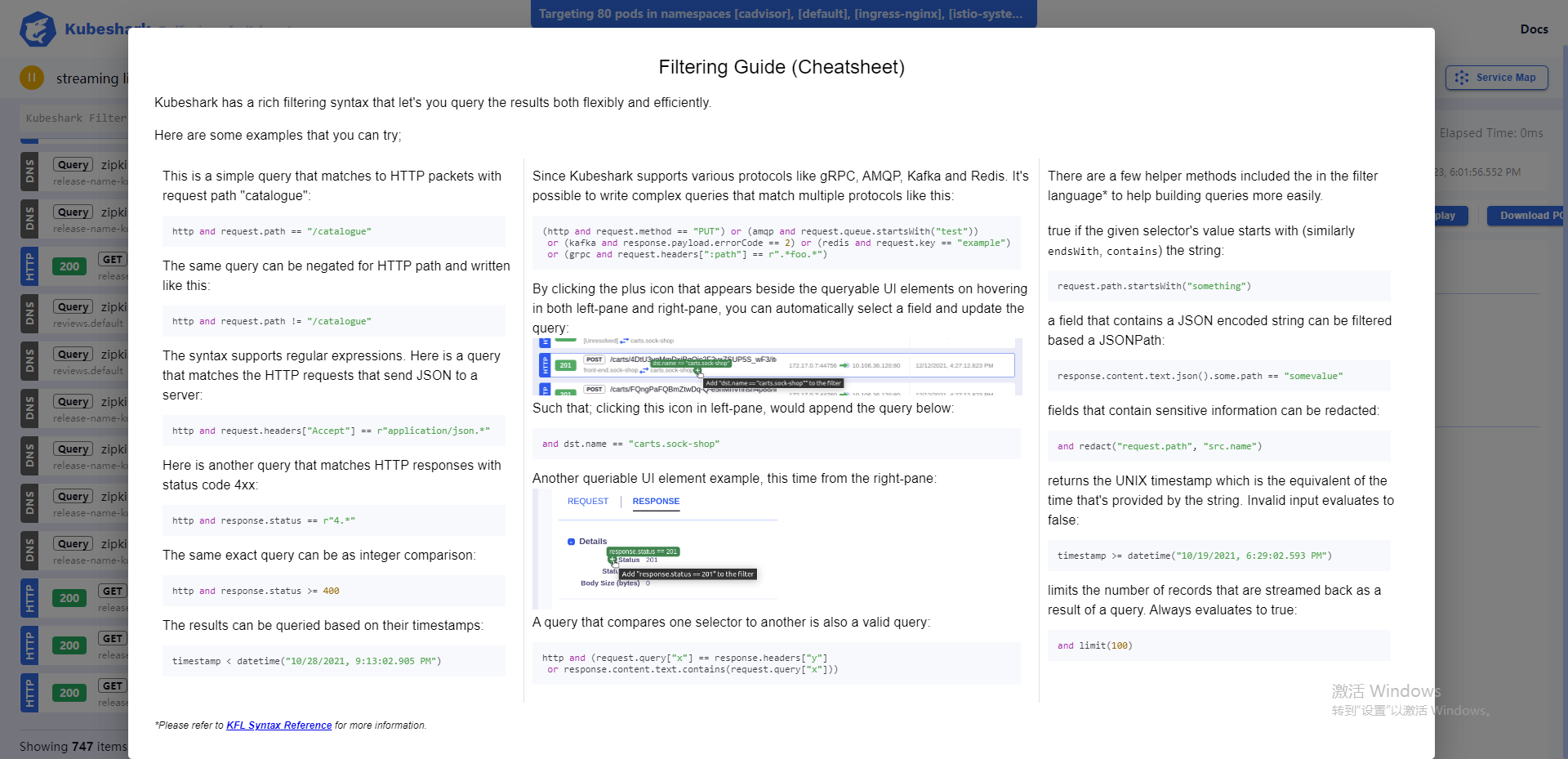

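Filter expressions have their own documentation, with demos.

Filtering HTTP requests whose response status code is 404:

Here you can see that the cluster backup tool velero may have a problem; check the corresponding topology to confirm.

The top of the page can show a list of all pods.

Select an entry to view its details:

The request and the response:

You can also view the full contents of each message.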

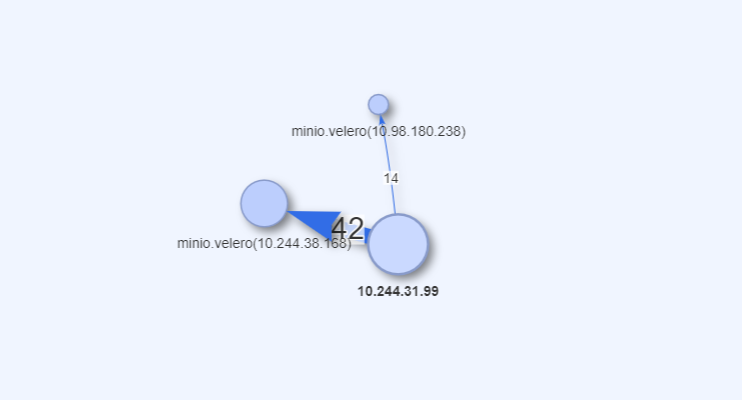

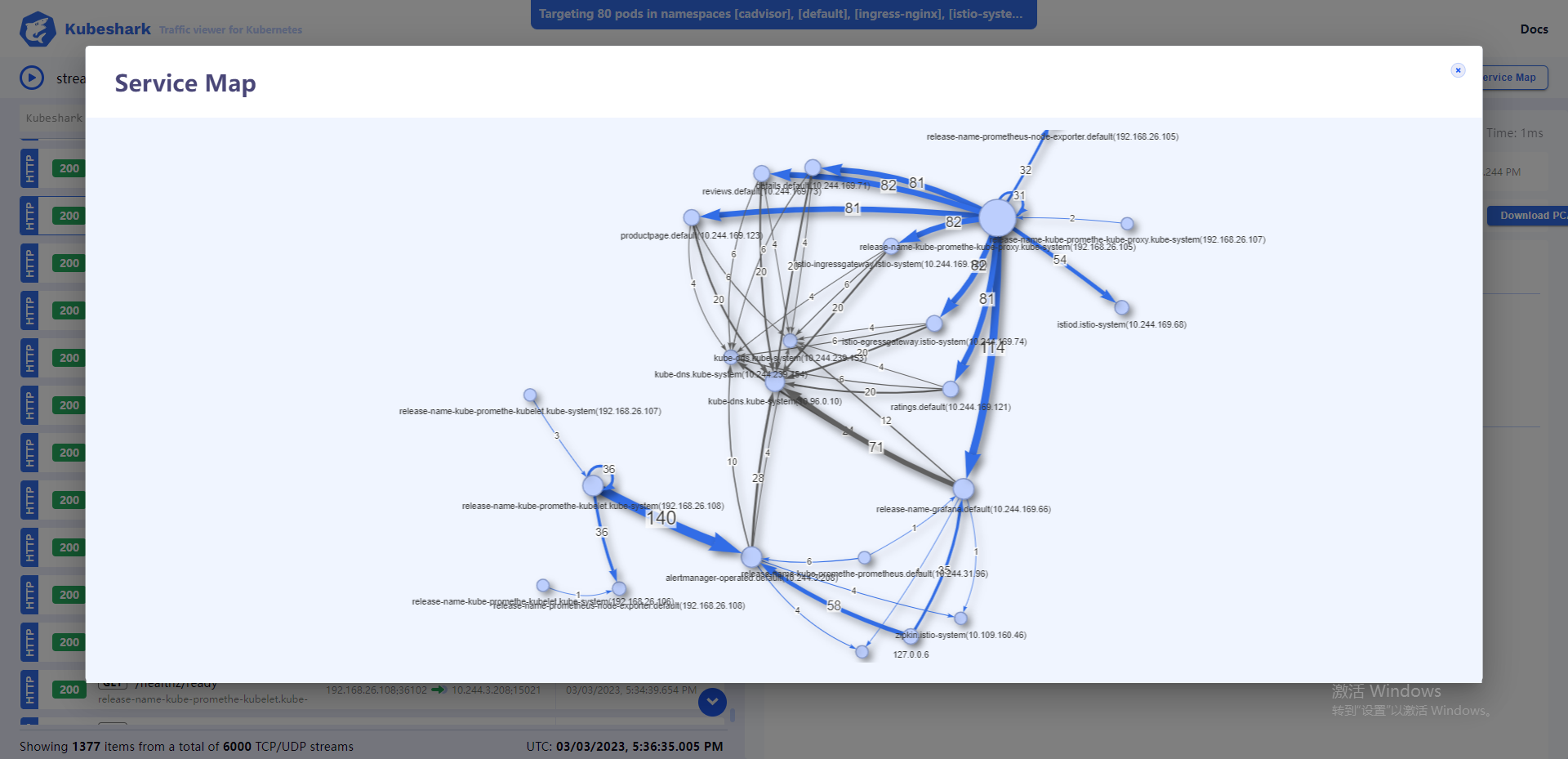

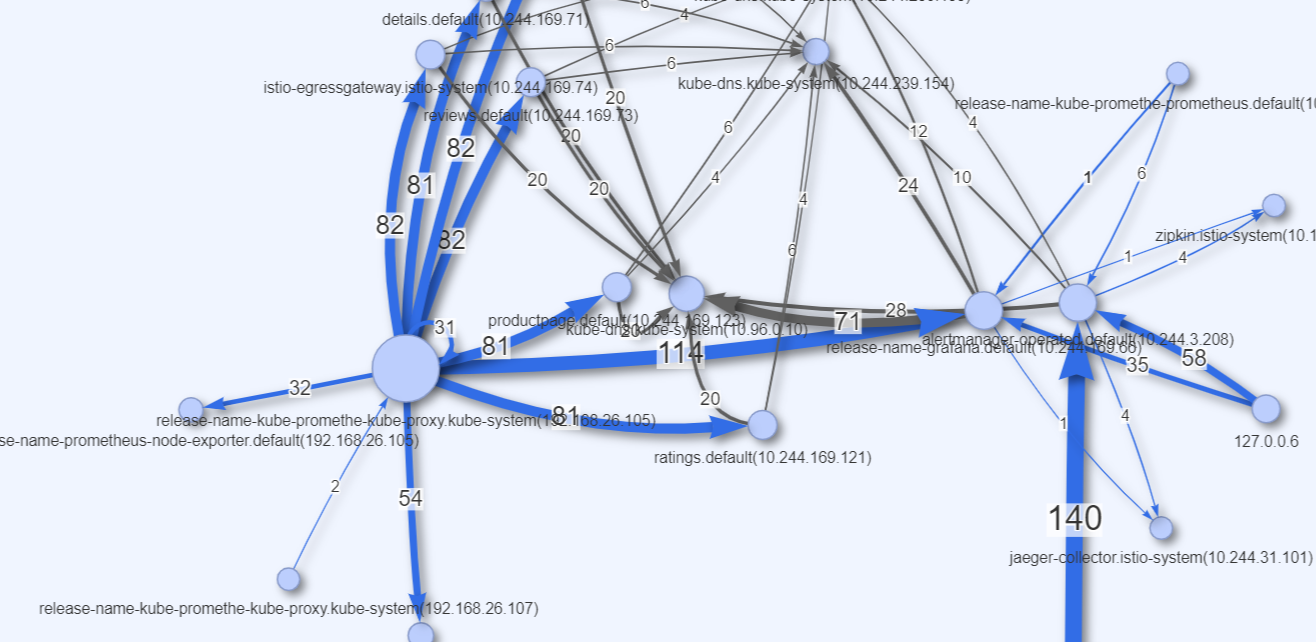

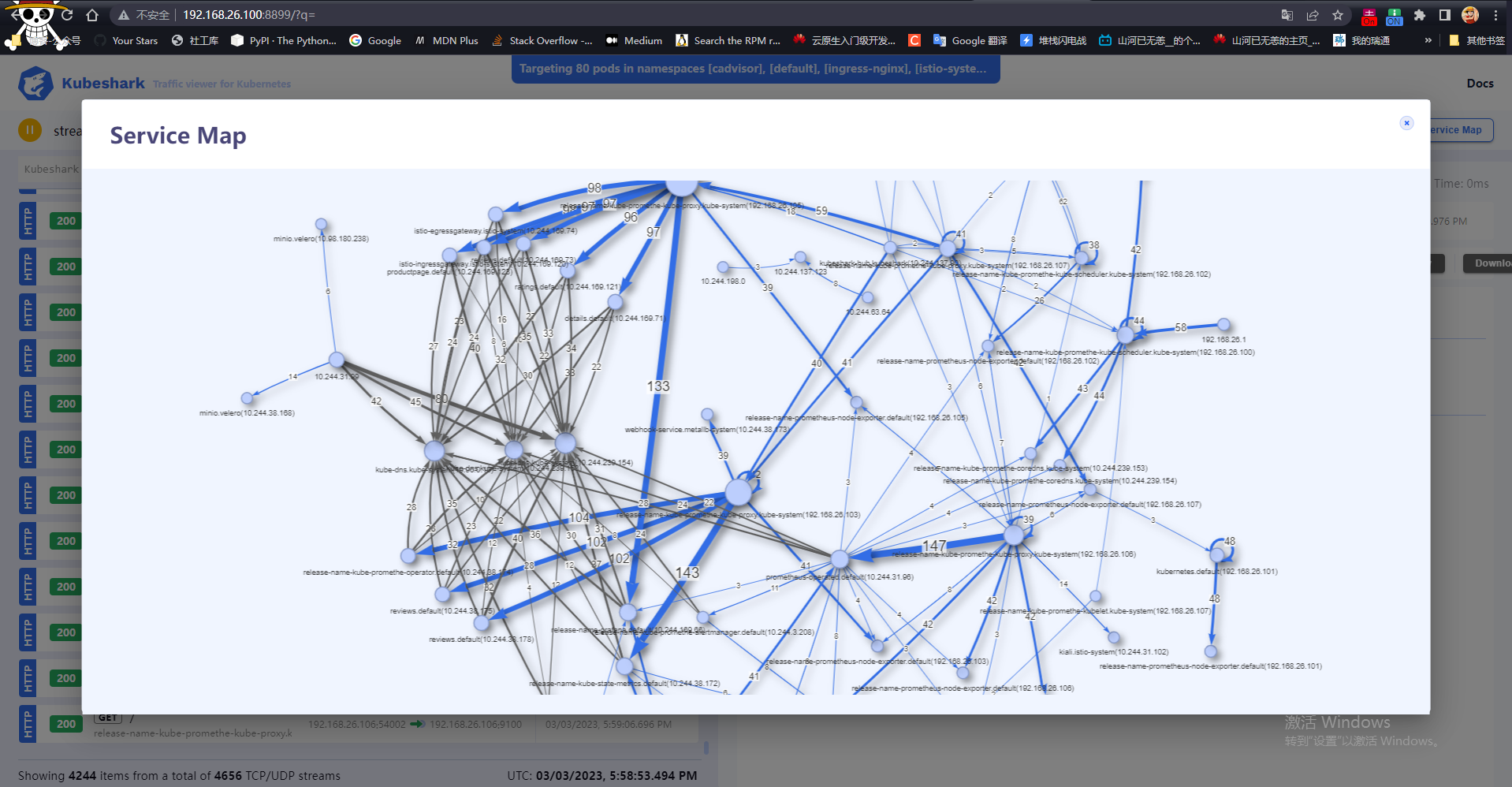

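With no filter applied, the topology view in the upper-right corner gives an overall picture of the traffic currently being monitored.

The thickness of each arrow represents the amount of traffic, and its color indicates the protocol.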
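Conceptually, the topology view is an aggregation: entries are grouped by (source, destination, protocol), and the size of each group drives the arrow thickness. The hypothetical sketch below shows that grouping with awk over an assumed tab-separated "source, destination, protocol" input; it is an illustration of the idea, not how Kubeshark computes its graph internally.

```bash
#!/usr/bin/env bash
# Hypothetical illustration of the topology aggregation.
# Input:  one captured entry per line, "source<TAB>destination<TAB>protocol".
# Output: "<count><TAB><source> -> <destination> (<protocol>)", busiest first.
# The count is what a graph view would map to arrow thickness, and the
# protocol to arrow color.
topology_edges() {
  awk -F'\t' '{ n[$1 " -> " $2 " (" $3 ")"]++ }
              END { for (e in n) print n[e] "\t" e }' |
    sort -t $'\t' -k1,1nr -k2,2
}
```

Sorting by count first puts the thickest arrows at the top; the secondary key only keeps the output order stable when counts are equal.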

Installing on Linux

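Kubeshark can also be installed on Linux. A few points to note:

- By default, kubeshark automatically creates port-forward proxies on the machine it runs on, so there is no need to change the hub Service it creates to NodePort or LoadBalancer.
- If the current environment has no desktop, add `--set headless=true` and `--proxy-host 0.0.0.0`: the first stops Kubeshark from trying to open a browser, and the second allows access from external IPs.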
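The two cases above can be folded into a small wrapper that builds the `kubeshark tap` arguments used in this post. This is a hypothetical sketch: it treats an empty `DISPLAY` and `WAYLAND_DISPLAY` as "no desktop", which is an assumption that suits typical SSH sessions and is not a rule Kubeshark itself applies. It only uses the flags shown in this post.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print the `kubeshark tap` arguments used in this post,
# one per line, adding the headless flags only when no graphical session
# (DISPLAY / WAYLAND_DISPLAY) is detected.
kubeshark_tap_args() {
  local limit="${1:-2000MB}"
  local -a args=(tap -A --storagelimit "$limit")
  if [[ -z "${DISPLAY:-}" && -z "${WAYLAND_DISPLAY:-}" ]]; then
    # No desktop: don't try to open a browser, and listen on all interfaces
    # so the UI can be reached from another machine.
    args+=(--proxy-host 0.0.0.0 --set headless=true)
  fi
  printf '%s\n' "${args[@]}"
}
```

Usage: `mapfile -t args < <(kubeshark_tap_args 2000MB) && kubeshark "${args[@]}"`. Printing one argument per line and reading them back with `mapfile` keeps each flag a separate word without relying on word splitting.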

Download the binary:

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$curl -s -Lo kubeshark_linux_amd64 https://github.com/kubeshark/kubeshark/releases/download/38.5/kubeshark_linux_amd64
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$ls
kubeshark_linux_amd64
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$
```

Move the file onto the PATH, renaming it so it can be run directly, and make it executable:

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$mv kubeshark_linux_amd64 /usr/local/bin/kubeshark
┌──[root@vms100.liruilongs.github.io]-[/usr/local/bin]
└─$chmod +x kubeshark
```
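The download, rename and chmod steps can be collected into one function. This is a sketch, not an official installer: it assumes release assets keep the `kubeshark_linux_<arch>` naming seen in the 38.5 URL above, and the arm64 mapping is an assumption to check against the release page for the version you install. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Map the machine architecture to the asset name and print the download URL.
# Assumption: assets are named kubeshark_linux_<arch>, as for 38.5 amd64.
kubeshark_asset_url() {
  local version="$1" machine="${2:-$(uname -m)}" arch
  case "$machine" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
  echo "https://github.com/kubeshark/kubeshark/releases/download/${version}/kubeshark_linux_${arch}"
}

# Download a release and install it as an executable (default: /usr/local/bin).
install_kubeshark() {
  local version="$1" dest="${2:-/usr/local/bin/kubeshark}" url tmp
  url="$(kubeshark_asset_url "$version")" || return 1
  tmp="$(mktemp)"
  # -f: fail on HTTP errors, -sS: quiet but still report errors,
  # -L: follow GitHub's redirect from the release URL to the actual file.
  if ! curl -fsSL -o "$tmp" "$url"; then
    rm -f "$tmp"
    return 1
  fi
  # Copy into place with mode 0755, i.e. the mv + chmod steps above.
  install -m 0755 "$tmp" "$dest"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage (as root): `install_kubeshark 38.5`. Using `-f` matters here: without it, a wrong URL would save GitHub's "Not Found" response as the binary instead of failing.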

`kubeshark clean` removes the resources of any existing Kubeshark deployment:

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$kubeshark clean
2023-03-03T09:44:32+08:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T09:44:32+08:00 INF common.go:69 > Using kubeconfig: path=/root/.kube/config
2023-03-03T09:44:32+08:00 WRN cleanResources.go:16 > Removing Kubeshark resources...
```

Run kubeshark to monitor all namespaces:

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$kubeshark tap -A --storagelimit 2000MB --proxy-host 0.0.0.0 --set headless=true
2023-03-04T01:53:47+08:00 INF tapRunner.go:45 > Using Docker: registry=docker.io/kubeshark/ tag=latest
2023-03-04T01:53:47+08:00 INF tapRunner.go:53 > Kubeshark will store the traffic up to a limit (per node). Oldest TCP streams will be removed once the limit is reached. limit=2000MB
2023-03-04T01:53:47+08:00 INF versionCheck.go:23 > Checking for a newer version...
...
2023-03-04T01:53:48+08:00 INF tapRunner.go:240 > Added: pod=kubeshark-front
2023-03-04T01:53:48+08:00 INF tapRunner.go:160 > Added: pod=kubeshark-hub
2023-03-04T01:54:25+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:54:25+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:54:25+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:54:30+08:00 INF tapRunner.go:240 > Added: pod=kubeshark-front
2023-03-04T01:54:30+08:00 INF tapRunner.go:160 > Added: pod=kubeshark-hub
2023-03-04T01:54:58+08:00 INF proxy.go:29 > Starting proxy... namespace=kubeshark service=kubeshark-hub src-port=8898
2023-03-04T01:54:58+08:00 INF workers.go:32 > Creating the worker DaemonSet...
2023-03-04T01:54:59+08:00 INF workers.go:51 > Successfully created the worker DaemonSet.
2023-03-04T01:55:00+08:00 INF tapRunner.go:402 > Hub is available at: url=http://localhost:8898
2023-03-04T01:55:00+08:00 INF proxy.go:29 > Starting proxy... namespace=kubeshark service=kubeshark-front src-port=8899
2023-03-04T01:55:00+08:00 INF tapRunner.go:418 > Kubeshark is available at: url=http://localhost:8899
2023-03-04T01:55:56+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:55:56+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:55:56+08:00 WRN watch.go:61 > K8s watch channel closed, restarting watcher...
2023-03-04T01:56:01+08:00 INF tapRunner.go:240 > Added: pod=kubeshark-front
2023-03-04T01:56:01+08:00 INF tapRunner.go:160 > Added: pod=kubeshark-hub
```

Accessing the UI from a browser

Viewing the topology

Problems encountered during deployment

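If startup fails, run the `kubeshark check` command. It checks Kubernetes API access and permissions, whether the Kubeshark pods are running, and whether the proxies were created successfully: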

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$kubeshark check
2023-03-03T22:33:22+08:00 INF checkRunner.go:21 > Checking the Kubeshark resources...
2023-03-03T22:33:22+08:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T22:33:22+08:00 INF kubernetesApi.go:11 > Checking: procedure=kubernetes-api
...
2023-03-03T22:33:22+08:00 INF kubernetesPermissions.go:89 > Can create services
2023-03-03T22:33:23+08:00 INF kubernetesPermissions.go:89 > Can create daemonsets in api group 'apps'
2023-03-03T22:33:23+08:00 INF kubernetesPermissions.go:89 > Can patch daemonsets in api group 'apps'
2023-03-03T22:33:23+08:00 INF kubernetesPermissions.go:89 > Can list namespaces
...
2023-03-03T22:33:23+08:00 INF kubernetesResources.go:116 > Resource exist. name=kubeshark-cluster-role-binding type="cluster role binding"
2023-03-03T22:33:23+08:00 INF kubernetesResources.go:116 > Resource exist. name=kubeshark-hub type=service
2023-03-03T22:33:23+08:00 INF kubernetesResources.go:64 > Pod is running. name=kubeshark-hub
2023-03-03T22:33:23+08:00 INF kubernetesResources.go:92 > All 8 pods are running. name=kubeshark-worker
2023-03-03T22:33:23+08:00 INF serverConnection.go:11 > Checking: procedure=server-connectivity
2023-03-03T22:33:23+08:00 INF serverConnection.go:33 > Connecting: url=http://localhost:8898
2023-03-03T22:33:26+08:00 ERR serverConnection.go:16 > Couldn't connect to Hub using proxy! error="Couldn't reach the URL: http://localhost:8898 after 3 retries!"
2023-03-03T22:33:26+08:00 INF serverConnection.go:33 > Connecting: url=http://localhost:8899
2023-03-03T22:33:29+08:00 ERR serverConnection.go:23 > Couldn't connect to Front using proxy! error="Couldn't reach the URL: http://localhost:8899 after 3 retries!"
2023-03-03T22:33:29+08:00 ERR checkRunner.go:50 > There are issues in your Kubeshark resources! Run these commands: command1="kubeshark clean" command2="kubeshark tap [POD REGEX]"
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$
```
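The connectivity step above tries each local URL a few times ("after 3 retries") before reporting failure. When scripting around Kubeshark, a similar wait loop is handy before opening the UI. The helper below is a hypothetical sketch using curl, not part of the Kubeshark CLI; it treats any HTTP response as success, because the goal is only to confirm that the port-forward answers.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of Kubeshark: poll a URL until it answers.
#   $1 = URL, $2 = attempts (default 3), $3 = seconds between attempts (default 1)
wait_for_url() {
  local url="$1" retries="${2:-3}" delay="${3:-1}" i
  for (( i = 1; i <= retries; i++ )); do
    # Without --fail, curl exits 0 for any HTTP response (even 404);
    # it only fails when the connection itself cannot be made.
    if curl -s -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    if (( i < retries )); then
      sleep "$delay"
    fi
  done
  echo "could not reach $url after $retries attempts" >&2
  return 1
}
```

Usage: `wait_for_url http://localhost:8898 && wait_for_url http://localhost:8899 && echo "Hub and Front are reachable"`.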

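If the proxies are not created successfully, you can try the approach below, which starts the port-forwards manually with `kubectl port-forward`.

https://github.com/kubeshark/kubeshark/wiki/CHANGELOG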

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$jobs
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$coproc kubectl port-forward -n kubeshark service/kubeshark-hub 8898:80;
[1] 125248
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$coproc kubectl port-forward -n kubeshark service/kubeshark-front 8899:80;
-bash: warning: execute_coproc: coproc [125248:COPROC] still exists
[2] 125784
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$jobs
[1]- Running coproc COPROC kubectl port-forward -n kubeshark service/kubeshark-hub 8898:80 &
[2]+ Running coproc COPROC kubectl port-forward -n kubeshark service/kubeshark-front 8899:80 &
```
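The warning appears because bash supports only one active coprocess at a time; the second port-forward still runs, as `jobs` shows. Ordinary background jobs do the same without the warning. A sketch using the namespace, services and ports from the commands above (the function and variable names are hypothetical):

```bash
#!/usr/bin/env bash
# Start the two port-forwards that `kubeshark tap` normally creates,
# as plain background jobs instead of coprocs.
start_kubeshark_port_forwards() {
  local ns="${1:-kubeshark}"
  kubectl port-forward -n "$ns" service/kubeshark-hub 8898:80 >/dev/null 2>&1 &
  HUB_PF_PID=$!
  kubectl port-forward -n "$ns" service/kubeshark-front 8899:80 >/dev/null 2>&1 &
  FRONT_PF_PID=$!
}

# Stop both port-forwards (safe to call if they have already exited).
stop_kubeshark_port_forwards() {
  local pid
  for pid in "${HUB_PF_PID:-}" "${FRONT_PF_PID:-}"; do
    [[ -n "$pid" ]] || continue
    kill "$pid" 2>/dev/null || true
    wait "$pid" 2>/dev/null || true
  done
  HUB_PF_PID= FRONT_PF_PID=
}
```

After `start_kubeshark_port_forwards`, run `kubeshark check` again; call `stop_kubeshark_port_forwards` (or register it with `trap stop_kubeshark_port_forwards EXIT` in a script) when you are done.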

Run the check again:

```
┌──[root@vms100.liruilongs.github.io]-[~/ansible/Kubeshark]
└─$kubeshark check
2023-03-03T23:35:50+08:00 INF checkRunner.go:21 > Checking the Kubeshark resources...
2023-03-03T23:35:50+08:00 INF kubernetesApi.go:11 > Checking: procedure=kubernetes-api
2023-03-03T23:35:50+08:00 INF versionCheck.go:23 > Checking for a newer version...
2023-03-03T23:35:50+08:00 INF kubernetesApi.go:18 > Initialization of the client is passed.
2023-03-03T23:35:50+08:00 INF kubernetesApi.go:25 > Querying the Kubernetes API is passed.
2023-03-03T23:35:50+08:00 INF kubernetesVersion.go:13 > Checking: procedure=kubernetes-version
2023-03-03T23:35:50+08:00 INF kubernetesVersion.go:20 > Minimum required Kubernetes API version is passed. k8s-version=v1.25.1
2023-03-03T23:35:50+08:00 INF kubernetesPermissions.go:16 > Checking: procedure=kubernetes-permissions
2023-03-03T23:35:50+08:00 INF kubernetesPermissions.go:89 > Can list pods
...
2023-03-03T23:35:51+08:00 INF kubernetesResources.go:64 > Pod is running. name=kubeshark-hub
2023-03-03T23:35:51+08:00 INF kubernetesResources.go:92 > All 8 pods are running. name=kubeshark-worker
2023-03-03T23:35:51+08:00 INF serverConnection.go:11 > Checking: procedure=server-connectivity
2023-03-03T23:35:51+08:00 INF serverConnection.go:33 > Connecting: url=http://localhost:8898
2023-03-03T23:35:52+08:00 INF serverConnection.go:19 > Connected successfully to Hub using proxy.
2023-03-03T23:35:52+08:00 INF serverConnection.go:33 > Connecting: url=http://localhost:8899
2023-03-03T23:35:52+08:00 INF serverConnection.go:26 > Connected successfully to Front using proxy.
2023-03-03T23:35:52+08:00 INF checkRunner.go:45 > All checks are passed.
```

References

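© Copyright in the referenced content belongs to the original authors; please let me know if anything infringes. Kubeshark is an open-source project, so if you find it useful, don't be stingy with your stars 😃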

https://github.com/kubeshark/kubeshark

https://medium.com/kernel-space/kubeshark-wireshark-for-kubernetes-4069a5f5aa3d

https://docs.kubeshark.co/en/config

https://github.com/kubeshark/kubeshark/wiki/CHANGELOG

© 2018-2023 liruilonger@gmail.com, All rights reserved. Licensed under Attribution-NonCommercial-ShareAlike (CC BY-NC-SA 4.0)