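Chapter 5. Techniques Related to Learning

5.4 Regularization & Hyperparameters

Overfitting is a very common problem in machine learning. A model that overfits fits the training data well but fails to fit other data that was not included in the training data.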

1. Causes of overfitting

- The model has a large number of parameters and is highly expressive.

- The training data is scarce.
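Both causes can be seen together in a small curve-fitting experiment. The sketch below is only an illustration under stated assumptions: the data (10 noisy samples of a sine curve) and the helper `mse` are hypothetical and not part of the original example. A degree-9 polynomial has as many coefficients as there are training points, so it can reproduce the training data almost exactly, while its error on unseen points is typically much larger than that of a lower-degree fit.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: only 10 noisy training samples of a sine curve.
x_train = np.linspace(0.0, 1.0, 10)
t_train = np.sin(2 * np.pi * x_train) + 0.2 * rng.standard_normal(10)

# Unseen points from the same underlying curve (noise-free, for evaluation).
x_test = np.linspace(0.0, 1.0, 200)
t_test = np.sin(2 * np.pi * x_test)


def mse(degree, x_eval, t_eval):
    """Fit a polynomial of the given degree on the training data
    and return its mean squared error on (x_eval, t_eval)."""
    coeffs = np.polyfit(x_train, t_train, degree)
    pred = np.polyval(coeffs, x_eval)
    return float(np.mean((pred - t_eval) ** 2))


train_mse_low = mse(3, x_train, t_train)    # few parameters
train_mse_high = mse(9, x_train, t_train)   # as many parameters as data points

print("train MSE (degree 3):", train_mse_low)
print("train MSE (degree 9):", train_mse_high)
print("test MSE  (degree 3):", mse(3, x_test, t_test))
print("test MSE  (degree 9):", mse(9, x_test, t_test))
```

A near-zero training error combined with a larger test error is the signature of overfitting described above.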

2. Methods for suppressing overfitting

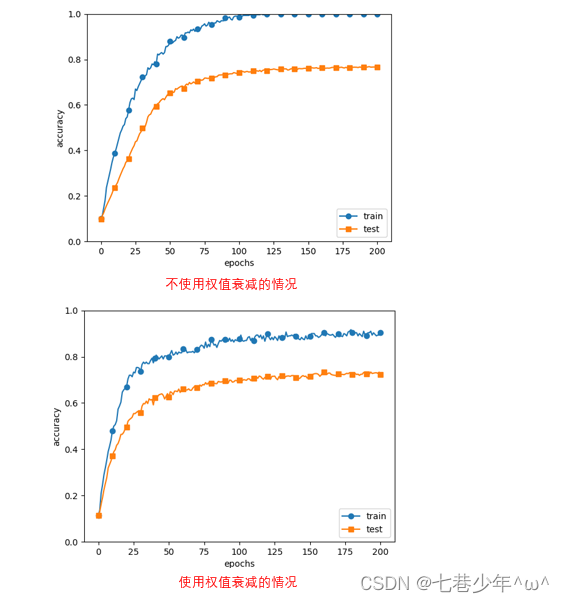

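1). Weight decay

- Weight decay suppresses overfitting by penalizing large weights during learning. [Overfitting often occurs because the weight parameters take large values.]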

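- Principle:

Add the squared L2 norm of the weights to the loss function. In symbols:

①. If the weights are denoted $W$, the L2 weight decay term is $\frac{1}{2}\lambda W^2$, and this term is added to the loss function. [$\lambda$: a hyperparameter that controls the strength of regularization. The factor $\frac{1}{2}$ is there so that the derivative is simply $\lambda W$.]

②. L2 norm: the square root of the sum of the squares of the elements (weight decay uses its square, i.e. the sum of squares); L1 norm: the sum of the absolute values of the elements; L∞ norm: the largest absolute value among the elements.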
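As a quick numeric check of the three norms, here is a minimal sketch using NumPy. The matrix `W` is an arbitrary example chosen so the results are easy to verify by hand; it is not taken from the original text.

```python
import numpy as np

# Arbitrary example weights (hypothetical values).
W = np.array([[3.0, -4.0],
              [0.0, 1.0]])

l2_squared = np.sum(W ** 2)      # sum of squares: 9 + 16 + 0 + 1 = 26
l2 = np.sqrt(l2_squared)         # L2 norm: sqrt(26)
l1 = np.sum(np.abs(W))           # L1 norm: 3 + 4 + 0 + 1 = 8
l_inf = np.max(np.abs(W))        # L-infinity norm: largest absolute value = 4

print(l2_squared, l2, l1, l_inf)
```

Weight decay uses `l2_squared`, the sum of squares, rather than the square-rooted norm.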

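- Weight decay adds $\frac{1}{2}\lambda W^2$ to the loss function for every weight. Therefore, when computing the weight gradients, the derivative of the regularization term, $\lambda W$, must be added to the result of backpropagation.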
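The claim that the derivative of the penalty is λW can be checked numerically. The following is a minimal sketch, assuming a small random weight matrix and λ = 0.1 (both hypothetical): it compares the analytic gradient λW with a central-difference estimate of the gradient of ½λ·ΣW².

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 2))   # hypothetical weights
lam = 0.1                         # regularization strength (lambda)


def penalty(W):
    """L2 weight decay term: 0.5 * lambda * sum of squared weights."""
    return 0.5 * lam * np.sum(W ** 2)


# Analytic derivative of the penalty with respect to W.
analytic = lam * W

# Central-difference estimate, one element at a time.
h = 1e-4
numeric = np.zeros_like(W)
for idx in np.ndindex(W.shape):
    orig = W[idx]
    W[idx] = orig + h
    plus = penalty(W)
    W[idx] = orig - h
    minus = penalty(W)
    W[idx] = orig                 # restore the original value
    numeric[idx] = (plus - minus) / (2 * h)

print(np.max(np.abs(analytic - numeric)))
```

Because the penalty is quadratic, the central difference agrees with λW up to floating-point rounding.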

- Example:

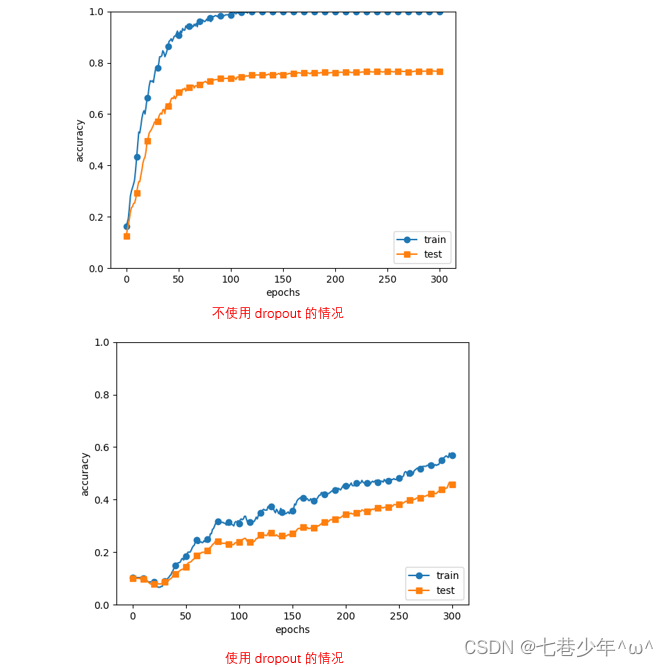

Illustration of the overfitting case after applying weight decay:

- Code implementation:

import sys, os

sys.path.append(os.pardir)  # allow imports from the parent directory
import numpy as np
import matplotlib.pyplot as plt
from dataset.mnist import load_mnist
from collections import OrderedDict


# Load the data
def get_data():
    (x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)
    return (x_train, t_train), (x_test, t_test)

class Sigmoid:
    def __init__(self):
        self.out = None

    # Forward pass
    def forward(self, x):
        out = 1 / (1 + np.exp(-x))
        self.out = out
        return out

    # Backward pass
    def backward(self, dout):
        dx = dout * (1.0 - self.out) * self.out
        return dx


class Relu:
    def __init__(self):
        self.mask = None

    # Forward pass
    def forward(self, x):
        self.mask = (x <= 0)  # True where the input is non-positive
        out = x.copy()
        out[self.mask] = 0
        return out

    # Backward pass
    def backward(self, dout):
        dout[self.mask] = 0  # no gradient flows where the input was non-positive
        dx = dout
        return dx

def numerical_gradient(f, x):
    h = 1e-4  # 0.0001
    grad = np.zeros_like(x)

    it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])
    while not it.finished:
        idx = it.multi_index
        tmp_val = x[idx]
        x[idx] = float(tmp_val) + h
        fxh1 = f(x)  # f(x+h)

        x[idx] = tmp_val - h
        fxh2 = f(x)  # f(x-h)
        grad[idx] = (fxh1 - fxh2) / (2 * h)

        x[idx] = tmp_val  # restore the original value
        it.iternext()

    return grad

class SoftmaxWithLoss:
    def __init__(self):
        self.loss = None  # loss
        self.y = None  # output of softmax
        self.t = None  # supervised labels (one-hot vector)

    # Output-layer function: softmax
    def softmax(self, x):
        if x.ndim == 2:
            x = x.T
            x = x - np.max(x, axis=0)
            y = np.exp(x) / np.sum(np.exp(x), axis=0)
            return y.T

        x = x - np.max(x)  # guard against overflow
        return np.exp(x) / np.sum(np.exp(x))

    # Cross-entropy error
    def cross_entropy_error(self, y, t):
        if y.ndim == 1:
            t = t.reshape(1, t.size)
            y = y.reshape(1, y.size)

        # If the labels are one-hot vectors, convert them to class indices
        if t.size == y.size:
            t = t.argmax(axis=1)

        batch_size = y.shape[0]
        return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

    # Forward pass
    def forward(self, x, t):
        self.t = t
        self.y = self.softmax(x)
        self.loss = self.cross_entropy_error(self.y, self.t)
        return self.loss

    # Backward pass
    def backward(self, dout=1):
        batch_size = self.t.shape[0]
        if self.t.size == self.y.size:  # labels are one-hot vectors
            dx = (self.y - self.t) / batch_size
        else:
            dx = self.y.copy()
            dx[np.arange(batch_size), self.t] -= 1
            dx = dx / batch_size
        return dx

class Affine:
    def __init__(self, W, b):
        self.W = W
        self.b = b

        self.x = None
        self.original_x_shape = None
        # Derivatives of the weight and bias parameters
        self.dW = None
        self.db = None

    def forward(self, x):
        # Support tensor inputs by flattening to 2-D
        self.original_x_shape = x.shape
        x = x.reshape(x.shape[0], -1)
        self.x = x

        out = np.dot(self.x, self.W) + self.b
        return out

    def backward(self, dout):
        dx = np.dot(dout, self.W.T)
        self.dW = np.dot(self.x.T, dout)
        self.db = np.sum(dout, axis=0)

        dx = dx.reshape(*self.original_x_shape)  # restore the input shape (tensor support)
        return dx


class SGD:
    def __init__(self, lr):
        self.lr = lr

    def update(self, params, grads):
        for key in params.keys():
            params[key] -= self.lr * grads[key]

class MultiLayerNet:
    # Fully connected multi-layer neural network
    def __init__(self, input_size, hidden_size_list, output_size,
                 activation='relu', weight_init_std='relu', weight_decay_lambda=0):
        self.input_size = input_size
        self.output_size = output_size
        self.hidden_size_list = hidden_size_list
        self.hidden_layer_num = len(hidden_size_list)
        self.weight_decay_lambda = weight_decay_lambda
        self.params = {}

        # Initialize the weights
        self.__init_weight(weight_init_std)

        # Build the layers
        activation_layer = {'sigmoid': Sigmoid, 'relu': Relu}
        self.layers = OrderedDict()
        for idx in range(1, self.hidden_layer_num + 1):
            self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],
                                                      self.params['b' + str(idx)])
            self.layers['Activation_function' + str(idx)] = activation_layer[activation]()

        idx = self.hidden_layer_num + 1
        self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],
                                                  self.params['b' + str(idx)])

        self.last_layer = SoftmaxWithLoss()

    def __init_weight(self, weight_init_std):
        # Set the initial weight values
        all_size_list = [self.input_size] + self.hidden_size_list + [self.output_size]
        for idx in range(1, len(all_size_list)):
            scale = weight_init_std
            if str(weight_init_std).lower() in ('relu', 'he'):
                scale = np.sqrt(2.0 / all_size_list[idx - 1])  # recommended initial value for ReLU
            elif str(weight_init_std).lower() in ('sigmoid', 'xavier'):
                scale = np.sqrt(1.0 / all_size_list[idx - 1])  # recommended initial value for sigmoid

            self.params['W' + str(idx)] = scale * np.random.randn(all_size_list[idx - 1], all_size_list[idx])
            self.params['b' + str(idx)] = np.zeros(all_size_list[idx])

    def predict(self, x):
        for layer in self.layers.values():
            x = layer.forward(x)
        return x

    def loss(self, x, t):
        # Compute the loss: cross-entropy plus the L2 weight decay term
        y = self.predict(x)

        weight_decay = 0
        for idx in range(1, self.hidden_layer_num + 2):
            W = self.params['W' + str(idx)]
            weight_decay += 0.5 * self.weight_decay_lambda * np.sum(W ** 2)

        return self.last_layer.forward(y, t) + weight_decay

    def accuracy(self, x, t):
        y = self.predict(x)
        y = np.argmax(y, axis=1)
        if t.ndim != 1: t = np.argmax(t, axis=1)

        accuracy = np.sum(y == t) / float(x.shape[0])
        return accuracy

    def numerical_gradient(self, x, t):
        # Compute gradients by numerical differentiation
        loss_W = lambda W: self.loss(x, t)

        grads = {}
        for idx in range(1, self.hidden_layer_num + 2):
            grads['W' + str(idx)] = numerical_gradient(loss_W, self.params['W' + str(idx)])
            grads['b' + str(idx)] = numerical_gradient(loss_W, self.params['b' + str(idx)])

        return grads

    def gradient(self, x, t):
        # Compute gradients by backpropagation
        # forward
        self.loss(x, t)

        # backward
        dout = 1
        dout = self.last_layer.backward(dout)

        layers = list(self.layers.values())
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Collect the gradients; add lambda * W, the derivative of the weight decay term
        grads = {}
        for idx in range(1, self.hidden_layer_num + 2):
            grads['W' + str(idx)] = (self.layers['Affine' + str(idx)].dW
                                     + self.weight_decay_lambda * self.layers['Affine' + str(idx)].W)
            grads['b' + str(idx)] = self.layers['Affine' + str(idx)].db

        return grads

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

# Reduce the training data to reproduce overfitting
x_train = x_train[:300]
t_train = t_train[:300]

# Weight decay setting
# weight_decay_lambda = 0  # without weight decay
weight_decay_lambda = 0.1
# ====================================================

network = MultiLayerNet(input_size=784, hidden_size_list=[100, 100, 100, 100, 100, 100], output_size=10,
                        weight_decay_lambda=weight_decay_lambda)
optimizer = SGD(lr=0.01)

iter_num = 100000  # upper bound; the loop exits once max_epochs is reached
max_epochs = 201
train_size = x_train.shape[0]
batch_size = 100

train_loss_list = []
train_acc_list = []
test_acc_list = []

iter_per_epoch = max(train_size / batch_size, 1)
epoch_cnt = 0

for i in range(iter_num):
    batch_mask = np.random.choice(train_size, batch_size)
    x_batch = x_train[batch_mask]
    t_batch = t_train[batch_mask]

    grads = network.gradient(x_batch, t_batch)
    optimizer.update(network.params, grads)

    # Record accuracy once per epoch
    if i % iter_per_epoch == 0:
        train_acc = network.accuracy(x_train, t_train)
        test_acc = network.accuracy(x_test, t_test)
        train_acc_list.append(train_acc)
        test_acc_list.append(test_acc)

        print("epoch:" + str(epoch_cnt) + ", train acc:" + str(train_acc) + ", test acc:" + str(test_acc))

        epoch_cnt += 1
        if epoch_cnt >= max_epochs:
            break

# Plot the results
markers = {'train': 'o', 'test': 's'}
x = np.arange(max_epochs)
plt.plot(x, train_acc_list, marker='o', label='train', markevery=10)
plt.plot(x, test_acc_list, marker='s', label='test', markevery=10)
plt.xlabel("epochs")
plt.ylabel("accuracy")
plt.ylim(0, 1.0)
plt.legend(loc='lower right')
plt.show()

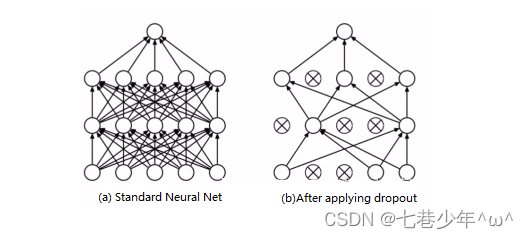

2). Dropout

- When the network model becomes complex, weight decay alone is no longer enough. In such cases, the Dropout method is often used.

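- Principle:

- Dropout is a method that randomly deletes neurons during learning.

- During training, neurons in the hidden layers are selected at random and deleted; the deleted neurons no longer pass signals on.

- At test time, the signals of all neurons are passed on, but each neuron's output is multiplied by the fraction of neurons that was kept during training (1 minus the dropout ratio) before it is output.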
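The reason for the test-time scaling can be illustrated numerically. The sketch below is a minimal illustration, assuming an all-ones input and a dropout ratio of 0.5 (both hypothetical): it masks activations the same way a `np.random.rand(...) > dropout_ratio` mask would, and compares the average training-time output with the scaled test-time output.

```python
import numpy as np

rng = np.random.default_rng(0)
dropout_ratio = 0.5

# Hypothetical activations: all ones, so the means are easy to interpret.
x = np.ones((10000, 4))

# Training: each neuron is kept with probability (1 - dropout_ratio).
mask = rng.random(x.shape) > dropout_ratio
train_out = x * mask

# Test: every neuron passes its signal, scaled by the kept fraction.
test_out = x * (1.0 - dropout_ratio)

train_mean = train_out.mean()
test_mean = test_out.mean()
print(train_mean, test_mean)
```

The two means agree up to sampling noise, which is why scaling by the kept fraction keeps the expected signal level the same between training and testing.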

- Example:

Illustration of the overfitting case after applying Dropout:

- Code implementation:

import sys, os

sys.path.append(os.pardir)

import numpy as np

import matplotlib.pyplot as plt

from dataset.mnist import load_mnist

from collections import OrderedDict

# 加载数据

def get_data():

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

return (x_train, t_train), (x_test, t_test)

class Sigmoid:

def __init__(self):

self.out = None

# 正向传播

def forward(self, x):

out = 1 / (1 + np.exp(-x))

self.out = out

return out

# 反向传播

def backward(self, dout):

dx = dout * (1.0 - self.out) * self.out

return dx

class Relu:

def __init__(self):

self.mask = None

# 正向传播

def forward(self, x):

self.mask = (x <= 0)

out = x.copy()

out[self.mask] = 0

return out

# 反向传播

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

def numerical_gradient(f, x):

h = 1e-4 # 0.0001

grad = np.zeros_like(x)

it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])

while not it.finished:

idx = it.multi_index

tmp_val = x[idx]

x[idx] = float(tmp_val) + h

fxh1 = f(x) # f(x+h)

x[idx] = tmp_val - h

fxh2 = f(x) # f(x-h)

grad[idx] = (fxh1 - fxh2) / (2 * h)

x[idx] = tmp_val # 还原值

it.iternext()

return grad

class SoftmaxWithLoss:

def __init__(self):

self.loss = None # 损失

self.y = None # softmax的输出

self.t = None # 监督数据(one_hot vector)

# 输出层函数:softmax

def softmax(self, x):

if x.ndim == 2:

x = x.T

x = x - np.max(x, axis=0)

y = np.exp(x) / np.sum(np.exp(x), axis=0)

return y.T

x = x - np.max(x) # 溢出对策

return np.exp(x) / np.sum(np.exp(x))

# 交叉熵误差

def cross_entropy_error(self, y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

# 监督数据是one-hot-vector的情况下,转换为正确解标签的索引

if t.size == y.size:

t = t.argmax(axis=1)

batch_size = y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

# 正向传播

def forward(self, x, t):

self.t = t

self.y = self.softmax(x)

self.loss = self.cross_entropy_error(self.y, self.t)

return self.loss

# 反向传播

def backward(self, dout=1):

batch_size = self.t.shape[0]

if self.t.size == self.y.size: # 监督数据是one-hot-vector的情况

dx = (self.y - self.t) / batch_size

else:

dx = self.y.copy()

dx[np.arange(batch_size), self.t] -= 1

dx = dx / batch_size

return dx

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.original_x_shape = None

# 权重和偏置参数的导数

self.dW = None

self.db = None

def forward(self, x):

# 对应张量

self.original_x_shape = x.shape

x = x.reshape(x.shape[0], -1)

self.x = x

out = np.dot(self.x, self.W) + self.b

return out

def backward(self, dout):

dx = np.dot(dout, self.W.T)

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = dx.reshape(*self.original_x_shape) # 还原输入数据的形状(对应张量)

return dx

class SGD:

def __init__(self, lr):

self.lr = lr

def update(self, params, grads):

for key in params.keys():

params[key] -= self.lr * grads[key]

class Momentum:

def __init__(self, lr=0.01, momentum=0.9):

self.lr = lr

self.momentum = momentum

self.v = None

def update(self, params, grads):

if self.v is None:

self.v = {}

for key, val in params.items():

self.v[key] = np.zeros_like(val)

for key in params.keys():

self.v[key] = self.momentum * self.v[key] - self.lr * grads[key]

params[key] += self.v[key]

class Nesterov:

def __init__(self, lr=0.01, momentum=0.9):

self.lr = lr

self.momentum = momentum

self.v = None

def update(self, params, grads):

if self.v is None:

self.v = {}

for key, val in params.items():

self.v[key] = np.zeros_like(val)

for key in params.keys():

self.v[key] *= self.momentum

self.v[key] -= self.lr * grads[key]

params[key] += self.momentum * self.momentum * self.v[key]

params[key] -= (1 + self.momentum) * self.lr * grads[key]

class AdaGrad:

def __init__(self, lr=0.01):

self.lr = lr

self.h = None

def update(self, params, grads):

if self.h is None:

self.h = {}

for key, val in params.items():

self.h[key] = np.zeros_like(val)

for key in params.keys():

self.h[key] += grads[key] * grads[key]

params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)

class RMSprop:

def __init__(self, lr=0.01, decay_rate=0.99):

self.lr = lr

self.decay_rate = decay_rate

self.h = None

def update(self, params, grads):

if self.h is None:

self.h = {}

for key, val in params.items():

self.h[key] = np.zeros_like(val)

for key in params.keys():

self.h[key] *= self.decay_rate

self.h[key] += (1 - self.decay_rate) * grads[key] * grads[key]

params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)

class Adam:

def __init__(self, lr=0.001, beta1=0.9, beta2=0.999):

self.lr = lr

self.beta1 = beta1

self.beta2 = beta2

self.iter = 0

self.m = None

self.v = None

def update(self, params, grads):

if self.m is None:

self.m, self.v = {}, {}

for key, val in params.items():

self.m[key] = np.zeros_like(val)

self.v[key] = np.zeros_like(val)

self.iter += 1

lr_t = self.lr * np.sqrt(1.0 - self.beta2 ** self.iter) / (1.0 - self.beta1 ** self.iter)

for key in params.keys():

# self.m[key] = self.beta1*self.m[key] + (1-self.beta1)*grads[key]

# self.v[key] = self.beta2*self.v[key] + (1-self.beta2)*(grads[key]**2)

self.m[key] += (1 - self.beta1) * (grads[key] - self.m[key])

self.v[key] += (1 - self.beta2) * (grads[key] ** 2 - self.v[key])

params[key] -= lr_t * self.m[key] / (np.sqrt(self.v[key]) + 1e-7)

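class Dropout:
    def __init__(self, dropout_ratio=0.5):
        self.dropout_ratio = dropout_ratio
        self.mask = None

    def forward(self, x, train_flg=True):
        if train_flg:
            # Keep a neuron only where the random value exceeds dropout_ratio
            self.mask = np.random.rand(*x.shape) > self.dropout_ratio
            return x * self.mask
        else:
            # At test time, scale the outputs by the fraction kept during training
            return x * (1.0 - self.dropout_ratio)

    def backward(self, dout):
        # Gradients flow only through the neurons that were kept
        return dout * self.mask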

class BatchNormalization:

def __init__(self, gamma, beta, momentum=0.9, running_mean=None, running_var=None):

self.gamma = gamma

self.beta = beta

self.momentum = momentum

self.input_shape = None # Conv层的情况下为4维,全连接层的情况下为2维

# 测试时使用的平均值和方差

self.running_mean = running_mean

self.running_var = running_var

# backward时使用的中间数据

self.batch_size = None

self.xc = None

self.std = None

self.dgamma = None

self.dbeta = None

def forward(self, x, train_flg=True):

self.input_shape = x.shape

if x.ndim != 2:

N, C, H, W = x.shape

x = x.reshape(N, -1)

out = self.__forward(x, train_flg)

return out.reshape(*self.input_shape)

def __forward(self, x, train_flg):

if self.running_mean is None:

N, D = x.shape

self.running_mean = np.zeros(D)

self.running_var = np.zeros(D)

if train_flg:

mu = x.mean(axis=0)

xc = x - mu

var = np.mean(xc ** 2, axis=0)

std = np.sqrt(var + 10e-7)

xn = xc / std

self.batch_size = x.shape[0]

self.xc = xc

self.xn = xn

self.std = std

self.running_mean = self.momentum * self.running_mean + (1 - self.momentum) * mu

self.running_var = self.momentum * self.running_var + (1 - self.momentum) * var

else:

xc = x - self.running_mean

xn = xc / ((np.sqrt(self.running_var + 10e-7)))

out = self.gamma * xn + self.beta

return out

def backward(self, dout):

if dout.ndim != 2:

N, C, H, W = dout.shape

dout = dout.reshape(N, -1)

dx = self.__backward(dout)

dx = dx.reshape(*self.input_shape)

return dx

def __backward(self, dout):

dbeta = dout.sum(axis=0)

dgamma = np.sum(self.xn * dout, axis=0)

dxn = self.gamma * dout

dxc = dxn / self.std

dstd = -np.sum((dxn * self.xc) / (self.std * self.std), axis=0)

dvar = 0.5 * dstd / self.std

dxc += (2.0 / self.batch_size) * self.xc * dvar

dmu = np.sum(dxc, axis=0)

dx = dxc - dmu / self.batch_size

self.dgamma = dgamma

self.dbeta = dbeta

return dx

class MultiLayerNetExtend:
    # Extended fully connected multi-layer neural network
    # (supports weight decay, Dropout, and Batch Normalization)
    def __init__(self, input_size, hidden_size_list, output_size,
                 activation='relu', weight_init_std='relu', weight_decay_lambda=0,
                 use_dropout=False, dropout_ration=0.5, use_batchnorm=False):
        self.input_size = input_size
        self.output_size = output_size
        self.hidden_size_list = hidden_size_list
        self.hidden_layer_num = len(hidden_size_list)
        self.use_dropout = use_dropout
        self.weight_decay_lambda = weight_decay_lambda
        self.use_batchnorm = use_batchnorm
        self.params = {}

        # Initialize the weights
        self.__init_weight(weight_init_std)

        # Build the layers
        activation_layer = {'sigmoid': Sigmoid, 'relu': Relu}
        self.layers = OrderedDict()
        for idx in range(1, self.hidden_layer_num + 1):
            self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],
                                                      self.params['b' + str(idx)])
            if self.use_batchnorm:
                self.params['gamma' + str(idx)] = np.ones(hidden_size_list[idx - 1])
                self.params['beta' + str(idx)] = np.zeros(hidden_size_list[idx - 1])
                self.layers['BatchNorm' + str(idx)] = BatchNormalization(self.params['gamma' + str(idx)],
                                                                         self.params['beta' + str(idx)])

            self.layers['Activation_function' + str(idx)] = activation_layer[activation]()

            if self.use_dropout:
                self.layers['Dropout' + str(idx)] = Dropout(dropout_ration)

        idx = self.hidden_layer_num + 1
        self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)], self.params['b' + str(idx)])

        self.last_layer = SoftmaxWithLoss()

    def __init_weight(self, weight_init_std):
        # Set the initial weight values
        all_size_list = [self.input_size] + self.hidden_size_list + [self.output_size]
        for idx in range(1, len(all_size_list)):
            scale = weight_init_std
            if str(weight_init_std).lower() in ('relu', 'he'):
                scale = np.sqrt(2.0 / all_size_list[idx - 1])  # recommended initial value for ReLU
            elif str(weight_init_std).lower() in ('sigmoid', 'xavier'):
                scale = np.sqrt(1.0 / all_size_list[idx - 1])  # recommended initial value for sigmoid
            self.params['W' + str(idx)] = scale * np.random.randn(all_size_list[idx - 1], all_size_list[idx])
            self.params['b' + str(idx)] = np.zeros(all_size_list[idx])

    def predict(self, x, train_flg=False):
        for key, layer in self.layers.items():
            # Dropout and BatchNorm behave differently in training and testing
            if "Dropout" in key or "BatchNorm" in key:
                x = layer.forward(x, train_flg)
            else:
                x = layer.forward(x)

        return x

    def loss(self, x, t, train_flg=False):
        # Compute the loss: cross-entropy plus the L2 weight decay term
        y = self.predict(x, train_flg)

        weight_decay = 0
        for idx in range(1, self.hidden_layer_num + 2):
            W = self.params['W' + str(idx)]
            weight_decay += 0.5 * self.weight_decay_lambda * np.sum(W ** 2)

        return self.last_layer.forward(y, t) + weight_decay

    def accuracy(self, X, T):
        Y = self.predict(X, train_flg=False)
        Y = np.argmax(Y, axis=1)
        if T.ndim != 1: T = np.argmax(T, axis=1)

        accuracy = np.sum(Y == T) / float(X.shape[0])
        return accuracy

    def numerical_gradient(self, X, T):
        # Compute gradients by numerical differentiation
        loss_W = lambda W: self.loss(X, T, train_flg=True)

        grads = {}
        for idx in range(1, self.hidden_layer_num + 2):
            grads['W' + str(idx)] = numerical_gradient(loss_W, self.params['W' + str(idx)])
            grads['b' + str(idx)] = numerical_gradient(loss_W, self.params['b' + str(idx)])

            if self.use_batchnorm and idx != self.hidden_layer_num + 1:
                grads['gamma' + str(idx)] = numerical_gradient(loss_W, self.params['gamma' + str(idx)])
                grads['beta' + str(idx)] = numerical_gradient(loss_W, self.params['beta' + str(idx)])

        return grads

    def gradient(self, x, t):
        # forward
        self.loss(x, t, train_flg=True)

        # backward
        dout = 1
        dout = self.last_layer.backward(dout)

        layers = list(self.layers.values())
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Collect the gradients; add lambda * W, the derivative of the weight decay term
        grads = {}
        for idx in range(1, self.hidden_layer_num + 2):
            grads['W' + str(idx)] = (self.layers['Affine' + str(idx)].dW
                                     + self.weight_decay_lambda * self.params['W' + str(idx)])
            grads['b' + str(idx)] = self.layers['Affine' + str(idx)].db

            if self.use_batchnorm and idx != self.hidden_layer_num + 1:
                grads['gamma' + str(idx)] = self.layers['BatchNorm' + str(idx)].dgamma
                grads['beta' + str(idx)] = self.layers['BatchNorm' + str(idx)].dbeta

        return grads

class Trainer:
    """Class that trains a neural network."""

    def __init__(self, network, x_train, t_train, x_test, t_test,
                 epochs=20, mini_batch_size=100,
                 optimizer='SGD', optimizer_param={'lr': 0.01},
                 evaluate_sample_num_per_epoch=None, verbose=True):
        self.network = network
        self.verbose = verbose
        self.x_train = x_train
        self.t_train = t_train
        self.x_test = x_test
        self.t_test = t_test
        self.epochs = epochs
        self.batch_size = mini_batch_size
        self.evaluate_sample_num_per_epoch = evaluate_sample_num_per_epoch

        # optimizer (the key 'rmsprop' was misspelled as 'rmsprpo')
        optimizer_class_dict = {'sgd': SGD, 'momentum': Momentum, 'nesterov': Nesterov,
                                'adagrad': AdaGrad, 'rmsprop': RMSprop, 'adam': Adam}
        self.optimizer = optimizer_class_dict[optimizer.lower()](**optimizer_param)

        self.train_size = x_train.shape[0]
        self.iter_per_epoch = max(self.train_size / mini_batch_size, 1)
        self.max_iter = int(epochs * self.iter_per_epoch)
        self.current_iter = 0
        self.current_epoch = 0

        self.train_loss_list = []
        self.train_acc_list = []
        self.test_acc_list = []

    def train_step(self):
        batch_mask = np.random.choice(self.train_size, self.batch_size)
        x_batch = self.x_train[batch_mask]
        t_batch = self.t_train[batch_mask]

        grads = self.network.gradient(x_batch, t_batch)
        self.optimizer.update(self.network.params, grads)

        loss = self.network.loss(x_batch, t_batch)
        self.train_loss_list.append(loss)
        if self.verbose: print("train loss:" + str(loss))

        # Evaluate accuracy once per epoch
        if self.current_iter % self.iter_per_epoch == 0:
            self.current_epoch += 1

            x_train_sample, t_train_sample = self.x_train, self.t_train
            x_test_sample, t_test_sample = self.x_test, self.t_test
            if self.evaluate_sample_num_per_epoch is not None:
                t = self.evaluate_sample_num_per_epoch
                x_train_sample, t_train_sample = self.x_train[:t], self.t_train[:t]
                x_test_sample, t_test_sample = self.x_test[:t], self.t_test[:t]

            train_acc = self.network.accuracy(x_train_sample, t_train_sample)
            test_acc = self.network.accuracy(x_test_sample, t_test_sample)
            self.train_acc_list.append(train_acc)
            self.test_acc_list.append(test_acc)

            if self.verbose: print(
                "=== epoch:" + str(self.current_epoch) + ", train acc:" + str(train_acc) + ", test acc:" + str(
                    test_acc) + " ===")
        self.current_iter += 1

    def train(self):
        for i in range(self.max_iter):
            self.train_step()

        test_acc = self.network.accuracy(self.x_test, self.t_test)

        if self.verbose:
            print("=============== Final Test Accuracy ===============")
            print("test acc:" + str(test_acc))

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

# Reduce the training data to reproduce overfitting
x_train = x_train[:300]
t_train = t_train[:300]

# Whether to use Dropout, and the dropout ratio ========================
use_dropout = True  # set to False to disable Dropout
dropout_ratio = 0.2
# ====================================================

# Note: the constructor parameter is spelled dropout_ration in MultiLayerNetExtend
network = MultiLayerNetExtend(input_size=784, hidden_size_list=[100, 100, 100, 100, 100, 100],
                              output_size=10, use_dropout=use_dropout, dropout_ration=dropout_ratio)
trainer = Trainer(network, x_train, t_train, x_test, t_test,
                  epochs=301, mini_batch_size=100,
                  optimizer='sgd', optimizer_param={'lr': 0.01}, verbose=True)
trainer.train()

train_acc_list, test_acc_list = trainer.train_acc_list, trainer.test_acc_list

# Plot the results ==========
markers = {'train': 'o', 'test': 's'}
x = np.arange(len(train_acc_list))
plt.plot(x, train_acc_list, marker='o', label='train', markevery=10)
plt.plot(x, test_acc_list, marker='s', label='test', markevery=10)
plt.xlabel("epochs")
plt.ylabel("accuracy")
plt.ylim(0, 1.0)
plt.legend(loc='lower right')
plt.show()

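3. Ensemble learning

- Ensemble learning is widely used in machine learning. It trains multiple models independently and, at inference time, averages their outputs. [In neural network terms: prepare, say, five networks with the same structure, train each one separately, and at test time use the average of the five networks' outputs as the final answer.]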
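The averaging step can be sketched in a few lines. In the example below, the outputs of the five networks are stand-ins generated from random logits (an assumption made purely for illustration; in practice they would come from five separately trained networks). Only the averaging and the final argmax reflect the procedure described above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the softmax outputs of 5 separately trained
# networks on a batch of 2 samples with 3 classes: shape (5, 2, 3).
logits = rng.standard_normal((5, 2, 3))
exp = np.exp(logits - logits.max(axis=-1, keepdims=True))
probs = exp / exp.sum(axis=-1, keepdims=True)

# Ensemble: average the outputs over the model axis.
ensemble_probs = probs.mean(axis=0)             # shape (2, 3)

# Final answer: the class with the highest averaged probability.
ensemble_pred = ensemble_probs.argmax(axis=1)   # shape (2,)
print(ensemble_probs, ensemble_pred)
```

Because each network's output sums to 1 per sample, the averaged output is still a valid probability distribution.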

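4. Hyperparameters

1). Types of data

①. Training data: used to learn the parameters (weights and biases).

②. Validation data: used to evaluate the performance of the hyperparameters.

③. Test data: used to confirm generalization ability; it should be used only at the very end.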
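When a dataset ships with only training and test data, validation data can be carved out of the training data. The sketch below shows one way to do that; the helper name `split_validation`, the 20% validation rate, and the dummy arrays are assumptions for illustration, not part of the original notes.

```python
import numpy as np


def split_validation(x, t, validation_rate=0.2, seed=0):
    """Shuffle (x, t) together, then split off the first
    validation_rate fraction as validation data."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(x.shape[0])
    x, t = x[perm], t[perm]

    n_val = int(x.shape[0] * validation_rate)
    x_val, t_val = x[:n_val], t[:n_val]
    x_train, t_train = x[n_val:], t[n_val:]
    return (x_train, t_train), (x_val, t_val)


# Dummy data: 500 samples with 4 features; row i starts with the value 4 * i.
x = np.arange(500 * 4, dtype=float).reshape(500, 4)
t = np.arange(500)

(x_train, t_train), (x_val, t_val) = split_validation(x, t)
print(x_train.shape, x_val.shape)
```

Shuffling before the split matters when the data is ordered (for example, sorted by label), since an unshuffled split could leave some classes out of the validation set.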

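2). Steps for hyperparameter optimization

The hyperparameter optimization method introduced here is a practical one; if a more refined method is needed, Bayesian optimization can be used.

- Step 1: Set the range of each hyperparameter.

- Step 2: Sample hyperparameter values at random from the specified ranges.

- Step 3: Train with the hyperparameter values sampled in Step 2 and evaluate the recognition accuracy on the validation data (keep the number of epochs small).

- Step 4: Repeat Steps 2 and 3, then narrow the hyperparameter ranges based on the resulting recognition accuracies.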
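The four steps can be sketched as a small random-search loop. Everything specific here is an assumption for illustration: `toy_val_accuracy` is a made-up stand-in for "train for a few epochs and measure validation accuracy", and the rule of narrowing each range to the span of the 10 best trials is just one simple choice.

```python
import numpy as np


def toy_val_accuracy(lr, weight_decay):
    """Hypothetical stand-in for a short training run followed by
    evaluation on validation data. Peaks near lr = 10**-2.5."""
    return float(np.exp(-(np.log10(lr) + 2.5) ** 2
                        - 0.1 * (np.log10(weight_decay) + 6.0) ** 2))


def random_search(lr_range, wd_range, trials=100, keep=10, rounds=3, seed=0):
    """lr_range and wd_range are (low, high) exponents of 10 (Step 1)."""
    rng = np.random.default_rng(seed)
    for _ in range(rounds):
        # Step 2: sample exponents uniformly, i.e. values on a log scale.
        lr_exp = rng.uniform(lr_range[0], lr_range[1], size=trials)
        wd_exp = rng.uniform(wd_range[0], wd_range[1], size=trials)

        # Step 3: evaluate each sampled pair.
        scores = np.array([toy_val_accuracy(10 ** a, 10 ** b)
                           for a, b in zip(lr_exp, wd_exp)])

        # Step 4: narrow the ranges to the span of the best trials.
        best = np.argsort(scores)[-keep:]
        lr_range = (float(lr_exp[best].min()), float(lr_exp[best].max()))
        wd_range = (float(wd_exp[best].min()), float(wd_exp[best].max()))
    return lr_range, wd_range


lr_range, wd_range = random_search((-6.0, -2.0), (-8.0, -4.0))
print("narrowed lr exponent range:", lr_range)
print("narrowed weight decay exponent range:", wd_range)
```

With a real network, `toy_val_accuracy` would be replaced by an actual short training run, which is why the number of epochs per trial should stay small.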

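3). Implementing hyperparameter optimization

· In the example, the initial range for the weight decay coefficient is $10^{-8}$ to $10^{-4}$, and the initial range for the learning rate is $10^{-6}$ to $10^{-2}$.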

# Specify the range of hyperparameters to search ===============
weight_decay = 10 ** np.random.uniform(-8, -4)
lr = 10 ** np.random.uniform(-6, -2)
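Sampling the exponent uniformly, as in `10 ** np.random.uniform(-6, -2)`, spreads the samples evenly across orders of magnitude. The following sketch (sample size and seed are arbitrary choices) compares this with sampling the learning rate uniformly on a linear scale, where most samples land in the largest decade.

```python
import numpy as np

rng = np.random.default_rng(0)

# Log-scale sampling: uniform exponent, then 10 ** exponent.
exponents = rng.uniform(-6, -2, size=10000)
lr_samples = 10 ** exponents

# Fraction of samples in each decade: [1e-6,1e-5), [1e-5,1e-4), ...
counts, _ = np.histogram(exponents, bins=[-6, -5, -4, -3, -2])
fractions = counts / counts.sum()

# Linear-scale sampling for comparison.
linear_samples = rng.uniform(1e-6, 1e-2, size=10000)
top_decade_fraction = np.mean(linear_samples >= 1e-3)

print("log-scale fraction per decade:", fractions)
print("linear-scale fraction in [1e-3, 1e-2]:", top_decade_fraction)
```

On the log scale each decade receives roughly a quarter of the samples, whereas linear sampling puts about 90% of them in the top decade and almost never tries small learning rates.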

· Complete code:

import sys, os

sys.path.append(os.pardir)

import numpy as np

import matplotlib.pyplot as plt

from dataset.mnist import load_mnist

from collections import OrderedDict

# 加载数据

def get_data():

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

return (x_train, t_train), (x_test, t_test)

def shuffle_dataset(x, t):
    """Shuffle the dataset.

    Parameters
    ----------
    x : training data
    t : supervised labels

    Returns
    -------
    x, t : the shuffled training data and labels
    """
    permutation = np.random.permutation(x.shape[0])  # random permutation of the sample indices
    x = x[permutation, :] if x.ndim == 2 else x[permutation, :, :, :]
    t = t[permutation]

    return x, t

class Sigmoid:

def __init__(self):

self.out = None

# 正向传播

def forward(self, x):

out = 1 / (1 + np.exp(-x))

self.out = out

return out

# 反向传播

def backward(self, dout):

dx = dout * (1.0 - self.out) * self.out

return dx

class Relu:

def __init__(self):

self.mask = None

# 正向传播

def forward(self, x):

self.mask = (x <= 0)

out = x.copy()

out[self.mask] = 0

return out

# 反向传播

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

def numerical_gradient(f, x):

h = 1e-4 # 0.0001

grad = np.zeros_like(x)

it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])

while not it.finished:

idx = it.multi_index

tmp_val = x[idx]

x[idx] = float(tmp_val) + h

fxh1 = f(x) # f(x+h)

x[idx] = tmp_val - h

fxh2 = f(x) # f(x-h)

grad[idx] = (fxh1 - fxh2) / (2 * h)

x[idx] = tmp_val # 还原值

it.iternext()

return grad

class SoftmaxWithLoss:

def __init__(self):

self.loss = None # 损失

self.y = None # softmax的输出

self.t = None # 监督数据(one_hot vector)

# 输出层函数:softmax

def softmax(self, x):

if x.ndim == 2:

x = x.T

x = x - np.max(x, axis=0)

y = np.exp(x) / np.sum(np.exp(x), axis=0)

return y.T

x = x - np.max(x) # 溢出对策

return np.exp(x) / np.sum(np.exp(x))

# 交叉熵误差

def cross_entropy_error(self, y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

# 监督数据是one-hot-vector的情况下,转换为正确解标签的索引

if t.size == y.size:

t = t.argmax(axis=1)

batch_size = y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

# 正向传播

def forward(self, x, t):

self.t = t

self.y = self.softmax(x)

self.loss = self.cross_entropy_error(self.y, self.t)

return self.loss

# 反向传播

def backward(self, dout=1):

batch_size = self.t.shape[0]

if self.t.size == self.y.size: # 监督数据是one-hot-vector的情况

dx = (self.y - self.t) / batch_size

else:

dx = self.y.copy()

dx[np.arange(batch_size), self.t] -= 1

dx = dx / batch_size

return dx

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.original_x_shape = None

# 权重和偏置参数的导数

self.dW = None

self.db = None

def forward(self, x):

# 对应张量

self.original_x_shape = x.shape

x = x.reshape(x.shape[0], -1)

self.x = x

out = np.dot(self.x, self.W) + self.b

return out

def backward(self, dout):

dx = np.dot(dout, self.W.T)

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = dx.reshape(*self.original_x_shape) # 还原输入数据的形状(对应张量)

return dx

class SGD:

def __init__(self, lr):

self.lr = lr

def update(self, params, grads):

for key in params.keys():

params[key] -= self.lr * grads[key]

class Momentum:

def __init__(self, lr=0.01, momentum=0.9):

self.lr = lr

self.momentum = momentum

self.v = None

def update(self, params, grads):

if self.v is None:

self.v = {}

for key, val in params.items():

self.v[key] = np.zeros_like(val)

for key in params.keys():

self.v[key] = self.momentum * self.v[key] - self.lr * grads[key]

params[key] += self.v[key]

class Nesterov:

def __init__(self, lr=0.01, momentum=0.9):

self.lr = lr

self.momentum = momentum

self.v = None

def update(self, params, grads):

if self.v is None:

self.v = {}

for key, val in params.items():

self.v[key] = np.zeros_like(val)

for key in params.keys():

self.v[key] *= self.momentum

self.v[key] -= self.lr * grads[key]

params[key] += self.momentum * self.momentum * self.v[key]

params[key] -= (1 + self.momentum) * self.lr * grads[key]

class AdaGrad:

def __init__(self, lr=0.01):

self.lr = lr

self.h = None

def update(self, params, grads):

if self.h is None:

self.h = {}

for key, val in params.items():

self.h[key] = np.zeros_like(val)

for key in params.keys():

self.h[key] += grads[key] * grads[key]

params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)

class RMSprop:

def __init__(self, lr=0.01, decay_rate=0.99):

self.lr = lr

self.decay_rate = decay_rate

self.h = None

def update(self, params, grads):

if self.h is None:

self.h = {}

for key, val in params.items():

self.h[key] = np.zeros_like(val)

for key in params.keys():

self.h[key] *= self.decay_rate

self.h[key] += (1 - self.decay_rate) * grads[key] * grads[key]

params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)

class Adam:

def __init__(self, lr=0.001, beta1=0.9, beta2=0.999):

self.lr = lr

self.beta1 = beta1

self.beta2 = beta2

self.iter = 0

self.m = None

self.v = None

def update(self, params, grads):

if self.m is None:

self.m, self.v = {}, {}

for key, val in params.items():

self.m[key] = np.zeros_like(val)

self.v[key] = np.zeros_like(val)

self.iter += 1

lr_t = self.lr * np.sqrt(1.0 - self.beta2 ** self.iter) / (1.0 - self.beta1 ** self.iter)

for key in params.keys():

# self.m[key] = self.beta1*self.m[key] + (1-self.beta1)*grads[key]

# self.v[key] = self.beta2*self.v[key] + (1-self.beta2)*(grads[key]**2)

self.m[key] += (1 - self.beta1) * (grads[key] - self.m[key])

self.v[key] += (1 - self.beta2) * (grads[key] ** 2 - self.v[key])

params[key] -= lr_t * self.m[key] / (np.sqrt(self.v[key]) + 1e-7)

class Dropout:

def __init__(self, dropout_ratio=0.5):

self.dropout_ratio = dropout_ratio

self.mask = None

def forward(self, x, train_flg=True):

if train_flg:

self.mask = np.random.rand(*x.shape) > self.dropout_ratio

return x * self.mask

else:

return x * (1.0 - self.dropout_ratio)

def backward(self, dout):

return dout * self.mask

class BatchNormalization:

def __init__(self, gamma, beta, momentum=0.9, running_mean=None, running_var=None):

self.gamma = gamma

self.beta = beta

self.momentum = momentum

self.input_shape = None # Conv层的情况下为4维,全连接层的情况下为2维

# 测试时使用的平均值和方差

self.running_mean = running_mean

self.running_var = running_var

# backward时使用的中间数据

self.batch_size = None

self.xc = None

self.std = None

self.dgamma = None

self.dbeta = None

def forward(self, x, train_flg=True):

self.input_shape = x.shape

if x.ndim != 2:

N, C, H, W = x.shape

x = x.reshape(N, -1)

out = self.__forward(x, train_flg)

return out.reshape(*self.input_shape)

def __forward(self, x, train_flg):

if self.running_mean is None:

N, D = x.shape

self.running_mean = np.zeros(D)

self.running_var = np.zeros(D)

if train_flg:

mu = x.mean(axis=0)

xc = x - mu

var = np.mean(xc ** 2, axis=0)

std = np.sqrt(var + 10e-7)

xn = xc / std

self.batch_size = x.shape[0]

self.xc = xc

self.xn = xn

self.std = std

self.running_mean = self.momentum * self.running_mean + (1 - self.momentum) * mu

self.running_var = self.momentum * self.running_var + (1 - self.momentum) * var

else:

xc = x - self.running_mean

xn = xc / ((np.sqrt(self.running_var + 10e-7)))

out = self.gamma * xn + self.beta

return out

def backward(self, dout):

if dout.ndim != 2:

N, C, H, W = dout.shape

dout = dout.reshape(N, -1)

dx = self.__backward(dout)

dx = dx.reshape(*self.input_shape)

return dx

def __backward(self, dout):

dbeta = dout.sum(axis=0)

dgamma = np.sum(self.xn * dout, axis=0)

dxn = self.gamma * dout

dxc = dxn / self.std

dstd = -np.sum((dxn * self.xc) / (self.std * self.std), axis=0)

dvar = 0.5 * dstd / self.std

dxc += (2.0 / self.batch_size) * self.xc * dvar

dmu = np.sum(dxc, axis=0)

dx = dxc - dmu / self.batch_size

self.dgamma = dgamma

self.dbeta = dbeta

return dx

class MultiLayerNet:

"""全连接的多层神经网络

Parameters

----------

input_size : 输入大小(MNIST的情况下为784)

hidden_size_list : 隐藏层的神经元数量的列表(e.g. [100, 100, 100])

output_size : 输出大小(MNIST的情况下为10)

activation : 'relu' or 'sigmoid'

weight_init_std : 指定权重的标准差(e.g. 0.01)

指定'relu'或'he'的情况下设定“He的初始值”

指定'sigmoid'或'xavier'的情况下设定“Xavier的初始值”

weight_decay_lambda : Weight Decay(L2范数)的强度

"""

    def __init__(self, input_size, hidden_size_list, output_size,
                 activation='relu', weight_init_std='relu', weight_decay_lambda=0):
        self.input_size = input_size
        self.output_size = output_size
        self.hidden_size_list = hidden_size_list
        self.hidden_layer_num = len(hidden_size_list)
        self.weight_decay_lambda = weight_decay_lambda
        self.params = {}

        # Initialize the weights
        self.__init_weight(weight_init_std)

        # Build the layers
        activation_layer = {'sigmoid': Sigmoid, 'relu': Relu}
        self.layers = OrderedDict()
        for idx in range(1, self.hidden_layer_num+1):
            self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],
                                                      self.params['b' + str(idx)])
            self.layers['Activation_function' + str(idx)] = activation_layer[activation]()

        idx = self.hidden_layer_num + 1
        self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],
                                                  self.params['b' + str(idx)])

        self.last_layer = SoftmaxWithLoss()

    def __init_weight(self, weight_init_std):
        """Set the initial values of the weights

        Parameters
        ----------
        weight_init_std : standard deviation of the weights (e.g. 0.01)
            'relu' or 'he' selects the He initialization
            'sigmoid' or 'xavier' selects the Xavier initialization
        """
        all_size_list = [self.input_size] + self.hidden_size_list + [self.output_size]
        for idx in range(1, len(all_size_list)):
            scale = weight_init_std
            if str(weight_init_std).lower() in ('relu', 'he'):
                scale = np.sqrt(2.0 / all_size_list[idx - 1])  # recommended initial value when using ReLU
            elif str(weight_init_std).lower() in ('sigmoid', 'xavier'):
                scale = np.sqrt(1.0 / all_size_list[idx - 1])  # recommended initial value when using sigmoid

            self.params['W' + str(idx)] = scale * np.random.randn(all_size_list[idx-1], all_size_list[idx])
            self.params['b' + str(idx)] = np.zeros(all_size_list[idx])

    def predict(self, x):
        for layer in self.layers.values():
            x = layer.forward(x)

        return x

    def loss(self, x, t):
        """Compute the loss function

        Parameters
        ----------
        x : input data
        t : teacher labels

        Returns
        -------
        value of the loss function
        """
        y = self.predict(x)

        # Add the L2 penalty 0.5 * lambda * sum(W ** 2) for every weight matrix
        weight_decay = 0
        for idx in range(1, self.hidden_layer_num + 2):
            W = self.params['W' + str(idx)]
            weight_decay += 0.5 * self.weight_decay_lambda * np.sum(W ** 2)

        return self.last_layer.forward(y, t) + weight_decay

    def accuracy(self, x, t):
        y = self.predict(x)
        y = np.argmax(y, axis=1)
        if t.ndim != 1 : t = np.argmax(t, axis=1)

        accuracy = np.sum(y == t) / float(x.shape[0])
        return accuracy

    def numerical_gradient(self, x, t):
        """Compute the gradients (numerical differentiation)

        Parameters
        ----------
        x : input data
        t : teacher labels

        Returns
        -------
        dictionary holding the gradients of each layer
            grads['W1'], grads['W2'], ... are the gradients of each layer's weights
            grads['b1'], grads['b2'], ... are the gradients of each layer's biases
        """
        loss_W = lambda W: self.loss(x, t)

        grads = {}
        for idx in range(1, self.hidden_layer_num+2):
            grads['W' + str(idx)] = numerical_gradient(loss_W, self.params['W' + str(idx)])
            grads['b' + str(idx)] = numerical_gradient(loss_W, self.params['b' + str(idx)])

        return grads

    def gradient(self, x, t):
        """Compute the gradients (error backpropagation)

        Parameters
        ----------
        x : input data
        t : teacher labels

        Returns
        -------
        dictionary holding the gradients of each layer
            grads['W1'], grads['W2'], ... are the gradients of each layer's weights
            grads['b1'], grads['b2'], ... are the gradients of each layer's biases
        """
        # forward
        self.loss(x, t)

        # backward
        dout = 1
        dout = self.last_layer.backward(dout)

        layers = list(self.layers.values())
        layers.reverse()
        for layer in layers:
            dout = layer.backward(dout)

        # Collect the gradients; lambda * W is the derivative of the weight decay term
        grads = {}
        for idx in range(1, self.hidden_layer_num+2):
            grads['W' + str(idx)] = self.layers['Affine' + str(idx)].dW + self.weight_decay_lambda * self.layers['Affine' + str(idx)].W
            grads['b' + str(idx)] = self.layers['Affine' + str(idx)].db

        return grads

class Trainer:
    """Class that trains a neural network
    """
    def __init__(self, network, x_train, t_train, x_test, t_test,
                 epochs=20, mini_batch_size=100,
                 optimizer='SGD', optimizer_param={'lr': 0.01},
                 evaluate_sample_num_per_epoch=None, verbose=True):
        self.network = network
        self.verbose = verbose
        self.x_train = x_train
        self.t_train = t_train
        self.x_test = x_test
        self.t_test = t_test
        self.epochs = epochs
        self.batch_size = mini_batch_size
        self.evaluate_sample_num_per_epoch = evaluate_sample_num_per_epoch

        # optimizer (the key 'rmsprop' was misspelled as 'rmsprpo')
        optimizer_class_dict = {'sgd': SGD, 'momentum': Momentum, 'nesterov': Nesterov,
                                'adagrad': AdaGrad, 'rmsprop': RMSprop, 'adam': Adam}
        self.optimizer = optimizer_class_dict[optimizer.lower()](**optimizer_param)

        self.train_size = x_train.shape[0]
        self.iter_per_epoch = max(self.train_size / mini_batch_size, 1)
        self.max_iter = int(epochs * self.iter_per_epoch)
        self.current_iter = 0
        self.current_epoch = 0

        self.train_loss_list = []
        self.train_acc_list = []
        self.test_acc_list = []

    def train_step(self):
        batch_mask = np.random.choice(self.train_size, self.batch_size)
        x_batch = self.x_train[batch_mask]
        t_batch = self.t_train[batch_mask]

        grads = self.network.gradient(x_batch, t_batch)
        self.optimizer.update(self.network.params, grads)

        loss = self.network.loss(x_batch, t_batch)
        self.train_loss_list.append(loss)
        if self.verbose: print("train loss:" + str(loss))

        if self.current_iter % self.iter_per_epoch == 0:
            self.current_epoch += 1

            x_train_sample, t_train_sample = self.x_train, self.t_train
            x_test_sample, t_test_sample = self.x_test, self.t_test
            if self.evaluate_sample_num_per_epoch is not None:
                t = self.evaluate_sample_num_per_epoch
                x_train_sample, t_train_sample = self.x_train[:t], self.t_train[:t]
                x_test_sample, t_test_sample = self.x_test[:t], self.t_test[:t]

            train_acc = self.network.accuracy(x_train_sample, t_train_sample)
            test_acc = self.network.accuracy(x_test_sample, t_test_sample)
            self.train_acc_list.append(train_acc)
            self.test_acc_list.append(test_acc)

            if self.verbose:
                print("=== epoch:" + str(self.current_epoch) + ", train acc:" + str(train_acc)
                      + ", test acc:" + str(test_acc) + " ===")
        self.current_iter += 1

    def train(self):
        for i in range(self.max_iter):
            self.train_step()

        test_acc = self.network.accuracy(self.x_test, self.t_test)

        if self.verbose:
            print("=============== Final Test Accuracy ===============")
            print("test acc:" + str(test_acc))

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

# Reduce the training data to speed things up
x_train = x_train[:500]
t_train = t_train[:500]

# Split off the validation data
validation_rate = 0.20
validation_num = int(x_train.shape[0] * validation_rate)
x_train, t_train = shuffle_dataset(x_train, t_train)
x_val = x_train[:validation_num]
t_val = t_train[:validation_num]
x_train = x_train[validation_num:]
t_train = t_train[validation_num:]

def __train(lr, weight_decay, epochs=50):
    network = MultiLayerNet(input_size=784, hidden_size_list=[100, 100, 100, 100, 100, 100],
                            output_size=10, weight_decay_lambda=weight_decay)
    # The validation split is passed in the Trainer's "test" slots,
    # so test_acc_list holds the validation accuracy per epoch.
    trainer = Trainer(network, x_train, t_train, x_val, t_val,
                      epochs=epochs, mini_batch_size=100,
                      optimizer='sgd', optimizer_param={'lr': lr}, verbose=False)
    trainer.train()

    return trainer.test_acc_list, trainer.train_acc_list

# Random search over the hyperparameters ======================================
optimization_trial = 100
results_val = {}
results_train = {}
for _ in range(optimization_trial):
    # Specify the search range of each hyperparameter ===============
    weight_decay = 10 ** np.random.uniform(-8, -4)
    lr = 10 ** np.random.uniform(-6, -2)
    # ================================================

    val_acc_list, train_acc_list = __train(lr, weight_decay)
    print("val acc:" + str(val_acc_list[-1]) + " | lr:" + str(lr) + ", weight decay:" + str(weight_decay))
    key = "lr:" + str(lr) + ", weight decay:" + str(weight_decay)
    results_val[key] = val_acc_list
    results_train[key] = train_acc_list

# Plot the results ========================================================
print("=========== Hyper-Parameter Optimization Result ===========")
graph_draw_num = 20
col_num = 5
row_num = int(np.ceil(graph_draw_num / col_num))
i = 0

# Sort the trials by their final validation accuracy, best first
for key, val_acc_list in sorted(results_val.items(), key=lambda x: x[1][-1], reverse=True):
    print("Best-" + str(i + 1) + "(val acc:" + str(val_acc_list[-1]) + ") | " + key)

    plt.subplot(row_num, col_num, i + 1)
    plt.title("Best-" + str(i + 1))
    plt.ylim(0.0, 1.0)
    if i % 5: plt.yticks([])
    plt.xticks([])
    x = np.arange(len(val_acc_list))
    plt.plot(x, val_acc_list)
    plt.plot(x, results_train[key], "--")
    i += 1

    if i >= graph_draw_num:
        break

plt.show()
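
The script calls `shuffle_dataset` before splitting off the validation data, but that helper is not defined in this part of the listing. A minimal sketch of what it could look like, assuming it only needs to apply one shared random permutation to the inputs and the labels (the body and the toy data `x_demo`, `t_demo` are illustrative assumptions, not necessarily the version the original script uses):

```python
import numpy as np


def shuffle_dataset(x, t):
    """Shuffle inputs and labels with one shared permutation (assumed behavior)."""
    permutation = np.random.permutation(x.shape[0])
    # Index along the first axis only, so this works for 2-D (N, D)
    # and 4-D (N, C, H, W) inputs alike.
    return x[permutation], t[permutation]


# Toy data: row i of x_demo starts with 2 * i and is paired with label i.
x_demo = np.arange(10).reshape(5, 2)
t_demo = np.arange(5)
x_shuffled, t_shuffled = shuffle_dataset(x_demo, t_demo)
print(x_shuffled[:, 0], t_shuffled)
```

Shuffling before the split matters when the data is ordered in some way, since otherwise the validation set might not be representative of the training set.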

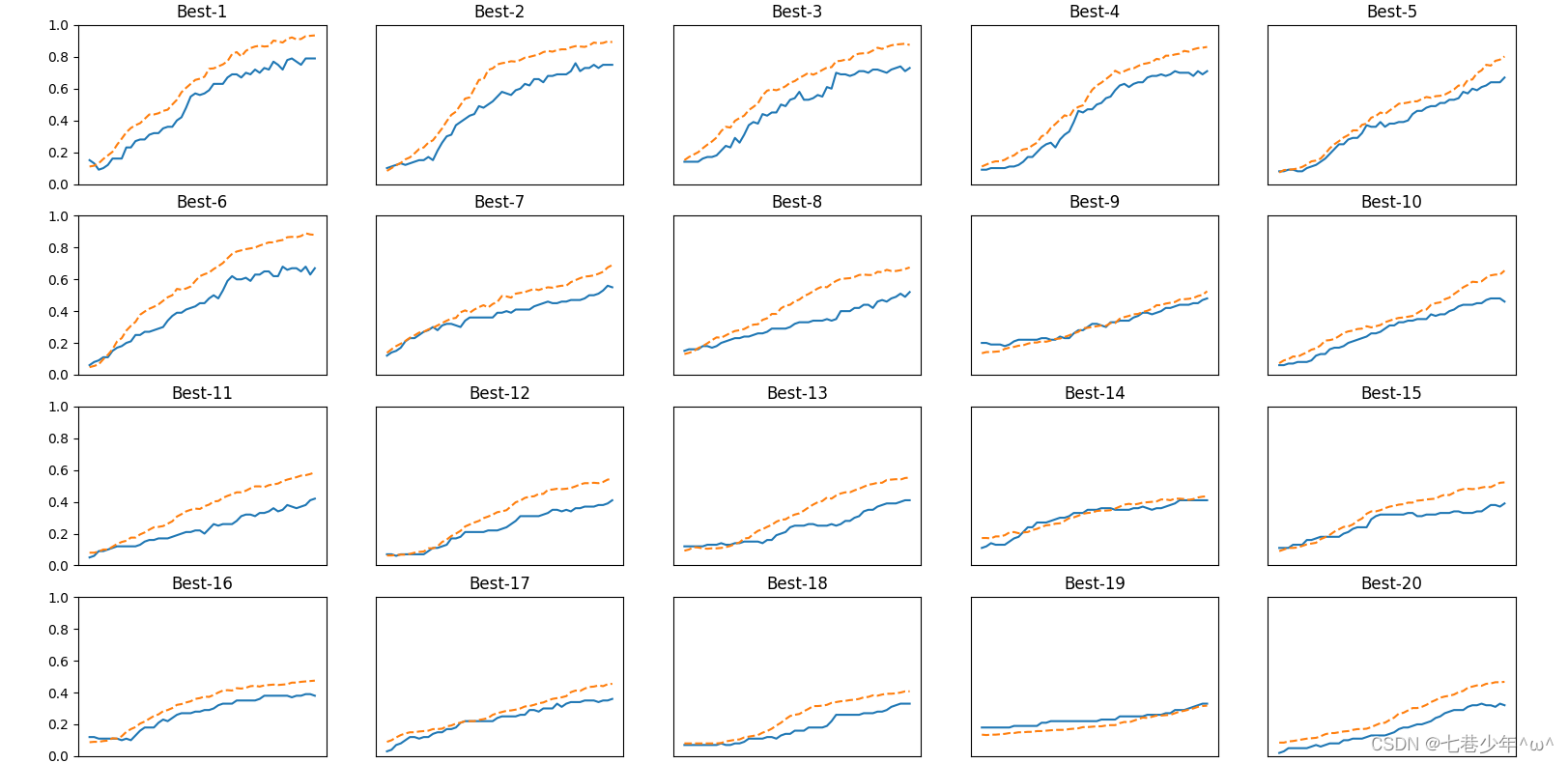

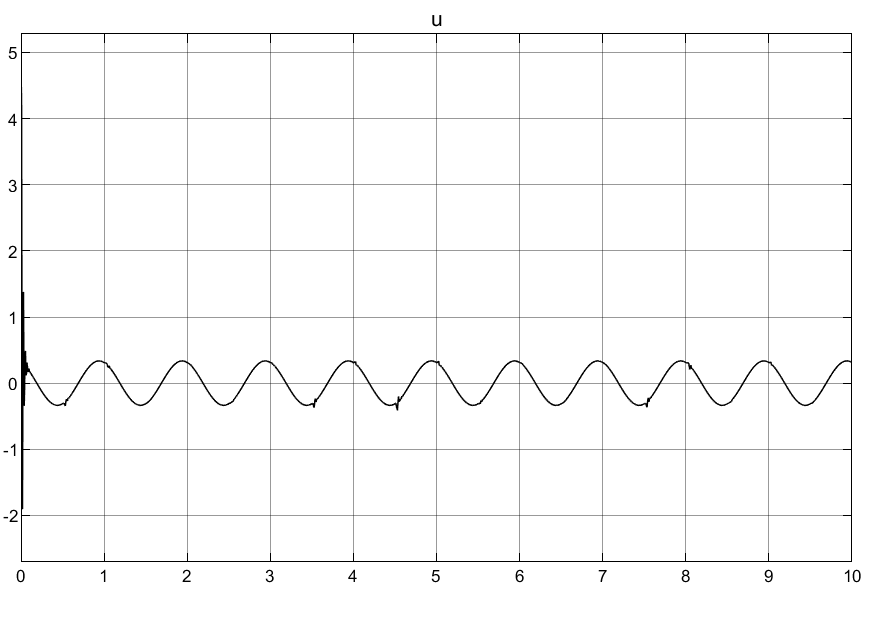
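
The search loop in the listing draws each hyperparameter as `10 ** np.random.uniform(low, high)`, that is, uniformly in the exponent rather than in the value itself. The sketch below compares this with plain uniform sampling over the same learning-rate range; the helper name `sample_log_uniform`, the sample count, and the fixed seed are assumptions made for illustration.

```python
import numpy as np


def sample_log_uniform(low_exp, high_exp, size, rng):
    """Draw values as 10 ** u with u uniform in [low_exp, high_exp)."""
    return 10 ** rng.uniform(low_exp, high_exp, size=size)


rng = np.random.default_rng(0)  # fixed seed, only for repeatability

# Same range as the learning-rate search in the listing: 1e-6 to 1e-2.
lr_log = sample_log_uniform(-6, -2, size=10000, rng=rng)
lr_linear = rng.uniform(1e-6, 1e-2, size=10000)

# Fraction of samples below 1e-4, i.e. in the lower half of the exponents.
frac_small_log = np.mean(lr_log < 1e-4)
frac_small_linear = np.mean(lr_linear < 1e-4)
print("log-uniform:", frac_small_log, " linear-uniform:", frac_small_linear)
```

With log-uniform sampling roughly half of the trials land below 1e-4, whereas plain uniform sampling puts almost every trial in the top decade, so small values would hardly be explored.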

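3). Results

The plots show how training progressed in each trial, arranged in descending order of validation accuracy. [The solid line is the validation accuracy; the dashed line is the training accuracy.]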

![Validation and training accuracy curves, sorted by validation accuracy](https://user-images.githubusercontent.com/8428709/205942253-8ff5f9ca-a033-4707-9c36-b8c9950e50d6.png)
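
One possible next step after ranking the trials is to narrow the search range around the best ones and run the search again. The sketch below assumes the results are kept as `(lr, weight_decay, final validation accuracy)` tuples; the helper `narrowed_exponent_range` is hypothetical and the numbers in `records` are made up purely for illustration, they are not results from the run above.

```python
import numpy as np


def narrowed_exponent_range(values):
    """Return (floor, ceil) of log10 over the given positive values."""
    exponents = np.log10(np.asarray(values, dtype=float))
    return int(np.floor(exponents.min())), int(np.ceil(exponents.max()))


# Hypothetical records: (lr, weight_decay, final validation accuracy).
records = [
    (8.0e-3, 3.0e-7, 0.80),
    (6.5e-3, 2.0e-5, 0.78),
    (2.0e-3, 5.0e-8, 0.55),
    (4.0e-5, 9.0e-6, 0.12),
    (1.0e-6, 1.0e-4, 0.08),
]

# Rank by validation accuracy, best first, and keep the top trials.
top_k = 2
best = sorted(records, key=lambda r: r[2], reverse=True)[:top_k]

# Exponent ranges that could be passed to np.random.uniform in the next round.
lr_range = narrowed_exponent_range([r[0] for r in best])
wd_range = narrowed_exponent_range([r[1] for r in best])
print("next lr exponent range:", lr_range)
print("next weight decay exponent range:", wd_range)
```

Rounding the exponents outward keeps the new range slightly wider than the best trials themselves, which leaves some room around them for the next round.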