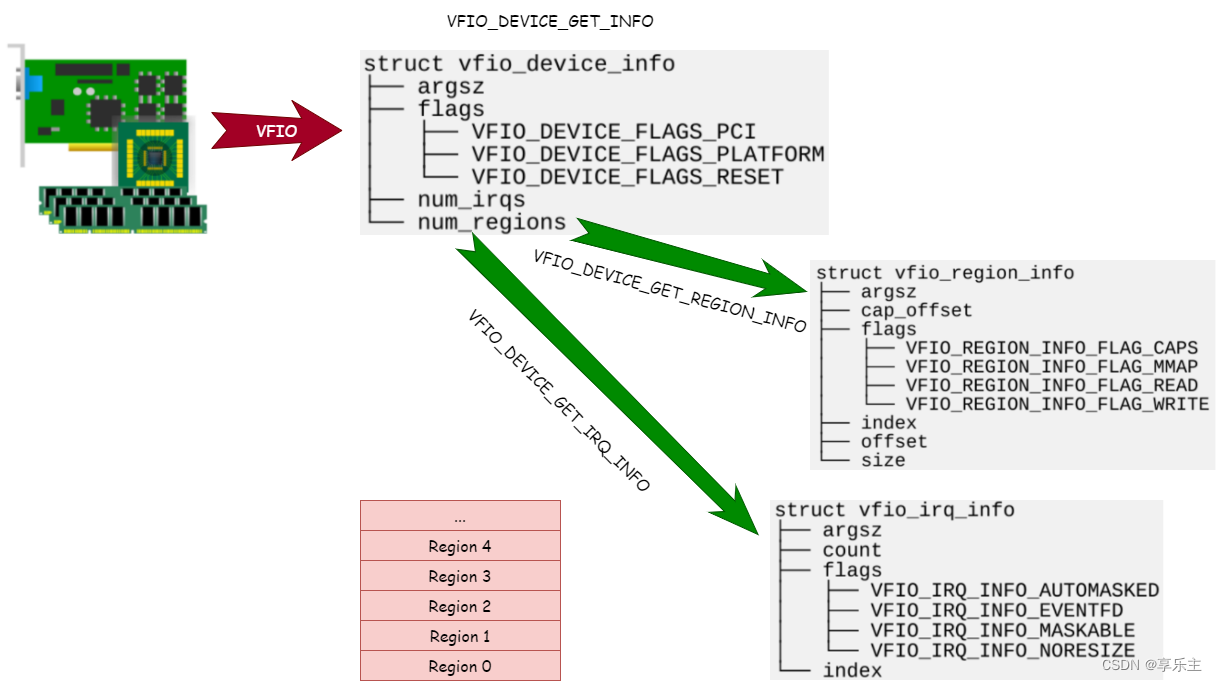

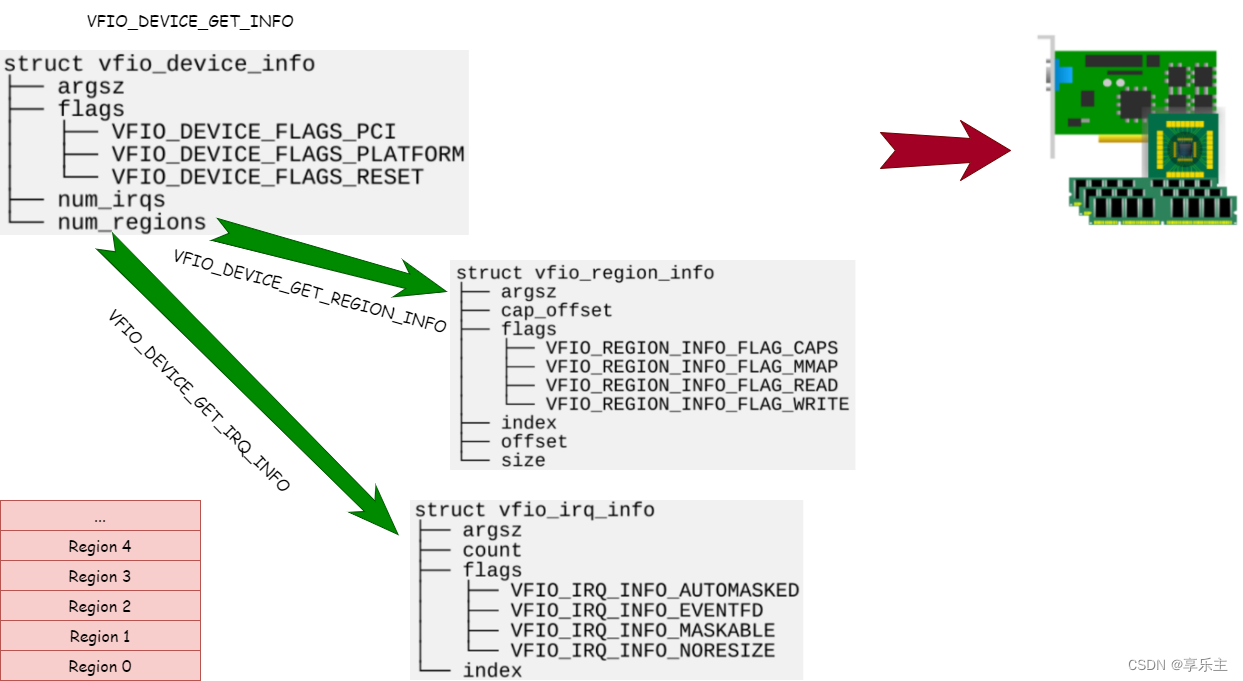

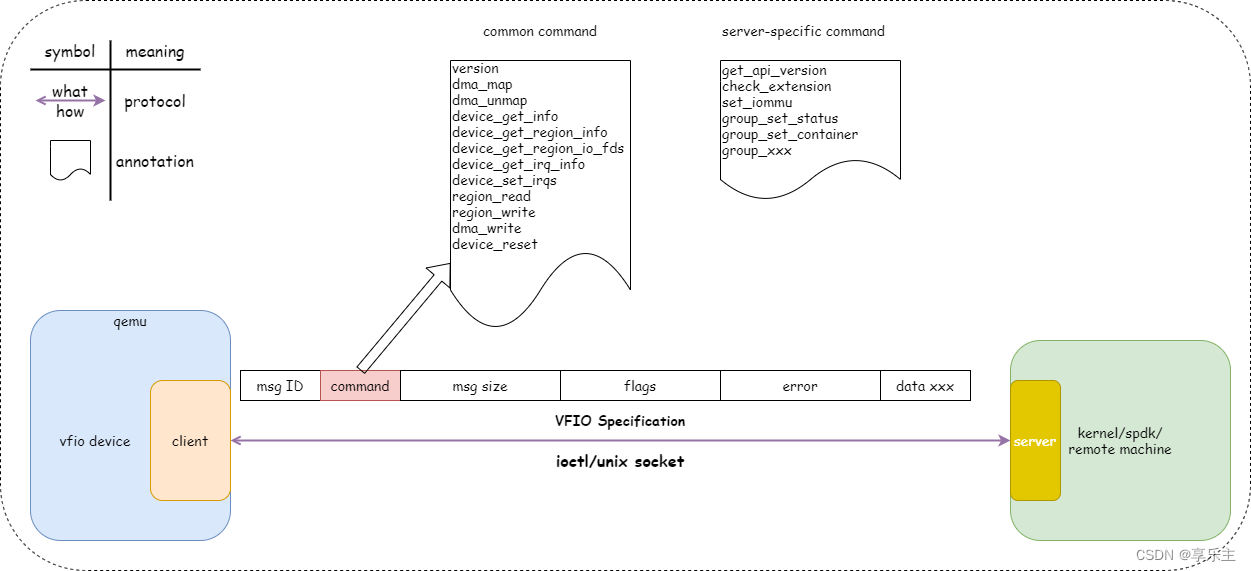

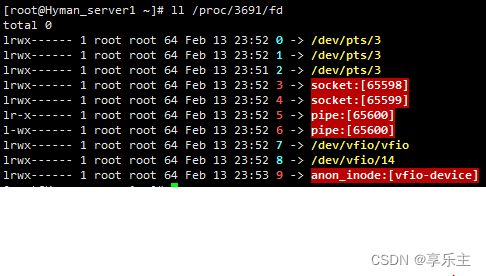

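In virtualization, the most direct way for a virtual machine to get the best IO performance from a PCI device is to expose the physical device to the VM. The VM's accesses to the device then pass through no intermediate translation layer and incur no virtualization overhead. Linux, however, offers user programs no such device access mechanism: every PCI device is managed by the kernel. Even if we could take a physical PCI device out of kernel control and hand it directly to a user-space program, doing so would be unacceptable for security reasons, because PCI devices can perform DMA. A user program that controls a DMA-capable PCI device can use it to read and write all of system memory. In the virtualization case, a VM that owns a DMA-capable PCI device could likewise use it to compromise the whole host.

To give VMs high-performance PCI devices, then, it is not enough to minimize the overhead of device access. We also need an extra layer of protection that prevents the program accessing the device from using its DMA capability to attack the system.

The VFIO protocol was designed for exactly this. It defines a set of standard interfaces that expose PCI device information to user programs, replacing the traditional way of obtaining that information through a PCI driver, so that user-space programs can access PCI device information directly.

The traditional way to access a PCI device is as follows: the PCI driver framework enumerates every device on the PCI bus, reads each device's configuration space and the type and size of its BARs as the hardware requires, records them in the kernel's PCI data structures, and maps system memory for the memory BARs.

This is the most natural way to access PCI: write a driver according to the vendor's specification and drive the hardware. A user-space program cannot work this way, because it cannot enumerate PCI devices through the driver framework as the kernel does, nor can it read and write a given PCI device to obtain its information. How, then, can a user-space program get at PCI device information? In fact, a user-space program does not care how the information is obtained, only about the result, namely the device's information. It is therefore enough for the kernel to provide that information to the user-space program.

To this end, the kernel's VFIO framework abstracts a PCI device, representing all of its information as a set of Regions, as shown in the figure. All of the PCI device's information is recorded in these regions and exposed to user-space programs through ioctl commands. In virtualization, the user-space program may be QEMU or OVS. QEMU can emulate PCI devices in software; once a VFIO device is used, whenever the guest accesses the PCI device, QEMU can fetch the information from the kernel directly with ioctl commands and return it to the guest. QEMU thus does the work of reassembling the Regions into a PCI device and presenting it to the guest, as shown in the figure.

The interfaces and data structures defined above for describing PCI device information are what is called the VFIO protocol. In its original implementation the VFIO protocol supported passthrough of hardware PCI devices, so the two ends of the protocol were a user-space program and the kernel, and the protocol was carried by ioctl commands. The protocol can, however, be extended to other scenarios. vfio-user devices are one example: both ends run in user space and the protocol is carried over a Unix socket. We call the side that initiates a call the client and the side that handles it and returns the result the server, as shown in the figure.

In the traditional VFIO-PCI hardware passthrough scenario, the way the hardware works means that a PCI device's IO may travel through another, physically associated device (for example, devices behind a PCIe-to-PCI bridge, or functions that can exchange peer-to-peer traffic the IOMMU never sees), so isolating one device on its own is not enough. To prevent this kind of intrusion, the kernel implementation introduces the concepts of group and container, which define the smallest unit of PCI device isolation. This part has nothing to do with the standard VFIO protocol, and its commands can be regarded as implementation-specific: they are the server-specific commands in the figure above.

VFIO User: the user-space VFIO protocol does not deal with PCI device isolation and defines the following commands:

USER_VERSION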

DMA_MAP

DMA_UNMAP

DEVICE_GET_INFO

DEVICE_GET_REGION_INFO

DEVICE_GET_REGION_IO_FDS

DEVICE_GET_IRQ_INFO

DEVICE_SET_IRQS

REGION_READ

REGION_WRITE

DMA_WRITE

DEVICE_RESET
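To make the Region abstraction described earlier more concrete: for a vfio-pci device, the Linux UAPI header `<linux/vfio.h>` assigns fixed region indices to the six BARs, the expansion ROM, config space and the legacy VGA range, and each region reports its access rights as flags. The sketch below maps an index to a readable name and decodes those flags. The helper names `vfio_pci_region_name` and `vfio_region_flags_str` are hypothetical; only the `VFIO_PCI_*_REGION_INDEX` and `VFIO_REGION_INFO_FLAG_*` constants come from the kernel header.

```c
#include <stdio.h>
#include <string.h>
#include <linux/vfio.h>

/* Hypothetical helper: human-readable name for a vfio-pci region index.
 * Indices at or above VFIO_PCI_NUM_REGIONS are device-specific regions. */
const char *vfio_pci_region_name(unsigned int index)
{
    static const char *bars[] = { "BAR0", "BAR1", "BAR2", "BAR3", "BAR4", "BAR5" };

    if (index <= VFIO_PCI_BAR5_REGION_INDEX)
        return bars[index];
    switch (index) {
    case VFIO_PCI_ROM_REGION_INDEX:    return "ROM";
    case VFIO_PCI_CONFIG_REGION_INDEX: return "CONFIG";
    case VFIO_PCI_VGA_REGION_INDEX:    return "VGA";
    default:                           return "DEVICE-SPECIFIC";
    }
}

/* Hypothetical helper: render region flags as "rwm" (read, write, mmap),
 * using '-' for each missing capability. */
void vfio_region_flags_str(unsigned int flags, char *buf, size_t len)
{
    snprintf(buf, len, "%s%s%s",
             (flags & VFIO_REGION_INFO_FLAG_READ)  ? "r" : "-",
             (flags & VFIO_REGION_INFO_FLAG_WRITE) ? "w" : "-",
             (flags & VFIO_REGION_INFO_FLAG_MMAP)  ? "m" : "-");
}
```

In a program like the demo later in this article, these helpers would be called inside the loop over `VFIO_DEVICE_GET_REGION_INFO`, with `reg.index` and `reg.flags` as arguments.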

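VFIO Kernel: in the kernel scenario, a VFIO PCI device implements the standard VFIO protocol and must also define commands for the smallest unit of PCI device isolation. These implementation-specific commands are:

GET_API_VERSION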

CHECK_EXTENSION

SET_IOMMU

GROUP_GET_STATUS

GROUP_SET_CONTAINER

......
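In the kernel implementation, `VFIO_GROUP_GET_STATUS` reports a group's state as bit flags defined in `<linux/vfio.h>`, and a group must be viable (all of its devices bound to vfio-pci or unbound) before it can be attached to a container with `VFIO_GROUP_SET_CONTAINER`. The sketch below maps those flags to the next step a program would take. The helper `vfio_group_state` is hypothetical; the ordering it describes follows the kernel's VFIO documentation example that the demo in this article is based on.

```c
#include <string.h>
#include <linux/vfio.h>

/* Hypothetical helper: describe the flags returned by VFIO_GROUP_GET_STATUS
 * and the kernel-specific command that should come next. */
const char *vfio_group_state(unsigned int flags)
{
    if (!(flags & VFIO_GROUP_FLAGS_VIABLE))
        return "not viable: bind every device in the group to vfio-pci first";
    if (!(flags & VFIO_GROUP_FLAGS_CONTAINER_SET))
        return "viable: attach with VFIO_GROUP_SET_CONTAINER, then VFIO_SET_IOMMU";
    return "viable and attached: ready for VFIO_GROUP_GET_DEVICE_FD";
}
```

A freshly opened group whose devices are all bound to vfio-pci reports only `VFIO_GROUP_FLAGS_VIABLE` (value 1), which lands in the middle branch.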

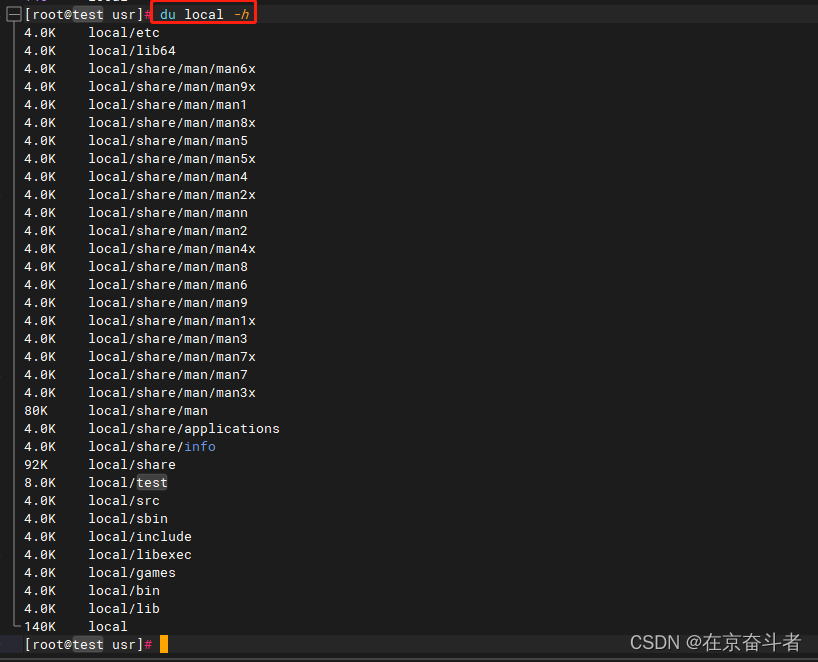

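Our experiment is simple: pick a PCI device, bind it to the vfio-pci driver, and write a user-space program that accesses the device and retrieves its information. The implementation follows the kernel's VFIO documentation. We use passthrough of a graphics card as the example.

View the graphics card information:

```
[root@Hyman_server1 ~]# lspci -s 04:00.0
04:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Turks [Radeon HD 7600 Series]
```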
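The vendor and device IDs behind the name lspci prints are also exposed as sysfs attributes of each PCI device. A minimal C sketch, assuming the standard layout `/sys/bus/pci/devices/<address>/vendor` holding text such as `0x1002`; the helper names `parse_sysfs_hex` and `read_sysfs_hex` are hypothetical:

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: parse a sysfs hex attribute such as "0x1002\n".
 * strtol() with base 16 accepts the optional "0x" prefix. */
long parse_sysfs_hex(const char *text)
{
    char *end;
    long v = strtol(text, &end, 16);

    if (end == text)
        return -1;              /* no hex digits at all */
    return v;
}

/* Hypothetical helper: read e.g. /sys/bus/pci/devices/0000:04:00.0/vendor. */
long read_sysfs_hex(const char *path)
{
    char buf[32];
    FILE *f = fopen(path, "r");

    if (!f)
        return -1;
    if (!fgets(buf, sizeof(buf), f)) {
        fclose(f);
        return -1;
    }
    fclose(f);
    return parse_sysfs_hex(buf);
}
```

On this machine, `read_sysfs_hex("/sys/bus/pci/devices/0000:04:00.0/vendor")` would be expected to return the AMD vendor ID 0x1002.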

Find the IOMMU group the graphics card belongs to:

```
[root@Hyman_server1 ~]# readlink /sys/bus/pci/devices/0000\:04\:00.0/iommu_group
../../../../kernel/iommu_groups/14
```
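The last component of that link is the group number, and the corresponding VFIO group node is `/dev/vfio/<number>`, which is why the demo program later opens `/dev/vfio/14`. A sketch of the same lookup in C; `vfio_group_path_from_link` and `vfio_group_path_for_device` are hypothetical helper names:

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: turn the iommu_group link target
 * ("../../../../kernel/iommu_groups/14") into the VFIO group node
 * ("/dev/vfio/14"). Returns the group number, or -1 on error. */
int vfio_group_path_from_link(const char *target, char *out, size_t len)
{
    const char *slash = strrchr(target, '/');
    const char *num = slash ? slash + 1 : target;
    char *end;
    long group = strtol(num, &end, 10);

    if (end == num || *end != '\0' || group < 0)
        return -1;
    snprintf(out, len, "/dev/vfio/%ld", group);
    return (int)group;
}

/* Hypothetical helper: readlink() the sysfs attribute for a PCI address
 * such as "0000:04:00.0" and build the group path from it. */
int vfio_group_path_for_device(const char *bdf, char *out, size_t len)
{
    char link[256], target[256];
    ssize_t n;

    snprintf(link, sizeof(link), "/sys/bus/pci/devices/%s/iommu_group", bdf);
    n = readlink(link, target, sizeof(target) - 1);
    if (n < 0)
        return -1;
    target[n] = '\0';           /* readlink() does not NUL-terminate */
    return vfio_group_path_from_link(target, out, len);
}
```

Deriving the path this way avoids hard-coding the group number, which can change between machines or kernel versions.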

List the devices in that group:

```
[root@Hyman_server1 ~]# ll /sys/kernel/iommu_groups/14/devices/
total 0
lrwxrwxrwx 1 root root 0 Feb 13 23:27 0000:04:00.0 -> ../../../../devices/pci0000:00/0000:00:07.0/0000:04:00.0
lrwxrwxrwx 1 root root 0 Feb 13 23:27 0000:04:00.1 -> ../../../../devices/pci0000:00/0000:00:07.0/0000:04:00.1
```
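Because the group is the unit of isolation, every device listed here has to leave its host driver before the group becomes viable. The sketch below walks the group's `devices` directory and checks each device's `driver` link. It is a simplified version of the kernel's viability check (the kernel also tolerates a few other drivers, such as pci-stub), and the helper names `link_basename` and `group_ready_for_vfio` are hypothetical.

```c
#include <dirent.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

#define PATH_BUF 4096

/* Hypothetical helper: last path component of a symlink target,
 * e.g. "../../../../bus/pci/drivers/vfio-pci" -> "vfio-pci". */
const char *link_basename(const char *target)
{
    const char *slash = strrchr(target, '/');
    return slash ? slash + 1 : target;
}

/* Hypothetical helper: 1 if every device under
 * /sys/kernel/iommu_groups/<group>/devices is bound to vfio-pci or unbound,
 * 0 if some device still has another driver, -1 if the group is missing. */
int group_ready_for_vfio(int group)
{
    char dir[PATH_BUF], link[PATH_BUF], target[PATH_BUF];
    struct dirent *de;
    DIR *d;
    int ok = 1;

    snprintf(dir, sizeof(dir), "/sys/kernel/iommu_groups/%d/devices", group);
    d = opendir(dir);
    if (!d)
        return -1;
    while ((de = readdir(d)) != NULL) {
        ssize_t n;

        if (de->d_name[0] == '.')
            continue;           /* skip "." and ".." */
        snprintf(link, sizeof(link), "%s/%s/driver", dir, de->d_name);
        n = readlink(link, target, sizeof(target) - 1);
        if (n < 0)
            continue;           /* no driver link: the device is unbound */
        target[n] = '\0';
        if (strcmp(link_basename(target), "vfio-pci") != 0) {
            printf("%s is bound to %s\n", de->d_name, link_basename(target));
            ok = 0;
        }
    }
    closedir(d);
    return ok;
}
```

Before the drivers are switched, `group_ready_for_vfio(14)` would be expected to report the radeon and snd_hda_intel bindings shown in the libvirt dumps.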

Unbind the kernel drivers from every device in the group and bind them to the vfio-pci driver; here we use the tools provided by libvirt. First inspect the devices. The graphics card's group contains two devices, the graphics card and a sound card (its HDMI audio function), bound to the radeon and snd_hda_intel drivers respectively:

```
[root@Hyman_server1 ~]# virsh nodedev-dumpxml pci_0000_04_00_0
<device>
  <name>pci_0000_04_00_0</name>
  <path>/sys/devices/pci0000:00/0000:00:07.0/0000:04:00.0</path>
  <parent>pci_0000_00_07_0</parent>
  <driver>
    <name>radeon</name>
  </driver>
  <capability type='pci'>
    <class>0x030000</class>
    <domain>0</domain>
    <bus>4</bus>
    <slot>0</slot>
    <function>0</function>
    <product id='0x675b'>Turks [Radeon HD 7600 Series]</product>
    <vendor id='0x1002'>Advanced Micro Devices, Inc. [AMD/ATI]</vendor>
    <iommuGroup number='14'>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </iommuGroup>
    <pci-express>
      <link validity='cap' port='0' speed='5' width='16'/>
      <link validity='sta' speed='5' width='16'/>
    </pci-express>
  </capability>
</device>
[root@Hyman_server1 ~]# virsh nodedev-dumpxml pci_0000_04_00_1
<device>
  <name>pci_0000_04_00_1</name>
  <path>/sys/devices/pci0000:00/0000:00:07.0/0000:04:00.1</path>
  <parent>pci_0000_00_07_0</parent>
  <driver>
    <name>snd_hda_intel</name>
  </driver>
  <capability type='pci'>
    <class>0x040300</class>
    <domain>0</domain>
    <bus>4</bus>
    <slot>0</slot>
    <function>1</function>
    <product id='0xaa90'>Turks HDMI Audio [Radeon HD 6500/6600 / 6700M Series]</product>
    <vendor id='0x1002'>Advanced Micro Devices, Inc. [AMD/ATI]</vendor>
    <iommuGroup number='14'>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </iommuGroup>
    <pci-express>
      <link validity='cap' port='0' speed='5' width='16'/>
      <link validity='sta' speed='5' width='16'/>
    </pci-express>
  </capability>
</device>
```

Detach both devices from their host drivers:

```
[root@Hyman_server1 ~]# virsh nodedev-detach pci_0000_04_00_0
Device pci_0000_04_00_0 detached
[root@Hyman_server1 ~]# virsh nodedev-detach pci_0000_04_00_1
Device pci_0000_04_00_1 detached
```

Dumping the devices again shows that both are now bound to vfio-pci:

```
[root@Hyman_server1 ~]# virsh nodedev-dumpxml pci_0000_04_00_0
<device>
  <name>pci_0000_04_00_0</name>
  <path>/sys/devices/pci0000:00/0000:00:07.0/0000:04:00.0</path>
  <parent>pci_0000_00_07_0</parent>
  <driver>
    <name>vfio-pci</name>
  </driver>
  <capability type='pci'>
    <class>0x030000</class>
    <domain>0</domain>
    <bus>4</bus>
    <slot>0</slot>
    <function>0</function>
    <product id='0x675b'>Turks [Radeon HD 7600 Series]</product>
    <vendor id='0x1002'>Advanced Micro Devices, Inc. [AMD/ATI]</vendor>
    <iommuGroup number='14'>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </iommuGroup>
    <pci-express>
      <link validity='cap' port='0' speed='5' width='16'/>
      <link validity='sta' speed='5' width='16'/>
    </pci-express>
  </capability>
</device>
[root@Hyman_server1 ~]# virsh nodedev-dumpxml pci_0000_04_00_1
<device>
  <name>pci_0000_04_00_1</name>
  <path>/sys/devices/pci0000:00/0000:00:07.0/0000:04:00.1</path>
  <parent>pci_0000_00_07_0</parent>
  <driver>
    <name>vfio-pci</name>
  </driver>
  <capability type='pci'>
    <class>0x040300</class>
    <domain>0</domain>
    <bus>4</bus>
    <slot>0</slot>
    <function>1</function>
    <product id='0xaa90'>Turks HDMI Audio [Radeon HD 6500/6600 / 6700M Series]</product>
    <vendor id='0x1002'>Advanced Micro Devices, Inc. [AMD/ATI]</vendor>
    <iommuGroup number='14'>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
      <address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>
    </iommuGroup>
    <pci-express>
      <link validity='cap' port='0' speed='5' width='16'/>
      <link validity='sta' speed='5' width='16'/>
    </pci-express>
  </capability>
</device>
```
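`virsh nodedev-detach` is a libvirt convenience. Without libvirt, a similar rebinding can be done through the kernel's sysfs `driver_override` attribute. This is an alternative technique, not what this article used: write `vfio-pci` to the device's `driver_override`, unbind it from its current driver, then ask the PCI core to reprobe it. The sketch assumes root privileges and a loaded vfio-pci module, and the helper names `sysfs_write`, `pci_attr_path` and `bind_to_vfio_pci` are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: write a string to a sysfs attribute. */
int sysfs_write(const char *path, const char *value)
{
    FILE *f = fopen(path, "w");
    int ret = 0;

    if (!f)
        return -1;
    if (fputs(value, f) == EOF)
        ret = -1;
    if (fclose(f) == EOF)       /* buffered write errors surface at fclose() */
        ret = -1;
    return ret;
}

/* Hypothetical helper: build /sys/bus/pci/devices/<bdf>/<attr>. */
void pci_attr_path(char *out, size_t len, const char *bdf, const char *attr)
{
    snprintf(out, len, "/sys/bus/pci/devices/%s/%s", bdf, attr);
}

/* Rebind one PCI function (e.g. "0000:04:00.0") to vfio-pci. */
int bind_to_vfio_pci(const char *bdf)
{
    char path[256];

    pci_attr_path(path, sizeof(path), bdf, "driver_override");
    if (sysfs_write(path, "vfio-pci"))
        return -1;
    /* Unbind from the current driver; this fails harmlessly if unbound. */
    pci_attr_path(path, sizeof(path), bdf, "driver/unbind");
    sysfs_write(path, bdf);
    /* Reprobe: with driver_override set, only vfio-pci can claim it. */
    return sysfs_write("/sys/bus/pci/drivers_probe", bdf);
}
```

Since the group is the unit of isolation, this would have to be called for both `0000:04:00.0` and `0000:04:00.1`.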

Write a user-space program that accesses the vfio-pci device. The demo below is based on the example in the kernel's VFIO documentation:

```c
#include <stdio.h>
#include <stdint.h>
#include <errno.h>
#include <string.h>
#include <fcntl.h>
#include <unistd.h>
#include <sys/ioctl.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <sys/mman.h>
#include <linux/vfio.h>

#define DMA_SIZE (1024 * 1024)   /* 1 MiB DMA buffer */

int main(void)
{
    int container, group, device, i, ret;
    void *buf;
    struct vfio_group_status group_status = { .argsz = sizeof(group_status) };
    struct vfio_iommu_type1_info iommu_info = { .argsz = sizeof(iommu_info) };
    struct vfio_iommu_type1_dma_map dma_map = { .argsz = sizeof(dma_map) };
    struct vfio_device_info device_info = { .argsz = sizeof(device_info) };

    /* Create a new container */
    container = open("/dev/vfio/vfio", O_RDWR);
    if (container < 0) {
        fprintf(stderr, "cannot open /dev/vfio/vfio: %s\n", strerror(errno));
        return 1;
    }

    if (ioctl(container, VFIO_GET_API_VERSION) != VFIO_API_VERSION) {
        /* Unknown API version */
        fprintf(stderr, "unknown api version\n");
        return 1;
    }

    if (!ioctl(container, VFIO_CHECK_EXTENSION, VFIO_TYPE1_IOMMU)) {
        /* Doesn't support the IOMMU driver we want. */
        fprintf(stderr, "doesn't support the IOMMU driver we want\n");
        return 1;
    }

    /* Open the group and get the group fd. The group number (14) comes from
     * readlink /sys/bus/pci/devices/0000\:04\:00.0/iommu_group
     */
    group = open("/dev/vfio/14", O_RDWR);
    if (group < 0) {
        /* open() returns -1 and sets errno; it never returns -ENOENT */
        if (errno == ENOENT)
            fprintf(stderr, "group is not managed by VFIO driver\n");
        else
            fprintf(stderr, "cannot open group: %s\n", strerror(errno));
        return 1;
    }

    /* Test the group is viable and available */
    ret = ioctl(group, VFIO_GROUP_GET_STATUS, &group_status);
    if (ret) {
        fprintf(stderr, "cannot get VFIO group status, "
                "error %i (%s)\n", errno, strerror(errno));
        close(group);
        return 1;
    } else if (!(group_status.flags & VFIO_GROUP_FLAGS_VIABLE)) {
        fprintf(stderr, "VFIO group is not viable! "
                "Not all devices in IOMMU group bound to VFIO or unbound\n");
        close(group);
        return 1;
    }

    if (!(group_status.flags & VFIO_GROUP_FLAGS_CONTAINER_SET)) {
        /* Add the group to the container */
        ret = ioctl(group, VFIO_GROUP_SET_CONTAINER, &container);
        if (ret) {
            fprintf(stderr, "cannot add VFIO group to container, error "
                    "%i (%s)\n", errno, strerror(errno));
            close(group);
            return 1;
        }

        /* Enable the IOMMU model we want */
        ret = ioctl(container, VFIO_SET_IOMMU, VFIO_TYPE1_IOMMU);
        if (!ret) {
            fprintf(stderr, "using IOMMU type 1\n");
        } else {
            fprintf(stderr, "failed to select IOMMU type\n");
            close(group);
            return 1;
        }
    }

    /* Get additional IOMMU info (e.g. supported IOVA page sizes) */
    ioctl(container, VFIO_IOMMU_GET_INFO, &iommu_info);

    /* Allocate some space and set up a DMA mapping */
    buf = mmap(NULL, DMA_SIZE, PROT_READ | PROT_WRITE,
               MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (buf == MAP_FAILED) {
        perror("mmap");
        close(group);
        return 1;
    }
    dma_map.vaddr = (uintptr_t)buf;   /* vaddr is a __u64, not a pointer */
    dma_map.size = DMA_SIZE;
    dma_map.iova = 0; /* 1MB starting at 0x0 from device view */
    dma_map.flags = VFIO_DMA_MAP_FLAG_READ | VFIO_DMA_MAP_FLAG_WRITE;

    if (ioctl(container, VFIO_IOMMU_MAP_DMA, &dma_map))
        fprintf(stderr, "VFIO_IOMMU_MAP_DMA failed: %s\n", strerror(errno));

    /* Get a file descriptor for the device */
    device = ioctl(group, VFIO_GROUP_GET_DEVICE_FD, "0000:04:00.0");
    if (device < 0) {
        fprintf(stderr, "cannot get device fd: %s\n", strerror(errno));
        close(group);
        return 1;
    }

    /* Test and set up the device */
    ioctl(device, VFIO_DEVICE_GET_INFO, &device_info);

    for (i = 0; i < (int)device_info.num_regions; i++) {
        struct vfio_region_info reg = { .argsz = sizeof(reg) };

        reg.index = i;
        if (ioctl(device, VFIO_DEVICE_GET_REGION_INFO, &reg))
            continue;
        /* Set up mappings... read/write offsets, mmaps.
         * For PCI devices, config space is a region */
        printf("region %d: size 0x%llx offset 0x%llx flags 0x%x\n", i,
               (unsigned long long)reg.size,
               (unsigned long long)reg.offset, reg.flags);
    }

    for (i = 0; i < (int)device_info.num_irqs; i++) {
        struct vfio_irq_info irq = { .argsz = sizeof(irq) };

        irq.index = i;
        if (ioctl(device, VFIO_DEVICE_GET_IRQ_INFO, &irq))
            continue;
        /* Set up IRQs... eventfds, VFIO_DEVICE_SET_IRQS */
        printf("irq index %d: count %u flags 0x%x\n", i, irq.count, irq.flags);
    }

    /* Reset the device only if the kernel reports it as resettable */
    if (device_info.flags & VFIO_DEVICE_FLAGS_RESET)
        ioctl(device, VFIO_DEVICE_RESET);

    close(device);
    close(group);
    close(container);
    return 0;
}
```

Compile and test:

```
make
gdb demo
b main
r
Breakpoint 1, main (argc=1, argv=0x7fffffffe478) at demo.c:14
14 struct vfio_group_status group_status =
(gdb) bt
#0 main (argc=1, argv=0x7fffffffe478) at demo.c:14
(gdb) list
9 #include <linux/vfio.h>
10 #include <fcntl.h>
11
12 void main (int argc, char *argv[]) {
13 int container, group, device, i;
14 struct vfio_group_status group_status =
15 { .argsz = sizeof(group_status) };
16 struct vfio_iommu_type1_info iommu_info = { .argsz = sizeof(iommu_info) };
17 struct vfio_iommu_type1_dma_map dma_map = { .argsz = sizeof(dma_map) };
18 struct vfio_device_info device_info = { .argsz = sizeof(device_info) };
(gdb) n
16 struct vfio_iommu_type1_info iommu_info = { .argsz = sizeof(iommu_info) };
(gdb) n
17 struct vfio_iommu_type1_dma_map dma_map = { .argsz = sizeof(dma_map) };
(gdb) n
18 struct vfio_device_info device_info = { .argsz = sizeof(device_info) };
(gdb) n
22 container = open("/dev/vfio/vfio", O_RDWR);
(gdb) n
24 if (ioctl(container, VFIO_GET_API_VERSION) != VFIO_API_VERSION) {
(gdb) n
30 if (!ioctl(container, VFIO_CHECK_EXTENSION, VFIO_TYPE1_IOMMU)) {
(gdb) n
39 group = open("/dev/vfio/14", O_RDWR);
(gdb) n
41 if (group == -ENOENT) {
(gdb) p group
$1 = 8
(gdb) n
47 ret = ioctl(group, VFIO_GROUP_GET_STATUS, &group_status);
(gdb) n
48 if (ret) {
(gdb) p ret
$2 = 0
(gdb) p group_status
$3 = {argsz = 8, flags = 1}
(gdb) n
53 } else if (!(group_status.flags & VFIO_GROUP_FLAGS_VIABLE)) {
(gdb) n
60 if (!(group_status.flags & VFIO_GROUP_FLAGS_CONTAINER_SET)) {
(gdb) n
62 ret = ioctl(group, VFIO_GROUP_SET_CONTAINER, &container);
(gdb) p container
$4 = 7
(gdb) n
63 if (ret) {
(gdb) p ret
$5 = 0
(gdb) n
72 ret = ioctl(container, VFIO_SET_IOMMU, VFIO_TYPE1_IOMMU);
(gdb) n
73 if (!ret) {
(gdb) n
74 fprintf(stderr, "using IOMMU type 1\n");
(gdb) n
using IOMMU type 1
82 ioctl(container, VFIO_IOMMU_GET_INFO, &iommu_info);
(gdb) n
85 dma_map.vaddr = mmap(0, 1024 * 1024, PROT_READ | PROT_WRITE,
(gdb) p iommu_info
$6 = {argsz = 116, flags = 3, iova_pgsizes = 4096, cap_offset = 0}
(gdb) n
87 dma_map.size = 1024 * 1024;
(gdb) n
88 dma_map.iova = 0; /* 1MB starting at 0x0 from device view */
(gdb) n
89 dma_map.flags = VFIO_DMA_MAP_FLAG_READ | VFIO_DMA_MAP_FLAG_WRITE;
(gdb) n
91 ioctl(container, VFIO_IOMMU_MAP_DMA, &dma_map);
(gdb) n
94 device = ioctl(group, VFIO_GROUP_GET_DEVICE_FD, "0000:04:00.0");
(gdb) n
97 ioctl(device, VFIO_DEVICE_GET_INFO, &device_info);
(gdb) p device
$7 = 9
(gdb) n
99 for (i = 0; i < device_info.num_regions; i++) {
(gdb) p device_info
$8 = {argsz = 20, flags = 2, num_regions = 9, num_irqs = 5, cap_offset = 0}
```
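The values printed in the session can be decoded with the definitions in `<linux/vfio.h>`: `group_status.flags = 1` is `VFIO_GROUP_FLAGS_VIABLE` alone (viable, not yet in a container); `device_info.flags = 2` is `VFIO_DEVICE_FLAGS_PCI` without `VFIO_DEVICE_FLAGS_RESET`, so this card does not advertise a VFIO reset; `num_regions = 9` matches `VFIO_PCI_NUM_REGIONS` and `num_irqs = 5` matches `VFIO_PCI_NUM_IRQS`; and `iova_pgsizes = 4096` means only 4 KiB IOVA pages. A sketch that prints such a summary; `vfio_pci_irq_name` and `describe_vfio_info` are hypothetical helper names:

```c
#include <stdio.h>
#include <string.h>
#include <linux/vfio.h>

/* Hypothetical helper: name of a vfio-pci interrupt index. */
const char *vfio_pci_irq_name(unsigned int index)
{
    switch (index) {
    case VFIO_PCI_INTX_IRQ_INDEX: return "INTx";
    case VFIO_PCI_MSI_IRQ_INDEX:  return "MSI";
    case VFIO_PCI_MSIX_IRQ_INDEX: return "MSI-X";
    case VFIO_PCI_ERR_IRQ_INDEX:  return "ERR";
    case VFIO_PCI_REQ_IRQ_INDEX:  return "REQ";
    default:                      return "unknown";
    }
}

/* Hypothetical helper: summarize device_info and iommu_info values. */
void describe_vfio_info(const struct vfio_device_info *dev,
                        const struct vfio_iommu_type1_info *iommu)
{
    unsigned int i;

    printf("PCI device: %s, resettable: %s\n",
           (dev->flags & VFIO_DEVICE_FLAGS_PCI) ? "yes" : "no",
           (dev->flags & VFIO_DEVICE_FLAGS_RESET) ? "yes" : "no");
    printf("%u regions, %u irq indexes:", dev->num_regions, dev->num_irqs);
    for (i = 0; i < dev->num_irqs; i++)
        printf(" %s", vfio_pci_irq_name(i));
    printf("\n");
    if (iommu->flags & VFIO_IOMMU_INFO_PGSIZES)
        printf("IOVA page-size bitmap: 0x%llx\n",
               (unsigned long long)iommu->iova_pgsizes);
}
```

Fed the values from `$6` and `$8`, this would report a non-resettable PCI device with INTx, MSI, MSI-X, ERR and REQ interrupt indexes.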

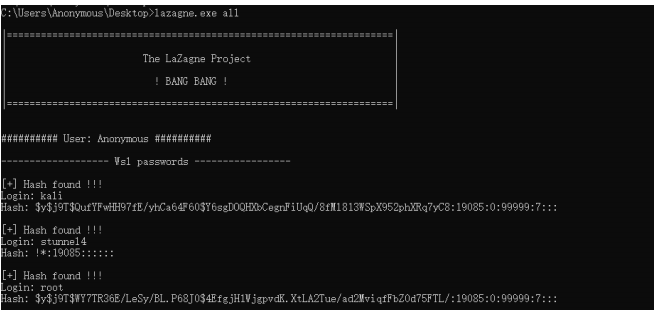

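The files opened by the demo program are shown in the screenshot above.

Does a device passed through with VFIO require IOMMU passthrough to be enabled on the host? The IOMMU itself must be enabled (for example with `intel_iommu=on` on Intel platforms): VFIO relies on it to confine the device's DMA, and without it the host has no IOMMU groups and the type1 backend cannot be used. Passthrough mode in the sense of the `iommu=pt` kernel parameter, however, is not required; it only gives identity mappings to devices that stay bound to host drivers. Once a group is attached to a VFIO container, the device's DMA mappings are no longer set up by a kernel driver but by the user-space program, which is how a user-space program such as QEMU can flexibly configure the guest's DMA space through VFIO_IOMMU_MAP_DMA.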
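The open-file check can also be done programmatically by reading the `/proc/<pid>/fd` symlinks, the same information `ls -l /proc/<pid>/fd` shows. For the demo, such a listing would be expected to show `/dev/vfio/vfio` for the container, `/dev/vfio/14` for the group and an anonymous inode named `[vfio-device]` for the device fd (fds 7, 8 and 9 in the gdb session). The function name `list_open_fds` is hypothetical.

```c
#include <dirent.h>
#include <stdio.h>
#include <unistd.h>
#include <sys/types.h>

#define PATH_BUF 4096

/* Hypothetical helper: print "fd -> target" for every open descriptor of
 * process pid. Returns the number of fds found, or -1 on error. The DIR
 * handle used for the scan shows up in the list when pid is our own. */
int list_open_fds(pid_t pid)
{
    char dir[64], link[PATH_BUF], target[PATH_BUF];
    struct dirent *de;
    DIR *d;
    int count = 0;

    snprintf(dir, sizeof(dir), "/proc/%d/fd", (int)pid);
    d = opendir(dir);
    if (!d)
        return -1;
    while ((de = readdir(d)) != NULL) {
        ssize_t n;

        if (de->d_name[0] == '.')
            continue;           /* skip "." and ".." */
        snprintf(link, sizeof(link), "%s/%s", dir, de->d_name);
        n = readlink(link, target, sizeof(target) - 1);
        if (n < 0)
            continue;           /* fd closed while scanning */
        target[n] = '\0';
        printf("%s -> %s\n", de->d_name, target);
        count++;
    }
    closedir(d);
    return count;
}
```

Calling `list_open_fds(getpid())` just before the demo returns would print its own descriptors; another process can be inspected by passing its pid, given sufficient privileges.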