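In a distributed environment, logs are usually collected centrally, but under high concurrency it is hard to pinpoint a problem: with so many interleaved log lines, there is no way to tell which ones belong to the same request chain.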

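One solution is to assign a traceId to every request and include it in every log line. Starting at the gateway, each service passes the traceId on in a request header, and the traceId is printed through the MDC (the SLF4J MDC, which log4j2 backs with its ThreadContext).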

1. Add the traceId filter

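TRACE_ID_HEADER and LOG_TRACE_ID are keys agreed upon by the upstream and downstream services. LOG_TRACE_ID must match the key used in the log pattern, which is traceId in the %X{traceId} pattern of step 4.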

@Slf4j

public class LogTraceIdFilter extends OncePerRequestFilter implements Constants {

@Override

protected void doFilterInternal(HttpServletRequest request, HttpServletResponse response, FilterChain chain) throws ServletException, IOException {

String traceId = request.getHeader(TRACE_ID_HEADER);

if (StringUtils.isBlank(traceId)) {

traceId = UUID.randomUUID().toString();

}

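MDC.put(LOG_TRACE_ID, traceId);

log.debug("Set traceId: {}", traceId);

try {

chain.doFilter(request, response);

} finally {

// Remove the traceId so it does not leak into the next request handled by this pooled thread

MDC.remove(LOG_TRACE_ID);

}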

}

}
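The core decision in the filter, reusing the upstream ID or minting a new one, can be isolated from the servlet API. The following is a minimal, dependency-free sketch of that decision only; the class name `TraceIds` and the method `resolve` are hypothetical and not part of the original project.

```java
import java.util.UUID;

// Hypothetical helper for illustration; not part of the original project.
final class TraceIds {

    private TraceIds() {
    }

    /** Returns the incoming traceId if it is non-blank, otherwise a freshly generated UUID. */
    static String resolve(String incomingHeaderValue) {
        if (incomingHeaderValue == null || incomingHeaderValue.trim().isEmpty()) {
            return UUID.randomUUID().toString();
        }
        return incomingHeaderValue;
    }

    public static void main(String[] args) {
        System.out.println(resolve("abc-123")); // reuses the upstream value
        System.out.println(resolve(null));      // prints a newly generated UUID
    }
}
```

Keeping this logic in one place means the gateway and every downstream service apply the same rule, so an ID is generated only once per request chain.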

2. Register the traceId filter

@AutoConfiguration

@ConditionalOnClass(LogTraceIdFilter.class)

@Slf4j

public class LogTraceIdAutoConfiguration {

@Bean

public LogTraceIdFilter logTraceIdFilter() {

return new LogTraceIdFilter();

}

@Bean

public FilterRegistrationBean<LogTraceIdFilter> traceIdFilterRegistrationBean() {

log.info("----- Registering the LogTraceId filter -----");

FilterRegistrationBean<LogTraceIdFilter> frb = new FilterRegistrationBean<>();

frb.setOrder(Ordered.HIGHEST_PRECEDENCE);

frb.setFilter(logTraceIdFilter());

frb.addUrlPatterns("/*");

frb.setName("logTraceIdFilter");

log.info("----- LogTraceId filter registered -----");

return frb;

}

}

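Write the fully qualified name of LogTraceIdAutoConfiguration into resources/META-INF/spring/org.springframework.boot.autoconfigure.AutoConfiguration.imports. For older Spring Boot versions (before 2.7), register it in resources/META-INF/spring.factories under the org.springframework.boot.autoconfigure.EnableAutoConfiguration key instead.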

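3. Pass the traceId when calling other services

When calling another service, write the current traceId into the TRACE_ID_HEADER request header. With OpenFeign this can be handled in one place, for example in a request interceptor; the details are omitted here.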

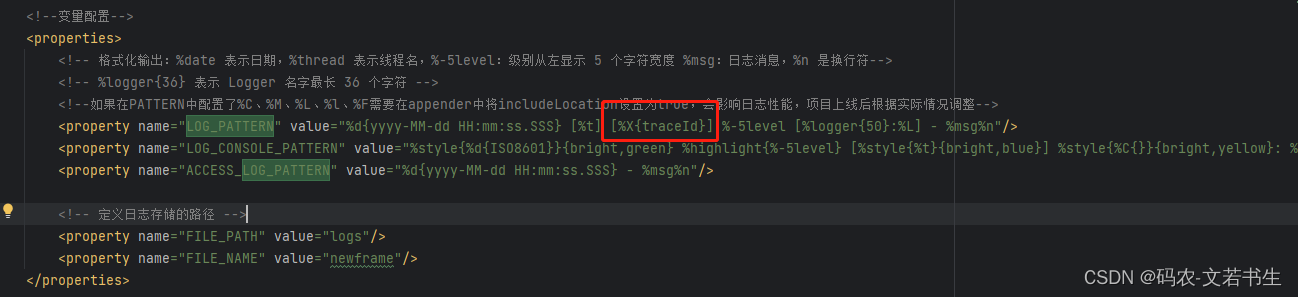
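As a rough illustration of the propagation step without any framework, the sketch below attaches a traceId to an outgoing request built with the JDK 11+ `java.net.http` client. The header name `X-Trace-Id`, the class name and the URL are assumptions for the example; the real header name is whatever TRACE_ID_HEADER is agreed to be, and in the real service the traceId would be read from the MDC rather than passed in.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper for illustration; not part of the original project.
final class TraceHeaderPropagation {

    // Assumed header name; use the value agreed on for TRACE_ID_HEADER.
    static final String TRACE_ID_HEADER = "X-Trace-Id";

    private TraceHeaderPropagation() {
    }

    /** Builds a GET request that carries the given traceId in the trace header. */
    static HttpRequest withTraceId(URI uri, String traceId) {
        return HttpRequest.newBuilder(uri)
                .header(TRACE_ID_HEADER, traceId)
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = withTraceId(URI.create("http://localhost:8080/test/log"), "abc-123");
        // Print the header that the downstream filter would read
        System.out.println(request.headers().firstValue(TRACE_ID_HEADER).orElse("<missing>"));
    }
}
```

The downstream LogTraceIdFilter then finds the header, skips UUID generation, and logs under the same traceId.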

4. Add traceId printing to the log4j2 configuration file

The complete configuration file is as follows:

<?xml version="1.0" encoding="UTF-8"?>

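<!-- The status attribute of Configuration sets the level of log4j2's own internal logging. It is optional; when set to trace, log4j2 prints detailed internal output. -->

<!-- monitorInterval: log4j2 can detect changes to the configuration file and reconfigure itself; this attribute sets the check interval in seconds. -->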

<configuration status="error" monitorInterval="30">

<!-- Log levels in priority order: OFF > FATAL > ERROR > WARN > INFO > DEBUG > TRACE > ALL -->

<!-- Variables -->

<properties>

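<!-- Output format: %d is the date, %t the thread name, %X{traceId} the traceId stored in the MDC, %-5level the level left-aligned to a width of 5 characters, %msg the log message, and %n the line separator -->

<!-- %logger{50} prints at most the 50 rightmost components of the logger name (in log4j2 an integer precision counts name components, not characters) -->

<!-- If the pattern uses %C, %M, %L, %l or %F, includeLocation must be set to true on the async loggers (or on the Async appender). Location lookup hurts logging performance, so adjust this for production as needed. -->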

<property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} [%t] [%X{traceId}] %-5level [%logger{50}:%L] - %msg%n"/>

<property name="LOG_CONSOLE_PATTERN" value="%style{%d{ISO8601}}{bright,green} %highlight{%-5level} [%style{%t}{bright,blue}] %style{%C{}}{bright,yellow}: %msg%n%style{%throwable}{red}"/>

<property name="ACCESS_LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} - %msg%n"/>

<!-- Directory where log files are stored -->

<property name="FILE_PATH" value="logs"/>

<property name="FILE_NAME" value="newframe"/>

</properties>

<Appenders>

<!-- Console log -->

<Console name="consoleAppender" target="SYSTEM_OUT">

<!-- Log format (LOG_PATTERN has no color codes; LOG_CONSOLE_PATTERN above is the colored variant) -->

<PatternLayout pattern="${LOG_PATTERN}" charset="UTF-8"/>

</Console>

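<!-- INFO-level log -->

<!-- Receives INFO events only: the filters below deny WARN and above. When the date changes or the file exceeds the configured size, the file is rolled over into the info/ folder and gzip-compressed as an archive. -->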

<RollingFile name="infoFileAppender"

fileName="${FILE_PATH}/${FILE_NAME}/log_info.log"

filePattern="${FILE_PATH}/${FILE_NAME}/info/log-info-%d{yyyy-MM-dd}_%i.log.gz"

append="true">

<!-- Log format -->

<PatternLayout pattern="${LOG_PATTERN}" charset="UTF-8"/>

<Filters>

<!-- Deny WARN and higher levels -->

<ThresholdFilter level="warn" onMatch="DENY" onMismatch="NEUTRAL" />

<ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

<Policies>

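<!-- Time-based triggering policy: rolls the log file over periodically. It has two parameters:

interval (integer): the interval between two rollovers. The unit is the finest time unit in the filePattern date format,

e.g. yyyy-MM-dd-HH means hours and yyyy-MM-dd-HH-mm means minutes.

modulate (boolean): whether to align rollover times to the interval boundary. With modulate=true,

rollover times are computed as offsets from 00:00. For example, with modulate=true and an interval of 4 hours,

if the last rollover happened at 00:00, the next one happens at 04:00,

followed by 08:00, 12:00, 16:00 and so on. -->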

<TimeBasedTriggeringPolicy interval="1"/>

<SizeBasedTriggeringPolicy size="10MB"/>

</Policies>

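<!-- If max is not set on DefaultRolloverStrategy, it defaults to 7: at most 7 files per day are kept in the same folder before older ones are overwritten -->

<DefaultRolloverStrategy max="30">

<!-- Delete action: searches under basePath; maxDepth is the maximum directory depth to descend -->

<Delete basePath="${FILE_PATH}/${FILE_NAME}/" maxDepth="2">

<!-- File name match; this attribute takes a glob (use the regex attribute for a regular expression) -->

<IfFileName glob="*.log.gz"/>

<!-- Note: age must be consistent with filePattern. The pattern is precise to the day (dd), so write the age as xd; xD reportedly has no effect.

A value greater than 2 is also advisable; otherwise the most recent file may still be in use when the delete runs, and the delete fails. -->

<!-- 7 days -->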

<IfLastModified age="7d"/>

</Delete>

</DefaultRolloverStrategy>

</RollingFile>

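<!-- WARN-level log -->

<!-- Receives WARN events only: the filters below deny ERROR and above. When the date changes or the file exceeds the configured size, the file is rolled over into the warn/ folder and gzip-compressed as an archive. -->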

<RollingFile name="warnFileAppender"

fileName="${FILE_PATH}/${FILE_NAME}/log_warn.log"

filePattern="${FILE_PATH}/${FILE_NAME}/warn/log-warn-%d{yyyy-MM-dd}_%i.log.gz"

append="true">

<!-- Log format -->

<PatternLayout pattern="${LOG_PATTERN}" charset="UTF-8"/>

<Filters>

<!-- Deny ERROR and higher levels -->

<ThresholdFilter level="error" onMatch="DENY" onMismatch="NEUTRAL" />

<ThresholdFilter level="warn" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

<Policies>

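<!-- Time-based triggering policy: rolls the log file over periodically. It has two parameters:

interval (integer): the interval between two rollovers. The unit is the finest time unit in the filePattern date format,

e.g. yyyy-MM-dd-HH means hours and yyyy-MM-dd-HH-mm means minutes.

modulate (boolean): whether to align rollover times to the interval boundary. With modulate=true,

rollover times are computed as offsets from 00:00. For example, with modulate=true and an interval of 4 hours,

if the last rollover happened at 00:00, the next one happens at 04:00,

followed by 08:00, 12:00, 16:00 and so on. -->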

<TimeBasedTriggeringPolicy interval="1"/>

<SizeBasedTriggeringPolicy size="10MB"/>

</Policies>

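<!-- If max is not set on DefaultRolloverStrategy, it defaults to 7: at most 7 files per day are kept in the same folder before older ones are overwritten -->

<DefaultRolloverStrategy max="30">

<!-- Delete action: searches under basePath; maxDepth is the maximum directory depth to descend -->

<Delete basePath="${FILE_PATH}/${FILE_NAME}/" maxDepth="2">

<!-- File name match; this attribute takes a glob (use the regex attribute for a regular expression) -->

<IfFileName glob="*.log.gz"/>

<!-- Note: age must be consistent with filePattern. The pattern is precise to the day (dd), so write the age as xd; xD reportedly has no effect.

A value greater than 2 is also advisable; otherwise the most recent file may still be in use when the delete runs, and the delete fails. -->

<!-- 7 days -->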

<IfLastModified age="7d"/>

</Delete>

</DefaultRolloverStrategy>

</RollingFile>

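<!-- ERROR-level log -->

<!-- Receives events at ERROR level and above. When the date changes or the file exceeds the configured size, the file is rolled over into the error/ folder and gzip-compressed as an archive. -->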

<RollingFile name="errorFileAppender"

fileName="${FILE_PATH}/${FILE_NAME}/log_error.log"

filePattern="${FILE_PATH}/${FILE_NAME}/error/log-error-%d{yyyy-MM-dd}_%i.log.gz"

append="true">

<!-- Log format -->

<PatternLayout pattern="${LOG_PATTERN}" charset="UTF-8"/>

<Filters>

<ThresholdFilter level="error" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

<Policies>

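<!-- Time-based triggering policy: rolls the log file over periodically. It has two parameters:

interval (integer): the interval between two rollovers. The unit is the finest time unit in the filePattern date format,

e.g. yyyy-MM-dd-HH means hours and yyyy-MM-dd-HH-mm means minutes.

modulate (boolean): whether to align rollover times to the interval boundary. With modulate=true,

rollover times are computed as offsets from 00:00. For example, with modulate=true and an interval of 4 hours,

if the last rollover happened at 00:00, the next one happens at 04:00,

followed by 08:00, 12:00, 16:00 and so on. -->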

<TimeBasedTriggeringPolicy interval="1"/>

<SizeBasedTriggeringPolicy size="10MB"/>

</Policies>

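<!-- If max is not set on DefaultRolloverStrategy, it defaults to 7: at most 7 files per day are kept in the same folder before older ones are overwritten -->

<DefaultRolloverStrategy max="30">

<!-- Delete action: searches under basePath; maxDepth is the maximum directory depth to descend -->

<Delete basePath="${FILE_PATH}/${FILE_NAME}/" maxDepth="2">

<!-- File name match; this attribute takes a glob (use the regex attribute for a regular expression) -->

<IfFileName glob="*.log.gz"/>

<!-- Note: age must be consistent with filePattern. The pattern is precise to the day (dd), so write the age as xd; xD reportedly has no effect.

A value greater than 2 is also advisable; otherwise the most recent file may still be in use when the delete runs, and the delete fails. -->

<!-- 7 days -->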

<IfLastModified age="7d"/>

</Delete>

</DefaultRolloverStrategy>

</RollingFile>

<!-- Access log -->

<RollingFile name="accessAppender"

fileName="${FILE_PATH}/${FILE_NAME}/log_access.log"

filePattern="${FILE_PATH}/${FILE_NAME}/access/log-access-%d{yyyy-MM-dd}_%i.log.gz"

append="true">

<!-- Log format -->

<PatternLayout pattern="${ACCESS_LOG_PATTERN}" charset="UTF-8"/>

<Filters>

<ThresholdFilter level="debug" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

<Policies>

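<!-- Time-based triggering policy: rolls the log file over periodically. It has two parameters:

interval (integer): the interval between two rollovers. The unit is the finest time unit in the filePattern date format,

e.g. yyyy-MM-dd-HH means hours and yyyy-MM-dd-HH-mm means minutes.

modulate (boolean): whether to align rollover times to the interval boundary. With modulate=true,

rollover times are computed as offsets from 00:00. For example, with modulate=true and an interval of 4 hours,

if the last rollover happened at 00:00, the next one happens at 04:00,

followed by 08:00, 12:00, 16:00 and so on. -->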

<TimeBasedTriggeringPolicy interval="1"/>

<SizeBasedTriggeringPolicy size="10MB"/>

</Policies>

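<!-- If max is not set on DefaultRolloverStrategy, it defaults to 7: at most 7 files per day are kept in the same folder before older ones are overwritten -->

<DefaultRolloverStrategy max="30">

<!-- Delete action: searches under basePath; maxDepth is the maximum directory depth to descend -->

<Delete basePath="${FILE_PATH}/${FILE_NAME}/" maxDepth="2">

<!-- File name match; this attribute takes a glob (use the regex attribute for a regular expression) -->

<IfFileName glob="*.log.gz"/>

<!-- Note: age must be consistent with filePattern. The pattern is precise to the day (dd), so write the age as xd; xD reportedly has no effect.

A value greater than 2 is also advisable; otherwise the most recent file may still be in use when the delete runs, and the delete fails. -->

<!-- 7 days -->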

<IfLastModified age="7d"/>

</Delete>

</DefaultRolloverStrategy>

</RollingFile>

<!--<Async name="Async" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</Async>-->

</Appenders>

<Loggers>

<AsyncLogger name="org.apache.http" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="io.lettuce" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="io.netty" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.quartz" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.springframework" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.springdoc" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="druid.sql" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="io.undertow" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="sun.rmi" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.sun.mail" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="javax.management" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="de.codecentric" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.hibernate.validator" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.mybatis.spring.mapper" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.xnio" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="springfox" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.baomidou" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="io.micrometer.core" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="Validator" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.neo4j" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.apache.zookeeper" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.apache.curator" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="oshi.util" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="net.javacrumbs" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.atomikos" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.springframework.boot.actuate.mail.MailHealthIndicator" level="error" includeLocation="true" additivity="false">

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.flowable" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.apache.ibatis.transaction" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.iscas.base.biz.schedule" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.sun.jna.Native" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="io.swagger" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.sun.jna.NativeLibrary" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.alibaba.druid.pool.PreparedStatementPool" level="info" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="com.iscas.biz.config.log.AccessLogInterceptor" level="debug" includeLocation="true" additivity="false">

<AppenderRef ref="accessAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncLogger name="org.apache.http.impl.execchain.RetryExec" level="error" includeLocation="true" additivity="false">

<AppenderRef ref="errorFileAppender"/>

</AsyncLogger>

<AsyncLogger name="accessLogger" level="debug" includeLocation="true" additivity="false">

<AppenderRef ref="accessAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncLogger>

<AsyncRoot level="debug" includeLocation="true" additivity="false">

<AppenderRef ref="infoFileAppender"/>

<AppenderRef ref="warnFileAppender"/>

<AppenderRef ref="errorFileAppender"/>

<AppenderRef ref="consoleAppender"/>

</AsyncRoot>

</Loggers>

</configuration>

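5. Add the Alibaba transmittable-thread-local dependency

A traceId stored in a thread-local is not visible in child or pooled threads, so log lines written there lack the traceId. To solve this, add the transmittable-thread-local (TTL) dependency.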

gradle:

// https://mvnrepository.com/artifact/com.alibaba/transmittable-thread-local

implementation group: 'com.alibaba', name: 'transmittable-thread-local', version: '2.14.2'

maven:

<!-- https://mvnrepository.com/artifact/com.alibaba/transmittable-thread-local -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>transmittable-thread-local</artifactId>

<version>2.14.2</version>

</dependency>
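The problem that TTL addresses can be reproduced with the JDK alone. The sketch below is only a demonstration of the symptom, not of TTL itself; the class and method names are hypothetical. A value stored in a plain `ThreadLocal` on the calling thread is simply absent on a pool thread.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical demo class; not part of the original project.
final class ThreadLocalLossDemo {

    private static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    private ThreadLocalLossDemo() {
    }

    /** Sets the traceId on the calling thread and returns what a pool thread observes. */
    static String traceIdSeenByPoolThread(String traceId) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        TRACE_ID.set(traceId); // stored on the calling thread only
        try {
            Callable<String> readOnWorker = TRACE_ID::get; // executed on the pool thread
            return pool.submit(readOnWorker).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            TRACE_ID.remove();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(traceIdSeenByPoolThread("abc-123")); // prints "null"
    }
}
```

`InheritableThreadLocal` does not fix this for thread pools either, because a value is inherited only when a thread is created, and pooled threads are reused across requests.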

6. Customize ThreadContextMap

package org.slf4j;

import com.alibaba.ttl.TransmittableThreadLocal;

import org.apache.logging.log4j.spi.ThreadContextMap;

import java.util.Collections;

import java.util.HashMap;

import java.util.Map;

public class TtlThreadContextMap implements ThreadContextMap {

private final ThreadLocal<Map<String, String>> localMap;

public TtlThreadContextMap() {

this.localMap = new TransmittableThreadLocal<Map<String, String>>();

}

@Override

public void put(final String key, final String value) {

Map<String, String> map = localMap.get();

map = map == null ? new HashMap<String, String>() : new HashMap<String, String>(map);

map.put(key, value);

localMap.set(Collections.unmodifiableMap(map));

}

@Override

public String get(final String key) {

final Map<String, String> map = localMap.get();

return map == null ? null : map.get(key);

}

@Override

public void remove(final String key) {

final Map<String, String> map = localMap.get();

if (map != null) {

final Map<String, String> copy = new HashMap<String, String>(map);

copy.remove(key);

localMap.set(Collections.unmodifiableMap(copy));

}

}

@Override

public void clear() {

localMap.remove();

}

@Override

public boolean containsKey(final String key) {

final Map<String, String> map = localMap.get();

return map != null && map.containsKey(key);

}

@Override

public Map<String, String> getCopy() {

final Map<String, String> map = localMap.get();

return map == null ? new HashMap<String, String>() : new HashMap<String, String>(map);

}

@Override

public Map<String, String> getImmutableMapOrNull() {

return localMap.get();

}

@Override

public boolean isEmpty() {

final Map<String, String> map = localMap.get();

return map == null || map.size() == 0;

}

@Override

public String toString() {

final Map<String, String> map = localMap.get();

return map == null ? "{}" : map.toString();

}

@Override

public int hashCode() {

final int prime = 31;

int result = 1;

final Map<String, String> map = this.localMap.get();

result = prime * result + ((map == null) ? 0 : map.hashCode());

return result;

}

@Override

public boolean equals(final Object obj) {

if (this == obj) {

return true;

}

if (obj == null) {

return false;

}

if (!(obj instanceof TtlThreadContextMap)) {

return false;

}

final TtlThreadContextMap other = (TtlThreadContextMap) obj;

final Map<String, String> map = this.localMap.get();

final Map<String, String> otherMap = other.getImmutableMapOrNull();

if (map == null) {

if (otherMap != null) {

return false;

}

} else if (!map.equals(otherMap)) {

return false;

}

return true;

}

}
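Note that the class above never mutates a stored map: every put and remove copies the map and stores a new unmodifiable instance. A plausible reason, stated here as an assumption rather than as the author's documented intent, is that a reference handed over to another thread then behaves like a stable snapshot. The single-threaded sketch below shows the copy-on-write idea in isolation; `CopyOnWriteContext` is a hypothetical name.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of the copy-on-write pattern; not part of the original project.
final class CopyOnWriteContext {

    private Map<String, String> current = Collections.emptyMap();

    /** Replaces the current map with a modified, unmodifiable copy. */
    void put(String key, String value) {
        Map<String, String> copy = new HashMap<>(current);
        copy.put(key, value);
        current = Collections.unmodifiableMap(copy);
    }

    /** Returns the current immutable map; later puts never change it. */
    Map<String, String> snapshot() {
        return current;
    }

    public static void main(String[] args) {
        CopyOnWriteContext context = new CopyOnWriteContext();
        context.put("traceId", "abc-123");
        Map<String, String> captured = context.snapshot(); // what a child task would hold on to
        context.put("traceId", "def-456");                 // the parent moves on to another request
        System.out.println(captured.get("traceId"));           // prints "abc-123"
        System.out.println(context.snapshot().get("traceId")); // prints "def-456"
    }
}
```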

7. Register the ThreadContextMap

Add a log4j2.component.properties file under resources and configure the custom ThreadContextMap in it:

log4j2.threadContextMap=org.slf4j.TtlThreadContextMap

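8. Customize MDCAdapter

Note that the package name should preferably be org.slf4j; otherwise the package-private mdcAdapter field of MDC can only be accessed through reflection.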

package org.slf4j;

import com.alibaba.ttl.TransmittableThreadLocal;

import org.apache.logging.log4j.Logger;

import org.apache.logging.log4j.ThreadContext;

import org.apache.logging.log4j.status.StatusLogger;

import org.slf4j.spi.MDCAdapter;

import java.util.ArrayDeque;

import java.util.Deque;

import java.util.Map;

import java.util.Objects;

/**

* @author zhuquanwen

* @version 1.0

* @date 2023/2/12 11:46

*/

public class TtlMDCAdapter implements MDCAdapter {

private static final Logger LOGGER = StatusLogger.getLogger();

private final ThreadLocalMapOfStacks mapOfStacks = new ThreadLocalMapOfStacks();

private static TtlMDCAdapter mtcMDCAdapter;

static {

mtcMDCAdapter = new TtlMDCAdapter();

MDC.mdcAdapter = mtcMDCAdapter;

}

public static MDCAdapter getInstance() {

return mtcMDCAdapter;

}

@Override

public void put(final String key, final String val) {

ThreadContext.put(key, val);

}

@Override

public String get(final String key) {

return ThreadContext.get(key);

}

@Override

public void remove(final String key) {

ThreadContext.remove(key);

}

@Override

public void clear() {

ThreadContext.clearMap();

}

@Override

public Map<String, String> getCopyOfContextMap() {

return ThreadContext.getContext();

}

@Override

public void setContextMap(final Map<String, String> map) {

ThreadContext.clearMap();

ThreadContext.putAll(map);

}

@Override

public void pushByKey(String key, String value) {

if (key == null) {

ThreadContext.push(value);

} else {

final String oldValue = mapOfStacks.peekByKey(key);

if (!Objects.equals(ThreadContext.get(key), oldValue)) {

LOGGER.warn("The key {} was used in both the string and stack-valued MDC.", key);

}

mapOfStacks.pushByKey(key, value);

ThreadContext.put(key, value);

}

}

@Override

public String popByKey(String key) {

if (key == null) {

return ThreadContext.getDepth() > 0 ? ThreadContext.pop() : null;

}

final String value = mapOfStacks.popByKey(key);

if (!Objects.equals(ThreadContext.get(key), value)) {

LOGGER.warn("The key {} was used in both the string and stack-valued MDC.", key);

}

ThreadContext.put(key, mapOfStacks.peekByKey(key));

return value;

}

@Override

public Deque<String> getCopyOfDequeByKey(String key) {

if (key == null) {

final ThreadContext.ContextStack stack = ThreadContext.getImmutableStack();

final Deque<String> copy = new ArrayDeque<>(stack.size());

stack.forEach(copy::push);

return copy;

}

return mapOfStacks.getCopyOfDequeByKey(key);

}

@Override

public void clearDequeByKey(String key) {

if (key == null) {

ThreadContext.clearStack();

} else {

mapOfStacks.clearByKey(key);

ThreadContext.put(key, null);

}

}

private static class ThreadLocalMapOfStacks {

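// Start with an empty map: a bare TransmittableThreadLocal returns null from get(), and the methods below would throw a NullPointerException

private final ThreadLocal<Map<String, Deque<String>>> tlMapOfStacks = new TransmittableThreadLocal<Map<String, Deque<String>>>() {

@Override

protected Map<String, Deque<String>> initialValue() {

return new java.util.HashMap<>();

}

};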

public void pushByKey(String key, String value) {

tlMapOfStacks.get()

.computeIfAbsent(key, ignored -> new ArrayDeque<>())

.push(value);

}

public String popByKey(String key) {

final Deque<String> deque = tlMapOfStacks.get().get(key);

return deque != null ? deque.poll() : null;

}

public Deque<String> getCopyOfDequeByKey(String key) {

final Deque<String> deque = tlMapOfStacks.get().get(key);

return deque != null ? new ArrayDeque<>(deque) : null;

}

public void clearByKey(String key) {

final Deque<String> deque = tlMapOfStacks.get().get(key);

if (deque != null) {

deque.clear();

}

}

public String peekByKey(String key) {

final Deque<String> deque = tlMapOfStacks.get().get(key);

return deque != null ? deque.peek() : null;

}

}

}

9. Initialize MDCAdapter

public class TtlMDCAdapterInitializer implements ApplicationContextInitializer<ConfigurableApplicationContext> {

@Override

public void initialize(ConfigurableApplicationContext applicationContext) {

// Trigger class loading of TtlMDCAdapter so that its static initializer installs the adapter

TtlMDCAdapter.getInstance();

}

}

Register it in resources/META-INF/spring.factories:

org.springframework.context.ApplicationContextInitializer=\

com.iscas.base.biz.config.TtlMDCAdapterInitializer

10. Customize the thread pool

package com.iscas.base.biz.schedule;

import com.alibaba.ttl.TtlCallable;

import com.alibaba.ttl.TtlRunnable;

import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

import org.springframework.util.concurrent.ListenableFuture;

import java.util.concurrent.Callable;

import java.util.concurrent.Future;

/**

* @author zhuquanwen

* @version 1.0

* @date 2023/2/12 12:11

*/

public class CustomThreadPoolTaskExecutor extends ThreadPoolTaskExecutor {

@Override

public void execute(Runnable runnable) {

Runnable ttlRunnable = TtlRunnable.get(runnable);

super.execute(ttlRunnable);

}

@Override

public <T> Future<T> submit(Callable<T> task) {

Callable<T> ttlCallable = TtlCallable.get(task);

return super.submit(ttlCallable);

}

@Override

public Future<?> submit(Runnable task) {

Runnable ttlRunnable = TtlRunnable.get(task);

return super.submit(ttlRunnable);

}

@Override

public ListenableFuture<?> submitListenable(Runnable task) {

Runnable ttlRunnable = TtlRunnable.get(task);

return super.submitListenable(ttlRunnable);

}

@Override

public <T> ListenableFuture<T> submitListenable(Callable<T> task) {

Callable<T> ttlCallable = TtlCallable.get(task);

return super.submitListenable(ttlCallable);

}

}
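Conceptually, wrapping a task at submission time means: capture the caller's context when the task is handed over, install it on the worker thread before the task runs, and restore the worker's previous state afterwards. The JDK-only sketch below illustrates that idea for a single `ThreadLocal`. It is not how `TtlRunnable` is implemented (TTL handles all registered `TransmittableThreadLocal` values), and the names `ContextPropagation`, `wrap` and `runWrapped` are hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical illustration of capture-and-restore; not part of the original project.
final class ContextPropagation {

    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    private ContextPropagation() {
    }

    /** Captures the caller's traceId now and installs it around the task when it runs. */
    static Runnable wrap(Runnable task) {
        final String captured = TRACE_ID.get(); // evaluated on the submitting thread
        return () -> {
            final String previous = TRACE_ID.get(); // evaluated on the worker thread
            TRACE_ID.set(captured);
            try {
                task.run();
            } finally {
                // Restore the worker's earlier state so nothing leaks into the next task
                if (previous == null) {
                    TRACE_ID.remove();
                } else {
                    TRACE_ID.set(previous);
                }
            }
        };
    }

    /** Submits a wrapped task and returns the traceId it observed on the worker thread. */
    static String runWrapped(String traceId) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        AtomicReference<String> seen = new AtomicReference<>();
        TRACE_ID.set(traceId);
        try {
            pool.submit(wrap(() -> seen.set(TRACE_ID.get()))).get();
            return seen.get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            TRACE_ID.remove();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runWrapped("abc-123")); // prints "abc-123"
    }
}
```

Overriding `execute` and `submit` in the executor, as CustomThreadPoolTaskExecutor does, applies this wrapping in one place so that callers do not have to remember it.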

11. Register the thread pool

package com.iscas.biz.config;

import com.iscas.base.biz.schedule.CustomThreadPoolTaskExecutor;

import org.springframework.boot.autoconfigure.AutoConfiguration;

import org.springframework.context.annotation.Bean;

import org.springframework.scheduling.annotation.AsyncConfigurer;

import org.springframework.scheduling.annotation.EnableAsync;

import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

import java.util.concurrent.ThreadPoolExecutor;

/**

*

* @author zhuquanwen

* @version 1.0

* @date 2021/2/26 10:41

* @since jdk1.8

*/

@SuppressWarnings("unused")

@AutoConfiguration

@EnableAsync

public class AsyncConfig implements AsyncConfigurer, BizConstant {

private static final int ASYNC_KEEPALIVE_SECONDS = 60;

private static final int ASYNC_QUEUE_CAPACITY = 20000;

@Bean("asyncExecutor")

public ThreadPoolTaskExecutor taskExecutor() {

ThreadPoolTaskExecutor executor = new CustomThreadPoolTaskExecutor();

executor.setCorePoolSize(Runtime.getRuntime().availableProcessors());

executor.setMaxPoolSize(Runtime.getRuntime().availableProcessors() * 2);

executor.setQueueCapacity(ASYNC_QUEUE_CAPACITY);

executor.setKeepAliveSeconds(ASYNC_KEEPALIVE_SECONDS);

executor.setThreadNamePrefix(ASYNC_EXECUTOR_NAME_PREFIX);

executor.setRejectedExecutionHandler(new ThreadPoolExecutor.CallerRunsPolicy());

executor.initialize();

return executor;

}

}

12. Write an example

package com.iscas.base.biz.test.controller;

import com.iscas.base.biz.util.LogLevelUtils;

import com.iscas.base.biz.util.SpringUtils;

import com.iscas.templet.common.ResponseEntity;

import lombok.extern.slf4j.Slf4j;

import org.springframework.boot.logging.LogLevel;

import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

/**

* @author zhuquanwen

* @version 1.0

* @date 2023/2/11 17:51

*/

@RestController

@RequestMapping("/test/log")

@Slf4j

public class LogControllerTest {

@GetMapping

public ResponseEntity t1 () {

log.debug("test-debug");

return ResponseEntity.ok(null);

}

/**

* Adjust the log level

* */

@GetMapping("/t2")

public ResponseEntity t2 () {

LogLevelUtils.updateLevel("com.iscas.base.biz.test.controller", LogLevel.INFO);

return ResponseEntity.ok(null);

}

/**

* Verify that a child thread prints the traceId

* */

@GetMapping("/t3")

public ResponseEntity t3() {

ThreadPoolTaskExecutor threadPoolTaskExecutor = SpringUtils.getBean("asyncExecutor");

threadPoolTaskExecutor.execute(() -> {

log.info("Testing traceId output in a child thread");

});

return ResponseEntity.ok(null);

}

}

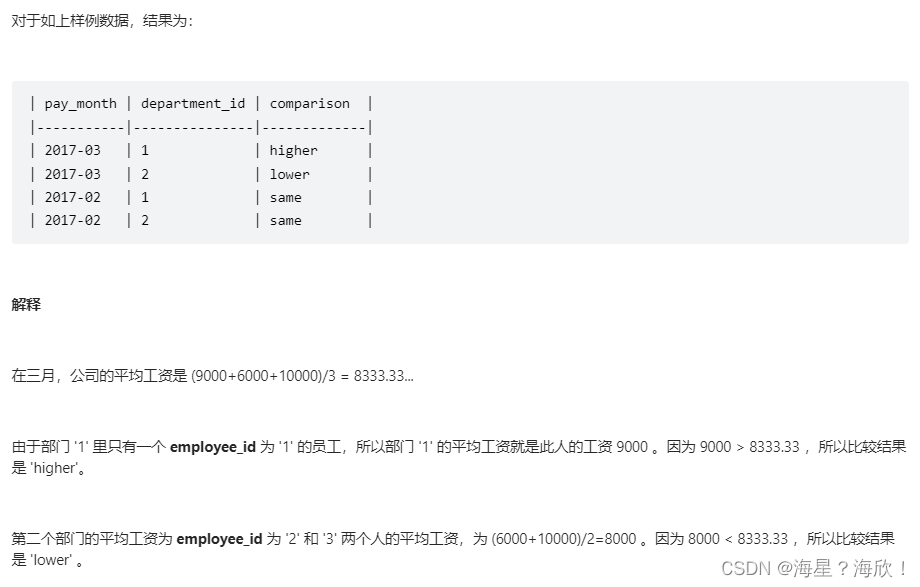

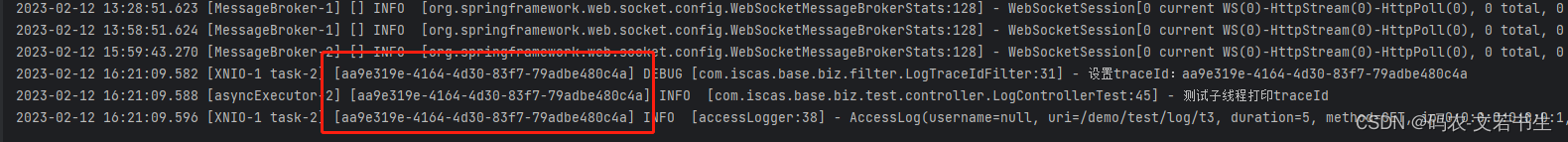

The log output is as follows:

![Log output with the traceId](https://img-blog.csdnimg.cn/176899f47ce848d38989dff6fee5a0bb.png)