增加了hdfs

package com.qyt;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.runtime.state.storage.FileSystemCheckpointStorage;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

/**

* DataStreamSource API使用

*/

public class StreamWordCount {

public static void main(String[] args) throws Exception {

//TODO 1、获取流的类

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

System.setProperty("HADOOP_USER_NAME", "root");

env.enableCheckpointing(3000);

// 配置存储检查点到文件系统

env.getCheckpointConfig().setCheckpointStorage(new FileSystemCheckpointStorage("hdfs://hadoop01:9000/flink"));

env.getCheckpointConfig().setCheckpointTimeout(2000l);

env.getCheckpointConfig().setExternalizedCheckpointCleanup(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

//TODO 2、获取无界流

DataStreamSource<String> stringDataStreamSource = env.socketTextStream("192.168.1.10", 9000, "\n");

//TODO 3 ETL

//TODO 3.1 转换成二元数组,简单ETL的过程

SingleOutputStreamOperator<Tuple2<String, Integer>> process = stringDataStreamSource.process(new ProcessFunction<String, Tuple2<String, Integer>>() {

@Override

public void processElement(String value, ProcessFunction<String, Tuple2<String, Integer>>.Context ctx, Collector<Tuple2<String, Integer>> out) throws Exception {

String[] words = value.split(" ");

for (String word : words) {

Tuple2<String, Integer> tuple2 = Tuple2.of(word, 1);

out.collect(tuple2);

}

}

}).uid("etl");

//TODO 3.1 分组

KeyedStream<Tuple2<String, Integer>, String> tuple2StringKeyedStream = process.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> value) throws Exception {

return value.f0;

}

});

//TODO 3.2 聚合计算

SingleOutputStreamOperator<Tuple2<String, Integer>> sum = tuple2StringKeyedStream.sum(1);

//TODO 4、打印

sum.print();

//TODO 5、无界流需要这个不断执行的方法

env.execute();

}

}

要增加hadoop客户端的使用

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="http://maven.apache.org/POM/4.0.0"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.example</groupId>

<artifactId>flink-demo</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.17.0</flink.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.3.4</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.2.4</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<!-- Do not copy the signatures in the META-INF folder.

Otherwise, this might cause SecurityExceptions when using the JAR. -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers combine.children="append">

<transformer

implementation="org.apache.maven.plugins.shade.resource.ServicesResourceTransformer">

</transformer>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

提交flink集群

#生成对应的任务

./flink run -m 192.168.1.161:8081 -c com.qyt.StreamWordCount /root/soft/flink-demo-1.0-SNAPSHOT.jar

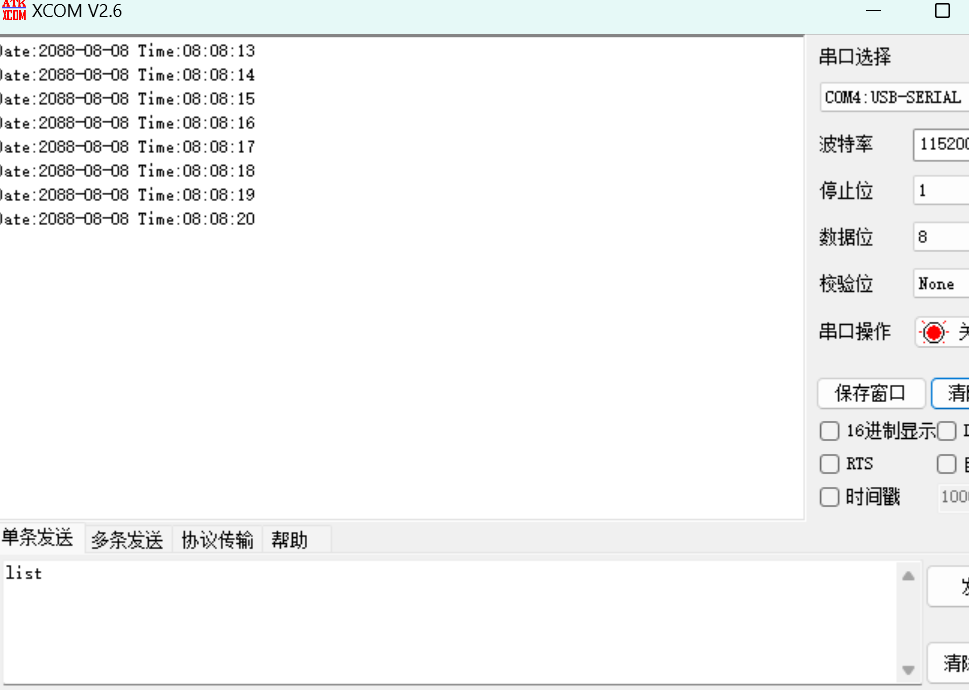

# 恢复上一次保存点,bc5fae2e282247486003ed259f2f37a7为jobID

./flink run -s hdfs://hadoop01:9000/flink/bc5fae2e282247486003ed259f2f37a7/chk-33 -m 192.168.1.161:8081 -c com.qyt.StreamWordCount /root/soft/flink-demo-1.0-SNAPSHOT.jar

查看对应的jobId

![NSSCTF [HNCTF 2022 Week1]超级签到](https://i-blog.csdnimg.cn/direct/fc9a369c6e15409f9d5d3433f78506d9.png)