1. Project Analysis

1.1 Project Task

This is a Kaggle used-car price regression project. The goal is to predict the price of a used car from its attributes.

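1.2 Evaluation Metric

The evaluation metric is the root mean squared error (RMSE): RMSE = sqrt((1/N) * Σ (y_i - ŷ_i)^2), where N is the number of samples, y_i is the actual price, and ŷ_i is the predicted price.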
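The RMSE metric from section 1.2 can be computed by hand in a few lines. The sketch below is only illustrative: the helper name rmse and the three price pairs are made up for this example and do not come from the competition data.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error between actual and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Square the errors, average them, then take the square root
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Made-up prices, for illustration only
actual = [10000, 20000, 30000]
predicted = [12000, 18000, 33000]

score = rmse(actual, predicted)
print(score)
```

Because the errors are squared before averaging, a few badly mispredicted expensive cars can dominate the score.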

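1.3 Data Description

Data link: https://www.kaggle.com/competitions/playground-series-s4e9/data?select=train.csv

The columns are:

- id: unique identifier
- brand: brand
- model: model
- model_year: model year
- milage: mileage (the dataset spells this column name milage, and the code below uses that spelling)
- fuel_type: fuel type
- engine: engine
- transmission: transmission
- ext_col: exterior color
- int_col: interior color
- accident: accident history
- clean_title: whether the car has a clean title (generally a clear ownership record with no liens or major accidents)
- price: price (the prediction target)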

2. Loading the Data

2.1 Import the Required Libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeRegressor

from sklearn.metrics import mean_squared_error

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import GridSearchCV

import xgboost as xgb

2.2 Read the Data

file_path = '/kaggle/input/playground-series-s4e9/train.csv'

df = pd.read_csv(file_path)

df.head()

df.shape

3. Exploratory Data Analysis (EDA)

3.1 Model Year vs. Price

plt.figure(figsize=(10, 6))

sns.scatterplot(x='model_year', y='price', data=df)

plt.title('Model Year vs Price')

plt.xlabel('Model Year')

plt.ylabel('Price')

plt.show()

3.2 Mileage vs. Price

plt.figure(figsize=(10, 6))

sns.scatterplot(x='milage', y='price', data=df)

plt.title('Mileage vs Price')

plt.xlabel('Mileage')

plt.ylabel('Price')

plt.show()

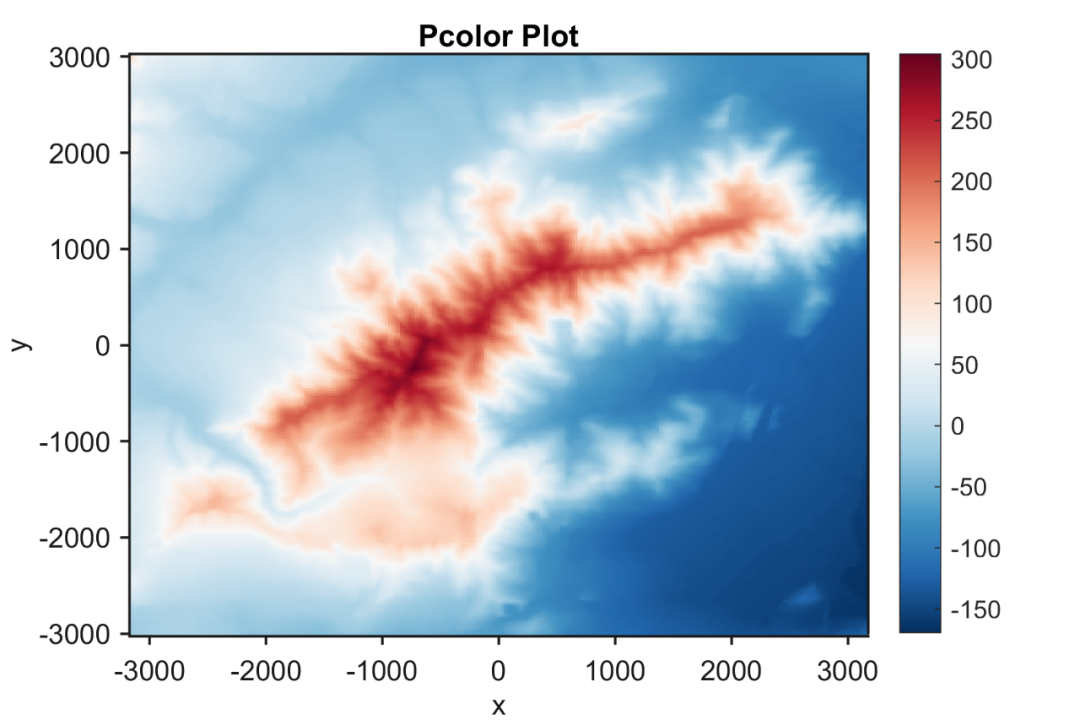

3.3 Correlation Heatmap of the Numeric Features

num_df = df.select_dtypes(include=['float64', 'int64'])

plt.figure(figsize=(12, 8))

corr_matrix = num_df.corr()

sns.heatmap(corr_matrix, annot=True, fmt=".2f", cmap="coolwarm", linewidths=0.5, annot_kws={"size": 10})

plt.title('Correlation Matrix', fontsize=16)

plt.xticks(rotation=45, ha='right')

plt.yticks(rotation=0)

plt.tight_layout()

plt.show()

3.4 Number of Cars by Brand

plt.figure(figsize=(12, 6))

sns.countplot(data=df, x='brand', order=df['brand'].value_counts().index)

plt.title('Count of Cars by Brand', fontsize=16)

plt.xticks(rotation=45)

plt.tight_layout()

plt.show()

3.5 Box Plot of Mileage by Fuel Type

plt.figure(figsize=(12, 6))

sns.boxplot(data=df, x='fuel_type', y='milage')

plt.title('Mileage by Fuel Type', fontsize=16)

plt.xticks(rotation=45)

plt.tight_layout()

plt.show()

3.6 Average Mileage by Brand

plt.figure(figsize=(12, 6))

sns.barplot(data=df, x='brand', y='milage', estimator=np.mean, errorbar=None)  # errorbar=None replaces the deprecated ci=None (seaborn >= 0.12)

plt.title('Average Mileage by Brand', fontsize=16)

plt.xticks(rotation=45)

plt.tight_layout()

plt.show()

4. Data Preprocessing

4.1 Check Whether Each Feature Has More Than One Distinct Value

for i in df.columns:

    if df[i].nunique() < 2:

        print(f'{i} has only one unique value. ')

clean_title has only one unique value.

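The clean_title feature has only one unique value, so we can drop it. The id column is just a row identifier, so we drop it as well.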

df.drop(['id','clean_title'],axis=1,inplace=True)

df.shape

(188533, 11)
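One detail worth keeping in mind about the check in 4.1: pandas nunique() ignores missing values by default, so a column holding one value plus NaN is also reported as having a single unique value. The toy frame below is a hypothetical illustration with made-up values, not the competition data.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: clean_title holds a single value plus NaN
toy = pd.DataFrame({
    'clean_title': ['Yes', np.nan, 'Yes', np.nan],
    'accident': ['None reported', 'Reported', 'None reported', 'None reported'],
})

default_counts = toy.nunique()               # NaN is not counted
with_nan_counts = toy.nunique(dropna=False)  # NaN counts as its own value

print(default_counts)
print(with_nan_counts)
```

If the missing entries carry information (for example, "title status unknown"), dropping the column discards that signal, so it may be worth inspecting value_counts(dropna=False) before deciding.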

4.2 Handling Missing Values

df.isnull().sum().sum()

7535

df.dropna(inplace=True)

df.isnull().sum().sum()

0

After dropping the rows that contain missing values, no missing values remain, so we can move on.
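Note that df.isnull().sum().sum() counts missing cells, not rows, so the 7535 above does not by itself say how many rows dropna() removes. A minimal sketch on a hypothetical toy frame (made-up values) shows the per-column view and the number of rows lost:

```python
import numpy as np
import pandas as pd

# Hypothetical toy data with a few missing cells
toy = pd.DataFrame({
    'fuel_type': ['Gasoline', np.nan, 'Hybrid', 'Gasoline'],
    'accident': ['None reported', 'None reported', np.nan, np.nan],
    'price': [15000, 22000, 18000, 9000],
})

missing_per_column = toy.isnull().sum()        # missing cells in each column
total_missing = int(missing_per_column.sum())  # same as toy.isnull().sum().sum()

cleaned = toy.dropna()                         # drops every row with at least one NaN
rows_lost = len(toy) - len(cleaned)

print(missing_per_column)
print(total_missing, rows_lost)
```

Checking the per-column counts first makes it easier to judge whether dropping rows is acceptable or whether filling a category such as "Unknown" would keep more data.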

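4.3 One-Hot Encode the Categorical Variables

Use one-hot encoding to convert the categorical variables into a numeric format.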

df = pd.get_dummies(df, columns=['brand', 'model', 'fuel_type', 'transmission', 'ext_col', 'int_col', 'accident','engine' ], drop_first=True)
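To see what get_dummies with drop_first=True does, here is a small sketch on a hypothetical toy frame (made-up values). Each category becomes its own indicator column, and the first category in sorted order is dropped because it is implied when all other indicators are zero.

```python
import pandas as pd

# Hypothetical toy data with one categorical column
toy = pd.DataFrame({
    'fuel_type': ['Gasoline', 'Hybrid', 'Diesel', 'Gasoline'],
    'price': [15000, 22000, 18000, 9000],
})

# Three categories produce two indicator columns; 'Diesel' is dropped
encoded = pd.get_dummies(toy, columns=['fuel_type'], drop_first=True)

print(encoded.columns.tolist())
print(encoded.shape)
```

With high-cardinality columns such as model and engine, this approach can create a very large number of columns, which is worth checking with df.shape after encoding.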

5. Price Prediction

5.1 Separate Features and Labels

X = df.drop('price', axis=1)

y = df['price']

5.2 Split the Dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
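The effect of test_size=0.2 and random_state=42 can be checked on a tiny synthetic array. The names X_toy and y_toy below are hypothetical and used only for this sketch.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Ten synthetic samples with two features each
X_toy = np.arange(20).reshape(10, 2)
y_toy = np.arange(10)

# 20% of the rows go to the test set; random_state fixes the shuffle
X_tr, X_te, y_tr, y_te = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=42
)

# Repeating the call with the same seed gives the same split
_, _, _, y_te_again = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=42
)

print(X_tr.shape, X_te.shape)
```

Fixing random_state keeps the RMSE values of different models comparable, since they are all evaluated on the same held-out rows.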

5.3 Model Training and Evaluation

5.3.1 XGBoost Regression Model

xgb_model = xgb.XGBRegressor(

    n_estimators=100,

    max_depth=5,

    learning_rate=0.1,

    subsample=0.8,

    random_state=42

)

xgb_model.fit(X_train, y_train)

y_pred_xgb = xgb_model.predict(X_test)

rmse_xgb = np.sqrt(mean_squared_error(y_test, y_pred_xgb))

print(f'XGBoost Root Mean Squared Error: {rmse_xgb}')

XGBoost Root Mean Squared Error: 67003.09126576487

5.3.2 Random Forest Regression Model

rf_model = RandomForestRegressor(

    n_estimators=100,

    max_depth=10,

    min_samples_split=2,

    min_samples_leaf=1,

    random_state=42

)

rf_model.fit(X_train, y_train)

y_pred_rf = rf_model.predict(X_test)

rmse_rf = np.sqrt(mean_squared_error(y_test, y_pred_rf))

print(f'Random Forest Root Mean Squared Error: {rmse_rf}')

Random Forest Root Mean Squared Error: 68418.85393408517
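Both models above use fixed hyperparameters. GridSearchCV is imported in 2.1 but never used, so one possible next step is a small grid search scored by RMSE. The sketch below runs on synthetic data from make_regression rather than the car dataset, and the grid values are arbitrary assumptions chosen to keep it fast.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic regression data standing in for the real features
X_demo, y_demo = make_regression(
    n_samples=200, n_features=5, noise=10.0, random_state=42
)

# Small, arbitrary grid for illustration
param_grid = {'max_depth': [3, 5], 'n_estimators': [20, 50]}

search = GridSearchCV(
    RandomForestRegressor(random_state=42),
    param_grid,
    scoring='neg_root_mean_squared_error',  # scikit-learn maximizes scores, so RMSE is negated
    cv=3,
)
search.fit(X_demo, y_demo)

best_rmse = -search.best_score_  # flip the sign back to a positive RMSE
print(search.best_params_, best_rmse)
```

On the full one-hot encoded dataset, each grid point retrains the model cv times, so the grid should stay small or be run on a subsample first.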

References:

1 https://www.kaggle.com/code/muhammaadmuzammil008/eda-random-forest-xgboost
