Preparation: install and configure CUDA, cuDNN, VS2019, and TensorRT.

You can refer to my blog post below, which covers how to configure CUDA 11.2, cuDNN (for CUDA 11.2), VS2019, and TensorRT.

WIN11+CUAD11.2+vs2019+tensorTR8.6+Yolov3/4/5 model acceleration (Vertira's blog, CSDN)

Building on that setup, we download OpenCV 4 (for later use) and then create the onnx2TensorRT project.

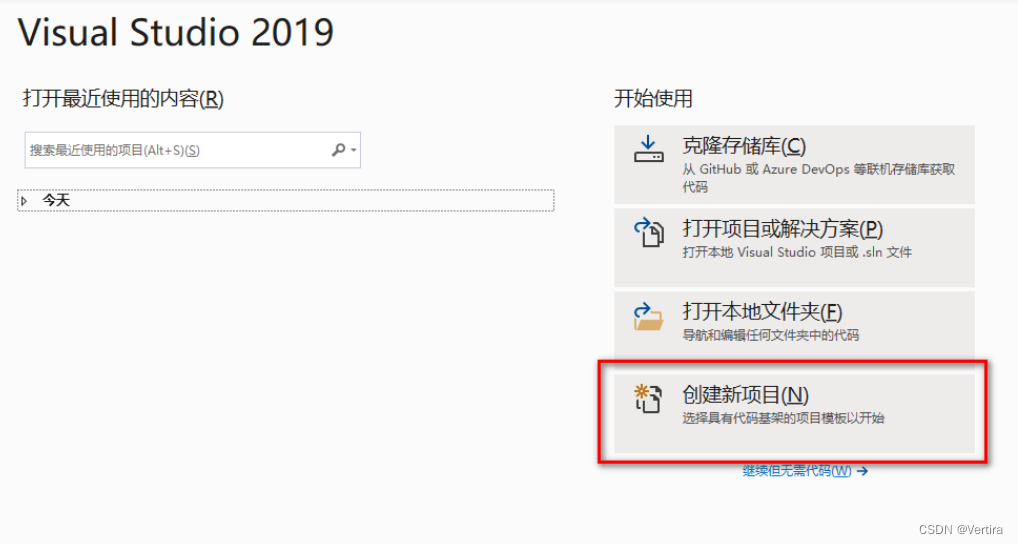

1. Create a console application in VS2019

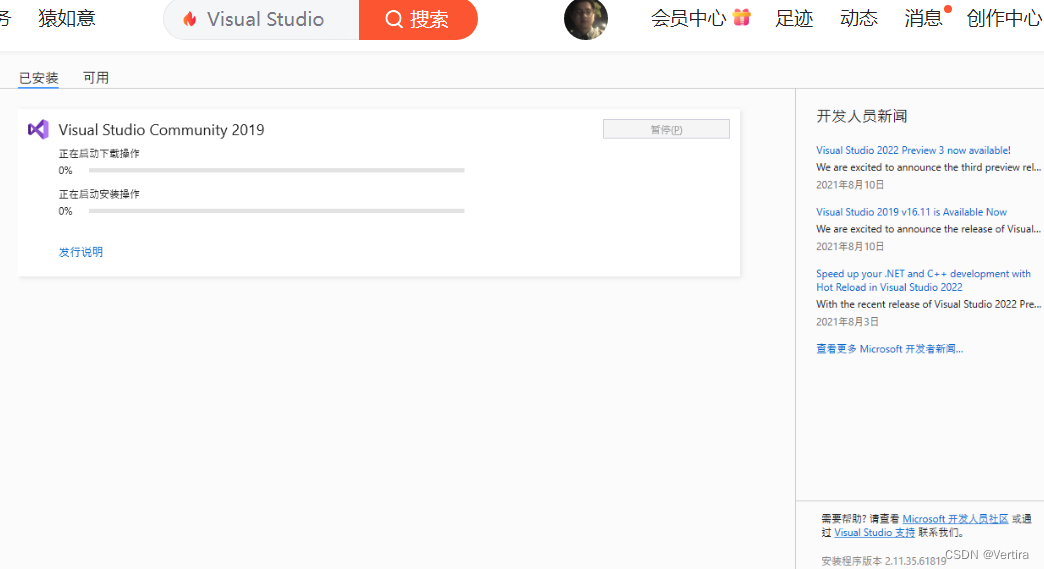

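If this is a fresh install and you do not have the C++ workload yet, follow the steps below (if you already have it, skip this and go straight to step 2).

Open Visual Studio Installer and click the "Modify" button.

This opens the page where workloads can be installed.

Install the "Desktop development with C++" workload. Many workloads are listed; here we only need the C++ one.

Once it is selected, a "Modify" button appears at the bottom right; click it to start the installation.

Then wait for the installation to finish (it takes a while).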

Then:

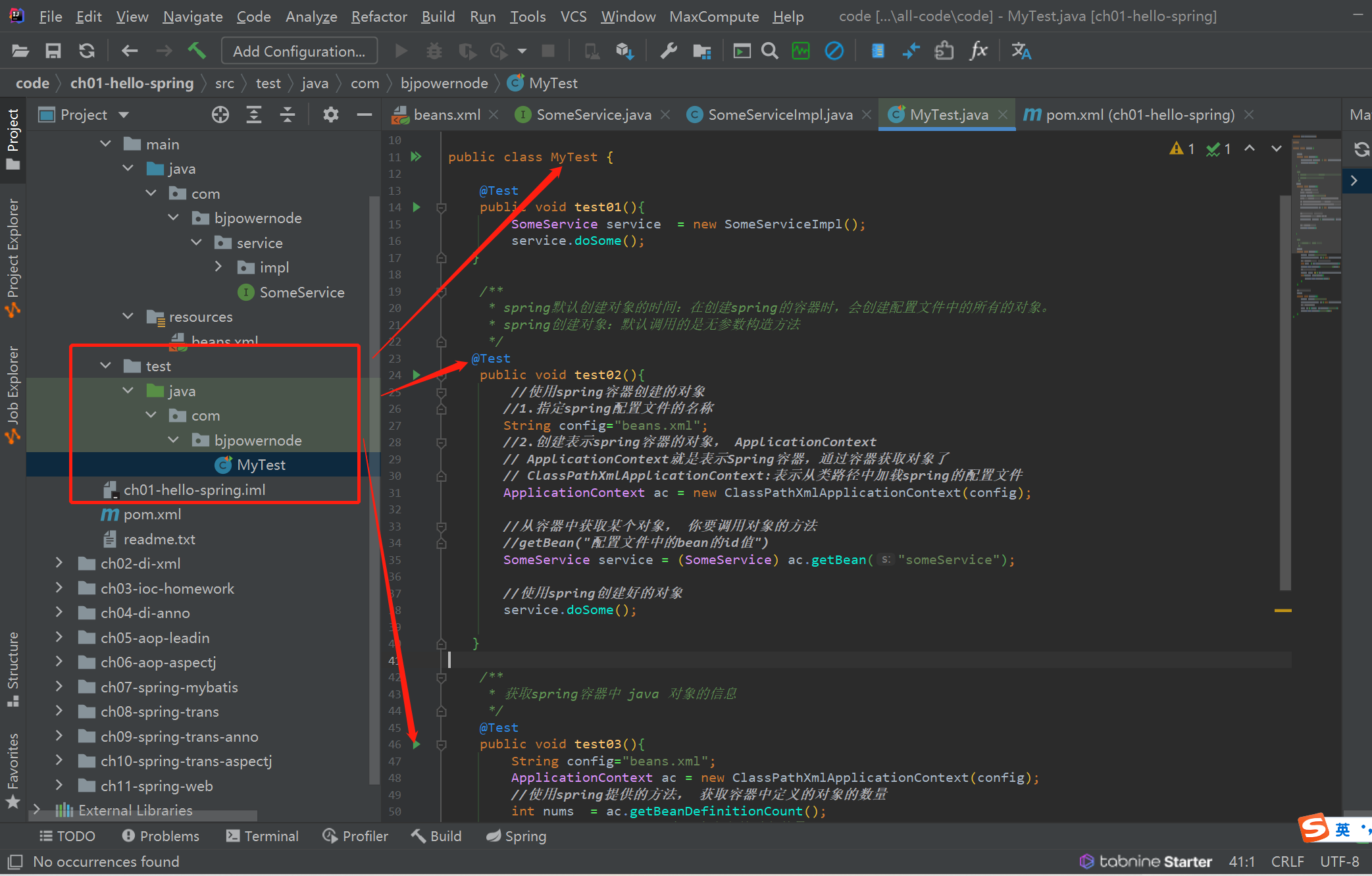

2. Create and run a Windows console application

File --> New --> Project

Select "Console App",

then click Next.

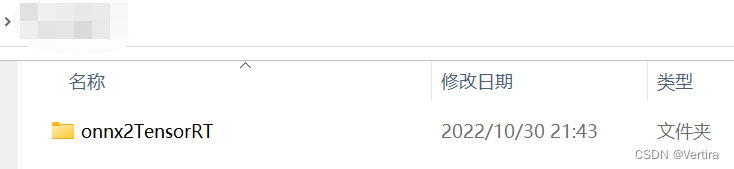

In the dialog that appears, set the project name (onnx2TensorRT) and its location, then confirm to create the project.

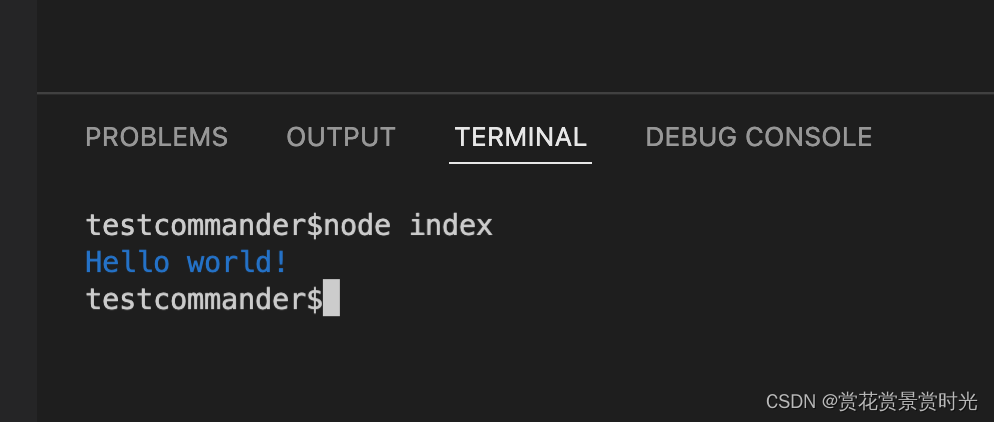

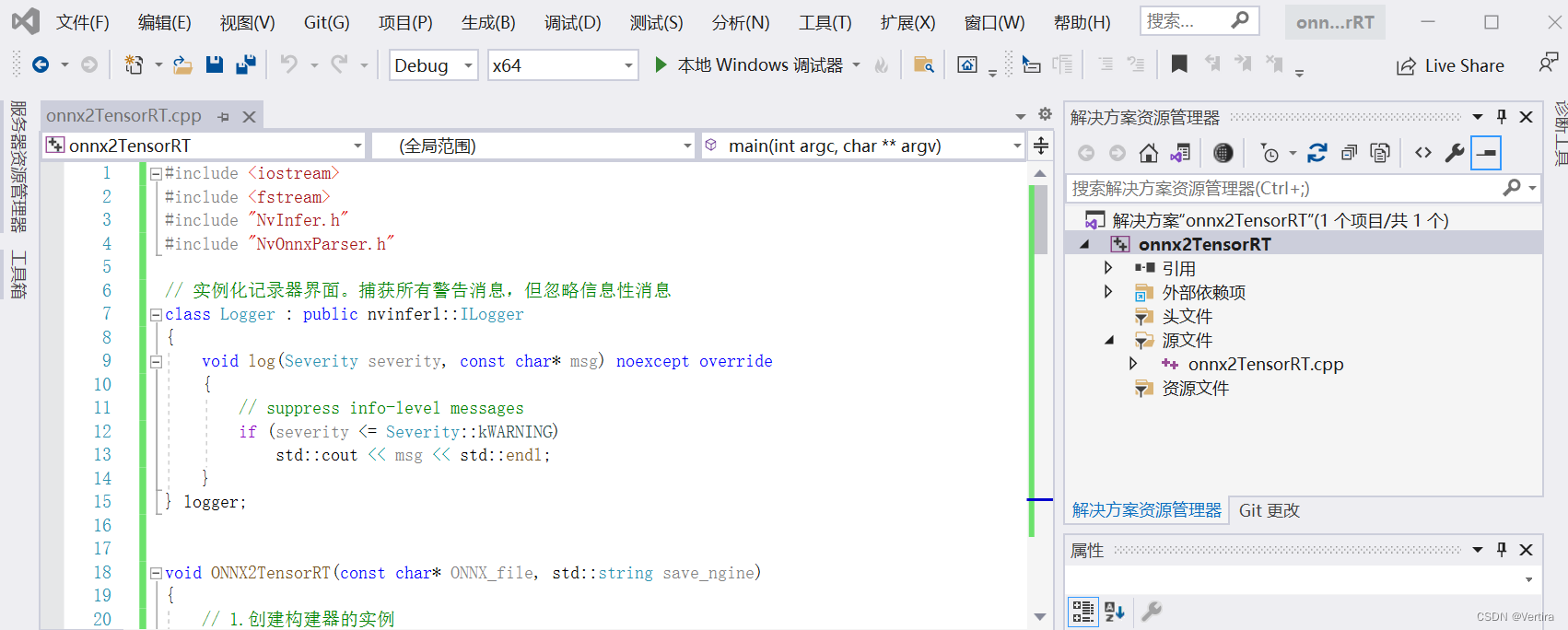

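Then go to the project folder and open the project. The project is created with a .cpp source file that prints hello world; clear the contents of that file.

Then paste in the code below. Because no paths have been configured yet, many red squiggles will appear. Don't worry about them: the CUDA and TensorRT configuration follows. This code does not display any images, so I have not configured OpenCV for now.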

#include <iostream>

#include <fstream>

#include <string>

#include "NvInfer.h"

#include "NvOnnxParser.h"

// Implement the logger interface. Print all warnings and errors, but ignore informational messages

class Logger : public nvinfer1::ILogger

{

void log(Severity severity, const char* msg) noexcept override

{

// suppress info-level messages

if (severity <= Severity::kWARNING)

std::cout << msg << std::endl;

}

} logger;

void ONNX2TensorRT(const char* ONNX_file, std::string save_ngine)

{

// 1. Create an instance of the builder

nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);

// 2. Create the network definition

uint32_t flag = 1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);

nvinfer1::INetworkDefinition* network = builder->createNetworkV2(flag);

// 3. Create an ONNX parser to populate the network

nvonnxparser::IParser* parser = nvonnxparser::createParser(*network, logger);

// 4. Read the model file and report any errors

parser->parseFromFile(ONNX_file, static_cast<int32_t>(nvinfer1::ILogger::Severity::kWARNING));

for (int32_t i = 0; i < parser->getNbErrors(); ++i)

{

std::cout << parser->getError(i)->desc() << std::endl;

}

// 5. Create a build configuration that specifies how TensorRT should optimize the model

nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();

// 6. Set properties that control how TensorRT optimizes the network

// Set the workspace memory pool limit (16 MiB here)

config->setMemoryPoolLimit(nvinfer1::MemoryPoolType::kWORKSPACE, 16 * (1 << 20));

// Enable reduced precision (FP16); comment this block out to keep FP32

if (builder->platformHasFastFp16())

{

config->setFlag(nvinfer1::BuilderFlag::kFP16);

}

// 7. With the configuration specified, build the engine

nvinfer1::IHostMemory* serializedModel = builder->buildSerializedNetwork(*network, *config);

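// 8. Save the TensorRT model (buildSerializedNetwork returns nullptr on failure)

if (serializedModel == nullptr)

{

std::cout << "Engine build failed, nothing was saved." << std::endl;

}

else

{

std::ofstream p(save_ngine, std::ios::binary);

p.write(reinterpret_cast<const char*>(serializedModel->data()), serializedModel->size());

}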

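// 9. The serialized engine contains the necessary copies of the weights, so the parser, network definition, builder config, and builder are no longer needed and can be safely deleted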

delete parser;

delete network;

delete config;

delete builder;

// 10. Once the engine has been saved to disk, the buffer it was serialized into can be deleted

delete serializedModel;

}

void exportONNX(const char* ONNX_file, std::string save_ngine)

{

std::ifstream file(ONNX_file, std::ios::binary);

if (!file.good())

{

std::cout << "Load ONNX file failed! No file found from:" << ONNX_file << std::endl;

return ;

}

std::cout << "Load ONNX file from: " << ONNX_file << std::endl;

std::cout << "Starting export ..." << std::endl;

ONNX2TensorRT(ONNX_file, save_ngine);

std::cout << "Export success, saved as: " << save_ngine << std::endl;

}

int main(int argc, char** argv)

{

// Input and output paths

const char* ONNX_file = "../weights/test.onnx";

std::string save_ngine = "../weights/test.engine";

exportONNX(ONNX_file, save_ngine);

return 0;

}
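The logger above prints every message at warning severity or worse, which is why the same warning can show up many times in the console. As a rough sketch only, the filter below prints each distinct message once. `Severity` is a stand-in enum that mirrors the ordering of `nvinfer1::ILogger::Severity` (smaller value means more severe), and `DedupFilter` is a hypothetical helper, not part of TensorRT.

```cpp
#include <cassert>
#include <string>
#include <unordered_set>

// Stand-in for nvinfer1::ILogger::Severity (assumption: same ordering,
// smaller value = more severe).
enum class Severity : int
{
    kINTERNAL_ERROR = 0,
    kERROR = 1,
    kWARNING = 2,
    kINFO = 3,
    kVERBOSE = 4
};

// Hypothetical helper: decides whether a log message should be printed.
class DedupFilter
{
public:
    // Returns true only the first time a given message at kWARNING
    // severity or worse is seen.
    bool shouldPrint(Severity severity, const std::string& msg)
    {
        if (severity > Severity::kWARNING)
            return false;                 // drop info and verbose messages
        return seen_.insert(msg).second;  // false if msg was already recorded
    }

private:
    std::unordered_set<std::string> seen_;
};
```

Inside `Logger::log`, one could keep a `DedupFilter` member and print `msg` only when `shouldPrint` returns true, casting the TensorRT severity to the stand-in enum or using the real enum directly.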

Next, start the configuration.

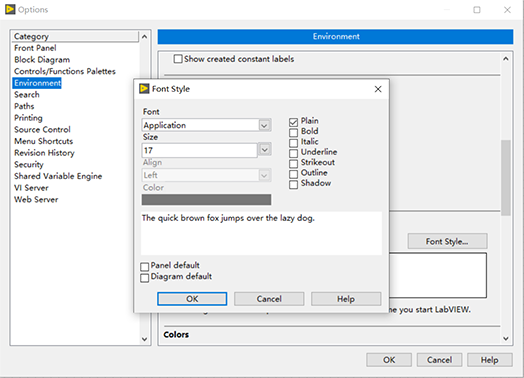

The properties page looks like this:

Include Directories: the include folders of CUDA and TensorRT

$(CUDA_PATH)\include

D:\software\TensorRT-8.4.1.5_CUDA11.6_Cudnn8.4.1\include

D:\software\TensorRT-8.4.1.5_CUDA11.6_Cudnn8.4.1\samples\common

Library Directories: the lib folders of CUDA and TensorRT

$(CUDA_PATH)\lib

$(CUDA_PATH)\lib\x64

D:\software\TensorRT-8.4.1.5_CUDA11.6_Cudnn8.4.1\lib

Note: $(CUDA_PATH) can also be replaced with an absolute path.
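Before pasting directories into the property page, it can help to confirm that they exist on your machine. The following is a minimal sketch assuming C++17; `missingDirs` is a hypothetical helper, and the paths you pass in are your own install locations.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: returns the entries of `dirs` that are not
// existing directories.
std::vector<std::string> missingDirs(const std::vector<std::string>& dirs)
{
    std::vector<std::string> missing;
    for (const std::string& d : dirs)
    {
        std::error_code ec;  // non-throwing overload: errors count as "missing"
        if (!fs::is_directory(fs::path(d), ec))
            missing.push_back(d);
    }
    return missing;
}
```

For example, you could pass the include and lib folders listed above and print whatever comes back; an empty result means every directory was found.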

3. Set properties: Linker

Add the following entries under Input (Additional Dependencies):

nvinfer.lib

nvinfer_plugin.lib

nvonnxparser.lib

nvparsers.lib

cudnn.lib

cublas.lib

cudart.lib

Then click OK, and OK again.

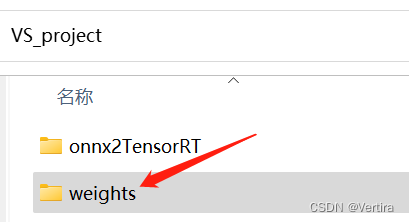

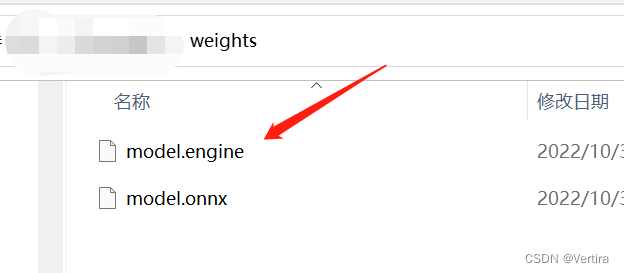

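5. Create the model folder

Create a new weights folder and put your ONNX file in it.

Note: the location of the weights folder must match the paths at the bottom of the program.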
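Relative paths such as `../weights/test.onnx` are resolved against the process's working directory, not against the location of the source file. The sketch below (C++17) shows where such a path actually points; `resolveModelPath` and `reportModelPath` are hypothetical helpers introduced for illustration.

```cpp
#include <cassert>
#include <filesystem>
#include <iostream>
#include <system_error>

namespace fs = std::filesystem;

// Hypothetical helper: joins `relative` onto `workingDir` and collapses
// "." and ".." segments lexically (no file system access).
fs::path resolveModelPath(const fs::path& workingDir, const fs::path& relative)
{
    return (workingDir / relative).lexically_normal();
}

// Prints the resolved path and returns whether a regular file exists there.
bool reportModelPath(const fs::path& relative)
{
    const fs::path resolved = resolveModelPath(fs::current_path(), relative);
    std::error_code ec;
    const bool found = fs::is_regular_file(resolved, ec);
    std::cout << resolved.string() << (found ? " (found)" : " (not found)") << std::endl;
    return found;
}
```

Calling `reportModelPath("../weights/test.onnx")` at the top of `main` shows the absolute location being used. When running from the IDE, the working directory comes from the Debugging section of the project properties, so check that value if the file is not found.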

Then run the program in Debug mode. If you need a Release build, run it in Release mode instead.

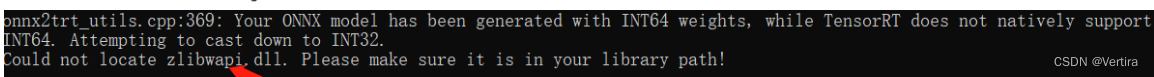

Output:

Load ONNX file from: ../weights/model.onnx

Starting export ...

onnx2trt_utils.cpp:369: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

TensorRT was linked against cuDNN 8.4.1 but loaded cuDNN 8.2.1

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Conv_3 + Relu_4.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

- Values less than smallest positive FP16 Subnormal value detected. Converting to FP16 minimum subnormalized value.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_8 + Relu_9.bias] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

Weights [name=Gemm_10.weight] had the following issues when converted to FP16:

- Subnormal FP16 values detected.

If this is not the desired behavior, please modify the weights or retrain with regularization to reduce the magnitude of the weights.

The getMaxBatchSize() function should not be used with an engine built from a network created with NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag. This function will always return 1.

The getMaxBatchSize() function should not be used with an engine built from a network created with NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag. This function will always return 1.

Export success, saved as: ../weights/model.engine

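D:\VS_project\onnx2TensorRT\x64\Debug\onnx2TensorRT.exe (process 17936) exited with code 0.

To automatically close the console when debugging stops, enable Tools->Options->Debugging->Automatically close the console when debugging stops.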

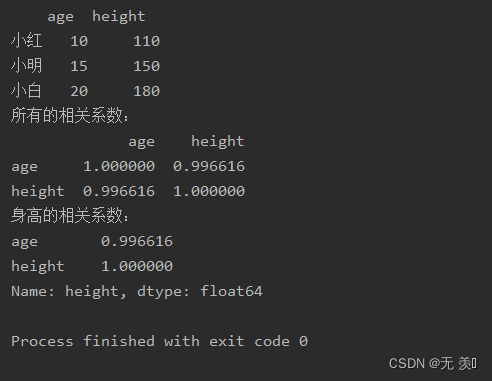

Press any key to close this window . . .

The last few lines of the output are:

The getMaxBatchSize() function should not be used with an engine built from a network created with NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag. This function will always return 1.

Export success, saved as: ../weights/model.engine

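D:\VS_project\onnx2TensorRT\x64\Debug\onnx2TensorRT.exe (process 17936) exited with code 0.

To automatically close the console when debugging stops, enable Tools->Options->Debugging->Automatically close the console when debugging stops.

Press any key to close this window . . .

These lines show that the program ran successfully, with no errors.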
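The FP16 messages in the output are warnings, not errors: they report weights whose magnitude is too small to be represented well in half precision. For reference, the smallest positive normal FP16 value is 2^-14, the smallest positive subnormal is 2^-24, and the largest finite value is 65504. The sketch below classifies a single weight against those limits; `classifyForFp16` is a hypothetical illustration and does not reproduce TensorRT's internal check.

```cpp
#include <cassert>
#include <cmath>
#include <string>

// Hypothetical illustration: reports how a float32 weight relates to
// the representable range of FP16. Not TensorRT's own logic.
std::string classifyForFp16(float w)
{
    const float minNormal = std::ldexp(1.0f, -14);     // 2^-14
    const float minSubnormal = std::ldexp(1.0f, -24);  // 2^-24
    const float maxFinite = 65504.0f;                  // largest finite FP16
    const float a = std::fabs(w);

    if (a == 0.0f) return "zero";
    if (a > maxFinite) return "overflow";
    if (a < minSubnormal) return "below-subnormal";
    if (a < minNormal) return "subnormal";
    return "normal";
}
```

Running this over a layer's weights gives a rough idea of how many values fall into the ranges the warnings mention. If the loss of precision matters for your model, building without the FP16 flag keeps the weights in FP32.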

7. Note: if the following problem appears at run time

Problem: zlibwapi.dll is missing

If zlibwapi.dll is reported as missing, download it:


Link: https://pan.baidu.com/s/12sVdiDH-NOOZNI9QqJoZuA?pwd=a0n0


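Extraction code: a0n0

After downloading, extract the archive and place zlibwapi.dll in …\NVIDIA GPU Computing Toolkit\CUDA\v11.2\bin (my path).

Run the program again. If you never saw this error, ignore this note.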
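Windows searches the executable's directory and the directories listed in `PATH`, among other locations, when loading a DLL. As a quick check, the sketch below (C++17) looks for a file name in a list of directories; `splitPathList` and `findInDirs` are hypothetical helpers, and this covers only the directories you pass in, not the full Windows search order.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Splits a PATH-style list on `sep` (';' on Windows), skipping empty entries.
std::vector<std::string> splitPathList(const std::string& list, char sep)
{
    std::vector<std::string> out;
    std::string cur;
    for (char c : list)
    {
        if (c == sep)
        {
            if (!cur.empty()) out.push_back(cur);
            cur.clear();
        }
        else
        {
            cur.push_back(c);
        }
    }
    if (!cur.empty()) out.push_back(cur);
    return out;
}

// Returns the directories from `dirs` that contain a regular file
// named `fileName`.
std::vector<std::string> findInDirs(const std::string& fileName,
                                    const std::vector<std::string>& dirs)
{
    std::vector<std::string> hits;
    for (const std::string& d : dirs)
    {
        std::error_code ec;
        if (fs::is_regular_file(fs::path(d) / fileName, ec))
            hits.push_back(d);
    }
    return hits;
}
```

A typical use would be to read `PATH` with `std::getenv` (checking for a null result first), split it on `;`, and call `findInDirs("zlibwapi.dll", dirs)`. An empty result suggests the DLL is not on the search path.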

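8. Generate the engine file

Select Release mode, then repeat the configuration above (Visual Studio stores these project properties per configuration).

Click the configuration dropdown and switch to Release.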
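After the run, a quick sanity check is to read the engine file back and confirm that it is not empty. The sketch below uses only the standard library; `readEngineFile` is a hypothetical helper. The resulting buffer is the kind of data one would later hand to the TensorRT runtime for deserialization (for example via `nvinfer1::IRuntime::deserializeCudaEngine`), which is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical helper: reads a whole binary file into memory.
// Returns an empty vector if the file cannot be opened or is empty.
std::vector<char> readEngineFile(const std::string& path)
{
    // Open at the end so tellg() reports the file size.
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file.good())
        return {};

    const std::streamsize size = file.tellg();
    if (size <= 0)
        return {};

    std::vector<char> buffer(static_cast<std::size_t>(size));
    file.seekg(0, std::ios::beg);
    if (!file.read(buffer.data(), size))
        return {};
    return buffer;
}
```

Calling `readEngineFile("../weights/test.engine")` and checking that the result is non-empty confirms that the export wrote something to disk; it does not validate that the bytes form a usable engine.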

That's all.


Reference:

Converting ONNX to TensorRT in C++ on Win10 (田小草呀's blog, CSDN)