文章目录

- 前言

- 一、kitti标签label坐标转换的主函数

- 1、主函数调用代码

- 2、数据格式示意图

- 二、kitti数据获取

- 1、图像获取

- 2、label标签数据获取

- 3、标定文件数据获取

- 三、kitti标签坐标转换方法

- 1、集成主函数-labels_boxes2pixel_in_image

- 2、标签3d坐标转像素坐标-compute_box_3d(obj, calib.P)

- 1、函数输入内容

- 2、旋转角转换

- 3、原点基准获取3d坐标(l w h)

- 4、绕Y轴坐标旋转

- 5、获得目标3d坐标

- 6、3d坐标转像素坐标

- 四、完整代码与结果现实

- 1、结果现实

- 2、完整代码

前言

kitti数据是一个通用数据,但里面标定文件或标签文件等相互关心很有可能把大家陷入其中。为此,本文分享kitti数据的label标签内容转换,特别是标签的3d坐标转换到图像像素坐标,这也是本文重点介绍内容。而本文与其它文章不太相同,我们不注重kitti原理介绍,而是使用代码将其转换,并给出完整代码。

一、kitti标签label坐标转换的主函数

1、主函数调用代码

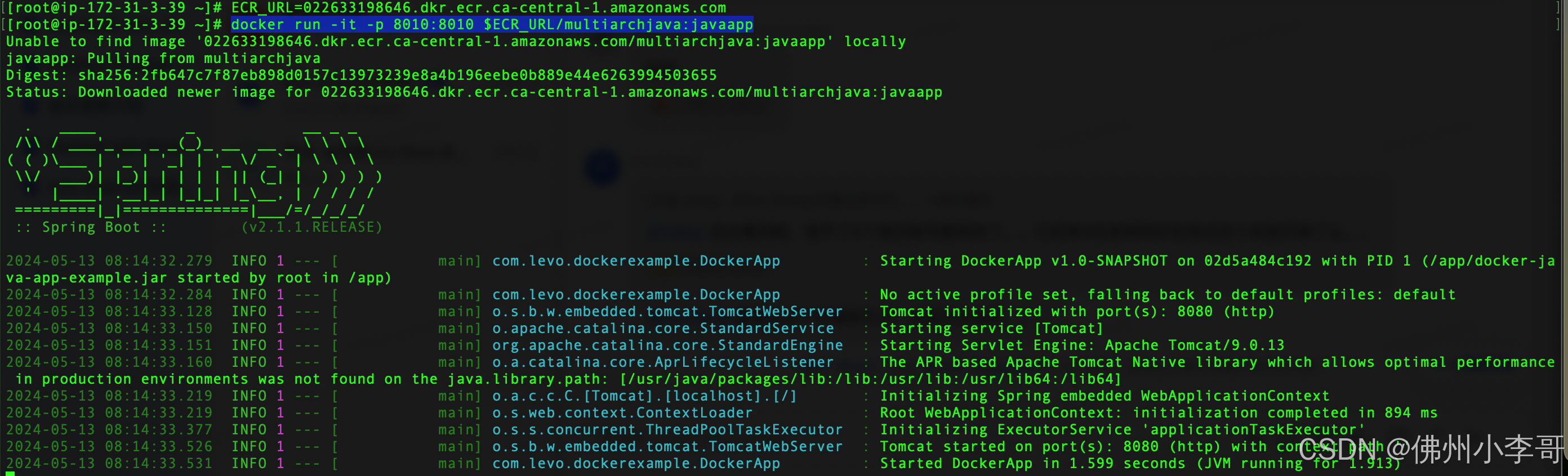

先给出调用的主函数,然后在分别讲解,直接看代码如下:

if __name__ == '__main__':

path = r'C:\Users\Administrator\Desktop\kitti_data\KITTI\training'

index = '000008'

calib_path = os.path.join(path, 'calib', index + '.txt') # 获得标定文件

image_2_path = os.path.join(path, 'image_2', index + '.png') # 获得图像

label_2_path = os.path.join(path, 'label_2', index + '.txt') # 获得标签lebl

img = get_image(image_2_path) # 读取图像

objects = read_label(label_2_path) # 处理标签

calib = Calibration(calib_path) # 处理标定文件

img_bbox2d, img_bbox3d, box3d_to_pixel2d = labels_boxes2pixel_in_image(img, objects, calib) # 重点内容,集成转换

cv2.imwrite('./bbox2d.png',img_bbox2d)

cv2.imwrite('./bbox3d.png',img_bbox3d)

2、数据格式示意图

二、kitti数据获取

1、图像获取

因为要将其2d与3d坐标展现到图像中,需要获取图像数据,其代码如下:

def get_image(img_path):

img = cv2.imread(img_path)

return img

2、label标签数据获取

读取标签txt文件内容,将其每个目标内容赋值给Object3d类中,构建一个列表,如下:

# 读取label标签

def read_label(label_filename):

lines = [line.rstrip() for line in open(label_filename)]

objects = [Object3d(line) for line in lines]

return objects

而Object类是对标签的解析,如下:

# 转换kitti数据标签labels

class Object3d(object):

""" 3d object label """

def __init__(self, label_file_line):

data = label_file_line.split(" ")

data[1:] = [float(x) for x in data[1:]]

# extract label, truncation, occlusion

self.type = data[0] # 'Car', 'Pedestrian', ...

self.truncation = data[1] # truncated pixel ratio [0..1]

self.occlusion = int(

data[2]

) # 0=visible, 1=partly occluded, 2=fully occluded, 3=unknown

self.alpha = data[3] # object observation angle [-pi..pi]

# extract 2d bounding box in 0-based coordinates

self.xmin = data[4] # left

self.ymin = data[5] # top

self.xmax = data[6] # right

self.ymax = data[7] # bottom

self.box2d = np.array([self.xmin, self.ymin, self.xmax, self.ymax])

# extract 3d bounding box information

self.h = data[8] # box height

self.w = data[9] # box width

self.l = data[10] # box length (in meters)

self.t = (data[11], data[12], data[13]) # location (x,y,z) in camera coord.

self.ry = data[14] # yaw angle (around Y-axis in camera coordinates) [-pi..pi]

后面标签内容我不在解析了。

3、标定文件数据获取

其代码如下:

class Calibration(object):

""" Calibration matrices and utils

3d XYZ in <label>.txt are in rect camera coord.

2d box xy are in image2 coord

Points in <lidar>.bin are in Velodyne coord.

y_image2 = P^2_rect * x_rect

y_image2 = P^2_rect * R0_rect * Tr_velo_to_cam * x_velo

x_ref = Tr_velo_to_cam * x_velo

x_rect = R0_rect * x_ref

P^2_rect = [f^2_u, 0, c^2_u, -f^2_u b^2_x;

0, f^2_v, c^2_v, -f^2_v b^2_y;

0, 0, 1, 0]

= K * [1|t]

image2 coord:

----> x-axis (u)

|

|

v y-axis (v)

velodyne coord:

front x, left y, up z

rect/ref camera coord:

right x, down y, front z

Ref (KITTI paper): http://www.cvlibs.net/publications/Geiger2013IJRR.pdf

TODO(rqi): do matrix multiplication only once for each projection.

"""

def __init__(self, calib_filepath, from_video=False):

if from_video:

calibs = self.read_calib_from_video(calib_filepath)

else:

calibs = self.read_calib_file(calib_filepath)

# Projection matrix from rect camera coord to image2 coord

self.P = calibs["P2"]

self.P = np.reshape(self.P, [3, 4])

# Rigid transform from Velodyne coord to reference camera coord

self.V2C = calibs["Tr_velo_to_cam"]

self.V2C = np.reshape(self.V2C, [3, 4])

self.C2V = self.inverse_rigid_trans(self.V2C)

# Rotation from reference camera coord to rect camera coord

self.R0 = calibs["R0_rect"]

self.R0 = np.reshape(self.R0, [3, 3])

# Camera intrinsics and extrinsics

self.c_u = self.P[0, 2]

self.c_v = self.P[1, 2]

self.f_u = self.P[0, 0]

self.f_v = self.P[1, 1]

self.b_x = self.P[0, 3] / (-self.f_u) # relative

self.b_y = self.P[1, 3] / (-self.f_v)

旋转可参考:

https://zhuanlan.zhihu.com/p/86223712

1 -------- 0

/| /|

2 -------- 3 .

| | | |

. 5 -------- 4

|/ |/

6 -------- 7

三、kitti标签坐标转换方法

1、集成主函数-labels_boxes2pixel_in_image

我先给出坐标转换集成主函数,该函数实现了kitti标签2d与3d坐标转换为对应像素坐标实现方法,也将其kitti的标签2d坐标与3d转换坐标显示在图中,其代码如下:

def labels_boxes2pixel_in_image(img, objects, calib):

"""

Show image with 2D bounding boxes

:param img: 图像内容

:param objects: label标签内容

:param calib: 标定文件内容

:return: img1, img2,box3d_to_pixel2d,第一个值是2d坐标图像,第二个值是3d坐标转到像素画的图像,第三个值是相机坐标与像素坐标对应点

"""

img1 = np.copy(img) # for 2d bbox

img2 = np.copy(img) # for 3d bbox

# TODO: change the color of boxes

box3d_to_pixel2d = {"corners_3d":[],"box3d_pts_2d":[]}

for obj in objects:

# 画2d坐标

if obj.type == "DontCare":

continue

else:

cv2.rectangle(img1,(int(obj.xmin),int(obj.ymin)),(int(obj.xmax), int(obj.ymax)),(0, 255, 0),2,)

cv2.putText(img1, str(obj.type), (int(obj.xmin), int(obj.ymin)), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)

# 这个非常重要,该代码就是将其label的3d坐标转为像素2d坐标关键函数

box3d_pts_2d, corners_3d = compute_box_3d(obj, calib.P)

box3d_to_pixel2d['corners_3d'].append(corners_3d)

box3d_to_pixel2d['box3d_pts_2d'].append(box3d_pts_2d)

if box3d_pts_2d is None:

print("something wrong in the 3D box.")

continue

img2 = draw_projected_box3d(img2, box3d_pts_2d, color=(0, 255, 0))

return img1, img2,box3d_to_pixel2d

在这个函数中只解读compute_box_3d(obj, calib.P)函数,其它画框啥的我不在说明了。

2、标签3d坐标转像素坐标-compute_box_3d(obj, calib.P)

1、函数输入内容

输入是kitti标签内容,已被类给实例化或处理了,输入是标定文件相机内参内容,calib.P是第二个相机内参。

2、旋转角转换

kitti标签最后一个数字是旋转角的转换,该角是绕y轴的旋转,其调用函数是R = roty(obj.ry)。至于该函数实现如下代码:

def roty(t):

""" Rotation about the y-axis. """

c = np.cos(t)

s = np.sin(t)

return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])

不要问我为什么,请看坐标转换矩阵:

你也可以参考知乎:https://zhuanlan.zhihu.com/p/86223712

3、原点基准获取3d坐标(l w h)

假设XYZ坐标轴以(0,0,0)原点为中心,通过kitti标签的长宽高得到八个点实际坐标,如下代码:

# 3d bounding box dimensions

l = obj.l

w = obj.w

h = obj.h

# 3d bounding box corners

x_corners = [l / 2, l / 2, -l / 2, -l / 2, l / 2, l / 2, -l / 2, -l / 2]

y_corners = [0, 0, 0, 0, -h, -h, -h, -h]

z_corners = [w / 2, -w / 2, -w / 2, w / 2, w / 2, -w / 2, -w / 2, w / 2]

上面点坐标依次是下图数字编号顺序,如下:

4、绕Y轴坐标旋转

这个不在解释,如下代码直接np.dot实现旋转,如下:

# rotate and translate 3d bounding box

corners_3d = np.dot(R, np.vstack([x_corners, y_corners, z_corners]))

5、获得目标3d坐标

旋转之后,直接加上标签XYZ坐标获取3d坐标,如下:

corners_3d[0, :] = corners_3d[0, :] + obj.t[0]

corners_3d[1, :] = corners_3d[1, :] + obj.t[1]

corners_3d[2, :] = corners_3d[2, :] + obj.t[2]

6、3d坐标转像素坐标

该函数是3d坐标转像素坐标方法调用函数,如下:

# project the 3d bounding box into the image plane,相机坐标转像素坐标

corners_2d = project_to_image(np.transpose(corners_3d), P)

该函数就是实现np.dot(P内参,3d坐标),我也不在解释,代码如下:

def project_to_image(pts_3d, P):

""" Project 3d points to image plane.

Usage: pts_2d = projectToImage(pts_3d, P)

input: pts_3d: nx3 matrix

P: 3x4 projection matrix

output: pts_2d: nx2 matrix

P(3x4) dot pts_3d_extended(4xn) = projected_pts_2d(3xn)

=> normalize projected_pts_2d(2xn)

<=> pts_3d_extended(nx4) dot P'(4x3) = projected_pts_2d(nx3)

=> normalize projected_pts_2d(nx2)

"""

n = pts_3d.shape[0]

pts_3d_extend = np.hstack((pts_3d, np.ones((n, 1))))

# print(('pts_3d_extend shape: ', pts_3d_extend.shape))

# pts_2d = np.dot(P, pts_3d_extend.T).T # 这一句与下面一句是等价的

pts_2d = np.dot(pts_3d_extend, np.transpose(P)) # nx3

pts_2d[:, 0] /= pts_2d[:, 2]

pts_2d[:, 1] /= pts_2d[:, 2]

return pts_2d[:, 0:2]

四、完整代码与结果现实

1、结果现实

2、完整代码

这个代码可以直接使用,如下:

import os

import cv2

import numpy as np

import matplotlib.pyplot as plt

def get_image(img_path):

img = cv2.imread(img_path)

return img

def show_img(img):

plt.imshow(img)

plt.show()

# 转换kitti数据标签labels

class Object3d(object):

""" 3d object label """

def __init__(self, label_file_line):

data = label_file_line.split(" ")

data[1:] = [float(x) for x in data[1:]]

# extract label, truncation, occlusion

self.type = data[0] # 'Car', 'Pedestrian', ...

self.truncation = data[1] # truncated pixel ratio [0..1]

self.occlusion = int(

data[2]

) # 0=visible, 1=partly occluded, 2=fully occluded, 3=unknown

self.alpha = data[3] # object observation angle [-pi..pi]

# extract 2d bounding box in 0-based coordinates

self.xmin = data[4] # left

self.ymin = data[5] # top

self.xmax = data[6] # right

self.ymax = data[7] # bottom

self.box2d = np.array([self.xmin, self.ymin, self.xmax, self.ymax])

# extract 3d bounding box information

self.h = data[8] # box height

self.w = data[9] # box width

self.l = data[10] # box length (in meters)

self.t = (data[11], data[12], data[13]) # location (x,y,z) in camera coord.

self.ry = data[14] # yaw angle (around Y-axis in camera coordinates) [-pi..pi]

def estimate_diffculty(self):

""" Function that estimate difficulty to detect the object as defined in kitti website"""

# height of the bounding box

bb_height = np.abs(self.xmax - self.xmin)

if bb_height >= 40 and self.occlusion == 0 and self.truncation <= 0.15:

return "Easy"

elif bb_height >= 25 and self.occlusion in [0, 1] and self.truncation <= 0.30:

return "Moderate"

elif (

bb_height >= 25 and self.occlusion in [0, 1, 2] and self.truncation <= 0.50

):

return "Hard"

else:

return "Unknown"

def print_object(self):

print(

"Type, truncation, occlusion, alpha: %s, %d, %d, %f"

% (self.type, self.truncation, self.occlusion, self.alpha)

)

print(

"2d bbox (x0,y0,x1,y1): %f, %f, %f, %f"

% (self.xmin, self.ymin, self.xmax, self.ymax)

)

print("3d bbox h,w,l: %f, %f, %f" % (self.h, self.w, self.l))

print(

"3d bbox location, ry: (%f, %f, %f), %f"

% (self.t[0], self.t[1], self.t[2], self.ry)

)

print("Difficulty of estimation: {}".format(self.estimate_diffculty()))

def project_to_image(pts_3d, P):

""" Project 3d points to image plane.

Usage: pts_2d = projectToImage(pts_3d, P)

input: pts_3d: nx3 matrix

P: 3x4 projection matrix

output: pts_2d: nx2 matrix

P(3x4) dot pts_3d_extended(4xn) = projected_pts_2d(3xn)

=> normalize projected_pts_2d(2xn)

<=> pts_3d_extended(nx4) dot P'(4x3) = projected_pts_2d(nx3)

=> normalize projected_pts_2d(nx2)

"""

n = pts_3d.shape[0]

pts_3d_extend = np.hstack((pts_3d, np.ones((n, 1))))

# print(('pts_3d_extend shape: ', pts_3d_extend.shape))

# pts_2d = np.dot(P, pts_3d_extend.T).T # 这一句与下面一句是等价的

pts_2d = np.dot(pts_3d_extend, np.transpose(P)) # nx3

pts_2d[:, 0] /= pts_2d[:, 2]

pts_2d[:, 1] /= pts_2d[:, 2]

return pts_2d[:, 0:2]

def roty(t):

""" Rotation about the y-axis. """

c = np.cos(t)

s = np.sin(t)

return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])

def compute_box_3d(obj, P):

""" Takes an object and a projection matrix (P) and projects the 3d

bounding box into the image plane.

Returns:

corners_2d: (8,2) array in left image coord.

corners_3d: (8,3) array in in rect camera coord.

"""

# compute rotational matrix around yaw axis

R = roty(obj.ry)

# 3d bounding box dimensions

l = obj.l

w = obj.w

h = obj.h

# 3d bounding box corners

x_corners = [l / 2, l / 2, -l / 2, -l / 2, l / 2, l / 2, -l / 2, -l / 2]

y_corners = [0, 0, 0, 0, -h, -h, -h, -h]

z_corners = [w / 2, -w / 2, -w / 2, w / 2, w / 2, -w / 2, -w / 2, w / 2]

# rotate and translate 3d bounding box

corners_3d = np.dot(R, np.vstack([x_corners, y_corners, z_corners]))

# print corners_3d.shape

corners_3d[0, :] = corners_3d[0, :] + obj.t[0]

corners_3d[1, :] = corners_3d[1, :] + obj.t[1]

corners_3d[2, :] = corners_3d[2, :] + obj.t[2]

# print 'cornsers_3d: ', corners_3d

# only draw 3d bounding box for objs in front of the camera

if np.any(corners_3d[2, :] < 0.1):

corners_2d = None

return corners_2d, np.transpose(corners_3d)

# project the 3d bounding box into the image plane,相机坐标转像素坐标

corners_2d = project_to_image(np.transpose(corners_3d), P)

# print 'corners_2d: ', corners_2d

return corners_2d, np.transpose(corners_3d)

# 读取label标签

def read_label(label_filename):

lines = [line.rstrip() for line in open(label_filename)]

objects = [Object3d(line) for line in lines]

return objects

class Calibration(object):

""" Calibration matrices and utils

3d XYZ in <label>.txt are in rect camera coord.

2d box xy are in image2 coord

Points in <lidar>.bin are in Velodyne coord.

y_image2 = P^2_rect * x_rect

y_image2 = P^2_rect * R0_rect * Tr_velo_to_cam * x_velo

x_ref = Tr_velo_to_cam * x_velo

x_rect = R0_rect * x_ref

P^2_rect = [f^2_u, 0, c^2_u, -f^2_u b^2_x;

0, f^2_v, c^2_v, -f^2_v b^2_y;

0, 0, 1, 0]

= K * [1|t]

image2 coord:

----> x-axis (u)

|

|

v y-axis (v)

velodyne coord:

front x, left y, up z

rect/ref camera coord:

right x, down y, front z

Ref (KITTI paper): http://www.cvlibs.net/publications/Geiger2013IJRR.pdf

TODO(rqi): do matrix multiplication only once for each projection.

"""

def __init__(self, calib_filepath, from_video=False):

if from_video:

calibs = self.read_calib_from_video(calib_filepath)

else:

calibs = self.read_calib_file(calib_filepath)

# Projection matrix from rect camera coord to image2 coord

self.P = calibs["P2"]

self.P = np.reshape(self.P, [3, 4])

# Rigid transform from Velodyne coord to reference camera coord

self.V2C = calibs["Tr_velo_to_cam"]

self.V2C = np.reshape(self.V2C, [3, 4])

self.C2V = self.inverse_rigid_trans(self.V2C)

# Rotation from reference camera coord to rect camera coord

self.R0 = calibs["R0_rect"]

self.R0 = np.reshape(self.R0, [3, 3])

# Camera intrinsics and extrinsics

self.c_u = self.P[0, 2]

self.c_v = self.P[1, 2]

self.f_u = self.P[0, 0]

self.f_v = self.P[1, 1]

self.b_x = self.P[0, 3] / (-self.f_u) # relative

self.b_y = self.P[1, 3] / (-self.f_v)

def read_calib_file(self, filepath):

""" Read in a calibration file and parse into a dictionary.

Ref: https://github.com/utiasSTARS/pykitti/blob/master/pykitti/utils.py

"""

data = {}

with open(filepath, "r") as f:

for line in f.readlines():

line = line.rstrip()

if len(line) == 0:

continue

key, value = line.split(":", 1)

# The only non-float values in these files are dates, which

# we don't care about anyway

try:

data[key] = np.array([float(x) for x in value.split()])

except ValueError:

pass

return data

def read_calib_from_video(self, calib_root_dir):

""" Read calibration for camera 2 from video calib files.

there are calib_cam_to_cam and calib_velo_to_cam under the calib_root_dir

"""

data = {}

cam2cam = self.read_calib_file(

os.path.join(calib_root_dir, "calib_cam_to_cam.txt")

)

velo2cam = self.read_calib_file(

os.path.join(calib_root_dir, "calib_velo_to_cam.txt")

)

Tr_velo_to_cam = np.zeros((3, 4))

Tr_velo_to_cam[0:3, 0:3] = np.reshape(velo2cam["R"], [3, 3])

Tr_velo_to_cam[:, 3] = velo2cam["T"]

data["Tr_velo_to_cam"] = np.reshape(Tr_velo_to_cam, [12])

data["R0_rect"] = cam2cam["R_rect_00"]

data["P2"] = cam2cam["P_rect_02"]

return data

def cart2hom(self, pts_3d):

""" Input: nx3 points in Cartesian

Oupput: nx4 points in Homogeneous by pending 1

"""

n = pts_3d.shape[0]

pts_3d_hom = np.hstack((pts_3d, np.ones((n, 1))))

return pts_3d_hom

# ===========================

# ------- 3d to 3d ----------

# ===========================

def project_velo_to_ref(self, pts_3d_velo):

pts_3d_velo = self.cart2hom(pts_3d_velo) # nx4

return np.dot(pts_3d_velo, np.transpose(self.V2C))

def project_ref_to_velo(self, pts_3d_ref):

pts_3d_ref = self.cart2hom(pts_3d_ref) # nx4

return np.dot(pts_3d_ref, np.transpose(self.C2V))

def project_rect_to_ref(self, pts_3d_rect):

""" Input and Output are nx3 points """

return np.transpose(np.dot(np.linalg.inv(self.R0), np.transpose(pts_3d_rect)))

def project_ref_to_rect(self, pts_3d_ref):

""" Input and Output are nx3 points """

return np.transpose(np.dot(self.R0, np.transpose(pts_3d_ref)))

def project_rect_to_velo(self, pts_3d_rect):

""" Input: nx3 points in rect camera coord.

Output: nx3 points in velodyne coord.

"""

pts_3d_ref = self.project_rect_to_ref(pts_3d_rect)

return self.project_ref_to_velo(pts_3d_ref)

def project_velo_to_rect(self, pts_3d_velo):

pts_3d_ref = self.project_velo_to_ref(pts_3d_velo)

return self.project_ref_to_rect(pts_3d_ref)

# ===========================

# ------- 3d to 2d ----------

# ===========================

def project_rect_to_image(self, pts_3d_rect):

""" Input: nx3 points in rect camera coord.

Output: nx2 points in image2 coord.

"""

pts_3d_rect = self.cart2hom(pts_3d_rect)

pts_2d = np.dot(pts_3d_rect, np.transpose(self.P)) # nx3

pts_2d[:, 0] /= pts_2d[:, 2]

pts_2d[:, 1] /= pts_2d[:, 2]

return pts_2d[:, 0:2]

def project_velo_to_image(self, pts_3d_velo):

""" Input: nx3 points in velodyne coord.

Output: nx2 points in image2 coord.

"""

pts_3d_rect = self.project_velo_to_rect(pts_3d_velo)

return self.project_rect_to_image(pts_3d_rect)

def project_8p_to_4p(self, pts_2d):

x0 = np.min(pts_2d[:, 0])

x1 = np.max(pts_2d[:, 0])

y0 = np.min(pts_2d[:, 1])

y1 = np.max(pts_2d[:, 1])

x0 = max(0, x0)

# x1 = min(x1, proj.image_width)

y0 = max(0, y0)

# y1 = min(y1, proj.image_height)

return np.array([x0, y0, x1, y1])

def project_velo_to_4p(self, pts_3d_velo):

""" Input: nx3 points in velodyne coord.

Output: 4 points in image2 coord.

"""

pts_2d_velo = self.project_velo_to_image(pts_3d_velo)

return self.project_8p_to_4p(pts_2d_velo)

# ===========================

# ------- 2d to 3d ----------

# ===========================

def project_image_to_rect(self, uv_depth):

""" Input: nx3 first two channels are uv, 3rd channel

is depth in rect camera coord.

Output: nx3 points in rect camera coord.

"""

n = uv_depth.shape[0]

x = ((uv_depth[:, 0] - self.c_u) * uv_depth[:, 2]) / self.f_u + self.b_x

y = ((uv_depth[:, 1] - self.c_v) * uv_depth[:, 2]) / self.f_v + self.b_y

pts_3d_rect = np.zeros((n, 3))

pts_3d_rect[:, 0] = x

pts_3d_rect[:, 1] = y

pts_3d_rect[:, 2] = uv_depth[:, 2]

return pts_3d_rect

def project_image_to_velo(self, uv_depth):

pts_3d_rect = self.project_image_to_rect(uv_depth)

return self.project_rect_to_velo(pts_3d_rect)

def inverse_rigid_trans(self,Tr):

""" Inverse a rigid body transform matrix (3x4 as [R|t])

[R'|-R't; 0|1]

"""

inv_Tr = np.zeros_like(Tr) # 3x4

inv_Tr[0:3, 0:3] = np.transpose(Tr[0:3, 0:3])

inv_Tr[0:3, 3] = np.dot(-np.transpose(Tr[0:3, 0:3]), Tr[0:3, 3])

return inv_Tr

def draw_projected_box3d(image, qs, color=(0, 255, 0), thickness=2):

""" Draw 3d bounding box in image

qs: (8,3) array of vertices for the 3d box in following order:

1 -------- 0

/| /|

2 -------- 3 .

| | | |

. 5 -------- 4

|/ |/

6 -------- 7

"""

qs = qs.astype(np.int32)

for k in range(0, 4):

# Ref: http://docs.enthought.com/mayavi/mayavi/auto/mlab_helper_functions.html

i, j = k, (k + 1) % 4

# use LINE_AA for opencv3

# cv2.line(image, (qs[i,0],qs[i,1]), (qs[j,0],qs[j,1]), color, thickness, cv2.CV_AA)

cv2.line(image, (qs[i, 0], qs[i, 1]), (qs[j, 0], qs[j, 1]), color, thickness)

i, j = k + 4, (k + 1) % 4 + 4

cv2.line(image, (qs[i, 0], qs[i, 1]), (qs[j, 0], qs[j, 1]), color, thickness)

i, j = k, k + 4

cv2.line(image, (qs[i, 0], qs[i, 1]), (qs[j, 0], qs[j, 1]), color, thickness)

return image

def labels_boxes2pixel_in_image(img, objects, calib):

"""

Show image with 2D bounding boxes

:param img: 图像内容

:param objects: label标签内容

:param calib: 标定文件内容

:return: img1, img2,box3d_to_pixel2d,第一个值是2d坐标图像,第二个值是3d坐标转到像素画的图像,第三个值是相机坐标与像素坐标对应点

"""

img1 = np.copy(img) # for 2d bbox

img2 = np.copy(img) # for 3d bbox

# TODO: change the color of boxes

box3d_to_pixel2d = {"corners_3d":[],"box3d_pts_2d":[]}

for obj in objects:

# 画2d坐标

if obj.type == "DontCare":

continue

else:

cv2.rectangle(img1,(int(obj.xmin),int(obj.ymin)),(int(obj.xmax), int(obj.ymax)),(0, 255, 0),2,)

cv2.putText(img1, str(obj.type), (int(obj.xmin), int(obj.ymin)), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)

# 这个非常重要,该代码就是将其label的3d坐标转为像素2d坐标关键函数

box3d_pts_2d, corners_3d = compute_box_3d(obj, calib.P)

box3d_to_pixel2d['corners_3d'].append(corners_3d)

box3d_to_pixel2d['box3d_pts_2d'].append(box3d_pts_2d)

if box3d_pts_2d is None:

print("something wrong in the 3D box.")

continue

img2 = draw_projected_box3d(img2, box3d_pts_2d, color=(0, 255, 0))

return img1, img2,box3d_to_pixel2d

if __name__ == '__main__':

path = r'C:\Users\Administrator\Desktop\kitti_data\KITTI\training'

index = '000019'

calib_path = os.path.join(path, 'calib', index + '.txt') # 获得标定文件

image_2_path = os.path.join(path, 'image_2', index + '.png') # 获得图像

label_2_path = os.path.join(path, 'label_2', index + '.txt') # 获得标签lebl

img = get_image(image_2_path) # 读取图像

objects = read_label(label_2_path) # 处理标签

calib = Calibration(calib_path) # 处理标定文件

img_bbox2d, img_bbox3d, box3d_to_pixel2d = labels_boxes2pixel_in_image(img, objects, calib) # 重点内容,集成转换

cv2.imwrite('./bbox2d.png',img_bbox2d)

cv2.imwrite('./bbox3d.png',img_bbox3d)

![[译] 大模型推理的极限:理论分析、数学建模与 CPU/GPU 实测(2024)](https://img-blog.csdnimg.cn/img_convert/8cc69429dbd37a1fe4d7dd1519fe9173.png)