Table of Contents

- 4. Neural Network
- 1. Containers - Module
- 2. Convolution Layers - functional.conv2d
- 2.1 stride
- 2.2 padding
- 3. Convolution Layers - Conv2d
- 3.1 in_channels out_channels
- 4. Pooling layers - MaxPool2d
- 4.1 ceil_mode
- 4.2 TensorBoard
- 5. Non-linear Activations
- 5.1 ReLU
- 5.2 Sigmoid
- 6. Linear Layers - Linear
- 6.1 flatten
- 7. CIFAR 10 Model and Sequential
- 8. Loss Functions
- 8.1 L1Loss
- 8.2 MSELoss
- 8.3 CrossEntropyLoss
- 8.4 Sequential
- 8.5 backward

4. Neural Network

Reference: https://pytorch.org/docs/stable/nn.html

1. Containers - Module

Reference: https://pytorch.org/docs/stable/generated/torch.nn.Module.html#torch.nn.Module

import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        # A trivial forward pass: add 1 to the input
        output = input + 1
        return output


tudui = Tudui()
x = torch.tensor(1.0)
output = tudui(x)  # calling the module runs forward()
print(output)

Output:

tensor(2.)

2. Convolution Layers - functional.conv2d

Reference: https://pytorch.org/docs/stable/generated/torch.nn.functional.conv2d.html#torch.nn.functional.conv2d

2.1 stride

import torch
import torch.nn.functional as F

input = torch.tensor([
    [1, 2, 0, 3, 1],
    [0, 1, 2, 3, 1],
    [1, 2, 1, 0, 0],
    [5, 2, 3, 1, 1],
    [2, 1, 0, 1, 1]
])
kernel = torch.tensor([
    [1, 2, 1],
    [0, 1, 0],
    [2, 1, 0]
])

# F.conv2d expects 4-D tensors: (batch, channels, height, width)
input = torch.reshape(input, (1, 1, 5, 5))    # torch.Size([1, 1, 5, 5])
kernel = torch.reshape(kernel, (1, 1, 3, 3))  # torch.Size([1, 1, 3, 3])

output1 = F.conv2d(input, kernel, stride=1)
print(output1)

output2 = F.conv2d(input, kernel, stride=2)
print(output2)

Output:

tensor([[[[10, 12, 12],
          [18, 16, 16],
          [13,  9,  3]]]])
tensor([[[[10, 12],
          [13,  3]]]])

2.2 padding

import torch
import torch.nn.functional as F

input = torch.tensor([
    [1, 2, 0, 3, 1],
    [0, 1, 2, 3, 1],
    [1, 2, 1, 0, 0],
    [5, 2, 3, 1, 1],
    [2, 1, 0, 1, 1]
])
kernel = torch.tensor([
    [1, 2, 1],
    [0, 1, 0],
    [2, 1, 0]
])

input = torch.reshape(input, (1, 1, 5, 5))    # torch.Size([1, 1, 5, 5])
kernel = torch.reshape(kernel, (1, 1, 3, 3))  # torch.Size([1, 1, 3, 3])

# padding=1 adds a one-pixel border of zeros around the input
output1 = F.conv2d(input, kernel, stride=1, padding=1)
print(output1)

output2 = F.conv2d(input, kernel, stride=2, padding=1)
print(output2)

Output:

tensor([[[[ 1,  3,  4, 10,  8],
          [ 5, 10, 12, 12,  6],
          [ 7, 18, 16, 16,  8],
          [11, 13,  9,  3,  4],
          [14, 13,  9,  7,  4]]]])
tensor([[[[ 1,  4,  8],
          [ 7, 16,  8],
          [14,  9,  4]]]])
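The output sizes in 2.1 and 2.2 (3x3, 2x2, 5x5, 3x3) follow from the usual convolution size rule. Below is a minimal sketch of that rule, assuming dilation is 1; the helper name `conv_output_size` is made up for illustration and is not part of PyTorch.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis (assumes dilation == 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# The four stride/padding combinations used above, on a 5x5 input with a 3x3 kernel
for stride, padding in [(1, 0), (2, 0), (1, 1), (2, 1)]:
    out = conv_output_size(5, 3, stride, padding)
    print(f"stride={stride}, padding={padding} -> {out}x{out}")
```

The same rule gives 32 -> 30 for a 3x3 kernel with no padding, which is the shrinkage seen in the Conv2d example in the next section.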

3. Convolution Layers - Conv2d

Reference: https://pytorch.org/docs/stable/generated/torch.nn.Conv2d.html#torch.nn.Conv2d

Animated illustrations: https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md

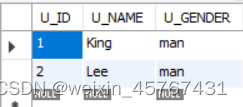

3.1 in_channels out_channels

import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # 3 input channels (RGB) -> 6 output channels, 3x3 kernel, no padding
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(imgs.shape)
    print(output.shape)

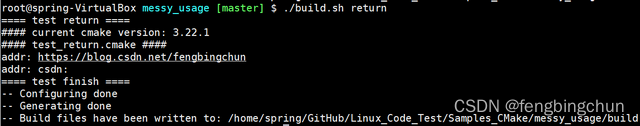

Files already downloaded and verified

torch.Size([64, 3, 32, 32]) # in_channels=3

torch.Size([64, 6, 30, 30]) # out_channels=6; the convolution shrinks 32 -> 30

...

Visualizing in TensorBoard:

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


tudui = Tudui()
writer = SummaryWriter("logs")
step = 0

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    print(output.shape)  # torch.Size([64, 6, 30, 30])
    writer.add_images("input", imgs, step)
    # add_images cannot display 6-channel images, so fold the extra channels into the batch dimension
    output = torch.reshape(output, (-1, 3, 30, 30))  # -> [xxx, 3, 30, 30]
    writer.add_images("output", output, step)
    print(output.shape)  # torch.Size([128, 3, 30, 30])
    step += 1

writer.close()

4. Pooling layers - MaxPool2d

Reference: https://pytorch.org/docs/stable/generated/torch.nn.MaxPool2d.html#torch.nn.MaxPool2d

4.1 ceil_mode

import torch
from torch import nn
from torch.nn import MaxPool2d

input = torch.Tensor([
    [1, 2, 0, 3, 1],
    [0, 1, 2, 3, 1],
    [1, 2, 1, 0, 0],
    [5, 2, 3, 1, 1],
    [2, 1, 0, 1, 1],
])
input = torch.reshape(input, (-1, 1, 5, 5))  # torch.Size([1, 1, 5, 5])


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # ceil_mode=True also pools the partial windows at the right and bottom edges
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
output = tudui(input)
print(output)

Output:

tensor([[[[2., 3.],
          [5., 1.]]]])
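To make the effect of ceil_mode concrete, here is a plain-Python sketch of max pooling on the same 5x5 grid. It is only an illustration, not how PyTorch implements pooling: the helper `max_pool2d` is hypothetical and assumes a square input, stride equal to the kernel size (the MaxPool2d default), and no padding.

```python
import math


def max_pool2d(grid, kernel, ceil_mode=False):
    """Naive max pooling on a square grid with stride == kernel and no padding."""
    size = len(grid)
    rounding = math.ceil if ceil_mode else math.floor
    out = rounding((size - kernel) / kernel) + 1
    result = []
    for i in range(out):
        row = []
        for j in range(out):
            # Windows at the border are clipped to the grid (only reached when ceil_mode=True)
            window = [
                grid[r][c]
                for r in range(i * kernel, min((i + 1) * kernel, size))
                for c in range(j * kernel, min((j + 1) * kernel, size))
            ]
            row.append(max(window))
        result.append(row)
    return result


grid = [
    [1, 2, 0, 3, 1],
    [0, 1, 2, 3, 1],
    [1, 2, 1, 0, 0],
    [5, 2, 3, 1, 1],
    [2, 1, 0, 1, 1],
]
print(max_pool2d(grid, 3, ceil_mode=True))   # partial windows kept
print(max_pool2d(grid, 3, ceil_mode=False))  # partial windows dropped
```

With ceil_mode=True the sketch reproduces the 2x2 result above; with ceil_mode=False only the single complete 3x3 window remains.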

4.2 TensorBoard

import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
writer = SummaryWriter("../logs")
step = 0

for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)  # pooling keeps the 3 channels, so the result can be logged directly
    writer.add_images("output", output, step)
    step += 1

writer.close()

5. Non-linear Activations

5.1 ReLU

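Reference: https://pytorch.org/docs/stable/generated/torch.nn.ReLU.html#torch.nn.ReLU

About the inplace parameter:

① inplace=True: input = -1 -> ReLU(input, inplace=True) -> input = 0 (the input itself is overwritten)

② inplace=False (the default): input = -1 -> output = ReLU(input, inplace=False) -> input = -1, output = 0 (the input is preserved)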
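The difference between the two modes can be checked directly. The snippet below is a small sketch using `nn.ReLU`; the tensor values are arbitrary and chosen only for illustration.

```python
import torch
from torch import nn

# inplace=False (default): a new tensor is returned, the input is left untouched
x = torch.tensor([-1.0, 2.0])
y = nn.ReLU(inplace=False)(x)
print(x)  # still contains -1
print(y)  # negative value replaced by 0

# inplace=True: the input tensor's own storage is overwritten
x2 = torch.tensor([-1.0, 2.0])
y2 = nn.ReLU(inplace=True)(x2)
print(x2)  # now contains 0 instead of -1
print(y2.data_ptr() == x2.data_ptr())  # same underlying memory
```

Keeping inplace=False is the safer choice when the original values are still needed later.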

import torch
from torch import nn
from torch.nn import ReLU

input = torch.Tensor([[1, -0.5], [-1, 3]])   # torch.Size([2, 2])
input = torch.reshape(input, (-1, 1, 2, 2))  # torch.Size([1, 1, 2, 2])


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()  # inplace=False by default

    def forward(self, input):
        output = self.relu1(input)
        return output


tudui = Tudui()
output = tudui(input)
print(output)  # shape: torch.Size([1, 1, 2, 2])

Output:

tensor([[[[1., 0.],
          [0., 3.]]]])

5.2 Sigmoid

Reference: https://pytorch.org/docs/stable/generated/torch.nn.Sigmoid.html#torch.nn.Sigmoid

import torchvision
from torch import nn
from torch.nn import Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


tudui = Tudui()
writer = SummaryWriter("../logs")
step = 0

for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, global_step=step)
    output = tudui(imgs)  # element-wise sigmoid; the shape is unchanged
    writer.add_images("output", output, global_step=step)
    step += 1

writer.close()
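When comparing the "input" and "output" images in TensorBoard, it helps to know which value range the sigmoid produces. `ToTensor()` scales pixel values to [0, 1], so a quick plain-Python check of the sigmoid at a few pixel values (a sketch that does not use PyTorch) shows where the outputs land:

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


# Pixel values after ToTensor() lie in [0, 1]
for pixel in (0.0, 0.5, 1.0):
    print(f"sigmoid({pixel}) = {sigmoid(pixel):.4f}")
```

The outputs are squeezed into roughly [0.5, 0.73], which is presumably why the sigmoid images look brighter and lower in contrast than the originals.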

6. Linear Layers - Linear

Reference: https://pytorch.org/docs/stable/generated/torch.nn.Linear.html#torch.nn.Linear

import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True discards the final, smaller batch, which would not match in_features=196608
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # 196608 = 64 * 3 * 32 * 32 (a whole batch flattened into one vector)
        self.linear1 = Linear(in_features=196608, out_features=10)

    def forward(self, input):
        output = self.linear1(input)
        return output


tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    output = torch.reshape(imgs, (1, 1, 1, -1))
    print(output.shape)  # torch.Size([1, 1, 1, 196608])
    output = tudui(output)
    print(output.shape)  # torch.Size([1, 1, 1, 10])

Output:

Files already downloaded and verified
torch.Size([64, 3, 32, 32])
torch.Size([1, 1, 1, 196608])
torch.Size([1, 1, 1, 10])
...

6.1 flatten

Reference: https://pytorch.org/docs/stable/generated/torch.flatten.html?highlight=flatten#torch.flatten

Replace

output = torch.reshape(imgs, (1, 1, 1, -1))
print(output.shape)  # torch.Size([1, 1, 1, 196608])

with

output = torch.flatten(imgs)
print(output.shape)  # torch.Size([196608]); after the linear layer: torch.Size([10])
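Note that `torch.flatten(imgs)` merges the batch dimension into the vector as well. As a sketch of the alternative, `torch.flatten` also accepts a `start_dim` argument that keeps the batch dimension; the zero tensor below is only a stand-in for one CIFAR-10 batch.

```python
import torch

imgs = torch.zeros(64, 3, 32, 32)  # stand-in for one batch of CIFAR-10 images

flat_all = torch.flatten(imgs)                     # merges the batch dimension too
flat_per_image = torch.flatten(imgs, start_dim=1)  # keeps one row per image

print(flat_all.shape)        # torch.Size([196608])
print(flat_per_image.shape)  # torch.Size([64, 3072])
```

The per-image form is what the `Flatten` layer in section 7 produces, since its default is start_dim=1.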

7. CIFAR 10 Model and Sequential

CIFAR 10: https://www.cs.toronto.edu/~kriz/cifar.html

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)  # 1024 = 64 channels * 4 * 4
        self.linear2 = Linear(64, 10)    # 10 CIFAR-10 classes

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


tudui = Tudui()
print(tudui)

# Sanity-check the architecture with a dummy batch
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

Output:

Tudui(
  (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear1): Linear(in_features=1024, out_features=64, bias=True)
  (linear2): Linear(in_features=64, out_features=10, bias=True)
)
torch.Size([64, 10])
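The value 1024 passed to the first Linear layer can be derived by tracing the feature-map size through the three conv/pool stages. The following plain-Python sketch does that arithmetic; the helpers `conv_out` and `pool_out` are illustrative names, and dilation is assumed to be 1.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution (assumes dilation == 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Spatial size after MaxPool2d with its defaults: stride == kernel, no padding, ceil_mode=False."""
    return (size - kernel) // kernel + 1


size, channels = 32, 3  # a CIFAR-10 image is 3 x 32 x 32
for out_channels in (32, 32, 64):
    size = conv_out(size, kernel=5, padding=2)  # a 5x5 conv with padding=2 keeps the size
    size = pool_out(size, kernel=2)             # 2x2 pooling halves it
    channels = out_channels
    print(f"{channels} x {size} x {size}")

flat_features = channels * size * size
print(flat_features)
```

The trace ends at 64 x 4 x 4, which flattens to the 1024 input features of the first Linear layer.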

Sequential: https://pytorch.org/docs/stable/generated/torch.nn.Sequential.html#torch.nn.Sequential

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # Sequential chains the layers, so forward() needs only a single call
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()
print(tudui)

input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)

# Log the model graph so it can be inspected in TensorBoard
writer = SummaryWriter("../logs")
writer.add_graph(tudui, input)
writer.close()

Output:

Tudui(
  (model1): Sequential(
    (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Flatten(start_dim=1, end_dim=-1)
    (7): Linear(in_features=1024, out_features=64, bias=True)
    (8): Linear(in_features=64, out_features=10, bias=True)
  )
)
torch.Size([64, 10])

8. Loss Functions

8.1 L1Loss

Reference: https://pytorch.org/docs/stable/generated/torch.nn.L1Loss.html#torch.nn.L1Loss

import torch
from torch.nn import L1Loss

input = torch.tensor([1, 2, 3], dtype=torch.float32)   # torch.Size([3])
target = torch.tensor([1, 2, 5], dtype=torch.float32)  # torch.Size([3])

input = torch.reshape(input, (1, 1, 1, 3))    # torch.Size([1, 1, 1, 3])
target = torch.reshape(target, (1, 1, 1, 3))  # torch.Size([1, 1, 1, 3])

loss1 = L1Loss(reduction='mean')  # 'mean' is the default
result = loss1(input, target)
print(result)

loss2 = L1Loss(reduction='sum')  # sum the absolute errors instead of averaging
result = loss2(input, target)
print(result)

Output:

tensor(0.6667)
tensor(2.)

8.2 MSELoss

Reference: https://pytorch.org/docs/stable/generated/torch.nn.MSELoss.html#torch.nn.MSELoss

import torch
from torch.nn import MSELoss

input = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)

input = torch.reshape(input, (1, 1, 1, 3))
target = torch.reshape(target, (1, 1, 1, 3))

loss = MSELoss()  # mean of the squared errors
result = loss(input, target)
print(result)

Output:

tensor(1.3333)
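The L1Loss and MSELoss results above can be reproduced by hand. This plain-Python sketch recomputes them for the same input [1, 2, 3] and target [1, 2, 5], without PyTorch:

```python
inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

abs_errors = [abs(x - y) for x, y in zip(inputs, targets)]  # [0, 0, 2]
sq_errors = [(x - y) ** 2 for x, y in zip(inputs, targets)]  # [0, 0, 4]

l1_mean = sum(abs_errors) / len(abs_errors)  # L1Loss(reduction='mean')
l1_sum = sum(abs_errors)                     # L1Loss(reduction='sum')
mse = sum(sq_errors) / len(sq_errors)        # MSELoss()

print(round(l1_mean, 4), l1_sum, round(mse, 4))  # 0.6667 2.0 1.3333
```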

8.3 CrossEntropyLoss

参考文档:https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html#torch.nn.CrossEntropyLoss

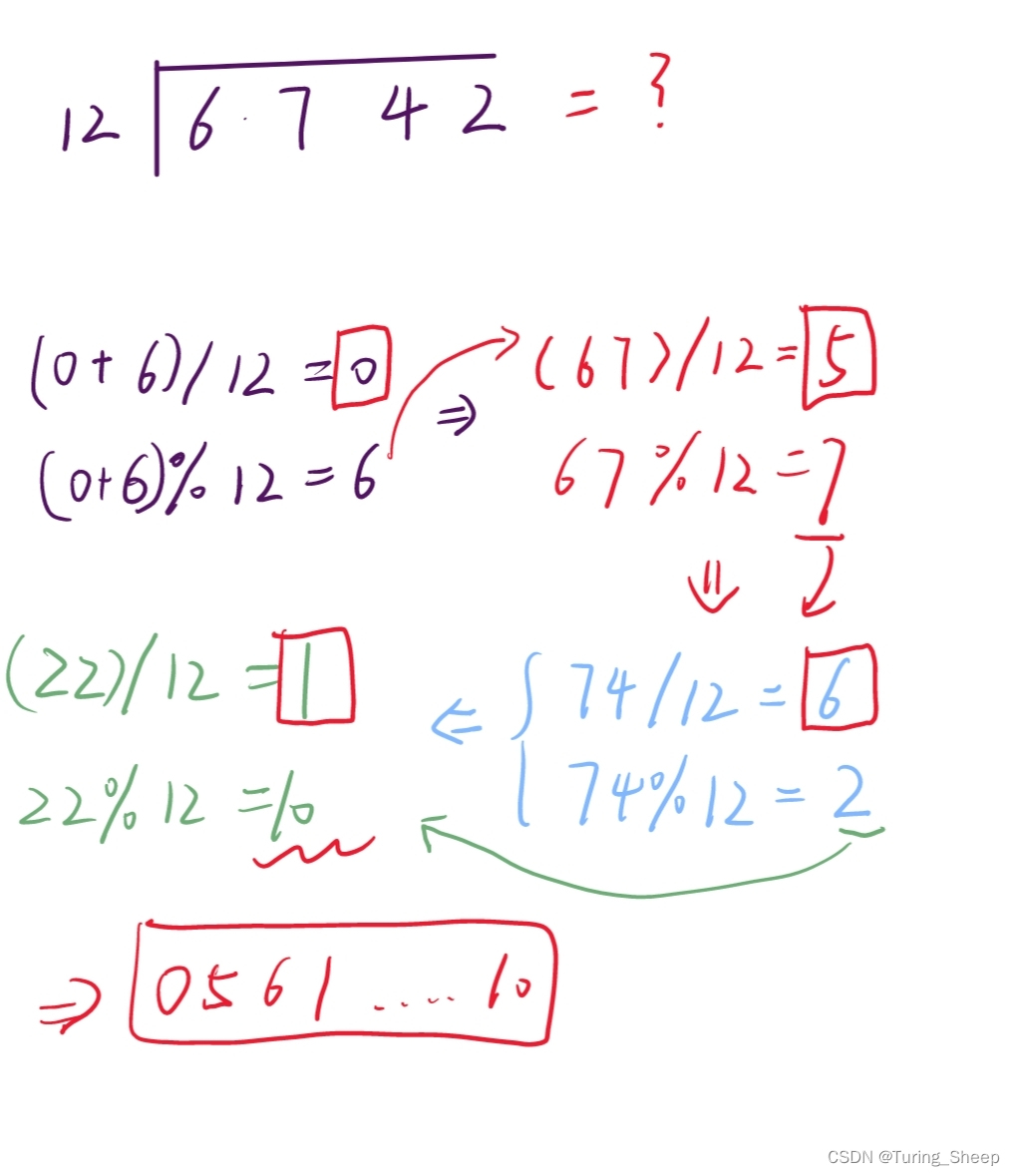

计算公式:

import torch
from torch.nn import CrossEntropyLoss

input = torch.tensor([0.1, 0.2, 0.3])  # raw scores for 3 classes
target = torch.tensor([1])             # index of the correct class

input = torch.reshape(input, (1, 3))   # (batch_size, num_classes)

cross = CrossEntropyLoss()
result = cross(input, target)
print(result)

Output:

tensor(1.1019)
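The value 1.1019 can be verified by hand. Cross-entropy on raw scores is the negative score of the target class plus the log of the summed exponentials of all scores; the sketch below evaluates that expression in plain Python for the same scores and target.

```python
import math

logits = [0.1, 0.2, 0.3]  # raw scores, same as in the example above
target = 1                # index of the correct class

log_sum_exp = math.log(sum(math.exp(z) for z in logits))
loss = -logits[target] + log_sum_exp

print(round(loss, 4))  # 1.1019
```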

8.4 Sequential

import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(output)   # 10 raw class scores for the image
    print(targets)  # index of the true class

Output:

Files already downloaded and verified
tensor([[-0.0715,  0.0221, -0.0562, -0.0901,  0.0627, -0.0606,  0.0137,  0.0783,
         -0.0951, -0.1070]], grad_fn=<AddmmBackward0>)
tensor([3])
tensor([[-0.0715,  0.0304, -0.0729, -0.0767,  0.0554, -0.0834, -0.0089,  0.0624,
         -0.0777, -0.0848]], grad_fn=<AddmmBackward0>)
tensor([8])
...

Adding a loss function:

import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear, CrossEntropyLoss
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = CrossEntropyLoss()
tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    # Compare the 10 raw class scores with the true class index
    result_loss = loss(output, targets)
    print(result_loss)

Output:

Files already downloaded and verified
tensor(2.3437, grad_fn=<NllLossBackward0>)
tensor(2.3600, grad_fn=<NllLossBackward0>)
tensor(2.3680, grad_fn=<NllLossBackward0>)
...
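The losses printed above all sit close to 2.3. That is what one would expect from an untrained network: if all 10 class scores are roughly equal, the cross-entropy is about ln(10). A quick plain-Python check of that baseline (a sketch, independent of the model above):

```python
import math

num_classes = 10

# With identical scores for every class, -x[class] + log(sum(exp(x))) reduces to ln(num_classes)
equal_scores = [0.0] * num_classes
uniform_loss = -equal_scores[0] + math.log(sum(math.exp(z) for z in equal_scores))

print(round(uniform_loss, 4))  # 2.3026
```

A loss that drops well below this value during training would indicate the model is doing better than guessing uniformly.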

8.5 backward

import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear, CrossEntropyLoss
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = CrossEntropyLoss()
tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    result_loss = loss(output, targets)
    # Backpropagation: computes the gradient of the loss for every parameter.
    # No optimizer step is taken here, so the weights themselves do not change.
    result_loss.backward()
    print("OK")
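What `backward()` actually changes can be seen on a much smaller model. The sketch below uses a single `nn.Linear` layer (chosen only for illustration, not the Tudui network) and checks the `.grad` attribute of its weight before and after the call.

```python
import torch
from torch import nn

layer = nn.Linear(4, 3)          # tiny stand-in model: 4 features -> 3 classes
loss_fn = nn.CrossEntropyLoss()

x = torch.ones(1, 4)             # one dummy sample
target = torch.tensor([2])       # its (arbitrary) class index

grad_before = layer.weight.grad  # None: no gradient has been computed yet
result_loss = loss_fn(layer(x), target)
result_loss.backward()           # fills .grad for every parameter

print(grad_before)
print(layer.weight.grad.shape)   # same shape as the weight: torch.Size([3, 4])
```

The gradients stored in `.grad` are what an optimizer would later use to update the parameters.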